【深度学习】GoogleNet原理解析与tensorflow实现

【深度学习】GoogleNet原理解析与tensorflow实现

1.googleNet系列介绍

2.googlenet思想

3.googleNet实现

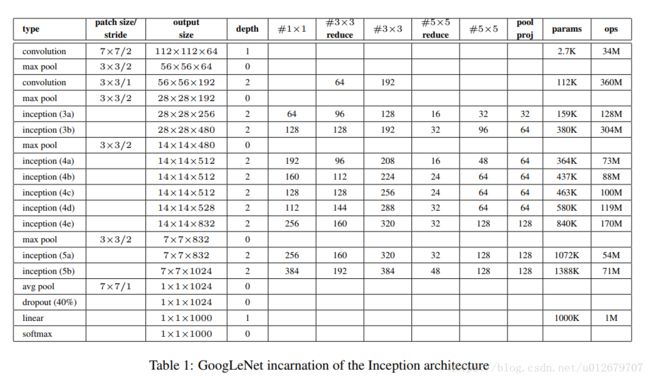

Googe Inception Net首次出现在ILSVRC2014的比赛中(和VGGNet同年),以较大的优势获得冠军。那一届的GoogleNet通常被称为Inception V1,Inception V1的特点是控制了计算量的参数量的同时,获得了非常好的性能-top5错误率6.67%, 这主要归功于GoogleNet中引入一个新的网络结构Inception模块,所以GoogleNet又被称为Inception V1(后面还有改进版V2、V3、V4)架构中有22层深,V1比VGGNet和AlexNet都深,但是它只有500万的参数量,计算量也只有15亿次浮点运算,在参数量和计算量下降的同时保证了准确率,可以说是非常优秀并且实用的模型。

![]()

1.googleNet系列介绍

Google Inception Net是一个大家族,包括:

- 2014年9月的《Going deeper with convolutions》提出的Inception V1.

- 2015年2月的《Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift》提出的Inception V2

- 2015年12月的《Rethinking the Inception Architecture for Computer Vision》提出的Inception V3

- 2016年2月的《Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning》提出的Inception V4

GoogLeNet系列解读

Inception V1:

Inception V1中精心设计的Inception Module提高了参数的利用率;在先前的网络中,全连接层占据了网络的大部分参数,很容易产生过拟合现象;Inception V1去除了模型最后的全连接层,用全局平均池化层代替(将图片尺寸变为1x1)。

![]()

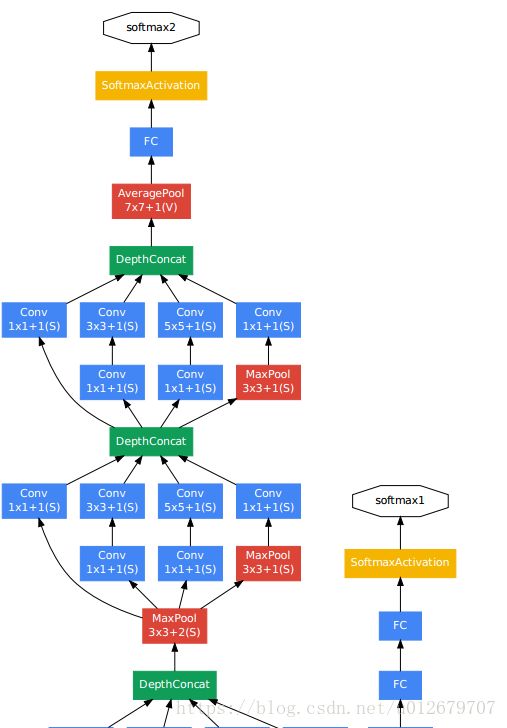

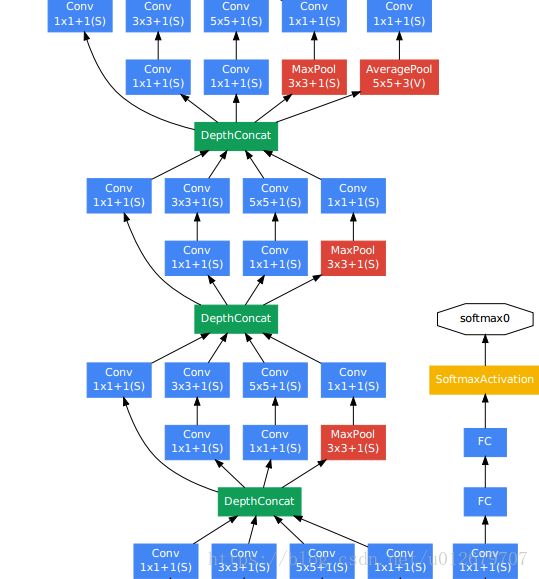

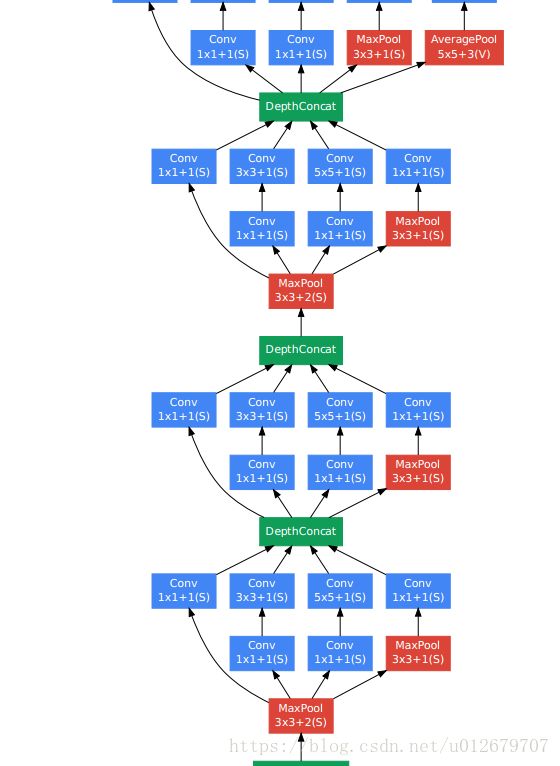

googleNet V1具体结构:

![]()

Inception V2:

Inception V2学习了VGGNet,用两个3*3的卷积代替5*5的大卷积核(降低参数量的同时减轻了过拟合),同时还提出了注明的Batch Normalization(简称BN)方法。BN是一个非常有效的正则化方法,可以让大型卷积网络的训练速度加快很多倍,同时收敛后的分类准确率可以的到大幅度提高。

BN在用于神经网络某层时,会对每一个mini-batch数据的内部进行标准化处理,使输出规范化到(0,1)的正态分布,减少了Internal Covariate Shift(内部神经元分布的改变)。BN论文指出,传统的深度神经网络在训练时,每一层的输入的分布都在变化,导致训练变得困难,我们只能使用一个很小的学习速率解决这个问题。而对每一层使用BN之后,我们可以有效的解决这个问题,学习速率可以增大很多倍,达到之间的准确率需要的迭代次数有需要1/14,训练时间大大缩短,并且在达到之间准确率后,可以继续训练。以为BN某种意义上还起到了正则化的作用,所有可以减少或取消Dropout,简化网络结构。

当然,在使用BN时,需要一些调整:

- 增大学习率并加快学习衰减速度以适应BN规范化后的数据

- 去除Dropout并减轻L2正则(BN已起到正则化的作用)

- 去除LRN

- 更彻底地对训练样本进行shuffle

- 减少数据增强过程中对数据的光学畸变(BN训练更快,每个样本被训练的次数更少,因此真实的样本对训练更有帮助)

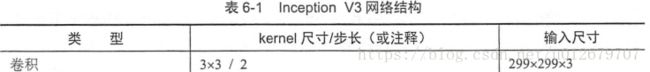

Inception V3:

Inception V3主要在两个方面改造:

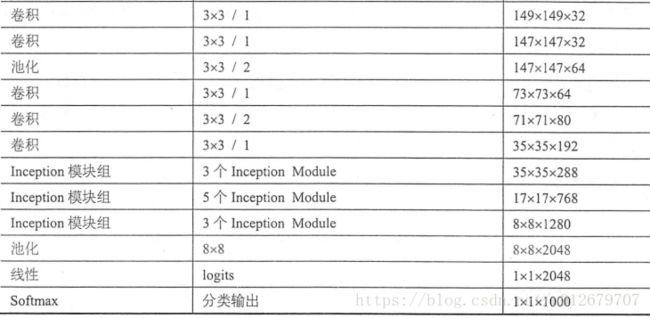

- 引入了Factorization into small convolutions的思想,将一个较大的二维卷积拆成两个较小的一位卷积,比如将7*7卷积拆成1*7卷积和7*1卷积(下图是3*3拆分为1*3和3*1的示意图)。 一方面节约了大量参数,加速运算并减去过拟合,同时增加了一层非线性扩展模型表达能力。论文中指出,这样非对称的卷积结构拆分,结果比对称地拆分为几个相同的小卷积核效果更明显,可以处理更多、更丰富的空间特征、增加特征多样性。

3*3卷积核拆分为1*3卷积和3*1卷积示意图: ![]()

- 另一方面,Inception V3优化了Inception Module的结构,现在Inception Module有35*35、17*17和8*8三种不同的结构,如下图。这些Inception Module只在网络的后部出现,前部还是普通的卷积层。并且还在Inception Module的分支中还使用了分支。

Inception V3中三种结构的Inception Module:

Inception V4:

Inception V4相比V3主要是结合了微软的ResNet,有兴趣的可以查看《Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning》论文。

2.GoogLeNet思想

Motivation

深度学习以及神经网络快速发展,人们不再只关注更给力的硬件、更大的数据集、更大的模型,而是更在意新的idea、新的算法以及模型的改进。

一般来说,提升网络性能最直接的方式就是增加网络的大小:

1.增加网络的深度

2.增加网络的宽度

这样简单的解决办法有两个主要的缺点:

1.网络参数的增多,网络容易陷入过拟合中,这需要大量的训练数据,而在解决高粒度分类的问题上,高质量的训练数据成本太高;

2.简单的增加网络的大小,会让网络计算量增大,而增大计算量得不到充分的利用,从而造成计算资源的浪费 一般来说,提升网络性能最直接的办法就是增加网络深度和宽度,这也就意味着巨量的参数。但是,巨量参数容易产生过拟合也会大大增加计算量。

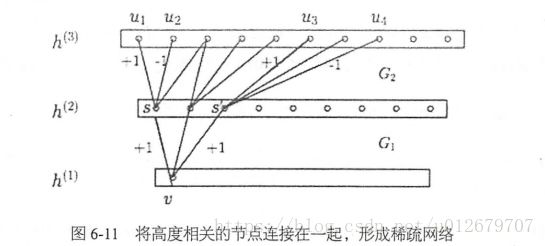

文章认为解决上述两个缺点的根本方法是将全连接甚至一般的卷积都转化为稀疏连接。一方面现实生物神经系统的连接也是稀疏的,另一方面有文献表明:对于大规模稀疏的神经网络,可以通过分析激活值的统计特性和对高度相关的输出进行聚类来逐层构建出一个最优网络。这点表明臃肿的稀疏网络可能被不失性能地简化。 虽然数学证明有着严格的条件限制,但Hebbian准则有力地支持了这一点:fire together,wire together。

早些的时候,为了打破网络对称性和提高学习能力,传统的网络都使用了随机稀疏连接。但是,计算机软硬件对非均匀稀疏数据的计算效率很差,所以在AlexNet中又重新启用了全连接层,目的是为了更好地优化并行运算。所以,现在的问题是有没有一种方法,既能保持网络结构的稀疏性,又能利用密集矩阵的高计算性能。大量的文献表明可以将稀疏矩阵聚类为较为密集的子矩阵来提高计算性能,据此论文提出了名为Inception 的结构来实现此目的。

主要思想:

inception架构的主要思想是建立在找到可以逼近的卷积视觉网络内的最优局部稀疏结构,并可以通过易实现的模块实现这种结构。

将Hebbian原理应用在神经网络上,如果数据集的概率分布可以被一个很大很稀疏的神经网络表达,那么构筑这个网络的最佳方法是逐层构筑网络:将上一层高度相关的节点聚类,并将聚类出来的每一个小簇连接到一起。

1 . 什么是Hebbian原理?

神经反射活动的持续与重复会导致神经元连续稳定性持久提升,当两个神经元细胞A和B距离很近,并且A参与了对B重复、持续的兴奋,那么某些代谢会导致A将作为使B兴奋的细胞。总结一下:“一起发射的神经元会连在一起”,学习过程中的刺激会使神经元间突触强度增加。

2.这里我们先讨论一下为什么需要稀疏的神经网络是什么概念?

人脑神经元的连接是稀疏的,研究者认为大型神经网络的合理的连接方式应该也是稀疏的,稀疏结构是非常适合神经网络的一种结构,尤其是对非常大型、非常深的神经网络,可以减轻过拟合并降低计算量,例如CNN就是稀疏连接。

3.为什么CNN就是稀疏连接?

在符合Hebbian原理的基础上,我们应该把相关性高的一簇神经元节点连接在一起。在普通的数据集中,这可能需要对神经元节点做聚类,但是在图片数据中,天然的就是临近区域的数据相关性高,因此相邻的像素点被卷积操作连接在一起(符合Hebbian原理),而卷积操作就是在做稀疏连接。

4. 怎样构建满足Hebbian原理的网络?

在CNN模型中,我们可能有多个卷积核,在同一空间位置但在不同通道的卷积核的输出结果相关性极高。我们可以使用1*1的卷积很自然的把这些相关性很高的、在同一空间位置但是不同通道的特征连接在一起。

3.googleNet实现

tensorflow.contrib.slim.python.slim.nets中已经搭建好了经典卷积神经网络:

alexnet\vgg\inception_v1_v2_v3\resnet

下面,具体利用tensorflow实现GoogleNet V3网络结构:

# -*- coding:utf-8 -*-

"""

@author:Lisa

@file:gooleNet_V3.py

@note:from

@time:2018/6/26 0026下午 8:12

"""

import tensorflow as tf

from datetime import datetime

import time

import math

slim=tf.contrib.slim

#产生截断的正态分布

trunc_normal =lambda stddev:tf.truncated_normal_initializer(0.0,stddev)

parameters =[] #储存参数

#why?为什么要定义这个函数?

#因为若事先定义好slim.conv2d各种默认参数,包括激活函数、标准化器,后面定义卷积层将会非常容易:

# 1.代码整体美观

# 2.网络设计的工作量会大大减轻

def inception_v3_arg_scope(weight_decay=0.00004,

stddev=0.1,

batch_norm_var_collection='moving_vars'):

"""

#定义inception_v3_arg_scope(),

#用来生成网络中经常用到的函数的默认参数(卷积的激活函数、权重初始化方式、标准化器等)

:param weight_decay: 权值衰减系数

:param stddev: 标准差

:param batch_norm_var_collection:

:return:

"""

batch_norm_params={

'decay':0.9997, #衰减系数decay

'epsilon':0.001, #极小值

'updates_collections':tf.GraphKeys.UPDATE_OPS,

'variables_collections':{

'beta':None,

'gamma':None,

'moving_mean':[batch_norm_var_collection],

'moving_variance':[batch_norm_var_collection],

}

}

with slim.arg_scope([slim.conv2d,slim.fully_connected],

weights_regularizer=slim.l2_regularizer(weight_decay)):

"""

slim.arg_scope()是一个非常有用的工具,可以给函数的参数自动赋予某些默认值

例如:

slim.arg_scope([slim.conv2d,slim.fully_connected],weights_regularizer=slim.l2_regularizer(weight_decay)):

会对[slim.conv2d,slim.fully_connected]这两个函数的参数自动赋值,

将参数weights_regularizer的默认值设为slim.l2_regularizer(weight_decay)

备注:使用了slim.arg_scope后就不需要每次重复设置参数,只需在修改时设置即可。

"""

# 设置默认值:对slim.conv2d函数的几个参数赋予默认值

with slim.arg_scope(

[slim.conv2d],

weights_initializer=tf.truncated_normal_initializer(stddev=stddev), #权重初始化

activation_fn=tf.nn.relu, #激励函数

normalizer_fn=slim.batch_norm, #标准化器

normalizer_params=batch_norm_params ) as sc: #normalizer_params标准化器的参数

return sc #返回定义好的scope

def inception_V3_base(input,scope=None):

end_points= {}

# 第一部分--基础部分:卷积和池化交错

with tf.variable_scope(scope,'inception_V3',[input]):

with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],

stride=1,padding='VALID'):

net1=slim.conv2d(input,32,[3,3],stride=2,scope='conv2d_1a_3x3')

net2 = slim.conv2d(net1, 32, [3, 3],scope='conv2d_2a_3x3')

net3 = slim.conv2d(net2, 64, [3, 3], padding='SAME',

scope='conv2d_2b_3x3')

net4=slim.max_pool2d(net3,[3,3],stride=2,scope='maxPool_3a_3x3')

net5 = slim.conv2d(net4, 80, [1, 1], scope='conv2d_4a_3x3')

net6 = slim.conv2d(net5, 192, [3, 3], padding='SAME',

scope='conv2d_4b_3x3')

net = slim.max_pool2d(net6, [3, 3], stride=2, scope='maxPool_5a_3x3')

#第二部分--Inception模块组:inception_1\inception_2\inception_2

with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],

stride=1,padding='SAME'):

#inception_1:第一个模块组(共含3个inception_module)

#inception_1_m1: 第一组的1号module

with tf.variable_scope('inception_1_m1'):

with tf.variable_scope('Branch_0'):

branch_0=slim.conv2d(net,64,[1,1],scope='conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch1_1 = slim.conv2d(net, 48, [1, 1], scope='conv2d_1a_1x1')

branch1_2 = slim.conv2d(branch1_1, 64, [5, 5],

scope='conv2d_1b_5x5')

with tf.variable_scope('Branch_2'):

branch2_1 = slim.conv2d(net, 64, [1, 1], scope='conv2d_2a_1x1')

branch2_2 = slim.conv2d(branch2_1, 96, [3, 3],

scope='conv2d_2b_3x3')

branch2_3 = slim.conv2d(branch2_2, 96, [3, 3],

scope='conv2d_2c_3x3')

with tf.variable_scope('Branch_3'):

branch3_1 = slim.avg_pool2d(net, [3, 3], scope='avgPool_3a_3x3')

branch3_2 = slim.conv2d(branch3_1, 32, [1, 1],

scope='conv2d_3b_1x1')

#使用concat将4个分支的输出合并到一起(在第三个维度合并,即输出通道上合并)

net=tf.concat([branch_0,branch1_2,branch2_3,branch3_2],3)

# inception_1_m2: 第一组的 2号module

with tf.variable_scope('inception_1_m2'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 64, [1, 1], scope='conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch1_1 = slim.conv2d(net, 48, [1, 1], scope='conv2d_1a_1x1')

branch1_2 = slim.conv2d(branch1_1, 64, [5, 5],

scope='conv2d_1b_5x5')

with tf.variable_scope('Branch_2'):

branch2_1 = slim.conv2d(net, 64, [1, 1], scope='conv2d_2a_1x1')

branch2_2 = slim.conv2d(branch2_1, 96, [3, 3],

scope='conv2d_2b_3x3')

branch2_3 = slim.conv2d(branch2_2, 96, [3, 3],

scope='conv2d_2c_3x3')

with tf.variable_scope('Branch_3'):

branch3_1 = slim.avg_pool2d(net, [3, 3], scope='avgPool_3a_3x3')

branch3_2 = slim.conv2d(branch3_1, 64, [1, 1],

scope='conv2d_3b_1x1')

# 使用concat将4个分支的输出合并到一起(在第三个维度合并,即输出通道上合并)

net = tf.concat([branch_0, branch1_2, branch2_3, branch3_2], 3)

# inception_1_m2: 第一组的 3号module

with tf.variable_scope('inception_1_m3'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 64, [1, 1], scope='conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch1_1 = slim.conv2d(net, 48, [1, 1], scope='conv2d_1a_1x1')

branch1_2 = slim.conv2d(branch1_1, 64, [5, 5],

scope='conv2d_1b_5x5')

with tf.variable_scope('Branch_2'):

branch2_1 = slim.conv2d(net, 64, [1, 1], scope='conv2d_2a_1x1')

branch2_2 = slim.conv2d(branch2_1, 96, [3, 3],

scope='conv2d_2b_3x3')

branch2_3 = slim.conv2d(branch2_2, 96, [3, 3],

scope='conv2d_2c_3x3')

with tf.variable_scope('Branch_3'):

branch3_1 = slim.avg_pool2d(net, [3, 3], scope='avgPool_3a_3x3')

branch3_2 = slim.conv2d(branch3_1, 64, [1, 1],

scope='conv2d_3b_1x1')

# 使用concat将4个分支的输出合并到一起(在第三个维度合并,即输出通道上合并)

net = tf.concat([branch_0, branch1_2, branch2_3, branch3_2], 3)

#inception_2:第2个模块组(共含5个inception_module)

# inception_2_m1: 第2组的 1号module

with tf.variable_scope('inception_2_m1'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 384, [3, 3],stride=2,

padding='VALID',scope='conv2d_0a_3x3')

with tf.variable_scope('Branch_1'):

branch1_1 = slim.conv2d(net, 64, [1, 1], scope='conv2d_1a_1x1')

branch1_2 = slim.conv2d(branch1_1, 96, [3, 3],

scope='conv2d_1b_3x3')

branch1_3 = slim.conv2d(branch1_2, 96, [3, 3],

stride=2,

padding='VALID',

scope='conv2d_1c_3x3')

with tf.variable_scope('Branch_2'):

branch2_1 = slim.max_pool2d(net, [3, 3],

stride=2,

padding='VALID',

scope='maxPool_2a_3x3')

# 使用concat将4个分支的输出合并到一起(在第三个维度合并,即输出通道上合并)

net = tf.concat([branch_0, branch1_3, branch2_1], 3)

# inception_2_m2: 第2组的 2号module

with tf.variable_scope('inception_2_m2'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 192, [1, 1],scope='conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch1_1 = slim.conv2d(net, 128, [1, 1], scope='conv2d_1a_1x1')

branch1_2 = slim.conv2d(branch1_1, 128, [1, 7],

scope='conv2d_1b_1x7')

branch1_3 = slim.conv2d(branch1_2, 128, [7, 1],

scope='conv2d_1c_7x1')

with tf.variable_scope('Branch_2'):

branch2_1 = slim.conv2d(net, 128, [1, 1], scope='conv2d_2a_1x1')

branch2_2 = slim.conv2d(branch2_1, 128, [7, 1],

scope='conv2d_2b_7x1')

branch2_3 = slim.conv2d(branch2_2, 128, [1, 7],

scope='conv2d_2c_1x7')

branch2_4 = slim.conv2d(branch2_3, 128, [7, 1],

scope='conv2d_2d_7x1')

branch2_5 = slim.conv2d(branch2_4, 128, [1, 7],

scope='conv2d_2e_1x7')

with tf.variable_scope('Branch_3'):

branch3_1 = slim.avg_pool2d(net, [3, 3], scope='avgPool_3a_3x3')

branch3_2 = slim.conv2d(branch3_1, 192, [1, 1],

scope='conv2d_3b_1x1')

# 使用concat将4个分支的输出合并到一起(在第三个维度合并,即输出通道上合并)

net = tf.concat([branch_0, branch1_3, branch2_5,branch3_2], 3)

# inception_2_m3: 第2组的 3号module

with tf.variable_scope('inception_2_m3'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 192, [1, 1],scope='conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch1_1 = slim.conv2d(net, 160, [1, 1], scope='conv2d_1a_1x1')

branch1_2 = slim.conv2d(branch1_1, 160, [1, 7],

scope='conv2d_1b_1x7')

branch1_3 = slim.conv2d(branch1_2, 192, [7, 1],

scope='conv2d_1c_7x1')

with tf.variable_scope('Branch_2'):

branch2_1 = slim.conv2d(net, 160, [1, 1], scope='conv2d_2a_1x1')

branch2_2 = slim.conv2d(branch2_1, 160, [7, 1],

scope='conv2d_2b_7x1')

branch2_3 = slim.conv2d(branch2_2, 160, [1, 7],

scope='conv2d_2c_1x7')

branch2_4 = slim.conv2d(branch2_3, 160, [7, 1],

scope='conv2d_2d_7x1')

branch2_5 = slim.conv2d(branch2_4, 192, [1, 7],

scope='conv2d_2e_1x7')

with tf.variable_scope('Branch_3'):

branch3_1 = slim.avg_pool2d(net, [3, 3], scope='avgPool_3a_3x3')

branch3_2 = slim.conv2d(branch3_1, 192, [1, 1],

scope='conv2d_3b_1x1')

# 使用concat将4个分支的输出合并到一起(在第三个维度合并,即输出通道上合并)

net = tf.concat([branch_0, branch1_3, branch2_5,branch3_2], 3)

# inception_2_m4: 第2组的 4号module

with tf.variable_scope('inception_2_m4'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 192, [1, 1],scope='conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch1_1 = slim.conv2d(net, 160, [1, 1], scope='conv2d_1a_1x1')

branch1_2 = slim.conv2d(branch1_1, 160, [1, 7],

scope='conv2d_1b_1x7')

branch1_3 = slim.conv2d(branch1_2, 192, [7, 1],

scope='conv2d_1c_7x1')

with tf.variable_scope('Branch_2'):

branch2_1 = slim.conv2d(net, 160, [1, 1], scope='conv2d_2a_1x1')

branch2_2 = slim.conv2d(branch2_1, 160, [7, 1],

scope='conv2d_2b_7x1')

branch2_3 = slim.conv2d(branch2_2, 160, [1, 7],

scope='conv2d_2c_1x7')

branch2_4 = slim.conv2d(branch2_3, 160, [7, 1],

scope='conv2d_2d_7x1')

branch2_5 = slim.conv2d(branch2_4, 192, [1, 7],

scope='conv2d_2e_1x7')

with tf.variable_scope('Branch_3'):

branch3_1 = slim.avg_pool2d(net, [3, 3], scope='avgPool_3a_3x3')

branch3_2 = slim.conv2d(branch3_1, 192, [1, 1],

scope='conv2d_3b_1x1')

# 使用concat将4个分支的输出合并到一起(在第三个维度合并,即输出通道上合并)

net = tf.concat([branch_0, branch1_3, branch2_5,branch3_2], 3)

# inception_2_m5: 第2组的 5号module

with tf.variable_scope('inception_2_m5'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 192, [1, 1],scope='conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch1_1 = slim.conv2d(net, 160, [1, 1], scope='conv2d_1a_1x1')

branch1_2 = slim.conv2d(branch1_1, 160, [1, 7],

scope='conv2d_1b_1x7')

branch1_3 = slim.conv2d(branch1_2, 192, [7, 1],

scope='conv2d_1c_7x1')

with tf.variable_scope('Branch_2'):

branch2_1 = slim.conv2d(net, 160, [1, 1], scope='conv2d_2a_1x1')

branch2_2 = slim.conv2d(branch2_1, 160, [7, 1],

scope='conv2d_2b_7x1')

branch2_3 = slim.conv2d(branch2_2, 160, [1, 7],

scope='conv2d_2c_1x7')

branch2_4 = slim.conv2d(branch2_3, 160, [7, 1],

scope='conv2d_2d_7x1')

branch2_5 = slim.conv2d(branch2_4, 192, [1, 7],

scope='conv2d_2e_1x7')

with tf.variable_scope('Branch_3'):

branch3_1 = slim.avg_pool2d(net, [3, 3], scope='avgPool_3a_3x3')

branch3_2 = slim.conv2d(branch3_1, 192, [1, 1],

scope='conv2d_3b_1x1')

# 使用concat将4个分支的输出合并到一起(在第三个维度合并,即输出通道上合并)

net = tf.concat([branch_0, branch1_3, branch2_5,branch3_2], 3)

#将inception_2_m5存储到end_points中,作为Auxiliary Classifier辅助模型的分类

end_points['inception_2_m5']=net

# 第3组

# inception_3_m1: 第3组的 1号module

with tf.variable_scope('inception_3_m1'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 192, [1, 1],scope='conv2d_0a_1x1')

branch_0 = slim.conv2d(branch_0,320, [3, 3],

stride=2,

padding='VALID',

scope='conv2d_0b_3x3')

with tf.variable_scope('Branch_1'):

branch1_1 = slim.conv2d(net, 192, [1, 1], scope='conv2d_1a_1x1')

branch1_2 = slim.conv2d(branch1_1, 192, [1, 7],

scope='conv2d_1b_1x7')

branch1_3 = slim.conv2d(branch1_2, 192, [7, 1],

scope='conv2d_1c_7x1')

branch1_4 = slim.conv2d(branch1_3, 192, [3, 3],

stride=2,

padding='VALID',

scope='conv2d_1c_3x3')

with tf.variable_scope('Branch_2'):

branch2_1 = slim.max_pool2d(net, [3, 3],

stride=2,

padding='VALID',

scope='maxPool_3a_3x3')

# 使用concat将4个分支的输出合并到一起(在第三个维度合并,即输出通道上合并)

net = tf.concat([branch_0, branch1_4, branch2_1], 3)

# inception_3_m2: 第3组的 2号module

with tf.variable_scope('inception_3_m2'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 320, [1, 1],scope='conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch1_1 = slim.conv2d(net, 384, [1, 1], scope='conv2d_1a_1x1')

#特殊

branch1_2 = tf.concat([

slim.conv2d(branch1_1, 384, [1, 3], scope='conv2d_1a_1x3'),

slim.conv2d(branch1_1, 384, [3, 1], scope='conv2d_1a_3x1')

], 3)

with tf.variable_scope('Branch_2'):

branch2_1 = slim.conv2d(net, 488, [1, 1], scope='conv2d_2a_1x1')

branch2_2 = slim.conv2d(branch2_1, 384, [3, 3],

scope='conv2d_2b_3x3')

branch2_3 = tf.concat([

slim.conv2d(branch2_2, 384, [1, 3], scope='conv2d_1a_1x3'),

slim.conv2d(branch2_2, 384, [3, 1], scope='conv2d_1a_3x1')

], 3)

with tf.variable_scope('Branch_3'):

branch3_1 = slim.avg_pool2d(net, [3, 3], scope='avgPool_3a_3x3')

branch3_2 = slim.conv2d(branch3_1, 192, [1, 1],

scope='conv2d_3b_1x1')

# 使用concat将4个分支的输出合并到一起(在第三个维度合并,即输出通道上合并)

net = tf.concat([branch_0, branch1_2, branch2_3,branch3_2], 3)

# inception_3_m3: 第3组的 3号module

with tf.variable_scope('inception_3_m3'):

with tf.variable_scope('Branch_0'):

branch_0 = slim.conv2d(net, 320, [1, 1],scope='conv2d_0a_1x1')

with tf.variable_scope('Branch_1'):

branch1_1 = slim.conv2d(net, 384, [1, 1], scope='conv2d_1a_1x1')

#特殊

branch1_2 = tf.concat([

slim.conv2d(branch1_1, 384, [1, 3], scope='conv2d_1a_1x3'),

slim.conv2d(branch1_1, 384, [3, 1], scope='conv2d_1a_3x1')

], 3)

with tf.variable_scope('Branch_2'):

branch2_1 = slim.conv2d(net, 488, [1, 1], scope='conv2d_2a_1x1')

branch2_2 = slim.conv2d(branch2_1, 384, [3, 3],

scope='conv2d_2b_3x3')

branch2_3 = tf.concat([

slim.conv2d(branch2_2, 384, [1, 3], scope='conv2d_1a_1x3'),

slim.conv2d(branch2_2, 384, [3, 1], scope='conv2d_1a_3x1')

], 3)

with tf.variable_scope('Branch_3'):

branch3_1 = slim.avg_pool2d(net, [3, 3], scope='avgPool_3a_3x3')

branch3_2 = slim.conv2d(branch3_1, 192, [1, 1],

scope='conv2d_3b_1x1')

# 使用concat将4个分支的输出合并到一起(在第三个维度合并,即输出通道上合并)

net = tf.concat([branch_0, branch1_2, branch2_3,branch3_2], 3)

return net,end_points

############################## 卷积部分完成 ########################################

#第三部分:全局平均池化、softmax、Auxiliary Logits

def inception_v3(input,

num_classes=1000,

is_training=True,

dropout_keep_prob=0.8,

prediction_fn=slim.softmax,

spatial_squeeze=True,

reuse=None,

scope='inceptionV3'):

with tf.variable_scope(scope,'inceptionV3',[input,num_classes],

reuse=reuse) as scope:

with slim.arg_scope([slim.batch_norm,slim.dropout],

is_training=is_training):

net,end_points=inception_V3_base(input,scope=scope)

#Auxiliary Logits

with slim.arg_scope([slim.conv2d,slim.max_pool2d,slim.avg_pool2d],

stride=1,padding='SAME'):

aux_logits=end_points['inception_2_m5']

with tf.variable_scope('Auxiliary_Logits'):

aux_logits=slim.avg_pool2d(

aux_logits,[5,5],stride=3,padding='VALID',

scope='AvgPool_1a_5x5' )

aux_logits=slim.conv2d(aux_logits,128,[1,1],

scope='conv2d_1b_1x1')

aux_logits=slim.conv2d(aux_logits,768,[5,5],

weights_initializer=trunc_normal(0.01),

padding='VALID',

scope='conv2d_2a_5x5')

aux_logits = slim.conv2d(aux_logits, num_classes, [1, 1],

activation_fn=None,

normalizer_fn=None,

weights_initializer=trunc_normal(0.001),

scope='conv2d_2b_1x1')

if spatial_squeeze:

aux_logits =tf.squeeze(aux_logits,[1,2],name='SpatialSqueeze')

end_points['Auxiliary_Logits']=aux_logits

with tf.variable_scope('Logits'):

net=slim.avg_pool2d(net,[8,8],padding='VALID',

scope='avgPool_1a_8x8')

net=slim.dropout(net,keep_prob=dropout_keep_prob,

scope='dropout_1b')

end_points['PreLogits']=net

logits=slim.conv2d(net,num_classes,[1,1],activation_fn=None,

normalizer_fn=None,

scope='conv2d_1c_1x1')

if spatial_squeeze:

logits=tf.squeeze(logits,[1,2],name='SpatialSqueeze')

end_points['Logits']=logits

end_points['Predictions']=prediction_fn(logits,scope='Predictions')

return logits,end_points

########################### 构建完成

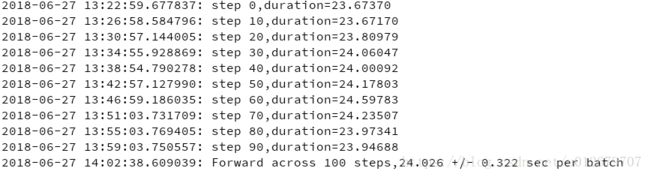

def time_compute(session, target, info_string):

num_batch = 100 #100

num_step_burn_in = 10 # 预热轮数,头几轮迭代有显存加载、cache命中等问题可以因此跳过

total_duration = 0.0 # 总时间

total_duration_squared = 0.0

for i in range(num_batch + num_step_burn_in):

start_time = time.time()

_ = session.run(target )

duration = time.time() - start_time

if i >= num_step_burn_in:

if i % 10 == 0: # 每迭代10次显示一次duration

print("%s: step %d,duration=%.5f " % (datetime.now(), i - num_step_burn_in, duration))

total_duration += duration

total_duration_squared += duration * duration

time_mean = total_duration / num_batch

time_variance = total_duration_squared / num_batch - time_mean * time_mean

time_stddev = math.sqrt(time_variance)

# 迭代完成,输出

print("%s: %s across %d steps,%.3f +/- %.3f sec per batch " %

(datetime.now(), info_string, num_batch, time_mean, time_stddev))

def main():

with tf.Graph().as_default():

batch_size=32

height,weight=299,299

input=tf.random_uniform( (batch_size,height,weight,3) )

with slim.arg_scope(inception_v3_arg_scope()):

logits,end_points=inception_v3(input,is_training=False)

init=tf.global_variables_initializer()

sess=tf.Session()

# 将网络结构图写到文件中

writer = tf.summary.FileWriter('logs/', sess.graph)

sess.run(init)

num_batches=100

time_compute(sess,logits,'Forward')

if __name__=='__main__':

main()

运行结果:

------------------------------------------------------ END ----------------------------------------------------------

参考:

《Going deeper with convolutions》

《tensorflow实战》 黄文坚