使用Elasticsearch做向量空间内的相似性搜索

文章目录

- 什么是Word Embeddings

- 索引`Word Embeddings`

- 评分的余弦相似度

- 局限性

- 通过抽象属性搜索

Elasticsearch做文本检索是基于文本之间的相似性的。在Elasticsearch 5.0中,Elasticsearch将默认算法由TF / IDF切换为Okapi BM25,该算法用于对与查询相关的结果进行评分。对Elasticsearch中的 TF / IDF 和 Okapi BM25感兴趣的可以直接查看Elastic的官方博客。(简单的说,TF/IDF和BM25的本质区别在于,TF/IDF是一个向量空间模型,而BM25是一个概率模型,他们的简单对比可以查看这个 文章)

TF/IDF中是一个向量空间模型,它将查询的每个term都视为向量模型的一个维度。通过该模型可将查询定义为一个向量,而Elasticsearch存储的文档定义另一个。然后,将两个向量的标量积视为文档与查询的相关性。标量积越大,相关性越高。而BM25属于概率模型,但是,它的公式和TF/IDF并没有您所期望的那么大。两种模型都将每个term的权重定义为一些idf函数和某些tf函数的乘积,然后将其作为整个文档对给定查询的分数(相关度)汇总。

但是,如果我们想根据更抽象的内容(例如单词的含义或写作风格)来查找类似的文档,该怎么办?这是Elasticsearch的密集矢量字段数据类型(dense vector)和矢量字段的script-score queries发挥作用的地方。

什么是Word Embeddings

因为计算机不认识字符串,所以我们需要将文字转换为数字。Word Embedding就是来完成这样的工作。 其定义是:A Word Embedding format generally tries to map a word using a dictionary to a vector。

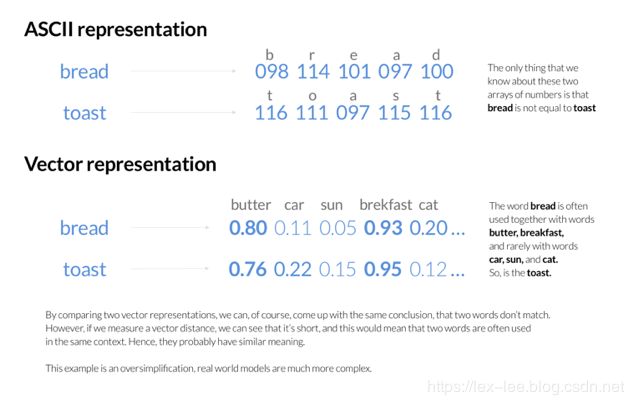

如下图,计算机用ASC码来识别bread(面包)和toast(吐司),从ASC II码上,这两个单词没有任何的相关性,但如果我们将它们在大量的语料中的上下文关系作为向量维度进行分析,就可以看到,他们通常都和butter(黄油)、breakfast(早餐)等单词同时出现。

或许,我们的搜索空间里面没有面包这个词出现,但如果用户搜索了面包,我们可以尝试给他同时返回吐司相关的信息,这些或许会对用户有用

因此,如上图,我们把beard和toast映射为:

bread [0.80 0.11 .0.05 0.93 0.20 ...]

toast [0.76 0.22 0.15 0.95 0.12 ...]

对应的向量数组,即为bread和toast的 Word Embeddings。当然,Word Embeddings的生成可以采用不同的算法,这里不做详述。

索引Word Embeddings

Word Embeddings是词的矢量表示,通常用于自然语言处理任务,例如文本分类或情感分析。相似的词倾向于在相似的上下文中出现。Word Embeddings将出现在相似上下文中的单词映射为具有相似值的矢量表示。这样,单词的语义被一定程度上得以保留。

为了演示矢量场的使用,我们将经过预训练的GloVe 的 Word Embeddings导入到Elasticsearch中。该glove.6B.50d.txt文件将词汇表的400000个单词中的每一个映射到50维向量。摘录如下所示。

public 0.034236 0.50591 -0.19488 -0.26424 -0.269 -0.0024169 -0.42642 -0.29695 0.21507 -0.0053071 -0.6861 -0.2125 0.24388 -0.45197 0.072675 -0.12681 -0.36037 0.12668 0.38054 -0.43214 1.1571 0.51524 -0.50795 -0.18806 -0.16628 -2.035 -0.023095 -0.043807 -0.33862 0.22944 3.4413 0.58809 0.15753 -1.7452 -0.81105 0.04273 0.19056 -0.28506 0.13358 -0.094805 -0.17632 0.076961 -0.19293 0.71098 -0.19331 0.019016 -1.2177 0.3962 0.52807 0.33352

early 0.35948 -0.16637 -0.30288 -0.55095 -0.49135 0.048866 -1.6003 0.19451 -0.80288 0.157 0.14782 -0.45813 -0.30852 0.03055 0.38079 0.16768 -0.74477 -0.88759 -1.1255 0.28654 0.37413 -0.053585 0.019005 -0.30474 0.30998 -1.3004 -0.56797 -0.50119 0.031763 0.58832 3.692 -0.56015 -0.043986 -0.4513 0.49902 -0.13698 0.033691 0.40458 -0.16825 0.033614 -0.66019 -0.070503 -0.39145 -0.11031 0.27384 0.25301 0.3471 -0.31089 -0.32557

为了导入这些映射,我们创建了一个words索引,并在索引mapping中指定dense_vector为vector字段的类型。然后,我们遍历GloVe文件中的行,并将单词和向量分批批量插入该索引中。之后,例如可以使用GET请求检索“ early”一词(/words/_doc/early):

{

"_index": "words",

"_type": "_doc",

"_id": "early",

"_version": 15,

"_seq_no": 503319,

"_primary_term": 2,

"found": true,

"_source": {

"word": "early",

"vector": [0.35948,-0.16637,-0.30288,-0.55095,-0.49135,0.048866,-1.6003,0.19451,-0.80288,0.157,0.14782,-0.45813,-0.30852,0.03055,0.38079,0.16768,-0.74477,-0.88759,-1.1255,0.28654,0.37413,-0.053585,0.019005,-0.30474,0.30998,-1.3004,-0.56797,-0.50119,0.031763,0.58832,3.692,-0.56015,-0.043986,-0.4513,0.49902,-0.13698,0.033691,0.40458,-0.16825,0.033614,-0.66019,-0.070503,-0.39145,-0.11031,0.27384,0.25301,0.3471,-0.31089,-0.32557,-0.51921]

}

}

评分的余弦相似度

对于 GloVe Word Embeddings,两个词向量之间的余弦相似性可以揭示相应词的语义相似性。从Elasticsearch 7.2开始,余弦相似度可作为预定义函数使用,可用于文档评分。

要查找与表示形式相似的单词,[0.1, 0.2, -0.3]我们可以向发送POST请求/words/_search,在此我们将预定义cosineSimilarity函数与查询向量和存储文档的向量值一起用作函数自变量,以计算文档分数。请注意,由于分数不能为负,因此我们需要在函数的结果上添加1.0。

{

"size": 1,

"query": {

"script_score": {

"query": {

"match_all": {}

},

"script": {

"source": "cosineSimilarity(params.queryVector, doc['vector'])+1.0",

"params": {

"queryVector": [0.1, 0.2, -0.3]

}

}

}

}

}

结果,我们得到单词“ rites”的分数约为1.5:

{

"took": 103,

"timed_out": false,

"hits": {

"total": {

"value": 10000,

"relation": "gte"

},

"max_score": 1.5047596,

"hits": [

{

"_index": "words",

"_type": "_doc",

"_id": "rites",

"_score": 1.5047596,

"_source": {

"word": "rites",

"vector": [0.82594,0.55036,-2.5851,-0.52783,0.96654,0.55221,0.28173,0.15945,0.33305,0.41263,0.29314,0.1444,1.1311,0.0053411,0.35198,0.094642,-0.89222,-0.85773,0.044799,0.59454,0.26779,0.044897,-0.10393,-0.21768,-0.049958,-0.018437,-0.63575,-0.72981,-0.23786,-0.30149,1.2795,0.22047,-0.55406,0.0038758,-0.055598,0.41379,0.85904,-0.62734,-0.17855,1.7538,-0.78475,-0.52078,-0.88765,1.3897,0.58374,0.16477,-0.15503,-0.11889,-0.66121,-1.108]

}

}

]

}

}

为了更加容易探索Word Embeddings,我们使用Spring Shell在Kotlin中构建了一个包装器。它接受一个单词作为输入,从索引中检索相应的向量,然后执行script_score查询以显示相关度最高结果:

shell:>similar --to "cat"

{"word":"dog","score":1.9218005}

{"word":"rabbit","score":1.8487821}

{"word":"monkey","score":1.8041081}

{"word":"rat","score":1.7891964}

{"word":"cats","score":1.786527}

{"word":"snake","score":1.779891}

{"word":"dogs","score":1.7795815}

{"word":"pet","score":1.7792249}

{"word":"mouse","score":1.7731668}

{"word":"bite","score":1.77288}

{"word":"shark","score":1.7655175}

{"word":"puppy","score":1.76256}

{"word":"monster","score":1.7619764}

{"word":"spider","score":1.7521701}

{"word":"beast","score":1.7520056}

{"word":"crocodile","score":1.7498653}

{"word":"baby","score":1.7463862}

{"word":"pig","score":1.7445586}

{"word":"frog","score":1.7426511}

{"word":"bug","score":1.7365949}

该项目代码可以在github.com/schocco/es-vectorsearch上找到。

局限性

目前 dense vector 数据类型还是experimental状态。其存储的向量不应超过1024维(Elasticsearch <7.2 的维度限制是500)。

直接使用余弦相似度来计算文档评分是相对昂贵的,应与filter一起使用以限制需要计算分数的文档数量。对于大规模的向量相似性搜索,您可能需要研究更具体的项目,例如FAISS库,以“使用GPU进行十亿规模的相似性搜索”。

通过抽象属性搜索

我们使用Word Embeddings来证明如何使用Elasticsearch来做向量空间的相似性,但相同的概念也应适用于其他领域。我们可以将图片映射到对图片样式进行编码的向量表示中,以搜索以相似样式绘制的图片:

通过余弦相似性去进行向量搜索:

如果我们可以将诸如口味或样式之类的抽象属性映射到向量空间,那么我们还可以搜索与给定查询项共享该抽象属性的新食谱,服装或家具。

由于机器学习的最新进展,许多易于使用的开源库都支持创建特定于域的Embeddings,而Elasticsearch中对向量字段的script score query支持,让我们能轻松实现特定于域的相似性搜索的又一步。