Interspeech 2017 | Far-field Speech Recognition Technology

Starting on October 25, 2017, the Alibaba iDST Voice Team and the Alibaba Cloud Community have been holding a series of information-sharing sessions on voice technology to share the technological progress reported at Interspeech 2017.

Let us now take a look at the topic discussed in this session: far-field speech recognition technology.

1. Introduction to Far-field Speech Recognition Technology

1.1. What is far-field speech recognition?

Far-field speech recognition is an essential technology for speech interaction. It aims to enable smart devices to recognize speech from a distant talker, usually 1-10 m away. It is used in many scenarios, such as smart home appliances (smart speakers, smart TVs), meeting transcription, and in-car navigation. A microphone array is often used to capture speech signals for far-field speech recognition. However, real environments contain background noise, multipath reflection, reverberation, and even interfering human voices, all of which degrade the quality of the captured signal. As a result, the accuracy of far-field speech recognition is generally significantly lower than that of near-field speech recognition.

1.2. Modules of Far-field Speech Recognition System

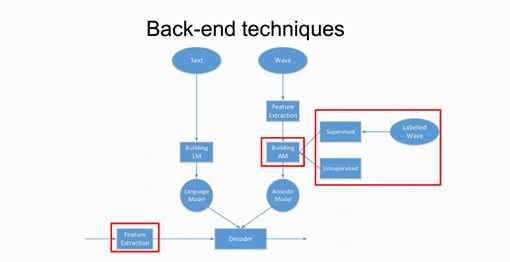

A far-field speech recognition system usually consists of front-end signal processing and back-end speech recognition modules. The front-end module aims to "cleanse" the speech of noise and reverberation using various speech enhancement techniques such as dereverberation and beamforming. The back-end module is similar to an ordinary speech recognition system, and aims to recognize and convert the "cleansed" speech into text.

1.2.1. Front-end Signal Processing Module

Far-field speech usually contains noticeable reverberation. Reverberation is the persistence of a sound after it is produced. As sound travels from the source through the air, it is reflected and scattered many times by the surfaces of objects in the room. The direct sound and the reflected sound therefore reach the listener in succession. Generally, reflections that arrive less than 50-80 ms after the direct sound do not noticeably affect speech recognition accuracy. Reflections with a very long delay also have little effect, because their energy has largely decayed. Late reverberation is the main cause of reduced recognition accuracy: the stronger the late reverberation, the lower the accuracy. Weighted prediction error (WPE) is a typical dereverberation method.
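The clarity index C50 makes the 50 ms boundary between early reflections and late reverberation concrete. C50 is a standard room-acoustics measure; this article does not use it. It compares the energy that arrives within 50 ms of the direct sound with the energy that arrives afterwards.

The sketch below is a minimal illustration under the following assumptions:

- The room impulse response (RIR) is a toy one: a unit direct path followed by exponentially decaying random reflections.
- The decay rate is set by the reverberation time RT60.
- The helper names `synthetic_rir` and `clarity_c50` are hypothetical.

```python
import math
import random


def synthetic_rir(rt60, fs=16000, length_s=0.5, seed=0):
    """Hypothetical toy RIR: unit direct path plus exponentially decaying
    random reflections whose energy falls by 60 dB after rt60 seconds."""
    rng = random.Random(seed)
    n = int(length_s * fs)
    # 20*log10(exp(-a*rt60)) = -60  ->  a = 3*ln(10)/rt60
    a = 3.0 * math.log(10.0) / rt60
    rir = [rng.gauss(0.0, 0.1) * math.exp(-a * i / fs) for i in range(n)]
    rir[0] = 1.0  # direct sound at t = 0
    return rir


def clarity_c50(rir, fs=16000, early_ms=50.0):
    """C50 in dB: energy within early_ms after the direct sound vs. the rest."""
    direct = max(range(len(rir)), key=lambda i: abs(rir[i]))
    split = direct + int(early_ms * 1e-3 * fs)
    early = sum(x * x for x in rir[direct:split])
    late = sum(x * x for x in rir[split:])
    return 10.0 * math.log10(early / late)


for rt60 in (0.3, 0.6, 1.0):
    print(f"RT60={rt60:.1f}s  C50={clarity_c50(synthetic_rir(rt60)):.1f} dB")
```

Longer reverberation times push more energy past the 50 ms boundary, so C50 drops. This mirrors the observation that stronger late reverberation lowers recognition accuracy.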

Beamforming is another typical front-end signal processing method. The direction of arrival (DOA) of the sound source is estimated by comparing the times at which the sound reaches the different microphones, given the known distances between them. Once the position of the target sound is known, spatial filtering and other audio signal processing methods can be used to suppress noise and interference and improve signal quality. Typical beamforming methods include delay-and-sum (DS) and minimum variance distortionless response (MVDR).
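The following minimal narrowband sketch, in standard-library Python, makes the DOA and beamforming steps concrete. It assumes a far-field plane wave, a uniform linear array, a single frequency bin, and a speed of sound of 343 m/s. The array geometry, interferer direction, and noise covariance in the usage lines are hypothetical.

- `doa_from_tdoa` converts a time difference of arrival between two microphones into an angle.
- Delay-and-sum (DS) averages the phase-aligned channels.
- MVDR uses the noise covariance R to compute w = R^-1 d / (d^H R^-1 d). This keeps the target direction undistorted while minimizing output noise power.

```python
import cmath
import math

C = 343.0  # speed of sound in m/s (assumed)


def doa_from_tdoa(tau, spacing):
    """Far-field direction of arrival (radians from broadside) for a mic pair."""
    return math.asin(max(-1.0, min(1.0, C * tau / spacing)))


def steering_vector(theta, n_mics, spacing, freq):
    """Narrowband steering vector of a uniform linear array (mic 0 = reference)."""
    return [cmath.exp(-2j * math.pi * freq * m * spacing * math.sin(theta) / C)
            for m in range(n_mics)]


def solve(a, b):
    """Solve a @ x = b for a small complex system (Gauss-Jordan, partial pivoting)."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ds_weights(d):
    """Delay-and-sum: phase-align every channel to the target, then average."""
    return [x / len(d) for x in d]


def mvdr_weights(d, noise_cov):
    """MVDR: w = R^-1 d / (d^H R^-1 d), unit gain toward d, minimum noise power."""
    r_inv_d = solve(noise_cov, d)
    denom = sum(x.conjugate() * y for x, y in zip(d, r_inv_d))
    return [x / denom for x in r_inv_d]


def response(w, d):
    """Beamformer output w^H d for a unit plane wave with steering vector d."""
    return sum(wi.conjugate() * di for wi, di in zip(w, d))


# Hypothetical setup: 4 mics, 5 cm spacing, 1 kHz bin, interferer at -40 degrees.
mics, spacing, freq = 4, 0.05, 1000.0
target = doa_from_tdoa(tau=5e-5, spacing=spacing)  # about 20 degrees
d = steering_vector(target, mics, spacing, freq)
interf = steering_vector(math.radians(-40.0), mics, spacing, freq)
R = [[10.0 * interf[i] * interf[j].conjugate() + (0.1 if i == j else 0.0)
      for j in range(mics)] for i in range(mics)]
w_ds, w_mvdr = ds_weights(d), mvdr_weights(d, R)
print("target gain:", abs(response(w_ds, d)), abs(response(w_mvdr, d)))
print("interferer gain:", abs(response(w_ds, interf)), abs(response(w_mvdr, interf)))
```

Both beamformers pass the target direction with unit gain, up to rounding. MVDR also places a deep null toward the interferer, whereas DS only attenuates it according to the array's fixed beam pattern.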

In recent years, speech enhancement based on deep neural networks (NNs) has developed rapidly. In NN-based speech enhancement, the input is usually noisy speech, and the network's powerful nonlinear modelling capability is used to estimate the "cleansed" speech. Representative methods include feature mapping (Xu, 2015) and the ideal ratio mask (Wang, 2016).
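The ideal ratio mask mentioned above is a training target. It is computed from parallel clean speech and noise, and it tells the network, for each time-frequency bin, what fraction of the noisy magnitude to keep.

This is a minimal sketch under the following assumptions:

- Magnitude spectrograms are stored as nested lists.
- The mask uses the common exponent beta = 0.5.
- Speech and noise are uncorrelated.

The data is made up. In a real system a network would predict the mask from noisy features, and this sketch omits that step.

```python
import math


def ideal_ratio_mask(clean_mag, noise_mag, beta=0.5, eps=1e-12):
    """IRM per time-frequency bin: (S^2 / (S^2 + N^2)) ** beta, values in [0, 1]."""
    return [[(s * s / (s * s + n * n + eps)) ** beta for s, n in zip(s_row, n_row)]
            for s_row, n_row in zip(clean_mag, noise_mag)]


def apply_mask(noisy_mag, mask):
    """Enhanced magnitude = noisy magnitude scaled bin by bin by the mask."""
    return [[y * m for y, m in zip(y_row, m_row)] for y_row, m_row in zip(noisy_mag, mask)]


# Hypothetical 2-frame x 3-bin magnitude spectrograms.
clean = [[1.0, 0.0, 2.0], [0.5, 0.5, 0.0]]
noise = [[0.0, 1.0, 2.0], [0.5, 0.0, 1.0]]
# Assume speech and noise are uncorrelated, so noisy power ~= S^2 + N^2.
noisy = [[math.sqrt(s * s + n * n) for s, n in zip(sr, nr)] for sr, nr in zip(clean, noise)]
mask = ideal_ratio_mask(clean, noise)
enhanced = apply_mask(noisy, mask)
print(mask)
print(enhanced)
```

With beta = 0.5, applying the ideal mask to the noisy magnitude recovers the clean magnitude in this idealized setting. That is why the mask is a useful target for a network to approximate.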

1.2.2. Back-end Speech Recognition Module

The diagram below outlines the framework of a back-end speech recognition system. One of its main components is the acoustic model (AM). Since the end of 2011, DNNs have been used for large-vocabulary continuous speech recognition, significantly reducing recognition error rates. DNN-based acoustic modelling has since become one of the hottest areas of research.

So, what is a DNN? A standard DNN (deep neural network) is not mysterious at all: its structure is not fundamentally different from that of a traditional ANN (artificial neural network). The main difference is depth. A traditional ANN usually has only one hidden layer, whereas a DNN has at least three. The additional layers stack multiple nonlinear transformations, which significantly improves modelling capability.
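As a rough illustration of "an ANN with more hidden layers", here is a minimal feed-forward acoustic model in standard-library Python. It assumes the common hybrid setup, in which a window of spliced feature frames is mapped to posterior probabilities over acoustic states. The layer sizes, sigmoid activations, and random weights are hypothetical, and training is not shown.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Random weights scaled by 1/sqrt(n_in), zero biases."""
    scale = 1.0 / math.sqrt(n_in)
    return ([[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)],
            [0.0] * n_out)


def affine(x, layer):
    w, b = layer
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def splice(frames, t, context):
    """Concatenate frames t-context..t+context, repeating edge frames."""
    out = []
    for k in range(t - context, t + context + 1):
        out.extend(frames[min(max(k, 0), len(frames) - 1)])
    return out


class TinyDNN:
    """Feed-forward acoustic model: sigmoid hidden layers, softmax over states."""

    def __init__(self, dims, seed=0):
        rng = random.Random(seed)
        self.layers = [init_layer(a, b, rng) for a, b in zip(dims[:-1], dims[1:])]

    def forward(self, x):
        for layer in self.layers[:-1]:
            x = [1.0 / (1.0 + math.exp(-v)) for v in affine(x, layer)]
        return softmax(affine(x, self.layers[-1]))


# Hypothetical sizes: 8 frames of 4-dim features, +/-2 frames of context
# (20-dim input), three hidden layers of 16 units, 10 output states.
rng = random.Random(1)
feats = [[rng.gauss(0.0, 1.0) for _ in range(4)] for _ in range(8)]
model = TinyDNN([20, 16, 16, 16, 10])
posteriors = [model.forward(splice(feats, t, context=2)) for t in range(len(feats))]
```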

CNNs have been used for speech recognition since 2012-2013. Back then, the networks had few layers, large convolution kernels, and alternating convolution and pooling layers. The CNN layers mainly served to process features further before classification by a DNN. As CNNs evolved in image processing, things changed. It was found that deeper and better CNN models can be trained by stacking several convolution layers before each pooling layer and by using smaller convolution kernels. This approach has since been applied to speech recognition and refined to suit its characteristics.
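Simple arithmetic shows the benefit of smaller kernels. The sketch assumes stride-1, undilated convolutions with the same number of input and output channels (64 here, a hypothetical value), and it ignores biases. Under these assumptions:

- Two stacked 3x3 layers see the same 5x5 region as one 5x5 layer.
- Three 3x3 layers see the same region as one 7x7 layer.

In both cases the stack has fewer weights and more nonlinearities in between.

```python
def stacked_conv_stats(kernel, n_layers, channels):
    """Receptive field and weight count of n stacked k x k conv layers.

    Assumes stride 1, no dilation, `channels` in and out of every layer,
    and no biases.
    """
    receptive_field = n_layers * (kernel - 1) + 1
    weights = n_layers * kernel * kernel * channels * channels
    return receptive_field, weights


for kernel, n_layers in [(5, 1), (3, 2), (7, 1), (3, 3)]:
    rf, w = stacked_conv_stats(kernel, n_layers, channels=64)
    print(f"{n_layers} x {kernel}x{kernel}: receptive field {rf}x{rf}, {w} weights")
```

This prints 102400 versus 73728 weights for the 5x5 receptive field, and 200704 versus 110592 for the 7x7 one.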

The LSTM (long short-term memory) model is a special type of recurrent neural network (RNN). Speech recognition is essentially a time-sequence modelling problem, so RNNs are well suited to it. However, a simple RNN suffers from exploding and vanishing gradients, which makes training very difficult. An LSTM uses input, output, and forget gates together with a memory cell to control how information flows through the network and how long it is retained. This mitigates the exploding and vanishing gradient problems to some extent. The drawback is that an LSTM requires much more computation than a DNN, and its recurrent connections make parallel processing very difficult.
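The gating described above fits in a few lines. This is a minimal single-layer LSTM in standard-library Python, assuming the common formulation without peephole connections or projection layers. The sizes and the small random initialization are hypothetical.

Two parts of the code correspond to the points above:

- The additive cell update c_t = f_t * c_{t-1} + i_t * g_t is what lets gradients flow over many steps.
- In the loop in `run`, each step depends on the previous one, which is why LSTMs are hard to parallelize over time.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(w, x):
    return [sum(a * b for a, b in zip(row, x)) for row in w]


class LSTMCell:
    """Standard LSTM cell without peepholes or projection."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        n_z = n_in + n_hidden
        # One weight matrix per gate, applied to the concatenation [x_t; h_{t-1}].
        self.w = {gate: [[rng.uniform(-0.1, 0.1) for _ in range(n_z)]
                         for _ in range(n_hidden)]
                  for gate in ("input", "forget", "output", "cell")}
        # A forget-gate bias of 1 is a common choice that keeps memory early in training.
        self.b = {gate: [1.0 if gate == "forget" else 0.0] * n_hidden for gate in self.w}
        self.n_hidden = n_hidden

    def step(self, x, h, c):
        z = x + h  # list concatenation = [x_t; h_{t-1}]
        pre = {gate: [a + b for a, b in zip(matvec(self.w[gate], z), self.b[gate])]
               for gate in self.w}
        i = [sigmoid(v) for v in pre["input"]]
        f = [sigmoid(v) for v in pre["forget"]]
        o = [sigmoid(v) for v in pre["output"]]
        g = [math.tanh(v) for v in pre["cell"]]  # candidate memory content
        c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
        return h_new, c_new

    def run(self, xs):
        h, c, outputs = [0.0] * self.n_hidden, [0.0] * self.n_hidden, []
        for x in xs:  # strictly sequential: step t needs the state from step t-1
            h, c = self.step(x, h, c)
            outputs.append(h)
        return outputs


outputs = LSTMCell(n_in=3, n_hidden=5).run([[0.1, 0.2, 0.3]] * 4)
```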

Compared with the LSTM, the bidirectional LSTM (BLSTM) has greater modelling capability because it also takes reverse-time information into account. That is, it models the influence of the "future" on the "present", which is important in speech recognition. However, this increases computational complexity and requires training on complete sentences. Complete sentences increase GPU memory consumption, which reduces the degree of parallelism and slows down training. Waiting for complete sentences also causes latency problems in real-time applications. We use a latency-controlled BLSTM model to overcome these challenges, and we have released the industry's first BLSTM-DNN hybrid speech recognition system.
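The idea behind latency control can be sketched as a chunking scheme. The sketch follows the general latency-controlled BLSTM approach rather than any specific production configuration:

1. The utterance is cut into chunks of N_c frames.
2. The forward LSTM carries its state across chunks.
3. The backward LSTM for each chunk starts from N_r extra future frames (right context), which are processed but not emitted.

The chunk and context sizes, the 10 ms frame shift, and the function names are hypothetical.

```python
def lc_blstm_chunks(num_frames, chunk, right_context):
    """Plan latency-controlled BLSTM chunks for an utterance.

    Returns (start, end, context_end) triples. Frames [start, end) form the
    chunk whose outputs are emitted. Frames [end, context_end) are look-ahead
    frames used only to initialize the backward LSTM, then discarded. The
    forward LSTM state is simply carried over from the previous chunk.
    """
    plan = []
    for start in range(0, num_frames, chunk):
        end = min(start + chunk, num_frames)
        plan.append((start, end, min(end + right_context, num_frames)))
    return plan


def worst_case_latency_ms(chunk, right_context, frame_shift_ms=10):
    """Time to buffer a full chunk plus its right context, ignoring compute."""
    return (chunk + right_context) * frame_shift_ms


print(lc_blstm_chunks(num_frames=100, chunk=40, right_context=20))
print(worst_case_latency_ms(chunk=40, right_context=20), "ms")
```

A full BLSTM must wait for the whole sentence. Here, latency is bounded by the chunk and context sizes, and chunks can be batched across utterances, which is one way this design can speed up training.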

2. Introduction to Papers on Far-field Speech Recognition Systems in Interspeech 2017

We selected the following two Interspeech papers to present recent developments in far-field speech recognition from two angles, acoustic model improvement and far-field data modelling:

2.1. Residual LSTM: Design of a Deep Recurrent Architecture for Distant Speech Recognition

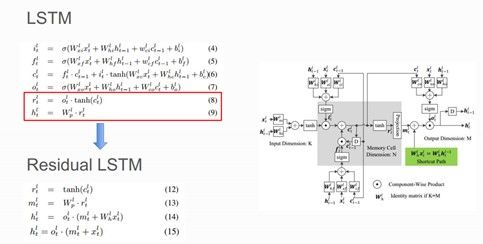

The paper presents a modified LSTM model: the residual LSTM network. The research addresses a common problem in training deep neural networks, known as degradation: the error rates on the training and development sets increase as the network becomes deeper. This problem is not caused by over-fitting; it arises in the network's learning itself. Researchers have attempted to mitigate it with highway networks and residual networks. In this paper, the authors modified the traditional LSTM structure into a residual LSTM structure. The residual LSTM adds a shortcut path that directly connects the output of the previous layer to the current layer. The formulas and modified structure are shown in the diagram below.
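The block below is a rough sketch, in standard-library Python, of where the shortcut goes in one residual LSTM layer. It relies on our reading of the paper's formulation, so check the equations in the paper before relying on it:

- The layer input x_t skips the cell and is added to the projected cell output before the output gate: h_t = o_t * (W_p tanh(c_t) + x_t).
- The shortcut is an identity mapping because the input and output sizes match.

Sizes and initialization are hypothetical.

```python
import math
import random


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(w, x):
    return [sum(a * b for a, b in zip(row, x)) for row in w]


class ResidualLSTMLayer:
    """One residual LSTM layer with an identity shortcut (input size == output size).

    Assumed formulation: c_t = f_t * c_{t-1} + i_t * g_t,
                         h_t = o_t * (W_p tanh(c_t) + x_t).
    """

    def __init__(self, n_io, n_cell, seed=0):
        rng = random.Random(seed)
        n_z = 2 * n_io  # gates read [x_t; h_{t-1}], both of size n_io

        def mat(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.cell_w = {gate: mat(n_cell, n_z) for gate in ("input", "forget", "cell")}
        self.out_gate_w = mat(n_io, n_z)  # output gate acts on the layer output
        self.proj = mat(n_io, n_cell)     # W_p: projects the cell back to n_io
        self.n_io, self.n_cell = n_io, n_cell

    def run(self, xs):
        h, c, outputs = [0.0] * self.n_io, [0.0] * self.n_cell, []
        for x in xs:
            z = x + h  # [x_t; h_{t-1}]
            i = [sigmoid(v) for v in matvec(self.cell_w["input"], z)]
            f = [sigmoid(v) for v in matvec(self.cell_w["forget"], z)]
            g = [math.tanh(v) for v in matvec(self.cell_w["cell"], z)]
            o = [sigmoid(v) for v in matvec(self.out_gate_w, z)]
            c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
            m = matvec(self.proj, [math.tanh(v) for v in c])
            # Spatial shortcut: the layer input bypasses the cell's nonlinearities.
            h = [oj * (mj + xj) for oj, mj, xj in zip(o, m, x)]
            outputs.append(h)
        return outputs


def residual_lstm_stack(xs, n_layers, n_cell=8):
    """Stack n_layers residual layers; each layer's output feeds the next."""
    for layer_idx in range(n_layers):
        xs = ResidualLSTMLayer(len(xs[0]), n_cell, seed=layer_idx).run(xs)
    return xs


deep_out = residual_lstm_stack([[0.5, -0.2, 0.1, 0.3]] * 5, n_layers=10)
```

With the projection weights set to zero, the layer reduces to a gated copy of its input. A deep stack can therefore start close to an identity mapping rather than a long chain of nonlinear transformations, which is the intuition behind residual networks being easier to train.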

Compared with the traditional LSTM and highway LSTM structures, the modified network has the following three advantages:

- Fewer network parameters: about 10% fewer with the network configuration used in the paper;

- Easier training, thanks to two properties of the residual structure: in the forward pass, the data does not have to pass through excessive nonlinear transformations, and in error back-propagation, the direct path restrains vanishing gradients;

- Significantly better final recognition accuracy; the degradation problem does not appear even when the network is deepened to 10 layers.

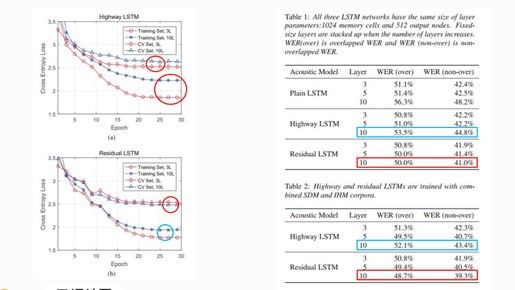

The experiments were conducted on AMI, an open far-field dataset recorded in simulated meeting scenarios. It contains far-field recordings and the corresponding close-talking recordings. Tests were conducted on two evaluation sets, one with and one without overlapping speech interference, and the results support the advantages listed above.

2.2. Generation of Large-scale Simulated Utterances in Virtual Rooms to Train Deep-neural Networks for Far-field Speech Recognition in Google Home

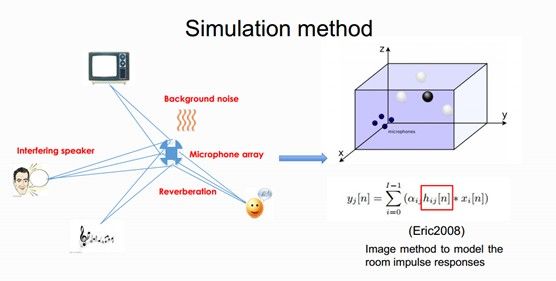

Google's paper focuses on how to use near-field speech data to simulate far-field speech data. In a real environment, background noise, multipath reflection, and reverberation degrade the quality of the captured signal. As a result, the accuracy of far-field speech recognition is generally much lower than that of near-field speech recognition, and a large amount of far-field speech is needed for model training to improve it. Far-field speech is usually collected with a microphone array. However, because of the equipment and environments involved, recording real far-field data costs more than recording near-field data, and collecting a large amount of it is difficult. The researchers therefore simulated far-field data from near-field data for model training. The better the simulation method, the closer the simulated data is to real far-field data, and the more it helps training. The formula and simulated scenario used for data simulation are shown in the diagram below.
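The simulation can be written in a generic form. This form is our assumption about its general structure, not a transcription of the paper's formula. A simulated far-field signal at microphone m is the clean near-field utterance s convolved with a room impulse response from the speaker to that microphone, plus J noise signals v_j, each convolved with its own impulse response:

$$
y_m[n] = (h_{s,m} * s)[n] + \sum_{j=1}^{J} (h_{j,m} * v_j)[n], \qquad m = 1, \dots, M
$$

Here * denotes convolution and M is the number of microphones. The noise term is scaled so that the power ratio of the two terms equals the target SNR:

$$
\mathrm{SNR} = 10 \log_{10} \frac{\sum_n \big((h_{s,m} * s)[n]\big)^2}{\sum_n \big(\sum_{j=1}^{J} (h_{j,m} * v_j)[n]\big)^2}
$$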

The room impulse responses were generated with the image method. The number of noise sources was randomly selected between 0 and 3. The signal-to-noise ratio (SNR) of the simulated far-field data ranged from 0 to 30 dB, and the distance between the target speaker and the microphone array ranged from 1 to 10 m.
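The simulation recipe above can be sketched in standard-library Python for a single microphone. The image method places mirrored copies of the source behind each wall. Each image contributes a delayed impulse, attenuated by spherical spreading and by `beta` once per reflection. Noise sources are then scaled to hit the sampled SNR.

All of the following are hypothetical assumptions:

- a shoebox room;
- a frequency-independent reflection coefficient `beta`, shared by all walls;
- images truncated at `max_order` reflections per axis;
- arrival times rounded to the nearest sample;
- white-noise stand-ins for speech and noise;
- the room size and positions in the usage lines.

```python
import math
import random

C = 343.0  # speed of sound in m/s (assumed)


def image_method_rir(room, src, mic, fs=16000, beta=0.7, max_order=3, length=4000):
    """Toy shoebox RIR from the image-source method (single microphone)."""
    rir = [0.0] * length
    orders = range(-max_order, max_order + 1)
    for nx in orders:
        for ny in orders:
            for nz in orders:
                # Image index n on one axis: |n| reflections, image at
                # 2*ceil(n/2)*L + (-1)**n * s (n = 0 is the real source).
                img = [2 * math.ceil(n / 2) * size + (-1) ** n * s
                       for n, size, s in zip((nx, ny, nz), room, src)]
                dist = math.dist(img, mic)
                k = round(dist / C * fs)
                if k < length:
                    n_refl = abs(nx) + abs(ny) + abs(nz)
                    rir[k] += beta ** n_refl / (4 * math.pi * dist)
    return rir


def convolve_sparse(x, h):
    """Full linear convolution, looping only over the nonzero RIR taps."""
    y = [0.0] * (len(x) + len(h) - 1)
    taps = [(j, hj) for j, hj in enumerate(h) if hj != 0.0]
    for i, xi in enumerate(x):
        for j, hj in taps:
            y[i + j] += xi * hj
    return y


def simulate_far_field(clean, noises, room, mic, src, noise_srcs, snr_db, fs=16000):
    """Return (mixture, reverberant speech, scaled reverberant noise)."""
    speech = convolve_sparse(clean, image_method_rir(room, src, mic, fs))
    noise = [0.0] * len(speech)
    for v, pos in zip(noises, noise_srcs):
        reverberant = convolve_sparse(v, image_method_rir(room, pos, mic, fs))
        for i, val in enumerate(reverberant[:len(speech)]):
            noise[i] += val
    p_noise = sum(v * v for v in noise)
    if p_noise > 0.0:
        p_speech = sum(v * v for v in speech)
        gain = math.sqrt(p_speech / (p_noise * 10 ** (snr_db / 10)))
        noise = [gain * v for v in noise]
    return [s + v for s, v in zip(speech, noise)], speech, noise


# Hypothetical configuration drawn from the ranges above.
rng = random.Random(0)
room, mic = (20.0, 15.0, 4.0), (10.0, 7.5, 1.2)


def random_position(min_d=0.0, max_d=float("inf")):
    while True:
        p = tuple(rng.uniform(0.2, size - 0.2) for size in room)
        if min_d <= math.dist(p, mic) <= max_d:
            return p


speaker = random_position(1.0, 10.0)                                      # 1-10 m away
noise_positions = [random_position() for _ in range(rng.randint(0, 3))]  # 0-3 sources
snr_db = rng.uniform(0.0, 30.0)                                           # 0-30 dB
clean = [rng.gauss(0.0, 1.0) for _ in range(800)]  # stand-in for a clean utterance
noises = [[rng.gauss(0.0, 1.0) for _ in range(800)] for _ in noise_positions]
mixture, speech, noise = simulate_far_field(clean, noises, room, mic, speaker,
                                            noise_positions, snr_db)
```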

The fCLP-LDNN model was used for acoustic modelling. The model structure and final results are shown in the diagram below. In the presence of noise and interference, the acoustic model trained on simulated far-field data was much more robust than the model trained on near-field "clean" data, and the word error rate decreased by 40%. The data simulation method described in the paper was used to train the models for Google Home products.
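Word error rate (WER) is the word-level edit distance between the recognizer's output and the reference transcript, divided by the number of reference words. The edit distance counts substitutions, deletions, and insertions. Reductions such as the 40% above are commonly reported relative to the baseline WER.

The minimal sketch below computes WER and relative reduction. The numbers in the usage lines are made up, not taken from the paper.

```python
def word_errors(ref, hyp):
    """Minimum substitutions + deletions + insertions to turn ref into hyp."""
    r, h = ref.split(), hyp.split()
    prev = list(range(len(h) + 1))  # distances from an empty reference prefix
    for i, ref_word in enumerate(r, 1):
        cur = [i] + [0] * len(h)
        for j, hyp_word in enumerate(h, 1):
            cur[j] = min(prev[j] + 1,                           # deletion
                         cur[j - 1] + 1,                        # insertion
                         prev[j - 1] + (ref_word != hyp_word))  # substitution/match
        prev = cur
    return prev[-1], len(r)


def corpus_wer(refs, hyps):
    """Total word errors divided by total reference words."""
    errors = words = 0
    for ref, hyp in zip(refs, hyps):
        e, n = word_errors(ref, hyp)
        errors += e
        words += n
    return errors / words


def relative_reduction(baseline_wer, new_wer):
    return (baseline_wer - new_wer) / baseline_wer


print(word_errors("turn on the light", "turn the lights"))  # (2, 4)
print(relative_reduction(0.20, 0.12))  # hypothetical WERs: about 0.4
```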

3. Conclusion and Technical Outlook

As smart speakers and smart home appliances gain popularity, far-field speech recognition is becoming increasingly important. In the near future, we believe research on far-field speech recognition will focus on the following aspects: 1. better front-end processing methods, e.g., front-end processing designed to work with deep neural networks; 2. better back-end modelling methods; 3. joint training of front-end and back-end models; 4. far-field data simulation methods, which are essential for the initial model iterations of products; 5. faster model adaptation to noisy environments, scenarios, SNR, etc.

We expect far-field speech recognition technology to become more sophisticated and easier to use through the joint efforts of the academic and industrial communities.