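Lab environment

VIP: 172.16.80.201

VS1: 172.16.80.101

VS2: 172.16.80.103

RS1: 172.16.80.100

RS2: 172.16.80.102

Operating system: CentOS.

VS1 and VS2 use keepalived to form a highly available IPVS (LVS) cluster. The back-end real servers RS1 and RS2 run Nginx. All hosts are on the same 172.16.0.0/16 network, which LVS DR mode requires because the director forwards packets to the real servers at layer 2.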

VS1 and VS2 configuration

I. High availability with keepalived

1. Install keepalived and ipvsadm

```
[root@VS1 ~]#yum install keepalived ipvsadm -y
[root@VS2 ~]#yum install keepalived ipvsadm -y
```

2. Configure the VRRP instance: VS1 is the MASTER and VS2 is the BACKUP.

VS1 configuration:

```
[root@VS1 ~]#vim /etc/keepalived/keepalived.conf
```

```
! Configuration File for keepalived
global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keadmin@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id CentOS7A.luo.com
    vrrp_mcast_group4 224.0.0.22
}
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass haha
    }
    virtual_ipaddress {
        172.16.80.201/16
    }
}
```

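VS2 configuration (the comments explain each directive; apart from router_id, state and priority, the file is identical to VS1's):

```
[root@VS2 ~]#vim /etc/keepalived/keepalived.conf
```

```
! Configuration File for keepalived
# Global settings
global_defs {
    notification_email {
        # Recipient of failure notification mail
        root@localhost
    }
    # Sender address of failure notification mail
    notification_email_from keadmin@localhost
    # SMTP server used to send the notification mail
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    # Identifier of this node
    router_id CentOS7B.luo.com
    # Multicast group for VRRP advertisements (the default is 224.0.0.18)
    vrrp_mcast_group4 224.0.0.22
}
# Instance name, kept the same as on VS1; the peers are matched by virtual_router_id
vrrp_instance VI_1 {
    # Initial state: BACKUP
    state BACKUP
    # Interface used for VRRP traffic
    interface ens33
    # Virtual router ID; must be the same on master and backup
    virtual_router_id 51
    # Priority; lower than the master's 150
    priority 100
    # Advertisement interval in seconds
    advert_int 1
    authentication {
        # Authentication type
        auth_type PASS
        # Shared password; must be the same on both nodes
        auth_pass haha
    }
    virtual_ipaddress {
        # Virtual IP
        172.16.80.201/16
    }
}
```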
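Which director ends up holding the VIP is decided by the VRRP election. The sketch below is a simplified model of that rule, assuming standard VRRP behaviour: among the nodes still sending advertisements, the highest priority wins, and a tie goes to the higher primary IP address. The functions ip_to_int and elect_master are hypothetical and only illustrate the rule; this is not how keepalived is implemented internally.

```bash
#!/bin/bash
# Simplified model of VRRP master election (illustration only):
# highest priority wins; on a tie, the higher primary IPv4 address wins.

# Convert a dotted-quad IPv4 address to an integer for comparison.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# Arguments: one "name:priority:ip" entry per node that is still advertising.
# Prints the name of the node that becomes MASTER.
elect_master() {
    local best_name="" best_prio=-1 best_ip=0
    local entry name prio ip ipn
    for entry in "$@"; do
        IFS=: read -r name prio ip <<< "$entry"
        ipn=$(ip_to_int "$ip")
        if (( prio > best_prio || (prio == best_prio && ipn > best_ip) )); then
            best_name=$name best_prio=$prio best_ip=$ipn
        fi
    done
    echo "$best_name"
}

elect_master "VS1:150:172.16.80.101" "VS2:100:172.16.80.103"   # prints VS1
elect_master "VS2:100:172.16.80.103"                            # prints VS2 (VS1 down)
```

With both nodes up, VS1 (priority 150) wins; once VS1 stops advertising, VS2 (priority 100) is the only candidate left and takes over the VIP.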

After both files are in place, start keepalived on VS1 and VS2 (for example with systemctl start keepalived on CentOS 7):

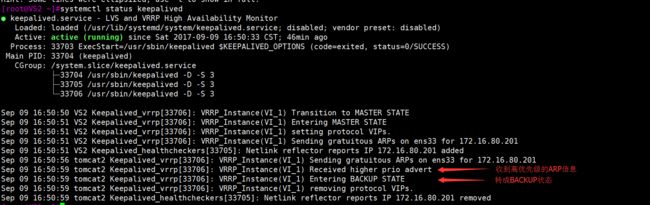

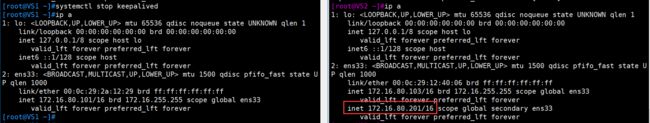

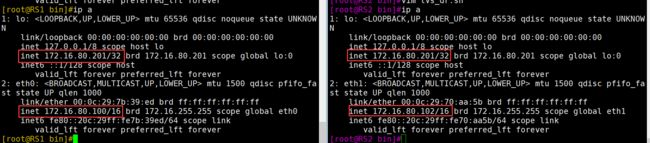

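Next, stop the keepalived service on VS1 to simulate a failure of VS1; the VIP then floats to VS2. Because VS1 has the higher priority (150) and keepalived preempts by default, VS1 takes the VIP back once its keepalived service is started again.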
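To confirm from the shell which director currently carries the VIP (for example before and after stopping keepalived on VS1), a small check along these lines can be run on each director. It is a sketch that assumes the iproute2 ip command and the ens33 interface from the configuration; has_vip and main are hypothetical names.

```bash
#!/bin/bash
# Hypothetical helper: report whether this director currently holds the VIP.
# Assumes iproute2's "ip" command; the interface defaults to ens33 as in keepalived.conf.
VIP=${VIP:-172.16.80.201}

# Reads "ip -4 addr show" output on stdin; succeeds if address $1 is listed.
# Matching " <addr>/" keeps e.g. 172.16.80.2010 from counting as a hit.
has_vip() {
    grep -qF " $1/"
}

main() {
    local iface=${1:-ens33}
    if ip -4 addr show dev "$iface" 2>/dev/null | has_vip "$VIP"; then
        echo "$HOSTNAME: VIP $VIP is on $iface"
    else
        echo "$HOSTNAME: VIP $VIP is not on $iface"
    fi
}

# Run main only when the file is executed, not when it is sourced.
if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
    main "$@"
fi
```

Run on VS2 after keepalived on VS1 has been stopped, it should report that the VIP is on ens33.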

II. LVS scheduling with keepalived

With IP failover working, keepalived can also manage the LVS scheduling: when a client accesses the virtual IP 172.16.80.201, its requests are dispatched to the back-end servers RS1 and RS2.

Add a virtual_server block to /etc/keepalived/keepalived.conf:

```
[root@VS1 ~]#vim /etc/keepalived/keepalived.conf
```

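```
# Virtual service: VIP and port
virtual_server 172.16.80.201 80 {
    # Health-check interval in seconds
    delay_loop 3
    # Scheduling algorithm: round robin
    lb_algo rr
    # Forwarding method: direct routing (DR)
    lb_kind DR
    protocol TCP
    # RS1
    real_server 172.16.80.100 80 {
        # Weight
        weight 1
        # Health check: HTTP GET against the real server
        HTTP_GET {
            url {
                path /
                # The real server counts as healthy if it returns HTTP 200
                status_code 200
            }
            # Connection timeout in seconds
            connect_timeout 1
            # Number of retries (newer keepalived releases name this "retry")
            nb_get_retry 3
            # Seconds to wait between retries
            delay_before_retry 1
        }
    }
    # RS2 (same checks as RS1)
    real_server 172.16.80.102 80 {
        weight 1
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 1
            nb_get_retry 3
            delay_before_retry 1
        }
    }
}
```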

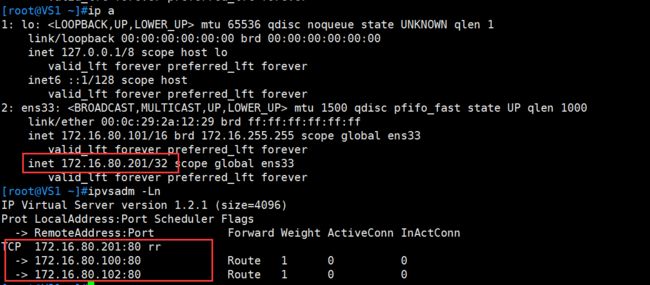

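Add the same virtual_server block to VS2. After saving the configuration, restart keepalived on both VS1 and VS2.

The scheduling configuration is now complete. ipvsadm -Ln on the active director shows the IPVS rules that keepalived created; real servers that fail the HTTP_GET check (for example while Nginx is not yet running on them) are removed from the table.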
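The HTTP_GET block makes keepalived request / on each real server and expect status 200. A rough manual counterpart, useful for checking by hand what keepalived will see, is sketched below. It assumes curl is installed on the director; probe_status and check_real_server are hypothetical helpers, and the retry loop only approximates keepalived's handling of connect_timeout, nb_get_retry and delay_before_retry.

```bash
#!/bin/bash
# Rough manual counterpart of the HTTP_GET check: GET / on a real server and
# treat HTTP 200 as healthy. Assumes curl is installed; the values mirror
# connect_timeout 1, nb_get_retry 3 and delay_before_retry 1 only approximately.
TIMEOUT=1
RETRIES=3
RETRY_DELAY=1

# Print the HTTP status code of http://<ip>:<port>/ (curl prints 000 on failure).
probe_status() {
    curl -s -o /dev/null -w '%{http_code}' --max-time "$TIMEOUT" "http://$1:$2/"
}

# Succeed if the server answers 200 within RETRIES attempts.
check_real_server() {
    local ip=$1 port=${2:-80} attempt code
    for (( attempt = 1; attempt <= RETRIES; attempt++ )); do
        code=$(probe_status "$ip" "$port")
        [[ "$code" == "200" ]] && return 0
        # Wait before the next attempt, but not after the last one
        (( attempt < RETRIES )) && sleep "$RETRY_DELAY"
    done
    return 1
}

# Usage (hypothetical file name): ./check_rs.sh 172.16.80.100 172.16.80.102
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    for rs in "$@"; do
        if check_real_server "$rs"; then echo "$rs UP"; else echo "$rs DOWN"; fi
    done
fi
```

A server reported DOWN here is one that keepalived would also be expected to drop from the IPVS table.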

RS1 and RS2 configuration

1. Install Nginx

```
[root@RS1 ~]#yum install nginx -y
[root@RS2 ~]#yum install nginx -y
```

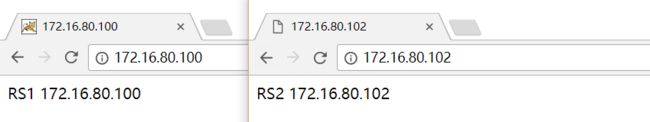

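2. Create a test page and start Nginx on RS1 and RS2

```
[root@RS1 ~]#vim /usr/share/nginx/html/index.html
```

Content of the page on RS1:

```
RS1 172.16.80.100
```

```
[root@RS2 ~]#vim /usr/share/nginx/html/index.html
```

Content of the page on RS2:

```
RS2 172.16.80.102
```

Then start Nginx on both servers (for example with systemctl start nginx). A client that accesses RS1 and RS2 directly should receive these two pages respectively.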

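3. Configure the real servers

In LVS DR mode the director forwards each packet to a real server without changing its destination IP address, so every real server must accept traffic addressed to the VIP while never answering ARP requests for it (otherwise it would compete with the director for the VIP). The script lvs_dr.sh binds the VIP to lo:0 as a /32 address and sets the kernel ARP parameters accordingly. Run it on both RS1 and RS2.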

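```
[root@RS1 bin]#vim lvs_dr.sh
```

```bash
#!/bin/bash
# Configure a real server for LVS DR mode: bind the VIP to lo:0 and
# stop the host from answering ARP requests for it.
vip=172.16.80.201
mask=255.255.255.255
iface="lo:0"

case $1 in
start)
    # Bind the VIP to the loopback alias as a /32 host address
    ifconfig "$iface" "$vip" netmask "$mask" broadcast "$vip" up
    route add -host "$vip" dev "$iface"
    # arp_ignore=1: answer ARP only for addresses configured on the receiving interface
    echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    # arp_announce=2: always use the best local address on the outgoing interface as ARP source
    echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
    echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
    ;;
stop)
    # Restore the default ARP behaviour
    echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce
    echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce
    # Remove the host route (if still present) and the VIP
    route del -host "$vip" dev "$iface" 2>/dev/null
    ifconfig "$iface" down
    ;;
*)
    echo "Usage: $(basename "$0") start|stop"
    exit 1
    ;;
esac
```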

```
[root@RS1 bin]#chmod u+x lvs_dr.sh
[root@RS1 bin]#bash -x lvs_dr.sh start
+ vip=172.16.80.201
+ mask=255.255.255.255
+ iface=lo:0
+ case $1 in
+ ifconfig lo:0 172.16.80.201 netmask 255.255.255.255 broadcast 172.16.80.201 up
+ route add -host 172.16.80.201 dev lo:0
+ echo 1
+ echo 1
+ echo 2
+ echo 2
```
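After running lvs_dr.sh start, the ARP parameters and the loopback VIP can be verified by reading them back. The sketch below assumes the values the script writes (arp_ignore=1 and arp_announce=2 for all and lo); check_arp_params is a hypothetical helper, and its optional directory argument exists only so it can be pointed at a copy of /proc/sys/net/ipv4/conf instead of the live tree.

```bash
#!/bin/bash
# Hypothetical post-check for a real server after "lvs_dr.sh start".
# Expects arp_ignore=1 and arp_announce=2 for "all" and "lo", as the script sets.

# $1: directory holding the per-interface settings (default: the live /proc tree).
# Prints each mismatch and returns non-zero if any setting differs.
check_arp_params() {
    local conf_dir=${1:-/proc/sys/net/ipv4/conf} dev status=0
    for dev in all lo; do
        if [[ "$(cat "$conf_dir/$dev/arp_ignore" 2>/dev/null)" != 1 ]]; then
            echo "$dev/arp_ignore is not 1"; status=1
        fi
        if [[ "$(cat "$conf_dir/$dev/arp_announce" 2>/dev/null)" != 2 ]]; then
            echo "$dev/arp_announce is not 2"; status=1
        fi
    done
    return "$status"
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
    check_arp_params && echo "ARP parameters OK"
    # lvs_dr.sh binds the VIP to lo:0 with netmask 255.255.255.255, i.e. /32
    if ip -4 addr show dev lo 2>/dev/null | grep -qF " 172.16.80.201/32"; then
        echo "VIP 172.16.80.201 is bound to lo"
    else
        echo "VIP 172.16.80.201 is not bound to lo"
    fi
fi
```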

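Verification

When a client accesses http://172.16.80.201, the active director schedules the requests to the back-end real servers in round-robin order, so the responses alternate between the RS1 and RS2 pages.

If one director goes down, the other takes over 172.16.80.201 within a few seconds (with advert_int 1, the backup declares the master dead after roughly three missed advertisements), which provides high availability. Likewise, if Nginx on a real server stops answering, the HTTP_GET check removes that server from the pool until it recovers.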
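Round-robin scheduling can be observed by sending several requests from a separate client host (on RS1 and RS2 the VIP is bound to lo, so a request made there is answered locally). The sketch below assumes curl on the client and the index.html pages created earlier; tally_responses is a hypothetical helper that counts which server answered.

```bash
#!/bin/bash
# Hypothetical client-side check of round-robin scheduling: request the VIP
# N times and count which real server answered. Assumes curl on the client
# and the index.html pages created above ("RS1 ..." and "RS2 ...").
VIP=172.16.80.201

# Reads one response body per line on stdin; prints "<first word> <count>", sorted.
tally_responses() {
    awk '{ count[$1]++ } END { for (rs in count) print rs, count[rs] }' | sort
}

# Usage (hypothetical file name): ./rr_check.sh 10
if [[ "${BASH_SOURCE[0]}" == "$0" && $# -gt 0 ]]; then
    for (( i = 0; i < $1; i++ )); do
        curl -s --max-time 2 "http://$VIP/"
    done | tally_responses
fi
```

With both real servers healthy, ./rr_check.sh 10 should show the requests split roughly evenly between RS1 and RS2; after stopping Nginx on one of them, all responses should come from the other.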