Testing HPA Based on Metrics Server in a K8S Cluster

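Starting with version 1.8, K8S exposes resource metrics such as CPU and memory through the Metrics API. Users can read these metrics directly (for example, by running kubectl top), and the HPA uses them to scale workloads dynamically. This article covers HPA based on metrics server in a K8S cluster. Before starting, we need to understand the Metrics API and Metrics Server.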

Metrics API:

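1. The Metrics API returns the current resource usage of a given node or pod. The API itself stores nothing, so it cannot be used to look up historical usage.

2. The Metrics API is served under the path /apis/metrics.k8s.io/

3. The Metrics API is only available once metrics server has been deployed successfully in the K8S cluster.

4. For more on metrics, see: https://github.com/kubernetes/metrics

Metrics server:

1. Metrics server is the aggregator of resource usage data for the K8S cluster.

2. Starting with version 1.8, Metrics server can be deployed by default as a deployment through the kube-up.sh script; it can also be deployed from yaml files.

3. Metrics server collects metrics from every node in the cluster.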
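As a rough illustration of how the Metrics API is addressed, the sketch below builds request paths under /apis/metrics.k8s.io/. The v1beta1 version segment matches the API version queried later in this article; the helper names node_metrics_path and pod_metrics_path are made up for this example and are not part of any Kubernetes client.

```python
# Minimal sketch: build Metrics API request paths.
# BASE uses the v1beta1 version queried later in this article.
# The helper names below are hypothetical, chosen only for illustration.
BASE = "/apis/metrics.k8s.io/v1beta1"


def node_metrics_path(node: str = "") -> str:
    """Path for one node's metrics, or for all nodes when node is empty."""
    return f"{BASE}/nodes/{node}" if node else f"{BASE}/nodes"


def pod_metrics_path(namespace: str = "", pod: str = "") -> str:
    """Path for pod metrics: all namespaces, one namespace, or one pod."""
    if pod and not namespace:
        raise ValueError("a pod name requires a namespace")
    if not namespace:
        return f"{BASE}/pods"
    path = f"{BASE}/namespaces/{namespace}/pods"
    return f"{path}/{pod}" if pod else path


if __name__ == "__main__":
    print(node_metrics_path())
    print(pod_metrics_path("kube-system"))
```

Once metrics server is running, a path built this way can be passed to kubectl get --raw.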

一、环境准备

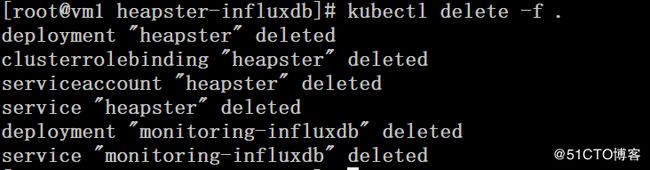

为了避免环境干扰,我们需要删除前文部署好的heapster

# cd yaml/heapster-influxdb/

# kubectl delete -f .二、生成证书文件

这些证书文件主要用在Metrics API aggregator 上

# cat front-proxy-ca-csr.json

{
    "CN": "kubernetes",
    "key": {
        "algo": "rsa",
        "size": 2048
    }
}

# cat front-proxy-client-csr.json

{
    "CN": "front-proxy-client",
    "key": {
        "algo": "rsa",
        "size": 2048
    }
}

# cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare front-proxy-ca

# cfssl gencert -ca=front-proxy-ca.pem \
    -ca-key=front-proxy-ca-key.pem \
    -config=/etc/kubernetes/ca-config.json \
    -profile=kubernetes \
    front-proxy-client-csr.json | cfssljson -bare front-proxy-client

# mv front-proxy* /etc/ssl/kubernetes

# scp -rp /etc/ssl/kubernetes vm2:/etc/ssl/kubernetes

# scp -rp /etc/ssl/kubernetes vm3:/etc/ssl/kubernetes

III. Modify the Master Configuration Files

1. kube-apiserver

# cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/sbin/kube-apiserver \
  --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
  --advertise-address=192.168.115.5 \
  --bind-address=192.168.115.5 \
  --insecure-bind-address=127.0.0.1 \
  --authorization-mode=Node,RBAC \
  --runtime-config=rbac.authorization.k8s.io/v1alpha1 \
  --kubelet-https=true \
  --enable-bootstrap-token-auth=true \
  --token-auth-file=/etc/kubernetes/token.csv \
  --service-cluster-ip-range=10.254.0.0/16 \
  --service-node-port-range=8400-9000 \
  --tls-cert-file=/etc/ssl/kubernetes/kubernetes.pem \
  --tls-private-key-file=/etc/ssl/kubernetes/kubernetes-key.pem \
  --client-ca-file=/etc/ssl/etcd/ca.pem \
  --service-account-key-file=/etc/ssl/etcd/ca-key.pem \
  --etcd-cafile=/etc/ssl/etcd/ca.pem \
  --etcd-certfile=/etc/ssl/kubernetes/kubernetes.pem \
  --etcd-keyfile=/etc/ssl/kubernetes/kubernetes-key.pem \
  --etcd-servers=https://192.168.115.5:2379,https://192.168.115.6:2379,https://192.168.115.7:2379 \
  --enable-swagger-ui=true \
  --allow-privileged=true \
  --apiserver-count=3 \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/var/lib/audit.log \
  --event-ttl=1h \
  --requestheader-client-ca-file=/etc/ssl/kubernetes/front-proxy-ca.pem \
  --requestheader-allowed-names=aggregator \
  --requestheader-extra-headers-prefix=X-Remote-Extra- \
  --requestheader-group-headers=X-Remote-Group \
  --requestheader-username-headers=X-Remote-User \
  --proxy-client-cert-file=/etc/ssl/kubernetes/front-proxy-client.pem \
  --proxy-client-key-file=/etc/ssl/kubernetes/front-proxy-client-key.pem \
  --runtime-config=api/all=true \
  --enable-aggregator-routing=true \
  --v=2
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

2. kube-controller-manager

# cat /usr/lib/systemd/system/kube-controller-manager.service

[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
ExecStart=/usr/local/sbin/kube-controller-manager \
  --address=127.0.0.1 \
  --master=http://127.0.0.1:8080 \
  --allocate-node-cidrs=true \
  --service-cluster-ip-range=10.254.0.0/16 \
  --cluster-cidr=172.30.0.0/16 \
  --cluster-name=kubernetes \
  --cluster-signing-cert-file=/etc/ssl/etcd/ca.pem \
  --cluster-signing-key-file=/etc/ssl/etcd/ca-key.pem \
  --service-account-private-key-file=/etc/ssl/etcd/ca-key.pem \
  --root-ca-file=/etc/ssl/etcd/ca.pem \
  --leader-elect=true \
  --v=2 \
  --horizontal-pod-autoscaler-use-rest-clients=true
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target

3. Restart the services

# systemctl daemon-reload

# systemctl restart kube-apiserver.service

# systemctl restart kube-controller-manager

IV. Deploy metrics-server

1. Pull the docker image (reaching gcr.io may require a proxy) and push it to the local registry so it is managed in one place

# docker pull gcr.io/google_containers/metrics-server-amd64:v0.2.1

# docker tag gcr.io/google_containers/metrics-server-amd64:v0.2.1 \
    registry.59iedu.com/google_containers/metrics-server-amd64:v0.2.1

# docker push registry.59iedu.com/google_containers/metrics-server-amd64:v0.2.1

2. Get the yaml files and modify them

# git clone https://github.com/stefanprodan/k8s-prom-hpa

# cd k8s-prom-hpa/

# cat metrics-server/metrics-server-deployment.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: metrics-server

namespace: kube-system

---

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: metrics-server

namespace: kube-system

labels:

k8s-app: metrics-server

spec:

selector:

matchLabels:

k8s-app: metrics-server

template:

metadata:

name: metrics-server

labels:

k8s-app: metrics-server

spec:

serviceAccountName: metrics-server

containers:

- name: metrics-server

image: registry.59iedu.com/google_containers/metrics-server-amd64:v0.2.1

imagePullPolicy: Always

volumeMounts:

- mountPath: /etc/ssl/kubernetes/

name: ca-ssl

command:

- /metrics-server

- --source=kubernetes.summary_api:''

- --requestheader-client-ca-file=/etc/ssl/kubernetes/front-proxy-ca.pem

volumes:

- name: ca-ssl

hostPath:

path: /etc/ssl/kubernetes# cat metrics-server/metrics-server-service.yaml

apiVersion: v1

kind: Service

metadata:

name: metrics-server

namespace: kube-system

labels:

kubernetes.io/name: "Metrics-server"

spec:

selector:

k8s-app: metrics-server

ports:

- port: 443

protocol: TCP

targetPort: 443

nodePort: 8499

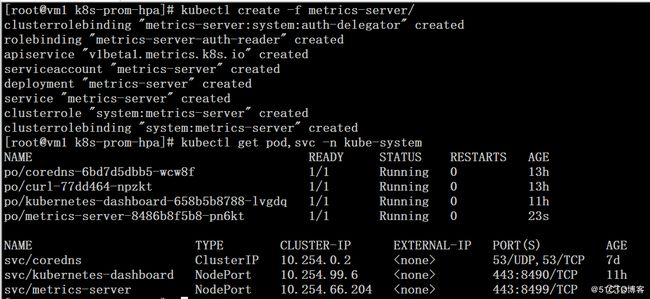

type: NodePort3、通过yaml文件创建对应的资源

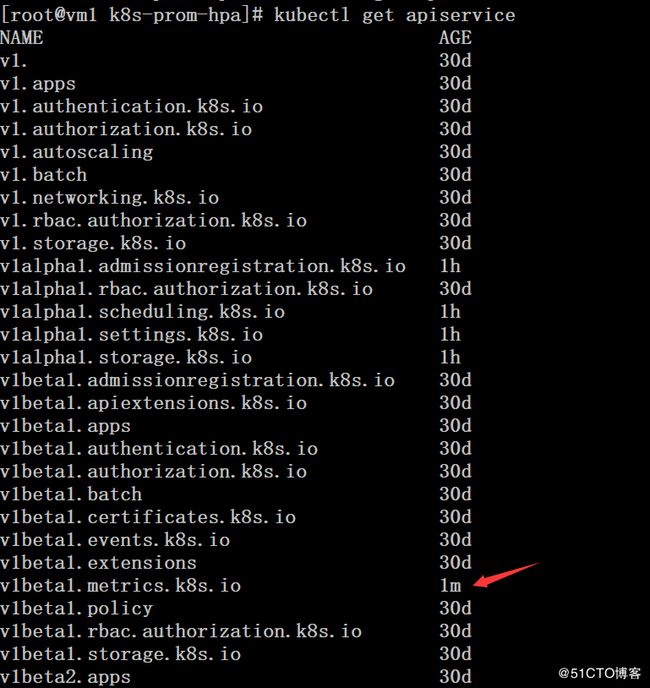

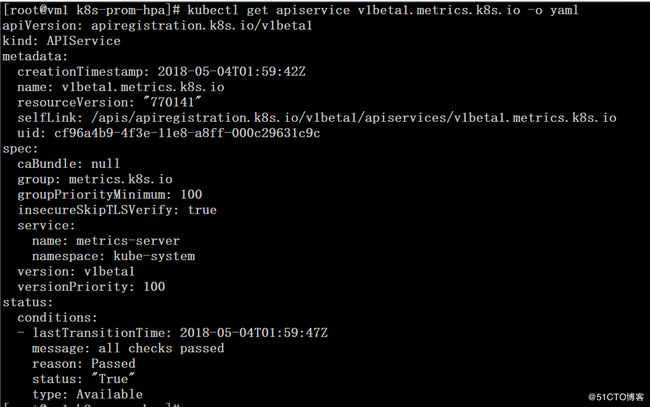

# kubectl create -f ./metrics-server

# kubectl get pod,svc -n kube-system

# kubectl get apiservice

# kubectl get apiservice v1beta1.metrics.k8s.io -o yaml

# wget http://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

# rpm -ivh epel-release-latest-7.noarch.rpm

# yum -y install jq

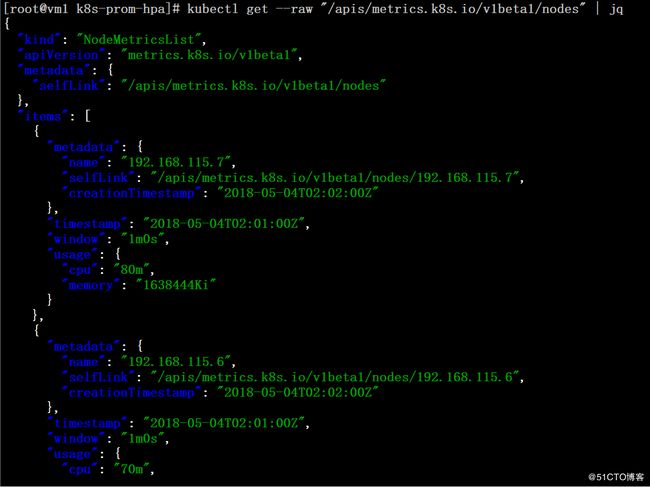

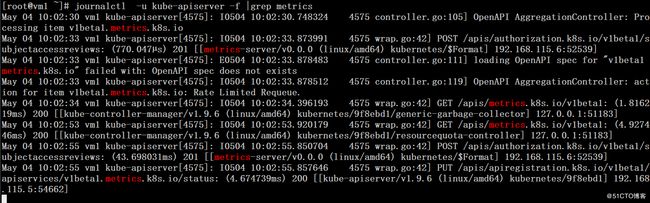

# kubectl get --raw "/apis/metrics.k8s.io/v1beta1/nodes" | jq .

# journalctl -u kube-apiserver -f |grep metrics
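To make the raw Metrics API output easier to read, the following sketch parses a response shaped like the NodeMetricsList returned by /apis/metrics.k8s.io/v1beta1/nodes and converts the usage quantities to millicores and MiB. The sample payload is illustrative only: the node names and usage numbers are invented, not captured from the cluster in this article. The helper names are hypothetical, and the quantity parsing handles only the suffixes listed in the code.

```python
import json

# Illustrative payload shaped like a metrics.k8s.io/v1beta1 NodeMetricsList.
# Node names and usage values are made up for this sketch.
SAMPLE = """
{
  "kind": "NodeMetricsList",
  "apiVersion": "metrics.k8s.io/v1beta1",
  "items": [
    {"metadata": {"name": "vm1"},
     "usage": {"cpu": "250m", "memory": "1048576Ki"}},
    {"metadata": {"name": "vm2"},
     "usage": {"cpu": "125000000n", "memory": "2097152Ki"}}
  ]
}
"""


def cpu_to_nanocores(quantity: str) -> int:
    """Convert a CPU quantity such as '250m' or '125000000n' to nanocores."""
    for suffix, factor in (("n", 1), ("u", 1_000), ("m", 1_000_000)):
        if quantity.endswith(suffix):
            return int(quantity[:-1]) * factor
    # No suffix: the value is in whole (or fractional) cores.
    return int(float(quantity) * 1_000_000_000)


def memory_to_bytes(quantity: str) -> int:
    """Convert a memory quantity such as '200Mi' to bytes (Ki/Mi/Gi only)."""
    for suffix, factor in (("Ki", 1024), ("Mi", 1024**2), ("Gi", 1024**3)):
        if quantity.endswith(suffix):
            return int(quantity[:-2]) * factor
    return int(quantity)


def summarize(doc: dict) -> list:
    """Return (node name, CPU in millicores, memory in MiB) per node."""
    rows = []
    for item in doc["items"]:
        usage = item["usage"]
        millicores = cpu_to_nanocores(usage["cpu"]) / 1_000_000
        mib = memory_to_bytes(usage["memory"]) / 1024**2
        rows.append((item["metadata"]["name"], millicores, mib))
    return rows


if __name__ == "__main__":
    for name, cpu, mem in summarize(json.loads(SAMPLE)):
        print(f"{name}: {cpu:.0f}m CPU, {mem:.0f}Mi memory")
```

On a live cluster, the output of the kubectl get --raw command could be fed to json.loads in place of SAMPLE.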

五、创建HPA与测试

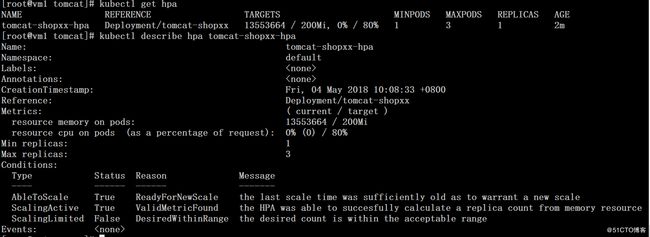

1、通过yaml文件创建hpa

设置tomcat-shopxx deployment的最大最小副本数,HPA对应的pod CPU和内存指标

# kubectl get hpa

# kubectl get pod,svc

# cd yaml/tomcat/

# cat hpa.yaml

apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: tomcat-shopxx-hpa
spec:
  scaleTargetRef:
    apiVersion: extensions/v1beta1
    kind: Deployment
    name: tomcat-shopxx
  minReplicas: 1
  maxReplicas: 3
  metrics:
  - type: Resource
    resource:
      name: cpu
      targetAverageUtilization: 80
  - type: Resource
    resource:
      name: memory
      targetAverageValue: 200Mi

# kubectl create -f hpa.yaml

# kubectl get hpa

# kubectl describe hpa tomcat-shopxx-hpa

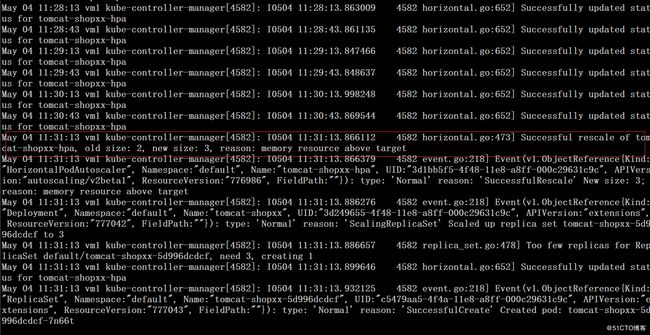
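To see how the targets in hpa.yaml turn into a replica count, here is a simplified sketch of the documented HPA scaling rule: desired replicas = ceil(current replicas × current metric value / target value), evaluated per metric, with the largest proposal winning and the result clamped to minReplicas and maxReplicas. The 0.1 tolerance is the controller's default and is treated as an assumption here. The function names and the usage numbers are hypothetical, and the real controller also accounts for pod readiness, missing metrics and scaling delays, which this sketch leaves out.

```python
import math

# Default HPA tolerance: no scaling while the usage/target ratio stays within
# 10% of 1.0. Assumed here; a cluster may override it.
TOLERANCE = 0.1


def desired_replicas(current_replicas, current_value, target_value,
                     tolerance=TOLERANCE):
    """Replica count proposed by a single metric."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: keep as is
    return math.ceil(current_replicas * ratio)


def hpa_decision(current_replicas, metrics, min_replicas=1, max_replicas=3):
    """Largest per-metric proposal, clamped to [min_replicas, max_replicas].

    metrics is a list of (current_value, target_value) pairs, for example
    CPU utilization in percent and average memory in Mi.
    """
    proposals = [desired_replicas(current_replicas, cur, tgt)
                 for cur, tgt in metrics]
    return max(min_replicas, min(max_replicas, max(proposals)))


if __name__ == "__main__":
    # Hypothetical load: 1 replica at 200% CPU (target 80%) and an average
    # of 150Mi memory (target 200Mi).
    print(hpa_decision(1, [(200, 80), (150, 200)]))
```

The defaults min_replicas=1 and max_replicas=3 mirror the values in hpa.yaml; the per-metric maximum is why a single overloaded metric is enough to trigger a scale-out.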

3、观察HPA自动扩展过程中的kube-controller-manager的日志

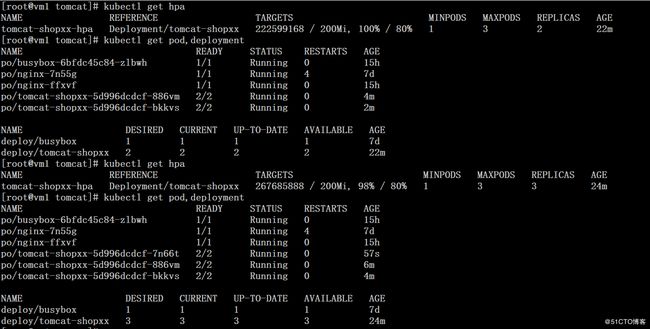

# journalctl -u kube-controller-manager -f# kubectl get hpa

# kubectl get pod,deployment

下文将介绍基于prometheus实现自定义metrics的hpa,尽情关注!

参考:

https://github.com/kubernetes-incubator/metrics-server

https://kubernetes.io/docs/tasks/debug-application-cluster/core-metrics-pipeline/

https://www.cnblogs.com/fengjian2016/p/8819657.html