Graph Convolutional Networks (GCN): Representative Spectral Graph Convolution Layers

Spectral-based ConvGNN

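This post focuses on listing the main stages in the development of spectral graph convolution. To understand the topic from scratch, I recommend first reading the post "Graph Convolutional Network: Spectral Convolution".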

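Convolution based on graph spectral methods is one of the main approaches to graph convolutional networks. A common drawback of spectral methods is that the entire graph must be loaded into memory to perform a graph convolution, which is inefficient for large graphs. [8]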

1 Spectral networks and locally connected networks on graphs

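[J. Bruna, 2014, 1] and [M. Henaff, 2015, 2], both co-authored by Y. LeCun, are the pioneering works on spectral graph convolutional networks. [J. Bruna, 2014, 1] uses the eigenvectors of the graph Laplacian as the Fourier transform matrix of the graph and then applies the convolution theorem, which yields the original spectral graph convolution: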

$$x_{k+1,j} = h \left( U \sum_{i=1}^{f_{k-1}} F_{k,i,j} U^T x_{k,i} \right) \quad (j = 1, \cdots, f_k) \tag{1.1}$$

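where $U$ is the matrix of Laplacian eigenvectors, $x_{k,i}$ is the $i$-th of the $f_{k-1}$ input channels, $F_{k,i,j}$ is a diagonal matrix (the learnable convolution kernel in the spectral domain), and $h$ is a real-valued nonlinearity.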
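A minimal NumPy sketch of Eq. (1.1), assuming a small graph given as a dense symmetric adjacency matrix and using the combinatorial Laplacian $L = D - A$ (the choice of Laplacian is an assumption here). The helper names `laplacian` and `spectral_conv` are hypothetical. Each kernel $F_{k,i,j}$ is stored as its diagonal, one length-$N$ vector per (input channel, output channel) pair.

```python
import numpy as np

def laplacian(A):
    """Combinatorial Laplacian L = D - A of an undirected graph (assumed choice)."""
    return np.diag(A.sum(axis=1)) - A

def spectral_conv(A, X, F, h=np.tanh):
    """Eq. (1.1): x_{k+1,j} = h(U sum_i F_{k,i,j} U^T x_{k,i}).

    A: (N, N) symmetric adjacency matrix
    X: (N, f_in) input signals, one column per channel x_{k,i}
    F: (f_in, f_out, N) diagonal entries of each kernel F_{k,i,j}
    """
    _, U = np.linalg.eigh(laplacian(A))   # columns of U: graph Fourier basis
    X_hat = U.T @ X                       # graph Fourier transform of every input channel
    # For each output channel j: sum_i diag(F[i, j]) @ X_hat[:, i], all j at once
    Y_hat = np.einsum('ijn,ni->nj', F, X_hat)
    return h(U @ Y_hat)                   # inverse transform, then the nonlinearity

# Usage: 4-vertex path graph, 2 input channels, 3 output channels
rng = np.random.default_rng(0)
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = rng.normal(size=(4, 2))
F = rng.normal(size=(2, 3, 4))            # learnable spectral multipliers
Y = spectral_conv(A, X, F)
```

Note that every kernel has $N$ free parameters and is not spatially localized, and the full eigendecomposition costs $O(N^3)$; these costs motivate the later methods.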

2 Deep convolutional networks on graph-structured data

[M. Henaff, 2015, 2] adds an interpolation operation to [J. Bruna, 2014, 1], so that a kernel with few parameters can be interpolated up to full size, i.e. $\mathcal{K}: \mathbb{R}^{N_0 \times N_0} \rightarrow \mathbb{R}^{N \times N}$:

$$w_g = \mathcal{K} \tilde{w}_g \tag{2.1}$$
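One simple way to realize the interpolation in Eq. (2.1) is a linear map from $N_0$ control values to the $N$ diagonal entries of the kernel. The sketch below is an illustrative assumption: it builds a hypothetical matrix `K` of shape $N \times N_0$ by piecewise-linear interpolation over the sorted eigenvalue index, whereas the original works use smoother kernels such as splines. Only the $N_0$ coefficients $\tilde{w}_g$ are learned per filter.

```python
import numpy as np

def interpolation_matrix(N, N0):
    """Hypothetical K (N x N0): column m holds the linear-interpolation weights of
    control point m, with the N0 control points spread evenly over the N frequencies."""
    grid = np.arange(N0)                      # control-point positions
    pos = np.linspace(0, N0 - 1, N)           # position of each frequency on that grid
    basis = np.eye(N0)
    return np.stack([np.interp(pos, grid, basis[m]) for m in range(N0)], axis=1)

N, N0 = 10, 4
K = interpolation_matrix(N, N0)
w_tilde = np.random.default_rng(0).normal(size=N0)  # learnable coefficients
w = K @ w_tilde                                     # full-length spectral multiplier w_g
kernel = np.diag(w)                                 # diagonal kernel as in Eq. (1.1)
```

Because neighbouring frequencies share control points, the resulting multiplier is smooth in the spectral domain, which is what ties the filter to spatial localization in these papers.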

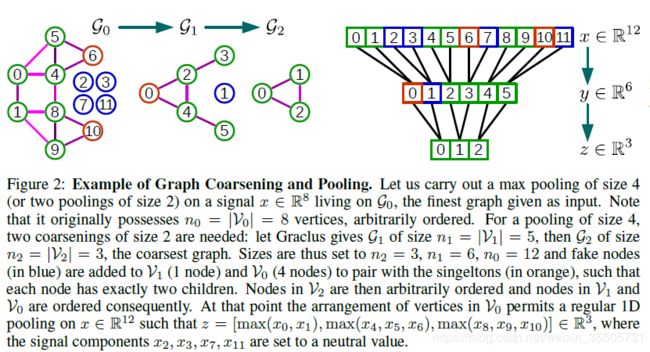

In addition, the paper uses hierarchical graph clustering for pooling.

3 Convolutional neural networks on graphs with fast localized spectral filtering

[M. Defferrard, 2016, 3] uses Chebyshev polynomials as the convolution kernel. The kernel of order $K-1$ (i.e. with $K$ coefficients) is:

$$\begin{aligned} g_{\theta}(\Lambda) &= \sum_{k=0}^{K-1} \theta_{k} T_k(\tilde{\Lambda}), \\ \tilde{\Lambda} &= \frac{2 \Lambda}{\lambda_{\max}} - I_N \end{aligned} \tag{3.1}$$

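Here $T_k$ is the Chebyshev polynomial of order $k$ and $\lambda_{\max}$ is the largest eigenvalue of $L$, so the spectrum of $\tilde{\Lambda}$ lies in $[-1, 1]$. Because $L$ is symmetric, $L = U \Lambda U^T$ with orthogonal $U$, and therefore $U g(\tilde{\Lambda}) U^T = g(\tilde{L})$ with $\tilde{L} = \frac{2L}{\lambda_{\max}} - I_N$ for any polynomial $g$. This cleverly simplifies the convolution: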

$$\begin{aligned} x_{k+1,j} &= h \left( U \sum_{i=1}^{f_{k-1}} g_{\theta_{i,j}}(\tilde{\Lambda}) U^T x_{k,i} \right) \\ &= h \left( \sum_{i=1}^{f_{k-1}} \left( U g_{\theta_{i,j}}(\tilde{\Lambda}) U^T \right) x_{k,i} \right) \\ &= h \left( \sum_{i=1}^{f_{k-1}} g_{\theta_{i,j}}(\tilde{L}) x_{k,i} \right) \quad (j = 1, \cdots, f_k) \end{aligned} \tag{3.2}$$

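The kernel is thus a polynomial of the Laplacian $L$ itself, so no eigendecomposition is needed. The paper also uses a balanced binary tree structure for pooling.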
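To make Eq. (3.2) concrete, here is a minimal NumPy sketch that evaluates $g_\theta(\tilde{L})x$ with the standard Chebyshev recurrence $T_0(y)=1$, $T_1(y)=y$, $T_k(y)=2yT_{k-1}(y)-T_{k-2}(y)$, using only matrix-vector products. The function name `cheb_filter` is hypothetical, and $\lambda_{\max}$ is supplied by the caller (an assumption; for the normalized Laplacian the bound $\lambda_{\max} \le 2$ can be used instead of computing it).

```python
import numpy as np

def cheb_filter(L, x, theta, lmax):
    """Compute g_theta(L~) x = sum_k theta_k T_k(L~) x with the Chebyshev
    recurrence; only matrix-vector products, no eigendecomposition."""
    N = L.shape[0]
    L_t = 2.0 * L / lmax - np.eye(N)       # rescale the spectrum into [-1, 1]
    t_prev, t_cur = x, L_t @ x             # T_0(L~) x and T_1(L~) x
    out = theta[0] * t_prev
    if len(theta) > 1:
        out = out + theta[1] * t_cur
    for k in range(2, len(theta)):
        # T_k(L~) x = 2 L~ T_{k-1}(L~) x - T_{k-2}(L~) x
        t_prev, t_cur = t_cur, 2.0 * L_t @ t_cur - t_prev
        out = out + theta[k] * t_cur
    return out

# Usage on a small graph with the combinatorial Laplacian
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
L = np.diag(A.sum(axis=1)) - A
y = cheb_filter(L, np.arange(4.0), theta=np.array([0.5, -1.0, 0.3]), lmax=4.0)
```

Since $T_k(\tilde{L})$ only mixes vertices within $k$ hops, a kernel with $K$ coefficients is $(K-1)$-localized, and filtering costs $K$ (sparse) matrix-vector products.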

4 Semi-supervised classification with graph convolutional networks

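[T. N. Kipf, 2017, 4] simplifies [M. Defferrard, 2016, 3] further. Using the normalized Laplacian $L = I_N - D^{-\frac{1}{2}} A D^{-\frac{1}{2}}$, keeping only the first-order terms ($K = 2$), approximating $\lambda_{\max} \approx 2$ (so that $\tilde{L} = L - I_N = -D^{-\frac{1}{2}} A D^{-\frac{1}{2}}$) and setting $\theta_{0}' = -\theta_{1}' = \theta$, the spectral graph convolution simplifies to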

$$g_{\theta'} \star x \approx \theta \left( I_N + D^{-\frac{1}{2}} A D^{-\frac{1}{2}} \right) x \tag{4.1}$$

Note that $I_N + D^{-\frac{1}{2}} A D^{-\frac{1}{2}}$ has eigenvalues in the range $[0, 2]$, so applying this operator repeatedly in a deep model can lead to numerical instability and exploding/vanishing gradients.

The paper therefore proposes the renormalization trick:

$$I_N + D^{-\frac{1}{2}} A D^{-\frac{1}{2}} \rightarrow \tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}}.$$

where $\tilde{A} = A + I_N$ and $\tilde{D}_{i,i} = \sum_{j} \tilde{A}_{i,j}$.
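The effect of the renormalization trick can be checked numerically. This NumPy sketch, with hypothetical helper names `sym_norm` and `renormalized`, compares the spectra of $I_N + D^{-\frac{1}{2}} A D^{-\frac{1}{2}}$ and $\tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}}$ on a 4-vertex path graph (an arbitrary example; it is bipartite, so both bounds $0$ and $2$ are actually attained).

```python
import numpy as np

def sym_norm(A):
    """D^{-1/2} A D^{-1/2}, assuming no isolated vertices."""
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    return d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]

def renormalized(A):
    """Renormalization trick: D~^{-1/2} (A + I_N) D~^{-1/2}."""
    return sym_norm(A + np.eye(A.shape[0]))

# Path graph on 4 vertices
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
before = np.linalg.eigvalsh(np.eye(4) + sym_norm(A))   # eigenvalues, ascending
after = np.linalg.eigvalsh(renormalized(A))
print(before.round(3), after.round(3))
```

For this path the first spectrum is $\{0, 0.5, 1.5, 2\}$, while after renormalization the largest eigenvalue is 1 and all eigenvalues lie in $(-1, 1]$, so repeated application no longer amplifies signals.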

Given an input $X \in \mathbb{R}^{N \times C}$ with $C$ input channels, filtering with $\Theta \in \mathbb{R}^{C \times F}$ produces the convolved output $Z \in \mathbb{R}^{N \times F}$ with $F$ channels:

$$Z = \tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}} X \Theta$$
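A dense NumPy sketch of the layer $Z = \tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}} X \Theta$, assuming a small graph given as a dense adjacency matrix (practical implementations use sparse matrices). `gcn_layer` is a hypothetical name, and the random weights in the usage lines stand in for learned parameters.

```python
import numpy as np

def gcn_layer(A, X, Theta):
    """Z = D~^{-1/2} A~ D~^{-1/2} X Theta with A~ = A + I_N (dense, small graphs only)."""
    A_tilde = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_tilde.sum(axis=1))     # D~^{-1/2} as a vector
    A_hat = d_inv_sqrt[:, None] * A_tilde * d_inv_sqrt[None, :]
    return A_hat @ X @ Theta

# Usage: two stacked layers with a ReLU in between
rng = np.random.default_rng(0)
A = np.array([[0, 1, 1, 0, 0],
              [1, 0, 1, 0, 0],
              [1, 1, 0, 1, 0],
              [0, 0, 1, 0, 1],
              [0, 0, 0, 1, 0]], dtype=float)
X = rng.normal(size=(5, 3))                              # C = 3 input channels
H = np.maximum(gcn_layer(A, X, rng.normal(size=(3, 4))), 0.0)
Z = gcn_layer(A, H, rng.normal(size=(4, 2)))             # F = 2 output channels
```

In the paper, two such layers are stacked, with a ReLU after the first and a row-wise softmax after the second, for semi-supervised node classification.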

5 Cayleynets: Graph convolutional neural networks with complex rational spectral filters

Unlike [M. Defferrard, 2016, 3] and [T. N. Kipf, 2017, 4], [R. Levie, 2017, 5] uses Cayley polynomials as the convolution kernel:

$$\begin{aligned} g_{\vec{c},h}(\lambda) &= c_0 + 2 \Re \left\{ \sum_{j=1}^{r} c_j \left( h \lambda - i \right)^{j} \left( h \lambda + i \right)^{-j} \right\}, \\ \vec{x}_{k+1} &= g_{\vec{c},h}(L) \vec{x}_k \\ &= c_0 \vec{x}_{k} + 2 \Re \left\{ \sum_{j=1}^{r} c_j \left( h L - i I_N \right)^{j} \left( h L + i I_N \right)^{-j} \vec{x}_{k} \right\}. \end{aligned} \tag{5.1}$$

where $i$ is the imaginary unit, and $\vec{c} = \{c_0, c_1, \cdots, c_r\}$ (one real coefficient $c_0$ and $r$ complex coefficients) and the spectral zoom $h > 0$ are learnable parameters.
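A dense NumPy sketch of Eq. (5.1) for a single signal vector. It assumes a symmetric Laplacian `L`, a real `c0`, complex coefficients `c` ($c_1, \dots, c_r$) and $h > 0$; `cayley_filter` is a hypothetical name. For clarity it applies $(hL + iI_N)^{-1}$ with an exact linear solve; the paper instead approximates these inversions with Jacobi iterations to avoid exact inversion on large graphs, which this sketch does not do.

```python
import numpy as np

def cayley_filter(L, x, c0, c, h):
    """Eq. (5.1): c0 x + 2 Re{ sum_j c_j (hL - iI)^j (hL + iI)^{-j} x }."""
    N = L.shape[0]
    I = np.eye(N)
    num = h * L - 1j * I                   # hL - iI
    den = h * L + 1j * I                   # hL + iI
    acc = np.zeros_like(x, dtype=complex)
    y = x.astype(complex)
    for cj in c:
        # Both factors are functions of L and commute, so
        # y <- (hL - iI)(hL + iI)^{-1} y gives C^j x after j steps.
        y = num @ np.linalg.solve(den, y)
        acc += cj * y
    return c0 * x + 2.0 * acc.real

# Usage on a small graph with the combinatorial Laplacian
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
L = np.diag(A.sum(axis=1)) - A
out = cayley_filter(L, np.arange(4.0), c0=0.7,
                    c=np.array([0.2 + 0.1j, -0.3 + 0.5j]), h=0.8)
```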

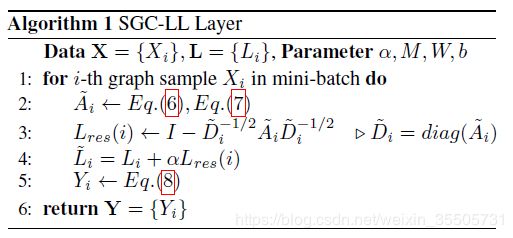

6 Adaptive graph convolutional neural networks

[R. Li, 2018, 6] parameterizes the Laplacian $L$ by splitting it into two parts: the original Laplacian and a learned (optimized) residual part:

$$\hat{L} = L + \alpha L_{\text{res}}. \tag{6.1}$$

The parameterized part $L_{\text{res}}$ is derived from a parameterized adjacency matrix $\hat{A}$, whose entries are the weights between vertices; hence $L_{\text{res}}$ is essentially determined by a parameterized distance.

The weights between vertices are obtained from a Mahalanobis distance with a learnable matrix $M$:

$$\begin{aligned} \mathbb{D}(x_i, x_j) &= \sqrt{\left( x_i - x_j \right)^T M \left( x_i - x_j \right)}, \\ \mathbb{G}(x_i, x_j) &= \exp \left( \frac{-\mathbb{D}(x_i, x_j)}{2 \sigma^2} \right). \end{aligned} \tag{6.2}$$
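A minimal NumPy sketch of Eq. (6.2) that computes all pairwise distances and Gaussian weights from vertex features. The helper name `gaussian_weights`, the feature matrix `X` and the default `sigma` are illustrative assumptions. `M` must be positive semidefinite for the square root to be real; the usage lines ensure this by building it as $M = W W^T$ from an arbitrary matrix $W$, which is also an assumption about how one might parameterize it.

```python
import numpy as np

def gaussian_weights(X, M, sigma=1.0):
    """Eq. (6.2): Mahalanobis distance with metric M, then a Gaussian kernel.

    X: (N, d) vertex features; M: (d, d) positive semidefinite matrix.
    """
    diff = X[:, None, :] - X[None, :, :]                  # (N, N, d): x_i - x_j
    sq = np.einsum('abi,ij,abj->ab', diff, M, diff)       # (x_i - x_j)^T M (x_i - x_j)
    D = np.sqrt(np.maximum(sq, 0.0))                      # guard tiny negative round-off
    G = np.exp(-D / (2.0 * sigma ** 2))
    return D, G

# Usage
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))      # 6 vertices with 4 features each
W = rng.normal(size=(4, 4))      # hypothetical learnable factor
D, G = gaussian_weights(X, W @ W.T)
```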

The convolution layer is:

$$Y = \left( U \sum_{k=0}^{K-1} \left( \mathcal{F}(L, X, \Gamma) \right)^k U^T X \right) W + b. \tag{6.3}$$

In the figure below, Eq. (6) and Eq. (7) correspond to the two lines of Eq. (6.2), and Eq. (8) corresponds to Eq. (6.3).

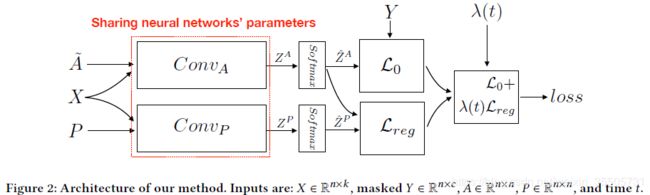

7 Dual graph convolutional networks for graph-based semi-supervised classification

[C. Zhuang, 2018, 7] uses two convolution operations:

- Local Consistency Convolution, $\mathrm{Conv}_A$:

$$\mathrm{Conv}_{A}^{(i)}(X) = Z^{(i)} = \sigma \left( \hat{D}^{-\frac{1}{2}} \hat{A} \hat{D}^{-\frac{1}{2}} Z^{(i-1)} W^{(i)} \right). \tag{7.1}$$

where $\hat{A} = A + I_N$ and $\hat{D}_{i,i} = \sum_{j} \hat{A}_{i,j}$.

- Global Consistency Convolution, $\mathrm{Conv}_P$:

$$\mathrm{Conv}_{P}^{(i)}(X) = Z^{(i)} = \sigma \left( D^{-\frac{1}{2}} P D^{-\frac{1}{2}} Z^{(i-1)} W^{(i)} \right). \tag{7.2}$$

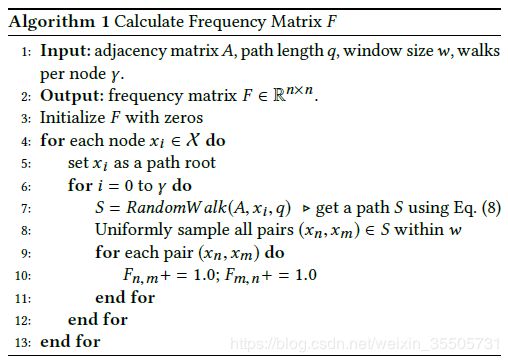

where $P$ is the PPMI (positive pointwise mutual information) matrix and $D_{i,i} = \sum_{j} P_{i,j}$.

To compute the PPMI matrix, one first computes the frequency matrix $F$, as shown in the figure above.

Eq. (8) in the figure above refers to Eq. (7.3):

$$p\big(s(t+1) = x_j \mid s(t) = x_i\big) = \frac{A_{i,j}}{\sum_{j} A_{i,j}}. \tag{7.3}$$
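The frequency-matrix algorithm itself is given only in the figure, so the following is a hedged NumPy sketch under stated assumptions: walks follow the transition probabilities of Eq. (7.3), and $F_{i,j}$ counts how often $x_i$ and $x_j$ appear within a fixed window of each other on sampled walks. The helpers `transition_matrix` and `frequency_matrix` and the parameters `walks_per_node`, `walk_len`, `window` and `seed` are hypothetical; consult the paper for the exact procedure.

```python
import numpy as np

def transition_matrix(A):
    """Eq. (7.3): p(s(t+1)=x_j | s(t)=x_i) = A_ij / sum_j A_ij (row-normalized A)."""
    return A / A.sum(axis=1, keepdims=True)

def frequency_matrix(A, walks_per_node=10, walk_len=20, window=2, seed=0):
    """Hypothetical sampler: count co-occurrences of vertex pairs that appear
    within `window` steps of each other on random walks."""
    rng = np.random.default_rng(seed)
    T = transition_matrix(A)
    N = A.shape[0]
    F = np.zeros((N, N))
    for start in range(N):
        for _ in range(walks_per_node):
            walk = [start]
            for _ in range(walk_len - 1):
                walk.append(rng.choice(N, p=T[walk[-1]]))  # one step of the walk
            for a, u in enumerate(walk):
                for v in walk[a + 1 : a + 1 + window]:       # pairs inside the window
                    F[u, v] += 1
                    F[v, u] += 1
    return F

A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
F = frequency_matrix(A)
```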

The PPMI matrix is computed as follows:

$$\begin{aligned} p_{i,j} &= \frac{F_{i,j}}{\sum_{i,j} F_{i,j}}, \\ p_{i,*} &= \frac{\sum_j F_{i,j}}{\sum_{i,j} F_{i,j}}, \\ p_{*,j} &= \frac{\sum_i F_{i,j}}{\sum_{i,j} F_{i,j}}, \\ P_{i,j} &= \max \left\{ \text{pmi}_{i,j} = \log \left( \frac{p_{i,j}}{p_{i,*}\, p_{*,j}} \right), 0 \right\}. \end{aligned} \tag{7.4}$$
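A small NumPy sketch of Eq. (7.4) that turns a frequency matrix $F$ into the PPMI matrix $P$, followed by the symmetric normalization $D^{-\frac{1}{2}} P D^{-\frac{1}{2}}$ used by $\mathrm{Conv}_P$. The names `ppmi` and `conv_p_operator` are hypothetical. Entries with $F_{i,j} = 0$ have $\text{pmi}_{i,j} = -\infty$, which the $\max\{\cdot, 0\}$ clips to 0, so the sketch sets them to 0 directly; rows of $P$ that sum to zero get a zero normalization weight (an assumption to avoid division by zero).

```python
import numpy as np

def ppmi(F):
    """Eq. (7.4): positive pointwise mutual information of a frequency matrix F."""
    p_ij = F / F.sum()
    p_i = p_ij.sum(axis=1, keepdims=True)        # p_{i,*}
    p_j = p_ij.sum(axis=0, keepdims=True)        # p_{*,j}
    denom = p_i * p_j                            # (N, N) by broadcasting
    pmi = np.zeros_like(p_ij)
    mask = p_ij > 0                              # log(0) = -inf would be clipped to 0
    pmi[mask] = np.log(p_ij[mask] / denom[mask])
    return np.maximum(pmi, 0.0)

def conv_p_operator(P):
    """D^{-1/2} P D^{-1/2} with D_ii = sum_j P_ij, as used in Eq. (7.2)."""
    d = P.sum(axis=1)
    safe = np.where(d > 0, d, 1.0)
    d_inv_sqrt = np.where(d > 0, 1.0 / np.sqrt(safe), 0.0)
    return d_inv_sqrt[:, None] * P * d_inv_sqrt[None, :]

F = np.array([[0.0, 2.0],
              [2.0, 0.0]])
P = ppmi(F)                  # off-diagonal entries equal log(2)
S = conv_p_operator(P)
```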

References

[1] J. Bruna, W. Zaremba, A. Szlam, and Y. LeCun, "Spectral networks and locally connected networks on graphs," in Proc. of ICLR, 2014.

[2] M. Henaff, J. Bruna, and Y. LeCun, "Deep convolutional networks on graph-structured data," arXiv preprint arXiv:1506.05163, 2015.

[3] M. Defferrard, X. Bresson, and P. Vandergheynst, "Convolutional neural networks on graphs with fast localized spectral filtering," in Proc. of NIPS, 2016, pp. 3844–3852.

[4] T. N. Kipf and M. Welling, "Semi-supervised classification with graph convolutional networks," in Proc. of ICLR, 2017.

[5] R. Levie, F. Monti, X. Bresson, and M. M. Bronstein, "Cayleynets: Graph convolutional neural networks with complex rational spectral filters," IEEE Transactions on Signal Processing, vol. 67, no. 1, pp. 97–109, 2017.

[6] R. Li, S. Wang, F. Zhu, and J. Huang, "Adaptive graph convolutional neural networks," in Proc. of AAAI, 2018, pp. 3546–3553.

[7] C. Zhuang and Q. Ma, "Dual graph convolutional networks for graph-based semi-supervised classification," in Proc. of WWW, 2018, pp. 499–508.

[8] Z. Wu, S. Pan, F. Chen, et al., "A comprehensive survey on graph neural networks," arXiv preprint (cs.LG), 2019.