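This project uses ELK, mainly to collect and display logs. This article walks through installing the relevant components and their basic usage.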

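I. Installing Elasticsearch (ES)

1. Download ES from the official site: https://www.elastic.co/cn/downloads/

2. Switch to a non-root user; otherwise startup later fails with the error "can not run elasticsearch as root"

3. Extract the archive: tar -zxvf elasticsearch-7.3.1-linux-x86_64.tar.gz

4. Enter the ES directory: cd elasticsearch-7.3.1/

5. Start ES: ./bin/elasticsearch

6. Stop ES: Ctrl-C

7. Check that ES is reachable, for example by requesting http://127.0.0.1:9200 (9200 is the default HTTP port)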
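
The reachability check in step 7 can also be scripted. The following is only a minimal sketch, assuming Java 11 or later (for java.net.http), an ES node on 127.0.0.1 with the default port 9200, and no authentication enabled. The class and method names (EsPing, rootUri, isReachable) are made up for illustration.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

final class EsPing {
    // Builds the root URL of an ES node, e.g. http://127.0.0.1:9200/
    static URI rootUri(String host, int port) {
        return URI.create("http://" + host + ":" + port + "/");
    }

    // Returns true if the node answers its root endpoint with HTTP 200.
    static boolean isReachable(URI uri) {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(3))
                .build();
        HttpRequest request = HttpRequest.newBuilder(uri)
                .timeout(Duration.ofSeconds(3))
                .GET()
                .build();
        try {
            HttpResponse<String> response =
                    client.send(request, HttpResponse.BodyHandlers.ofString());
            return response.statusCode() == 200;
        } catch (IOException e) {
            return false; // connection refused, timeout, and similar failures
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed address: local node on the default HTTP port.
        URI uri = rootUri("127.0.0.1", 9200);
        System.out.println(uri + " reachable: " + isReachable(uri));
    }
}
```

If ES runs on another machine, pass that host instead; the node must also be configured to listen on a non-loopback address for a remote check to succeed.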

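II. Installing Logstash

1. Download the package logstash-7.3.1.tar.gz from the official site: https://www.elastic.co/cn/downloads/

2. Extract the archive: tar -zxvf logstash-7.3.1.tar.gz

3. Enter the directory: cd logstash-7.3.1

4. cd config

5. mkdir conf

6. cd conf

7. vim kafka.conf, with the following content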

    input {
      kafka {
        topics => "test1Topic"
        type => "kafka"
        bootstrap_servers => "192.168.92.128:9092"
        codec => "json"
      }
    }

    filter {
    }

    output {
      if [type] == "kafka" {
        elasticsearch {
          hosts => ["127.0.0.1:9200"]
          index => "acctlog-kafka-%{+YYYY.MM.dd}"
        }
      }
    }
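
The index setting above produces one index per day: Logstash replaces %{+YYYY.MM.dd} with the date of the event's @timestamp, evaluated in UTC. The sketch below only illustrates that expansion in plain Java; it is not Logstash code, and the class name DailyIndexName and the sample timestamp are made up for illustration.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

final class DailyIndexName {
    // The Joda-style "YYYY.MM.dd" in the Logstash config corresponds to
    // "yyyy.MM.dd" in java.time ("YYYY" would mean week-based year here).
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd").withZone(ZoneOffset.UTC);

    // Returns the index name an event with the given timestamp would land in.
    static String forEvent(String prefix, Instant timestamp) {
        return prefix + DAY.format(timestamp);
    }

    public static void main(String[] args) {
        // 23:30 UTC on Sep 1 is already Sep 2 in UTC+8, but the index uses the UTC date.
        Instant ts = Instant.parse("2019-09-01T23:30:00Z");
        System.out.println(forEvent("acctlog-kafka-", ts)); // acctlog-kafka-2019.09.01
    }
}
```

Because the date is taken in UTC, logs written shortly after local midnight in a UTC+8 time zone still go to the previous day's index. A wildcard such as acctlog-kafka-* matches all of the daily indices.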

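8. From the bin directory of the Logstash installation, start Logstash: nohup ./logstash -f /opt/es/logstash-7.3.1/config/conf > outlogs.file 2>&1 &, where /opt/es/logstash-7.3.1/config/conf is the directory containing the newly added config file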

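III. Installing Kibana

1. Download the package kibana-7.3.1-linux-x86_64.tar.gz from the official site: https://www.elastic.co/cn/downloads/

2. Extract the archive: tar -zxvf kibana-7.3.1-linux-x86_64.tar.gz

3. Edit the config file (in the config directory): vim kibana.yml

Change the following settings

    server.port: 5601
    server.host: "0.0.0.0"
    elasticsearch.hosts: ["http://localhost:9200"]
    i18n.locale: "zh-CN"

4. From the bin directory, start Kibana: nohup ./kibana > outlog.file 2>&1 &, then check the startup log

5. Open the console at http://192.168.92.128:5601/app/kibana (replace the IP with your own)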

IV. Using Kibana

1. Create any Spring Boot project and add the following dependencies to the pom

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
    </dependency>
    <dependency>
        <groupId>com.github.danielwegener</groupId>
        <artifactId>logback-kafka-appender</artifactId>
        <version>0.1.0</version>
    </dependency>
    <dependency>
        <groupId>net.logstash.logback</groupId>
        <artifactId>logstash-logback-encoder</artifactId>
        <version>5.2</version>
    </dependency>

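2. Add a logback-spring.xml (sample files are easy to find online) and include the configuration below. Note that <producerConfig>bootstrap.servers=192.168.92.128:9092</producerConfig> must point to your own Kafka address, and the topic name must match the one configured in kafka.conf above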

    <appender name="KafkaAppender" class="com.github.danielwegener.logback.kafka.KafkaAppender">
        <encoder class="com.github.danielwegener.logback.kafka.encoding.LayoutKafkaMessageEncoder">
            <layout class="net.logstash.logback.layout.LogstashLayout">
                <includeContext>false</includeContext>
                <includeCallerData>true</includeCallerData>
                <customFields>{"system":"test"}</customFields>
                <fieldNames class="net.logstash.logback.fieldnames.ShortenedFieldNames" />
            </layout>
            <charset>UTF-8</charset>
        </encoder>
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>INFO</level>
        </filter>
        <topic>test1Topic</topic>
        <keyingStrategy class="com.github.danielwegener.logback.kafka.keying.HostNameKeyingStrategy" />
        <deliveryStrategy class="com.github.danielwegener.logback.kafka.delivery.AsynchronousDeliveryStrategy" />
        <producerConfig>bootstrap.servers=192.168.92.128:9092</producerConfig>
    </appender>
    <logger name="Application_ERROR">
        <appender-ref ref="KafkaAppender" />
    </logger>
    <root level="info">
        <appender-ref ref="KafkaAppender" />
    </root>

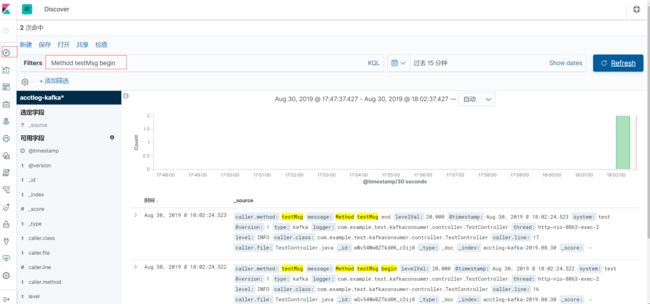

3. Write a simple controller that prints a few log lines, so there is something to look for later

    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    @RequestMapping("/test")
    public class TestController {

        private static final Logger logger = LoggerFactory.getLogger(TestController.class);

        // Each call writes two INFO lines, which the KafkaAppender forwards to Kafka.
        @RequestMapping("/testMsg")
        public String testMsg() {
            logger.info("Method testMsg begin");
            logger.info("Method testMsg end");
            return "hello world";
        }
    }

4. Start the Spring Boot project (at this point you can run a Kafka consumer subscribed to the same topic to check whether Kafka is receiving the logs)

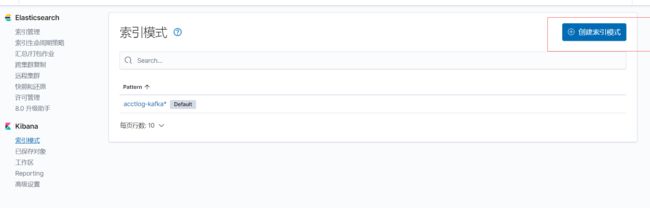

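5. Create an index pattern in the Kibana UI

The index pattern must match the index setting in kafka.conf above, for example acctlog-kafka-*

6. Call the controller endpoint; the log lines can then be found by searching in Kibana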
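
If nothing shows up in Kibana, it can help to query ES directly and see whether the documents were indexed at all. The sketch below uses the ES URI search endpoint (_search?q=...) and is only an illustration, assuming Java 11 or later, ES on 127.0.0.1:9200 without authentication, and the acctlog-kafka-* indices from kafka.conf. The class and method names (EsLogSearch, searchUri) are made up for illustration.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

final class EsLogSearch {
    // Builds a URI-search request: http://<es>/<indexPattern>/_search?q=<text>
    static URI searchUri(String esAddress, String indexPattern, String text) {
        String q = URLEncoder.encode(text, StandardCharsets.UTF_8);
        return URI.create("http://" + esAddress + "/" + indexPattern + "/_search?q=" + q);
    }

    public static void main(String[] args) throws Exception {
        // Assumed values: local ES node, daily indices written by Logstash,
        // and a word taken from the controller's log messages.
        URI uri = searchUri("127.0.0.1:9200", "acctlog-kafka-*", "testMsg");
        HttpResponse<String> response = HttpClient.newHttpClient().send(
                HttpRequest.newBuilder(uri).GET().build(),
                HttpResponse.BodyHandlers.ofString());
        // Prints the raw JSON response; matching documents appear under hits.hits.
        System.out.println(response.body());
    }
}
```

An empty hit list suggests the problem is upstream (the application, Kafka, or Logstash) rather than in the Kibana index pattern.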

Reference (official documentation): https://www.elastic.co/guide/en/elasticsearch/reference/current/elasticsearch-intro.html