Chapter 11 Integrating Spark Streaming with Flume & Kafka to Build a General-Purpose Stream Processing Foundation

11-1 - Course Outline

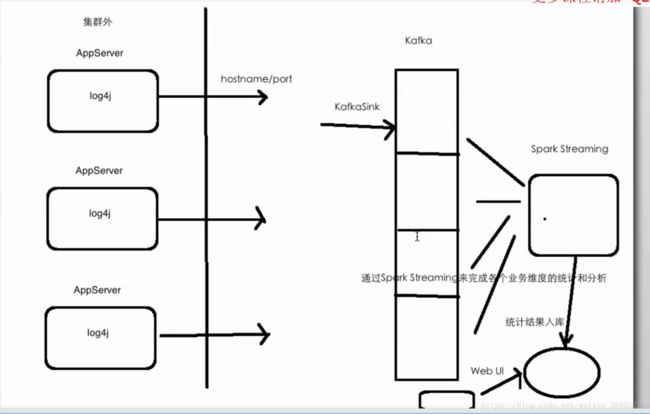

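Building a general-purpose stream processing platform with Spark Streaming & Flume & Kafka

Integrate the logging framework so that its output goes to Flume

Integrate Flume with Kafka

Integrate Kafka with Spark Streaming

Process the received data in Spark Streaming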
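To make the hand-offs in this outline concrete, here is a minimal in-process analogy in plain Java. It is only an illustration under stated assumptions: the class PipelineSketch and its method run are hypothetical, two LinkedBlockingQueue objects stand in for the Flume channel and the Kafka topic, and batches are cut by event count rather than by time as Spark Streaming does. The real components communicate over the network (the log4j appender talks Avro RPC to Flume, and the Kafka sink and Spark receiver use the Kafka protocol).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical in-process analogy of: log4j -> Flume -> Kafka -> Spark Streaming.
// Queues stand in for the Flume channel and the Kafka topic; no real Flume/Kafka/Spark APIs are used.
public class PipelineSketch {
    // End-of-stream marker used only by this sketch
    static final String EOF = "__EOF__";

    public static List<Integer> run(int totalEvents, int batchSize) {
        BlockingQueue<String> flumeChannel = new LinkedBlockingQueue<>(); // plays the Flume memory channel
        BlockingQueue<String> kafkaTopic = new LinkedBlockingQueue<>();   // plays the Kafka topic

        // Stage 1: log producer, like LoggerGenerator writing through the log4j appender
        Thread producer = new Thread(() -> {
            for (int i = 0; i < totalEvents; i++) {
                flumeChannel.add("value : " + i);
            }
            flumeChannel.add(EOF);
        });

        // Stage 2: Flume agent draining its channel into Kafka, like a Kafka sink
        Thread flumeAgent = new Thread(() -> {
            try {
                String event;
                do {
                    event = flumeChannel.take();
                    kafkaTopic.add(event); // EOF is forwarded too, so the consumer knows when to stop
                } while (!EOF.equals(event));
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        producer.start();
        flumeAgent.start();

        // Stage 3: consumer counting events per batch, loosely like count().print()
        List<Integer> batchCounts = new ArrayList<>();
        try {
            int current = 0;
            while (true) {
                String event = kafkaTopic.take();
                if (EOF.equals(event)) {
                    break;
                }
                current++;
                if (current == batchSize) { // count-based batch, unlike Spark's time-based batches
                    batchCounts.add(current);
                    current = 0;
                }
            }
            if (current > 0) {
                batchCounts.add(current); // last, partially filled batch
            }
            producer.join();
            flumeAgent.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("pipeline interrupted", e);
        }
        return batchCounts;
    }

    public static void main(String[] args) {
        System.out.println(run(12, 5)); // prints [5, 5, 2]
    }
}
```

Queues keep each stage decoupled from the next, which is the same reason the real pipeline puts a Flume channel and a Kafka topic between producer and consumer.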

11-2 - Diagramming and Analyzing the Processing Flow

11-3 - Developing a Log Generator and Outputting Logs with log4j

Simulating logs

Code location:

https://gitee.com/sag888/big_data/blob/master/Spark%20Streaming%E5%AE%9E%E6%97%B6%E6%B5%81%E5%A4%84%E7%90%86%E9%A1%B9%E7%9B%AE%E5%AE%9E%E6%88%98/project/l2118i/sparktrain/src/test/java/LoggerGenerator.java

Source code:

```java
import org.apache.log4j.Logger;

/**
 * Simulates log generation
 */
public class LoggerGenerator {

    private static Logger logger = Logger.getLogger(LoggerGenerator.class.getName());

    public static void main(String[] args) throws Exception {
        int index = 0;
        // Emit one log line per second, indefinitely
        while (true) {
            Thread.sleep(1000);
            logger.info("value : " + index++);
        }
    }
}
```

11-4 - Collecting Log4j-Generated Logs with Flume

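Flume agent configuration, streaming.conf (the source must be configured under the same name it is declared with, avro-source, and the channel type is set with agent1.channels.logger-channel.type):

```properties
agent1.sources=avro-source
agent1.channels=logger-channel
agent1.sinks=log-sink

# define source
agent1.sources.avro-source.type=avro
agent1.sources.avro-source.bind=0.0.0.0
agent1.sources.avro-source.port=41414

# define channel
agent1.channels.logger-channel.type=memory

# define sink
agent1.sinks.log-sink.type=logger

# bind the source and the sink to the channel
agent1.sources.avro-source.channels=logger-channel
agent1.sinks.log-sink.channel=logger-channel
```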
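Name mismatches between the declaration lines (agent1.sources=..., agent1.channels=..., agent1.sinks=...) and the per-component properties, such as avro-source versus avro-sources, are an easy mistake in Flume configs. The sketch below is a hypothetical plain-Java helper (FlumeConfCheck.check) built on java.util.Properties that flags this class of typo before the agent is started. It only checks name consistency, component types, and channel bindings; it is an illustration, not a replacement for Flume's own configuration validation.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Properties;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical consistency checker for a Flume agent properties file (illustration only).
public class FlumeConfCheck {

    /** Returns a sorted list of problems; an empty list means no inconsistency was found. */
    public static List<String> check(String agent, String conf) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(conf));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        Set<String> problems = new TreeSet<>(); // sorted and de-duplicated
        Set<String> channels = declared(p, agent + ".channels");

        for (String kind : Arrays.asList("sources", "channels", "sinks")) {
            Set<String> names = declared(p, agent + "." + kind);
            String prefix = agent + "." + kind + ".";
            // Every component that has properties must appear in the declaration line
            for (String key : p.stringPropertyNames()) {
                if (key.startsWith(prefix)) {
                    String name = key.substring(prefix.length()).split("\\.", 2)[0];
                    if (!names.contains(name)) {
                        problems.add("undeclared " + kind + " component: " + name);
                    }
                }
            }
            // Every declared component needs a type
            for (String name : names) {
                if (p.getProperty(prefix + name + ".type") == null) {
                    problems.add(kind + "." + name + " has no type");
                }
            }
        }

        // Sources list their channels with ".channels"; sinks name one channel with ".channel"
        for (String src : declared(p, agent + ".sources")) {
            Set<String> bound = declared(p, agent + ".sources." + src + ".channels");
            if (bound.isEmpty()) {
                problems.add("sources." + src + " is not bound to a channel");
            }
            for (String ch : bound) {
                if (!channels.contains(ch)) {
                    problems.add("sources." + src + " uses unknown channel " + ch);
                }
            }
        }
        for (String sink : declared(p, agent + ".sinks")) {
            String ch = p.getProperty(agent + ".sinks." + sink + ".channel");
            if (ch == null || !channels.contains(ch.trim())) {
                problems.add("sinks." + sink + " is not bound to a declared channel");
            }
        }
        return new ArrayList<>(problems);
    }

    // Splits a space-separated component list such as "avro-source" or "c1 c2"
    private static Set<String> declared(Properties p, String key) {
        Set<String> out = new LinkedHashSet<>();
        String value = p.getProperty(key, "").trim();
        if (!value.isEmpty()) {
            out.addAll(Arrays.asList(value.split("\\s+")));
        }
        return out;
    }

    public static void main(String[] args) {
        String conf = String.join("\n",
            "agent1.sources=avro-source",
            "agent1.channels=logger-channel",
            "agent1.sinks=log-sink",
            "agent1.sources.avro-sources.type=avro",
            "agent1.sources.avro-sources.channels=logger-channel",
            "agent1.channels.logger-channel.type=memory",
            "agent1.sinks.log-sink.type=logger",
            "agent1.sinks.log-sink.channel=logger-channel");
        check("agent1", conf).forEach(System.out::println);
    }
}
```

Running main on the misnamed config reports the undeclared avro-sources component, plus the resulting missing type and channel binding for the declared avro-source.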

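Log configuration (log4j.properties). The root logger must reference the flume appender, and the flume-ng-log4jappender jar must be on the application's classpath. UnsafeMode = true keeps the application from throwing exceptions when the Flume agent cannot be reached:

```properties
log4j.rootLogger = INFO,flume

log4j.appender.flume = org.apache.flume.clients.log4jappender.Log4jAppender
log4j.appender.flume.Hostname = hadoop000
log4j.appender.flume.Port = 41414
log4j.appender.flume.UnsafeMode = true
```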

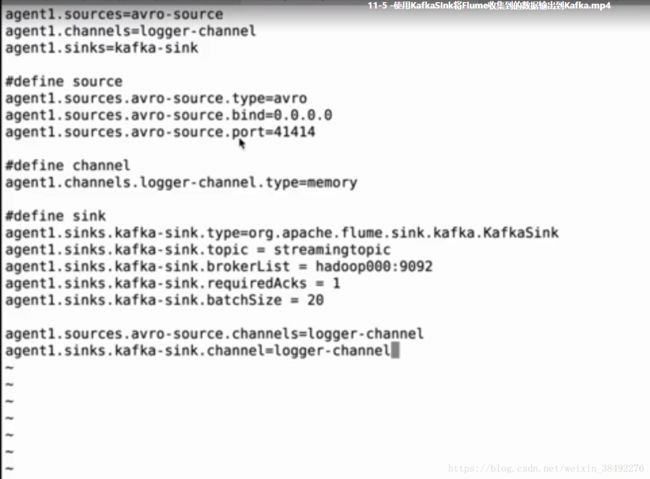

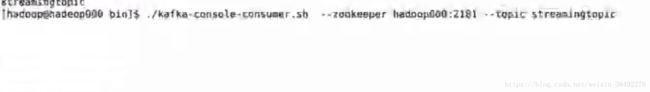

11-5 - Using KafkaSink to Output the Data Collected by Flume to Kafka

1) Start ZooKeeper

```shell
./zkServer.sh start
```

2) Start Kafka

```shell
./kafka-server-start.sh -daemon /home/hadoop/app/kafka_2.11-0.9.0.0/config/server.properties
```

Check that it started successfully:

```shell
./kafka-topics.sh --list --zookeeper hadoop000:2181
```

3) Create the topic

```shell
./kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic streamingtopic
```

4) Configuration

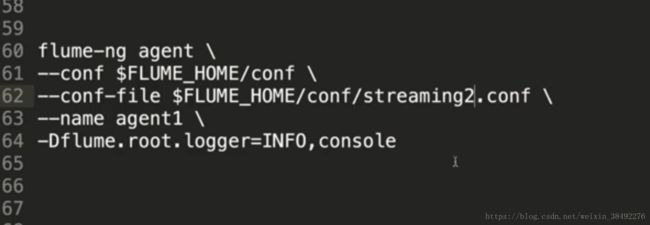

5) Start Flume

11-6 - Consuming Kafka Data with Spark Streaming and Computing Statistics

Code location:

https://gitee.com/sag888/big_data/blob/master/Spark%20Streaming%E5%AE%9E%E6%97%B6%E6%B5%81%E5%A4%84%E7%90%86%E9%A1%B9%E7%9B%AE%E5%AE%9E%E6%88%98/project/l2118i/sparktrain/src/main/scala/com/imooc/spark/KafkaStreamingApp.scala

Code:

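```scala
package com.imooc.spark

import org.apache.spark.SparkConf
import org.apache.spark.streaming.kafka.KafkaUtils
import org.apache.spark.streaming.{Seconds, StreamingContext}

/**
 * Spark Streaming integration with Kafka
 */
object KafkaStreamingApp {

  def main(args: Array[String]): Unit = {

    if (args.length != 4) {
      System.err.println("Usage: KafkaStreamingApp <zkQuorum> <group> <topics> <numThreads>")
      System.exit(1)
    }

    val Array(zkQuorum, group, topics, numThreads) = args

    // local[2] is for local testing only: the receiver occupies one thread, processing needs another.
    // Remove setMaster when submitting with spark-submit.
    val sparkConf = new SparkConf().setAppName("KafkaReceiverWordCount")
      .setMaster("local[2]")

    // Each batch covers 5 seconds of data
    val ssc = new StreamingContext(sparkConf, Seconds(5))

    // topic -> number of receiver threads for that topic
    val topicMap = topics.split(",").map((_, numThreads.toInt)).toMap

    // Receiver-based Kafka integration (org.apache.spark.streaming.kafka package)
    val messages = KafkaUtils.createStream(ssc, zkQuorum, group, topicMap)

    // Each element is a (key, message) tuple; _._2 keeps the message body
    messages.map(_._2).count().print()

    ssc.start()
    ssc.awaitTermination()
  }
}
```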
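To see what this job computes without starting Kafka, the following plain-Java sketch models two lines of KafkaStreamingApp: building topicMap, and counting messages per 5-second batch after keeping only the second element of each (key, message) pair. The class MicroBatchSketch and its methods are hypothetical; the null key is an assumption (the actual key depends on how the producer, such as the Flume Kafka sink, sets it), and batches here are aligned to time 0 rather than to the StreamingContext start time.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical plain-Java model of what KafkaStreamingApp computes; no Spark or Kafka classes are used.
public class MicroBatchSketch {

    /** A received record: arrival time plus the (key, message) pair the receiver hands to Spark. */
    public static final class Arrival {
        final long timeMs;
        final Map.Entry<String, String> pair;

        public Arrival(long timeMs, String key, String message) {
            this.timeMs = timeMs;
            this.pair = new SimpleEntry<>(key, message);
        }
    }

    /** Same shape as topics.split(",").map((_, numThreads.toInt)).toMap in the Scala code. */
    public static Map<String, Integer> topicMap(String topics, String numThreads) {
        Map<String, Integer> m = new LinkedHashMap<>();
        for (String topic : topics.split(",")) {
            m.put(topic, Integer.parseInt(numThreads));
        }
        return m;
    }

    /**
     * Groups arrivals into fixed batch intervals keyed by batch start time (ms) and counts the
     * messages in each, like messages.map(_._2).count() with Seconds(batchSeconds).
     * Unlike Spark, intervals with no data are simply absent instead of reporting 0.
     */
    public static Map<Long, Long> countPerBatch(List<Arrival> arrivals, long batchSeconds) {
        long batchMs = batchSeconds * 1000;
        Map<Long, List<String>> batches = new TreeMap<>();
        for (Arrival a : arrivals) {
            long batchStart = (a.timeMs / batchMs) * batchMs; // the interval this record falls into
            String message = a.pair.getValue();              // the "_._2" step: keep the message, drop the key
            batches.computeIfAbsent(batchStart, k -> new ArrayList<>()).add(message);
        }
        Map<Long, Long> counts = new TreeMap<>();
        batches.forEach((start, messages) -> counts.put(start, (long) messages.size()));
        return counts;
    }

    /** One message per second with an assumed null key, mimicking LoggerGenerator's rate. */
    public static List<Arrival> oneMessagePerSecond(int n) {
        List<Arrival> out = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            out.add(new Arrival(i * 1000L, null, "value : " + i));
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(topicMap("streamingtopic", "1"));           // prints {streamingtopic=1}
        System.out.println(countPerBatch(oneMessagePerSecond(12), 5)); // prints {0=5, 5000=5, 10000=2}
    }
}
```

With LoggerGenerator emitting one line per second, each full 5-second batch should therefore print a count of about 5, which is a quick sanity check when watching the job's console output.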

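11-7 - Local Testing and Extending to Production

So far we have been testing locally: we run LoggerGenerator in IntelliJ IDEA, and Flume, Kafka, and Spark Streaming then process its output.

Production is certainly not done this way. So how is it done?

1) Package the code into a jar and run the LoggerGenerator class

2) Flume and Kafka are set up the same way as in our test

3) The Spark Streaming code is also packaged into a jar and submitted with spark-submit, choosing the run mode that fits your environment: local/yarn/standalone/mesos. Remove the hard-coded setMaster("local[2]") first, because a master set directly on SparkConf takes precedence over the --master flag of spark-submit.

In production the overall stream processing flow is the same; the difference lies in the complexity of the business logic.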