Sqoop开荒

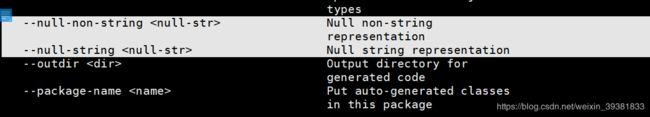

文章目录

- Sqoop 简介

- Sqoop官方介绍

- Sqoop1 使用教程

- Sqoop help

- 基本操作

- 1列出数据库

- 2列出表

- 3 Table导入到HDFS

- 4 指定字段

- 5 import 条件过滤

- 6 SQL嵌入

- 7 增量式抽取

- 8 Options-file 的使用

- Hive

Sqoop 简介

开源 工具

RDBMS---------------------------sqoop---------------------------->HDFS

Sqoop前:

RDBMS----->Hadoop

MR: DBinputformat------------TestOutputFormat

Hadoop------>RDBMS

MR:TestInputFormat--------->DBOutputFormat

MR存在的问题

- MapReduce麻烦

- 效率低(一个MR只能对应一个业务线)

基于MR存在的问题,抽取出一个框架,需要自定义:

- Driver

- username

- password

- url

- DB/table/sql

- hdfs path

- mapper’s

接入到框架之后

新的业务线接入只需要传入参数递给MR即可

- hadoop jar的方式来提交

- 动态的根据业务线传入参数

后期可以采用Spring Boot微服务构建大数据平台

Sqoop官方介绍

Apache Sqoop™ is a tool designed for efficiently transferring bulk data between Apache Hadoop and structured datastores such as relational databases.

Sqoop successfully graduated from the Incubator in March of 2012 and is now a Top-Level Apache project: More information

Latest stable release is 1.4.7 (download, documentation). Latest cut of Sqoop2 is 1.99.7 (download, documentation). Note that 1.99.7 is not compatible with 1.4.7 and not feature complete, it is not intended for production deployment.

Sqoop : SQL - to - Hadoop

RDBMS <---------sqoop-----------> Hadoop(HDFS/Hive)

底层:一个读写操作,只需要map就能搞定 不需要reduce

Sqoop的两个版本 1.X 2.X(1.99.X)

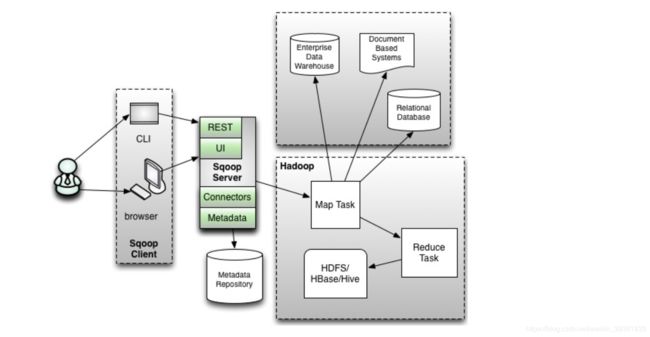

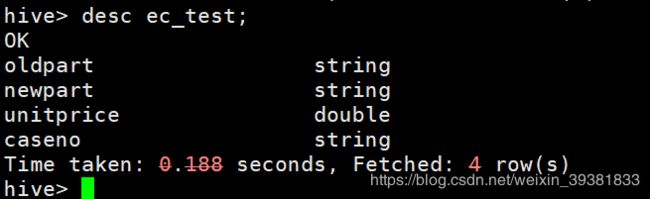

Sqoop 1 架构图

只用到了Map task ,没用到Recude

Sqoop 2(1.99.x)架构图

/opt/cloudera/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567/bin/../lib/sqoop/../accumulo does not exist

/opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/lib/sqoop/

解压后放到 sqoop home 的 lib 文件夹下

Sqoop1 使用教程

Sqoop help

基本操作

1列出数据库

sqoop list-databases \

--connect jdbc:mysql://10.103.66.88:3306 \

--username name \

--password password

2列出表

sqoop list-tables \

--connect jdbc:mysql://10.103.66.88:3306/information_schema \

--username \

--password

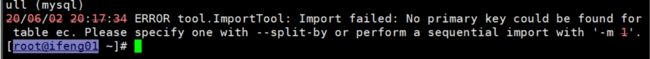

3 Table导入到HDFS

sqoop import \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--table ec

需要用 --split-by 指定主键

或者是 -m 1 顺序导入,没有主键的话推荐这种方式

sqoop import \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--table ec \

-m 1

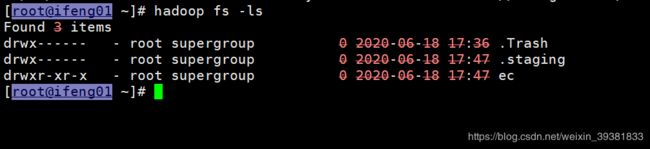

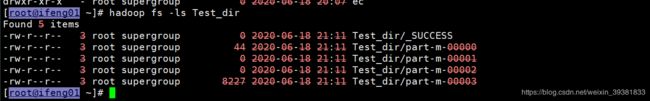

导入成功

尝试一下指定主键

使用–direct确实会变快 但是主键会被打乱

sqoop import \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--table ec \

--split-by OldPart

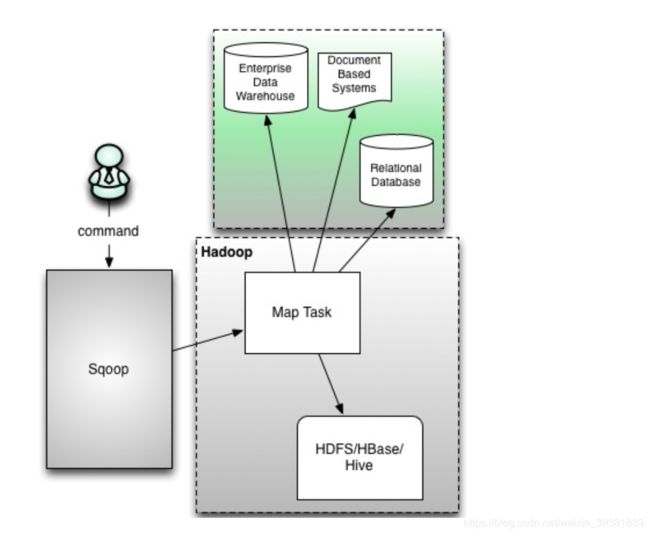

已经存在此表

![]()

先删掉

hadoop fs -rmr ec

![]()

再次指定主键导入

![]()

导入HDFS是文本格式的,所以要加入 allow_text_splitter=ture

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--table ec \

--split-by OldPart

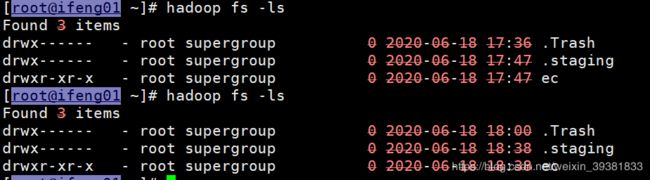

导入成功

File Output Format Counters

Bytes Written=16546

20/06/18 17:47:34 INFO mapreduce.ImportJobBase: Transferred 16.1582 KB in 17.0518 seconds (970.3367 bytes/sec)

20/06/18 17:47:34 INFO mapreduce.ImportJobBase: Retrieved 523 records.

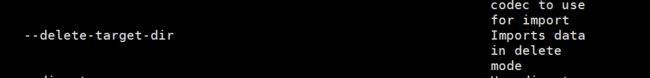

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--table ec \

--split-by OldPart \

--delete-target-dir

实现了覆盖

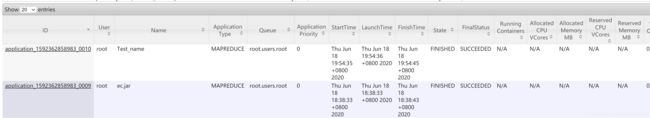

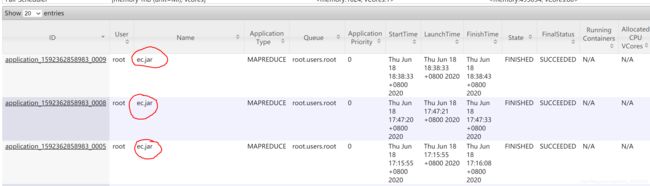

设置MR作业的名称

Yarn的名字都为ec.jar 尝试修改

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--table ec \

--split-by OldPart \

--delete-target-dir \

--mapreduce-job-name Test_name

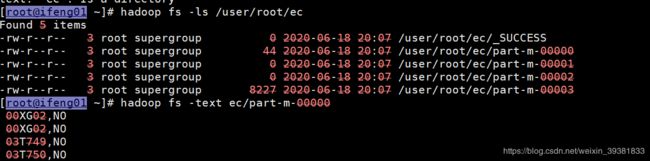

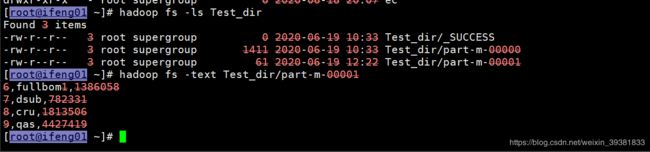

4 指定字段

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--table ec \

--split-by OldPart \

--delete-target-dir \

--mapreduce-job-name Test_name \

--columns "OldPart,NewPart"

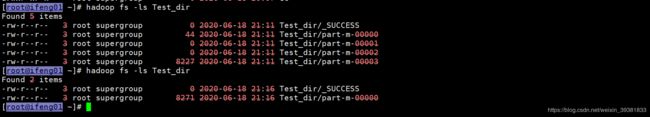

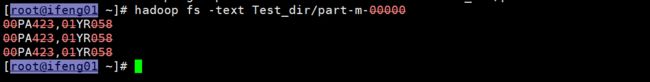

查看HDFS文件目录

hadoop fs -ls /user/root/ec

HDFS查看文本

hadoop fs -text ec/part-m-00000

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--table ec \

--split-by OldPart \

--delete-target-dir \

--mapreduce-job-name Test_name \

--columns "OldPart,NewPart" \

--target-dir Test_dir

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--table ec \

--split-by OldPart \

--delete-target-dir \

--mapreduce-job-name Test_name \

--columns "OldPart,NewPart" \

--target-dir Test_dir \

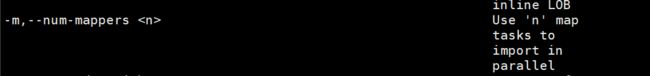

--m 1

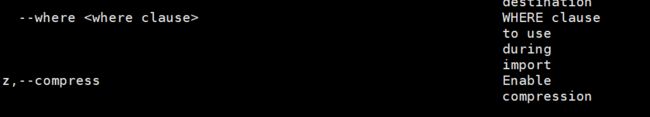

5 import 条件过滤

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--table ec \

--split-by OldPart \

--delete-target-dir \

--mapreduce-job-name Test_name \

--columns "OldPart,NewPart" \

--target-dir Test_dir \

--where "NewPart='01YR058'"

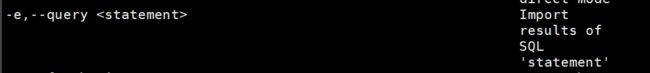

6 SQL嵌入

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--table ec \

--split-by OldPart \

--delete-target-dir \

--mapreduce-job-name Test_name \

--columns "OldPart,NewPart" \

--target-dir Test_dir \

--query "SELECT * FROM `ec` where NewPart = '01YR067'"

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--split-by OldPart \

--delete-target-dir \

--mapreduce-job-name Test_name \

--columns "OldPart,NewPart" \

--target-dir Test_dir \

--query "SELECT * FROM `ec` where NewPart = '01YR067'"

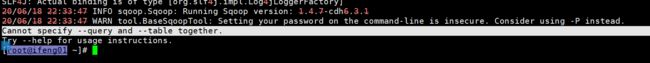

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--split-by OldPart \

--delete-target-dir \

--mapreduce-job-name Test_name \

--columns "OldPart,NewPart" \

--target-dir Test_dir \

--query "SELECT * FROM `ec` where NewPart = '01YR067'and \$CONDITIONS"

使用转义\ 后仍不可以

![]()

1 使用单引号 并用``(1左边)括起来

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--split-by OldPart \

--delete-target-dir \

--mapreduce-job-name Test_name \

--columns "OldPart,NewPart" \

--target-dir Test_dir \

--query 'SELECT * FROM ec `$CONDITIONS`'

版本问题,目前看来只有上面一种方法

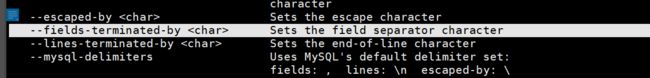

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--split-by OldPart \

--delete-target-dir \

--mapreduce-job-name Test_name \

--m 1 \

--target-dir Test_dir \

--query 'SELECT * FROM eccase `$CONDITIONS`' \

--fields-terminated-by '\t'

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--split-by OldPart \

--delete-target-dir \

--mapreduce-job-name Test_name \

--m 1 \

--target-dir Test_dir \

--query 'SELECT * FROM eccase `$CONDITIONS`' \

--fields-terminated-by '\t' \

--null-non-string ' ' \

-null-string '0'

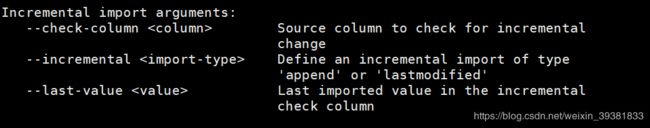

7 增量式抽取

sqoop import "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

--table sbom_total \

--split-by id \

--mapreduce-job-name Test_name \

--target-dir Test_dir \

--m 1 \

--check-column id \

--incremental append \

--last-value 5

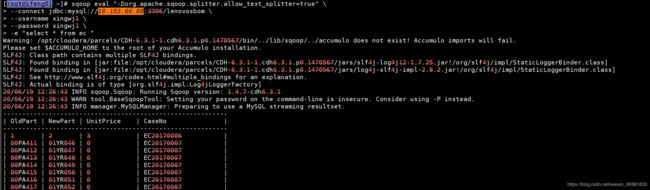

sqoop eval "-Dorg.apache.sqoop.splitter.allow_text_splitter=true" \

--connect jdbc:mysql://10.103.66.88:3306/lenovosbom \

--username xingwj1 \

--password xingwj1 \

-e "select * from ec "

8 Options-file 的使用

options-file可以保存一系列配置

编辑options-file

import

"-Dorg.apache.sqoop.splitter.allow_text_splitter=true"

--connect

jdbc:mysql://10.103.66.88:3306/lenovosbom

--username

xingwj1

--password

xingwj1

--delete-target-dir

执行options-file

sqoop --options-file /home/ETL/test/88.txt --table ec -m1

Hive

Hive arguments:

--create-hive-table Fail if the target hive

table exists

--external-table-dir Sets where the external

table is in HDFS

--hive-database Sets the database name to

use when importing to hive

--hive-delims-replacement Replace Hive record \0x01

and row delimiters (\n\r)

from imported string fields

with user-defined string

--hive-drop-import-delims Drop Hive record \0x01 and

row delimiters (\n\r) from

imported string fields

--hive-home Override $HIVE_HOME

--hive-import Import tables into Hive

(Uses Hive's default

delimiters if none are

set.)

--hive-overwrite Overwrite existing data in

the Hive table

--hive-partition-key Sets the partition key to

use when importing to hive

--hive-partition-value Sets the partition value to

use when importing to hive

--hive-table Sets the table name to use

when importing to hive

--hs2-keytab The location of the keytab

of the HiveServer2 user.

--hs2-url The URL to the HiveServer2.

--hs2-user The user/principal for

HiveServer2.

--map-column-hive Override mapping for

specific column to hive

types.

sqoop --options-file /home/ETL/test/88.txt \

--table ec \

-m1 \

--hive-import \

--create-hive-table \

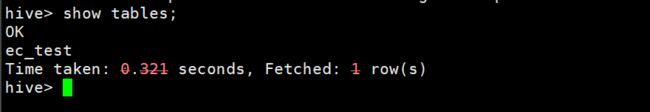

--hive-table ec_test

--create-hive-table 不建议使用,自动生成会导致表结构类型出错,应该手动创建