Notes: Real-time object detection with TensorFlow's Object detection API

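Contents

- Configuring and installing the TensorFlow environment
  - Choosing a version
  - Installing Anaconda2/3
  - Switching mirrors
  - Creating a new Anaconda environment
  - Installing TensorFlow
  - Installing the Object detection API
- Training the model
  - Data processing
    - Labeling
    - Generating TFRecord files
      - Importing modules
      - pbtxt label map
      - Setting up the training and test sets
      - Generating the TFRecord file
  - Downloading the pretrained model
  - Modifying the training configuration
  - Starting training
  - Getting the model
- Real-time detection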

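Configuring and installing the TensorFlow environment

Device: Windows 10 laptop

Choosing a version

The GPU build of TensorFlow needs a reasonably capable graphics card, so the CPU build is used here. The CPU build is simpler to set up because no graphics driver has to be installed, and it can be installed directly through Anaconda.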

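Installing Anaconda2/3

Anaconda2 is for Python 2.7 and Anaconda3 is for Python 3 (Python 3.5 is used in this guide). Anaconda can be downloaded from the Tsinghua mirror or from the official website: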

https://mirrors.tuna.tsinghua.edu.cn/anaconda/archive/

https://www.anaconda.com/download/

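Install it once the download has finished.

Open a command prompt and type conda; if the command is recognized, the installation succeeded.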

Switching mirrors

Point Anaconda's package channels at the Tsinghua mirror:

```
conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main/
conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/
conda config --set show_channel_urls yes
```

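Creating a new Anaconda environment

```
conda create -n tensorflow python=3.5 anaconda
```

This creates an Anaconda environment named tensorflow with Python 3.5 (the trailing anaconda argument also installs the full Anaconda package set into it).

```
conda activate tensorflow
```

This enters (activates) the environment.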

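Installing TensorFlow

TensorFlow 1.15 is installed here because it matches the version on Colab; an upgrade to 2.0 will be needed later.

```
pip install tensorflow==1.15
```

Inside the tensorflow environment, run the following Python code:

```python
import tensorflow as tf

hello = tf.compat.v1.constant("Hello tensorflow!")
sess = tf.compat.v1.Session()
print(sess.run(hello))
```

If the string is printed (as b'Hello tensorflow!' under Python 3), the installation is complete.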

Installing the Object detection API

Installation

Training the model

My laptop is not powerful enough to train locally, so training is done on Google Colab using its free GPU.

Trying Colab

Data processing

Labeling

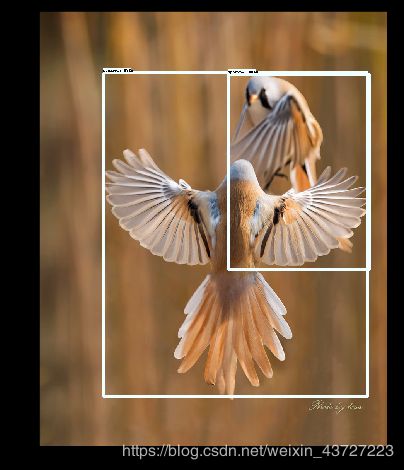

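The Object detection API detects objects in an image and draws a bounding box around each one, so the training images must be labeled (annotated with boxes) beforehand. See: A step-by-step guide to labeling.

Without box annotations, a model can at most learn whether an object is present in the image; it cannot learn to draw an accurate box around it.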
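Annotation tools such as LabelImg save the boxes for each image as a PASCAL VOC .xml file, which is the format the conversion script below reads. As a minimal sketch, assuming the standard VOC layout (`filename`, `size`, and one `object/bndbox` per box), such a file can be inspected with the standard library alone; the sample annotation and the helper `parse_voc_annotation` are hypothetical, for illustration only:

```python
import xml.etree.ElementTree as ET

# Hypothetical sample annotation in PASCAL VOC format (one labeled fox).
SAMPLE_XML = """<annotation>
  <filename>fox_001.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>fox</name>
    <pose>Unspecified</pose>
    <truncated>0</truncated>
    <difficult>0</difficult>
    <bndbox><xmin>100</xmin><ymin>120</ymin><xmax>300</xmax><ymax>360</ymax></bndbox>
  </object>
</annotation>"""


def parse_voc_annotation(xml_text):
    """Hypothetical helper: return (filename, width, height, boxes).

    Each box is a dict holding the class name, the difficult flag and the
    pixel coordinates of the box corners.
    """
    root = ET.fromstring(xml_text)
    filename = root.findtext("filename")
    size = root.find("size")
    width = int(size.findtext("width"))
    height = int(size.findtext("height"))
    boxes = []
    for obj in root.findall("object"):
        bndbox = obj.find("bndbox")
        boxes.append({
            "name": obj.findtext("name"),
            "difficult": int(obj.findtext("difficult", "0")),
            "xmin": float(bndbox.findtext("xmin")),
            "ymin": float(bndbox.findtext("ymin")),
            "xmax": float(bndbox.findtext("xmax")),
            "ymax": float(bndbox.findtext("ymax")),
        })
    return filename, width, height, boxes


filename, width, height, boxes = parse_voc_annotation(SAMPLE_XML)
print(filename, width, height, boxes)
```

The `name` values found this way are exactly the strings that have to appear in the label map.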

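Generating TFRecord files

The images and their annotations (.xml) have to be converted into TFRecord files that TensorFlow can read. The Object detection API that was simply downloaded and unpacked on Colab is not registered on the Python path (PYTHONPATH), so its packages must be added manually. The conversion script is a modified version of the official create_pascal_tf_record.py in /tensorflow/models/research/object_detection/dataset_tools.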

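Importing modules

```python
import sys

# Make the manually unpacked Object detection API importable
# (the models folder lives on Google Drive, mounted under /content/drive in Colab).
sys.path.append('/content/drive/My Drive/test_12_7/models/research')
sys.path.append('/content/drive/My Drive/test_12_7/models/research/slim')
sys.path.append('/content/drive/My Drive/test_12_7/models/research/slim/nets')
```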
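Appending the paths directly works, but re-running the Colab cell adds the same entries again. A slightly more defensive sketch (the helper `add_object_detection_paths` is hypothetical, not part of the API) only adds the same three directories if they exist and are not already on `sys.path`:

```python
import os
import sys


def add_object_detection_paths(research_dir):
    """Hypothetical helper: append research/, research/slim and
    research/slim/nets to sys.path.

    Only directories that exist and are not yet on sys.path are added,
    so running the cell twice does not create duplicate entries.
    Returns the list of newly added directories.
    """
    candidates = [
        research_dir,
        os.path.join(research_dir, 'slim'),
        os.path.join(research_dir, 'slim', 'nets'),
    ]
    added = []
    for path in candidates:
        if os.path.isdir(path) and path not in sys.path:
            sys.path.append(path)
            added.append(path)
    return added


# Same Google Drive location as above; an empty list means nothing new was added
# (already on sys.path, or the Drive folder is not mounted).
print(add_object_detection_paths('/content/drive/My Drive/test_12_7/models/research'))
```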

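pbtxt label map

You need to write a .pbtxt label map yourself to tell the model which classes to train and how many there are:

```
item {
  id: 1
  name: 'rat'
}

item {
  id: 2
  name: 'fox'
}

item {
  id: 3
  name: 'eagle'
}

item {
  id: 4
  name: 'sparrow'
}
```

Each name must match the label used during annotation exactly. The ids start at 1 (0 is reserved for the background class), and further fields such as display_name can be added.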
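Because the ids must be consecutive integers starting at 1 and the names must match the annotation labels exactly, it can be less error-prone to generate the file from a list of class names. A minimal sketch; the function names are hypothetical, and the regex-based reader only understands the simple `id`/`name` layout shown above, not the full protobuf text format that `label_map_util` parses:

```python
import re

CLASS_NAMES = ['rat', 'fox', 'eagle', 'sparrow']


def make_label_map(names):
    """Hypothetical helper: build pbtxt text with ids 1..N (0 = background)."""
    items = []
    for idx, name in enumerate(names, start=1):
        items.append("item {\n  id: %d\n  name: '%s'\n}\n" % (idx, name))
    return "\n".join(items)


def read_label_map(text):
    """Hypothetical helper: return {name: id} for the simple item layout above."""
    pattern = re.compile(r"item\s*\{\s*id:\s*(\d+)\s*name:\s*'([^']*)'\s*\}")
    return {name: int(idx) for idx, name in pattern.findall(text)}


pbtxt = make_label_map(CLASS_NAMES)
print(pbtxt)
print(read_label_map(pbtxt))
```

Writing `pbtxt` to label.pbtxt with an ordinary `open(..., 'w')` then gives the same file as the hand-written one above.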

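Setting up the training and test sets

The model can be evaluated while it trains, so part of the images must be set aside by hand as a test set; these images are never used for training. Write the selected image names into a txt file, one per line and without the file extension, because the script appends .xml to each name to locate its annotation.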
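The text above selects the test images by hand. As an alternative, here is a minimal sketch of a random split instead (a swapped-in approach, not the author's method); the 20% validation fraction, the fixed seed, the `.jpg`/`.jpeg` filter and both helper names are assumptions:

```python
import os
import random


def split_examples(image_dir, val_fraction=0.2, seed=0):
    """Hypothetical helper: split image basenames (no extension) into
    train and val lists with a reproducible random shuffle."""
    names = sorted(
        os.path.splitext(f)[0]
        for f in os.listdir(image_dir)
        if f.lower().endswith(('.jpg', '.jpeg'))
    )
    rng = random.Random(seed)
    rng.shuffle(names)
    n_val = int(len(names) * val_fraction)
    return sorted(names[n_val:]), sorted(names[:n_val])


def write_list(path, names):
    """Write one name per line, the format read by dataset_util.read_examples_list."""
    with open(path, 'w') as f:
        f.write('\n'.join(names) + '\n')


# Usage with the Colab paths used in this guide (val.txt is a hypothetical name):
# train, val = split_examples('/content/drive/My Drive/test_12_7/result_12_8/images')
# write_list('/content/drive/My Drive/test_12_7/result_12_8/train.txt', train)
# write_list('/content/drive/My Drive/test_12_7/result_12_8/val.txt', val)
```

Fixing the seed keeps the split stable, so the same images stay out of training when the records are regenerated.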

Generating the TFRecord file

```python
# -*- coding:utf-8 -*-
# Copyright 2017 The TensorFlow Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ==============================================================================
r"""Convert raw PASCAL dataset to TFRecord for object_detection.

Example usage:
    python object_detection/dataset_tools/create_pascal_tf_record4raccoon.py \
        --data_dir=/home/forest/dataset/raccoon_dataset-master/images \
        --set=/home/forest/dataset/raccoon_dataset-master/train.txt \
        --output_path=/home/forest/dataset/raccoon_dataset-master/train.record \
        --label_map_path=/home/forest/dataset/raccoon_dataset-master/raccoon_label_map.pbtxt \
        --annotations_dir=/home/forest/dataset/raccoon_dataset-master/annotations
"""

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import hashlib
import io
import logging
import os

from lxml import etree
import PIL.Image
import tensorflow as tf

from object_detection.utils import dataset_util
from object_detection.utils import label_map_util

flags = tf.app.flags
try:
    flags.DEFINE_string('data_dir', '', 'Root directory to raw PASCAL VOC dataset.')
    flags.DEFINE_string('set', 'train', 'Convert training set, validation set or '
                        'merged set.')
    flags.DEFINE_string('annotations_dir', 'Annotations',
                        '(Relative) path to annotations directory.')
    flags.DEFINE_string('year', 'VOC2007', 'Desired challenge year.')
    flags.DEFINE_string('output_path', '', 'Path to output TFRecord')
    flags.DEFINE_string('label_map_path', 'data/pascal_label_map.pbtxt',
                        'Path to label map proto')
    flags.DEFINE_boolean('ignore_difficult_instances', False, 'Whether to ignore '
                         'difficult instances')
except Exception:
    # Re-running the Colab cell defines the flags a second time, which raises
    # a duplicate-flag error; the flags from the first run are reused.
    print("has define!")
FLAGS = flags.FLAGS


def dict_to_tf_example(data,
                       dataset_directory,
                       label_map_dict,
                       ignore_difficult_instances=False,
                       image_subdirectory='JPEGImages'):
    """Convert one parsed XML annotation dict into a tf.train.Example."""
    # Images sit directly in dataset_directory; image_subdirectory is unused.
    full_path = os.path.join(dataset_directory, data['filename'])
    with tf.gfile.GFile(full_path, 'rb') as fid:
        encoded_jpg = fid.read()
    encoded_jpg_io = io.BytesIO(encoded_jpg)
    image = PIL.Image.open(encoded_jpg_io)
    if image.format != 'JPEG':
        raise ValueError('Image format not JPEG')
    key = hashlib.sha256(encoded_jpg).hexdigest()

    width = int(data['size']['width'])
    height = int(data['size']['height'])

    xmin = []
    ymin = []
    xmax = []
    ymax = []
    classes = []
    classes_text = []
    truncated = []
    poses = []
    difficult_obj = []
    # An image without any labeled object has no 'object' key.
    for obj in data.get('object', []):
        difficult = bool(int(obj['difficult']))
        if ignore_difficult_instances and difficult:
            continue
        difficult_obj.append(int(difficult))
        # Box corners are stored normalized to [0, 1].
        xmin.append(float(obj['bndbox']['xmin']) / width)
        ymin.append(float(obj['bndbox']['ymin']) / height)
        xmax.append(float(obj['bndbox']['xmax']) / width)
        ymax.append(float(obj['bndbox']['ymax']) / height)
        classes_text.append(obj['name'].encode('utf8'))
        classes.append(label_map_dict[obj['name']])
        truncated.append(int(obj['truncated']))
        poses.append(obj['pose'].encode('utf8'))

    example = tf.train.Example(features=tf.train.Features(feature={
        'image/height': dataset_util.int64_feature(height),
        'image/width': dataset_util.int64_feature(width),
        'image/filename': dataset_util.bytes_feature(
            data['filename'].encode('utf8')),
        'image/source_id': dataset_util.bytes_feature(
            data['filename'].encode('utf8')),
        'image/key/sha256': dataset_util.bytes_feature(key.encode('utf8')),
        'image/encoded': dataset_util.bytes_feature(encoded_jpg),
        'image/format': dataset_util.bytes_feature('jpeg'.encode('utf8')),
        'image/object/bbox/xmin': dataset_util.float_list_feature(xmin),
        'image/object/bbox/xmax': dataset_util.float_list_feature(xmax),
        'image/object/bbox/ymin': dataset_util.float_list_feature(ymin),
        'image/object/bbox/ymax': dataset_util.float_list_feature(ymax),
        'image/object/class/text': dataset_util.bytes_list_feature(classes_text),
        'image/object/class/label': dataset_util.int64_list_feature(classes),
        'image/object/difficult': dataset_util.int64_list_feature(difficult_obj),
        'image/object/truncated': dataset_util.int64_list_feature(truncated),
        'image/object/view': dataset_util.bytes_list_feature(poses),
    }))
    return example


def main(_):
    # Paths are hard-coded for Colab, so the data_dir, set, annotations_dir,
    # output_path and label_map_path flags above are not used.
    # To build the evaluation set, run again with val.txt and val.record.
    data_dir = '/content/drive/My Drive/test_12_7/result_12_8/images'
    annotations_dir = '/content/drive/My Drive/test_12_7/result_12_8/annotations'
    examples_path = '/content/drive/My Drive/test_12_7/result_12_8/train.txt'
    output_path = '/content/drive/My Drive/test_12_7/result_12_8/record/train.record'
    label_map_dict = label_map_util.get_label_map_dict(
        '/content/drive/My Drive/test_12_7/result_12_8/label.pbtxt')

    writer = tf.python_io.TFRecordWriter(output_path)
    examples_list = dataset_util.read_examples_list(examples_path)
    for idx, example in enumerate(examples_list):
        if idx % 100 == 0:
            logging.info('On image %d of %d', idx, len(examples_list))
        path = os.path.join(annotations_dir, example + '.xml')
        with tf.gfile.GFile(path, 'r') as fid:
            xml_str = fid.read()
        xml = etree.fromstring(xml_str)
        data = dataset_util.recursive_parse_xml_to_dict(xml)['annotation']
        tf_example = dict_to_tf_example(data, data_dir, label_map_dict,
                                        FLAGS.ignore_difficult_instances)
        writer.write(tf_example.SerializeToString())
    writer.close()


if __name__ == '__main__':
    tf.app.run()
```
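The key transformation in `dict_to_tf_example` is that the pixel box corners from the XML are divided by the image width and height, so every coordinate stored in the record lies in [0, 1]. A stdlib-only sketch of that step; the function name and the bounds check are hypothetical additions, not part of the official script, meant to catch annotations that fall outside the image before they end up in a record:

```python
def normalize_box(xmin, ymin, xmax, ymax, width, height):
    """Hypothetical helper: pixel corners -> normalized (xmin, ymin, xmax, ymax).

    Raises ValueError for empty boxes or boxes that extend past the image.
    """
    box = (xmin / width, ymin / height, xmax / width, ymax / height)
    if not (0.0 <= box[0] < box[2] <= 1.0 and 0.0 <= box[1] < box[3] <= 1.0):
        raise ValueError('box %r lies outside a %dx%d image'
                         % ((xmin, ymin, xmax, ymax), width, height))
    return box


print(normalize_box(100, 120, 300, 360, 640, 480))  # (0.15625, 0.25, 0.46875, 0.75)
```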

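Downloading the pretrained model

Transfer learning continues training from a model that has already been trained: the network architecture is kept, the pretrained weights are reused, and mainly the final classification layer is adapted to the new set of classes. Compared with training from scratch, this needs less data and improves both training speed and accuracy. A deeper understanding will need further study.

The model used here is ssd_mobilenet_v1_coco (checkpoint ssd_mobilenet_v1_coco_2017_11_17); other models are left for later study and testing.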

Modifying the training configuration

Find ssd_mobilenet_v1_coco.config under object_detection/samples/configs, copy it into your own directory, and edit it. The main sections to change are shown below (excerpt; all other fields keep their values from the sample). num_classes must equal the number of classes in the label map (four for the label map above), and num_examples is the number of evaluation images.

```
model {
  ssd {
    num_classes: 4  # must equal the number of items in label.pbtxt
    box_coder {
      faster_rcnn_box_coder {
        y_scale: 10.0
        x_scale: 10.0
        height_scale: 5.0
        width_scale: 5.0
      }
    }
    # (remaining ssd fields unchanged)
  }
}

train_config: {
  # (other train_config fields unchanged)
  fine_tune_checkpoint: "/content/drive/My Drive/test_12_7/models/research/object_detection/ssd_mobilenet_v1_coco_2017_11_17/model.ckpt"
}

train_input_reader: {
  tf_record_input_reader {
    input_path: "/content/drive/My Drive/test_12_7/result_12_8/record/train.record"
  }
  label_map_path: "/content/drive/My Drive/test_12_7/result_12_8/label.pbtxt"
}

eval_config: {
  num_examples: 120
  # Note: The below line limits the evaluation process to 10 evaluations.
  # Remove the below line to evaluate indefinitely.
  max_evals: 10
}

eval_input_reader: {
  tf_record_input_reader {
    input_path: "/content/drive/My Drive/test_12_7/result_12_8/record/val.record"
  }
  label_map_path: "/content/drive/My Drive/test_12_7/result_12_8/label.pbtxt"
  shuffle: false
  num_readers: 1
}
```
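A `num_classes` value that does not match the label map is an easy mistake to make when copying a sample config. A small stdlib-only sketch (the helper `check_num_classes` is hypothetical) compares the two before training; it uses regular expressions rather than a real protobuf parser, so it assumes a single `num_classes` field as in the SSD config:

```python
import re


def check_num_classes(config_text, label_map_text):
    """Hypothetical helper: raise if num_classes != number of label-map items."""
    found = re.findall(r'num_classes:\s*(\d+)', config_text)
    if len(found) != 1:
        raise ValueError('expected one num_classes field, found %d' % len(found))
    num_classes = int(found[0])
    num_items = len(re.findall(r'\bitem\s*\{', label_map_text))
    if num_classes != num_items:
        raise ValueError('num_classes is %d but the label map has %d items'
                         % (num_classes, num_items))
    return num_classes


# In practice both strings would be read from the files, e.g.
# open('/content/drive/My Drive/test_12_7/result_12_8/label.pbtxt').read()
config_text = "model {\n  ssd {\n    num_classes: 4\n  }\n}\n"
label_text = "".join(
    "item {\n  id: %d\n  name: '%s'\n}\n" % (i, n)
    for i, n in enumerate(['rat', 'fox', 'eagle', 'sparrow'], start=1))
print(check_num_classes(config_text, label_text))  # 4
```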

Starting training

Change into the object_detection directory:

```python
import os

# Change to the object_detection folder of your project.
project_path = '/content/drive/My Drive/test_12_7/models/research/object_detection'
os.chdir(project_path)
```

Start training:

```
! python model_main.py \
    --pipeline_config_path=../../../result_12_8/ssd_mobilenet_v1_coco.config \
    --model_dir=../../../result_12_8/training \
    --alsologtostderr
```

Getting the model

Once the mAP has reached about 0.90 and stops increasing, and the loss has leveled off, the model can be exported for testing:
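The mAP part of this stopping rule (at least 0.90 and no longer increasing) can be written down explicitly. A minimal sketch, assuming the mAP values from successive evaluations have already been copied out of TensorBoard into a list; the helper name and the `patience` of 3 evaluations are assumptions, and the loss curve still has to be checked separately:

```python
def ready_to_export(map_history, target=0.90, patience=3, min_delta=0.0):
    """Hypothetical helper: True once mAP had reached `target` before the last
    `patience` evaluations and those evaluations brought no further improvement."""
    if len(map_history) <= patience:
        return False
    best_before = max(map_history[:-patience])
    recent_best = max(map_history[-patience:])
    return best_before >= target and recent_best <= best_before + min_delta


history = [0.62, 0.81, 0.90, 0.91, 0.905, 0.91, 0.908]  # hypothetical values
print(ready_to_export(history))  # True
```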

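```
! python export_inference_graph.py \
    --pipeline_config_path=../../../result_12_8/ssd_mobilenet_v1_coco.config \
    --trained_checkpoint_prefix=../../../result_12_8/training/model.ckpt-33211 \
    --output_directory=../../../result_12_8/model/2
```

The output directory will contain frozen_inference_graph.pb, which is the file loaded by the real-time detection script.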
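The number in `model.ckpt-33211` is the global training step of the exported checkpoint; each checkpoint is stored as `model.ckpt-<step>.index`, `.meta` and `.data-*` files in the model directory. Instead of typing the step by hand, a stdlib sketch (hypothetical helper) can pick the newest prefix; inside TensorFlow, `tf.train.latest_checkpoint(model_dir)` does the same job by reading the `checkpoint` state file:

```python
import os
import re


def latest_checkpoint_prefix(model_dir):
    """Hypothetical helper: return the model.ckpt-<step> prefix with the
    highest step in model_dir, or None if there is no checkpoint."""
    pattern = re.compile(r'^model\.ckpt-(\d+)\.index$')
    steps = []
    for name in os.listdir(model_dir):
        match = pattern.match(name)
        if match:
            steps.append(int(match.group(1)))
    if not steps:
        return None
    return os.path.join(model_dir, 'model.ckpt-%d' % max(steps))


# Relative to the object_detection working directory used above:
# print(latest_checkpoint_prefix('../../../result_12_8/training'))
```

Comparing the steps as integers matters: sorting the file names as strings would rank `model.ckpt-5000` above `model.ckpt-33211`.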

Real-time detection

OpenCV opens the camera and grabs frames from the video stream; the model runs detection on these frames, and the returned boxes are drawn onto each frame before it is displayed.

```python
import os
import time

import cv2
import numpy as np
import tensorflow as tf

from object_detection.utils import label_map_util
from object_detection.utils import visualization_utils as vis_util

# Resizable display window (cv2.WINDOW_NORMAL == 0).
cv2.namedWindow("frame", cv2.WINDOW_NORMAL)
cv2.resizeWindow("frame", 640, 480)

os.chdir('D:\\tensorflow\\models\\research\\object_detection')

# Exported frozen model
PATH_TO_FROZEN_GRAPH = 'D:/12_8/model/frozen_inference_graph.pb'
# Label map used for training
PATH_TO_LABELS = 'D:/12_8/label.pbtxt'

category_index = label_map_util.create_category_index_from_labelmap(PATH_TO_LABELS, use_display_name=True)
detection_graph = tf.Graph()


def detect_and_show(image_tensor, detection_boxes, detection_scores,
                    detection_classes, num_detections, frame, sess):
    """Run the detector on one BGR frame, draw the boxes and display the result."""
    start_time = time.time()
    # OpenCV delivers BGR; the model expects RGB.
    image_np = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    # The model expects a batch of images with shape [1, None, None, 3].
    image_np_expanded = np.expand_dims(image_np, axis=0)
    # Actual detection.
    (boxes, scores, classes, num) = sess.run(
        [detection_boxes, detection_scores, detection_classes, num_detections],
        feed_dict={image_tensor: image_np_expanded})
    # Draw the boxes with score >= 0.5 directly onto image_np.
    vis_util.visualize_boxes_and_labels_on_image_array(
        image_np,
        np.squeeze(boxes),
        np.squeeze(classes).astype(np.int32),
        np.squeeze(scores),
        category_index,
        use_normalized_coordinates=True,
        line_thickness=5,
        min_score_thresh=.5)
    print("------------use time ====> ", time.time() - start_time)
    # Convert back to BGR for cv2.imshow.
    cv2.imshow("frame", cv2.cvtColor(image_np, cv2.COLOR_RGB2BGR))


def video_capture(image_tensor, detection_boxes, detection_scores,
                  detection_classes, num_detections, sess, video_path):
    if video_path == "nopath":
        # Camera index: 0 is the default camera; use 1, 2, ... for further cameras.
        cap = cv2.VideoCapture(0)
        print("open")
    else:
        cap = cv2.VideoCapture(video_path)
    i = 1
    while True:
        ref, frame = cap.read()
        if not ref:
            print("ref == false ")
            break
        i += 1
        # Detection is slow, so only every 5th frame goes through the model.
        if i % 5 == 0:
            detect_and_show(image_tensor, detection_boxes, detection_scores,
                            detection_classes, num_detections, frame, sess)
        else:
            cv2.imshow("frame", frame)
        # Wait 30 ms for a key press; Esc (key code 27) quits.
        c = cv2.waitKey(30) & 0xff
        if c == 27:
            break
    cap.release()
    cv2.destroyAllWindows()


def init_object_detection(video_path):
    with detection_graph.as_default():
        # device_count={'GPU': 0} keeps the session on the CPU.
        config = tf.compat.v1.ConfigProto(device_count={'GPU': 0})
        with tf.compat.v1.Session(config=config) as sess:
            od_graph_def = tf.compat.v1.GraphDef()
            with tf.io.gfile.GFile(PATH_TO_FROZEN_GRAPH, 'rb') as fid:
                od_graph_def.ParseFromString(fid.read())
            tf.import_graph_def(od_graph_def, name='')
            # Input and output tensors of detection_graph.
            image_tensor = detection_graph.get_tensor_by_name('image_tensor:0')
            # Each box marks the part of the image where an object was detected.
            detection_boxes = detection_graph.get_tensor_by_name('detection_boxes:0')
            # Each score is the confidence for the corresponding box; it is shown
            # on the result image together with the class label.
            detection_scores = detection_graph.get_tensor_by_name('detection_scores:0')
            detection_classes = detection_graph.get_tensor_by_name('detection_classes:0')
            num_detections = detection_graph.get_tensor_by_name('num_detections:0')
            video_capture(image_tensor, detection_boxes, detection_scores,
                          detection_classes, num_detections, sess, video_path)


if __name__ == '__main__':
    # Pass a local video file path, or 'nopath' to use the camera.
    VIDEO_PATH = "nopath"
    init_object_detection(VIDEO_PATH)
```

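In my tests on my own computer, the camera pipeline reliably detected objects that were large and whose colors were not distorted, while distant objects were hard to detect. Each detection took about 0.3 s, so the video frame rate was low. The model needs further work, and a richer dataset would let it learn better.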
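At about 0.3 s per detection, running the model on every frame is not feasible on this CPU, which is why the loop above only sends every fifth frame through the model. A side effect is that boxes appear only on those frames. A common alternative, sketched here with a stand-in `detect` callable (the class `CachedDetector` and the fake detector are hypothetical; in the real script `detect` would wrap the `sess.run` call), is to keep the most recent result and reuse it for the frames in between:

```python
class CachedDetector:
    """Hypothetical helper: run an expensive detector on every `interval`-th
    frame and return its most recent result for all frames in between."""

    def __init__(self, detect, interval=5):
        self.detect = detect
        self.interval = interval
        self.frame_count = 0
        self.last_result = None

    def process(self, frame):
        if self.frame_count % self.interval == 0:
            self.last_result = self.detect(frame)
        self.frame_count += 1
        return self.last_result


calls = []


def fake_detect(frame):
    """Stand-in for the real model: records which frames were analyzed."""
    calls.append(frame)
    return 'boxes for frame %d' % frame


detector = CachedDetector(fake_detect, interval=5)
results = [detector.process(i) for i in range(12)]
print(calls)
```

The cached boxes would then be drawn onto every displayed frame. The trade-off is that the boxes can lag the scene by up to `interval - 1` frames.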

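Ways to improve video smoothness and speed

The linked article appears to use Python multiprocessing to run detection in parallel, which makes real-time detection smoother and saves resources; I plan to adopt this approach later.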
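The underlying idea, capturing frames and running detection concurrently, can be sketched with the standard library. Note that this sketch swaps in `threading` and `queue` rather than the multiprocessing approach the article reportedly uses; threads are simpler to set up, but whether they give enough speedup for this model is an assumption to verify. A capture thread keeps only the newest frame in a one-slot queue, so the slow detector always works on the latest image instead of a growing backlog. `latest_frame_pipeline`, `fake_read` and `fake_detect` are hypothetical stand-ins:

```python
import itertools
import queue
import threading
import time


def latest_frame_pipeline(read_frame, detect, max_frames):
    """Hypothetical helper: capture frames on a background thread and run
    `detect` only on the newest one, dropping frames the detector missed."""
    frames = queue.Queue(maxsize=1)
    done = threading.Event()

    def capture():
        for _ in range(max_frames):
            frame = read_frame()
            try:
                frames.get_nowait()  # discard a stale, unprocessed frame
            except queue.Empty:
                pass
            frames.put(frame)  # never blocks: this thread is the only producer
        done.set()

    worker = threading.Thread(target=capture, daemon=True)
    worker.start()
    results = []
    while not (done.is_set() and frames.empty()):
        try:
            frame = frames.get(timeout=0.1)
        except queue.Empty:
            continue
        results.append(detect(frame))
    worker.join()
    return results


frame_ids = itertools.count()


def fake_read():
    time.sleep(0.001)  # stand-in for cap.read()
    return next(frame_ids)


def fake_detect(frame):
    time.sleep(0.005)  # stand-in for the much slower sess.run call
    return frame


processed = latest_frame_pipeline(fake_read, fake_detect, max_frames=50)
print(len(processed), processed[-1])
```

In the real script, `read_frame` would wrap `cap.read()`, while the `cv2.imshow` and `cv2.waitKey` calls should stay in the main loop, since OpenCV's GUI functions are generally expected to run on the main thread.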