Hadoop (2): HDFS standalone deployment and pseudo-distributed deployment

Contents

- Standalone deployment

- Pseudo-distributed deployment

Standalone deployment

Official documentation:

https://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/ClusterSetup.html

Software download:

https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/

Lab environment:

172.25.2.3 ser3

Procedure:

1. On ser3

Create a regular user

[root@ser3 ~]# useradd yxx

[root@ser3 ~]# su - yxx

[yxx@ser3 ~]$

Extract the Hadoop package

[yxx@ser3 ~]$ ls

hadoop-3.2.1.tar.gz jdk-8u251-linux-x64.tar.gz

[yxx@ser3 ~]$ tar zxf hadoop-3.2.1.tar.gz

[yxx@ser3 ~]$ ls

hadoop-3.2.1 hadoop-3.2.1.tar.gz jdk-8u251-linux-x64.tar.gz

[yxx@ser3 ~]$ cd hadoop-3.2.1/

[yxx@ser3 hadoop-3.2.1]$ ls

bin include libexec NOTICE.txt sbin

etc lib LICENSE.txt README.txt share

Extract the JDK package

[yxx@ser3 ~]$ tar zxf jdk-8u251-linux-x64.tar.gz

Create symbolic links so that later upgrades are easier

[yxx@ser3 ~]$ ln -s jdk1.8.0_251/ java

[yxx@ser3 ~]$ ln -s hadoop-3.2.1 hadoop
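
The point of the links is that an upgrade only needs the link to be repointed, while every path that refers to `hadoop` or `java` stays unchanged. A minimal sketch of that idea, run in a scratch directory; the `hadoop-3.2.2` directory is a hypothetical newer release used only for illustration:

```shell
# Sketch only: runs in a scratch directory, not in the real home directory.
# "hadoop-3.2.2" is a hypothetical newer release, not part of the original setup.
demo_dir=$(mktemp -d)
cd "$demo_dir"

mkdir hadoop-3.2.1 hadoop-3.2.2      # stand-ins for two unpacked releases
ln -s hadoop-3.2.1 hadoop            # same kind of link as in the step above
before=$(readlink hadoop)

# -f replaces the existing link; -n stops ln from following the old link into its target
ln -sfn hadoop-3.2.2 hadoop
after=$(readlink hadoop)

echo "hadoop -> $before, then hadoop -> $after"
```

Anything configured with the link path, such as `/home/yxx/hadoop`, would then pick up the new release without further edits.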

Edit the environment variable file

[yxx@ser3 ~]$ cd hadoop-3.2.1/

[yxx@ser3 hadoop-3.2.1]$ cd etc/

[yxx@ser3 etc]$ ls

hadoop

[yxx@ser3 etc]$ cd hadoop/

[yxx@ser3 hadoop]$ vim hadoop-env.sh

export JAVA_HOME=/home/yxx/java

export HADOOP_HOME=/home/yxx/hadoop
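
The same two settings could also be added without an interactive editor. A minimal sketch, assuming it is acceptable to append the exports at the end of the file; it works on a scratch file that stands in for `etc/hadoop/hadoop-env.sh`, so the real configuration is not touched:

```shell
# Sketch only: env_file is a scratch stand-in for etc/hadoop/hadoop-env.sh.
env_file=$(mktemp)

# Append the two settings from the step above instead of editing them in vim.
cat >> "$env_file" <<'EOF'
export JAVA_HOME=/home/yxx/java
export HADOOP_HOME=/home/yxx/hadoop
EOF

# Source the file in a subshell and print the values a script reading it would see.
result=$( . "$env_file" && echo "$JAVA_HOME $HADOOP_HOME" )
echo "$result"
rm -f "$env_file"
```

Sourcing in a subshell keeps the check from changing the variables of the current shell.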

Enter the binary directory and run the program

[yxx@ser3 ~]$ cd hadoop

[yxx@ser3 hadoop]$ cd bin/

[yxx@ser3 bin]$ ./hadoop

Usage: hadoop [OPTIONS] SUBCOMMAND [SUBCOMMAND OPTIONS]

or hadoop [OPTIONS] CLASSNAME [CLASSNAME OPTIONS]

where CLASSNAME is a user-provided Java class

OPTIONS is none or any of:

buildpaths attempt to add class files from build tree

--config dir Hadoop config directory

--debug turn on shell script debug mode

--help usage information

hostnames list[,of,host,names] hosts to use in slave mode

hosts filename list of hosts to use in slave mode

loglevel level set the log4j level for this command

workers turn on worker mode

SUBCOMMAND is one of:

Admin Commands:

daemonlog get/set the log level for each daemon

Client Commands:

archive create a Hadoop archive

checknative check native Hadoop and compression libraries availability

classpath prints the class path needed to get the Hadoop jar and the required libraries

conftest validate configuration XML files

credential interact with credential providers

distch distributed metadata changer

distcp copy file or directories recursively

dtutil operations related to delegation tokens

envvars display computed Hadoop environment variables

fs run a generic filesystem user client

gridmix submit a mix of synthetic job, modeling a profiled from production load

jar run a jar file. NOTE: please use "yarn jar" to launch YARN applications, not this command.

jnipath prints the java.library.path

kdiag Diagnose Kerberos Problems

kerbname show auth_to_local principal conversion

key manage keys via the KeyProvider

rumenfolder scale a rumen input trace

rumentrace convert logs into a rumen trace

s3guard manage metadata on S3

trace view and modify Hadoop tracing settings

version print the version

Daemon Commands:

kms run KMS, the Key Management Server

SUBCOMMAND may print help when invoked w/o parameters or with -h.

[yxx@ser3 bin]$

Run a test

Create a directory and copy some files into it to serve as input data

[yxx@ser3 hadoop]$ mkdir input

[yxx@ser3 hadoop]$ cp etc/hadoop/*.xml input

[yxx@ser3 hadoop]$ ls input/

capacity-scheduler.xml hdfs-site.xml kms-site.xml

core-site.xml httpfs-site.xml mapred-site.xml

hadoop-policy.xml kms-acls.xml yarn-site.xml
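
The bundled `grep` example used for the test counts how often each string matching the regular expression `dfs[a-z.]+` occurs in the input files. A rough local equivalent with standard tools may help in reading its result. This is only a sketch of the idea, not how the MapReduce job is implemented, and the two sample files are made up for illustration:

```shell
# Sketch only: the sample files below are invented stand-ins for the copied XML files.
work=$(mktemp -d)
printf '<name>dfs.replication</name>\n<name>dfsadmin</name>\n' > "$work/a.xml"
printf '<name>dfs.replication</name>\n<name>fs.defaultFS</name>\n' > "$work/b.xml"

# -o prints only the matched text, -h drops file names, -E enables the + operator.
# uniq -c counts each distinct match; sort -rn lists the most frequent first.
counts=$(grep -ohE 'dfs[a-z.]+' "$work"/*.xml | sort | uniq -c | sort -rn | awk '{print $1, $2}')
echo "$counts"
rm -r "$work"
```

Each output line is a count followed by the matched string, most frequent first.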

Run the job; the output directory must not already exist
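
A leftover output directory from an earlier run makes the job fail at startup, so it can be cleared first. A minimal sketch of such a guard, demonstrated in a scratch directory; in the real setup `out_dir` would be the `output` directory under the Hadoop directory:

```shell
# Sketch only: demonstrated in a scratch directory rather than the Hadoop directory.
work=$(mktemp -d)
mkdir "$work/output"                 # simulate a leftover result from an earlier run

out_dir="$work/output"
if [ -e "$out_dir" ]; then
    rm -r "$out_dir"                 # remove stale results; the job creates the directory itself
fi

if [ -e "$out_dir" ]; then state=present; else state=absent; fi
echo "output directory is $state"
rmdir "$work"
```

Deleting is destructive, so results worth keeping should be moved aside rather than removed.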

[yxx@ser3 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep input output 'dfs[a-z.]+'

2020-05-30 18:52:50,300 INFO impl.MetricsConfig: Loaded properties from hadoop-metrics2.properties

2020-05-30 18:52:50,400 INFO impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s).

2020-05-30 18:52:50,400 INFO impl.MetricsSystemImpl: JobTracker metrics system started

2020-05-30 18:52:51,245 INFO input.FileInputFormat: Total input files to process : 9

2020-05-30 18:52:51,281 INFO mapreduce.JobSubmitter: number of splits:9

2020-05-30 18:52:51,893 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local408205321_0001

2020-05-30 18:52:51,893 INFO mapreduce.JobSubmitter: Executing with tokens: []

2020-05-30 18:52:52,136 INFO mapreduce.Job: The url to track the job: http://localhost:8080/

2020-05-30 18:52:52,136 INFO mapreduce.Job: Running job: job_local408205321_0001

2020-05-30 18:52:52,144 INFO mapred.LocalJobRunner: OutputCommitter set in config null

2020-05-30 18:52:52,161 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:52,161 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:52,162 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

2020-05-30 18:52:52,234 INFO mapred.LocalJobRunner: Waiting for map tasks

2020-05-30 18:52:52,236 INFO mapred.LocalJobRunner: Starting task: attempt_local408205321_0001_m_000000_0

2020-05-30 18:52:52,282 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:52,282 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:52,318 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-05-30 18:52:52,322 INFO mapred.MapTask: Processing split: file:/home/yxx/hadoop-3.2.1/input/hadoop-policy.xml:0+11392

2020-05-30 18:52:52,432 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2020-05-30 18:52:52,432 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2020-05-30 18:52:52,432 INFO mapred.MapTask: soft limit at 83886080

2020-05-30 18:52:52,432 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2020-05-30 18:52:52,432 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2020-05-30 18:52:52,439 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2020-05-30 18:52:52,456 INFO mapred.LocalJobRunner:

2020-05-30 18:52:52,456 INFO mapred.MapTask: Starting flush of map output

2020-05-30 18:52:52,456 INFO mapred.MapTask: Spilling map output

2020-05-30 18:52:52,456 INFO mapred.MapTask: bufstart = 0; bufend = 17; bufvoid = 104857600

2020-05-30 18:52:52,456 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26214396(104857584); length = 1/6553600

2020-05-30 18:52:52,515 INFO mapred.MapTask: Finished spill 0

2020-05-30 18:52:52,523 INFO mapred.Task: Task:attempt_local408205321_0001_m_000000_0 is done. And is in the process of committing

2020-05-30 18:52:52,530 INFO mapred.LocalJobRunner: map

2020-05-30 18:52:52,530 INFO mapred.Task: Task 'attempt_local408205321_0001_m_000000_0' done.

2020-05-30 18:52:52,538 INFO mapred.Task: Final Counters for attempt_local408205321_0001_m_000000_0: Counters: 18

File System Counters

FILE: Number of bytes read=329146

FILE: Number of bytes written=836989

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=265

Map output records=1

Map output bytes=17

Map output materialized bytes=25

Input split bytes=116

Combine input records=1

Combine output records=1

Spilled Records=1

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=0

Total committed heap usage (bytes)=199229440

File Input Format Counters

Bytes Read=11392

2020-05-30 18:52:52,538 INFO mapred.LocalJobRunner: Finishing task: attempt_local408205321_0001_m_000000_0

2020-05-30 18:52:52,545 INFO mapred.LocalJobRunner: Starting task: attempt_local408205321_0001_m_000001_0

2020-05-30 18:52:52,557 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:52,558 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:52,560 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-05-30 18:52:52,564 INFO mapred.MapTask: Processing split: file:/home/yxx/hadoop-3.2.1/input/capacity-scheduler.xml:0+8260

2020-05-30 18:52:52,661 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2020-05-30 18:52:52,661 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2020-05-30 18:52:52,661 INFO mapred.MapTask: soft limit at 83886080

2020-05-30 18:52:52,661 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2020-05-30 18:52:52,661 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2020-05-30 18:52:52,667 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2020-05-30 18:52:52,680 INFO mapred.LocalJobRunner:

2020-05-30 18:52:52,680 INFO mapred.MapTask: Starting flush of map output

2020-05-30 18:52:52,690 INFO mapred.Task: Task:attempt_local408205321_0001_m_000001_0 is done. And is in the process of committing

2020-05-30 18:52:52,692 INFO mapred.LocalJobRunner: map

2020-05-30 18:52:52,692 INFO mapred.Task: Task 'attempt_local408205321_0001_m_000001_0' done.

2020-05-30 18:52:52,695 INFO mapred.Task: Final Counters for attempt_local408205321_0001_m_000001_0: Counters: 18

File System Counters

FILE: Number of bytes read=338456

FILE: Number of bytes written=837027

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=220

Map output records=0

Map output bytes=0

Map output materialized bytes=6

Input split bytes=121

Combine input records=0

Combine output records=0

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=0

Total committed heap usage (bytes)=304611328

File Input Format Counters

Bytes Read=8260

2020-05-30 18:52:52,695 INFO mapred.LocalJobRunner: Finishing task: attempt_local408205321_0001_m_000001_0

2020-05-30 18:52:52,696 INFO mapred.LocalJobRunner: Starting task: attempt_local408205321_0001_m_000002_0

2020-05-30 18:52:52,703 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:52,703 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:52,703 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-05-30 18:52:52,704 INFO mapred.MapTask: Processing split: file:/home/yxx/hadoop-3.2.1/input/kms-acls.xml:0+3518

2020-05-30 18:52:52,850 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2020-05-30 18:52:52,850 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2020-05-30 18:52:52,850 INFO mapred.MapTask: soft limit at 83886080

2020-05-30 18:52:52,850 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2020-05-30 18:52:52,850 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2020-05-30 18:52:52,853 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2020-05-30 18:52:52,855 INFO mapred.LocalJobRunner:

2020-05-30 18:52:52,855 INFO mapred.MapTask: Starting flush of map output

2020-05-30 18:52:52,859 INFO mapred.Task: Task:attempt_local408205321_0001_m_000002_0 is done. And is in the process of committing

2020-05-30 18:52:52,860 INFO mapred.LocalJobRunner: map

2020-05-30 18:52:52,861 INFO mapred.Task: Task 'attempt_local408205321_0001_m_000002_0' done.

2020-05-30 18:52:52,861 INFO mapred.Task: Final Counters for attempt_local408205321_0001_m_000002_0: Counters: 18

File System Counters

FILE: Number of bytes read=343024

FILE: Number of bytes written=837065

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=135

Map output records=0

Map output bytes=0

Map output materialized bytes=6

Input split bytes=111

Combine input records=0

Combine output records=0

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=114

Total committed heap usage (bytes)=148897792

File Input Format Counters

Bytes Read=3518

2020-05-30 18:52:52,861 INFO mapred.LocalJobRunner: Finishing task: attempt_local408205321_0001_m_000002_0

2020-05-30 18:52:52,861 INFO mapred.LocalJobRunner: Starting task: attempt_local408205321_0001_m_000003_0

2020-05-30 18:52:52,862 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:52,862 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:52,862 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-05-30 18:52:52,863 INFO mapred.MapTask: Processing split: file:/home/yxx/hadoop-3.2.1/input/hdfs-site.xml:0+775

2020-05-30 18:52:52,941 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2020-05-30 18:52:52,941 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2020-05-30 18:52:52,941 INFO mapred.MapTask: soft limit at 83886080

2020-05-30 18:52:52,941 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2020-05-30 18:52:52,941 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2020-05-30 18:52:53,040 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2020-05-30 18:52:53,042 INFO mapred.LocalJobRunner:

2020-05-30 18:52:53,042 INFO mapred.MapTask: Starting flush of map output

2020-05-30 18:52:53,048 INFO mapred.Task: Task:attempt_local408205321_0001_m_000003_0 is done. And is in the process of committing

2020-05-30 18:52:53,050 INFO mapred.LocalJobRunner: map

2020-05-30 18:52:53,050 INFO mapred.Task: Task 'attempt_local408205321_0001_m_000003_0' done.

2020-05-30 18:52:53,050 INFO mapred.Task: Final Counters for attempt_local408205321_0001_m_000003_0: Counters: 18

File System Counters

FILE: Number of bytes read=344849

FILE: Number of bytes written=837103

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=21

Map output records=0

Map output bytes=0

Map output materialized bytes=6

Input split bytes=112

Combine input records=0

Combine output records=0

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=98

Total committed heap usage (bytes)=296747008

File Input Format Counters

Bytes Read=775

2020-05-30 18:52:53,051 INFO mapred.LocalJobRunner: Finishing task: attempt_local408205321_0001_m_000003_0

2020-05-30 18:52:53,051 INFO mapred.LocalJobRunner: Starting task: attempt_local408205321_0001_m_000004_0

2020-05-30 18:52:53,053 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:53,053 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:53,053 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-05-30 18:52:53,054 INFO mapred.MapTask: Processing split: file:/home/yxx/hadoop-3.2.1/input/core-site.xml:0+774

2020-05-30 18:52:53,074 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2020-05-30 18:52:53,074 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2020-05-30 18:52:53,075 INFO mapred.MapTask: soft limit at 83886080

2020-05-30 18:52:53,075 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2020-05-30 18:52:53,075 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2020-05-30 18:52:53,193 INFO mapreduce.Job: Job job_local408205321_0001 running in uber mode : false

2020-05-30 18:52:53,193 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2020-05-30 18:52:53,196 INFO mapred.LocalJobRunner:

2020-05-30 18:52:53,196 INFO mapred.MapTask: Starting flush of map output

2020-05-30 18:52:53,199 INFO mapreduce.Job: map 100% reduce 0%

2020-05-30 18:52:53,203 INFO mapred.Task: Task:attempt_local408205321_0001_m_000004_0 is done. And is in the process of committing

2020-05-30 18:52:53,205 INFO mapred.LocalJobRunner: map

2020-05-30 18:52:53,206 INFO mapred.Task: Task 'attempt_local408205321_0001_m_000004_0' done.

2020-05-30 18:52:53,206 INFO mapred.Task: Final Counters for attempt_local408205321_0001_m_000004_0: Counters: 18

File System Counters

FILE: Number of bytes read=346673

FILE: Number of bytes written=837141

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=20

Map output records=0

Map output bytes=0

Map output materialized bytes=6

Input split bytes=112

Combine input records=0

Combine output records=0

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=103

Total committed heap usage (bytes)=293076992

File Input Format Counters

Bytes Read=774

2020-05-30 18:52:53,206 INFO mapred.LocalJobRunner: Finishing task: attempt_local408205321_0001_m_000004_0

2020-05-30 18:52:53,206 INFO mapred.LocalJobRunner: Starting task: attempt_local408205321_0001_m_000005_0

2020-05-30 18:52:53,207 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:53,207 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:53,208 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-05-30 18:52:53,215 INFO mapred.MapTask: Processing split: file:/home/yxx/hadoop-3.2.1/input/mapred-site.xml:0+758

2020-05-30 18:52:53,266 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2020-05-30 18:52:53,266 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2020-05-30 18:52:53,266 INFO mapred.MapTask: soft limit at 83886080

2020-05-30 18:52:53,266 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2020-05-30 18:52:53,266 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2020-05-30 18:52:53,270 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2020-05-30 18:52:53,280 INFO mapred.LocalJobRunner:

2020-05-30 18:52:53,280 INFO mapred.MapTask: Starting flush of map output

2020-05-30 18:52:53,283 INFO mapred.Task: Task:attempt_local408205321_0001_m_000005_0 is done. And is in the process of committing

2020-05-30 18:52:53,284 INFO mapred.LocalJobRunner: map

2020-05-30 18:52:53,285 INFO mapred.Task: Task 'attempt_local408205321_0001_m_000005_0' done.

2020-05-30 18:52:53,286 INFO mapred.Task: Final Counters for attempt_local408205321_0001_m_000005_0: Counters: 18

File System Counters

FILE: Number of bytes read=347969

FILE: Number of bytes written=837179

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=21

Map output records=0

Map output bytes=0

Map output materialized bytes=6

Input split bytes=114

Combine input records=0

Combine output records=0

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=0

Total committed heap usage (bytes)=398458880

File Input Format Counters

Bytes Read=758

2020-05-30 18:52:53,286 INFO mapred.LocalJobRunner: Finishing task: attempt_local408205321_0001_m_000005_0

2020-05-30 18:52:53,286 INFO mapred.LocalJobRunner: Starting task: attempt_local408205321_0001_m_000006_0

2020-05-30 18:52:53,291 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:53,291 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:53,294 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-05-30 18:52:53,298 INFO mapred.MapTask: Processing split: file:/home/yxx/hadoop-3.2.1/input/yarn-site.xml:0+690

2020-05-30 18:52:53,337 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2020-05-30 18:52:53,337 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2020-05-30 18:52:53,337 INFO mapred.MapTask: soft limit at 83886080

2020-05-30 18:52:53,337 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2020-05-30 18:52:53,337 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2020-05-30 18:52:53,340 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2020-05-30 18:52:53,341 INFO mapred.LocalJobRunner:

2020-05-30 18:52:53,341 INFO mapred.MapTask: Starting flush of map output

2020-05-30 18:52:53,344 INFO mapred.Task: Task:attempt_local408205321_0001_m_000006_0 is done. And is in the process of committing

2020-05-30 18:52:53,346 INFO mapred.LocalJobRunner: map

2020-05-30 18:52:53,346 INFO mapred.Task: Task 'attempt_local408205321_0001_m_000006_0' done.

2020-05-30 18:52:53,346 INFO mapred.Task: Final Counters for attempt_local408205321_0001_m_000006_0: Counters: 18

File System Counters

FILE: Number of bytes read=349197

FILE: Number of bytes written=837217

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=19

Map output records=0

Map output bytes=0

Map output materialized bytes=6

Input split bytes=112

Combine input records=0

Combine output records=0

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=2

Total committed heap usage (bytes)=471859200

File Input Format Counters

Bytes Read=690

2020-05-30 18:52:53,347 INFO mapred.LocalJobRunner: Finishing task: attempt_local408205321_0001_m_000006_0

2020-05-30 18:52:53,347 INFO mapred.LocalJobRunner: Starting task: attempt_local408205321_0001_m_000007_0

2020-05-30 18:52:53,354 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:53,355 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:53,356 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-05-30 18:52:53,364 INFO mapred.MapTask: Processing split: file:/home/yxx/hadoop-3.2.1/input/kms-site.xml:0+682

2020-05-30 18:52:53,383 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2020-05-30 18:52:53,383 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2020-05-30 18:52:53,383 INFO mapred.MapTask: soft limit at 83886080

2020-05-30 18:52:53,383 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2020-05-30 18:52:53,383 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2020-05-30 18:52:53,385 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2020-05-30 18:52:53,387 INFO mapred.LocalJobRunner:

2020-05-30 18:52:53,387 INFO mapred.MapTask: Starting flush of map output

2020-05-30 18:52:53,389 INFO mapred.Task: Task:attempt_local408205321_0001_m_000007_0 is done. And is in the process of committing

2020-05-30 18:52:53,398 INFO mapred.LocalJobRunner: map

2020-05-30 18:52:53,398 INFO mapred.Task: Task 'attempt_local408205321_0001_m_000007_0' done.

2020-05-30 18:52:53,401 INFO mapred.Task: Final Counters for attempt_local408205321_0001_m_000007_0: Counters: 18

File System Counters

FILE: Number of bytes read=350417

FILE: Number of bytes written=837255

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=20

Map output records=0

Map output bytes=0

Map output materialized bytes=6

Input split bytes=111

Combine input records=0

Combine output records=0

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=3

Total committed heap usage (bytes)=473956352

File Input Format Counters

Bytes Read=682

2020-05-30 18:52:53,402 INFO mapred.LocalJobRunner: Finishing task: attempt_local408205321_0001_m_000007_0

2020-05-30 18:52:53,403 INFO mapred.LocalJobRunner: Starting task: attempt_local408205321_0001_m_000008_0

2020-05-30 18:52:53,407 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:53,407 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:53,408 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-05-30 18:52:53,409 INFO mapred.MapTask: Processing split: file:/home/yxx/hadoop-3.2.1/input/httpfs-site.xml:0+620

2020-05-30 18:52:53,454 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2020-05-30 18:52:53,455 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2020-05-30 18:52:53,455 INFO mapred.MapTask: soft limit at 83886080

2020-05-30 18:52:53,455 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2020-05-30 18:52:53,455 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2020-05-30 18:52:53,459 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2020-05-30 18:52:53,460 INFO mapred.LocalJobRunner:

2020-05-30 18:52:53,461 INFO mapred.MapTask: Starting flush of map output

2020-05-30 18:52:53,465 INFO mapred.Task: Task:attempt_local408205321_0001_m_000008_0 is done. And is in the process of committing

2020-05-30 18:52:53,478 INFO mapred.LocalJobRunner: map

2020-05-30 18:52:53,479 INFO mapred.Task: Task 'attempt_local408205321_0001_m_000008_0' done.

2020-05-30 18:52:53,480 INFO mapred.Task: Final Counters for attempt_local408205321_0001_m_000008_0: Counters: 18

File System Counters

FILE: Number of bytes read=351575

FILE: Number of bytes written=837293

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=17

Map output records=0

Map output bytes=0

Map output materialized bytes=6

Input split bytes=114

Combine input records=0

Combine output records=0

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=2

Total committed heap usage (bytes)=475529216

File Input Format Counters

Bytes Read=620

2020-05-30 18:52:53,480 INFO mapred.LocalJobRunner: Finishing task: attempt_local408205321_0001_m_000008_0

2020-05-30 18:52:53,480 INFO mapred.LocalJobRunner: map task executor complete.

2020-05-30 18:52:53,485 INFO mapred.LocalJobRunner: Waiting for reduce tasks

2020-05-30 18:52:53,486 INFO mapred.LocalJobRunner: Starting task: attempt_local408205321_0001_r_000000_0

2020-05-30 18:52:53,525 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:53,525 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:53,525 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-05-30 18:52:53,532 INFO mapred.ReduceTask: Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@2e8c292a

2020-05-30 18:52:53,546 WARN impl.MetricsSystemImpl: JobTracker metrics system already initialized!

2020-05-30 18:52:53,591 INFO reduce.MergeManagerImpl: MergerManager: memoryLimit=332870432, maxSingleShuffleLimit=83217608, mergeThreshold=219694496, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2020-05-30 18:52:53,612 INFO reduce.EventFetcher: attempt_local408205321_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

2020-05-30 18:52:53,671 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local408205321_0001_m_000006_0 decomp: 2 len: 6 to MEMORY

2020-05-30 18:52:53,679 INFO reduce.InMemoryMapOutput: Read 2 bytes from map-output for attempt_local408205321_0001_m_000006_0

2020-05-30 18:52:53,683 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->2

2020-05-30 18:52:53,710 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local408205321_0001_m_000003_0 decomp: 2 len: 6 to MEMORY

2020-05-30 18:52:53,712 INFO reduce.InMemoryMapOutput: Read 2 bytes from map-output for attempt_local408205321_0001_m_000003_0

2020-05-30 18:52:53,712 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2, inMemoryMapOutputs.size() -> 2, commitMemory -> 2, usedMemory ->4

2020-05-30 18:52:53,715 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local408205321_0001_m_000000_0 decomp: 21 len: 25 to MEMORY

2020-05-30 18:52:53,719 INFO reduce.InMemoryMapOutput: Read 21 bytes from map-output for attempt_local408205321_0001_m_000000_0

2020-05-30 18:52:53,719 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 21, inMemoryMapOutputs.size() -> 3, commitMemory -> 4, usedMemory ->25

2020-05-30 18:52:53,720 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local408205321_0001_m_000007_0 decomp: 2 len: 6 to MEMORY

2020-05-30 18:52:53,726 INFO reduce.InMemoryMapOutput: Read 2 bytes from map-output for attempt_local408205321_0001_m_000007_0

2020-05-30 18:52:53,726 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2, inMemoryMapOutputs.size() -> 4, commitMemory -> 25, usedMemory ->27

2020-05-30 18:52:53,734 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local408205321_0001_m_000004_0 decomp: 2 len: 6 to MEMORY

2020-05-30 18:52:53,740 INFO reduce.InMemoryMapOutput: Read 2 bytes from map-output for attempt_local408205321_0001_m_000004_0

2020-05-30 18:52:53,740 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2, inMemoryMapOutputs.size() -> 5, commitMemory -> 27, usedMemory ->29

2020-05-30 18:52:53,741 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local408205321_0001_m_000001_0 decomp: 2 len: 6 to MEMORY

2020-05-30 18:52:53,742 INFO reduce.InMemoryMapOutput: Read 2 bytes from map-output for attempt_local408205321_0001_m_000001_0

2020-05-30 18:52:53,742 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2, inMemoryMapOutputs.size() -> 6, commitMemory -> 29, usedMemory ->31

2020-05-30 18:52:53,743 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local408205321_0001_m_000008_0 decomp: 2 len: 6 to MEMORY

2020-05-30 18:52:53,744 INFO reduce.InMemoryMapOutput: Read 2 bytes from map-output for attempt_local408205321_0001_m_000008_0

2020-05-30 18:52:53,744 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2, inMemoryMapOutputs.size() -> 7, commitMemory -> 31, usedMemory ->33

2020-05-30 18:52:53,745 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local408205321_0001_m_000005_0 decomp: 2 len: 6 to MEMORY

2020-05-30 18:52:53,746 INFO reduce.InMemoryMapOutput: Read 2 bytes from map-output for attempt_local408205321_0001_m_000005_0

2020-05-30 18:52:53,746 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2, inMemoryMapOutputs.size() -> 8, commitMemory -> 33, usedMemory ->35

2020-05-30 18:52:53,747 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local408205321_0001_m_000002_0 decomp: 2 len: 6 to MEMORY

2020-05-30 18:52:53,748 INFO reduce.InMemoryMapOutput: Read 2 bytes from map-output for attempt_local408205321_0001_m_000002_0

2020-05-30 18:52:53,748 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 2, inMemoryMapOutputs.size() -> 9, commitMemory -> 35, usedMemory ->37

2020-05-30 18:52:53,759 INFO reduce.EventFetcher: EventFetcher is interrupted.. Returning

2020-05-30 18:52:53,764 INFO mapred.LocalJobRunner: 9 / 9 copied.

2020-05-30 18:52:53,765 INFO reduce.MergeManagerImpl: finalMerge called with 9 in-memory map-outputs and 0 on-disk map-outputs

2020-05-30 18:52:53,788 INFO mapred.Merger: Merging 9 sorted segments

2020-05-30 18:52:53,789 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 10 bytes

2020-05-30 18:52:53,790 INFO reduce.MergeManagerImpl: Merged 9 segments, 37 bytes to disk to satisfy reduce memory limit

2020-05-30 18:52:53,791 INFO reduce.MergeManagerImpl: Merging 1 files, 25 bytes from disk

2020-05-30 18:52:53,791 INFO reduce.MergeManagerImpl: Merging 0 segments, 0 bytes from memory into reduce

2020-05-30 18:52:53,791 INFO mapred.Merger: Merging 1 sorted segments

2020-05-30 18:52:53,792 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 10 bytes

2020-05-30 18:52:53,792 INFO mapred.LocalJobRunner: 9 / 9 copied.

2020-05-30 18:52:53,823 INFO Configuration.deprecation: mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

2020-05-30 18:52:53,830 INFO mapred.Task: Task:attempt_local408205321_0001_r_000000_0 is done. And is in the process of committing

2020-05-30 18:52:53,835 INFO mapred.LocalJobRunner: 9 / 9 copied.

2020-05-30 18:52:53,835 INFO mapred.Task: Task attempt_local408205321_0001_r_000000_0 is allowed to commit now

2020-05-30 18:52:53,837 INFO output.FileOutputCommitter: Saved output of task 'attempt_local408205321_0001_r_000000_0' to file:/home/yxx/hadoop-3.2.1/grep-temp-1965007720

2020-05-30 18:52:53,838 INFO mapred.LocalJobRunner: reduce > reduce

2020-05-30 18:52:53,839 INFO mapred.Task: Task 'attempt_local408205321_0001_r_000000_0' done.

2020-05-30 18:52:53,839 INFO mapred.Task: Final Counters for attempt_local408205321_0001_r_000000_0: Counters: 24

File System Counters

FILE: Number of bytes read=351961

FILE: Number of bytes written=837441

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Combine input records=0

Combine output records=0

Reduce input groups=1

Reduce shuffle bytes=73

Reduce input records=1

Reduce output records=1

Spilled Records=1

Shuffled Maps =9

Failed Shuffles=0

Merged Map outputs=9

GC time elapsed (ms)=0

Total committed heap usage (bytes)=475529216

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Output Format Counters

Bytes Written=123

2020-05-30 18:52:53,840 INFO mapred.LocalJobRunner: Finishing task: attempt_local408205321_0001_r_000000_0

2020-05-30 18:52:53,840 INFO mapred.LocalJobRunner: reduce task executor complete.

2020-05-30 18:52:54,205 INFO mapreduce.Job: map 100% reduce 100%

2020-05-30 18:52:54,205 INFO mapreduce.Job: Job job_local408205321_0001 completed successfully

2020-05-30 18:52:54,222 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=3453267

FILE: Number of bytes written=8371710

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=738

Map output records=1

Map output bytes=17

Map output materialized bytes=73

Input split bytes=1023

Combine input records=1

Combine output records=1

Reduce input groups=1

Reduce shuffle bytes=73

Reduce input records=1

Reduce output records=1

Spilled Records=2

Shuffled Maps =9

Failed Shuffles=0

Merged Map outputs=9

GC time elapsed (ms)=322

Total committed heap usage (bytes)=3537895424

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=27469

File Output Format Counters

Bytes Written=123

2020-05-30 18:52:54,257 WARN impl.MetricsSystemImpl: JobTracker metrics system already initialized!

2020-05-30 18:52:54,278 INFO input.FileInputFormat: Total input files to process : 1

2020-05-30 18:52:54,286 INFO mapreduce.JobSubmitter: number of splits:1

2020-05-30 18:52:54,329 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local1065595233_0002

2020-05-30 18:52:54,329 INFO mapreduce.JobSubmitter: Executing with tokens: []

2020-05-30 18:52:54,439 INFO mapreduce.Job: The url to track the job: http://localhost:8080/

2020-05-30 18:52:54,439 INFO mapreduce.Job: Running job: job_local1065595233_0002

2020-05-30 18:52:54,441 INFO mapred.LocalJobRunner: OutputCommitter set in config null

2020-05-30 18:52:54,441 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:54,441 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:54,441 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

2020-05-30 18:52:54,445 INFO mapred.LocalJobRunner: Waiting for map tasks

2020-05-30 18:52:54,445 INFO mapred.LocalJobRunner: Starting task: attempt_local1065595233_0002_m_000000_0

2020-05-30 18:52:54,451 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:54,451 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:54,452 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-05-30 18:52:54,453 INFO mapred.MapTask: Processing split: file:/home/yxx/hadoop-3.2.1/grep-temp-1965007720/part-r-00000:0+111

2020-05-30 18:52:54,489 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)

2020-05-30 18:52:54,490 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100

2020-05-30 18:52:54,490 INFO mapred.MapTask: soft limit at 83886080

2020-05-30 18:52:54,490 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600

2020-05-30 18:52:54,490 INFO mapred.MapTask: kvstart = 26214396; length = 6553600

2020-05-30 18:52:54,496 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

2020-05-30 18:52:54,517 INFO mapred.LocalJobRunner:

2020-05-30 18:52:54,518 INFO mapred.MapTask: Starting flush of map output

2020-05-30 18:52:54,518 INFO mapred.MapTask: Spilling map output

2020-05-30 18:52:54,518 INFO mapred.MapTask: bufstart = 0; bufend = 17; bufvoid = 104857600

2020-05-30 18:52:54,518 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26214396(104857584); length = 1/6553600

2020-05-30 18:52:54,527 INFO mapred.MapTask: Finished spill 0

2020-05-30 18:52:54,533 INFO mapred.Task: Task:attempt_local1065595233_0002_m_000000_0 is done. And is in the process of committing

2020-05-30 18:52:54,536 INFO mapred.LocalJobRunner: map

2020-05-30 18:52:54,536 INFO mapred.Task: Task 'attempt_local1065595233_0002_m_000000_0' done.

2020-05-30 18:52:54,536 INFO mapred.Task: Final Counters for attempt_local1065595233_0002_m_000000_0: Counters: 17

File System Counters

FILE: Number of bytes read=668798

FILE: Number of bytes written=1674616

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=1

Map output records=1

Map output bytes=17

Map output materialized bytes=25

Input split bytes=126

Combine input records=0

Spilled Records=1

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=0

Total committed heap usage (bytes)=470810624

File Input Format Counters

Bytes Read=123

2020-05-30 18:52:54,536 INFO mapred.LocalJobRunner: Finishing task: attempt_local1065595233_0002_m_000000_0

2020-05-30 18:52:54,538 INFO mapred.LocalJobRunner: map task executor complete.

2020-05-30 18:52:54,539 INFO mapred.LocalJobRunner: Waiting for reduce tasks

2020-05-30 18:52:54,540 INFO mapred.LocalJobRunner: Starting task: attempt_local1065595233_0002_r_000000_0

2020-05-30 18:52:54,546 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-05-30 18:52:54,546 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-05-30 18:52:54,547 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]

2020-05-30 18:52:54,547 INFO mapred.ReduceTask: Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@26eff9fb

2020-05-30 18:52:54,556 WARN impl.MetricsSystemImpl: JobTracker metrics system already initialized!

2020-05-30 18:52:54,563 INFO reduce.MergeManagerImpl: MergerManager: memoryLimit=329567424, maxSingleShuffleLimit=82391856, mergeThreshold=217514512, ioSortFactor=10, memToMemMergeOutputsThreshold=10

2020-05-30 18:52:54,564 INFO reduce.EventFetcher: attempt_local1065595233_0002_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

2020-05-30 18:52:54,566 INFO reduce.LocalFetcher: localfetcher#2 about to shuffle output of map attempt_local1065595233_0002_m_000000_0 decomp: 21 len: 25 to MEMORY

2020-05-30 18:52:54,568 INFO reduce.InMemoryMapOutput: Read 21 bytes from map-output for attempt_local1065595233_0002_m_000000_0

2020-05-30 18:52:54,569 INFO reduce.MergeManagerImpl: closeInMemoryFile -> map-output of size: 21, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->21

2020-05-30 18:52:54,571 INFO reduce.EventFetcher: EventFetcher is interrupted.. Returning

2020-05-30 18:52:54,574 INFO mapred.LocalJobRunner: 1 / 1 copied.

2020-05-30 18:52:54,574 INFO reduce.MergeManagerImpl: finalMerge called with 1 in-memory map-outputs and 0 on-disk map-outputs

2020-05-30 18:52:54,578 INFO mapred.Merger: Merging 1 sorted segments

2020-05-30 18:52:54,578 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 11 bytes

2020-05-30 18:52:54,581 INFO reduce.MergeManagerImpl: Merged 1 segments, 21 bytes to disk to satisfy reduce memory limit

2020-05-30 18:52:54,581 INFO reduce.MergeManagerImpl: Merging 1 files, 25 bytes from disk

2020-05-30 18:52:54,581 INFO reduce.MergeManagerImpl: Merging 0 segments, 0 bytes from memory into reduce

2020-05-30 18:52:54,581 INFO mapred.Merger: Merging 1 sorted segments

2020-05-30 18:52:54,581 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 11 bytes

2020-05-30 18:52:54,582 INFO mapred.LocalJobRunner: 1 / 1 copied.

2020-05-30 18:52:54,586 INFO mapred.Task: Task:attempt_local1065595233_0002_r_000000_0 is done. And is in the process of committing

2020-05-30 18:52:54,586 INFO mapred.LocalJobRunner: 1 / 1 copied.

2020-05-30 18:52:54,586 INFO mapred.Task: Task attempt_local1065595233_0002_r_000000_0 is allowed to commit now

2020-05-30 18:52:54,587 INFO output.FileOutputCommitter: Saved output of task 'attempt_local1065595233_0002_r_000000_0' to file:/home/yxx/hadoop-3.2.1/output

2020-05-30 18:52:54,588 INFO mapred.LocalJobRunner: reduce > reduce

2020-05-30 18:52:54,588 INFO mapred.Task: Task 'attempt_local1065595233_0002_r_000000_0' done.

2020-05-30 18:52:54,588 INFO mapred.Task: Final Counters for attempt_local1065595233_0002_r_000000_0: Counters: 24

File System Counters

FILE: Number of bytes read=668880

FILE: Number of bytes written=1674664

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Combine input records=0

Combine output records=0

Reduce input groups=1

Reduce shuffle bytes=25

Reduce input records=1

Reduce output records=1

Spilled Records=1

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=0

Total committed heap usage (bytes)=470810624

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Output Format Counters

Bytes Written=23

2020-05-30 18:52:54,588 INFO mapred.LocalJobRunner: Finishing task: attempt_local1065595233_0002_r_000000_0

2020-05-30 18:52:54,589 INFO mapred.LocalJobRunner: reduce task executor complete.

2020-05-30 18:52:55,440 INFO mapreduce.Job: Job job_local1065595233_0002 running in uber mode : false

2020-05-30 18:52:55,440 INFO mapreduce.Job: map 100% reduce 100%

2020-05-30 18:52:55,441 INFO mapreduce.Job: Job job_local1065595233_0002 completed successfully

2020-05-30 18:52:55,446 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=1337678

FILE: Number of bytes written=3349280

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=1

Map output records=1

Map output bytes=17

Map output materialized bytes=25

Input split bytes=126

Combine input records=0

Combine output records=0

Reduce input groups=1

Reduce shuffle bytes=25

Reduce input records=1

Reduce output records=1

Spilled Records=2

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=0

Total committed heap usage (bytes)=941621248

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=123

File Output Format Counters

Bytes Written=23

[yxx@ser3 hadoop]$

This run is in standalone mode, and the amount of data processed is very small.

[yxx@ser3 hadoop]$ cd output/

[yxx@ser3 output]$ ls

part-r-00000 _SUCCESS

[yxx@ser3 output]$ cat *

1 dfsadmin

[yxx@ser3 output]$
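For intuition, the same kind of result can be produced without MapReduce. The sketch below is a rough local analogue of the grep example, not Hadoop's implementation: it extracts every match of the pattern `dfs[a-z.]+` (the pattern passed to the examples jar in this walkthrough) and counts identical matches. The sample file and its contents are made up for illustration.

```shell
# Made-up sample input; the real job read the files under input/.
workdir=$(mktemp -d)
cat > "$workdir/sample.xml" <<'EOF'
<!-- see hdfs dfsadmin for details -->
<name>some.other.property</name>
EOF

# -o prints only the matching text, -h hides file names, -E enables the + quantifier.
# sort | uniq -c groups identical matches and counts them; awk tidies the spacing.
grep_counts=$(grep -ohE 'dfs[a-z.]+' "$workdir"/* | sort | uniq -c | awk '{print $1, $2}')
echo "$grep_counts"
rm -rf "$workdir"
```

On this sample it prints `1 dfsadmin`, the same shape as the contents of part-r-00000. Note that the `dfs` inside `hdfs` is not counted, because the pattern needs at least one more lowercase letter or dot after it.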

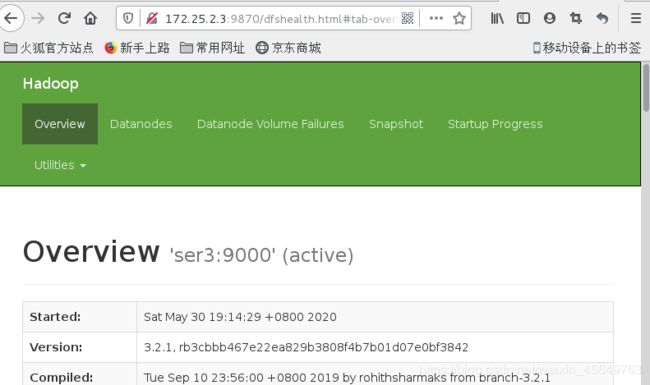

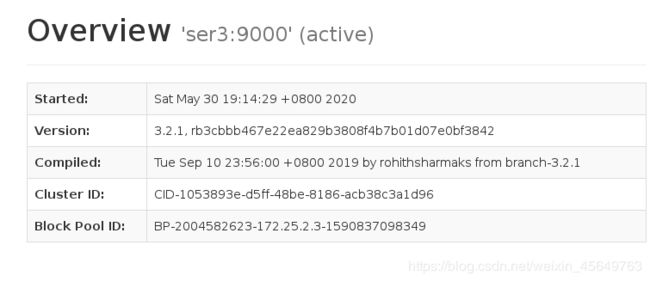

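Pseudo-distributed deployment

This mode is called pseudo-distributed because all the distributed daemons run on a single machine; it is not distributed in the true sense.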

[yxx@ser3 hadoop]$ pwd

/home/yxx/hadoop/etc/hadoop

1. Define the HDFS master

[yxx@ser3 hadoop]$ vim core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://172.25.2.3:9000</value>
    </property>
</configuration>

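2. Define the replication factor for the whole distributed file system

[yxx@ser3 hadoop]$ vim hdfs-site.xml

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>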
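If you prefer not to edit the files by hand, the two settings above can be written from a script. This is only a sketch under stated assumptions: `write_site_xml` and `read_site_value` are hypothetical helpers, the files go into a scratch directory rather than the real etc/hadoop, and each generated file holds exactly one property, so pointing this at a real file would discard any other properties in it.

```shell
# Hypothetical helper: write a Hadoop-style site file containing one property.
# Usage: write_site_xml FILE NAME VALUE
write_site_xml() {
    cat > "$1" <<EOF
<?xml version="1.0"?>
<configuration>
    <property>
        <name>$2</name>
        <value>$3</value>
    </property>
</configuration>
EOF
}

# Hypothetical helper: print the <value> stored in a one-property site file.
read_site_value() {
    sed -n 's:.*<value>\(.*\)</value>.*:\1:p' "$1"
}

conf_dir=$(mktemp -d)   # scratch directory standing in for etc/hadoop
write_site_xml "$conf_dir/core-site.xml" fs.defaultFS hdfs://172.25.2.3:9000
write_site_xml "$conf_dir/hdfs-site.xml" dfs.replication 1

default_fs=$(read_site_value "$conf_dir/core-site.xml")
replication=$(read_site_value "$conf_dir/hdfs-site.xml")
echo "fs.defaultFS=$default_fs dfs.replication=$replication"
rm -rf "$conf_dir"
```

On a working installation, `hdfs getconf -confKey fs.defaultFS` reports the value that Hadoop actually loaded, which is a more reliable check than reading the file back.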

3. Set up passwordless SSH to this host (172.25.2.3)

[yxx@ser3 hadoop]$ ssh-keygen

[yxx@ser3 hadoop]$ ssh-copy-id 172.25.2.3

Set up passwordless SSH to localhost as well

[yxx@ser3 hadoop]$ ssh-copy-id localhost

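This is needed because Hadoop works in a master/worker fashion: the start scripts SSH to each host listed in the workers file (here localhost) to launch the corresponding daemons.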

[yxx@ser3 hadoop]$ cat workers

localhost
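Before starting the daemons, it can help to confirm that key-based login really works for every host the start scripts will contact. A minimal sketch, assuming OpenSSH's `ssh` client; the host list here is just localhost, and `check_ssh` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed only if HOST accepts a key-based login without prompting.
check_ssh() {
    # BatchMode=yes makes ssh fail instead of asking for a password;
    # ConnectTimeout bounds the wait if the host is unreachable.
    ssh -n -o BatchMode=yes -o ConnectTimeout=3 "$1" true >/dev/null 2>&1
}

ssh_report=""
for host in localhost; do
    if check_ssh "$host"; then
        ssh_report="$ssh_report$host: passwordless login OK
"
    else
        ssh_report="$ssh_report$host: passwordless login FAILED
"
    fi
done
printf '%s' "$ssh_report"
```

Adding 172.25.2.3 or ser3 to the `for` list checks the other names used in this walkthrough. A host whose key has never been accepted will also report FAILED until you have logged in to it once interactively.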

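Format the distributed file system

Formatting initializes the NameNode's storage, which mainly holds the metadata image and the record of changes made to the distributed file system.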

[yxx@ser3 hadoop]$ bin/hdfs namenode -format
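Formatting creates the NameNode's on-disk metadata directory. Because this walkthrough does not set `hadoop.tmp.dir` or `dfs.namenode.name.dir`, the sketch below assumes Hadoop's defaults, under which the directory is `/tmp/hadoop-<user>/dfs/name`; adjust `name_dir` if your configuration differs.

```shell
# Assumption: default hadoop.tmp.dir (/tmp/hadoop-<user>) and default dfs.namenode.name.dir.
name_dir="/tmp/hadoop-$(id -un)/dfs/name/current"

if [ -d "$name_dir" ]; then
    # After a successful format this typically holds VERSION, seen_txid and an initial fsimage.
    ls -l "$name_dir"
else
    echo "No NameNode metadata at $name_dir (not formatted yet, or a non-default location)"
fi
```

Since /tmp is cleaned on reboot on many systems, a longer-lived setup would usually point these directories somewhere more permanent.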

Start HDFS

[yxx@ser3 hadoop]$ sbin/start-dfs.sh

Starting namenodes on [ser3]

ser3: Warning: Permanently added 'ser3' (ECDSA) to the list of known hosts.

Starting datanodes

Starting secondary namenodes [ser3]

The master node is called the NameNode, and the data nodes are called DataNodes.

Use the JDK's jps tool to list the running Java processes

[yxx@ser3 hadoop]$ cd /home/yxx/java/bin/

[yxx@ser3 bin]$ ./jps

16786 SecondaryNameNode

16930 Jps

16453 NameNode

16569 DataNode

[yxx@ser3 bin]$

Add the Java and Hadoop bin directories to the PATH environment variable

[yxx@ser3 ~]$ vim .bash_profile

PATH=$PATH:$HOME/.local/bin:$HOME/bin:/home/yxx/java/bin:/home/yxx/hadoop/bin

[yxx@ser3 ~]$ source .bash_profile
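One thing to watch with this approach is that every additional `source .bash_profile` appends the same directories to PATH again. A small guard avoids that; `path_append` is a hypothetical helper, and the two directories are the ones used in this walkthrough.

```shell
# Hypothetical helper: append DIR to PATH only if it is not already there.
path_append() {
    case ":$PATH:" in
        *":$1:"*) ;;               # already present, nothing to do
        *) PATH="$PATH:$1" ;;
    esac
}

path_append /home/yxx/java/bin
path_append /home/yxx/hadoop/bin
path_append /home/yxx/hadoop/bin   # second call is a no-op
export PATH
```

Placed in .bash_profile instead of the plain assignment, this keeps PATH free of duplicates no matter how often the file is sourced.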

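[yxx@ser3 ~]$ jps    # lists the running daemons; in pseudo-distributed mode they all run on this host

16786 SecondaryNameNode (periodic housekeeping for the NameNode, such as merging the edit log into the image; acts as a cold backup of the NameNode metadata)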

16453 NameNode (nn)

16569 DataNode (dn)

17087 Jps
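A quick way to confirm that all three HDFS daemons came up is to compare the `jps` listing against the expected names. The sketch below does this on captured text; `missing_daemons` is a hypothetical helper, and the sample is the listing shown above.

```shell
# Hypothetical helper: read jps-style output on stdin and print each expected
# HDFS daemon that does not appear in it.
missing_daemons() {
    # Keep only the second column (the process name).
    names=$(awk '{print $2}')
    for daemon in NameNode DataNode SecondaryNameNode; do
        # -x requires the whole line to match, so NameNode is not
        # satisfied by a SecondaryNameNode entry.
        printf '%s\n' "$names" | grep -qx "$daemon" || echo "$daemon"
    done
}

sample='16786 SecondaryNameNode
16453 NameNode
16569 DataNode
17087 Jps'

missing=$(printf '%s\n' "$sample" | missing_daemons)
if [ -z "$missing" ]; then
    echo "all HDFS daemons are running"
else
    echo "missing: $missing"
fi
```

On a live system, pipe the real listing in with `jps | missing_daemons`; any name it prints points to a daemon whose log under logs/ is worth reading.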

View node information

[yxx@ser3 ~]$ cd hadoop

[yxx@ser3 hadoop]$ hdfs dfsadmin -report

Configured Capacity: 18238930944 (16.99 GB)

Present Capacity: 11953930240 (11.13 GB)

DFS Remaining: 11953922048 (11.13 GB)

DFS Used: 8192 (8 KB)

DFS Used%: 0.00%

Replicated Blocks:

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

Erasure Coded Block Groups:

Low redundancy block groups: 0

Block groups with corrupt internal blocks: 0

Missing block groups: 0

Low redundancy blocks with highest priority to recover: 0

Pending deletion blocks: 0

-------------------------------------------------

Live datanodes (1):

Name: 172.25.2.3:9866 (ser3)

Hostname: ser3

Decommission Status : Normal

Configured Capacity: 18238930944 (16.99 GB)

DFS Used: 8192 (8 KB)

Non DFS Used: 6285000704 (5.85 GB)

DFS Remaining: 11953922048 (11.13 GB)

DFS Used%: 0.00%

DFS Remaining%: 65.54%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Sat May 30 19:27:04 CST 2020

Last Block Report: Sat May 30 19:14:33 CST 2020

Num of Blocks: 0

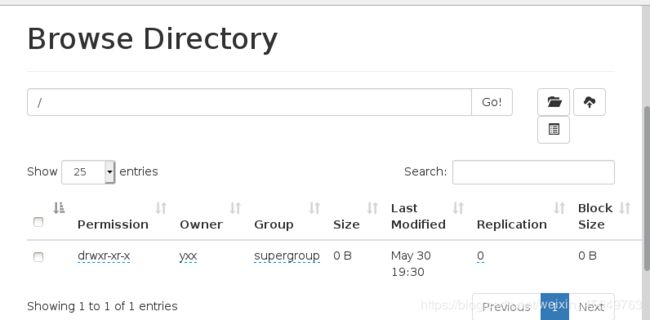
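The figures in the report are related by simple arithmetic, which makes them easy to sanity-check. The sketch below uses the byte counts printed above. The relationships it checks (configured = DFS used + non-DFS used + remaining, and present = DFS used + remaining) hold for these numbers and are offered as an observation about this report, not as a specification.

```shell
# Byte counts copied from the dfsadmin report above.
configured=18238930944
dfs_used=8192
non_dfs_used=6285000704
remaining=11953922048

present=$((dfs_used + remaining))
total=$((dfs_used + non_dfs_used + remaining))

# awk handles the floating-point parts; GB in the report means 1024^3 bytes.
remaining_pct=$(awk -v r="$remaining" -v c="$configured" 'BEGIN { printf "%.2f", 100 * r / c }')
configured_gb=$(awk -v c="$configured" 'BEGIN { printf "%.2f", c / (1024 * 1024 * 1024) }')

echo "Present Capacity: $present"
echo "Sum of parts:     $total"
echo "DFS Remaining%:   $remaining_pct%"
echo "Configured (GB):  $configured_gb"
```

The computed values match the report: 11953930240 bytes present, 18238930944 bytes in total, 65.54% remaining and 16.99 GB configured.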

List the root directory

[yxx@ser3 hadoop]$ hdfs dfs -ls /

[yxx@ser3 hadoop]$

It is empty at this point.

Create directories

[yxx@ser3 hadoop]$ hdfs dfs -mkdir /user

[yxx@ser3 hadoop]$ hdfs dfs -mkdir /user/yxx

[yxx@ser3 hadoop]$ id

uid=1001(yxx) gid=1001(yxx) groups=1001(yxx)

[yxx@ser3 hadoop]$ hdfs dfs -ls

[yxx@ser3 hadoop]$

With no path argument, the listing defaults to the current user's home directory in HDFS (/user/yxx).

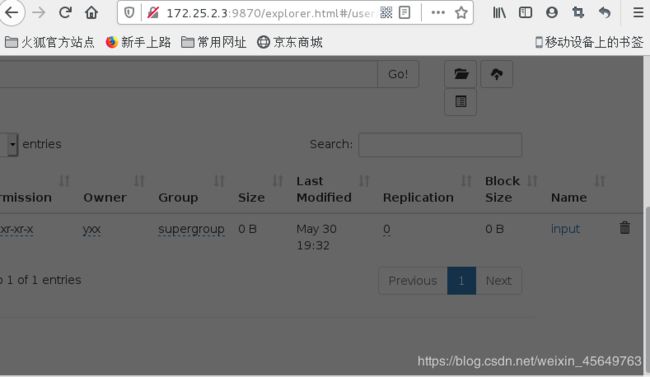

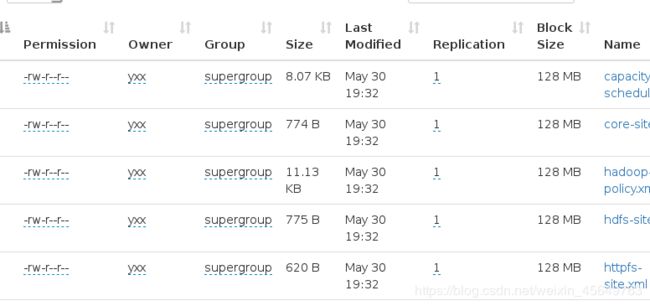
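The rule at work here is that HDFS resolves a relative path against the user's home directory, `/user/<username>`. The sketch below only mimics that rule in plain shell for illustration; `resolve_hdfs_path` is a hypothetical helper, not a Hadoop command.

```shell
# Hypothetical helper mimicking how a relative HDFS path is resolved.
# Usage: resolve_hdfs_path USER [PATH]
resolve_hdfs_path() {
    case "$2" in
        /*) printf '%s\n' "$2" ;;                 # absolute paths are used as given
        "") printf '/user/%s\n' "$1" ;;           # no path: the home directory itself
        *)  printf '/user/%s/%s\n' "$1" "$2" ;;   # relative: under the home directory
    esac
}

resolve_hdfs_path yxx          # prints /user/yxx
resolve_hdfs_path yxx input    # prints /user/yxx/input
resolve_hdfs_path yxx /user    # prints /user
```

This is why /user/yxx had to be created first: without it, commands that use relative paths have no home directory to resolve against.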

Upload files

[yxx@ser3 hadoop]$ hdfs dfs -put input

With no destination given, the upload goes to the user's home directory created above.

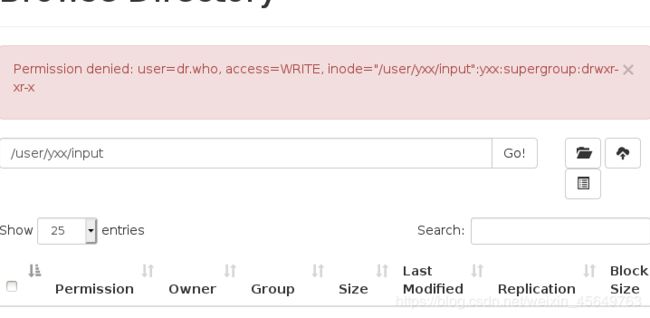

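An attempt to delete it at this point is refused by default, as a safety measure.

The files are now stored in the distributed file system, so further operations can be run against them.

Run the test again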

[yxx@ser3 ~]$ cd hadoop

[yxx@ser3 hadoop]$ ls

bin hadoop-3.2.1 input libexec logs output sbin

etc include lib LICENSE.txt NOTICE.txt README.txt share

[yxx@ser3 hadoop]$ rm -fr input/ output/

[yxx@ser3 hadoop]$ hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep input output 'dfs[a-z.]+'

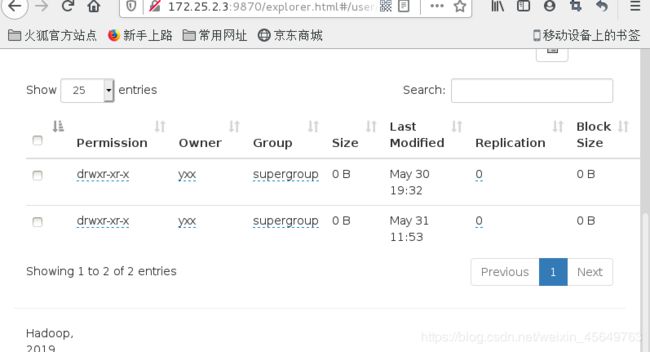

This is the same computation as before, but no output directory is created in the current directory.

[yxx@ser3 hadoop]$ ls

bin hadoop-3.2.1 lib LICENSE.txt NOTICE.txt sbin

etc include libexec logs README.txt share

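The results are stored in the distributed file system, whereas the earlier run wrote them locally.

The input files are also read from HDFS; the job fetches the data through the Hadoop API.

Because the output lives in the distributed file system, it has to be viewed with hdfs dfs: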

[yxx@ser3 hadoop]$ hdfs dfs -ls

Found 2 items

drwxr-xr-x - yxx supergroup 0 2020-05-30 19:32 input

drwxr-xr-x - yxx supergroup 0 2020-05-31 11:53 output

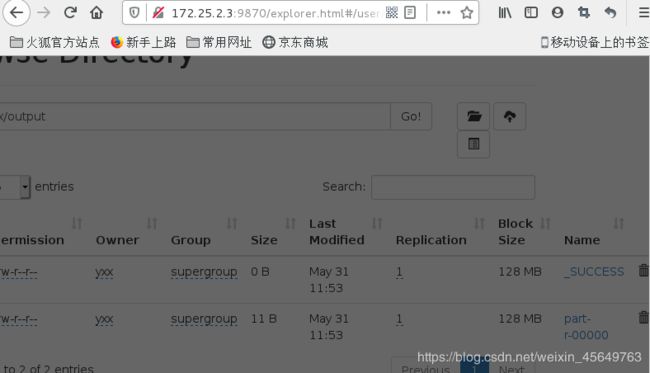

[yxx@ser3 hadoop]$ hdfs dfs -ls output

Found 2 items

-rw-r--r-- 1 yxx supergroup 0 2020-05-31 11:53 output/_SUCCESS

-rw-r--r-- 1 yxx supergroup 11 2020-05-31 11:53 output/part-r-00000

[yxx@ser3 hadoop]$ hdfs dfs -cat output/*

2020-05-31 12:01:16,860 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

1 dfsadmin

Copy the data from the distributed file system to the local disk with get

[yxx@ser3 hadoop]$ hdfs dfs -get output

2020-05-31 12:02:23,333 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

[yxx@ser3 hadoop]$ ls

bin hadoop-3.2.1 lib LICENSE.txt NOTICE.txt README.txt share

etc include libexec logs output sbin

[yxx@ser3 hadoop]$ cd output/

[yxx@ser3 output]$ ls

part-r-00000 _SUCCESS

[yxx@ser3 output]$ cat *

1 dfsadmin

Deleting the local copy does not affect the copy in the distributed file system

[yxx@ser3 hadoop]$ rm -fr output/

[yxx@ser3 hadoop]$ ls

bin hadoop-3.2.1 lib LICENSE.txt NOTICE.txt sbin

etc include libexec logs README.txt share

Delete the copy in the distributed file system

[yxx@ser3 hadoop]$ hdfs dfs -rm -r output/

Deleted output

[yxx@ser3 hadoop]$
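Removing the old results first matters because the MapReduce examples refuse to run when the output directory already exists. A guarded cleanup can be sketched as follows, assuming `hdfs` is on the PATH; `hdfs dfs -test -d` returns success when the path is a directory. The variable names are illustrative.

```shell
out_dir=output   # relative path, so it resolves under the user's HDFS home directory

if ! command -v hdfs >/dev/null 2>&1; then
    cleanup_status="skipped (hdfs not on PATH)"
elif hdfs dfs -test -d "$out_dir"; then
    hdfs dfs -rm -r "$out_dir"
    cleanup_status="removed"
else
    cleanup_status="absent"
fi
echo "output directory: $cleanup_status"
```

Running this before each job makes repeated experiments with the same output name safe to script.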

Next, run the wordcount program from the same examples jar to count word frequencies

[yxx@ser3 hadoop]$ hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount input output
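The idea behind wordcount can be sketched locally. This is a rough analogue rather than the Hadoop implementation: it splits text on whitespace and counts each distinct token, printing the token and its count separated by a tab. The sample text is invented for illustration.

```shell
# Invented sample text; the real job reads the files under input/ in HDFS.
sample_text='the quick fox
the lazy dog
the end'

# tr puts each whitespace-separated token on its own line; sort groups equal
# tokens (LC_ALL=C gives plain byte order); uniq -c counts each group.
word_counts=$(printf '%s\n' "$sample_text" \
    | tr -s '[:space:]' '\n' \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{print $2 "\t" $1}')
printf '%s\n' "$word_counts"
```

Because tokens are split on whitespace only, punctuation stays attached to words, which is why the real output contains entries such as `"License");`.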

[yxx@ser3 hadoop]$ hdfs dfs -cat output/*

2020-05-31 12:09:56,723 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

"*" 21

"AS 9

"License"); 9

"alice,bob 21

"clumping" 1

(ASF) 1

(root 1

(the 9

--> 18

-1 1

-1, 1

0.0 1

1-MAX_INT. 1

1. 1

1.0. 1

2.0 9

40 2

40+20=60 1

: 2