- 斤斤计较的婚姻到底有多难?

白心之岂必有为

很多人私聊我会问到在哪个人群当中斤斤计较的人最多?我都会回答他,一般婚姻出现问题的斤斤计较的人士会非常多,以我多年经验,在婚姻落的一塌糊涂的人当中,斤斤计较的人数占比在20~30%以上,也就是说10个婚姻出现问题的斤斤计较的人有2-3个有多不减。在婚姻出问题当中,有大量的心理不平衡的、尖酸刻薄的怨妇。在婚姻中仅斤斤计较有两种类型:第一种是物质上的,另一种是精神上的。在物质与精神上抠门已经严重的影响

- QQ群采集助手,精准引流必备神器

2401_87347160

其他经验分享

功能概述微信群查找与筛选工具是一款专为微信用户设计的辅助工具,它通过关键词搜索功能,帮助用户快速找到相关的微信群,并提供筛选是否需要验证的群组的功能。主要功能关键词搜索:用户可以输入关键词,工具将自动查找包含该关键词的微信群。筛选功能:工具提供筛选机制,用户可以选择是否只显示需要验证或不需要验证的群组。精准引流:通过上述功能,用户可以更精准地找到目标群组,进行有效的引流操作。3.设备需求该工具可以

- 机器学习与深度学习间关系与区别

ℒℴѵℯ心·动ꦿ໊ོ꫞

人工智能学习深度学习python

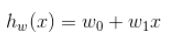

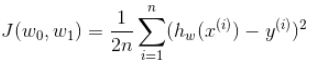

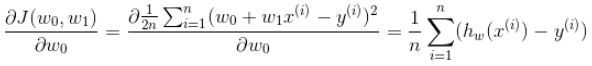

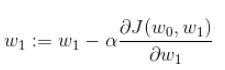

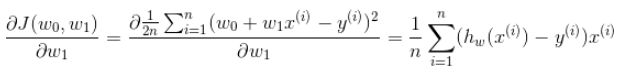

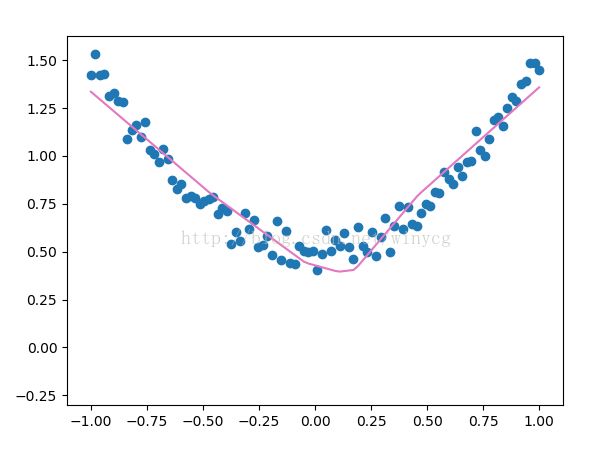

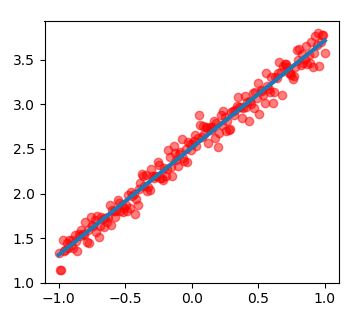

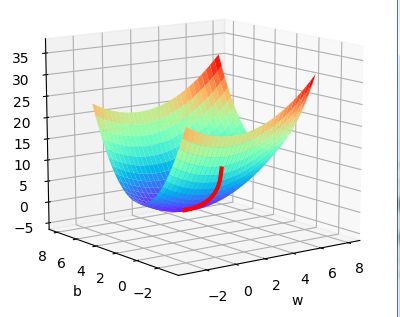

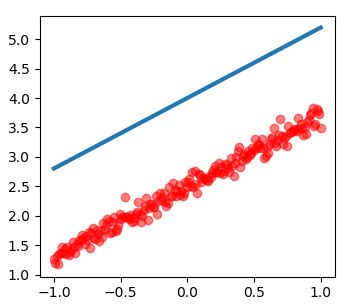

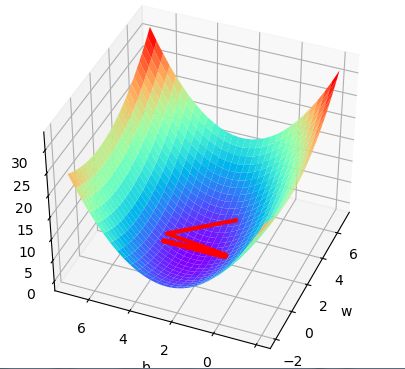

一、机器学习概述定义机器学习(MachineLearning,ML)是一种通过数据驱动的方法,利用统计学和计算算法来训练模型,使计算机能够从数据中学习并自动进行预测或决策。机器学习通过分析大量数据样本,识别其中的模式和规律,从而对新的数据进行判断。其核心在于通过训练过程,让模型不断优化和提升其预测准确性。主要类型1.监督学习(SupervisedLearning)监督学习是指在训练数据集中包含输入

- 随笔 | 仙一般的灵气

海思沧海

仙岛今天,我看了你全部,似乎已经进入你的世界我不知道,这是否是梦幻,还是你仙一般的灵气吸引了我也许每一个人都要有一份属于自己的追求,这样才能够符合人生的梦想,生活才能够充满着阳光与快乐我不知道,我为什么会这样的感叹,是在感叹自己的人生,还是感叹自己一直没有孜孜不倦的追求只感觉虚度了光阴,每天活在自己的梦中,活在一个不真实的世界是在逃避自己,还是在逃避周围的一切有时候我嘲笑自己,嘲笑自己如此的虚无,

- 一百九十四章. 自相矛盾

巨木擎天

唉!就这么一夜,林子感觉就像过了很多天似的,先是回了阳间家里,遇到了那么多不可思议的事情儿。特别是小伙伴们,第二次与自己见面时,僵硬的表情和恐怖的气氛,让自己如坐针毡,打从心眼里难受!还有东子,他现在还好吗?有没有被人欺负?护城河里的小鱼小虾们,还都在吗?水不会真的干枯了吧?那对相亲相爱漂亮的太平鸟儿,还好吧!春天了,到了做窝、下蛋、喂养小鸟宝宝的时候了,希望它们都能够平安啊!虽然没有看见家人,也

- 微服务下功能权限与数据权限的设计与实现

nbsaas-boot

微服务java架构

在微服务架构下,系统的功能权限和数据权限控制显得尤为重要。随着系统规模的扩大和微服务数量的增加,如何保证不同用户和服务之间的访问权限准确、细粒度地控制,成为设计安全策略的关键。本文将讨论如何在微服务体系中设计和实现功能权限与数据权限控制。1.功能权限与数据权限的定义功能权限:指用户或系统角色对特定功能的访问权限。通常是某个用户角色能否执行某个操作,比如查看订单、创建订单、修改用户资料等。数据权限:

- 学点心理知识,呵护孩子健康

静候花开_7090

昨天听了华中师范大学教育管理学系副教授张玲老师的《哪里才是学生心理健康的最后庇护所,超越教育与技术的思考》的讲座。今天又重新学习了一遍,收获匪浅。张玲博士也注意到了当今社会上的孩子由于心理问题导致的自残、自杀及伤害他人等恶性事件。她向我们普及了一个重要的命题,她说心理健康的一些基本命题,我们与我们通常的一些教育命题是不同的,她还举了几个例子,让我们明白我们原来以为的健康并非心理学上的健康。比如如果

- 《投行人生》读书笔记

小蘑菇的树洞

《投行人生》----作者詹姆斯-A-朗德摩根斯坦利副主席40年的职业洞见-很短小精悍的篇幅,比较适合初入职场的新人。第一部分成功的职业生涯需要规划1.情商归为适应能力分享与协作同理心适应能力,更多的是自我意识,你有能力识别自己的情并分辨这些情绪如何影响你的思想和行为。2.对于初入职场的人的建议,细节,截止日期和数据很重要截止日期,一种有效的方法是请老板为你所有的任务进行优先级排序。和老板喝咖啡的好

- Long类型前后端数据不一致

igotyback

前端

响应给前端的数据浏览器控制台中response中看到的Long类型的数据是正常的到前端数据不一致前后端数据类型不匹配是一个常见问题,尤其是当后端使用Java的Long类型(64位)与前端JavaScript的Number类型(最大安全整数为2^53-1,即16位)进行数据交互时,很容易出现精度丢失的问题。这是因为JavaScript中的Number类型无法安全地表示超过16位的整数。为了解决这个问

- 店群合一模式下的社区团购新发展——结合链动 2+1 模式、AI 智能名片与 S2B2C 商城小程序源码

说私域

人工智能小程序

摘要:本文探讨了店群合一的社区团购平台在当今商业环境中的重要性和优势。通过分析店群合一模式如何将互联网社群与线下终端紧密结合,阐述了链动2+1模式、AI智能名片和S2B2C商城小程序源码在这一模式中的应用价值。这些创新元素的结合为社区团购带来了新的机遇,提升了用户信任感、拓展了营销渠道,并实现了线上线下的完美融合。一、引言随着互联网技术的不断发展,社区团购作为一种新兴的商业模式,在满足消费者日常需

- 2021-08-26

影幽

在生活中,女人与男人的感悟往往有所不同。人生最大的舞台就是生活,大幕随时都可能拉开,关键是你愿不愿意表演都无法躲避。在生活中,遇事不要急躁,不要急于下结论,尤其生气时不要做决断,要学会换位思考,大事化小小事化了,把复杂的事情尽量简单处理,千万不要把简单的事情复杂化。永远不要扭曲,别人善意,无药可救。昨天是张过期的支票,明天是张信用卡,只有今天才是现金,要善加利用!执着的攀登者不必去与别人比较自己的

- 高级编程--XML+socket练习题

masa010

java开发语言

1.北京华北2114.8万人上海华东2,500万人广州华南1292.68万人成都华西1417万人(1)使用dom4j将信息存入xml中(2)读取信息,并打印控制台(3)添加一个city节点与子节点(4)使用socketTCP协议编写服务端与客户端,客户端输入城市ID,服务器响应相应城市信息(5)使用socketTCP协议编写服务端与客户端,客户端要求用户输入city对象,服务端接收并使用dom4j

- 2018-07-23-催眠日作业-#不一样的31天#-66小鹿

小鹿_33

预言日:人总是在逃避命运的路上,与之不期而遇。心理学上有个著名的名词,叫做自证预言;经济学上也有一个很著名的定律叫做,墨菲定律;在灵修派上,还有一个很著名的法则,叫做吸引力法则。这3个领域的词,虽然看起来不太一样,但是他们都在告诉人们一个现象:你越担心什么,就越有可能会发生什么。同样的道理,你越想得到什么,就应该要积极地去创造什么。无论是自证预言,墨菲定律还是吸引力法则,对人都有正反2个维度的影响

- 回溯 Leetcode 332 重新安排行程

mmaerd

Leetcode刷题学习记录leetcode算法职场和发展

重新安排行程Leetcode332学习记录自代码随想录给你一份航线列表tickets,其中tickets[i]=[fromi,toi]表示飞机出发和降落的机场地点。请你对该行程进行重新规划排序。所有这些机票都属于一个从JFK(肯尼迪国际机场)出发的先生,所以该行程必须从JFK开始。如果存在多种有效的行程,请你按字典排序返回最小的行程组合。例如,行程[“JFK”,“LGA”]与[“JFK”,“LGB

- 每日一题——第九十题

互联网打工人no1

C语言程序设计每日一练c语言

题目:判断子串是否与主串匹配#include#include#include//////判断子串是否在主串中匹配//////主串///子串///boolisSubstring(constchar*str,constchar*substr){intlenstr=strlen(str);//计算主串的长度intlenSub=strlen(substr);//计算子串的长度//遍历主字符串,对每个可能得

- Python数据分析与可视化实战指南

William数据分析

pythonpython数据

在数据驱动的时代,Python因其简洁的语法、强大的库生态系统以及活跃的社区,成为了数据分析与可视化的首选语言。本文将通过一个详细的案例,带领大家学习如何使用Python进行数据分析,并通过可视化来直观呈现分析结果。一、环境准备1.1安装必要库在开始数据分析和可视化之前,我们需要安装一些常用的库。主要包括pandas、numpy、matplotlib和seaborn等。这些库分别用于数据处理、数学

- 《庄子.达生9》

钱江潮369

【原文】孔子观于吕梁,县水三十仞,流沫四十里,鼋鼍鱼鳖之所不能游也。见一丈夫游之,以为有苦而欲死也,使弟子并流而拯之。数百步而出,被发行歌而游于塘下。孔子从而问焉,曰:“吾以子为鬼,察子则人也。请问,‘蹈水有道乎’”曰:“亡,吾无道。吾始乎故,长乎性,成乎命。与齐俱入,与汩偕出,从水之道而不为私焉。此吾所以蹈之也。”孔子曰:“何谓始乎故,长乎性,成乎命?”曰:“吾生于陵而安于陵,故也;长于水而安于

- 水泥质量纠纷案代理词

徐宝峰律师

贵州领航建设有限公司诉贵州纳雍隆庆乌江水泥有限公司产品质量纠纷案代理词尊敬的审判长、审判员:贵州千里律师事务所接受被告贵州纳雍隆庆乌江水泥有限公司的委托,指派我担任其诉讼代理人,参加本案的诉讼活动。下面,我结合本案事实和相关法律规定发表如下代理意见,供合议庭评议案件时参考:原告应当举证证明其遭受的损失与被告生产的水泥质量的因果关系。首先水泥是一种粉状水硬性无机胶凝材料。加水搅拌后成浆体,能在空气中

- Goolge earth studio 进阶4——路径修改与平滑

陟彼高冈yu

Googleearthstudio进阶教程旅游

如果我们希望在大约中途时获得更多的城市鸟瞰视角。可以将相机拖动到这里并创建一个新的关键帧。camera_target_clip_7EarthStudio会自动平滑我们的路径,所以当我们通过这个关键帧时,不是一个生硬的角度,而是一个平滑的曲线。camera_target_clip_8路径上有贝塞尔控制手柄,允许我们调整路径的形状。右键单击,我们可以选择“平滑路径”,这是默认的自动平滑算法,或者我们可

- 将cmd中命令输出保存为txt文本文件

落难Coder

Windowscmdwindow

最近深度学习本地的训练中我们常常要在命令行中运行自己的代码,无可厚非,我们有必要保存我们的炼丹结果,但是复制命令行输出到txt是非常麻烦的,其实Windows下的命令行为我们提供了相应的操作。其基本的调用格式就是:运行指令>输出到的文件名称或者具体保存路径测试下,我打开cmd并且ping一下百度:pingwww.baidu.com>./data.txt看下相同目录下data.txt的输出:如果你再

- 18-115 一切思考不能有效转化为行动,都TM是扯淡!

成长时间线

7月25号写了一篇关于为什么会断更如此严重的反思,然而,之后日更仅仅维持了一周,又出现了这次更严重的现象。从8月2号到昨天8月6号,5天!又是5天没有更文!虽然这次断更时间和上次一样,那为什么说这次更严重?因为上次之后就分析了问题的原因,以及应该如何解决,按理说应该会好转,然而,没过几天严重断更的现象再次出现,想想,经过反思,问题依然没有解决与改变,这让我有些担忧。到底是哪里出了问题,难道我就真的

- 山东大学小树林支教调研团青青仓木队——翟晓楠

山东大学青青仓木队

过了半年,又一次启程,又一次回到支教的初心之地。比起上一次的试探与不安,我更多了一丝稳重与熟练。心境、处境也都随着半个学期的过去而变得不同,半个学期中,身体上的,心理上的,太多的逆境让我变得步履维艰,曲曲折折,弯弯绕绕,我仿佛打不起精神,没有胃口,没有动力。感觉走的不顺畅的时候,支教这个旅程,给了我力量。自告奋勇承担起队长这一职务的我,从组织时的复杂和困难的经历,协调各种问题,从无到有,和校长和队

- 直返最高等级与直返APP:无需邀请码的返利新体验

古楼

随着互联网的普及和电商的兴起,直返模式逐渐成为一种流行的商业模式。在这种模式下,消费者通过购买产品或服务,获得一定的返利,并可以分享给更多的人。其中,直返最高等级和直返APP是直返模式中的重要概念和工具。本文将详细介绍直返最高等级的概念、直返APP的使用以及与邀请码的关系。【高省】APP(高佣金领导者)是一个自用省钱佣金高,分享推广赚钱多的平台,百度有几百万篇报道,运行三年,稳定可靠。高省APP,

- DIV+CSS+JavaScript技术制作网页(旅游主题网页设计与制作)云南大理

STU学生网页设计

网页设计期末网页作业html静态网页html5期末大作业网页设计web大作业

️精彩专栏推荐作者主页:【进入主页—获取更多源码】web前端期末大作业:【HTML5网页期末作业(1000套)】程序员有趣的告白方式:【HTML七夕情人节表白网页制作(110套)】文章目录二、网站介绍三、网站效果▶️1.视频演示2.图片演示四、网站代码HTML结构代码CSS样式代码五、更多源码二、网站介绍网站布局方面:计划采用目前主流的、能兼容各大主流浏览器、显示效果稳定的浮动网页布局结构。网站程

- 2020-04-12每天三百字之连接与替代

冷眼看潮

不知道是不是好为人师,有时候还真想和别人分享一下我对某些现象的看法或者解释。人类社会不断发展进步的过程,就是不断连接与替代的过程。人类发现了火并应用火以后,告别了茹毛饮血的野兽般的原始生活(火烧、烹饪替代了生食)人类用石器代替了完全手工,工具的使用使人类进步一大步。类似这样的替代还有很多,随着科技的发展,有更多的原始的事物被替代,代之以更高效、更先进的技术。在近现代,汽车替代了马车,高速公路和铁路

- 探索OpenAI和LangChain的适配器集成:轻松切换模型提供商

nseejrukjhad

langchaineasyui前端python

#探索OpenAI和LangChain的适配器集成:轻松切换模型提供商##引言在人工智能和自然语言处理的世界中,OpenAI的模型提供了强大的能力。然而,随着技术的发展,许多人开始探索其他模型以满足特定需求。LangChain作为一个强大的工具,集成了多种模型提供商,通过提供适配器,简化了不同模型之间的转换。本篇文章将介绍如何使用LangChain的适配器与OpenAI集成,以便轻松切换模型提供商

- 利用Requests Toolkit轻松完成HTTP请求

nseejrukjhad

http网络协议网络python

RequestsToolkit的力量:轻松构建HTTP请求Agent在现代软件开发中,API请求是与外部服务交互的核心。RequestsToolkit提供了一种便捷的方式,帮助开发者构建自动化的HTTP请求Agent。本文旨在详细介绍RequestsToolkit的设置、使用和潜在挑战。引言RequestsToolkit是一个强大的工具包,可用于构建执行HTTP请求的智能代理。这对于想要自动化与外

- 数组去重

好奇的猫猫猫

整理自js中基础数据结构数组去重问题思考?如何去除数组中重复的项例如数组:[1,3,4,3,5]我们在做去重的时候,一开始想到的肯定是,逐个比较,外面一层循环,内层后一个与前一个一比较,如果是久不将当前这一项放进新的数组,挨个比较完之后返回一个新的去过重复的数组不好的实践方式上述方法效率极低,代码量还多,思考?有没有更好的方法这时候不禁一想当然有了!!!hashtable啊,通过对象的hash办法

- 春季养肝正当时

dxn悟

重温快乐2023年2月4日立春。春天来了,春暖花开,小鸟欢唱,那在这样的季节我们如何养肝呢?自然界的春季对应中医五行的木,人体五脏肝属木,“木曰曲直”,是以树干曲曲直直地向上、向外伸长舒展的生发姿态,来形容具有生长、升发、条达、舒畅等特征的食物及现象。根据中医天人相应的理念,肝五行属木,喜条达,主疏泄,与春天相应,所以春天最适合养肝。养肝首先要少生气,因为肝喜条达恶抑郁。人体五志肝为怒,生气发怒最

- 关于城市旅游的HTML网页设计——(旅游风景云南 5页)HTML+CSS+JavaScript

二挡起步

web前端期末大作业javascripthtmlcss旅游风景

⛵源码获取文末联系✈Web前端开发技术描述网页设计题材,DIV+CSS布局制作,HTML+CSS网页设计期末课程大作业|游景点介绍|旅游风景区|家乡介绍|等网站的设计与制作|HTML期末大学生网页设计作业,Web大学生网页HTML:结构CSS:样式在操作方面上运用了html5和css3,采用了div+css结构、表单、超链接、浮动、绝对定位、相对定位、字体样式、引用视频等基础知识JavaScrip

- 强大的销售团队背后 竟然是大数据分析的身影

蓝儿唯美

数据分析

Mark Roberge是HubSpot的首席财务官,在招聘销售职位时使用了大量数据分析。但是科技并没有挤走直觉。

大家都知道数理学家实际上已经渗透到了各行各业。这些热衷数据的人们通过处理数据理解商业流程的各个方面,以重组弱点,增强优势。

Mark Roberge是美国HubSpot公司的首席财务官,HubSpot公司在构架集客营销现象方面出过一份力——因此他也是一位数理学家。他使用数据分析

- Haproxy+Keepalived高可用双机单活

bylijinnan

负载均衡keepalivedhaproxy高可用

我们的应用MyApp不支持集群,但要求双机单活(两台机器:master和slave):

1.正常情况下,只有master启动MyApp并提供服务

2.当master发生故障时,slave自动启动本机的MyApp,同时虚拟IP漂移至slave,保持对外提供服务的IP和端口不变

F5据说也能满足上面的需求,但F5的通常用法都是双机双活,单活的话还没研究过

服务器资源

10.7

- eclipse编辑器中文乱码问题解决

0624chenhong

eclipse乱码

使用Eclipse编辑文件经常出现中文乱码或者文件中有中文不能保存的问题,Eclipse提供了灵活的设置文件编码格式的选项,我们可以通过设置编码 格式解决乱码问题。在Eclipse可以从几个层面设置编码格式:Workspace、Project、Content Type、File

本文以Eclipse 3.3(英文)为例加以说明:

1. 设置Workspace的编码格式:

Windows-&g

- 基础篇--resources资源

不懂事的小屁孩

android

最近一直在做java开发,偶尔敲点android代码,突然发现有些基础给忘记了,今天用半天时间温顾一下resources的资源。

String.xml 字符串资源 涉及国际化问题

http://www.2cto.com/kf/201302/190394.html

string-array

- 接上篇补上window平台自动上传证书文件的批处理问卷

酷的飞上天空

window

@echo off

: host=服务器证书域名或ip,需要和部署时服务器的域名或ip一致 ou=公司名称, o=公司名称

set host=localhost

set ou=localhost

set o=localhost

set password=123456

set validity=3650

set salias=s

- 企业物联网大潮涌动:如何做好准备?

蓝儿唯美

企业

物联网的可能性也许是无限的。要找出架构师可以做好准备的领域然后利用日益连接的世界。

尽管物联网(IoT)还很新,企业架构师现在也应该为一个连接更加紧密的未来做好计划,而不是跟上闸门被打开后的集成挑战。“问题不在于物联网正在进入哪些领域,而是哪些地方物联网没有在企业推进,” Gartner研究总监Mike Walker说。

Gartner预测到2020年物联网设备安装量将达260亿,这些设备在全

- spring学习——数据库(mybatis持久化框架配置)

a-john

mybatis

Spring提供了一组数据访问框架,集成了多种数据访问技术。无论是JDBC,iBATIS(mybatis)还是Hibernate,Spring都能够帮助消除持久化代码中单调枯燥的数据访问逻辑。可以依赖Spring来处理底层的数据访问。

mybatis是一种Spring持久化框架,要使用mybatis,就要做好相应的配置:

1,配置数据源。有很多数据源可以选择,如:DBCP,JDBC,aliba

- Java静态代理、动态代理实例

aijuans

Java静态代理

采用Java代理模式,代理类通过调用委托类对象的方法,来提供特定的服务。委托类需要实现一个业务接口,代理类返回委托类的实例接口对象。

按照代理类的创建时期,可以分为:静态代理和动态代理。

所谓静态代理: 指程序员创建好代理类,编译时直接生成代理类的字节码文件。

所谓动态代理: 在程序运行时,通过反射机制动态生成代理类。

一、静态代理类实例:

1、Serivce.ja

- Struts1与Struts2的12点区别

asia007

Struts1与Struts2

1) 在Action实现类方面的对比:Struts 1要求Action类继承一个抽象基类;Struts 1的一个具体问题是使用抽象类编程而不是接口。Struts 2 Action类可以实现一个Action接口,也可以实现其他接口,使可选和定制的服务成为可能。Struts 2提供一个ActionSupport基类去实现常用的接口。即使Action接口不是必须实现的,只有一个包含execute方法的P

- 初学者要多看看帮助文档 不要用js来写Jquery的代码

百合不是茶

jqueryjs

解析json数据的时候需要将解析的数据写到文本框中, 出现了用js来写Jquery代码的问题;

1, JQuery的赋值 有问题

代码如下: data.username 表示的是: 网易

$("#use

- 经理怎么和员工搞好关系和信任

bijian1013

团队项目管理管理

产品经理应该有坚实的专业基础,这里的基础包括产品方向和产品策略的把握,包括设计,也包括对技术的理解和见识,对运营和市场的敏感,以及良好的沟通和协作能力。换言之,既然是产品经理,整个产品的方方面面都应该能摸得出门道。这也不懂那也不懂,如何让人信服?如何让自己懂?就是不断学习,不仅仅从书本中,更从平时和各种角色的沟通

- 如何为rich:tree不同类型节点设置右键菜单

sunjing

contextMenutreeRichfaces

组合使用target和targetSelector就可以啦,如下: <rich:tree id="ruleTree" value="#{treeAction.ruleTree}" var="node" nodeType="#{node.type}"

selectionChangeListener=&qu

- 【Redis二】Redis2.8.17搭建主从复制环境

bit1129

redis

开始使用Redis2.8.17

Redis第一篇在Redis2.4.5上搭建主从复制环境,对它的主从复制的工作机制,真正的惊呆了。不知道Redis2.8.17的主从复制机制是怎样的,Redis到了2.4.5这个版本,主从复制还做成那样,Impossible is nothing! 本篇把主从复制环境再搭一遍看看效果,这次在Unbuntu上用官方支持的版本。 Ubuntu上安装Red

- JSONObject转换JSON--将Date转换为指定格式

白糖_

JSONObject

项目中,经常会用JSONObject插件将JavaBean或List<JavaBean>转换为JSON格式的字符串,而JavaBean的属性有时候会有java.util.Date这个类型的时间对象,这时JSONObject默认会将Date属性转换成这样的格式:

{"nanos":0,"time":-27076233600000,

- JavaScript语言精粹读书笔记

braveCS

JavaScript

【经典用法】:

//①定义新方法

Function .prototype.method=function(name, func){

this.prototype[name]=func;

return this;

}

//②给Object增加一个create方法,这个方法创建一个使用原对

- 编程之美-找符合条件的整数 用字符串来表示大整数避免溢出

bylijinnan

编程之美

import java.util.LinkedList;

public class FindInteger {

/**

* 编程之美 找符合条件的整数 用字符串来表示大整数避免溢出

* 题目:任意给定一个正整数N,求一个最小的正整数M(M>1),使得N*M的十进制表示形式里只含有1和0

*

* 假设当前正在搜索由0,1组成的K位十进制数

- 读书笔记

chengxuyuancsdn

读书笔记

1、Struts访问资源

2、把静态参数传递给一个动作

3、<result>type属性

4、s:iterator、s:if c:forEach

5、StringBuilder和StringBuffer

6、spring配置拦截器

1、访问资源

(1)通过ServletActionContext对象和实现ServletContextAware,ServletReque

- [通讯与电力]光网城市建设的一些问题

comsci

问题

信号防护的问题,前面已经说过了,这里要说光网交换机与市电保障的关系

我们过去用的ADSL线路,因为是电话线,在小区和街道电力中断的情况下,只要在家里用笔记本电脑+蓄电池,连接ADSL,同样可以上网........

- oracle 空间RESUMABLE

daizj

oracle空间不足RESUMABLE错误挂起

空间RESUMABLE操作 转

Oracle从9i开始引入这个功能,当出现空间不足等相关的错误时,Oracle可以不是马上返回错误信息,并回滚当前的操作,而是将操作挂起,直到挂起时间超过RESUMABLE TIMEOUT,或者空间不足的错误被解决。

这一篇简单介绍空间RESUMABLE的例子。

第一次碰到这个特性是在一次安装9i数据库的过程中,在利用D

- 重构第一次写的线程池

dieslrae

线程池 python

最近没有什么学习欲望,修改之前的线程池的计划一直搁置,这几天比较闲,还是做了一次重构,由之前的2个类拆分为现在的4个类.

1、首先是工作线程类:TaskThread,此类为一个工作线程,用于完成一个工作任务,提供等待(wait),继续(proceed),绑定任务(bindTask)等方法

#!/usr/bin/env python

# -*- coding:utf8 -*-

- C语言学习六指针

dcj3sjt126com

c

初识指针,简单示例程序:

/*

指针就是地址,地址就是指针

地址就是内存单元的编号

指针变量是存放地址的变量

指针和指针变量是两个不同的概念

但是要注意: 通常我们叙述时会把指针变量简称为指针,实际它们含义并不一样

*/

# include <stdio.h>

int main(void)

{

int * p; // p是变量的名字, int *

- yii2 beforeSave afterSave beforeDelete

dcj3sjt126com

delete

public function afterSave($insert, $changedAttributes)

{

parent::afterSave($insert, $changedAttributes);

if($insert) {

//这里是新增数据

} else {

//这里是更新数据

}

}

- timertask

shuizhaosi888

timertask

java.util.Timer timer = new java.util.Timer(true);

// true 说明这个timer以daemon方式运行(优先级低,

// 程序结束timer也自动结束),注意,javax.swing

// 包中也有一个Timer类,如果import中用到swing包,

// 要注意名字的冲突。

TimerTask task = new

- Spring Security(13)——session管理

234390216

sessionSpring Security攻击保护超时

session管理

目录

1.1 检测session超时

1.2 concurrency-control

1.3 session 固定攻击保护

- 公司项目NODEJS实践0.3[ mongo / session ...]

逐行分析JS源代码

mongodbsessionnodejs

http://www.upopen.cn

一、前言

书接上回,我们搭建了WEB服务端路由、模板等功能,完成了register 通过ajax与后端的通信,今天主要完成数据与mongodb的存取,实现注册 / 登录 /

- pojo.vo.po.domain区别

LiaoJuncai

javaVOPOJOjavabeandomain

POJO = "Plain Old Java Object",是MartinFowler等发明的一个术语,用来表示普通的Java对象,不是JavaBean, EntityBean 或者 SessionBean。POJO不但当任何特殊的角色,也不实现任何特殊的Java框架的接口如,EJB, JDBC等等。

即POJO是一个简单的普通的Java对象,它包含业务逻辑

- Windows Error Code

OhMyCC

windows

0 操作成功完成.

1 功能错误.

2 系统找不到指定的文件.

3 系统找不到指定的路径.

4 系统无法打开文件.

5 拒绝访问.

6 句柄无效.

7 存储控制块被损坏.

8 存储空间不足, 无法处理此命令.

9 存储控制块地址无效.

10 环境错误.

11 试图加载格式错误的程序.

12 访问码无效.

13 数据无效.

14 存储器不足, 无法完成此操作.

15 系

- 在storm集群环境下发布Topology

roadrunners

集群stormtopologyspoutbolt

storm的topology设计和开发就略过了。本章主要来说说如何在storm的集群环境中,通过storm的管理命令来发布和管理集群中的topology。

1、打包

打包插件是使用maven提供的maven-shade-plugin,详细见maven-shade-plugin。

<plugin>

<groupId>org.apache.maven.

- 为什么不允许代码里出现“魔数”

tomcat_oracle

java

在一个新项目中,我最先做的事情之一,就是建立使用诸如Checkstyle和Findbugs之类工具的准则。目的是制定一些代码规范,以及避免通过静态代码分析就能够检测到的bug。 迟早会有人给出案例说这样太离谱了。其中的一个案例是Checkstyle的魔数检查。它会对任何没有定义常量就使用的数字字面量给出警告,除了-1、0、1和2。 很多开发者在这个检查方面都有问题,这可以从结果

- zoj 3511 Cake Robbery(线段树)

阿尔萨斯

线段树

题目链接:zoj 3511 Cake Robbery

题目大意:就是有一个N边形的蛋糕,切M刀,从中挑选一块边数最多的,保证没有两条边重叠。

解题思路:有多少个顶点即为有多少条边,所以直接按照切刀切掉点的个数排序,然后用线段树维护剩下的还有哪些点。

#include <cstdio>

#include <cstring>

#include <vector&