Visualizing the Results of Multiple Neural Network Training Runs in TensorFlow 2.0

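When working with our own dataset, we may train several different networks, or use the same network on several different datasets. Either way, we end up with many training results. To compare them, it is best to draw all of them in a single figure. Below is one way to do that.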

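While the network trains, it prints a training log. For every epoch the log reports the training accuracy acc and training loss loss, plus the validation accuracy val_acc and validation loss val_loss. At the end there is also a test accuracy test_acc. We copy these values into dictionaries and then plot them. Here is the code: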

```python
import matplotlib.pyplot as plt

# Store each training run's results in its own dictionary
dict1={'_4_loss':[2.9492,1.4026,0.9703,0.8491,0.8409,0.6915,0.6087,0.6116,0.4860,0.5437,0.4484,0.4296,0.4486,0.4082,0.3752,0.4040,0.3312,0.3715,0.2840,0.3193,0.3025,0.3879,0.3137,0.3200,0.2683,0.2574,0.2987,0.3124,0.2497,0.3511,0.2578,0.2828,0.3018,0.3333,0.2968,0.4256,0.3496,0.3688,0.3829,0.2439,0.2508,0.2581,0.2181,0.2557,0.2523,0.3288,0.2883,0.2523,0.3114,0.3476],
'_4_acc':[0.3090,0.5401,0.6557,0.7123,0.7311,0.7571,0.7854,0.7783,0.8302,0.8113,0.8561,0.8443,0.8467,0.8608,0.8703,0.8656,0.8679,0.8821,0.8939,0.8892,0.9033,0.8797,0.8821,0.8986,0.8939,0.9033,0.9057,0.9080,0.9245,0.8892,0.9104,0.9080,0.8915,0.8962,0.8962,0.8750,0.9033,0.8797,0.8939,0.9104,0.9057,0.9127,0.9269,0.9198,0.9057,0.9009,0.8939,0.9080,0.8915,0.8844],
'_4_val_loss':[19.5177,8.5774,10.5291,6.7281,5.6474,7.3317,5.1849,9.2877,13.5350,5.4513,3.3073,7.0256,8.5327,5.3727,6.9400,4.3825,2.7396,2.6096,3.5402,4.3104,3.3585,3.0279,4.6128,3.9091,2.9176,2.2243,2.6610,2.8934,2.0937,2.2669,3.5162,3.2038,2.1915,2.7525,2.7614,2.0319,1.7375,1.1879,2.2752,3.8828,3.5285,3.5578,2.1637,2.5984,2.0643,1.7535,1.6126,2.4406,2.2205,1.04140],
'_4_val_acc':[0.2113,0.3944,0.3028,0.4225,0.3944,0.4859,0.4296,0.3169,0.2254,0.4366,0.5704,0.3521,0.2958,0.4648,0.3380,0.4648,0.5563,0.5423,0.4859,0.4648,0.5211,0.5634,0.4296,0.5704,0.5493,0.6408,0.6197,0.5845,0.6268,0.6338,0.4507,0.5352,0.6268,0.5352,0.6197,0.6127,0.6972,0.7887,0.6197,0.5070,0.5141,0.5141,0.6761,0.6127,0.7183,0.7113,0.7535,0.6761,0.6197,0.7465]}

dict2={'_9_loss':[2.9523,1.3878,1.1013,0.9804,0.8321,0.7458,0.7620,0.7260,0.5969,0.5662,0.6416,0.5508,0.4961,0.4319,0.4476,0.5162,0.4074,0.4666,0.4283,0.4029,0.4131,0.3835,0.3541,0.3880,0.3947,0.3631,0.3714,0.3975,0.3815,0.3403,0.3688,0.3187,0.3086,0.3152,0.3333,0.2600,0.2856,0.3357,0.3333,0.3291,0.2746,0.3312,0.3100,0.3570,0.3021,0.3197,0.2618,0.2179,0.2694,0.2710],
'_9_acc':[0.3299,0.5753,0.6000,0.6495,0.7134,0.7299,0.7320,0.7402,0.7773,0.7835,0.7856,0.8268,0.8268,0.8536,0.8412,0.8351,0.8557,0.8309,0.8474,0.8536,0.8412,0.8763,0.8825,0.8515,0.8639,0.8804,0.8598,0.8680,0.8598,0.8845,0.8845,0.8763,0.9093,0.8887,0.8845,0.9072,0.9134,0.8784,0.8660,0.9010,0.8990,0.9010,0.8866,0.8619,0.8907,0.8866,0.8969,0.9237,0.9030,0.9030],
'_9_val_loss':[18.1512,9.8764,21.0780,15.030342,6.7554,12.6005,5.4650,4.6715,4.9408,6.0524,10.5684,6.5912,5.4219,4.3312,3.4515,3.8430,4.2787,3.2179,3.4217,3.1289,2.9515,2.9530,4.7775,3.3264,3.4435,1.8848,2.2672,3.5573,3.5521,2.4999,2.5100,2.1778,1.7509,1.7265,1.4248,1.3023,1.4990,1.7748,1.5395,1.4459,1.6364,2.5519,2.3438,2.4383,1.4671,2.1979,2.7805,2.0529,1.6031,1.6032],
'_9_val_acc':[0.2716,0.2716,0.1514,0.1975,0.3827,0.1914,0.3889,0.4074,0.3951,0.2840,0.1667,0.3025,0.3210,0.4198,0.5062,0.3889,0.4321,0.5247,0.4815,0.4444,0.5432,0.5494,0.4012,0.4815,0.4383,0.6420,0.5309,0.4444,0.4259,0.5741,0.5679,0.6173,0.6296,0.6420,0.7222,0.7284,0.6975,0.7160,0.6667,0.6852,0.6358,0.5617,0.5926,0.5926,0.6481,0.6296,0.6235,0.6296,0.6481,0.6728]}

dict3={'_20_loss':[2.5753,1.4587,1.1181,0.8649,0.8306,0.7430,0.7421,0.6304,0.6233,0.5954,0.6070,0.5940,0.5420,0.4909,0.5283,0.4661,0.4858,0.4610,0.4360,0.4385,0.4511,0.4019,0.3982,0.3778,0.3767,0.3611,0.3017,0.4328,0.3147,0.4247,0.3920,0.4141,0.4901,0.3954,0.3688,0.3733,0.5044,0.4238,0.3573,0.2724,0.3484,0.3535,0.3643,0.3622,0.2888,0.2447,0.3833,0.3659,0.3979,0.4396],
'_20_acc':[0.3788,0.5600,0.6141,0.7082,0.6988,0.7129,0.7176,0.7647,0.7741,0.7929,0.7906,0.8071,0.8024,0.8282,0.7976,0.8118,0.8235,0.8541,0.8541,0.8400,0.8188,0.8565,0.8400,0.8518,0.8706,0.8918,0.8847,0.8635,0.9129,0.8824,0.8518,0.8588,0.8541,0.8541,0.8659,0.8776,0.8471,0.8635,0.8682,0.8941,0.8776,0.8753,0.8871,0.8659,0.9129,0.9012,0.8682,0.8988,0.8541,0.8541],
'_20_val_loss':[11.2321,16.2215,3.3194,18.1751,25.4574,6.9738,12.2705,21.7173,8.1879,7.8002,10.6400,11.1575,10.7819,7.1390,6.0313,8.6398,3.7608,4.9681,6.2474,6.0046,4.4199,3.0689,4.3069,4.4688,2.7023,3.3829,3.7836,2.5370,5.1312,3.5202,1.9268,3.1265,4.2022,3.4149,2.7747,2.6760,2.3214,2.2555,1.9714,1.8672,2.1720,3.9476,3.3983,2.7317,2.9689,3.0366,3.2539,2.1411,2.1958,2.3463],
'_20_val_acc':[0.1620,0.2254,0.4859,0.2294,0.1338,0.3310,0.2958,0.1268,0.3592,0.3592,0.2817,0.2324,0.2746,0.3873,0.4085,0.3169,0.5282,0.4859,0.4366,0.4577,0.4859,0.5634,0.4507,0.4296,0.5775,0.5423,0.5141,0.5563,0.4437,0.5211,0.5775,0.5211,0.4718,0.5493,0.5634,0.5845,0.5845,0.6268,0.6479,0.6620,0.5845,0.4859,0.5282,0.5634,0.5352,0.5634,0.5563,0.6127,0.6127,0.6408]}

# Pull the per-epoch lists out of the dictionaries
_4_loss = dict1['_4_loss']
_4_acc = dict1['_4_acc']
_4_val_loss = dict1['_4_val_loss']
_4_val_acc = dict1['_4_val_acc']
_9_loss = dict2['_9_loss']
_9_acc = dict2['_9_acc']
_9_val_loss = dict2['_9_val_loss']
_9_val_acc = dict2['_9_val_acc']
_20_loss = dict3['_20_loss']
_20_acc = dict3['_20_acc']
_20_val_loss = dict3['_20_val_loss']
_20_val_acc = dict3['_20_val_acc']

# x-axis values: epoch numbers 1..50, one per logged value
epoch = list(range(1, len(_4_acc) + 1))

# Plot single points (e.g. to mark each run's final test accuracy)
plt.scatter(50, 0.6620)
plt.scatter(50, 0.6408)
plt.scatter(50, 0.6358)

# Uncomment the curves you want to compare; here only training accuracy is drawn.
# Labels must not start with '_', otherwise plt.legend() skips them.
#plt.plot(epoch, _4_loss, linewidth=1, label='1_4_loss')
plt.plot(epoch, _4_acc, linewidth=1, label='1_4_acc')
#plt.plot(epoch, _4_val_loss, linewidth=1, label='1_4_val_loss')
#plt.plot(epoch, _4_val_acc, linewidth=1, label='1_4_val_acc')
#plt.plot(epoch, _9_loss, linewidth=1, label='1_9_loss')
plt.plot(epoch, _9_acc, linewidth=1, label='1_9_acc')
#plt.plot(epoch, _9_val_loss, linewidth=1, label='1_9_val_loss')
#plt.plot(epoch, _9_val_acc, linewidth=1, label='1_9_val_acc')
#plt.plot(epoch, _20_loss, linewidth=1, label='1_20_loss')
plt.plot(epoch, _20_acc, linewidth=1, label='1_20_acc')
#plt.plot(epoch, _20_val_loss, linewidth=1, label='1_20_val_loss')
#plt.plot(epoch, _20_val_acc, linewidth=1, label='1_20_val_acc')

plt.grid(True)
plt.xlabel('epoch')
plt.ylabel('acc')  # change to 'loss' when plotting loss curves
#plt.legend(loc='upper left')   # legend in the upper-left corner
#plt.legend(loc='upper right')  # upper-right corner
plt.legend(loc='lower right')   # lower-right corner
plt.show()
```

The resulting plots are shown below.

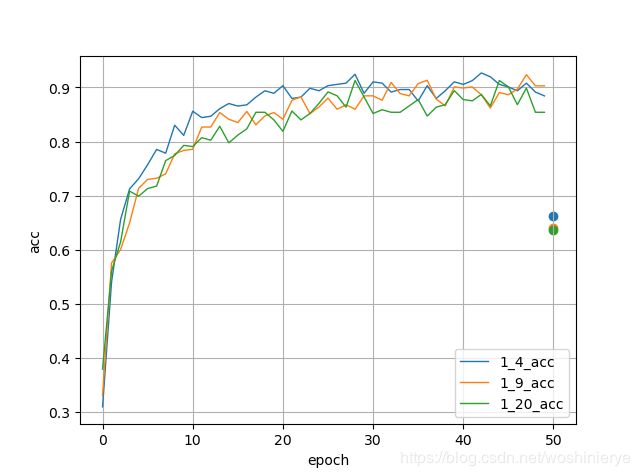

Training accuracy (acc)

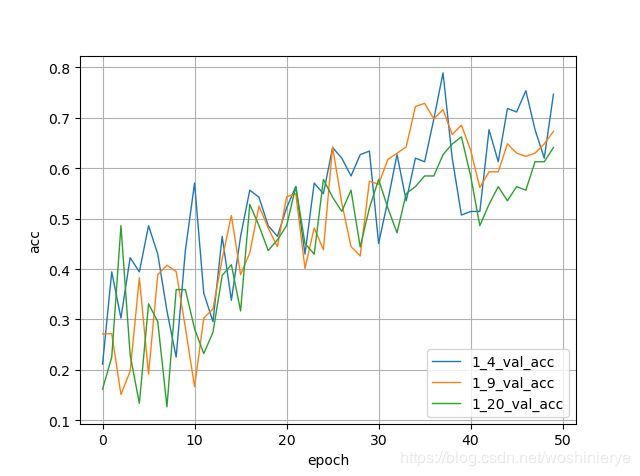

Validation accuracy (val_acc)

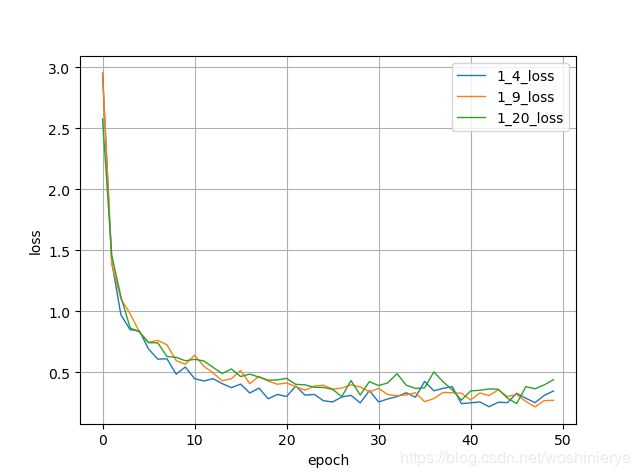

Training loss (loss)

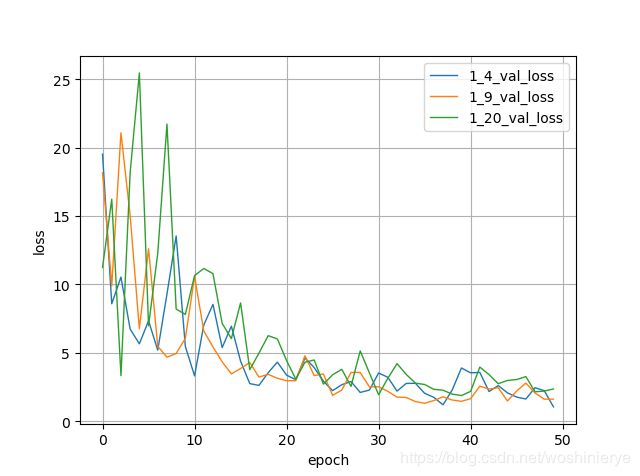

Validation loss (val_loss)
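Commenting `plt.plot` lines in and out works, but the same comparison can also be written as a loop. The sketch below is an alternative, not the author's code: it assumes each run is stored in a dict keyed like `dict1` to `dict3` (run prefix plus metric name), and `plot_metric` is a hypothetical helper name. Because matplotlib's legend ignores labels that start with an underscore, the sketch prepends `run` to each label, playing the same role as the `1` prefix in the listing above.

```python
import matplotlib.pyplot as plt


def plot_metric(runs, metric, ax=None):
    """Draw one curve per run for a metric such as 'acc' or 'val_loss'.

    runs maps a run prefix (e.g. '_4') to that run's dict, whose keys look
    like '_4_acc', '_4_val_loss', ... (the layout used by dict1 to dict3).
    """
    if ax is None:
        ax = plt.gca()
    for prefix, results in runs.items():
        values = results[f"{prefix}_{metric}"]
        epochs = list(range(1, len(values) + 1))  # epochs counted from 1
        # Labels starting with '_' would be hidden from the legend, so prefix them.
        ax.plot(epochs, values, linewidth=1, label=f"run{prefix}_{metric}")
    ax.set_xlabel("epoch")
    ax.set_ylabel(metric)
    ax.grid(True)
    ax.legend(loc="best")
    return ax
```

With the dictionaries from the listing, `plot_metric({'_4': dict1, '_9': dict2, '_20': dict3}, 'val_acc')` followed by `plt.show()` would draw the three validation-accuracy curves. Calling it once per axes of `plt.subplots(2, 2)` would put all four metrics in one figure.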

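Of course, this is a rather clumsy approach and only a stopgap. A more elegant approach is to have the program itself write the predicted values and the label values to files (.txt) and then load those files directly. I will share that method in a later post.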
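As a rough illustration of the file-based direction (not the prediction/label-file method promised above), the per-epoch metrics need not be copied from the log by hand. In tf.keras, `model.fit(...)` returns a `History` object whose `history` attribute is a dict mapping each metric name to its list of per-epoch values. The sketch below assumes such a dict, renames its keys with a run prefix to match `dict1` to `dict3`, and round-trips it through a JSON text file using Python's standard `json` module. The helper names `prefix_history`, `save_run`, and `load_run` are hypothetical, and the exact metric keys (`acc` versus `accuracy`) depend on how the model was compiled.

```python
import json


def prefix_history(history_dict, prefix):
    """Rename keys such as 'loss' or 'val_acc' to '_4_loss', '_4_val_acc', ...

    history_dict is assumed to be {metric name: [value per epoch]}, e.g. the
    `history` attribute of the object returned by tf.keras model.fit.
    """
    # float() because the values may be NumPy scalars, which json cannot serialize
    return {f"{prefix}_{name}": [float(v) for v in values]
            for name, values in history_dict.items()}


def save_run(path, run_dict):
    """Write one run's metrics to a JSON text file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(run_dict, f, indent=2)


def load_run(path):
    """Read a run's metrics back as a dict of lists."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

After training, something like `save_run('run_4.json', prefix_history(history.history, '_4'))` would store a run, and `dict1 = load_run('run_4.json')` could then replace the hand-typed dictionary in the plotting script.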

That's all for this post. Thanks for reading.