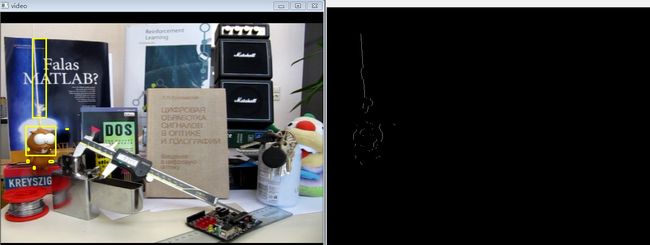

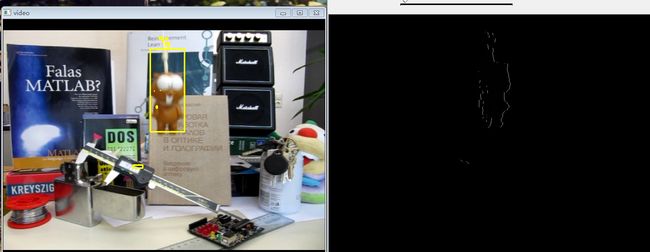

opencv-视频处理-实时前景检测--三帧差法

假设下面的是视频流中的按时间先后顺序的任意三帧图片

依次定义它们的灰度图为:

![]() ,

,![]() ,

,![]()

其中

![]() 代表

代表![]() 的任意一点

的任意一点![]() 处的坐标

处的坐标

![]() 代表

代表![]() 在任意一点

在任意一点![]() 处的坐标

处的坐标

![]() 代表

代表![]() 在任意一点

在任意一点![]() 处的坐标

处的坐标

然后定义:

前两张的灰度的差为:

![]()

后两张的灰度差为:

![]()

最后做一下![]() 和

和![]() “与”运算。

“与”运算。

优点:

实时性高

缺点:

1、运动物体本身颜色相近时,会出现较大的空洞。

2、无法应对光照骤变的情况

下面为整个工程的代码:

#include

using namespace std;

#include

#include

#include

using namespace cv;

const unsigned char FORE_GROUD = 255;

int thresh = 10;

int main(int argc,char*argv[])

{

VideoCapture video(argv[1]);

//判断如果video是否可以打开

if(!video.isOpened())

return -1;

//用于保存当前帧的图片

Mat currentBGRFrame;

//用来保存上一帧和当前帧的灰度图片

Mat previousSecondGrayFrame;

Mat previousFirstGrayFrame;

Mat currentGaryFrame;

//保存两次的帧差

Mat previousFrameDifference;//previousFrameFirst - previousFrameSecond的差分

Mat currentFrameDifference;//currentFrame - previousFrameFirst;

//用来保存帧差的绝对值

Mat absFrameDifferece;

//用来显示前景

Mat previousSegmentation;

Mat currentSegmentation;

Mat segmentation;

//显示前景

namedWindow("segmentation",1);

createTrackbar("阈值:","segmentation",&thresh,FORE_GROUD,NULL);

//帧数

int numberFrame = 0;

//形态学处理用到的算子

Mat morphologyKernel = getStructuringElement(MORPH_RECT,Size(3,3),Point(-1,-1));

for(;;)

{

//读取当前帧

video >> currentBGRFrame;

//判断当前帧是否存在

if(!currentBGRFrame.data)

break;

numberFrame++;

//颜色空间的转换

cvtColor(currentBGRFrame,currentGaryFrame,COLOR_BGR2GRAY);

if( numberFrame == 1)

{

//保存当前帧的灰度图

previousSecondGrayFrame = currentGaryFrame.clone();

//显示视频

imshow("video",currentBGRFrame);

continue;

}

else if( numberFrame == 2)

{

//保存当前帧的灰度图

previousFirstGrayFrame = currentGaryFrame.clone();

//previousFirst - previousSecond

subtract(previousFirstGrayFrame,previousSecondGrayFrame,previousFrameDifference,Mat(),CV_16SC1);

//取绝对值

absFrameDifferece = abs(previousFrameDifference);

//位深的改变

absFrameDifferece.convertTo(absFrameDifferece,CV_8UC1,1,0);

//阈值处理

threshold(absFrameDifferece,previousSegmentation,double(thresh),double(FORE_GROUD),THRESH_BINARY);

//显示视频

imshow("video",currentBGRFrame);

continue;

}

else

{

//src1-src2

subtract(currentGaryFrame,previousFirstGrayFrame,currentFrameDifference,Mat(),CV_16SC1);

//取绝对值

absFrameDifferece = abs(currentFrameDifference);

//位深的改变

absFrameDifferece.convertTo(absFrameDifferece,CV_8UC1,1,0);

//阈值处理

threshold(absFrameDifferece,currentSegmentation,double(thresh),double(FORE_GROUD),THRESH_BINARY);

//与运算

bitwise_and(previousSegmentation,currentSegmentation,segmentation);

//中值滤波

medianBlur(segmentation,segmentation,3);

//形态学处理(开闭运算)

//morphologyEx(segmentation,segmentation,MORPH_OPEN,morphologyKernel,Point(-1,-1),1,BORDER_REPLICATE);

morphologyEx(segmentation,segmentation,MORPH_CLOSE,morphologyKernel,Point(-1,-1),2,BORDER_REPLICATE);

//找边界

vector< vector > contours;

vector hierarchy;

//复制segmentation

Mat tempSegmentation = segmentation.clone();

findContours( segmentation, contours, hierarchy, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_SIMPLE, Point(0, 0) );//CV_RETR_TREE

vector< vector > contours_poly( contours.size() );

/*存储运动物体*/

vector boundRect;

boundRect.clear();

//画出运动物体

for(int index = 0;index < contours.size() ;index++)

{

approxPolyDP( Mat(contours[index]), contours_poly[index], 3, true );

Rect rect = boundingRect( Mat(contours_poly[index]) );

rectangle(currentBGRFrame,rect,Scalar(0,255,255),2);

}

//显示视频

imshow("video",currentBGRFrame);

//前景检测

imshow("segmentation",segmentation);

//保存当前帧的灰度图

previousFirstGrayFrame = currentGaryFrame.clone();

//保存当前的前景检测

previousSegmentation = currentSegmentation.clone();

}

if(waitKey(33) == 'q')

break;

}

return 0;

}