Real-Time Image Processing with OpenCV in Android Studio

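OpenCV is a well-known cross-platform, open-source computer vision library that is widely used in computer-vision work. An OpenCV SDK for Android has been released and can be imported directly into Android Studio. Porting OpenCV to Android makes image processing, object recognition, and similar features available on mobile devices, which gives it good development prospects.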

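1. Development setup

The first step is to set up the development environment and import OpenCV into Android Studio. In Android Studio, choose File-->Import Module, browse to the directory where the OpenCV SDK was unpacked, and import the sdk/java folder as a module. There are plenty of tutorials online; this article mainly follows this blog post: http://blog.csdn.net/gao_chun/article/details/49359535. One thing to watch after importing is the Gradle configuration: the following settings in the build.gradle of the imported module (openCVLibrary249 in this article) must have the same values as in the app module's build.gradle: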

1)compileSdkVersion

2)buildToolsVersion

3)minSdkVersion

4)targetSdkVersion (set all four to the same values as in the build.gradle under the app folder)
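As a rough sketch of what the matching settings might look like in the library module, the fragment below uses 22 and "22.0.1" because SDK version 22.0.1 is the one this article mentions later; the minSdkVersion value is purely an assumption for illustration. Whatever app/build.gradle actually contains is what should be copied here.

```groovy
// openCVLibrary249/build.gradle (illustrative values; mirror app/build.gradle)
android {
    compileSdkVersion 22          // same as in app/build.gradle
    buildToolsVersion "22.0.1"    // same as in app/build.gradle

    defaultConfig {
        minSdkVersion 15          // assumed value, use the app module's setting
        targetSdkVersion 22       // same as in app/build.gradle
    }
}
```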

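Also pay attention to the version of the OpenCV library. For example, the newer openCVLibrary310 no longer has highgui and some other packages under src/main/java, while the older openCVLibrary249 still does, so choose the version that fits your development needs.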

2. Example program

Next comes an image-processing program, based mainly on this blog post: http://blog.csdn.net/yangtrees/article/details/38279351

Main program, MainActivity.java:

package com.example.lenovo.edgeopencv;

import android.app.Activity;
import android.content.Context;
import android.os.Bundle;
import android.util.Log;
import android.view.Menu;
import android.view.MenuItem;
import android.view.SurfaceView;
import android.view.View;
import android.view.WindowManager;
import android.widget.Button;
import android.widget.Toast;

import org.opencv.android.BaseLoaderCallback;
import org.opencv.android.CameraBridgeViewBase;
import org.opencv.android.CameraBridgeViewBase.CvCameraViewFrame;
import org.opencv.android.CameraBridgeViewBase.CvCameraViewListener2;
import org.opencv.android.LoaderCallbackInterface;
import org.opencv.android.OpenCVLoader;
import org.opencv.core.Core;
import org.opencv.core.CvType;
import org.opencv.core.Mat;
import org.opencv.core.MatOfFloat;
import org.opencv.core.MatOfInt;
import org.opencv.core.MatOfRect;
import org.opencv.core.Point;
import org.opencv.core.Rect;
import org.opencv.core.Scalar;
import org.opencv.core.Size;
import org.opencv.imgproc.Imgproc;
import org.opencv.objdetect.CascadeClassifier;

import java.io.File;
import java.io.FileOutputStream;
import java.io.InputStream;
import java.util.Arrays;

public class MainActivity extends Activity implements CvCameraViewListener2 {

    private static final String TAG = "OCVSample::Activity";

    private CameraBridgeViewBase mOpenCvCameraView;
    private CascadeClassifier cascadeClassifier;
    private boolean mIsJavaCamera = true;
    private MenuItem mItemSwitchCamera = null;

    private Mat mRgba;
    private Mat mGray;
    private Mat mTmp;
    private Size mSize0;
    private Mat mIntermediateMat;
    private MatOfInt mChannels[];
    private MatOfInt mHistSize;
    private int mHistSizeNum = 25;
    private Mat mMat0;
    private float[] mBuff;
    private MatOfFloat mRanges;
    private Point mP1;
    private Point mP2;
    private Scalar mColorsRGB[];
    private Scalar mColorsHue[];
    private Scalar mWhilte;
    private Mat mSepiaKernel;

    private Button mBtn = null;
    private int mProcessMethod = 0;  // index of the currently selected effect
    private int absoluteFaceSize;    // minimum face size in pixels (face detection)

    private BaseLoaderCallback mLoaderCallback = new BaseLoaderCallback(this) {
        @Override
        public void onManagerConnected(int status) {
            switch (status) {
                case LoaderCallbackInterface.SUCCESS: {
                    Log.i(TAG, "OpenCV loaded successfully");
                    //mOpenCvCameraView.enableView();
                    initializeOpenCVDependencies();
                } break;
                default: {
                    super.onManagerConnected(status);
                } break;
            }
        }
    };

    private void initializeOpenCVDependencies() {
        try {
            // Copy the resource into a temp file so OpenCV can load it
            InputStream is = getResources().openRawResource(R.raw.lbpcascade_frontalface);
            File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
            File mCascadeFile = new File(cascadeDir, "lbpcascade_frontalface.xml");
            FileOutputStream os = new FileOutputStream(mCascadeFile);

            byte[] buffer = new byte[4096];
            int bytesRead;
            while ((bytesRead = is.read(buffer)) != -1) {
                os.write(buffer, 0, bytesRead);
            }
            is.close();
            os.close();

            // Load the cascade classifier
            cascadeClassifier = new CascadeClassifier(mCascadeFile.getAbsolutePath());
        } catch (Exception e) {
            Log.e("OpenCVActivity", "Error loading cascade", e);
        }

        // And we are ready to go
        mOpenCvCameraView.enableView();
        //openCvCameraView.enableView();
    }

    public MainActivity() {
        Log.i(TAG, "Instantiated new " + this.getClass());
    }

    /** Called when the activity is first created. */
    @Override
    public void onCreate(Bundle savedInstanceState) {
        Log.i(TAG, "called onCreate");
        super.onCreate(savedInstanceState);
        getWindow().addFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);
        setContentView(R.layout.opencv_camera);

        if (mIsJavaCamera) {
            mOpenCvCameraView = (CameraBridgeViewBase) findViewById(R.id.tutorial1_activity_java_surface_view);
        } else {
            mOpenCvCameraView = (CameraBridgeViewBase) findViewById(R.id.tutorial1_activity_native_surface_view);
        }
        mOpenCvCameraView.setVisibility(SurfaceView.VISIBLE);
        mOpenCvCameraView.setCvCameraViewListener(this);

        // Each click on the button switches to the next processing mode (0..8, then back to 0)
        mBtn = (Button) findViewById(R.id.buttonGray);
        mBtn.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                mProcessMethod++;
                if (mProcessMethod > 8) mProcessMethod = 0;
            }
        });
    }

    @Override
    public void onPause() {
        super.onPause();
        if (mOpenCvCameraView != null)
            mOpenCvCameraView.disableView();
    }

    @Override
    public void onResume() {
        super.onResume();
        if (OpenCVLoader.initDebug()) {
            // Static initialization worked: run the same setup as after an async load.
            // The classifier is only loaded once the native library is available.
            mLoaderCallback.onManagerConnected(LoaderCallbackInterface.SUCCESS);
        } else {
            // Static initialization failed: fall back to loading through OpenCV Manager
            Log.e("log_wons", "OpenCV init error");
            OpenCVLoader.initAsync(OpenCVLoader.OPENCV_VERSION_2_4_9, this, mLoaderCallback);
        }
    }

    @Override
    public void onDestroy() {
        super.onDestroy();
        if (mOpenCvCameraView != null)
            mOpenCvCameraView.disableView();
    }

    @Override
    public boolean onCreateOptionsMenu(Menu menu) {
        Log.i(TAG, "called onCreateOptionsMenu");
        mItemSwitchCamera = menu.add("Toggle Native/Java camera");
        return true;
    }

    @Override
    public boolean onOptionsItemSelected(MenuItem item) {
        String toastMesage = new String();
        Log.i(TAG, "called onOptionsItemSelected; selected item: " + item);

        if (item == mItemSwitchCamera) {
            mOpenCvCameraView.setVisibility(SurfaceView.GONE);
            mIsJavaCamera = !mIsJavaCamera;

            if (mIsJavaCamera) {
                mOpenCvCameraView = (CameraBridgeViewBase) findViewById(R.id.tutorial1_activity_java_surface_view);
                toastMesage = "Java Camera";
            } else {
                mOpenCvCameraView = (CameraBridgeViewBase) findViewById(R.id.tutorial1_activity_native_surface_view);
                toastMesage = "Native Camera";
            }

            mOpenCvCameraView.setVisibility(SurfaceView.VISIBLE);
            mOpenCvCameraView.setCvCameraViewListener(this);
            mOpenCvCameraView.enableView();
            Toast toast = Toast.makeText(this, toastMesage, Toast.LENGTH_LONG);
            toast.show();
        }

        return true;
    }

    public void onCameraViewStarted(int width, int height) {
        mRgba = new Mat(height, width, CvType.CV_8UC4);
        mGray = new Mat(height, width, CvType.CV_8UC1);
        mTmp = new Mat(height, width, CvType.CV_8UC4);  // scratch buffer (face detection, Canny, HSV)

        // The faces will be a 20% of the height of the screen
        absoluteFaceSize = (int) (height * 0.2);

        mIntermediateMat = new Mat();
        mSize0 = new Size();
        mChannels = new MatOfInt[] { new MatOfInt(0), new MatOfInt(1), new MatOfInt(2) };
        mBuff = new float[mHistSizeNum];
        mHistSize = new MatOfInt(mHistSizeNum);
        mRanges = new MatOfFloat(0f, 256f);
        mMat0 = new Mat();
        mColorsRGB = new Scalar[] { new Scalar(200, 0, 0, 255), new Scalar(0, 200, 0, 255), new Scalar(0, 0, 200, 255) };
        mColorsHue = new Scalar[] {
            new Scalar(255, 0, 0, 255), new Scalar(255, 60, 0, 255), new Scalar(255, 120, 0, 255), new Scalar(255, 180, 0, 255), new Scalar(255, 240, 0, 255),
            new Scalar(215, 213, 0, 255), new Scalar(150, 255, 0, 255), new Scalar(85, 255, 0, 255), new Scalar(20, 255, 0, 255), new Scalar(0, 255, 30, 255),
            new Scalar(0, 255, 85, 255), new Scalar(0, 255, 150, 255), new Scalar(0, 255, 215, 255), new Scalar(0, 234, 255, 255), new Scalar(0, 170, 255, 255),
            new Scalar(0, 120, 255, 255), new Scalar(0, 60, 255, 255), new Scalar(0, 0, 255, 255), new Scalar(64, 0, 255, 255), new Scalar(120, 0, 255, 255),
            new Scalar(180, 0, 255, 255), new Scalar(255, 0, 255, 255), new Scalar(255, 0, 215, 255), new Scalar(255, 0, 85, 255), new Scalar(255, 0, 0, 255)
        };
        mWhilte = Scalar.all(255);
        mP1 = new Point();
        mP2 = new Point();

        // Fill sepia kernel
        mSepiaKernel = new Mat(4, 4, CvType.CV_32F);
        mSepiaKernel.put(0, 0, /* R */0.189f, 0.769f, 0.393f, 0f);
        mSepiaKernel.put(1, 0, /* G */0.168f, 0.686f, 0.349f, 0f);
        mSepiaKernel.put(2, 0, /* B */0.131f, 0.534f, 0.272f, 0f);
        mSepiaKernel.put(3, 0, /* A */0.000f, 0.000f, 0.000f, 1f);
    }

    public void onCameraViewStopped() {
        mRgba.release();
        mGray.release();
        mTmp.release();
    }

    public Mat onCameraFrame(CvCameraViewFrame inputFrame) {
        mRgba = inputFrame.rgba();
        Size sizeRgba = mRgba.size();
        int rows = (int) sizeRgba.height;
        int cols = (int) sizeRgba.width;

        // Inner window: the central 3/4 x 3/4 region of the frame
        Mat rgbaInnerWindow;
        int left = cols / 8;
        int top = rows / 8;
        int width = cols * 3 / 4;
        int height = rows * 3 / 4;

        // Grayscale
        if (mProcessMethod == 1)
            Imgproc.cvtColor(inputFrame.gray(), mRgba, Imgproc.COLOR_GRAY2RGBA, 4);
        // Canny edge detection
        else if (mProcessMethod == 2) {
            mRgba = inputFrame.rgba();
            Imgproc.Canny(inputFrame.gray(), mTmp, 80, 100);
            Imgproc.cvtColor(mTmp, mRgba, Imgproc.COLOR_GRAY2RGBA, 4);
        }
        // Hist
        else if (mProcessMethod == 3) {
            Mat hist = new Mat();
            int thickness = (int) (sizeRgba.width / (mHistSizeNum + 10) / 5);
            if (thickness > 5) thickness = 5;
            int offset = (int) ((sizeRgba.width - (5 * mHistSizeNum + 4 * 10) * thickness) / 2);
            // RGB
            for (int c = 0; c < 3; c++) {
                Imgproc.calcHist(Arrays.asList(mRgba), mChannels[c], mMat0, hist, mHistSize, mRanges);
                Core.normalize(hist, hist, sizeRgba.height / 2, 0, Core.NORM_INF);
                hist.get(0, 0, mBuff);
                for (int h = 0; h < mHistSizeNum; h++) {
                    mP1.x = mP2.x = offset + (c * (mHistSizeNum + 10) + h) * thickness;
                    mP1.y = sizeRgba.height - 1;
                    mP2.y = mP1.y - 2 - (int) mBuff[h];
                    Core.line(mRgba, mP1, mP2, mColorsRGB[c], thickness);
                }
            }
            // Value and Hue
            Imgproc.cvtColor(mRgba, mTmp, Imgproc.COLOR_RGB2HSV_FULL);
            // Value
            Imgproc.calcHist(Arrays.asList(mTmp), mChannels[2], mMat0, hist, mHistSize, mRanges);
            Core.normalize(hist, hist, sizeRgba.height / 2, 0, Core.NORM_INF);
            hist.get(0, 0, mBuff);
            for (int h = 0; h < mHistSizeNum; h++) {
                mP1.x = mP2.x = offset + (3 * (mHistSizeNum + 10) + h) * thickness;
                mP1.y = sizeRgba.height - 1;
                mP2.y = mP1.y - 2 - (int) mBuff[h];
                Core.line(mRgba, mP1, mP2, mWhilte, thickness);
            }
        }
        // inner Window Sobel
        else if (mProcessMethod == 4) {
            Mat gray = inputFrame.gray();
            Mat grayInnerWindow = gray.submat(top, top + height, left, left + width);
            rgbaInnerWindow = mRgba.submat(top, top + height, left, left + width);
            Imgproc.Sobel(grayInnerWindow, mIntermediateMat, CvType.CV_8U, 1, 1);
            Core.convertScaleAbs(mIntermediateMat, mIntermediateMat, 10, 0);
            Imgproc.cvtColor(mIntermediateMat, rgbaInnerWindow, Imgproc.COLOR_GRAY2BGRA, 4);
            grayInnerWindow.release();
            rgbaInnerWindow.release();
        }
        // SEPIA
        else if (mProcessMethod == 5) {
            rgbaInnerWindow = mRgba.submat(top, top + height, left, left + width);
            Core.transform(rgbaInnerWindow, rgbaInnerWindow, mSepiaKernel);
            rgbaInnerWindow.release();
        }
        // ZOOM
        else if (mProcessMethod == 6) {
            Mat zoomCorner = mRgba.submat(0, rows / 2 - rows / 10, 0, cols / 2 - cols / 10);
            Mat mZoomWindow = mRgba.submat(rows / 2 - 9 * rows / 100, rows / 2 + 9 * rows / 100, cols / 2 - 9 * cols / 100, cols / 2 + 9 * cols / 100);
            Imgproc.resize(mZoomWindow, zoomCorner, zoomCorner.size());
            Size wsize = mZoomWindow.size();
            Core.rectangle(mZoomWindow, new Point(1, 1), new Point(wsize.width - 2, wsize.height - 2), new Scalar(255, 0, 0, 255), 2);
            zoomCorner.release();
            mZoomWindow.release();
        }
        // PIXELIZE
        else if (mProcessMethod == 7) {
            rgbaInnerWindow = mRgba.submat(top, top + height, left, left + width);
            Imgproc.resize(rgbaInnerWindow, mIntermediateMat, mSize0, 0.1, 0.1, Imgproc.INTER_NEAREST);
            Imgproc.resize(mIntermediateMat, rgbaInnerWindow, rgbaInnerWindow.size(), 0., 0., Imgproc.INTER_NEAREST);
            rgbaInnerWindow.release();
        }
        // POSTERIZE
        else if (mProcessMethod == 8) {
            rgbaInnerWindow = mRgba.submat(top, top + height, left, left + width);
            Imgproc.Canny(rgbaInnerWindow, mIntermediateMat, 80, 90);
            rgbaInnerWindow.setTo(new Scalar(0, 0, 0, 255), mIntermediateMat);
            Core.convertScaleAbs(rgbaInnerWindow, mIntermediateMat, 1. / 16, 0);
            Core.convertScaleAbs(mIntermediateMat, rgbaInnerWindow, 16, 0);
            rgbaInnerWindow.release();
        } else {
            // Default mode (0): face detection
            mRgba = inputFrame.rgba();
            Imgproc.cvtColor(mRgba, mTmp, Imgproc.COLOR_RGBA2RGB);
            MatOfRect faces = new MatOfRect();

            // Use the classifier to detect faces
            if (cascadeClassifier != null) {
                cascadeClassifier.detectMultiScale(mTmp, faces, 1.1, 2, 2,
                        new Size(absoluteFaceSize, absoluteFaceSize), new Size());
            }

            // If there are any faces found, draw a rectangle around it
            Rect[] facesArray = faces.toArray();
            for (int i = 0; i < facesArray.length; i++) {
                // The listing is cut off after "i" in this copy; the rest of the method is a
                // reconstruction, and the rectangle color and line width are assumed values.
                Core.rectangle(mRgba, facesArray[i].tl(), facesArray[i].br(), new Scalar(0, 255, 0, 255), 3);
            }
        }

        return mRgba;
    }
}

3. Configuration

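This article performs face detection alongside the real-time image processing. The main configuration step is to copy the lbpcascade_frontalface.xml file found under the sdk/etc directory of the OpenCV Android SDK into the project's app/src/main/res/raw directory. Reference blog post: http://blog.csdn.net/tobacco5648/article/details/51615632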
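The main program copies this raw resource into a private directory before loading it, because CascadeClassifier is constructed there from a file path while a file under res/raw is only available as a stream. As a minimal sketch of just that copy step in plain Java, the helper below uses try-with-resources so that both streams are closed even if the copy fails. RawResourceCopy and copyToFile are hypothetical names for illustration, not part of OpenCV or Android, and the sketch assumes a toolchain with Java 7 language support.

```java
import java.io.ByteArrayInputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Hypothetical helper: copies a stream (for example one returned by
// getResources().openRawResource(...)) into a file and reports the byte count.
final class RawResourceCopy {

    static long copyToFile(InputStream in, File target) throws IOException {
        long total = 0;
        // Both streams are closed automatically, also when read/write throws.
        try (InputStream src = in; OutputStream dst = new FileOutputStream(target)) {
            byte[] buffer = new byte[4096];
            int bytesRead;
            while ((bytesRead = src.read(buffer)) != -1) {
                dst.write(buffer, 0, bytesRead);
                total += bytesRead;
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        // Stand-in data; on Android the stream would come from res/raw instead.
        byte[] fakeCascade = "<opencv_storage/>".getBytes("UTF-8");
        File target = File.createTempFile("lbpcascade_frontalface", ".xml");
        target.deleteOnExit();
        long written = copyToFile(new ByteArrayInputStream(fakeCascade), target);
        System.out.println(written + " bytes copied to " + target.getAbsolutePath());
    }
}
```

In the activity, the returned file's absolute path would then be handed to the CascadeClassifier constructor, as initializeOpenCVDependencies() already does.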

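After completing the steps above, I ran into a bug that puzzled me for quite a while: java.lang.IllegalArgumentException: Service Intent must be explicit: Intent {act=org.opencv.engine.BIND }. After some research, the cause turned out to be that on Android 5.0 (this article uses SDK version 22.0.1) the Intent used to bind a service must be explicit; once the target package is set on the Intent, binding no longer raises the error. The fix is to edit AsyncServiceHelper.java and change the Intent-related code in public static boolean initOpenCV(){} to the following form: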

public static boolean initOpenCV(String Version, final Context AppContext,
        final LoaderCallbackInterface Callback) {
    AsyncServiceHelper helper = new AsyncServiceHelper(Version, AppContext,
            Callback);
    Intent intent = new Intent("org.opencv.engine.BIND");
    // Naming the target package makes the Intent explicit, as required since Android 5.0
    intent.setPackage("org.opencv.engine");
    if (AppContext.bindService(intent, helper.mServiceConnection,
            Context.BIND_AUTO_CREATE)) {
        return true;
    } else {
        AppContext.unbindService(helper.mServiceConnection);
        InstallService(AppContext, Callback);
        return false;
    }
}

After this change, the project builds again and runs normally.

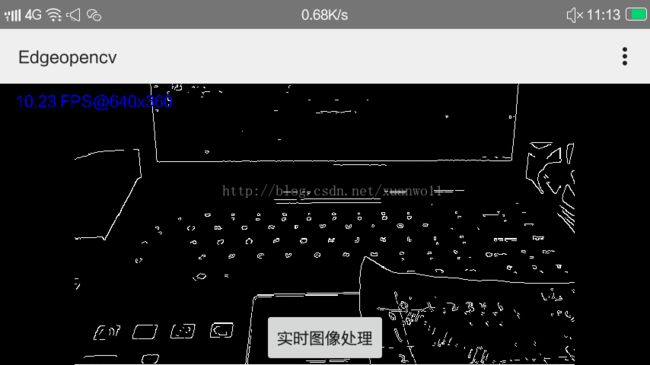

4. Results

Add the camera-related permissions in AndroidManifest.xml.
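A typical set of camera declarations is sketched below. It is modeled on what the OpenCV Android samples declare and is an assumption, not necessarily the exact entries used in this project; the elements go directly inside the manifest element, outside application.

```xml
<!-- Assumed camera declarations, modeled on the OpenCV Android samples -->
<uses-permission android:name="android.permission.CAMERA" />

<uses-feature android:name="android.hardware.camera" android:required="false" />
<uses-feature android:name="android.hardware.camera.autofocus" android:required="false" />
<uses-feature android:name="android.hardware.camera.front" android:required="false" />
<uses-feature android:name="android.hardware.camera.front.autofocus" android:required="false" />
```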

After building and running the app, the results are as follows:

Real-time edge detection

Real-time face detection
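Which effect is shown depends on mProcessMethod in MainActivity: the button steps it from 0 to 8 and back to 0, mode 0 falls through to the face-detection branch, and several effects only touch the central "inner window" of the frame. The plain-Java sketch below mirrors that arithmetic so it can be checked without a device. ProcessingModes, next, and innerWindow are hypothetical names for illustration, and the labels are taken from the comments in onCameraFrame.

```java
import java.util.Arrays;

// Hypothetical stand-alone model of the mode switching and inner-window geometry.
final class ProcessingModes {

    // Index = value of mProcessMethod; 0 is the default (else) branch in onCameraFrame.
    static final String[] NAMES = {
        "face detection", "grayscale", "Canny", "histogram", "Sobel",
        "sepia", "zoom", "pixelize", "posterize"
    };

    /** Same wrap-around as the button handler: 0, 1, ..., 8, 0, ... */
    static int next(int mode) {
        return (mode + 1) % NAMES.length;
    }

    /** Inner window as {left, top, width, height}, using the integer math from onCameraFrame. */
    static int[] innerWindow(int cols, int rows) {
        return new int[] { cols / 8, rows / 8, cols * 3 / 4, rows * 3 / 4 };
    }

    public static void main(String[] args) {
        int mode = 0;
        for (int click = 0; click < 10; click++) {
            System.out.println(mode + " -> " + NAMES[mode]);
            mode = next(mode);
        }
        // For a 640x480 frame this prints [80, 60, 480, 360]
        System.out.println(Arrays.toString(innerWindow(640, 480)));
    }
}
```

So the edge-detection screenshot corresponds to two button presses (mode 2), and the face-detection screenshot to the initial state or a full cycle back to mode 0.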