GCN

日萌社

Artificial Intelligence (AI): hands-on deep learning with Keras, PyTorch, MXNet, TensorFlow and PaddlePaddle (updated irregularly)

Download the code from GitHub:

https://github.com/dragen1860/Deep-Learning-with-TensorFlow-book

https://github.com/dragen1860/TensorFlow-2.x-Tutorials

config.py

import argparse

args = argparse.ArgumentParser()
args.add_argument('--dataset', default='cora')
args.add_argument('--model', default='gcn')  # 'gcn', 'gcn_cheby' or 'dense'
# type= makes command-line overrides parse as numbers instead of strings
args.add_argument('--learning_rate', type=float, default=0.01)
args.add_argument('--epochs', type=int, default=200)
args.add_argument('--hidden1', type=int, default=16)
args.add_argument('--dropout', type=float, default=0.5)
args.add_argument('--weight_decay', type=float, default=5e-4)
args.add_argument('--early_stopping', type=int, default=10)
args.add_argument('--max_degree', type=int, default=3)
args = args.parse_args()
print(args)

inits.py

import tensorflow as tf
import numpy as np


def uniform(shape, scale=0.05, name=None):
    """Uniform init."""
    initial = tf.random.uniform(shape, minval=-scale, maxval=scale, dtype=tf.float32)
    return tf.Variable(initial, name=name)


def glorot(shape, name=None):
    """Glorot & Bengio (AISTATS 2010) init."""
    init_range = np.sqrt(6.0 / (shape[0] + shape[1]))
    initial = tf.random.uniform(shape, minval=-init_range, maxval=init_range, dtype=tf.float32)
    return tf.Variable(initial, name=name)


def zeros(shape, name=None):
    """All zeros."""
    initial = tf.zeros(shape, dtype=tf.float32)
    return tf.Variable(initial, name=name)


def ones(shape, name=None):
    """All ones."""
    initial = tf.ones(shape, dtype=tf.float32)
    return tf.Variable(initial, name=name)

layers.py

from inits import *
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers
from config import args

# global unique layer ID dictionary for layer name assignment
_LAYER_UIDS = {}


def get_layer_uid(layer_name=''):
    """Helper function, assigns unique layer IDs."""
    if layer_name not in _LAYER_UIDS:
        _LAYER_UIDS[layer_name] = 1
        return 1
    else:
        _LAYER_UIDS[layer_name] += 1
        return _LAYER_UIDS[layer_name]


def sparse_dropout(x, rate, noise_shape):
    """
    Dropout for sparse tensors: each stored value is kept with probability
    1 - rate, and the survivors are rescaled by 1 / (1 - rate).
    """
    random_tensor = 1 - rate
    random_tensor += tf.random.uniform(noise_shape)
    # floor(1 - rate + U) is 1 when U >= rate and 0 otherwise
    dropout_mask = tf.cast(tf.floor(random_tensor), dtype=tf.bool)
    pre_out = tf.sparse.retain(x, dropout_mask)
    return pre_out * (1. / (1 - rate))


def dot(x, y, sparse=False):
    """
    Wrapper for tf.matmul (sparse vs dense).
    """
    if sparse:
        res = tf.sparse.sparse_dense_matmul(x, y)
    else:
        res = tf.matmul(x, y)
    return res


class Dense(layers.Layer):
    """Dense layer used by the MLP baseline (TF2/Keras version)."""

    def __init__(self, input_dim, output_dim, num_features_nonzero,
                 dropout=0.,
                 is_sparse_inputs=False,
                 activation=tf.nn.relu,
                 bias=False,
                 featureless=False, **kwargs):
        super(Dense, self).__init__(**kwargs)
        self.dropout = dropout
        self.activation = activation
        self.is_sparse_inputs = is_sparse_inputs
        self.featureless = featureless
        self.bias = bias
        # helper variable for sparse dropout
        self.num_features_nonzero = num_features_nonzero
        self.kernel = self.add_weight(name='weight', shape=[input_dim, output_dim],
                                      initializer='glorot_uniform')
        if self.bias:
            self.bias_var = self.add_weight(name='bias', shape=[output_dim],
                                            initializer='zeros')

    def call(self, inputs, training=None):
        x = inputs
        # dropout; in TF2 the second argument is the drop rate, not the keep probability
        if training is not False and self.is_sparse_inputs:
            x = sparse_dropout(x, self.dropout, self.num_features_nonzero)
        elif training is not False:
            x = tf.nn.dropout(x, self.dropout)
        # transform
        output = dot(x, self.kernel, sparse=self.is_sparse_inputs)
        # bias
        if self.bias:
            output += self.bias_var
        return self.activation(output)


class GraphConvolution(layers.Layer):
    """
    Graph convolution layer: activation(sum_i support_i @ (x @ W_i)).
    """

    def __init__(self, input_dim, output_dim, num_features_nonzero,
                 dropout=0.,
                 is_sparse_inputs=False,
                 activation=tf.nn.relu,
                 bias=False,
                 featureless=False, **kwargs):
        super(GraphConvolution, self).__init__(**kwargs)
        self.dropout = dropout
        self.activation = activation
        self.is_sparse_inputs = is_sparse_inputs
        self.featureless = featureless
        self.bias = bias
        self.num_features_nonzero = num_features_nonzero
        # one weight matrix per support; only a single one is created here
        self.weights_ = []
        for i in range(1):
            w = self.add_weight(name='weight' + str(i), shape=[input_dim, output_dim],
                                initializer='glorot_uniform')
            self.weights_.append(w)
        if self.bias:
            # kept separate from the boolean flag, so `if self.bias` stays valid in call()
            self.bias_var = self.add_weight(name='bias', shape=[output_dim],
                                            initializer='zeros')
        # for p in self.trainable_variables:
        #     print(p.name, p.shape)

    def call(self, inputs, training=None):
        x, support_ = inputs
        # dropout (applied unless training is explicitly False)
        if training is not False and self.is_sparse_inputs:
            x = sparse_dropout(x, self.dropout, self.num_features_nonzero)
        elif training is not False:
            x = tf.nn.dropout(x, self.dropout)
        # convolve: support_i @ (x @ W_i), summed over all supports
        supports = list()
        for i in range(len(support_)):
            if not self.featureless:  # if it has features x
                pre_sup = dot(x, self.weights_[i], sparse=self.is_sparse_inputs)
            else:
                pre_sup = self.weights_[i]
            support = dot(support_[i], pre_sup, sparse=True)
            supports.append(support)
        output = tf.add_n(supports)
        # bias
        if self.bias:
            output += self.bias_var
        return self.activation(output)
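GraphConvolution.call first multiplies the node features by a weight matrix and then by the matching support matrix, summing over supports: activation(sum_i support_i (X W_i)). The sketch below reproduces that arithmetic with dense NumPy arrays. The 3-node support matrix, the feature sizes and the helper name `graph_conv` are illustrative assumptions and do not come from the repository.

```python
import numpy as np


def graph_conv(x, supports, weights, activation=lambda h: np.maximum(h, 0.0)):
    """Dense sketch of GraphConvolution.call: activation(sum_i A_i @ (X @ W_i))."""
    out = sum(a @ (x @ w) for a, w in zip(supports, weights))
    return activation(out)


rng = np.random.default_rng(0)
x = rng.random((3, 4))               # 3 nodes, 4 input features
a_hat = np.array([[0.5, 0.5, 0.0],   # hypothetical normalized support matrix
                  [0.5, 0.0, 0.5],
                  [0.0, 0.5, 0.5]])
w = rng.standard_normal((4, 2))      # input_dim=4, output_dim=2
h = graph_conv(x, [a_hat], [w])
print(h.shape)  # (3, 2)
```

Computing `X @ W` before multiplying by the support matrix, as the layer does, is the cheaper order when the hidden size is much smaller than the input feature size (16 versus 1433 on Cora).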

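sparse_dropout builds its keep mask as floor(1 - rate + U), where U is uniform on [0, 1). That value is 1 exactly when U >= rate, so each stored entry survives with probability 1 - rate. Dividing by (1 - rate) then keeps the expected value unchanged (inverted dropout). Below is a NumPy sketch that works on the `values` array of a sparse matrix, using boolean indexing in place of tf.sparse.retain. The helper name is hypothetical.

```python
import numpy as np


def sparse_dropout_values(values, rate, rng):
    """Mirror of layers.sparse_dropout applied to the stored values only.

    floor(1 - rate + U) with U ~ Uniform[0, 1) equals 1 iff U >= rate,
    so each value is kept with probability 1 - rate and then rescaled.
    """
    keep = np.floor(1.0 - rate + rng.random(values.shape)).astype(bool)
    return values[keep] / (1.0 - rate), keep


rng = np.random.default_rng(42)
values = np.ones(100_000)
kept, keep = sparse_dropout_values(values, rate=0.5, rng=rng)
print(keep.mean())               # close to 0.5
print(kept.sum() / values.size)  # close to 1.0: the expectation is preserved
```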
metrics.py

import tensorflow as tf


def masked_softmax_cross_entropy(preds, labels, mask):
    """
    Softmax cross-entropy loss with masking.
    """
    loss = tf.nn.softmax_cross_entropy_with_logits(logits=preds, labels=labels)
    mask = tf.cast(mask, dtype=tf.float32)
    # rescale so that the mean over all nodes equals the mean over the masked nodes
    mask /= tf.reduce_mean(mask)
    loss *= mask
    return tf.reduce_mean(loss)


def masked_accuracy(preds, labels, mask):
    """
    Accuracy with masking.
    """
    correct_prediction = tf.equal(tf.argmax(preds, 1), tf.argmax(labels, 1))
    accuracy_all = tf.cast(correct_prediction, tf.float32)
    mask = tf.cast(mask, dtype=tf.float32)
    mask /= tf.reduce_mean(mask)
    accuracy_all *= mask
    return tf.reduce_mean(accuracy_all)
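Both metrics divide the mask by its mean before multiplying. As a result, a plain mean over all nodes equals the mean over only the masked nodes. Here is a small NumPy check of that identity with five hypothetical per-node losses, two of them in the training mask. The helper name `masked_mean` is illustrative, and float64 is used here where the TF code uses float32.

```python
import numpy as np


def masked_mean(per_node, mask):
    """Same normalization as masked_softmax_cross_entropy / masked_accuracy."""
    mask = mask.astype(np.float64)
    mask /= mask.mean()  # 2 of 5 nodes selected -> each selected weight becomes 2.5
    return float(np.mean(per_node * mask))


per_node_loss = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
train_mask = np.array([True, False, True, False, False])
result = masked_mean(per_node_loss, train_mask)
print(result)  # 2.0, the mean of the masked losses 1.0 and 3.0
```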

models.py

import tensorflow as tf
from tensorflow import keras
from layers import *
from metrics import *
from config import args


class MLP(keras.Model):
    """Two-layer MLP baseline that ignores the graph; same interface as GCN."""

    def __init__(self, input_dim, output_dim, num_features_nonzero, **kwargs):
        super(MLP, self).__init__(**kwargs)
        self.input_dim = input_dim
        self.output_dim = output_dim
        self.layers_ = []
        self.layers_.append(Dense(input_dim=self.input_dim,
                                  output_dim=args.hidden1,
                                  num_features_nonzero=num_features_nonzero,
                                  activation=tf.nn.relu,
                                  dropout=args.dropout,
                                  is_sparse_inputs=True))
        self.layers_.append(Dense(input_dim=args.hidden1,
                                  output_dim=self.output_dim,
                                  num_features_nonzero=num_features_nonzero,
                                  activation=lambda x: x,
                                  dropout=args.dropout))

    def call(self, inputs, training=None):
        x, label, mask, support = inputs  # support is not used by the MLP
        for layer in self.layers_:
            x = layer(x, training=training)
        output = x
        # Weight decay loss (first layer only)
        loss = tf.zeros([])
        for var in self.layers_[0].trainable_variables:
            loss += args.weight_decay * tf.nn.l2_loss(var)
        # Cross entropy error
        loss += masked_softmax_cross_entropy(output, label, mask)
        acc = masked_accuracy(output, label, mask)
        return loss, acc


class GCN(keras.Model):

    def __init__(self, input_dim, output_dim, num_features_nonzero, **kwargs):
        super(GCN, self).__init__(**kwargs)
        self.input_dim = input_dim  # 1433
        self.output_dim = output_dim
        print('input dim:', input_dim)
        print('output dim:', output_dim)
        print('num_features_nonzero:', num_features_nonzero)
        self.layers_ = []
        self.layers_.append(GraphConvolution(input_dim=self.input_dim,  # 1433
                                             output_dim=args.hidden1,  # 16
                                             num_features_nonzero=num_features_nonzero,
                                             activation=tf.nn.relu,
                                             dropout=args.dropout,
                                             is_sparse_inputs=True))
        self.layers_.append(GraphConvolution(input_dim=args.hidden1,  # 16
                                             output_dim=self.output_dim,  # 7
                                             num_features_nonzero=num_features_nonzero,
                                             activation=lambda x: x,
                                             dropout=args.dropout))
        for p in self.trainable_variables:
            print(p.name, p.shape)

    def call(self, inputs, training=None):
        """
        :param inputs: tuple (features, labels, mask, support)
        :param training: dropout is applied unless training is False
        :return: (loss, accuracy) over the nodes selected by mask
        """
        x, label, mask, support = inputs
        outputs = [x]
        for layer in self.layers_:
            hidden = layer((outputs[-1], support), training=training)
            outputs.append(hidden)
        output = outputs[-1]
        # Weight decay loss (first layer only)
        loss = tf.zeros([])
        for var in self.layers_[0].trainable_variables:
            loss += args.weight_decay * tf.nn.l2_loss(var)
        # Cross entropy error
        loss += masked_softmax_cross_entropy(output, label, mask)
        acc = masked_accuracy(output, label, mask)
        return loss, acc

    def predict_proba(self, x, support):
        """Softmax class probabilities for every node, with dropout disabled."""
        h = x
        for layer in self.layers_:
            h = layer((h, support), training=False)
        return tf.nn.softmax(h)
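With dropout disabled, the two GraphConvolution layers in GCN compute Z = softmax(Â ReLU(Â X W0) W1). Here Â = D̃^-1/2 (A + I) D̃^-1/2 is what preprocess_adj returns, and in the Keras model the softmax is applied inside masked_softmax_cross_entropy. The dense NumPy sketch below assumes a hypothetical 4-node path graph, random features and weights, and 3 classes. None of these names belong to the repository.

```python
import numpy as np


def normalized_adjacency(adj):
    """A_hat = D~^-1/2 (A + I) D~^-1/2, the dense analogue of preprocess_adj."""
    a_tilde = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_tilde.sum(axis=1))
    return a_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def gcn_forward(adj, x, w0, w1):
    """Two-layer GCN forward pass without dropout (as with training=False)."""
    a_hat = normalized_adjacency(adj)
    h = np.maximum(a_hat @ x @ w0, 0.0)  # first layer, ReLU
    logits = a_hat @ h @ w1              # second layer, identity activation
    return softmax(logits)


rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)  # hypothetical path graph
x = rng.random((4, 5))                       # 5 features per node
w0 = rng.standard_normal((5, 16))            # hidden1 = 16
w1 = rng.standard_normal((16, 3))            # 3 classes
probs = gcn_forward(adj, x, w0, w1)
print(probs.shape)        # (4, 3)
print(probs.sum(axis=1))  # each row sums to 1 (up to rounding)
```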

train.py

import time

import tensorflow as tf

from tensorflow.keras import optimizers

from utils import *

from models import GCN, MLP

from config import args

import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

print('tf version:', tf.__version__)

assert tf.__version__.startswith('2.')

# set random seed

seed = 123

np.random.seed(seed)

tf.random.set_seed(seed)

# load data

adj, features, y_train, y_val, y_test, train_mask, val_mask, test_mask = load_data(args.dataset)

print('adj:', adj.shape)

print('features:', features.shape)

print('y:', y_train.shape, y_val.shape, y_test.shape)

print('mask:', train_mask.shape, val_mask.shape, test_mask.shape)

# D^-1@X

features = preprocess_features(features) # [49216, 2], [49216], [2708, 1433]

print('features coordinates::', features[0].shape)

print('features data::', features[1].shape)

print('features shape::', features[2])

if args.model == 'gcn':

# D^-0.5 A D^-0.5

support = [preprocess_adj(adj)]

num_supports = 1

model_func = GCN

elif args.model == 'gcn_cheby':

support = chebyshev_polynomials(adj, args.max_degree)

num_supports = 1 + args.max_degree

model_func = GCN

elif args.model == 'dense':

support = [preprocess_adj(adj)] # Not used

num_supports = 1

model_func = MLP

else:

raise ValueError('Invalid argument for model: ' + str(args.model))

# Create model

model = GCN(input_dim=features[2][1], output_dim=y_train.shape[1], num_features_nonzero=features[1].shape) # [1433]

train_label = tf.convert_to_tensor(y_train)

train_mask = tf.convert_to_tensor(train_mask)

val_label = tf.convert_to_tensor(y_val)

val_mask = tf.convert_to_tensor(val_mask)

test_label = tf.convert_to_tensor(y_test)

test_mask = tf.convert_to_tensor(test_mask)

features = tf.SparseTensor(*features)

support = [tf.cast(tf.SparseTensor(*support[0]), dtype=tf.float32)]

num_features_nonzero = features.values.shape

dropout = args.dropout

optimizer = optimizers.Adam(lr=1e-2)

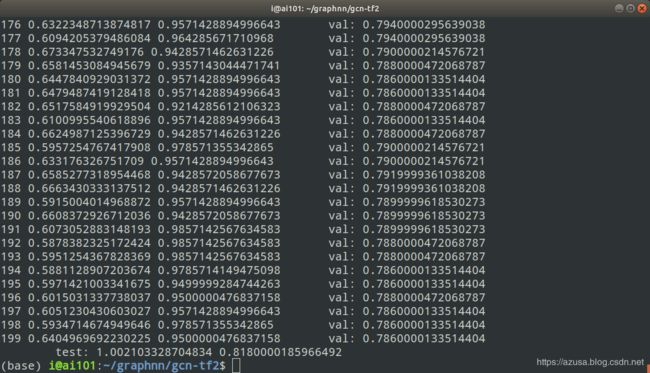

for epoch in range(args.epochs):

with tf.GradientTape() as tape:

loss, acc = model((features, train_label, train_mask,support))

grads = tape.gradient(loss, model.trainable_variables)

optimizer.apply_gradients(zip(grads, model.trainable_variables))

_, val_acc = model((features, val_label, val_mask, support), training=False)

if epoch % 20 == 0:

print(epoch, float(loss), float(acc), '\tval:', float(val_acc))

test_loss, test_acc = model((features, test_label, test_mask, support), training=False)

print('\ttest:', float(test_loss), float(test_acc))utils.py

import sys
import pickle as pkl

import numpy as np
import networkx as nx
import scipy.sparse as sp
from scipy.sparse.linalg import eigsh


def parse_index_file(filename):
    """
    Parse index file.
    """
    index = []
    with open(filename) as f:
        for line in f:
            index.append(int(line.strip()))
    return index


def sample_mask(idx, l):
    """
    Create mask.
    """
    mask = np.zeros(l)
    mask[idx] = 1
    return np.array(mask, dtype=bool)


def load_data(dataset_str):
    """
    Loads input data from gcn/data directory

    ind.dataset_str.x => the feature vectors of the training instances as scipy.sparse.csr.csr_matrix object;
    ind.dataset_str.tx => the feature vectors of the test instances as scipy.sparse.csr.csr_matrix object;
    ind.dataset_str.allx => the feature vectors of both labeled and unlabeled training instances
        (a superset of ind.dataset_str.x) as scipy.sparse.csr.csr_matrix object;
    ind.dataset_str.y => the one-hot labels of the labeled training instances as numpy.ndarray object;
    ind.dataset_str.ty => the one-hot labels of the test instances as numpy.ndarray object;
    ind.dataset_str.ally => the labels for instances in ind.dataset_str.allx as numpy.ndarray object;
    ind.dataset_str.graph => a dict in the format {index: [index_of_neighbor_nodes]} as collections.defaultdict
        object;
    ind.dataset_str.test.index => the indices of test instances in graph, for the inductive setting as list object.

    All objects above must be saved using python pickle module.

    :param dataset_str: Dataset name
    :return: All data input files loaded (as well as the training/test data).
    """
    names = ['x', 'y', 'tx', 'ty', 'allx', 'ally', 'graph']
    objects = []
    for i in range(len(names)):
        with open("data/ind.{}.{}".format(dataset_str, names[i]), 'rb') as f:
            if sys.version_info > (3, 0):
                objects.append(pkl.load(f, encoding='latin1'))
            else:
                objects.append(pkl.load(f))

    x, y, tx, ty, allx, ally, graph = tuple(objects)
    test_idx_reorder = parse_index_file("data/ind.{}.test.index".format(dataset_str))
    test_idx_range = np.sort(test_idx_reorder)

    if dataset_str == 'citeseer':
        # Fix citeseer dataset (there are some isolated nodes in the graph)
        # Find isolated nodes, add them as zero-vecs into the right position
        test_idx_range_full = range(min(test_idx_reorder), max(test_idx_reorder) + 1)
        tx_extended = sp.lil_matrix((len(test_idx_range_full), x.shape[1]))
        tx_extended[test_idx_range - min(test_idx_range), :] = tx
        tx = tx_extended
        ty_extended = np.zeros((len(test_idx_range_full), y.shape[1]))
        ty_extended[test_idx_range - min(test_idx_range), :] = ty
        ty = ty_extended

    features = sp.vstack((allx, tx)).tolil()
    features[test_idx_reorder, :] = features[test_idx_range, :]
    adj = nx.adjacency_matrix(nx.from_dict_of_lists(graph))

    labels = np.vstack((ally, ty))
    labels[test_idx_reorder, :] = labels[test_idx_range, :]

    idx_test = test_idx_range.tolist()
    idx_train = range(len(y))
    idx_val = range(len(y), len(y) + 500)

    train_mask = sample_mask(idx_train, labels.shape[0])
    val_mask = sample_mask(idx_val, labels.shape[0])
    test_mask = sample_mask(idx_test, labels.shape[0])

    y_train = np.zeros(labels.shape)
    y_val = np.zeros(labels.shape)
    y_test = np.zeros(labels.shape)
    y_train[train_mask, :] = labels[train_mask, :]
    y_val[val_mask, :] = labels[val_mask, :]
    y_test[test_mask, :] = labels[test_mask, :]

    return adj, features, y_train, y_val, y_test, train_mask, val_mask, test_mask


def sparse_to_tuple(sparse_mx):
    """
    Convert sparse matrix to tuple representation.
    """
    def to_tuple(mx):
        if not sp.isspmatrix_coo(mx):
            mx = mx.tocoo()
        coords = np.vstack((mx.row, mx.col)).transpose()
        values = mx.data
        shape = mx.shape
        return coords, values, shape

    if isinstance(sparse_mx, list):
        for i in range(len(sparse_mx)):
            sparse_mx[i] = to_tuple(sparse_mx[i])
    else:
        sparse_mx = to_tuple(sparse_mx)

    return sparse_mx


def preprocess_features(features):
    """
    Row-normalize feature matrix and convert to tuple representation
    """
    rowsum = np.array(features.sum(1))  # sum of each row, [2708, 1]
    r_inv = np.power(rowsum, -1).flatten()  # 1/rowsum, [2708]
    r_inv[np.isinf(r_inv)] = 0.  # all-zero rows stay zero instead of becoming inf
    r_mat_inv = sp.diags(r_inv)  # sparse diagonal matrix, [2708, 2708]
    features = r_mat_inv.dot(features)  # D^-1: [2708, 2708] @ X: [2708, 1433]
    return sparse_to_tuple(features)  # (coordinates, values, shape)


def normalize_adj(adj):
    """Symmetrically normalize adjacency matrix."""
    adj = sp.coo_matrix(adj)
    rowsum = np.array(adj.sum(1))  # node degrees, the diagonal of D
    d_inv_sqrt = np.power(rowsum, -0.5).flatten()  # D^-0.5
    d_inv_sqrt[np.isinf(d_inv_sqrt)] = 0.
    d_mat_inv_sqrt = sp.diags(d_inv_sqrt)  # D^-0.5
    return adj.dot(d_mat_inv_sqrt).transpose().dot(d_mat_inv_sqrt).tocoo()  # D^-0.5 A D^-0.5


def preprocess_adj(adj):
    """Preprocessing of adjacency matrix for simple GCN model and conversion to tuple representation."""
    # add self-loops before normalizing: D~^-0.5 (A + I) D~^-0.5
    adj_normalized = normalize_adj(adj + sp.eye(adj.shape[0]))
    return sparse_to_tuple(adj_normalized)


def chebyshev_polynomials(adj, k):
    """
    Calculate Chebyshev polynomials up to order k. Return a list of sparse matrices (tuple representation).
    """
    print("Calculating Chebyshev polynomials up to order {}...".format(k))

    adj_normalized = normalize_adj(adj)
    laplacian = sp.eye(adj.shape[0]) - adj_normalized
    largest_eigval, _ = eigsh(laplacian, 1, which='LM')
    # rescale the spectrum to [-1, 1]
    scaled_laplacian = (2. / largest_eigval[0]) * laplacian - sp.eye(adj.shape[0])

    t_k = list()
    t_k.append(sp.eye(adj.shape[0]))
    t_k.append(scaled_laplacian)

    def chebyshev_recurrence(t_k_minus_one, t_k_minus_two, scaled_lap):
        # T_k = 2 L~ T_{k-1} - T_{k-2}
        s_lap = sp.csr_matrix(scaled_lap, copy=True)
        return 2 * s_lap.dot(t_k_minus_one) - t_k_minus_two

    for i in range(2, k + 1):
        t_k.append(chebyshev_recurrence(t_k[-1], t_k[-2], scaled_laplacian))

    return sparse_to_tuple(t_k)
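chebyshev_polynomials rescales the normalized Laplacian to L~ = 2L / lambda_max - I and builds T_0 = I, T_1 = L~ and T_k = 2 L~ T_{k-1} - T_{k-2}. The dense NumPy sketch below follows the same steps. It swaps in `np.linalg.eigvalsh` for `eigsh`, assumes a hypothetical 4-node path graph with no isolated nodes, and uses the illustrative name `chebyshev_basis`.

```python
import numpy as np


def chebyshev_basis(adj, k):
    """Dense sketch of utils.chebyshev_polynomials, returning T_0 .. T_k of L~."""
    n = adj.shape[0]
    d_inv_sqrt = 1.0 / np.sqrt(adj.sum(axis=1))  # assumes no isolated nodes
    laplacian = np.eye(n) - adj * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    largest_eigval = np.linalg.eigvalsh(laplacian).max()
    scaled = (2.0 / largest_eigval) * laplacian - np.eye(n)  # spectrum in [-1, 1]
    t_k = [np.eye(n), scaled]
    for _ in range(2, k + 1):
        t_k.append(2 * scaled @ t_k[-1] - t_k[-2])  # Chebyshev recurrence
    return t_k, scaled


adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
t_k, scaled = chebyshev_basis(adj, k=3)
print(len(t_k))  # 4, i.e. 1 + max_degree supports for gcn_cheby
```

The recurrence gives the same matrices as applying the scalar polynomial T_k to each eigenvalue of L~. Building them this way needs only sparse matrix products and no eigendecomposition beyond the single largest eigenvalue.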