OpenStack on Red Hat: Deployment and Installation in Detail

一、部署软件环境

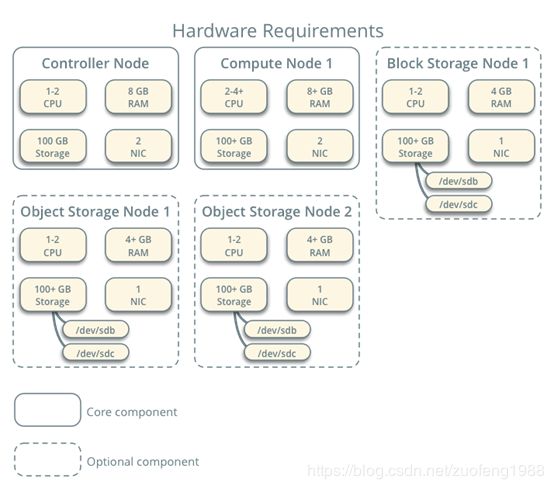

1、硬件最低需求

【说明】[fz1]

1、此次部署搭建采用三台物理节点手搭建社区OpenStack Queen+RedHat7环境

2、

1、操作系统:

RedHat7 / Centos7

cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

2. Kernel version:

[root@controller horizon]# uname -a

Linux controller 3.10.0-957.21.3.el7.x86_64 #1 SMP Tue Jun 18 16:35:19 UTC 2019 x86_64 x86_64 x86_64 GNU/Linux

3. Nodes and NIC configuration

Controller node

[root@controller ~]# ip a

1: lo:

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno1:

link/ether 40:a8:f0:27:b6:fc brd ff:ff:ff:ff:ff:ff

inet 10.8.52.202/24 brd 10.8.52.255 scope global eno1

valid_lft forever preferred_lft forever

inet6 fe80::42a8:f0ff:fe27:b6fc/64 scope link

valid_lft forever preferred_lft forever

3: eno2:

link/ether 40:a8:f0:27:b6:fd brd ff:ff:ff:ff:ff:ff

4: eno3:

link/ether 40:a8:f0:27:b6:fe brd ff:ff:ff:ff:ff:ff

5: eno4:

link/ether 40:a8:f0:27:b6:ff brd ff:ff:ff:ff:ff:ff

6: virbr0:

link/ether 52:54:00:84:c6:68 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

7: virbr0-nic:

link/ether 52:54:00:84:c6:68 brd ff:ff:ff:ff:ff:ff

Compute node

[root@compute ~]# ip a

1: lo:

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno1:

link/ether 40:a8:f0:27:b6:fc brd ff:ff:ff:ff:ff:ff

inet 10.8.52.203/24 brd 10.8.52.255 scope global eno1

valid_lft forever preferred_lft forever

inet6 fe80::42a8:f0ff:fe27:b6fc/64 scope link

valid_lft forever preferred_lft forever

3: eno2:

link/ether 40:a8:f0:27:b6:fd brd ff:ff:ff:ff:ff:ff

4: eno3:

link/ether 40:a8:f0:27:b6:fe brd ff:ff:ff:ff:ff:ff

5: eno4:

link/ether 40:a8:f0:27:b6:ff brd ff:ff:ff:ff:ff:ff

6: virbr0:

link/ether 52:54:00:84:c6:68 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

7: virbr0-nic:

link/ether 52:54:00:84:c6:68 brd ff:ff:ff:ff:ff:ff

Storage (Cinder) node

1: lo:

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno1:

link/ether 40:a8:f0:27:b6:fc brd ff:ff:ff:ff:ff:ff

inet 10.8.52.204/24 brd 10.8.52.255 scope global eno1

valid_lft forever preferred_lft forever

inet6 fe80::42a8:f0ff:fe27:b6fc/64 scope link

valid_lft forever preferred_lft forever

3: eno2:

link/ether 40:a8:f0:27:b6:fd brd ff:ff:ff:ff:ff:ff

4: eno3:

link/ether 40:a8:f0:27:b6:fe brd ff:ff:ff:ff:ff:ff

5: eno4:

link/ether 40:a8:f0:27:b6:ff brd ff:ff:ff:ff:ff:ff

6: virbr0:

link/ether 52:54:00:84:c6:68 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

7: virbr0-nic:

link/ether 52:54:00:84:c6:68 brd ff:ff:ff:ff:ff:ff

DHCP address pool: 206, 207, 208.

Password table:

root root

admin 123456

demo 123456

glance 123456

nova 123456

placement 123456

neutron 123456

Domain Project User Role

Example server demo user

Domain demo

Admin admin

Service glance

Controller compute cinder

Original: 10.71.11.12 10.71.11.13 10.71.11.14

Current: 10.8.52.202 10.8.52.203 10.8.52.204

二.OpenStack概述

OpenStack项目是一个开源云计算平台,支持所有类型的云环境。该项目旨在实现简单,大规模的可扩展性和丰富的功能。

OpenStack通过各种补充服务提供基础架构即服务(IaaS)解决方案。每项服务都提供了一个应用程序编程接口(API),以促进这种集成。

本文涵盖了使用适用于具有足够Linux经验的OpenStack新用户的功能性示例体系结构,逐步部署主要OpenStack服务。只用于学习OpenStack最小化环境。

三、OpenStack架构总览

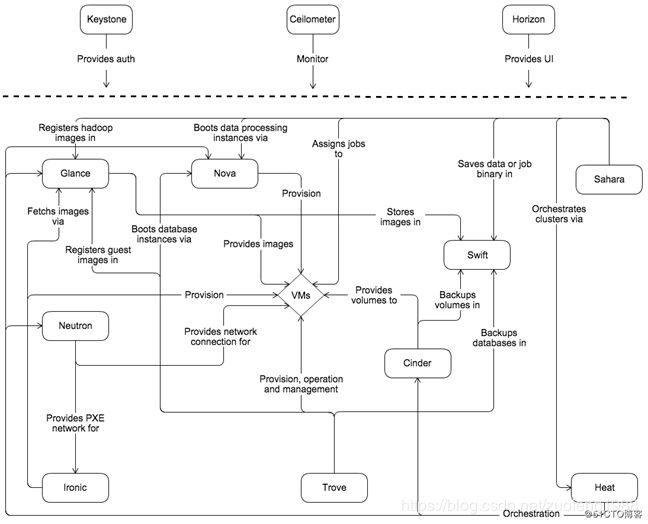

1.概念性架构

下图显示了OpenStack各服务之间的关系:

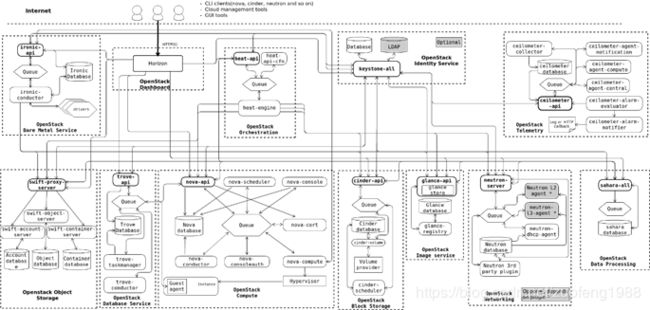

2.逻辑体系结构

下图显示了OpenStack云中最常见但不是唯一可能的体系结构:

【说明】这张图非常重要!建议打印出来。

对于设计,部署和配置OpenStack,学习者必须了解逻辑体系结构。

如概念架构所示,OpenStack由几个独立的部分组成,称为OpenStack服务。所有服务都通过keystone服务进行身份验证。

各个服务通过公共API相互交互,除非需要特权管理员命令。

在内部,OpenStack服务由多个进程组成。所有服务都至少有一个API进程,它监听API请求,预处理它们并将它们传递给服务的其他部分。除身份服务外,实际工作由不同的流程完成。

对于一个服务的进程之间的通信,使用AMQP消息代理。该服务的状态存储在数据库中。部署和配置OpenStack云时,您可以选择多种消息代理和数据库解决方案,例如RabbitMQ,MySQL,MariaDB和SQLite。

用户可以通过Horizon Dashboard实现的基于Web的用户界面,通过命令行客户端以及通过浏览器插件或curl等工具发布API请求来访问OpenStack。对于应用程序,有几个SDK可用。最终,所有这些访问方法都会对各种OpenStack服务发出REST API调用。

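IV. Deploying OpenStack Component Services

Prerequisites (run the following commands on all nodes)

1. Configure the NIC IP address on each node

(omitted)

2. Set the hostname

hostnamectl set-hostname <hostname>

bash ## start a new shell so that the new hostname shows up immediately

3. Configure name resolution: edit /etc/hosts and add the following entries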

10.8.52.202 controller

10.8.52.203 compute

10.8.52.204 cinder
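Because the same three entries have to be added on every node, it can help to make the edit repeatable. The following is a minimal sketch, not part of the original notes: the helper name `add_host_entry` is hypothetical, and the demo writes to a scratch file rather than to /etc/hosts.

```shell
#!/usr/bin/env bash
# Append "IP NAME" to a hosts-style file unless NAME is already mapped there.
# add_host_entry is a hypothetical helper name used only for this sketch.
add_host_entry() {
    local hosts_file="$1" ip="$2" name="$3"
    # NAME must appear as a whole word on a non-comment line to count as present.
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$hosts_file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Dry run against a scratch file (assumption); pass /etc/hosts as root when ready.
scratch="$(mktemp)"
add_host_entry "$scratch" 10.8.52.202 controller
add_host_entry "$scratch" 10.8.52.203 compute
add_host_entry "$scratch" 10.8.52.204 cinder
add_host_entry "$scratch" 10.8.52.204 cinder   # repeated call adds nothing
cat "$scratch"
rm -f "$scratch"
```

Running the helper a second time leaves the file unchanged, so it is safe to re-run on nodes that were already configured.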

4. Verify network connectivity

Run on the controller node

[root@controller ~]# ping -c 4 openstack.org

PING openstack.org (162.242.140.107) 56(84) bytes of data.

64 bytes from 162.242.140.107 (162.242.140.107): icmp_seq=1 ttl=46 time=248 ms

64 bytes from 162.242.140.107 (162.242.140.107): icmp_seq=2 ttl=46 time=248 ms

64 bytes from 162.242.140.107 (162.242.140.107): icmp_seq=3 ttl=46 time=248 ms

64 bytes from 162.242.140.107 (162.242.140.107): icmp_seq=4 ttl=46 time=248 ms

[root@controller ~]# ping -c 4 compute

PING compute (10.71.11.13) 56(84) bytes of data.

64 bytes from compute (10.71.11.13): icmp_seq=1 ttl=64 time=0.395 ms

64 bytes from compute (10.71.11.13): icmp_seq=2 ttl=64 time=0.214 ms

Run on the compute node

[root@compute ~]# ping -c 4 openstack.org

PING openstack.org (162.242.140.107) 56(84) bytes of data.

64 bytes from 162.242.140.107 (162.242.140.107): icmp_seq=1 ttl=46 time=249 ms

64 bytes from 162.242.140.107 (162.242.140.107): icmp_seq=2 ttl=46 time=248 ms

[root@compute ~]# ping -c 4 controller

PING controller (10.71.11.12) 56(84) bytes of data.

64 bytes from controller (10.71.11.12): icmp_seq=1 ttl=64 time=0.237 ms

5. Switch to the Aliyun yum mirror

Back up

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

[Changed]

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup201907231434

Download

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

Or

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

6. Install the NTP time service (all nodes). Synchronization may take a little while.

controller node

Install the package

yum install chrony -y

Edit /etc/chrony.conf and configure the time source for the synchronization server

server controlelr iburst

[Changed] server controller iburst ## all nodes synchronize time from the controller node

allow 10.71.11.0/24

[Changed] allow 10.8.52.0/24 ## subnet allowed to synchronize time

Enable the NTP service at boot

systemctl enable chronyd.service

systemctl start chronyd.service

Other nodes

Install the package

yum install chrony -y

Point every node at the controller for time synchronization

vi /etc/chrony.conf

server controlelr iburst

[Changed] server controller iburst

Restart the NTP service

systemctl restart chronyd.service

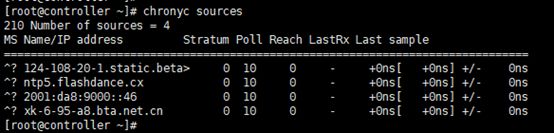

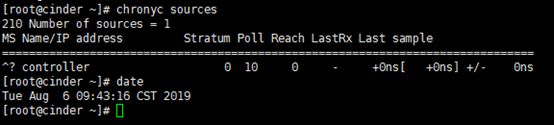

Verify the time synchronization service

Run on the controller node

[root@controller ~]# chronyc sources

210 Number of sources = 4

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* time4.aliyun.com 2 10 377 1015 +115us[ +142us] +/- 14ms

^- ntp8.flashdance.cx 2 10 347 428 +27ms[ +27ms] +/- 259ms

^- 85.199.214.101 1 10 377 988 +38ms[ +38ms] +/- 202ms

^- ntp7.flashdance.cx 2 10 367 836 +35ms[ +35ms] +/- 247ms

In the MS column, * marks the server that the NTP service is currently synchronized to.
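To check the `*` marker without reading the table by eye, the output can be filtered. This is a small sketch under the assumption that the output has the column layout shown in these notes; `current_sync_source` is a hypothetical helper name, and the sample lines are copied from the controller output above.

```shell
#!/usr/bin/env bash
# Read `chronyc sources` output on stdin and print the name of the source
# whose state character (second character of the MS column) is "*".
# current_sync_source is a hypothetical helper name for this sketch.
current_sync_source() {
    awk 'substr($1, 2, 1) == "*" { print $2 }'
}

# Typical use on a node (assumes chronyd is running):
#   chronyc sources | current_sync_source
current_sync_source <<'EOF'
210 Number of sources = 4
MS Name/IP address Stratum Poll Reach LastRx Last sample
^* time4.aliyun.com 2 10 377 1015 +115us[ +142us] +/- 14ms
^- ntp8.flashdance.cx 2 10 347 428 +27ms[ +27ms] +/- 259ms
EOF
```

If the other nodes are meant to follow the controller, the name printed there would be expected to be controller rather than a public server.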

Run on the other nodes

[root@compute ~]# chronyc sources

210 Number of sources = 4

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* leontp.ccgs.wa.edu.au 1 10 377 752 +49ms[ +49ms] +/- 121ms

^+ ntp5.flashdance.cx 2 10 373 1155 +15ms[ +16ms] +/- 258ms

^+ 85.199.214.101 1 10 377 46m -22ms[ -21ms] +/- 164ms

^+ ntp8.flashdance.cx 2 10 333 900 -6333us[-5976us] +/- 257ms

[root@cinder ~]# chronyc sources

210 Number of sources = 4

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^+ 61-216-153-104.HINET-IP.> 3 10 377 748 -3373us[-3621us] +/- 87ms

^- 85.199.214.100 1 10 377 876 +37ms[ +36ms] +/- 191ms

^* 61-216-153-106.HINET-IP.> 3 10 377 869 +774us[ +527us] +/- 95ms

^- makaki.miuku.net 2 10 377 384 +30ms[ +30ms] +/- 254ms

Note: clock drift is a frequent problem in day-to-day operations and can lead to split-brain in clustered services

[Problem] 506 Cannot talk to daemon

[Answer]

[Problem] Fatal error : Could not parse server directive at line 19 in file /etc/chrony.conf

[Answer] The controller node does not need a server directive!

[Problem] Jul 23 18:11:29 compute chronyd[50703]: chronyd version 3.2 starting (+CMDMON +NTP +REFCLOCK +RTC +PRIVDROP +...EBUG)

Jul 23 18:11:29 compute chronyd[50703]: Could not change ownership of /var/run/chrony : Operation not permitted

Jul 23 18:11:29 compute chronyd[50703]: Could not access /var/run/chrony : No such file or directory

[Answer]

OpenStack服务安装、配置

说明:无特殊说明,以下操作在所有节点上执行

1.下载安装openstack软件仓库(queens版本)

yum install centos-release-openstack-queens -y

【改】

yum install

https://repos.fedorapeople.org/repos/openstack/openstack-queens/rdo-release-queens-0.noarch.rpm

【改】

yum install -y rdo-release-queens-0.noarch.rpm

yum install -y rdo-release-queens-1.noarch.rpm

【问题】Cannot open: https://repos.fedorapeople.org/repos/openstack/openstack-queens/rdo-release-queens-1.noarch.rpm. Skipping

【答】

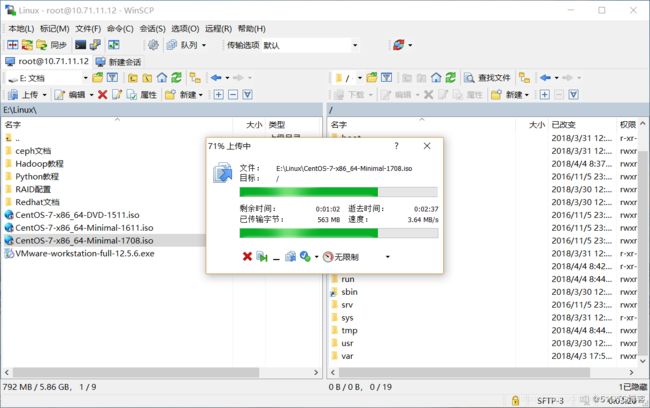

下载到本地windows,然后再rz到linux上 yum install安装。

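[Symptom] This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

[Problem]

The yum command is being used on an unregistered Red Hat system (version 7.1)

The Red Hat yum repositories require a paid subscription

[Answer]

Use the CentOS yum repositories instead.

Steps

1. Remove the yum that ships with Red Hat

2. Find the corresponding CentOS yum packages and install them with rpm; the dependencies have to be resolved during installation, which is somewhat tedious

3. Edit the repo file so that it points to a mirror server in China

Reference link

https://blog.csdn.net/ztx114/article/details/85149629 (very detailed)

I. Remove the original yum

II. Download and install the CentOS 7 yum and its dependencies

Install

1. Install yum-metadata-parser

2. Install yum

3. Install python-urlgrabber

4. Install rpm (version >= 4.11.3-22)

5. Install yum-plugin-fastestmirror

6. Install yum-updateonboot and yum-utils

III. Configure the yum repositories

IV. References: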

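2. Update the packages on all nodes

yum upgrade

[Problem] Why run an update at all?

According to one answer found online, a newly deployed system needs yum update/upgrade because yum will not resolve certain dependency conflicts for you (whereas apt will).

For example, suppose kernel-2.6.32.500 is installed and a package you want to install depends on kernel-2.6.32.600. Per that answer, yum reports the unmet dependency and exits without upgrading the kernel package. (This is only an illustration; in practice almost no package depends directly on the kernel package.) Running yum update/upgrade once, so that every package on the system is current, satisfies the dependencies of the packages about to be deployed.

On Debian/Ubuntu systems, apt handles this case automatically and upgrades the packages that are depended on.

In any case, upgrading all packages on a newly deployed server is recommended in order to get the latest security patches. Servers that are already in production should also be patched regularly to reduce exposure to known vulnerabilities.

[Problem] The difference between yum update and yum upgrade:

[Answer]

Both upgrade the installed packages, including the kernel. (Reboot into the new kernel and check it with uname -r.)

The only difference is how obsoleted packages are handled: yum upgrade is equivalent to yum update --obsoletes, so it always removes packages that have been obsoleted, while yum update does so only when obsoletes processing is enabled.

In production, yum update is often preferred to avoid problems caused by dependencies on old packages.

[Answer]

I would rather recommend yum upgrade over yum update. yum upgrade is the more thorough of the two: it analyzes package obsoletion, can move across minor releases (for example from CentOS 7.1 to CentOS 7.4), and removes obsoleted packages. Contrary to a common claim, yum update also updates the kernel packages (kernel-*); on CentOS 7 the default obsoletes=1 setting in /etc/yum.conf makes the two commands behave the same.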

3. Install the OpenStack client

yum install python-openstackclient -y

4. Install openstack-selinux

yum install openstack-selinux -y

[Problem] What are these two packages for?

[Answer]

OpenStackClient (aka OSC) is a command-line client for OpenStack that brings the command set for Compute, Identity, Image, Object Store and Block Storage APIs together in a single shell with a uniform command structure.

The primary goal is to provide a unified shell command structure and a common language to describe operations in OpenStack.

[Answer]

openstack-selinux automatically manages the SELinux security policies for OpenStack services

Processing Conflict: initscripts-9.49.46-1.el7.x86_64 conflicts redhat-release < 7.5-0.11

[Answer]

Error: initscripts conflicts with redhat-release-server-7.1-1.el7.x86_64

Error: unbound-libs conflicts with redhat-release-server-7.1-1.el7.x86_64

Error: initscripts conflicts with redhat-release-server-7.1-1.el7.x86_64

You could try using --skip-broken to work around the problem

[Answer]

yum update --exclude=kernel* --exclude=centos-release* --exclude=initscripts*

[Answer]

Solution

Remove the conflicting package

Steps

rpm -e redhat-release-server-7.1-1.el7.x86_64 --nodeps

yum update

** Found 5 pre-existing rpmdb problem(s), 'yum check' output follows:

PackageKit-0.8.9-11.el7.x86_64 has missing requires of PackageKit-backend

anaconda-core-19.31.123-1.el7.x86_64 has missing requires of yum-utils >= ('0', '1.1.11', '3')

python-urlgrabber-3.10-9.el7.noarch is a duplicate with python-urlgrabber-3.10-6.el7.noarch

rhn-check-2.0.2-6.el7.noarch has missing requires of yum-rhn-plugin >= ('0', '1.6.4', '1')

rpm-4.11.3-35.el7.x86_64 is a duplicate with rpm-4.11.1-25.el7.x86_64

This error was reported while installing openstackclient:

Failed:

centos-release.x86_64 0:7-6.1810.2.el7.centos

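[Answer]

Install the database (run on the controller node)

Most OpenStack services use an SQL database to store information, and the database typically runs on the controller node. This guide uses MariaDB or MySQL.

Install the packages

yum install mariadb mariadb-server python2-PyMySQL -y

Edit /etc/my.cnf.d/mariadb-server.cnf and complete the following steps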

[root@controller ~]# vi /etc/my.cnf.d/mariadb-server.cnf

#

# These groups are read by MariaDB server.

# Use it for options that only the server (but not clients) should see

#

# See the examples of server my.cnf files in /usr/share/mysql/

#

# this is read by the standalone daemon and embedded servers

[server]

# this is only for the mysqld standalone daemon

# Settings user and group are ignored when systemd is used.

# If you need to run mysqld under a different user or group,

# customize your systemd unit file for mysqld/mariadb according to the

# instructions in http://fedoraproject.org/wiki/Systemd

[mysqld]

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

log-error=/var/log/mariadb/mariadb.log

pid-file=/var/run/mariadb/mariadb.pid

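bind-address = 10.71.11.12

[Changed] bind-address = 10.8.52.202

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

Note: bind-address is the management IP of the controller node

[Changed] Do not add any other settings! The original note suspected that MariaDB no longer uses InnoDB; it still does (XtraDB/InnoDB remains the default engine in MariaDB 10.1), so the default-storage-engine line itself is valid as long as the option name is written in lowercase.

[Note] keystone is an authentication service and is also a web app. As an authentication service it needs a database on the back end to store user-related data

Enable the service at boot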

systemctl enable mariadb.service

systemctl start mariadb.service

Secure the database service by running the mysql_secure_installation script.

[root@controller ~]# mysql_secure_installation

NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB

SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY!

In order to log into MariaDB to secure it, we'll need the current

password for the root user. If you've just installed MariaDB, and

you haven't set the root password yet, the password will be blank,

so you should just press enter here.

Enter current password for root (enter for none):

OK, successfully used password, moving on...

Setting the root password ensures that nobody can log into the MariaDB

root user without the proper authorisation.

Set root password? [Y/n]

New password:

Re-enter new password:

Password updated successfully!

[Note] This is where the database root password is entered!

Reloading privilege tables..

... Success!

By default, a MariaDB installation has an anonymous user, allowing anyone

to log into MariaDB without having to have a user account created for

them. This is intended only for testing, and to make the installation

go a bit smoother. You should remove them before moving into a

production environment.

Remove anonymous users? [Y/n]

... Success!

Normally, root should only be allowed to connect from 'localhost'. This

ensures that someone cannot guess at the root password from the network.

Disallow root login remotely? [Y/n]

... Success!

By default, MariaDB comes with a database named 'test' that anyone can

access. This is also intended only for testing, and should be removed

before moving into a production environment.

Remove test database and access to it? [Y/n]

- Dropping test database...

... Success!

- Removing privileges on test database...

... Success!

Reloading the privilege tables will ensure that all changes made so far

will take effect immediately.

Reload privilege tables now? [Y/n]

... Success!

Cleaning up...

All done! If you've completed all of the above steps, your MariaDB

installation should now be secure.

Thanks for using MariaDB!

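[Note] The database password was root; it has been changed to 123456.

[Problem] Failed to start MariaDB 10.1 database server.

[Answer]

[Problem] vim/vi suddenly freezes while editing and stops accepting input

[Answer] Ctrl+S is a terminal flow-control key that pauses output; pressing Ctrl+Q resumes it. The same thing is reported online for vim over a SecureCRT remote session, and the cause is the same.

Install and configure RabbitMQ on the controller node

1. Install the message queue component

yum install rabbitmq-server -y

2. Enable the service at boot

systemctl enable rabbitmq-server.service;

systemctl start rabbitmq-server.service

3. Add the openstack user

rabbitmqctl add_user openstack 123456

4. Configure permissions for the openstack user (grant configure, write, and read access)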

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/"

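Install the Memcached cache (controller node)

Note: the Identity service uses Memcached to cache tokens. The memcached service typically runs on the controller node. For production deployments, we recommend a combination of firewalling, authentication, and encryption to secure it.

1. Install and configure the components

yum install memcached python-memcached -y

2. Edit /etc/sysconfig/memcached

vi /etc/sysconfig/memcached

OPTIONS="-l 10.71.11.12,::1,controller"

[Changed]

OPTIONS="-l 10.8.52.202,::1,controller"

[Problem] Among IPv6 unicast addresses there are two special addresses, 0:0:0:0:0:0:0:0 and 0:0:0:0:0:0:0:1. What do they mean?

[Answer]

The all-zero address (abbreviated ::) is the unspecified address; it is not normally assigned to a host

The second one (abbreviated ::1) is the loopback address, which a host uses to send packets to itself, for example to check that its protocol stack is installed correctly

3. Enable the service at boot

systemctl enable memcached.service;

systemctl start memcached.service

Check the configuration file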

[root@linux-node1 ~]# cat /etc/sysconfig/memcached

PORT="11211" # port number

USER="memcached" # user

MAXCONN="1024" # maximum number of connections

CACHESIZE="64" # cache size: 64 MB

OPTIONS=""

[Problem] Jul 25 16:31:53 controller memcached[13013]: failed to listen on TCP port 11211: Address already in use

Jul 25 16:31:53 controller systemd[1]: memcached.service: main process exited, code=exited, status=71/n/a

[Answer]

Use netstat, ss or lsof to find out which process uses this port. For example:

ss -nltp | grep 11211
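The same check can be scripted so that it runs before memcached is started. The sketch below is an illustration only: `port_is_listening` is a hypothetical helper that reads `ss -nlt` output on stdin, and it assumes the usual column order in which the fourth column is the local address and port.

```shell
#!/usr/bin/env bash
# Read `ss -nlt` output on stdin; succeed if some LISTEN socket uses the port.
# port_is_listening is a hypothetical helper name for this sketch.
port_is_listening() {
    awk -v port="$1" '
        $1 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit found ? 0 : 1 }
    '
}

# Typical use (assumes iproute2's ss is installed):
#   if ss -nlt | port_is_listening 11211; then
#       echo "port 11211 is already in use"
#   fi
```

Adding the -p option to ss, as in the command above, additionally shows which process holds the port.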

Install the etcd service (controller)

1. Install the service

yum install etcd -y

2. Edit the /etc/etcd/etcd.conf file

vi /etc/etcd/etcd.conf

ETCD_INITIAL_CLUSTER

ETCD_INITIAL_ADVERTISE_PEER_URLS

ETCD_ADVERTISE_CLIENT_URLS

ETCD_LISTEN_CLIENT_URLS

#[Member]

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="http://10.71.11.12:2380"

ETCD_LISTEN_CLIENT_URLS="http://10.71.11.12:2379"

ETCD_NAME="controller"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.71.11.12:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://10.71.11.12:2379"

ETCD_INITIAL_CLUSTER="controller=http://10.71.11.12:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster-01"

ETCD_INITIAL_CLUSTER_STATE="new"

3. Enable the service at boot

systemctl enable etcd;

systemctl start etcd

[Problem] Jul 24 13:49:56 controller systemd[1]: start request repeated too quickly for etcd.service

Jul 24 13:49:56 controller systemd[1]: Failed to start Etcd Server.

Process: 36293 ExecStart=/bin/bash -c GOMAXPROCS=$(nproc) /usr/bin/etcd --name="${ETCD_NAME}" --data-dir="${ETCD_DATA_DIR}" --listen-client-urls="${ETCD_LISTEN_CLIENT_URLS}" (code=exited, status=1/FAILURE)

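[Answer]

--initial-cluster-state=existing \ # after changing the value from new to existing, the service started normally

Install the keystone component (controller)

1. Create the keystone database and grant privileges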

mysql -u root -p

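CREATE DATABASE keystone; # create the keystone database

# grant the keystone user all privileges on the keystone database

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY '123456'; # account for local access to the keystone database

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY '123456'; # account for remote access to the keystone database

[Note]

Note: every component deployed from here on needs its own database. For example, nova also accesses the database, so a nova database is created to hold nova-related information such as the catalog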
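Since the same three statements recur for every service, they can be generated from the service name. This is a minimal sketch rather than a step from the original walkthrough: `service_db_sql` is a hypothetical helper, the password is the lab value from the password table, and the output is only printed so that it can be reviewed before being pasted into the mysql prompt.

```shell
#!/usr/bin/env bash
# Print the CREATE DATABASE and GRANT statements for one OpenStack service.
# service_db_sql is a hypothetical helper; it only prints SQL, it runs nothing.
service_db_sql() {
    local svc="$1" pass="$2"
    cat <<EOF
CREATE DATABASE ${svc};
GRANT ALL PRIVILEGES ON ${svc}.* TO '${svc}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${svc}.* TO '${svc}'@'%' IDENTIFIED BY '${pass}';
EOF
}

# Example: statements for the glance database, using the lab password.
service_db_sql glance 123456
```

The database and user share the service name here because that is the pattern the keystone statements above follow; a service that needs several databases would need additional statements.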

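[Problem] Fixing ERROR 1064 (42000): You have an error in your SQL......

[Answer] MySQL reported this error when a database was created with an SQL statement on the MySQL command line; it is recorded here for future reference.

Original SQL statement: CREATE DATABASE 'xiaoyaoji' CHARACTER SET utf8;

At first glance nothing looks wrong, but running it produces the error above.

This is a syntax error. To distinguish identifiers from keywords and ordinary strings, MySQL uses the backtick.

In the statement above, the database name is wrapped in single quotes instead of backticks, which is what triggers the error. The corrected statement is:

CREATE DATABASE `xiaoyaoji` CHARACTER SET utf8;

With an English keyboard layout, the backtick ` is the key to the left of the 1 key

MariaDB [keystone]> show tables;

Empty set (0.00 sec)

[Answer] There was no data yet at that point

Query OK, 0 rows affected (0.00 sec)

[Answer] This is fine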

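2. Install and configure the components

yum install openstack-keystone httpd mod_wsgi -y

[Note]

1. WSGI is Python's gateway interface for web applications, similar in role to CGI (keystone needs httpd to run)

2. The keystone package is named openstack-keystone

3. httpd and mod_wsgi are installed because the community primarily promotes Apache + keystone

openstack-keystone is essentially a web app that speaks the WSGI protocol, and httpd becomes a WSGI-capable web server through the mod_wsgi module, which is why mod_wsgi has to be installed for httpd

[Answer] Text is not as good as a table, and a table is not as good as a diagram.

[Problem] How do Apache, keystone, WSGI, and httpd work together?

[Answer]

Introduction to WSGI

3. Edit /etc/keystone/keystone.conf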

[database]

connection = mysql+pymysql://keystone:123456@controller/keystone

[token]

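provider = fernet

[Note]

mysql+pymysql: pymysql is a Python library for running native SQL against MySQL from Python

# The connection string has the form mysql+pymysql://user:password@mysql-host/database; the pymysql driver comes from the python2-PyMySQL package installed above

driver = memcache

[Note] If this is not configured, tokens are stored in SQL by default; we need to change it to memcache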
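To make the parts of the connection string concrete, it can be split with plain shell parameter expansion. The following is an illustrative sketch only; `parse_connection` is a hypothetical helper, and it assumes a URL without a port or query string, as in the value used in these notes.

```shell
#!/usr/bin/env bash
# Split a connection URL of the form driver://user:password@host/database.
# parse_connection is a hypothetical helper; no port or query string is handled.
parse_connection() {
    local url="$1"
    local rest="${url#*://}"      # user:password@host/database
    local creds="${rest%%@*}"     # user:password
    local hostdb="${rest#*@}"     # host/database
    printf 'driver=%s user=%s password=%s host=%s database=%s\n' \
        "${url%%://*}" "${creds%%:*}" "${creds#*:}" "${hostdb%%/*}" "${hostdb#*/}"
}

parse_connection 'mysql+pymysql://keystone:123456@controller/keystone'
# prints: driver=mysql+pymysql user=keystone password=123456 host=controller database=keystone
```

The host part is the name controller, so it only resolves if the /etc/hosts entries from the prerequisites are in place.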

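4. Synchronize the keystone database

su -s /bin/sh -c "keystone-manage db_sync" keystone

[Note]

This initialization is needed because of Python's ORM (object-relational mapping): the sync step generates the database table structure.

[Note]

The command finds the MySQL connection in the keystone configuration file and creates the tables in the database for us

Question: why synchronize the database as the keystone user?

[Answer]

Synchronizing the database writes a log file under /var/log/keystone. If the command is run as root, the log file is owned by root; keystone, which runs as the keystone user, then cannot open the file at startup and fails to start

shell-init: error retrieving current directory: getcwd: cannot access parent directories: No such file or directory

[Answer]

This error means that getcwd could not determine the current working directory. It usually happens after you cd into a directory and then remove that directory; running service scripts from there raises the getcwd error. cd into any directory that actually exists and run the command again.

5. Initialize the Fernet key repositories

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

[Answer]

After the commands finish, a fernet-keys directory exists under /etc/keystone. It is owned by keystone and holds the related keys.

[Note]

These two commands set up keystone's own key repositories: the Fernet keys used for tokens and the keys used to encrypt credentials. The --keystone-user and --keystone-group options name the operating system user and group that will own the key files. Since keystone is the service that authenticates every other component, it needs its own keys in place first

6. Bootstrap the Identity service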

keystone-manage bootstrap --bootstrap-password 123456 \

--bootstrap-admin-url http://controller:5000/v3/ \

--bootstrap-internal-url http://controller:5000/v3/ \

--bootstrap-public-url http://controller:5000/v3/ \

--bootstrap-region-id RegionOne

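Configure the Apache HTTP service

1. Edit /etc/httpd/conf/httpd.conf and set the ServerName option

ServerName controller

2. Create a link to the /usr/share/keystone/wsgi-keystone.conf file

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

[Note] This adds a configuration file for the mod_wsgi module, so that the WSGI configuration is loaded as part of the httpd configuration.

[Answer]

Deleting a soft link or a hard link on Linux does not affect the original file's data, but editing through either one does change the original file.

1. A Linux soft link is like a Windows shortcut: deleting the shortcut only removes the shortcut, and the original file still exists.

2. With hard links, data can be lost: when the hard link count is 1, deleting that last link really removes the file. When the count is greater than 1, deleting a hard link only decrements the count and does not actually delete the file.

Soft links can also span file systems, which hard links cannot.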
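The difference can be observed directly in a scratch directory. This is a small demonstration sketch, not a deployment step; `demo_links` is a hypothetical function name, and it removes everything it creates.

```shell
#!/usr/bin/env bash
# Show what happens to a hard link and a soft link when the original name
# is deleted. demo_links is a hypothetical name; it works in a temp directory.
demo_links() {
    local dir
    dir="$(mktemp -d)"
    echo "data" > "$dir/original"
    ln "$dir/original" "$dir/hard"       # second name for the same inode
    ln -s "$dir/original" "$dir/soft"    # stores the path to the original
    rm "$dir/original"
    # The hard link still reaches the data; the soft link now dangles.
    if [ -f "$dir/hard" ]; then echo "hard: $(cat "$dir/hard")"; fi
    if [ -L "$dir/soft" ] && [ ! -e "$dir/soft" ]; then echo "soft: dangling"; fi
    rm -rf "$dir"
}

demo_links
```

Removing the original name leaves the data reachable through the hard link because the link count was 2, while the soft link only remembered a path that no longer exists.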

[Note] [root@controller conf.d]# ll

total 16

-rw-r--r--. 1 root root 2926 Apr 24 21:45 autoindex.conf

-rw-r--r--. 1 root root 366 Apr 24 21:46 README

-rw-r--r--. 1 root root 1252 Apr 24 21:44 userdir.conf

-rw-r--r--. 1 root root 824 Apr 24 21:44 welcome.conf

lrwxrwxrwx. 1 root root 38 Jul 25 09:06 wsgi-keystone.conf -> /usr/share/keystone/wsgi-keystone.conf

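[Problem] After creating a soft link, the linked file appears empty

[Answer] Soft link permissions

Opening the soft-linked file shows an empty file that cannot be edited or saved.

The cause is that, unlike a hard link, a soft link stores the target path as text, and a relative target is resolved relative to the directory that contains the link. Give the target as an absolute path, as follows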

ln -s /home/test/test1 /home test

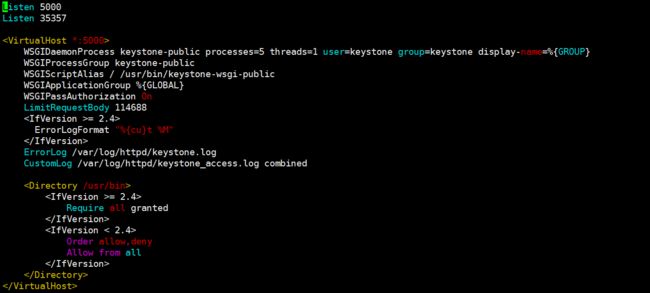

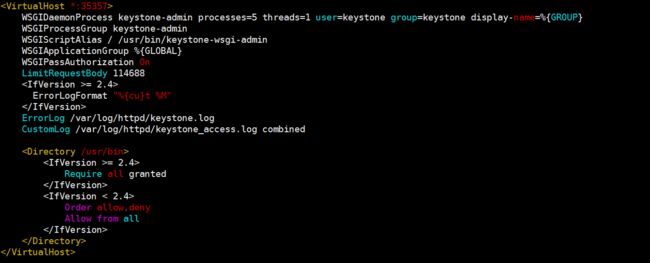

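Inspect the wsgi-keystone.conf file

[Note] 1. The two ports above are the ones keystone must expose so that Apache can hand requests to keystone

2. Port 5000: a WSGI daemon process group with 5 processes, one thread per process, user keystone, group keystone. The process group runs /usr/bin/keystone-wsgi-public and serves port 5000 (used by internal and external users)

3. Port 35357: same as above. The process group runs /usr/bin/keystone-wsgi-admin and serves port 35357 (used by administrators)

3. Enable the service at boot

systemctl enable httpd.service;

systemctl start httpd.service

The service fails to start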

[root@controller ~]# systemctl start httpd.service

Job for httpd.service failed because the control process exited with error code. See "systemctl status httpd.service" and "journalctl -xe" for details.

[Problem]

[root@controller ~]# journalctl -xe

Apr 01 02:31:03 controller systemd[1]: [/usr/lib/systemd/system/memcached.service:62] Unknown lvalue 'ProtectControlGroups' in section 'Service'

Apr 01 02:31:03 controller systemd[1]: [/usr/lib/systemd/system/memcached.service:65] Unknown lvalue 'RestrictRealtime' in section 'Service'

Apr 01 02:31:03 controller systemd[1]: [/usr/lib/systemd/system/memcached.service:72] Unknown lvalue 'RestrictNamespaces' in section 'Service'

Apr 01 02:31:03 controller polkitd[928]: Unregistered Authentication Agent for unix-process:18932:9281785 (system bus name :1.157, object path /org/freedeskt

Apr 01 02:31:09 controller polkitd[928]: Registered Authentication Agent for unix-process:18952:9282349 (system bus name :1.158 [/usr/bin/pkttyagent --notify

Apr 01 02:31:09 controller systemd[1]: Starting The Apache HTTP Server...

-- Subject: Unit httpd.service has begun start-up

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit httpd.service has begun starting up.

Apr 01 02:31:09 controller httpd[18958]: (13)Permission denied: AH00072: make_sock: could not bind to address [::]:5000

Apr 01 02:31:09 controller httpd[18958]: (13)Permission denied: AH00072: make_sock: could not bind to address 0.0.0.0:5000

Apr 01 02:31:09 controller httpd[18958]: no listening sockets available, shutting down

Apr 01 02:31:09 controller httpd[18958]: AH00015: Unable to open logs

Apr 01 02:31:09 controller systemd[1]: httpd.service: main process exited, code=exited, status=1/FAILURE

Apr 01 02:31:09 controller kill[18960]: kill: cannot find process ""

Apr 01 02:31:09 controller systemd[1]: httpd.service: control process exited, code=exited status=1

Apr 01 02:31:09 controller systemd[1]: Failed to start The Apache HTTP Server.

-- Subject: Unit httpd.service has failed

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit httpd.service has failed.

--

-- The result is failed.

Apr 01 02:31:09 controller systemd[1]: Unit httpd.service entered failed state.

Apr 01 02:31:09 controller systemd[1]: httpd.service failed.

Apr 01 02:31:09 controller polkitd[928]: Unregistered Authentication Agent for unix-process:18952:9282349 (system bus name :1.158, object path /org/freedeskt

【答】我竟然没有报错!!!

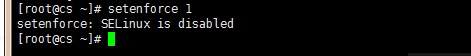

经过判断,是SELinux引发的问题

解决办法:关闭SELinux防火墙

【说明】

防火墙与selinux是否有什么关系的呀?

有时做测试时,为什么两个都要关闭的呢?

Linux 防火墙、SELinux 的开启和关闭

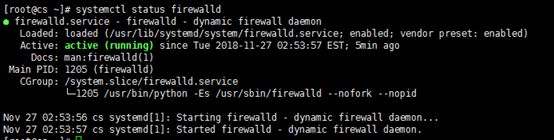

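Firewall (firewalld)

Stop the firewall temporarily

systemctl stop firewalld

Start the firewall temporarily

systemctl start firewalld

Disable the firewall permanently (it no longer starts at boot)

systemctl disable firewalld

Start the firewall at boot

systemctl enable firewalld

Restart the firewall (disable and enable only change what happens at boot; restart the service or reboot Linux to change the running state)

systemctl restart firewalld

Check the firewall status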

systemctl status firewalld

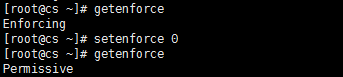

SELinux

临时关闭SELinux

setenforce 0 (状态为permissive)

临时打开SELinux

setenforce 1 (状态为enforcing)

查看SELinux的状态

getenforce

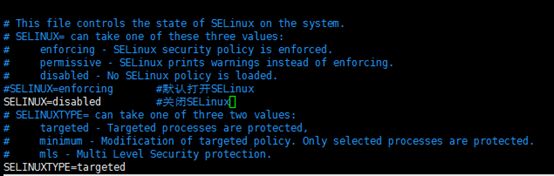

永久关闭SELinux(重启后生效)

编辑/etc/selinux/config 文件,将SELinux的默认值enforcing 改为 disabled,下次开机就不会再启动

注意:此时也不可以用setenforce 1 命令临时打开

如果要再次打开需要修改配置文件,重启后生效
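The edit to the SELINUX= line can also be made non-interactively. The sketch below is an assumption-laden illustration: `set_selinux_mode` is a hypothetical helper, it relies on GNU sed's -i option as shipped with CentOS/RHEL, and the demo runs against a scratch copy rather than /etc/selinux/config.

```shell
#!/usr/bin/env bash
# Rewrite the SELINUX= line of a config file in place (GNU sed assumed).
# set_selinux_mode is a hypothetical helper name for this sketch.
set_selinux_mode() {
    local config_file="$1" mode="$2"
    case "$mode" in
        enforcing|permissive|disabled) ;;
        *) echo "unknown mode: $mode" >&2; return 1 ;;
    esac
    # "^SELINUX=" does not match the SELINUXTYPE= line, which stays untouched.
    sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$config_file"
}

# Dry run on a scratch copy (assumption); use /etc/selinux/config as root when ready.
scratch="$(mktemp)"
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$scratch"
set_selinux_mode "$scratch" disabled
cat "$scratch"
rm -f "$scratch"
```

Passing permissive instead of disabled keeps SELinux loaded but only logging, which may be a gentler choice when the goal is just to get httpd bound to port 5000 in a lab.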

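How are the firewall and SELinux related?

SELinux is very strict and imposes many restrictions. Some people say that SELinux conflicts with other services and disable it for that reason. That is not really the case: the restrictions often leave a correctly configured service unreachable, which reflects an insufficient understanding of how SELinux works rather than a conflict.

As a result, disabling SELinux is often the first thing done after installing a system.

Another argument is that a Linux system is already well protected by iptables and firewalld, so why bother with something as complex as SELinux. That reasoning applies mostly to small and medium-sized companies; companies with higher security requirements do use SELinux.

For learning purposes, firewalld and SELinux are usually both turned off. In the companies I have seen they are mostly turned off as well, and iptables is used instead. firewalld can be regarded as an upgrade built on top of iptables, but most firewalld commands still call iptables underneath. Most companies currently use iptables, though you can learn firewalld; firewalls are something you basically have to master. SELinux is less essential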

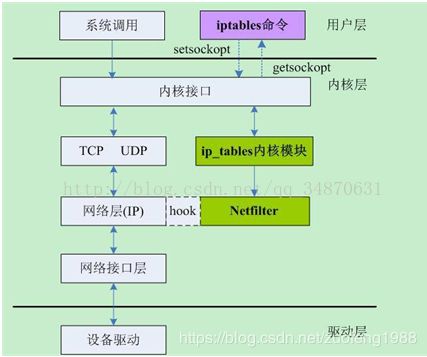

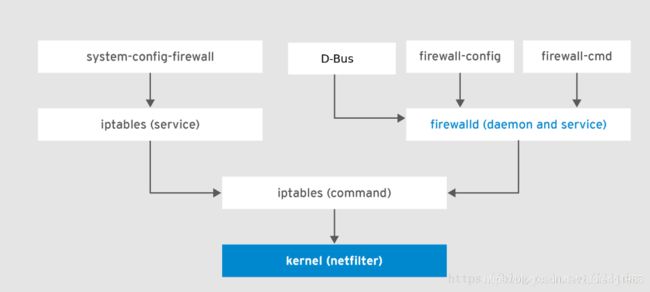

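The relationship among SELinux, Netfilter, iptables, firewalld, and UFW

I. What are the five?

1. SELinux is a mandatory access control system released by the US National Security Agency

2. Netfilter is a subsystem introduced in Linux 2.4.x; as a general, abstract framework it provides a complete mechanism for managing hook functions

3. iptables is a powerful user-space firewall tool on Linux.

4. firewalld is the new firewall management service in CentOS 7; underneath it still calls iptables

5. ufw is a simple firewall configuration tool on Ubuntu; underneath it still calls iptables

II. How are the five related?

1. Netfilter governs the network; SELinux governs the local system.

2. iptables is used to configure the firewall, guard against intrusion from the network, and implement network address translation, QoS, and similar functions, whereas SELinux can be understood as a supplement to Linux file permission control (the familiar rwx)

3. ufw is a simple firewall configuration tool for Linux kernels from 2.4 onward that still calls iptables underneath. iptables allows firewall rules to be defined flexibly and is very powerful, but the side effect is overly complex configuration. That led to ufw, a configuration tool that is much simpler than iptables

4. firewalld is the new firewall management service in CentOS 7 and still calls iptables underneath. The main difference is that with the iptables service every change means flushing all old rules and re-reading all rules from /etc/sysconfig/iptables, whereas firewalld can change settings at runtime without dropping existing connections.

5. iptables is a powerful user-space firewall tool on Linux, and any discussion of iptables brings up Netfilter. iptables lives in user space and is essentially a tool for defining rules, while the actual packet interception and forwarding is done by Netfilter, a packet-processing module inside the Linux kernel

Diagram of the relationship between iptables and Netfilter:

Hook: put simply, a hook exposes a point in the system's program flow so that our own code fragment runs there.

Diagram of the relationship between iptables and firewalld: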

[root@controller ~]# vi /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

Start the service again; the error is resolved

[root@controller ~]#

systemctl enable httpd.service;

systemctl start httpd.service;

4. Configure the administrative account

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

[Changed]

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

[Changed]

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

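[Note]

The value in the first step equals the admin_token value in keystone.conf. Any command run in the current terminal after these exports runs as the administrator user

[Answer]

As is well known, the Keystone service listens on both port 35357 and port 5000. The v2 API used two ports, and v3 keeps both for backward compatibility.

In v3 the two ports provide essentially the same service, although 35357 is somewhat more complete.

One more difference: services with the admin role can authenticate only through 35357, while services for ordinary users can authenticate through either port.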
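Typing the exports in every new terminal is error-prone, so they are usually kept in a file that is sourced on demand. This sketch assumes the file name admin-openrc (the name these notes use in a later troubleshooting step) and copies the values from the export block above that uses port 5000; `write_admin_openrc` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Write the admin credentials to a file that can be sourced later.
# write_admin_openrc is a hypothetical helper; values come from these notes.
write_admin_openrc() {
    cat > "$1" <<'EOF'
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD=123456
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
    chmod 600 "$1"   # the file contains a password
}

# Demo in a scratch location (assumption); in practice write to ~/admin-openrc.
scratch="$(mktemp)"
write_admin_openrc "$scratch"
. "$scratch"
echo "$OS_USERNAME @ $OS_AUTH_URL"
rm -f "$scratch"
```

After that, `. admin-openrc` in a new shell restores the administrator environment before running openstack commands.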

cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

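[Answer]

# Tells openstack-keystone how to connect to the keystone back-end database

# mysql+pymysql: pymysql is a Python library for running native SQL against MySQL from Python

[database]

connection = mysql+pymysql://keystone:<password>@<mysql-host>/keystone # format: user:password@mysql-host/database; the credentials in the source were obscured; pymysql is the driver installed earlier

[token]

provider = fernet # fernet is the token generation method (PKI is another)

Create domains, projects, users, and roles

1. Create a domain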

openstack domain create --description "Domain" example

[root@controller ~]# openstack domain create --description "Domain" example

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Domain |

| enabled | True |

| id | 199658b1d0234c3cb8785c944aa05780 |

| name | example |

| tags | [] |

+-------------+----------------------------------+

[Problem] Failed to discover available identity versions when contacting http://controller:35357/v3. Attempting to parse version from URL.

Unable to establish connection to http://controller:35357/v3/auth/tokens: ('Connection aborted.', BadStatusLine("''",))

[Answer] The cause was http_proxy!

This problem took 24 hours to track down!

[root@controller keystone]# unset http_proxy

Set the proxy only on the command line when running yum!

Alternatively, set no_proxy=127.0.0.1

But why?

[Answer] Even requests to the local host's own address go through the proxy first!
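If the proxy has to stay set for yum, the local names can be excluded instead of unsetting it. The following is a hedged sketch: `add_no_proxy` is a hypothetical helper, and the list of hosts to exclude (the node names and the controller address from these notes) is an assumption about what the client needs to reach directly.

```shell
#!/usr/bin/env bash
# Append hosts to no_proxy without creating duplicates, and mirror the value
# into NO_PROXY. add_no_proxy is a hypothetical helper name for this sketch.
add_no_proxy() {
    local host
    for host in "$@"; do
        case ",${no_proxy:-}," in
            *",${host},"*) ;;   # already listed
            *) no_proxy="${no_proxy:+${no_proxy},}${host}" ;;
        esac
    done
    export no_proxy NO_PROXY="$no_proxy"
}

# Hosts the OpenStack client should reach directly (assumed list).
add_no_proxy 127.0.0.1 localhost controller compute cinder 10.8.52.202
echo "$no_proxy"
```

Note that no_proxy=127.0.0.1 alone would not cover requests addressed to the name controller, which is what OS_AUTH_URL uses.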

When running the following Identity verification command:

openstack --os-auth-url http://:5000/v3 --os-project-domain-name default --os-user-domain-name default --os-project-name demo --os-username demo token issue

the following error appears:

Discovering versions from the identity service failed when creating the password plugin. Attempting to determine version from URL.

Solution:

1) Check whether admin-openrc has already been sourced; if not, run:

# . admin-openrc

2) Check whether an HTTP proxy has been exported; if so, log out of the current user and log in again.

2019-07-25 10:50:36.155 9825 ERROR keystone raise errorclass(errno, errval)

2019-07-25 10:50:36.155 9825 ERROR keystone ProgrammingError: (pymysql.err.ProgrammingError) (1146, u"Table 'keystone.project' doesn't exist") [SQL: u'INSERT INTO project (id, name, domain_id, description, enabled, extra, parent_id, is_domain) VALUES (%(id)s, %(name)s, %(domain_id)s, %(description)s, %(enabled)s, %(extra)s, %(parent_id)s, %(is_domain)s)'] [parameters: {'is_domain': 1, 'description': 'The default domain', 'extra': '{}', 'enabled': 1, 'id': 'default', 'parent_id': None, 'domain_id': '<

[Answer] Check whether the [token] section of the configuration file is misconfigured; if it is, fernet cannot be initialized successfully

If you keep getting 401, drop the database and start over. If problems remain, see this article

http://blog.csdn.net/controllerha/article/details/78701936

and redo the environment configuration from scratch

[Answer] You can run curl http://10.8.52.202:5000 on your controller node. If it successfully returns the version list, the keystone service is OK and you need to check the connection between the controller node and the other nodes. Otherwise, check the keystone log on 10.23.77.68; it seems the service is not running

[Answer]

Check whether ports 35357 and 5000 are in use

netstat -anp | grep -E '35357|5000'

【答】

Before the Queens release, keystone needed to be run on two separate ports to accommodate the Identity v2 API which ran a separate admin-only service commonly on port 35357. With the removal of the v2 API, keystone can be run on the same port for all interfaces.

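[Answer] pgrep keystone

pgrep looks up processes by program name and is typically used to check whether a program is running. It is simple and widely used in server configuration and administration.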
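As a small illustration of the pgrep check, the sketch below wraps it in a helper. The function name `is_running` is hypothetical, and `httpd` is an assumed process name for the case where keystone runs under Apache on CentOS.

```shell
# Return success if a process with exactly this name is running.
is_running() {
    pgrep -x "$1" > /dev/null 2>&1
}

# httpd is the assumed process name when keystone runs under Apache.
if is_running httpd; then
    echo "httpd is running"
else
    echo "httpd is not running"
fi
```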

[Answer]

Check if Apache is running, and if keystone processes run under Apache. I expect they don't. In this case, you should have earlier messages in the log file that should give you clues.

[Answer]

systemctl -l | grep ceph

- Create the service project

[root@controller ~]# openstack project create --domain default --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | 03e700ff43e44b29b97365bac6c7d723 |

| is_domain | False |

| name | service |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

3. Create the demo project

[root@controller ~]# openstack project create --domain default --description "Demo Project" demo

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Demo Project |

| domain_id | default |

| enabled | True |

| id | 61f8c9005ca84477b5bdbf485be1a546 |

| is_domain | False |

| name | demo |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

4. Create the demo user

[root@controller ~]# openstack user create --domain default --password-prompt demo

User Password: root

Repeat User Password: root (or uppercase)

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | fa794c034a53472c827a94e6a6ad12c1 |

| name | demo |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

5. Create the user role

[root@controller ~]# openstack role create user

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | None |

| id | 15ea413279a74770b79630b75932a596 |

| name | user |

+-----------+----------------------------------+

6. Add the user role to the demo user in the demo project

openstack role add --project demo --user demo user

Note: this command produces no output on success.

Verify operation

1. Unset the environment variables

[root@controller ~]# unset OS_AUTH_URL OS_PASSWORD

2. Request an authentication token as the admin user

[root@controller ~]# openstack --os-auth-url http://controller:35357/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name admin --os-username admin token issue

Password: 123456

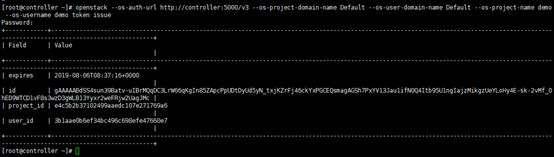

3. Request an authentication token as the demo user

[root@controller ~]# openstack --os-auth-url http://controller:5000/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name demo --os-username demo token issue

Password: root

[Problem] Ignoring domain related config user_domain_name because identity API version is 2.0

[Answer] Reconfigure the environment by sourcing admin-openrc again.

Create the OpenStack client environment scripts

1. Create the admin-openrc script

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

2. Create the demo-openrc script

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=123456

[Changed] export OS_PASSWORD=root

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2
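One way to create the two scripts is with here-documents. This is only a sketch: the `OPENRC_DIR` variable and the `write_openrc` helper are hypothetical names, and the passwords are the ones used in these notes (123456 for admin, root for demo).

```shell
# Directory to write the scripts into (hypothetical variable; defaults to ".").
OPENRC_DIR="${OPENRC_DIR:-.}"

# Write one openrc file: $1 = project and user name, $2 = password.
write_openrc() {
    cat > "$OPENRC_DIR/$1-openrc" <<EOF
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=$1
export OS_USERNAME=$1
export OS_PASSWORD=$2
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
}

write_openrc admin 123456
write_openrc demo root

# Load the admin credentials into the current shell.
. "$OPENRC_DIR/admin-openrc"
```

Sourcing the file (with `.`) rather than executing it matters, because the exported variables must end up in the current shell.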

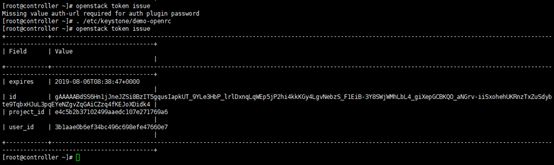

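3. Use the script to request an authentication token

[root@controller ~]# openstack token issue

[Note]

For security reasons, disable the temporary token authentication mechanism (both admin_token in the configuration file and the --bootstrap-password option of keystone-manage are based on it).

2. Clear all environment variables (exit the Xshell/PuTTY remote session and reconnect)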

Install the Glance service (controller)

1. Create the glance database and grant privileges

mysql -u root -p

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY '123456';

2. Source the admin credentials and create the service credentials

. admin-openrc

Create the glance user

[root@controller ~]# openstack user create --domain default --password-prompt glance

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | dd2363d365624c998dfd788b13e1282b |

| name | glance |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

Add the admin role to the glance user in the service project

openstack role add --project service --user glance admin

Note: this command produces no output.

Create the glance service

[root@controller ~]# openstack service create --name glance --description "OpenStack Image" image

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Image |

| enabled | True |

| id | 5927e22c745449869ff75b193ed7d7c6 |

| name | glance |

| type | image |

+-------------+----------------------------------+

3. Create the Image service API endpoints

[root@controller ~]# openstack endpoint create --region RegionOne image public http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 0822449bf80f4f6897be5e3240b6bfcc |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 5927e22c745449869ff75b193ed7d7c6 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne image internal http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | f18ae583441b4d118526571cdc204d8a |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 5927e22c745449869ff75b193ed7d7c6 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne image admin http://controller:9292

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 79eadf7829274b1b9beb2bfb6be91992 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 5927e22c745449869ff75b193ed7d7c6 |

| service_name | glance |

| service_type | image |

| url | http://controller:9292 |

+--------------+----------------------------------+

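[Note] Each endpoint URL is the access address of one service instance and belongs to one of three interfaces: public, internal, and admin.

The public URL is reachable globally, the internal URL is reachable only from the internal network, and the admin URL is kept separate from regular access.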
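Because the three endpoints of a service differ only in the interface name, the commands can be generated in a loop. The sketch below only prints the commands (a dry run) instead of executing them; `endpoint_commands` is a hypothetical helper name, and the region `RegionOne` is the one used in these notes.

```shell
# Print (not run) the three endpoint-create commands for one service.
# $1 = service type, $2 = endpoint URL.
endpoint_commands() {
    service_type=$1
    url=$2
    for interface in public internal admin; do
        echo "openstack endpoint create --region RegionOne $service_type $interface $url"
    done
}

endpoint_commands image http://controller:9292
```

After reviewing the printed commands, the output can be piped to `sh` in a shell where admin-openrc has been sourced.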

Install and configure components

1. Install the package

yum install openstack-glance -y

2. Edit /etc/glance/glance-api.conf

[database]

connection = mysql+pymysql://glance:123456@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

[glance_store]

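# Configure the local file system store and the image storage location

stores = file,http

# Configure the default store

default_store = file

# Configure the data directory of the file system store

filesystem_store_datadir = /var/lib/glance/images/

3. Edit /etc/glance/glance-registry.conf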

[database]

connection = mysql+pymysql://glance:123456@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123456

[paste_deploy]

# Configure access to the Identity service

flavor = keystone

4. Sync the Image service database

# Populate the database

su -s /bin/sh -c "glance-manage db_sync" glance

# Check the data

[root@linux-node1 ~]# mysql -uglance -p123456 -h10.8.52.202 -e"use glance;show tables"

+----------------------------------+

| Tables_in_glance |

+----------------------------------+

| alembic_version |

| image_locations |

| image_members |

| image_properties |

| image_tags |

| images |

| metadef_namespace_resource_types |

| metadef_namespaces |

| metadef_objects |

| metadef_properties |

| metadef_resource_types |

| metadef_tags |

| migrate_version |

| task_info |

| tasks |

+----------------------------------+

Start the services

systemctl enable openstack-glance-api.service openstack-glance-registry.service

systemctl start openstack-glance-api.service openstack-glance-registry.service

验证操作

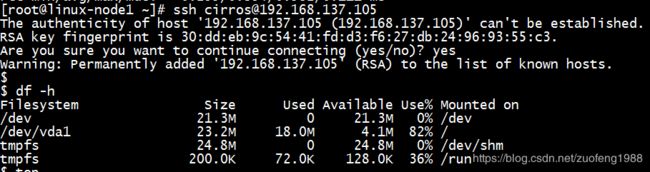

使用CirrOS验证Image服务的操作,这是一个小型Linux映像,可帮助您测试OpenStack部署。

有关如何下载和构建映像的更多信息,请参阅OpenStack虚拟机映像指南https://docs.openstack.org/image-guide/

有关如何管理映像的信息,请参阅OpenStack最终用户指南https://docs.openstack.org/queens/user/

1.获取admin用户的环境变量,且下载镜像

. admin-openrc

wget http://download.cirros-cloud.net/0.3.5/cirros-0.3.5-x86_64-disk.img

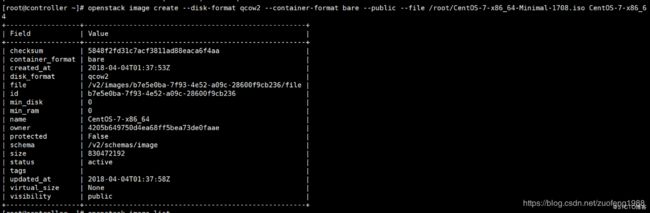

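2. Upload the image

Upload the image to the Image service using the QCOW2 disk format, the bare container format, and public visibility so that all projects can access it: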

[root@controller ~]# openstack image create "cirros" --file cirros-0.3.5-x86_64-disk.img --disk-format qcow2 --container-format bare --public

+------------------+------------------------------------------------------+

| Field | Value |

+------------------+------------------------------------------------------+

| checksum | f8ab98ff5e73ebab884d80c9dc9c7290 |

| container_format | bare |

| created_at | 2018-04-01T08:00:05Z |

| disk_format | qcow2 |

| file | /v2/images/916faa2b-e292-46e0-bfe4-0f535069a1a0/file |

| id | 916faa2b-e292-46e0-bfe4-0f535069a1a0 |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros |

| owner | 4205b649750d4ea68ff5bea73de0faae |

| protected | False |

| schema | /v2/schemas/image |

| size | 13267968 |

| status | active |

| tags | |

| updated_at | 2018-04-01T08:00:06Z |

| virtual_size | None |

| visibility | public |

+------------------+------------------------------------------------------+

[Problem] Failed to discover available identity versions when contacting http://controller:5000/v3. Attempting to parse version from URL.

Unable to establish connection to http://controller:5000/v3/auth/tokens: ('Connection aborted.', BadStatusLine("''",))

[Answer] This is the HTTP proxy problem again.

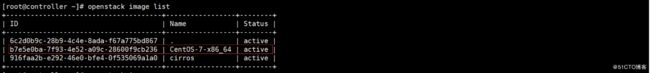

3. List the uploaded image

[root@controller ~]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 916faa2b-e292-46e0-bfe4-0f535069a1a0 | cirros | active |

+--------------------------------------+--------+--------+

Note: for the Glance configuration options, see https://docs.openstack.org/glance/queens/configuration/index.html

Install and configure the Compute service on the controller node

1. Create the nova_api, nova, and nova_cell0 databases

mysql -u root -p

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

Grant access to the databases

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY '123456';
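The six GRANT statements above follow one pattern (each database, for both 'localhost' and '%'), so they can be generated rather than typed. This is a sketch that only prints the SQL; `grant_statements` is a hypothetical helper name, and the user and password are the ones used in these notes.

```shell
# Print GRANT statements for each database and host pattern.
# $1 = database user, $2 = password, remaining arguments = database names.
grant_statements() {
    user=$1
    pass=$2
    shift 2
    for db in "$@"; do
        for host in localhost %; do
            echo "GRANT ALL PRIVILEGES ON $db.* TO '$user'@'$host' IDENTIFIED BY '$pass';"
        done
    done
}

grant_statements nova 123456 nova_api nova nova_cell0
```

The printed statements can be reviewed and then pasted into the mysql prompt, or piped into `mysql -u root -p`.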

2. Create the nova user

[root@controller ~]# . admin-openrc

[root@controller ~]# openstack user create --domain default --password-prompt nova

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 8e72103f5cc645669870a630ffb25065 |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

3. Add the admin role to the nova user

openstack role add --project service --user nova admin

4. Create the nova service

[root@controller ~]# openstack service create --name nova --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | 9f8f8d8cb8e542b09694bee6016cc67c |

| name | nova |

| type | compute |

+-------------+----------------------------------+

5. Create the Compute API service endpoints

[root@controller ~]# openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | cf260d5a56344c728840e2696f44f9bc |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 9f8f8d8cb8e542b09694bee6016cc67c |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | f308f29a78e04b888c7418e78c3d6a6d |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 9f8f8d8cb8e542b09694bee6016cc67c |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 022d96fa78de4b73b6212c09f13d05be |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 9f8f8d8cb8e542b09694bee6016cc67c |

| service_name | nova |

| service_type | compute |

| url | http://controller:8774/v2.1 |

+--------------+----------------------------------+

Create a Placement service user

[root@controller ~]# openstack user create --domain default --password-prompt placement

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | fa239565fef14492ba18a649deaa6f3c |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

6. Add the admin role to the placement user in the service project

openstack role add --project service --user placement admin

7. Create the Placement API entry in the service catalog

[root@controller ~]# openstack service create --name placement --description "Placement API" placement

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Placement API |

| enabled | True |

| id | 32bb1968c08747ccb14f6e4a20cd509e |

| name | placement |

| type | placement |

+-------------+----------------------------------+

8. Create the Placement API service endpoints

[root@controller ~]# openstack endpoint create --region RegionOne placement public http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | b856962188484f4ba6fad500b26b00ee |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 32bb1968c08747ccb14f6e4a20cd509e |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne placement internal http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 62e5a3d82a994f048a8bb8ddd1adc959 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 32bb1968c08747ccb14f6e4a20cd509e |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne placement admin http://controller:8778

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | f12f81ff7b72416aa5d035b8b8cc2605 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 32bb1968c08747ccb14f6e4a20cd509e |

| service_name | placement |

| service_type | placement |

| url | http://controller:8778 |

+--------------+----------------------------------+

Install and configure components

1. Install the packages

yum install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api

2. Edit /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:123456@controller

my_ip = 10.71.11.12

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api_database]

connection = mysql+pymysql://nova:123456@controller/nova_api

[database]

connection = mysql+pymysql://nova:123456@controller/nova

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123456

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = 123456

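3. Because of a packaging bug, add the following configuration to /etc/httpd/conf.d/00-nova-placement-api.conf

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

4. Restart the httpd service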

systemctl restart httpd

5. Sync the nova-api database

su -s /bin/sh -c "nova-manage api_db sync" nova

The database sync may fail with the following error:

[root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

Traceback (most recent call last):

File "/usr/bin/nova-manage", line 10, in <module>

sys.exit(main())

File "/usr/lib/python2.7/site-packages/nova/cmd/manage.py", line 1597, in main

config.parse_args(sys.argv)

File "/usr/lib/python2.7/site-packages/nova/config.py", line 52, in parse_args

default_config_files=default_config_files)

File "/usr/lib/python2.7/site-packages/oslo_config/cfg.py", line 2502, in __call__

else sys.argv[1:])

File "/usr/lib/python2.7/site-packages/oslo_config/cfg.py", line 3166, in _parse_cli_opts

return self._parse_config_files()

File "/usr/lib/python2.7/site-packages/oslo_config/cfg.py", line 3183, in _parse_config_files

ConfigParser._parse_file(config_file, namespace)

File "/usr/lib/python2.7/site-packages/oslo_config/cfg.py", line 1950, in _parse_file

raise ConfigFileParseError(pe.filename, str(pe))

oslo_config.cfg.ConfigFileParseError: Failed to parse /etc/nova/nova.conf: at /etc/nova/nova.conf:8, No ':' or '=' found in assignment: '/etc/nova/nova.conf'

As the error indicates, commenting out line 8 of /etc/nova/nova.conf resolves it.

[Answer] I did not run into this problem.
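A parse error like the one above points at a line that is neither a comment, a section header, nor a key/value assignment. The sketch below lists such lines with their line numbers. It is a simplified check, not a reimplementation of the oslo.config parser: continuation lines (values wrapped onto an indented next line) are not handled, and `find_bad_conf_lines` is a hypothetical helper name.

```shell
# List lines that are not blank, comments, [section] headers, or assignments.
# Simplified: indented continuation lines are not treated specially.
find_bad_conf_lines() {
    awk '
        /^[ \t]*$/          { next }   # blank line
        /^[ \t]*[#;]/       { next }   # comment
        /^\[.*\][ \t]*$/    { next }   # section header
        /[=:]/              { next }   # key = value or key: value
        { printf "%d: %s\n", NR, $0 }  # anything else is suspect
    ' "$1"
}

# Check the nova configuration if it is present and readable.
if [ -r /etc/nova/nova.conf ]; then
    find_bad_conf_lines /etc/nova/nova.conf
fi
```

Each reported line can then be fixed or commented out before running nova-manage again.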

[root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

6. Register the cell0 database

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

7. Create the cell1 cell

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

[Note] This uses the nova-manage cell_v2 command.

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

6c689e8c-3e13-4e6d-974c-c2e4e22e510b

8. Sync the nova database

[root@controller ~]# su -s /bin/sh -c "nova-manage db sync" nova

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

/usr/lib/python2.7/site-packages/pymysql/cursors.py:165: Warning: (1831, u'Duplicate index `block_device_mapping_instance_uuid_virtual_name_device_name_idx`. This is deprecated and will be disallowed in a future release.')

result = self._query(query)

/usr/lib/python2.7/site-packages/pymysql/cursors.py:165: Warning: (1831, u'Duplicate index `uniq_instances0uuid`. This is deprecated and will be disallowed in a future release.')

result = self._query(query)

9. Verify that the nova, cell0, and cell1 databases are registered correctly

[root@controller ~]# nova-manage cell_v2 list_cells

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+

| Name | UUID | Transport URL | Database Connection |

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@controller/nova_cell0 |

| cell1 | 6c689e8c-3e13-4e6d-974c-c2e4e22e510b | rabbit://openstack:****@controller | mysql+pymysql://nova:****@controller/nova |

+-------+--------------------------------------+------------------------------------+-------------------------------------------------+

10. Enable the services to start at boot, then start them

systemctl enable openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

systemctl start openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

[Answer]

The compute node, by contrast, runs nova-compute.

Install and configure the Compute service on the compute node

1. Install the package

yum install openstack-nova-compute

2. Edit /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:123456@controller

my_ip = 10.8.52.203

[Changed] my_ip = 10.8.52.203

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy = keystone

[glance]

api_servers = http://controller:9292

[keystone_authtoken]

auth_uri = http://10.71.11.12:5000

[Changed] auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123456

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:35357/v3

username = placement

password = 123456

[vnc]

enabled = True

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

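[Note] 0.0.0.0 means any address, that is, listen on all interfaces.

3. Enable the services to start at boot, then start them

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl start libvirtd.service openstack-nova-compute.service

Note: if the nova-compute service fails to start, check /var/log/nova/nova-compute.log; it may contain errors such as the following: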

2018-04-01 12:03:43.362 18612 INFO os_vif [-] Loaded VIF plugins: ovs, linux_bridge

2018-04-01 12:03:43.431 18612 WARNING oslo_config.cfg [-] Option "use_neutron" from group "DEFAULT" is deprecated for removal (

nova-network is deprecated, as are any related configuration options.

). Its value may be silently ignored in the future.

2018-04-01 12:03:43.609 18612 INFO nova.virt.driver [req-8f3c2d77-ea29-49ca-933b-bfd4179552dc - - - - -] Loading compute driver 'libvirt.LibvirtDriver'

2018-04-01 12:03:43.825 18612 WARNING oslo_config.cfg [req-8f3c2d77-ea29-49ca-933b-bfd4179552dc - - - - -] Option "firewall_driver" from group "DEFAULT" is deprecated for removal (

nova-network is deprecated, as are any related configuration options.

). Its value may be silently ignored in the future.

2018-04-01 12:03:43.832 18612 WARNING os_brick.initiator.connectors.remotefs [req-8f3c2d77-ea29-49ca-933b-bfd4179552dc - - - - -] Connection details not present. RemoteFsClient may not initialize properly.

2018-04-01 12:03:43.938 18612 ERROR oslo.messaging._drivers.impl_rabbit [req-8f3c2d77-ea29-49ca-933b-bfd4179552dc - - - - -] [683db769-0ab2-4e92-b19e-d2b711c8fadf] AMQP server on controller:5672 is unreachable: [Errno 113] EHOSTUNREACH. Trying again in 1 seconds. Client port: None: error: [Errno 113] EHOSTUNREACH

2018-04-01 12:03:45.042 18612 ERROR oslo.messaging._drivers.impl_rabbit [req-8f3c2d77-ea29-49ca-933b-bfd4179552dc - - - - -] [683db769-0ab2-4e92-b19e-d2b711c8fadf] AMQP server on controller:5672 is unreachable: [Errno 113] EHOSTUNREACH. Trying again in 2 seconds. Client port: None: error: [Errno 113] EHOSTUNREACH

2018-04-01 12:03:47.140 18612 ERROR oslo.messaging._drivers.impl_rabbit [req-8f3c2d77-ea29-49ca-933b-bfd4179552dc - - - - -] [683db769-0ab2-4e92-b19e-d2b711c8fadf] AMQP server on controller:5672 is unreachable: [Errno 113] EHOSTUNREACH. Trying again in 4 seconds. Client port: None: error: [Errno 113] EHOSTUNREACH

2018-04-01 12:03:51.244 18612 ERROR oslo.messaging._drivers.impl_rabbit [req-8f3c2d77-ea29-49ca-933b-bfd4179552dc - - - - -] [683db769-0ab2-4e92-b19e-d2b711c8fadf] AMQP server on controller:5672 is unreachable: [Errno 113] EHOSTUNREACH. Trying again in 6 seconds. Client port: None: error: [Errno 113] EHOSTUNREACH

2018-04-01 12:03:57.351 18612 ERROR oslo.messaging._drivers.impl_rabbit [req-8f3c2d77-ea29-49ca-933b-bfd4179552dc - - - - -] [683db769-0ab2-4e92-b19e-d2b711c8fadf] AMQP server on controller:5672 is unreachable: [Errno 113] EHOSTUNREACH. Trying again in 8 seconds. Client port: None: error: [Errno 113] EHOSTUNREACH

2018-04-01 12:04:05.458 18612 ERROR oslo.messaging._drivers.impl_rabbit [req-8f3c2d77-ea29-49ca-933b-bfd4179552dc - - - - -] [683db769-0ab2-4e92-b19e-d2b711c8fadf] AMQP server on controller:5672 is unreachable: [Errno 113] EHOSTUNREACH. Trying again in 10 seconds. Client port: None: error: [Errno 113] EHOSTUNREACH


The error message "AMQP server on controller:5672 is unreachable" likely means that the firewall on the controller node is blocking access to port 5672. Open port 5672 in the controller's firewall, then restart the nova-compute service on the compute node.

[Answer]

The firewall is still enabled, or port 5672 is not open. Check and open the port as follows:

# netstat -auntlp | grep 5672 (check whether port 5672 is in use)

# iptables -I INPUT -p tcp --dport 5672 -j ACCEPT (open port 5672)

# iptables-save (prints the current rules; it does not persist them by itself)

# iptables -L -n

# systemctl restart openstack-nova* (on the controller and compute nodes)
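Before restarting services, it can help to confirm from the compute node whether the RabbitMQ port is reachable at all. The sketch below is one way to do that with bash's /dev/tcp pseudo-device; `port_open` is a hypothetical helper name, and it assumes that bash and the coreutils `timeout` command are installed.

```shell
# Return success if a TCP connection to host:port opens within 3 seconds.
# $1 = host, $2 = port. Relies on bash's /dev/tcp pseudo-device.
port_open() {
    timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# "controller" and 5672 are the RabbitMQ host and port used in these notes.
if port_open controller 5672; then
    echo "controller:5672 is reachable"
else
    echo "controller:5672 is NOT reachable; check the firewall on the controller"
fi
```

On CentOS 7 the default firewall front end is firewalld rather than the iptables service. If firewalld is in use, the equivalent of the iptables rule would be `firewall-cmd --permanent --add-port=5672/tcp` followed by `firewall-cmd --reload`.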

[Answer]

With iptables enabled, add the following rule on the RabbitMQ server to open the RabbitMQ port (5672) so that other hosts can reach the server:

# iptables -I INPUT -p tcp --dport 5672 -j ACCEPT

# Add the rule

# service iptables save

# Save the settings

# service iptables restart

# Restart iptables so that the rule takes effect

[Answer]

On CentOS 7, stopping or starting the firewall this way can fail with "Unit iptables.service failed to load: No such file or directory."

Flush the firewall rules on the controller:

[root@controller ~]# iptables -F

[root@controller ~]# iptables -X

[root@controller ~]# iptables -Z

After this, the Compute service restarted successfully.

4. Add the compute node to the cell database (controller)

Check how many compute nodes are in the database

[root@controller ~]# . admin-openrc

[root@controller ~]# openstack compute service list --service nova-compute

+----+--------------+---------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+---------+------+---------+-------+----------------------------+

| 8 | nova-compute | compute | nova | enabled | up | 2018-04-01T22:24:14.000000 |

+----+--------------+---------+------+---------+-------+----------------------------+

5. Discover the compute nodes

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting compute nodes from cell 'cell1': 6c689e8c-3e13-4e6d-974c-c2e4e22e510b

Found 1 unmapped computes in cell: 6c689e8c-3e13-4e6d-974c-c2e4e22e510b

Checking host mapping for compute host 'compute': 32861a0d-894e-4af9-a57c-27662d27e6bd

Creating host mapping for compute host 'compute': 32861a0d-894e-4af9-a57c-27662d27e6bd

Verify Compute service operation on the controller node

1. List the service components

[root@controller ~]# . admin-openrc

[root@controller ~]# openstack compute service list

+----+------------------+----------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+------------------+----------------+----------+---------+-------+----------------------------+

| 1 | nova-consoleauth | controller | internal | enabled | up | 2018-04-01T22:25:29.000000 |

| 2 | nova-conductor | controller | internal | enabled | up | 2018-04-01T22:25:33.000000 |

| 3 | nova-scheduler | controller | internal | enabled | up | 2018-04-01T22:25:30.000000 |

| 6 | nova-conductor | ansible-server | internal | enabled | up | 2018-04-01T22:25:55.000000 |

| 7 | nova-scheduler | ansible-server | internal | enabled | up | 2018-04-01T22:25:59.000000 |

| 8 | nova-compute | compute | nova | enabled | up | 2018-04-01T22:25:34.000000 |

| 9 | nova-consoleauth | ansible-server | internal | enabled | up | 2018-04-01T22:25:57.000000 |

+----+------------------+----------------+----------+---------+-------+----------------------------+

2. List the API endpoints in the Identity service to verify connectivity with it:

[root@controller ~]# openstack catalog list

+-----------+-----------+-----------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+-----------------------------------------+

| placement | placement | RegionOne |

| | | internal: http://controller:8778 |

| | | RegionOne |

| | | public: http://controller:8778 |

| | | RegionOne |

| | | admin: http://controller:8778 |

| | | |

| keystone | identity | RegionOne |

| | | public: http://controller:5000/v3/ |

| | | RegionOne |

| | | admin: http://controller:35357/v3/ |

| | | RegionOne |

| | | internal: http://controller:5000/v3/ |

| | | |

| glance | image | RegionOne |

| | | public: http://controller:9292 |

| | | RegionOne |

| | | admin: http://controller:9292 |

| | | RegionOne |

| | | internal: http://controller:9292 |

| | | |

| nova | compute | RegionOne |

| | | admin: http://controller:8774/v2.1 |

| | | RegionOne |

| | | public: http://controller:8774/v2.1 |

| | | RegionOne |

| | | internal: http://controller:8774/v2.1 |

| | | |

+-----------+-----------+-----------------------------------------+

[Note] This is the service catalog.

3. List the images

[root@controller ~]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 916faa2b-e292-46e0-bfe4-0f535069a1a0 | cirros | active |

+--------------------------------------+--------+--------+

4. Check that the cells and the Placement API are working properly:

[root@controller ~]# nova-status upgrade check

/usr/lib/python2.7/site-packages/oslo_db/sqlalchemy/enginefacade.py:332: NotSupportedWarning: Configuration option(s) ['use_tpool'] not supported

exception.NotSupportedWarning

Option "os_region_name" from group "placement" is deprecated. Use option "region-name" from group "placement".

+---------------------------+

| Upgrade Check Results |

+---------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+---------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+---------------------------+

| Check: Resource Providers |

| Result: Success |

| Details: None |

+---------------------------+
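The result table above can also be checked by a script rather than by eye. The following is a minimal sketch, not part of nova: the helper name `parse_upgrade_checks` is hypothetical, and it assumes the `| Check: ... |` / `| Result: ... |` row layout shown in the transcript above.

```python
import re


def parse_upgrade_checks(output):
    """Return {check name: result} parsed from `nova-status upgrade check` text.

    Hypothetical helper: it assumes the "| Check: ... |" / "| Result: ... |"
    row layout shown in the transcript above.
    """
    results = {}
    current = None
    for line in output.splitlines():
        # Warnings, borders, and "Details" rows do not match and are skipped.
        match = re.match(r"\|\s*(Check|Result):\s*(.*?)\s*\|", line.strip())
        if not match:
            continue
        key, value = match.groups()
        if key == "Check":
            current = value
        elif current is not None:
            results[current] = value
    return results


SAMPLE = """\
+---------------------------+
| Upgrade Check Results |
+---------------------------+
| Check: Cells v2 |
| Result: Success |
| Details: None |
+---------------------------+
| Check: Placement API |
| Result: Success |
| Details: None |
+---------------------------+
"""

checks = parse_upgrade_checks(SAMPLE)
failed = [name for name, result in checks.items() if result != "Success"]
print("failed checks:", failed)
```

In practice the text would come from running the command on the controller. Any entry in `failed` means that check needs attention before continuing.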

Nova reference: https://docs.openstack.org/nova/queens/admin/index.html

oslo_config.cfg.ConfigFilesNotFoundError: Failed to find some config files: /etc/neutron/plugin.ini

[Answer]

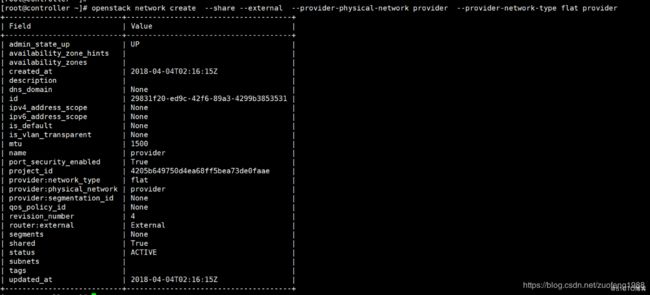

Install and configure Neutron networking on the controller node

1. Create the neutron database and grant privileges

mysql -u root -p

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY '123456';

2. Create the service credentials

. admin-openrc

openstack user create --domain default --password-prompt neutron

Add the admin role to the neutron user

openstack role add --project service --user neutron admin

Create the neutron service entity

openstack service create --name neutron --description "OpenStack Networking" network

3. Create the Networking service API endpoints

openstack endpoint create --region RegionOne network public http://controller:9696

openstack endpoint create --region RegionOne network internal http://controller:9696

openstack endpoint create --region RegionOne network admin http://controller:9696
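The three endpoint commands differ only in the interface name, so a typo in one URL is easy to miss. As a minimal sketch, the commands can be generated from a single URL. The helper name `endpoint_commands` is hypothetical; the default region and the URL are the values used in this guide. It only formats strings and does not call the CLI.

```python
def endpoint_commands(service_type, url, region="RegionOne"):
    """Build one `openstack endpoint create` command per interface.

    Hypothetical helper: it only formats strings and does not run anything.
    """
    return [
        "openstack endpoint create --region {} {} {} {}".format(
            region, service_type, interface, url)
        for interface in ("public", "internal", "admin")
    ]


for command in endpoint_commands("network", "http://controller:9696"):
    print(command)
```

Because every command uses the same `url` argument, the public, internal, and admin endpoints cannot drift apart.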

Configure networking (controller node)

1. Install the components

yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables

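[Note]

An analysis of the ebtables/iptables implementation and commands.

ebtables and iptables are both Linux configuration tools for netfilter. They configure packet filtering and packet modification rules at several key hook points in the link layer and the network layer.

ebtables focuses on the link layer: VLANs, MAC addresses, and frame traffic.

iptables focuses on IP-layer information and layer-4 port information.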

2. Configure the server component: edit /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

core_plugin = ml2

service_plugins =

transport_url = rabbit://openstack:123456@controller

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[database]

connection = mysql+pymysql://neutron:123456@controller/neutron

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[nova]

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = 123456

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp
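A missing option in neutron.conf usually shows up only when the service fails to start, so it can help to check the file first. The sketch below is one way to do that with the Python standard library, under the assumption that the file is plain INI that `configparser` can read. The helper name `missing_options` and the `REQUIRED` table are hypothetical and list only options that this guide sets.

```python
import configparser

# Options that this guide sets in the controller's neutron.conf.
REQUIRED = {
    "DEFAULT": ["auth_strategy", "core_plugin", "transport_url"],
    "database": ["connection"],
    "keystone_authtoken": ["auth_url", "username", "password"],
    "nova": ["auth_url", "username", "password"],
    "oslo_concurrency": ["lock_path"],
}


def missing_options(conf_text, required=REQUIRED):
    """Return "section/option" strings that are absent from conf_text."""
    # default_section is renamed so that [DEFAULT] is treated as an ordinary
    # section instead of being merged into every other section.
    parser = configparser.ConfigParser(
        default_section="__unused__", interpolation=None, strict=False)
    parser.read_string(conf_text)
    missing = []
    for section, options in required.items():
        for option in options:
            if not parser.has_option(section, option):
                missing.append("{}/{}".format(section, option))
    return missing


SAMPLE = """\
[DEFAULT]
auth_strategy = keystone
core_plugin = ml2
service_plugins =

[database]
connection = mysql+pymysql://neutron:123456@controller/neutron
"""

print(missing_options(SAMPLE))
```

To check a real file, read its contents and pass the text to `missing_options`. An empty list means every option in `REQUIRED` is present. The values themselves are not validated.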

Configure the Modular Layer 2 (ML2) plug-in

Edit /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan

tenant_network_types =

mechanism_drivers = linuxbridge

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[securitygroup]

enable_ipset = true

Configure the Linux bridge agent

Edit /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:ens6f0

[Change] On these hosts the provider interface is eno1, so use: physical_interface_mappings = provider:eno1

[vxlan]

enable_vxlan = false

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
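The `physical_interface_mappings` value must name an interface that exists on the host, which is why the interface name is changed above. A minimal sketch of that check follows. Both helper names are hypothetical, and the lookup under `/sys/class/net` assumes a Linux host.

```python
import os


def parse_interface_mappings(value):
    """Parse "physnet:interface[,physnet:interface...]" into a dict."""
    mappings = {}
    for item in value.split(","):
        if not item.strip():
            continue
        physnet, sep, interface = item.partition(":")
        if not sep or not physnet.strip() or not interface.strip():
            raise ValueError("malformed mapping: {!r}".format(item))
        mappings[physnet.strip()] = interface.strip()
    return mappings


def missing_interfaces(mappings, sysfs_net="/sys/class/net"):
    """Return mapped interfaces that have no entry under sysfs_net."""
    return [iface for iface in mappings.values()
            if not os.path.exists(os.path.join(sysfs_net, iface))]


mappings = parse_interface_mappings("provider:eno1")
print(mappings, missing_interfaces(mappings))
```

Run on the node itself, a non-empty second value means the mapped interface name does not match any interface reported by `ip a`.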

Configure the DHCP agent

Edit /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

Configure the metadata agent

Edit /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host = controller

metadata_proxy_shared_secret = 123456

Configure the Compute service to use the Networking service

Edit /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123456

service_metadata_proxy = true

metadata_proxy_shared_secret = 123456
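The `metadata_proxy_shared_secret` value in /etc/nova/nova.conf has to be identical to the one in /etc/neutron/metadata_agent.ini. The sketch below compares the two values. The helper names `read_option` and `secrets_match` are hypothetical, and the sample text reuses the values from this guide.

```python
import configparser


def read_option(conf_text, section, option):
    """Return the option value from INI-style text, or None if absent."""
    # [DEFAULT] is treated as an ordinary section (see default_section).
    parser = configparser.ConfigParser(
        default_section="__unused__", interpolation=None, strict=False)
    parser.read_string(conf_text)
    if parser.has_option(section, option):
        return parser.get(section, option)
    return None


def secrets_match(metadata_agent_text, nova_conf_text):
    """True when both texts define the same metadata_proxy_shared_secret."""
    agent = read_option(
        metadata_agent_text, "DEFAULT", "metadata_proxy_shared_secret")
    nova = read_option(
        nova_conf_text, "neutron", "metadata_proxy_shared_secret")
    return agent is not None and agent == nova


AGENT = """\
[DEFAULT]
nova_metadata_host = controller
metadata_proxy_shared_secret = 123456
"""

NOVA = """\
[neutron]
service_metadata_proxy = true
metadata_proxy_shared_secret = 123456
"""

print(secrets_match(AGENT, NOVA))
```

If the two values differ, or one is missing, the function returns False. That is a reason to compare the two files before restarting the services.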

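Finalize installation

1. Create the symbolic link /etc/neutron/plugin.ini that points to the ML2 plug-in configuration file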

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

2. Populate the database

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

3. Restart the Compute API service

systemctl restart openstack-nova-api.service

4. Enable the Networking services to start at boot, then start them

systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

Configure the Networking service on the compute node

1. Install the components

yum install openstack-neutron-linuxbridge ebtables ipset

2. Configure the common component

Edit /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

transport_url = rabbit://openstack:123456@controller

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Configure networking

1. Configure the Linux bridge agent: edit /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:ens6f0

[Change] On these hosts the provider interface is eno1, so use: physical_interface_mappings = provider:eno1

[vxlan]

enable_vxlan = false

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Configure the Compute service on the compute node to use the Networking service

Edit /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123456

Finalize installation

1. Restart the Compute service

systemctl restart openstack-nova-compute.service

2. Enable the Linux bridge agent to start at boot, then start it

systemctl enable neutron-linuxbridge-agent.service

systemctl start neutron-linuxbridge-agent.service

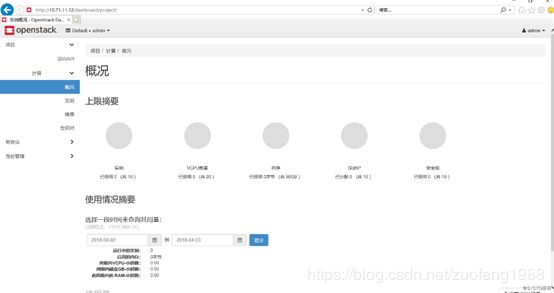

Install the Horizon service on the controller node

1. Install the package

yum install openstack-dashboard -y

Edit /etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*']

Configure memcached session storage

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

    'default': {

        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

        'LOCATION': 'controller:11211',

    }

}

Enable version 3 of the Identity API

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

Enable support for domains

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

Configure the API versions

OPENSTACK_API_VERSIONS = {

    "identity": 3,

    "image": 2,

    "volume": 2,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

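Because this deployment uses provider networks only, disable support for layer-3 networking services: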

OPENSTACK_NEUTRON_NETWORK = {

    'enable_router': False,

    'enable_quotas': False,

    'enable_distributed_router': False,

    'enable_ha_router': False,

    'enable_lb': False,

    'enable_firewall': False,

    'enable_vpn': False,

    'enable_fip_topology_check': False,

}

2. Finalize installation: restart the web server and the session storage service

systemctl restart httpd.service memcached.service
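After the restart, a quick TCP check can confirm that the services are listening before opening the dashboard. This is a minimal sketch: the helper names `port_open` and `report` are hypothetical, ports 11211 and 9696 come from the configuration in this guide, and port 80 is an assumption based on the dashboard URL carrying no explicit port.

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


# Ports taken from this guide; 80 is assumed because the dashboard URL
# gives no explicit port.
SERVICES = {"httpd": 80, "memcached": 11211, "neutron-server": 9696}


def report(host, services=SERVICES):
    """Map each service name to whether its port accepts connections."""
    return {name: port_open(host, port) for name, port in services.items()}
```

Calling `report("controller")` from any node returns a dictionary such as `{"httpd": ..., "memcached": ..., "neutron-server": ...}`. A False entry only says the port did not accept a connection, so the service logs are still the place to look for the cause.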

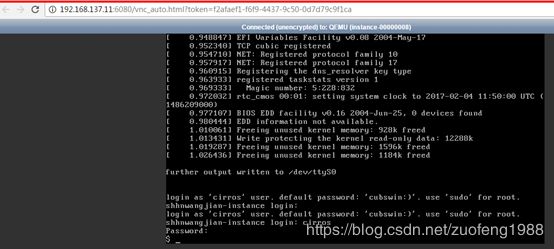

Open http://10.8.52.202/dashboard in a browser to access the OpenStack web interface.

Log in with:

Domain: default

User name/password: admin/123456

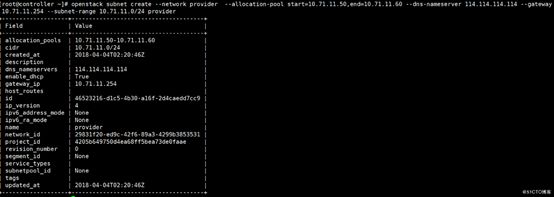

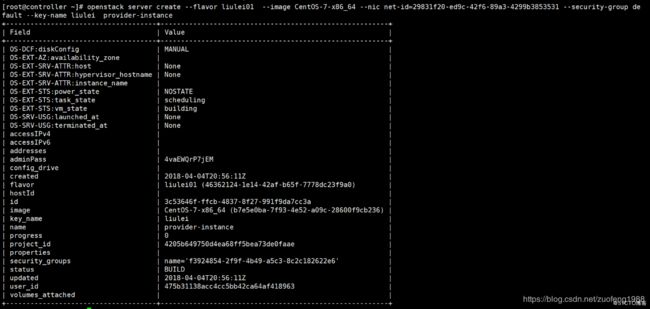

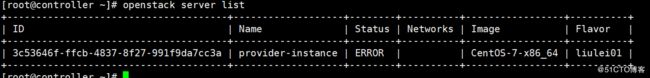

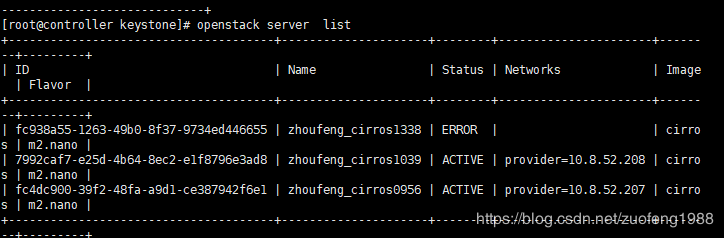

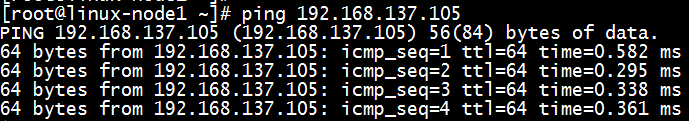

Demo/Root