Notes on a Server with 1 Million Concurrent Connections: How Does Java Netty Handle 1M Connections?

Reposted from: http://www.blogjava.net/yongboy/archive/2013/05/13/399203.html

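Foreword

Every language tends to behave differently under extreme conditions. So how does Java, the language I use most, hold up at one million concurrent connections? I was curious, and more than a little hopeful.

This time I used the Netty NIO framework (netty-3.6.5.Final), which I use regularly and find comfortable to work with. It is well encapsulated, has a comprehensive API and, as its current domain name netty.io suggests, focuses on network I/O.

There is nothing technically deep in the whole process, and a shallow analysis only makes it drier. Brace yourself and push through.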

Test server configuration

The server runs in VMware Workstation 9 on 64-bit CentOS 6.2, with roughly 14.9 GB of memory and 4 cores allocated.

Java 7 is installed:

    java version "1.7.0_21"
    Java(TM) SE Runtime Environment (build 1.7.0_21-b11)
    Java HotSpot(TM) 64-Bit Server VM (build 23.21-b01, mixed mode)

Test client

The test client is the same program as before. The source of client5.c can be found in the earlier posts of this series.

Add the following settings to /etc/sysctl.conf:

    fs.file-max = 1048576
    net.ipv4.ip_local_port_range = 1024 65535
    net.ipv4.tcp_mem = 786432 2097152 3145728
    net.ipv4.tcp_rmem = 4096 4096 16777216
    net.ipv4.tcp_wmem = 4096 4096 16777216
    net.ipv4.tcp_tw_reuse = 1
    net.ipv4.tcp_tw_recycle = 1
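To make the numbers in these settings more concrete, here is a small back-of-the-envelope sketch. It is not part of the original test code: the class name `SysctlMath` is hypothetical, the 4 KiB page size is an assumption (tcp_mem is counted in pages), and the round figure of 1,000,000 connections is used only for illustration. It shows how many local ports one client IP gets from ip_local_port_range, how many client source IPs would therefore be needed to reach one server address and port, and what the tcp_mem thresholds amount to in MiB.

```java
/** Back-of-the-envelope numbers derived from the sysctl values above. */
class SysctlMath {
    /** Number of local ports in an inclusive ip_local_port_range. */
    static int portsPerClientIp(int low, int high) {
        return high - low + 1;
    }

    /** Minimum number of client source IPs needed to open `connections` to one server ip:port. */
    static long clientIpsNeeded(long connections, int portsPerIp) {
        return (connections + portsPerIp - 1) / portsPerIp; // ceiling division
    }

    /** Converts a tcp_mem value (counted in pages) to MiB for a given page size. */
    static long pagesToMiB(long pages, int pageSizeBytes) {
        return pages * pageSizeBytes / (1024L * 1024L);
    }

    public static void main(String[] args) {
        int ports = portsPerClientIp(1024, 65535);
        System.out.println("ports per client IP: " + ports);
        System.out.println("client IPs for 1,000,000 connections: "
                + clientIpsNeeded(1000000L, ports));

        int pageSize = 4096; // assumption: 4 KiB pages
        for (long pages : new long[] {786432L, 2097152L, 3145728L}) {
            System.out.println("tcp_mem " + pages + " pages = "
                    + pagesToMiB(pages, pageSize) + " MiB");
        }
    }
}
```

Under these assumptions a single client address cannot open much more than 64K connections to the same server port, which is why a test like this needs the client load spread over several source IPs.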

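Server program

This one is also very simple and has no business logic: the client sends an HTTP request, and the server responds with chunked-encoded content.

The entry point, HttpChunkedServer.java: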

    package com.test.server;

    import static org.jboss.netty.channel.Channels.pipeline;

    import java.net.InetSocketAddress;
    import java.util.concurrent.Executors;

    import org.jboss.netty.bootstrap.ServerBootstrap;
    import org.jboss.netty.channel.ChannelPipeline;
    import org.jboss.netty.channel.ChannelPipelineFactory;
    import org.jboss.netty.channel.socket.nio.NioServerSocketChannelFactory;
    import org.jboss.netty.handler.codec.http.HttpChunkAggregator;
    import org.jboss.netty.handler.codec.http.HttpRequestDecoder;
    import org.jboss.netty.handler.codec.http.HttpResponseEncoder;
    import org.jboss.netty.handler.stream.ChunkedWriteHandler;

    public class HttpChunkedServer {
        private final int port;

        public HttpChunkedServer(int port) {
            this.port = port;
        }

        public void run() {
            // Configure the server.
            ServerBootstrap bootstrap = new ServerBootstrap(
                    new NioServerSocketChannelFactory(
                            Executors.newCachedThreadPool(),
                            Executors.newCachedThreadPool()));

            // Set up the event pipeline factory.
            bootstrap.setPipelineFactory(new ChannelPipelineFactory() {
                public ChannelPipeline getPipeline() throws Exception {
                    ChannelPipeline pipeline = pipeline();

                    pipeline.addLast("decoder", new HttpRequestDecoder());
                    pipeline.addLast("aggregator", new HttpChunkAggregator(65536));
                    pipeline.addLast("encoder", new HttpResponseEncoder());
                    pipeline.addLast("chunkedWriter", new ChunkedWriteHandler());
                    pipeline.addLast("handler", new HttpChunkedServerHandler());

                    return pipeline;
                }
            });

            bootstrap.setOption("child.reuseAddress", true);
            bootstrap.setOption("child.tcpNoDelay", true);
            bootstrap.setOption("child.keepAlive", true);

            // Bind and start to accept incoming connections.
            bootstrap.bind(new InetSocketAddress(port));
        }

        public static void main(String[] args) {
            int port;
            if (args.length > 0) {
                port = Integer.parseInt(args[0]);
            } else {
                port = 8080;
            }

            System.out.format("server start with port %d \n", port);
            new HttpChunkedServer(port).run();
        }
    }

The only custom handler, HttpChunkedServerHandler.java:

    package com.test.server;

    import static org.jboss.netty.handler.codec.http.HttpHeaders.Names.CONTENT_TYPE;
    import static org.jboss.netty.handler.codec.http.HttpMethod.GET;
    import static org.jboss.netty.handler.codec.http.HttpResponseStatus.BAD_REQUEST;
    import static org.jboss.netty.handler.codec.http.HttpResponseStatus.METHOD_NOT_ALLOWED;
    import static org.jboss.netty.handler.codec.http.HttpResponseStatus.OK;
    import static org.jboss.netty.handler.codec.http.HttpVersion.HTTP_1_1;

    import java.util.concurrent.atomic.AtomicInteger;

    import org.jboss.netty.buffer.ChannelBuffer;
    import org.jboss.netty.buffer.ChannelBuffers;
    import org.jboss.netty.channel.Channel;
    import org.jboss.netty.channel.ChannelFutureListener;
    import org.jboss.netty.channel.ChannelHandlerContext;
    import org.jboss.netty.channel.ChannelStateEvent;
    import org.jboss.netty.channel.ExceptionEvent;
    import org.jboss.netty.channel.MessageEvent;
    import org.jboss.netty.channel.SimpleChannelUpstreamHandler;
    import org.jboss.netty.handler.codec.frame.TooLongFrameException;
    import org.jboss.netty.handler.codec.http.DefaultHttpChunk;
    import org.jboss.netty.handler.codec.http.DefaultHttpResponse;
    import org.jboss.netty.handler.codec.http.HttpChunk;
    import org.jboss.netty.handler.codec.http.HttpHeaders;
    import org.jboss.netty.handler.codec.http.HttpRequest;
    import org.jboss.netty.handler.codec.http.HttpResponse;
    import org.jboss.netty.handler.codec.http.HttpResponseStatus;
    import org.jboss.netty.util.CharsetUtil;

    public class HttpChunkedServerHandler extends SimpleChannelUpstreamHandler {
        // Number of clients currently online, shared by all handler instances.
        private static final AtomicInteger count = new AtomicInteger(0);

        private void increment() {
            System.out.format("online user %d\n", count.incrementAndGet());
        }

        private void decrement() {
            if (count.get() <= 0) {
                System.out.format("~online user %d\n", 0);
            } else {
                System.out.format("~online user %d\n", count.decrementAndGet());
            }
        }

        @Override
        public void messageReceived(ChannelHandlerContext ctx, MessageEvent e)
                throws Exception {
            HttpRequest request = (HttpRequest) e.getMessage();
            if (request.getMethod() != GET) {
                sendError(ctx, METHOD_NOT_ALLOWED);
                return;
            }

            sendPrepare(ctx);
            increment();
        }

        @Override
        public void channelDisconnected(ChannelHandlerContext ctx,
                ChannelStateEvent e) throws Exception {
            decrement();
            super.channelDisconnected(ctx, e);
        }

        @Override
        public void exceptionCaught(ChannelHandlerContext ctx, ExceptionEvent e)
                throws Exception {
            Throwable cause = e.getCause();
            if (cause instanceof TooLongFrameException) {
                sendError(ctx, BAD_REQUEST);
                return;
            }
        }

        private static void sendError(ChannelHandlerContext ctx,
                HttpResponseStatus status) {
            HttpResponse response = new DefaultHttpResponse(HTTP_1_1, status);
            response.setHeader(CONTENT_TYPE, "text/plain; charset=UTF-8");
            response.setContent(ChannelBuffers.copiedBuffer(
                    "Failure: " + status.toString() + "\r\n", CharsetUtil.UTF_8));

            // Close the connection as soon as the error message is sent.
            ctx.getChannel().write(response)
                    .addListener(ChannelFutureListener.CLOSE);
        }

        private void sendPrepare(ChannelHandlerContext ctx) {
            HttpResponse response = new DefaultHttpResponse(HTTP_1_1, OK);
            response.setChunked(true);
            response.setHeader(HttpHeaders.Names.CONTENT_TYPE,
                    "text/html; charset=UTF-8");
            response.addHeader(HttpHeaders.Names.CONNECTION,
                    HttpHeaders.Values.KEEP_ALIVE);
            response.setHeader(HttpHeaders.Names.TRANSFER_ENCODING,
                    HttpHeaders.Values.CHUNKED);

            Channel chan = ctx.getChannel();
            chan.write(response);

            // The buffer must be padded to 256 bytes before the browser will receive it properly ...
            StringBuilder builder = new StringBuilder();
            builder.append("");

            int leftChars = 256 - builder.length();
            for (int i = 0; i < leftChars; i++) {
                builder.append(" ");
            }

            writeStringChunk(chan, builder.toString());
        }

        private void writeStringChunk(Channel channel, String data) {
            ChannelBuffer chunkContent = ChannelBuffers.dynamicBuffer(
                    channel.getConfig().getBufferFactory());
            chunkContent.writeBytes(data.getBytes());

            HttpChunk chunk = new DefaultHttpChunk(chunkContent);
            channel.write(chunk);
        }
    }
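One detail of the handler is worth a closer look. Its `decrement()` method reads the counter with `get()` and then calls `decrementAndGet()` as a separate step, so two I/O threads handling disconnects at the same moment could both pass the check and push the counter below zero. The following is a minimal sketch of a clamp-at-zero counter that performs the check and the update as one atomic step with a compare-and-set loop. The class `OnlineCounter` is hypothetical and is not part of the original test code.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical helper: a connection counter that never drops below zero. */
class OnlineCounter {
    private final AtomicInteger count = new AtomicInteger(0);

    int increment() {
        return count.incrementAndGet();
    }

    /** Decrements unless already at zero; check and update happen atomically. */
    int decrement() {
        while (true) {
            int current = count.get();
            if (current <= 0) {
                return 0;
            }
            if (count.compareAndSet(current, current - 1)) {
                return current - 1;
            }
            // Another thread changed the value in between; retry.
        }
    }

    int get() {
        return count.get();
    }

    public static void main(String[] args) throws InterruptedException {
        final OnlineCounter counter = new OnlineCounter();
        for (int i = 0; i < 1000; i++) {
            counter.increment();
        }

        // Issue more decrements than increments from several threads at once.
        Thread[] workers = new Thread[8];
        for (int t = 0; t < workers.length; t++) {
            workers[t] = new Thread(new Runnable() {
                public void run() {
                    for (int i = 0; i < 500; i++) {
                        counter.decrement();
                    }
                }
            });
            workers[t].start();
        }
        for (Thread worker : workers) {
            worker.join();
        }
        System.out.println("online user " + counter.get());
    }
}
```

The loop avoids locks and stays compatible with Java 7, which lacks the `updateAndGet` convenience method added in later releases.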

Startup script start.sh:

    set CLASSPATH=.
    nohup java -server -Xmx6G -Xms6G -Xmn600M -XX:PermSize=50M -XX:MaxPermSize=50M -Xss256K -XX:+DisableExplicitGC -XX:SurvivorRatio=1 -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:+CMSParallelRemarkEnabled -XX:+UseCMSCompactAtFullCollection -XX:CMSFullGCsBeforeCompaction=0 -XX:+CMSClassUnloadingEnabled -XX:LargePageSizeInBytes=128M -XX:+UseFastAccessorMethods -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=80 -XX:SoftRefLRUPolicyMSPerMB=0 -XX:+PrintClassHistogram -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+PrintHeapAtGC -Xloggc:gc.log -Djava.ext.dirs=lib com.test.server.HttpChunkedServer 8000 >server.out 2>&1 &
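With this many flags on one command line, it can be handy to confirm from inside the process what the JVM actually received. The sketch below is an illustration only and is not part of the original test code. The class name `JvmSettings` is hypothetical, and it relies only on the standard `Runtime` and `java.lang.management` APIs. Note that the maximum heap reported by `Runtime.maxMemory()` may come out somewhat lower than the `-Xmx` value, depending on the collector.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

/** Hypothetical helper: reports the heap limit and the flags the JVM was started with. */
class JvmSettings {
    /** Maximum heap the JVM will attempt to use, in MB. */
    static long maxHeapMb() {
        return Runtime.getRuntime().maxMemory() / (1024L * 1024L);
    }

    /** JVM options such as -Xmx6G or -XX:+UseConcMarkSweepGC, as passed on the command line. */
    static List<String> jvmArguments() {
        return ManagementFactory.getRuntimeMXBean().getInputArguments();
    }

    public static void main(String[] args) {
        System.out.println("max heap: " + maxHeapMb() + " MB");
        for (String arg : jvmArguments()) {
            System.out.println("jvm arg:  " + arg);
        }
    }
}
```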

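Observations at 1 million concurrent connections

Each time the server reached one million concurrent persistent connections, I shut down the test clients to drop every connection, waited until the server log reported 0 online users, and then repeated the cycle. Memory and other metrics were observed across these repeated runs. As an example, after one such disconnect of all test clients, system memory usage was as follows (call this list_free_1):

                 total       used       free     shared    buffers     cached
    Mem:         15189       7736       7453          0         18        120
    -/+ buffers/cache:       7597       7592
    Swap:         4095        948       3147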

Process information as seen in top:

      PID USER      PR  NI  VIRT  RES  SHR S %CPU %MEM    TIME+  COMMAND
     4925 root      20   0 8206m 4.3g 2776 S  0.3 28.8  50:18.66 java

In the startup script start.sh, the heap is set to 6 GB.

Output of ps aux|grep java:

    root 4925 38.0 28.8 8403444 4484764 ? Sl 15:26 50:18 java -server...HttpChunkedServer 8000

RSS memory usage is 4484764 KB / 1024 = 4379 MB.

Then I started the test clients again. When the server logged online user 1023749, ps aux|grep java showed:

    root 4925 43.6 28.4 8403444 4422824 ? Sl 15:26 62:53 java -server...

Current network statistics:

    ss -s
    Total: 1024050 (kernel 1024084)
    TCP:   1023769 (estab 1023754, closed 2, orphaned 0, synrecv 0, timewait 0/0), ports 12

    Transport Total     IP        IPv6
    *         1024084   -         -
    RAW       0         0         0
    UDP       7         6         1
    TCP       1023767   12        1023755
    INET      1023774   18        1023756
    FRAG      0         0         0

A look via top:

    top -p 4925
    top - 17:51:30 up  3:02,  4 users,  load average: 1.03, 1.80, 1.19
    Tasks:   1 total,   0 running,   1 sleeping,   0 stopped,   0 zombie
    Cpu0  :  0.9%us,  2.6%sy,  0.0%ni, 52.9%id,  1.0%wa, 13.6%hi, 29.0%si,  0.0%st
    Cpu1  :  1.4%us,  4.5%sy,  0.0%ni, 80.1%id,  1.9%wa,  0.0%hi, 12.0%si,  0.0%st
    Cpu2  :  1.5%us,  4.4%sy,  0.0%ni, 80.5%id,  4.3%wa,  0.0%hi,  9.3%si,  0.0%st
    Cpu3  :  1.9%us,  4.4%sy,  0.0%ni, 84.4%id,  3.2%wa,  0.0%hi,  6.2%si,  0.0%st
    Mem:  15554336k total, 15268728k used,   285608k free,     3904k buffers
    Swap:  4194296k total,  1082592k used,  3111704k free,    37968k cached

      PID USER      PR  NI  VIRT  RES  SHR S %CPU %MEM    TIME+  COMMAND
     4925 root      20   0 8206m 4.2g 2220 S  3.3 28.4  62:53.66 java

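All four cores are in use, though the load is not spread evenly across them. These results come from a virtual machine, so a physical server would probably do better. Since this is not a CPU-bound application, CPU is not the bottleneck and needs no further attention.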

System memory status:

    free -m
                 total       used       free     shared    buffers     cached
    Mem:         15189      14926        263          0          5         56
    -/+ buffers/cache:      14864        324
    Swap:         4095       1057       3038

Physical memory is no longer sufficient: 1057 MB of swap is in use.

Heap memory status:

    jmap -heap 4925
    Attaching to process ID 4925, please wait...
    Debugger attached successfully.
    Server compiler detected.
    JVM version is 23.21-b01

    using parallel threads in the new generation.
    using thread-local object allocation.
    Concurrent Mark-Sweep GC

    Heap Configuration:
       MinHeapFreeRatio = 40
       MaxHeapFreeRatio = 70
       MaxHeapSize      = 6442450944 (6144.0MB)
       NewSize          = 629145600 (600.0MB)
       MaxNewSize       = 629145600 (600.0MB)
       OldSize          = 5439488 (5.1875MB)
       NewRatio         = 2
       SurvivorRatio    = 1
       PermSize         = 52428800 (50.0MB)
       MaxPermSize      = 52428800 (50.0MB)
       G1HeapRegionSize = 0 (0.0MB)

    Heap Usage:
    New Generation (Eden + 1 Survivor Space):
       capacity = 419430400 (400.0MB)
       used     = 308798864 (294.49354553222656MB)
       free     = 110631536 (105.50645446777344MB)
       73.62338638305664% used
    Eden Space:
       capacity = 209715200 (200.0MB)
       used     = 103375232 (98.5863037109375MB)
       free     = 106339968 (101.4136962890625MB)
       49.29315185546875% used
    From Space:
       capacity = 209715200 (200.0MB)
       used     = 205423632 (195.90724182128906MB)
       free     = 4291568 (4.0927581787109375MB)
       97.95362091064453% used
    To Space:
       capacity = 209715200 (200.0MB)
       used     = 0 (0.0MB)
       free     = 209715200 (200.0MB)
       0.0% used
    concurrent mark-sweep generation:
       capacity = 5813305344 (5544.0MB)
       used     = 4213515472 (4018.321487426758MB)
       free     = 1599789872 (1525.6785125732422MB)
       72.48054631000646% used
    Perm Generation:
       capacity = 52428800 (50.0MB)
       used     = 5505696 (5.250640869140625MB)
       free     = 46923104 (44.749359130859375MB)
       10.50128173828125% used

    1439 interned Strings occupying 110936 bytes.

The old generation is 72% full, which is reasonable given that the system is holding one million connections.
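The generation sizes reported by jmap follow directly from three of the flags in start.sh: -Xmx6G, -Xmn600M and -XX:SurvivorRatio=1. As a worked example, the sketch below redoes that arithmetic. The class `HeapLayout` is hypothetical, and the formula assumes the usual HotSpot meaning of SurvivorRatio (the size of Eden divided by the size of one survivor space) and ignores any alignment rounding the JVM may apply.

```java
/** Hypothetical helper: derives generation sizes from -Xmx, -Xmn and -XX:SurvivorRatio. */
class HeapLayout {
    /** Size of one survivor space; the young generation holds Eden plus two survivors. */
    static long survivorMb(long youngMb, int survivorRatio) {
        return youngMb / (survivorRatio + 2);
    }

    static long edenMb(long youngMb, int survivorRatio) {
        return youngMb - 2 * survivorMb(youngMb, survivorRatio);
    }

    /** Whatever is not young generation is left for the old (CMS) generation. */
    static long oldMb(long heapMb, long youngMb) {
        return heapMb - youngMb;
    }

    static double percentUsed(long usedBytes, long capacityBytes) {
        return 100.0 * usedBytes / capacityBytes;
    }

    public static void main(String[] args) {
        long heapMb = 6 * 1024; // -Xmx6G
        long youngMb = 600;     // -Xmn600M
        int ratio = 1;          // -XX:SurvivorRatio=1

        System.out.println("eden     = " + edenMb(youngMb, ratio) + " MB");
        System.out.println("survivor = " + survivorMb(youngMb, ratio) + " MB (each)");
        System.out.println("old      = " + oldMb(heapMb, youngMb) + " MB");
        // Old-generation usage, from the byte counts in the jmap output.
        System.out.printf("old used = %.2f%%%n", percentUsed(4213515472L, 5813305344L));
    }
}
```

The results, 200 MB for Eden and for each survivor space and 5544 MB for the old generation, agree with the capacities in the jmap output. With SurvivorRatio=1, one third of the young generation (the To space) is always empty.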

After disconnecting all test clients again, system memory (free -m) looks like this:

                 total       used       free     shared    buffers     cached
    Mem:         15189       7723       7466          0         13        120
    -/+ buffers/cache:       7589       7599
    Swap:         4095        950       3145

Call this list_free_2.

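Comparing list_free_1 and list_free_2, both taken after all connections had been released, the physical memory in use (the -/+ buffers/cache row) is now 7589 MB, versus 7597 MB before.

In short, in terms of memory footprint, our Java test program needs at least 7589 + 950 = 8539 MB (about 8.3 GB) of memory.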
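The same free -m readings also allow a rough estimate of what each connection costs the system as a whole. The sketch below is only an estimate under stated assumptions: it takes the peak reading (14864 MB used plus 1057 MB of swap) and the reading after release (7589 MB used plus 950 MB of swap), attributes the entire difference to the 1023749 connections logged by the server, and treats swap and physical memory alike. The class `PerConnectionMemory` is hypothetical.

```java
/** Hypothetical helper: rough system-wide memory cost per connection. */
class PerConnectionMemory {
    /** Memory delta between peak and idle, spread over all connections, in KiB. */
    static double kibPerConnection(long peakMb, long idleMb, long connections) {
        return (peakMb - idleMb) * 1024.0 / connections;
    }

    public static void main(String[] args) {
        long peakMb = 14864 + 1057; // used (-/+ buffers/cache) + swap at ~1M connections
        long idleMb = 7589 + 950;   // same figures after all clients disconnected
        long connections = 1023749; // "online user" count logged by the server

        System.out.println("delta: " + (peakMb - idleMb) + " MB");
        System.out.printf("per connection: %.2f KiB%n",
                kibPerConnection(peakMb, idleMb, connections));
    }
}
```

Because the RSS of the Java process barely changes between the two states (4.3g versus 4.2g in top), most of this difference probably sits outside the JVM heap, for example in kernel socket buffers. That reading is an interpretation of the figures above rather than something measured directly.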

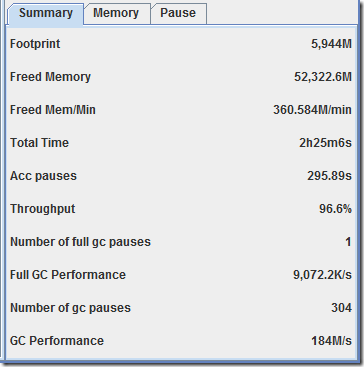

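GC log

Whether the long list of parameters set in the startup script actually achieved its goal has to be judged from the GC log. GCViewer is recommended for this.

In summary:

- Only one Full GC occurred, but it was very expensive: a 12-second pause.

- ParNew was the main source of pauses, stalling the whole system for 41 seconds, which is unacceptable.

- The current JVM tuning is a mixed bag and needs more work.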
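As a complement to reading gc.log offline, the running JVM also exposes cumulative collection counts and times through the standard `java.lang.management` API. The following is a minimal sketch rather than the method used in the original test. The class `GcStats` is hypothetical, the collector names it prints depend on which collectors the JVM is running, and the reported time is the accumulated collection time per collector, which for a concurrent collector is not the same thing as stop-the-world pause time.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

/** Hypothetical helper: prints cumulative GC counts and times for the running JVM. */
class GcStats {
    static String summarize() {
        StringBuilder sb = new StringBuilder();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            sb.append(String.format("%s: collections=%d, total time=%d ms%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime()));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.print(summarize());
    }
}
```

Calling `summarize()` periodically, for instance next to the online user log line, would show how quickly GC time accumulates as connections ramp up.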

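Summary

Compared with Erlang and C, the troublesome part of Java is that you have to decide up front how much heap and stack space the program needs. In other words, the heap size and a suitable garbage collection strategy are set through JVM startup parameters, and if the program later needs more memory, you have to stop it, edit the startup parameters, and start it again. In a word: tedious. JVM tuning alone requires continual fine-tuning based on monitoring, runtime information, and logs.

- The JVM requires the heap size to be specified in advance, which can be a nuisance compared with Erlang/C.

- GC (garbage collection) is relatively troublesome: JVM parameters need continual fine-tuning based on logs, JVM heap and stack information, and runtime behavior.

- Set a target for the maximum number of connections, run repeated tests up to that peak, release all connections, and observe memory usage over the cycles to arrive at a reasonable figure for the memory the system needs.

- Eclipse Memory Analyzer combined with a heap dump exported by jmap makes analyzing memory leaks quite convenient.

- Modifying code at runtime, also known as hot loading, is not possible by default.

- Results should be better on a physical machine.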

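A gripe:

Java OSGi, compared with Erlang, requires a change of mindset. It is not native to the platform, so it always feels somewhat awkward. The patching done by the community and by commercial vendors merely delivers hot loading as an enterprise feature, something object-oriented languages do not offer on their own.

The test source code is available for download as just_test.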