DCGAN Tutorial Walkthrough


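- DCGAN
- URL
- Overview
- GAN Formulation
- DCGAN
- Imports
- Inputs
- Dataset
- Weight Initialization
- Generator
- Discriminator
- Loss Functions and Optimizers
- Training
  - Training the Discriminator
  - Training the Generator
- Results
  - Loss Comparison
  - Real vs. Fake Images
- References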

DCGAN

URL

DCGAN Tutorial

DCGAN Github

Overview

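Today I worked through the DCGAN Tutorial and came away impressed by how powerful this framework is. A GAN consists of two parts: a generator and a discriminator. The generator produces fake data, and the discriminator tries to tell fake data apart from real data. The generator keeps trying to make its fakes look real, while the discriminator keeps trying to catch them. Training has reached its ideal point when the discriminator can do no better than a coin flip, i.e. it judges the generator's output to be fake only 50% of the time.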

GAN Formulation

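Let $x$ be an input image and $D(x)$ the discriminator network, which outputs the (scalar) probability that $x$ came from the training data rather than from the generator. $D(x)$ should be high (close to 1) when $x$ comes from the training images and low (close to 0) when $x$ comes from the generator, so $D(x)$ can be viewed as an ordinary binary classifier. For the generator, let $z$ be a latent-space vector sampled from a standard normal distribution, and let $G(z)$ be the generator function that maps $z$ to data space. The goal of $G$ is to estimate the distribution the training data comes from ($p_{data}$), so that it can generate fake samples from that estimated distribution ($p_g$).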

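$D(G(z))$ is therefore the probability, as estimated by $D$, that the output of the generator $G$ is a real image. The GAN loss function, written as a minimax game between $D$ and $G$, is: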

$$\min_G \max_D V(D,G) = \mathbb{E}_{x\sim p_{data}(x)}\big[\log D(x)\big] + \mathbb{E}_{z\sim p_z(z)}\big[\log\big(1-D(G(z))\big)\big]$$

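In theory, the solution to this minimax game is reached when $p_g = p_{data}$, at which point the discriminator can only guess at random whether its input is real or fake. However, the convergence theory of GANs is still an active research topic, and in practice models do not always train to this point.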
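To make the "50%" claim concrete: for a fixed $G$, the original GAN paper shows that the best discriminator is $D^*(x) = p_{data}(x)/(p_{data}(x)+p_g(x))$. The sketch below evaluates this on a toy discrete distribution (the three-outcome distributions are made up purely for illustration), writing the expectation over $z$ as an expectation over $x \sim p_g$. When $p_g = p_{data}$, $D^*$ is 0.5 everywhere and $V$ equals $-\log 4$, its minimum over $G$; any mismatch gives a larger value.

```python
import math

def optimal_discriminator(p_data, p_g):
    # D*(x) = p_data(x) / (p_data(x) + p_g(x)), computed outcome by outcome.
    # Assumes every outcome has p_data(x) + p_g(x) > 0.
    return [pd / (pd + pg) for pd, pg in zip(p_data, p_g)]

def value(p_data, p_g, d):
    # V(D, G) = E_{x~p_data}[log D(x)] + E_{x~p_g}[log(1 - D(x))]
    # on a discrete space; zero-probability outcomes contribute nothing.
    real_term = sum(pd * math.log(dx) for pd, dx in zip(p_data, d) if pd > 0)
    fake_term = sum(pg * math.log(1 - dx) for pg, dx in zip(p_g, d) if pg > 0)
    return real_term + fake_term

p_data = [0.25, 0.25, 0.5]  # toy "real" distribution over three outcomes

# Case 1: the generator matches the data exactly.
d_star = optimal_discriminator(p_data, p_data)
print(d_star)                         # [0.5, 0.5, 0.5]
print(value(p_data, p_data, d_star))  # approximately -log(4) = -1.386...

# Case 2: a generator that puts its mass in the wrong places.
p_g = [0.5, 0.25, 0.25]
print(value(p_data, p_g, optimal_discriminator(p_data, p_g)))  # larger than -log(4)
```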

DCGAN

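DCGAN is a direct extension of the GAN described above, except that it explicitly uses convolutional layers in the discriminator and convolutional-transpose (often loosely called "deconvolution") layers in the generator. The discriminator is made up of strided convolution layers, batch norm layers and LeakyReLU activations. Its input is a $3\times64\times64$ RGB image and its output is a scalar probability that the input comes from the real data distribution. The generator is made up of convolutional-transpose layers, batch norm layers and ReLU activations. Its input is a latent vector $z$ drawn from a standard normal distribution and its output is a $3\times64\times64$ RGB image. Neither network uses pooling: the strides do the downsampling and upsampling, and the strided convolutional-transpose layers are what turn the latent vector $z$ into a volume with the same shape as an image. Below, DCGAN is trained on the CelebA dataset.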

Imports

```python
from __future__ import print_function
#%matplotlib inline
import argparse
import os
import random
import torch
import torch.nn as nn
import torch.nn.parallel
import torch.backends.cudnn as cudnn
import torch.optim as optim
import torch.utils.data
import torchvision.datasets as dset
import torchvision.transforms as transforms
import torchvision.utils as vutils
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.animation as animation
from IPython.display import HTML

# Set random seed for reproducibility
manualSeed = 999
#manualSeed = random.randint(1, 10000) # use if you want new results
print("Random Seed: ", manualSeed)
random.seed(manualSeed)
torch.manual_seed(manualSeed)
```

This part sets a random seed so that runs are reproducible. If you want a different result on every run, use `manualSeed = random.randint(1, 10000)` instead.

This code prints:

Out:

```
Random Seed: 999
```

Inputs

The Inputs section defines several variables that are used later in training:

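- `dataroot`: path to the root of the dataset folder; an absolute path is the safest choice.
- `workers`: number of worker processes the `DataLoader` uses to load data; more workers can speed up loading, up to the limits of your CPU and disk.
- `batch_size`: number of images processed per training step; 128 here, as in the DCGAN paper.
- `image_size`: spatial size of the training images. Every image is resized (and center-cropped) to this size during preprocessing; here it is 64. Changing it also requires changing the structure of D and G.
- `nc`: number of channels in the training images; color images have 3, so `nc = 3`.
- `nz`: length of the latent vector $z$ fed to the generator; 100 here.
- `ngf`: depth of the feature maps carried through the generator; 64 here.
- `ndf`: depth of the feature maps propagated through the discriminator; 64 here.
- `num_epochs`: number of training epochs; 5 here. Training longer (10, 15, 20, ...) will probably give better results but takes more time.
- `lr`: learning rate; 0.0002, as in the DCGAN paper.
- `beta1`: the beta1 hyperparameter of the Adam optimizers; 0.5, as in the DCGAN paper.
- `ngpu`: number of available GPUs; set it to 0 to run in CPU mode.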
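`ngf` and `ndf` are base channel widths rather than layer counts: the generator's hidden layers use `ngf*8, ngf*4, ngf*2, ngf` channels, and the discriminator mirrors this with `ndf, ndf*2, ndf*4, ndf*8`. The small sketch below only computes the resulting channel counts for the values used in this walkthrough; they should match the model printouts of the Generator and Discriminator sections.

```python
nz, ngf, ndf, nc = 100, 64, 64, 3  # values from the Inputs code

# Channel counts along the generator: latent vector -> ... -> RGB image.
generator_channels = [nz, ngf * 8, ngf * 4, ngf * 2, ngf, nc]
# Channel counts along the discriminator: RGB image -> ... -> single score.
discriminator_channels = [nc, ndf, ndf * 2, ndf * 4, ndf * 8, 1]

print(generator_channels)      # [100, 512, 256, 128, 64, 3]
print(discriminator_channels)  # [3, 64, 128, 256, 512, 1]
```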

The code is as follows:

```python
# Root directory for dataset
dataroot = "data/celeba"

# Number of workers for dataloader
workers = 2

# Batch size during training
batch_size = 128

# Spatial size of training images. All images will be resized to this
#   size using a transformer.
image_size = 64

# Number of channels in the training images. For color images this is 3
nc = 3

# Size of z latent vector (i.e. size of generator input)
nz = 100

# Size of feature maps in generator
ngf = 64

# Size of feature maps in discriminator
ndf = 64

# Number of training epochs
num_epochs = 5

# Learning rate for optimizers
lr = 0.0002

# Beta1 hyperparam for Adam optimizers
beta1 = 0.5

# Number of GPUs available. Use 0 for CPU mode.
ngpu = 1
```

Dataset

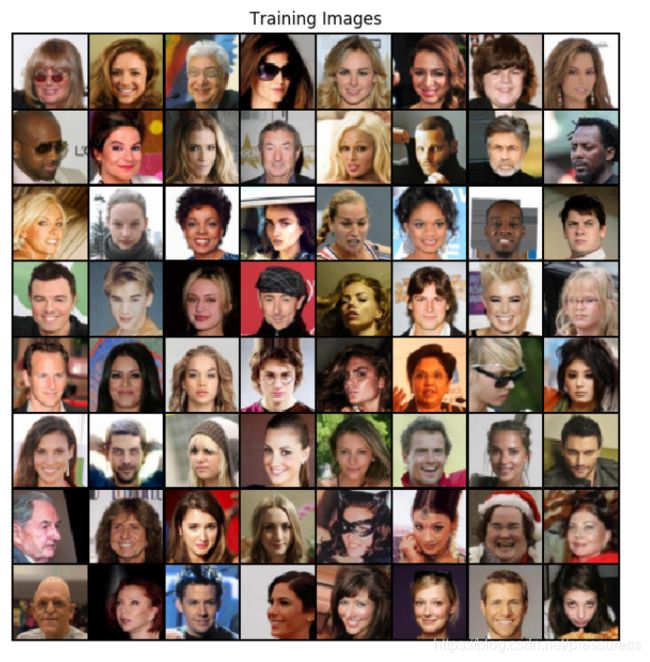

This tutorial trains DCGAN on the CelebA dataset, which can also be downloaded from [Google Drive]. The downloaded file is named `img_align_celeba.zip`. After extracting it, lay out `dataroot` as follows:

```
/path/to/celeba
    -> img_align_celeba
        -> 188242.jpg
        -> 173822.jpg
        -> 284702.jpg
        -> 537394.jpg
           ...
```

In other words, create a folder named `celeba`, extract `img_align_celeba.zip` into it, and set `dataroot` to `your/path/celeba`. The extra `img_align_celeba` subfolder matters because `ImageFolder` expects the root directory to contain one subdirectory per class; here all images simply belong to a single class.
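A quick way to catch a wrong `dataroot` before training is to check that it contains at least one subdirectory holding image files. The helper below is a hypothetical, stdlib-only sketch (`looks_like_imagefolder_root` is not a torchvision function); it only approximates what `ImageFolder` requires and assumes images end in common extensions.

```python
import os
import tempfile

IMAGE_EXTS = (".jpg", ".jpeg", ".png")  # assumed extensions, not torchvision's full list

def looks_like_imagefolder_root(dataroot):
    # Hypothetical helper: True if dataroot has at least one subdirectory
    # (one per class for ImageFolder) that contains an image file.
    if not os.path.isdir(dataroot):
        return False
    with os.scandir(dataroot) as entries:
        for entry in entries:
            if entry.is_dir():
                for name in os.listdir(entry.path):
                    if name.lower().endswith(IMAGE_EXTS):
                        return True
    return False

# Usage: build a tiny fake layout in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    good_root = os.path.join(tmp, "celeba")
    os.makedirs(os.path.join(good_root, "img_align_celeba"))
    open(os.path.join(good_root, "img_align_celeba", "000001.jpg"), "wb").close()
    print(looks_like_imagefolder_root(good_root))  # True
    # Pointing dataroot at the image folder itself is a common mistake.
    print(looks_like_imagefolder_root(os.path.join(good_root, "img_align_celeba")))  # False
```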

Preprocessing the images:

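The code below builds the dataset with the preprocessing pipeline (resize, center crop, conversion to a tensor and normalization), wraps it in a `DataLoader`, selects the device to run on, and plots a few training images.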
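In this pipeline, `transforms.ToTensor()` scales pixel values to $[0, 1]$, and `transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))` then computes `(x - mean) / std` per channel, which maps them to $[-1, 1]$: the same range as the generator's final `Tanh`. The plain-Python sketch below (the function names are illustrative only) shows that arithmetic and its inverse, which is what you would apply to turn a generated image back into displayable values.

```python
def normalize(x, mean=0.5, std=0.5):
    # Same per-value formula that transforms.Normalize applies to each channel.
    return (x - mean) / std

def denormalize(y, mean=0.5, std=0.5):
    # Inverse mapping, e.g. to turn a Tanh output back into [0, 1] for plotting.
    return y * std + mean

pixels = [0.0, 0.25, 0.5, 1.0]  # values as produced by ToTensor()
scaled = [normalize(p) for p in pixels]
print(scaled)                            # [-1.0, -0.5, 0.0, 1.0]
print([denormalize(s) for s in scaled])  # [0.0, 0.25, 0.5, 1.0]
```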

```python
# We can use an image folder dataset the way we have it setup.
# Create the dataset
dataset = dset.ImageFolder(root=dataroot,
                           transform=transforms.Compose([
                               transforms.Resize(image_size),
                               transforms.CenterCrop(image_size),
                               transforms.ToTensor(),
                               transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
                           ]))
# Create the dataloader
dataloader = torch.utils.data.DataLoader(dataset, batch_size=batch_size,
                                         shuffle=True, num_workers=workers)

# Decide which device we want to run on
device = torch.device("cuda:0" if (torch.cuda.is_available() and ngpu > 0) else "cpu")

# Plot some training images
real_batch = next(iter(dataloader))
plt.figure(figsize=(8, 8))
plt.axis("off")
plt.title("Training Images")
plt.imshow(np.transpose(vutils.make_grid(real_batch[0].to(device)[:64], padding=2, normalize=True).cpu(), (1, 2, 0)))
plt.show()
```

Weight Initialization

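Following the DCGAN paper, the model weights are initialized randomly from a normal distribution with mean 0 and standard deviation 0.02 (not variance 0.02). The `weights_init` function is applied to both the generator and the discriminator: it re-initializes the weights of every convolutional and convolutional-transpose layer this way, and initializes batch norm layers with weights drawn from a normal distribution with mean 1 and standard deviation 0.02 and with biases set to 0. There are no pooling layers to initialize.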
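`weights_init` decides what to do from the layer's class name using `str.find`, so `'Conv'` matches both `Conv2d` and `ConvTranspose2d`, while activation layers are left untouched. The plain-Python sketch below mirrors that dispatch for the layer types used in this tutorial without needing PyTorch; the helper name `init_rule` is hypothetical.

```python
def init_rule(classname):
    # Mirrors the branches of weights_init: which initialization a layer gets.
    if classname.find('Conv') != -1:
        return "weight ~ N(0, 0.02)"
    elif classname.find('BatchNorm') != -1:
        return "weight ~ N(1, 0.02), bias = 0"
    return "unchanged"

for name in ["Conv2d", "ConvTranspose2d", "BatchNorm2d", "ReLU",
             "LeakyReLU", "Tanh", "Sigmoid", "Sequential"]:
    print(f"{name:16s} -> {init_rule(name)}")
```

`Module.apply` also visits container modules such as `Sequential` and the model class itself; their names match neither branch, so they are skipped.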

The code is as follows:

```python
# custom weights initialization called on netG and netD
def weights_init(m):
    classname = m.__class__.__name__
    if classname.find('Conv') != -1:
        nn.init.normal_(m.weight.data, 0.0, 0.02)
    elif classname.find('BatchNorm') != -1:
        nn.init.normal_(m.weight.data, 1.0, 0.02)
        nn.init.constant_(m.bias.data, 0)
```

Generator

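The generator consists of convolutional-transpose layers, batch norm layers and ReLU activations, with a final Tanh. Its job is to upsample the `nz`-dimensional latent vector into an image of the target size. Its code is shown below: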

```python
# Generator Code
class Generator(nn.Module):
    def __init__(self, ngpu):
        super(Generator, self).__init__()
        self.ngpu = ngpu
        self.main = nn.Sequential(
            # input is Z, going into a convolution
            nn.ConvTranspose2d(nz, ngf * 8, 4, 1, 0, bias=False),
            nn.BatchNorm2d(ngf * 8),
            nn.ReLU(True),
            # state size. (ngf*8) x 4 x 4
            nn.ConvTranspose2d(ngf * 8, ngf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf * 4),
            nn.ReLU(True),
            # state size. (ngf*4) x 8 x 8
            nn.ConvTranspose2d(ngf * 4, ngf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf * 2),
            nn.ReLU(True),
            # state size. (ngf*2) x 16 x 16
            nn.ConvTranspose2d(ngf * 2, ngf, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ngf),
            nn.ReLU(True),
            # state size. (ngf) x 32 x 32
            nn.ConvTranspose2d(ngf, nc, 4, 2, 1, bias=False),
            nn.Tanh()
            # state size. (nc) x 64 x 64
        )

    def forward(self, input):
        return self.main(input)
```

Since the image size here is 64, the generator turns the $nz = 100$-dimensional latent vector (shaped $100\times1\times1$) into a $3\times64\times64$ image. The code below instantiates the generator and applies the weight initialization function `weights_init` to it.
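The `# state size` comments in the generator can be checked by hand. For `ConvTranspose2d` with `dilation=1` and `output_padding=0` (the defaults used here), PyTorch's documented output size reduces to $H_{out} = (H_{in}-1)\cdot\text{stride} - 2\cdot\text{padding} + \text{kernel\_size}$. The pure-Python sketch below applies that formula to the generator's five layers; the `(kernel, stride, padding)` list is copied from the Generator code, and the helper name is illustrative.

```python
def conv_transpose_out(size, kernel, stride, padding):
    # ConvTranspose2d output size with dilation=1 and output_padding=0:
    # (in - 1) * stride - 2 * padding + kernel
    return (size - 1) * stride - 2 * padding + kernel

# (kernel, stride, padding) of the five ConvTranspose2d layers in Generator
generator_layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

size = 1  # the latent vector enters as nz x 1 x 1
sizes = [size]
for kernel, stride, padding in generator_layers:
    size = conv_transpose_out(size, kernel, stride, padding)
    sizes.append(size)
print(sizes)  # [1, 4, 8, 16, 32, 64]
```

Each stride-2 layer with kernel 4 and padding 1 exactly doubles the spatial size, which is why changing `image_size` also means changing the number of layers.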

```python
# Create the generator
netG = Generator(ngpu).to(device)

# Handle multi-gpu if desired
if (device.type == 'cuda') and (ngpu > 1):
    netG = nn.DataParallel(netG, list(range(ngpu)))

# Apply the weights_init function to randomly initialize all weights
#  to mean=0, stdev=0.02.
netG.apply(weights_init)

# Print the model
print(netG)
```

The output is:

Out:

```
Generator(
  (main): Sequential(
    (0): ConvTranspose2d(100, 512, kernel_size=(4, 4), stride=(1, 1), bias=False)
    (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (2): ReLU(inplace=True)
    (3): ConvTranspose2d(512, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (5): ReLU(inplace=True)
    (6): ConvTranspose2d(256, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (7): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (8): ReLU(inplace=True)
    (9): ConvTranspose2d(128, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (10): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (11): ReLU(inplace=True)
    (12): ConvTranspose2d(64, 3, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (13): Tanh()
  )
)
```

Discriminator

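The discriminator is essentially a binary classifier: training images carry the label 1 and generated images the label 0. It passes its input image through a series of strided convolution, batch norm and LeakyReLU layers, and a final Sigmoid activation outputs the probability that the input is real. Its code is shown below: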
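The discriminator's `# state size` comments follow from PyTorch's documented `Conv2d` output size, which with `dilation=1` is $H_{out} = \lfloor (H_{in} + 2\cdot\text{padding} - \text{kernel\_size})/\text{stride} \rfloor + 1$. The pure-Python sketch below applies it to the five convolutions of the Discriminator code (the layer list is copied from it; the helper name is illustrative).

```python
def conv_out(size, kernel, stride, padding):
    # Conv2d output size with dilation=1:
    # floor((in + 2 * padding - kernel) / stride) + 1
    return (size + 2 * padding - kernel) // stride + 1

# (kernel, stride, padding) of the five Conv2d layers in Discriminator
discriminator_layers = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]

size = 64  # input is nc x 64 x 64
sizes = [size]
for kernel, stride, padding in discriminator_layers:
    size = conv_out(size, kernel, stride, padding)
    sizes.append(size)
print(sizes)  # [64, 32, 16, 8, 4, 1]
```

So a $3\times64\times64$ image ends as a single $1\times1\times1$ score per sample, which `Sigmoid` turns into a probability and `.view(-1)` flattens into one value per image in the training loop.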

```python
class Discriminator(nn.Module):
    def __init__(self, ngpu):
        super(Discriminator, self).__init__()
        self.ngpu = ngpu
        self.main = nn.Sequential(
            # input is (nc) x 64 x 64
            nn.Conv2d(nc, ndf, 4, 2, 1, bias=False),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. (ndf) x 32 x 32
            nn.Conv2d(ndf, ndf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 2),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. (ndf*2) x 16 x 16
            nn.Conv2d(ndf * 2, ndf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 4),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. (ndf*4) x 8 x 8
            nn.Conv2d(ndf * 4, ndf * 8, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 8),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. (ndf*8) x 4 x 4
            nn.Conv2d(ndf * 8, 1, 4, 1, 0, bias=False),
            nn.Sigmoid()
        )

    def forward(self, input):
        return self.main(input)
```

The code below instantiates the discriminator and applies the `weights_init` function to it:

```python
# Create the Discriminator
netD = Discriminator(ngpu).to(device)

# Handle multi-gpu if desired
if (device.type == 'cuda') and (ngpu > 1):
    netD = nn.DataParallel(netD, list(range(ngpu)))

# Apply the weights_init function to randomly initialize all weights
#  to mean=0, stdev=0.02.
netD.apply(weights_init)

# Print the model
print(netD)
```

The output is:

Out:

```
Discriminator(
  (main): Sequential(
    (0): Conv2d(3, 64, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (1): LeakyReLU(negative_slope=0.2, inplace=True)
    (2): Conv2d(64, 128, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (3): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (4): LeakyReLU(negative_slope=0.2, inplace=True)
    (5): Conv2d(128, 256, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (6): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (7): LeakyReLU(negative_slope=0.2, inplace=True)
    (8): Conv2d(256, 512, kernel_size=(4, 4), stride=(2, 2), padding=(1, 1), bias=False)
    (9): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (10): LeakyReLU(negative_slope=0.2, inplace=True)
    (11): Conv2d(512, 1, kernel_size=(4, 4), stride=(1, 1), bias=False)
    (12): Sigmoid()
  )
)
```

Loss Functions and Optimizers

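With D and G set up, we can specify how they learn through the loss function and the optimizers. We use the binary cross-entropy loss (`nn.BCELoss`), which PyTorch defines as: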

$$\ell(x, y) = L = \{l_1, \dots, l_N\}^\top, \quad l_n = -\left[y_n \cdot \log x_n + (1 - y_n) \cdot \log(1 - x_n)\right]$$
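The formula gives one loss $l_n$ per element, and `nn.BCELoss` averages them by default (`reduction='mean'`). The plain-Python sketch below (the name `bce` is illustrative, not a PyTorch API) makes the two cases visible: with target $y=1$ only $-\log x$ survives, and with $y=0$ only $-\log(1-x)$ survives, which is how one loss function serves both the real and the fake labels. It assumes $0 < x < 1$; PyTorch additionally clamps the log terms to avoid infinite losses.

```python
import math

def bce(outputs, targets):
    # Per-element l_n = -[y_n * log(x_n) + (1 - y_n) * log(1 - x_n)],
    # averaged over the batch like nn.BCELoss's default reduction='mean'.
    losses = [-(y * math.log(x) + (1 - y) * math.log(1 - x))
              for x, y in zip(outputs, targets)]
    return sum(losses) / len(losses)

print(bce([0.9], [1]))          # -log(0.9), about 0.105: confident and correct
print(bce([0.9], [0]))          # -log(0.1), about 2.303: confident and wrong
print(bce([0.5, 0.5], [1, 0]))  # log(2), about 0.693: a coin-flip discriminator
```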

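We define the real label as 1 and the fake label as 0, and create a separate Adam optimizer for the generator and for the discriminator. We also create a fixed batch of latent vectors, `fixed_noise`, which is used to visualize the generator's progress during training. The code is as follows: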

```python
# Initialize BCELoss function
criterion = nn.BCELoss()

# Create batch of latent vectors that we will use to visualize
#  the progression of the generator
fixed_noise = torch.randn(64, nz, 1, 1, device=device)

# Establish convention for real and fake labels during training
# (floats, because BCELoss expects floating-point targets)
real_label = 1.
fake_label = 0.

# Setup Adam optimizers for both G and D
optimizerD = optim.Adam(netD.parameters(), lr=lr, betas=(beta1, 0.999))
optimizerG = optim.Adam(netG.parameters(), lr=lr, betas=(beta1, 0.999))
```

Training

Now that the GAN framework is defined, we can train it. Each training iteration has two parts: updating the discriminator and updating the generator.

Training the Discriminator

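The goal of training the discriminator is to maximize the probability of correctly classifying a given input as real or fake; concretely, we want to maximize $\log D(x) + \log(1 - D(G(z)))$. This step is done in two parts. First, we take a batch of real samples from the training set, forward it through $D$, compute the loss (the $\log D(x)$ term), and compute the gradients in a backward pass. Second, we generate a batch of fake samples with the current generator, forward it through $D$, compute the loss (the $\log(1 - D(G(z)))$ term), and accumulate the gradients in another backward pass. With the gradients accumulated from both the all-real and the all-fake batch, we take a single step of the discriminator's optimizer.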
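Because `BCELoss` is minimized, the loop maximizes this objective indirectly: with label 1 on the real batch and label 0 on the fake batch, `errD_real + errD_fake` equals $-[\log D(x) + \log(1 - D(G(z)))]$. The sketch below checks that identity numerically for one real and one fake sample; the discriminator outputs are arbitrary values chosen only for illustration.

```python
import math

def bce_single(x, y):
    # Binary cross-entropy for one prediction x in (0, 1) and target y in {0, 1}.
    return -(y * math.log(x) + (1 - y) * math.log(1 - x))

d_real = 0.8  # hypothetical D(x) on a real image
d_fake = 0.3  # hypothetical D(G(z)) on a generated image

err_d_real = bce_single(d_real, 1.0)  # target = real_label
err_d_fake = bce_single(d_fake, 0.0)  # target = fake_label
err_d = err_d_real + err_d_fake

objective = math.log(d_real) + math.log(1 - d_fake)
print(err_d, -objective)  # equal: minimizing errD maximizes the objective
```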

Training the Generator

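The generator is trained to produce better fakes. Rather than minimizing $\log(1 - D(G(z)))$, which gives weak gradients early in training when $D$ easily rejects the fakes, the generator maximizes $\log D(G(z))$. In code this means: classify the fake batch from the discriminator step with the just-updated discriminator, compute G's loss with BCE using the real label 1 as the target, compute G's gradients in a backward pass, and finally update G's parameters with an optimizer step.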
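Why the label trick helps can be seen from the gradient with respect to the discriminator's pre-sigmoid logit $a$, where $D(G(z)) = \sigma(a)$. For the original loss $\log(1-\sigma(a))$ the gradient is $-\sigma(a)$, which vanishes when $D$ confidently rejects a fake; for $-\log\sigma(a)$, which is what BCE with label 1 computes, it is $-(1-\sigma(a))$, which stays large. The pure-Python sketch below checks both with finite differences for a few illustrative values of $D(G(z))$; the helper names are assumptions for this example only.

```python
import math

def sigmoid(a):
    return 1 / (1 + math.exp(-a))

def logit(p):
    # Inverse of the sigmoid: the pre-activation a with sigmoid(a) = p.
    return math.log(p / (1 - p))

def saturating(a):
    # Original generator loss to minimize: log(1 - D(G(z)))
    return math.log(1 - sigmoid(a))

def non_saturating(a):
    # BCE with target 1: -log D(G(z))
    return -math.log(sigmoid(a))

def numeric_grad(f, a, h=1e-6):
    # Central finite difference, used to check the closed-form gradients.
    return (f(a + h) - f(a - h)) / (2 * h)

for d in [0.01, 0.1, 0.5]:
    a = logit(d)
    print(f"D(G(z))={d}: saturating {numeric_grad(saturating, a):+.4f}, "
          f"non-saturating {numeric_grad(non_saturating, a):+.4f}")
```

Early in training, when $D(G(z))$ is close to 0, the non-saturating gradient is close to $-1$ while the original one is close to 0.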

The full training loop is as follows:

```python
# Lists to keep track of progress
img_list = []
G_losses = []
D_losses = []
iters = 0

print("Starting Training Loop...")
# For each epoch
for epoch in range(num_epochs):
    # For each batch in the dataloader
    for i, data in enumerate(dataloader, 0):

        ############################
        # (1) Update D network: maximize log(D(x)) + log(1 - D(G(z)))
        ###########################
        ## Train with all-real batch
        netD.zero_grad()
        # Format batch
        real_cpu = data[0].to(device)
        b_size = real_cpu.size(0)
        # BCELoss needs float targets, so build the label tensor as float
        label = torch.full((b_size,), real_label, dtype=torch.float, device=device)
        # Forward pass real batch through D
        output = netD(real_cpu).view(-1)
        # Calculate loss on all-real batch
        errD_real = criterion(output, label)
        # Calculate gradients for D in backward pass
        errD_real.backward()
        D_x = output.mean().item()

        ## Train with all-fake batch
        # Generate batch of latent vectors
        noise = torch.randn(b_size, nz, 1, 1, device=device)
        # Generate fake image batch with G
        fake = netG(noise)
        label.fill_(fake_label)
        # Classify all fake batch with D
        output = netD(fake.detach()).view(-1)
        # Calculate D's loss on the all-fake batch
        errD_fake = criterion(output, label)
        # Calculate the gradients for this batch (accumulated with the real-batch gradients)
        errD_fake.backward()
        D_G_z1 = output.mean().item()
        # Compute error of D as sum over the fake and the real batches
        errD = errD_real + errD_fake
        # Update D
        optimizerD.step()

        ############################
        # (2) Update G network: maximize log(D(G(z)))
        ###########################
        netG.zero_grad()
        label.fill_(real_label)  # fake labels are real for generator cost
        # Since we just updated D, perform another forward pass of all-fake batch through D
        output = netD(fake).view(-1)
        # Calculate G's loss based on this output
        errG = criterion(output, label)
        # Calculate gradients for G
        errG.backward()
        D_G_z2 = output.mean().item()
        # Update G
        optimizerG.step()

        # Output training stats
        if i % 50 == 0:
            print('[%d/%d][%d/%d]\tLoss_D: %.4f\tLoss_G: %.4f\tD(x): %.4f\tD(G(z)): %.4f / %.4f'
                  % (epoch, num_epochs, i, len(dataloader),
                     errD.item(), errG.item(), D_x, D_G_z1, D_G_z2))

        # Save Losses for plotting later
        G_losses.append(errG.item())
        D_losses.append(errD.item())

        # Check how the generator is doing by saving G's output on fixed_noise
        if (iters % 500 == 0) or ((epoch == num_epochs - 1) and (i == len(dataloader) - 1)):
            with torch.no_grad():
                fake = netG(fixed_noise).detach().cpu()
            img_list.append(vutils.make_grid(fake, padding=2, normalize=True))

        iters += 1
```

Results

Loss Comparison

The code below plots the generator and discriminator losses over the training iterations:

```python
plt.figure(figsize=(10, 5))
plt.title("Generator and Discriminator Loss During Training")
plt.plot(G_losses, label="G")
plt.plot(D_losses, label="D")
plt.xlabel("iterations")
plt.ylabel("Loss")
plt.legend()
plt.show()
```

Real vs. Fake Images

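Finally, we compare a batch of real images side by side with the fake images the generator produced from `fixed_noise` at the end of training.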

The code is as follows:

```python
# Grab a batch of real images from the dataloader
real_batch = next(iter(dataloader))

# Plot the real images
plt.figure(figsize=(15, 15))
plt.subplot(1, 2, 1)
plt.axis("off")
plt.title("Real Images")
plt.imshow(np.transpose(vutils.make_grid(real_batch[0].to(device)[:64], padding=5, normalize=True).cpu(), (1, 2, 0)))

# Plot the fake images from the last epoch
plt.subplot(1, 2, 2)
plt.axis("off")
plt.title("Fake Images")
plt.imshow(np.transpose(img_list[-1], (1, 2, 0)))
plt.show()
```

References

DCGAN Tutorial

Generative Adversarial Nets

There are surely points I have misunderstood or got wrong; please point them out. Thanks!