学习笔记|Pytorch使用教程07(transforms数据预处理方法(一))

学习笔记|Pytorch使用教程07

本学习笔记主要摘自“深度之眼”,做一个总结,方便查阅。

使用Pytorch版本为1.2。

- 数据增强

- transforms——裁剪

- transforms——翻转和旋转

- 注意:torchvision.transforms.ToTensor

一.数据增强

数据增强又称数据增广,数据扩增,它是对训练集进行变换,使训练集更丰富从而让模型更具泛化能力。

二.transforms——裁剪

1.transforms.CenterCrop

功能:从图像中心裁剪图片

# -*- coding: utf-8 -*-

import os

import numpy as np

import torch

import random

from torch.utils.data import DataLoader

import torchvision.transforms as transforms

from tools.my_dataset import RMBDataset

from PIL import Image

from matplotlib import pyplot as plt

def set_seed(seed=1):

random.seed(seed)

np.random.seed(seed)

torch.manual_seed(seed)

torch.cuda.manual_seed(seed)

set_seed(1) # 设置随机种子

# 参数设置

MAX_EPOCH = 10

BATCH_SIZE = 1

LR = 0.01

log_interval = 10

val_interval = 1

rmb_label = {"1": 0, "100": 1}

def transform_invert(img_, transform_train):

"""

将data 进行反transfrom操作

:param img_: tensor

:param transform_train: torchvision.transforms

:return: PIL image

"""

if 'Normalize' in str(transform_train):

norm_transform = list(filter(lambda x: isinstance(x, transforms.Normalize), transform_train.transforms))

mean = torch.tensor(norm_transform[0].mean, dtype=img_.dtype, device=img_.device)

std = torch.tensor(norm_transform[0].std, dtype=img_.dtype, device=img_.device)

img_.mul_(std[:, None, None]).add_(mean[:, None, None])

img_ = img_.transpose(0, 2).transpose(0, 1) # C*H*W --> H*W*C

img_ = np.array(img_) * 255

if img_.shape[2] == 3:

img_ = Image.fromarray(img_.astype('uint8')).convert('RGB')

elif img_.shape[2] == 1:

img_ = Image.fromarray(img_.astype('uint8').squeeze())

else:

raise Exception("Invalid img shape, expected 1 or 3 in axis 2, but got {}!".format(img_.shape[2]) )

return img_

# ============================ step 1/5 数据 ============================

split_dir = os.path.join("..", "..", "data", "rmb_split")

train_dir = os.path.join(split_dir, "train")

valid_dir = os.path.join(split_dir, "valid")

norm_mean = [0.485, 0.456, 0.406]

norm_std = [0.229, 0.224, 0.225]

train_transform = transforms.Compose([

transforms.Resize((224, 224)),

# 1 CenterCrop

# transforms.CenterCrop(512), # 512

# 2 RandomCrop

# transforms.RandomCrop(224, padding=16),

# transforms.RandomCrop(224, padding=(16, 64)),

# transforms.RandomCrop(224, padding=16, fill=(255, 0, 0)),

# transforms.RandomCrop(512, pad_if_needed=True), # pad_if_needed=True

# transforms.RandomCrop(224, padding=64, padding_mode='edge'),

# transforms.RandomCrop(224, padding=64, padding_mode='reflect'),

# transforms.RandomCrop(1024, padding=1024, padding_mode='symmetric'),

# 3 RandomResizedCrop

# transforms.RandomResizedCrop(size=224, scale=(0.5, 0.5)),

# 4 FiveCrop

# transforms.FiveCrop(112),

# transforms.Lambda(lambda crops: torch.stack([(transforms.ToTensor()(crop)) for crop in crops])),

# 5 TenCrop

# transforms.TenCrop(112, vertical_flip=False),

# transforms.Lambda(lambda crops: torch.stack([(transforms.ToTensor()(crop)) for crop in crops])),

# 1 Horizontal Flip

# transforms.RandomHorizontalFlip(p=1),

# 2 Vertical Flip

# transforms.RandomVerticalFlip(p=0.5),

# 3 RandomRotation

# transforms.RandomRotation(90),

# transforms.RandomRotation((90), expand=True),

# transforms.RandomRotation(30, center=(0, 0)),

# transforms.RandomRotation(30, center=(0, 0), expand=True), # expand only for center rotation

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

valid_transform = transforms.Compose([

transforms.Resize((224, 224)),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std)

])

# 构建MyDataset实例

train_data = RMBDataset(data_dir=train_dir, transform=train_transform)

valid_data = RMBDataset(data_dir=valid_dir, transform=valid_transform)

# 构建DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)

# ============================ step 5/5 训练 ============================

for epoch in range(MAX_EPOCH):

for i, data in enumerate(train_loader):

inputs, labels = data # B C H W

img_tensor = inputs[0, ...] # C H W

img = transform_invert(img_tensor, train_transform)

plt.imshow(img)

plt.show()

plt.pause(0.5)

plt.close()

# bs, ncrops, c, h, w = inputs.shape

# for n in range(ncrops):

# img_tensor = inputs[0, n, ...] # C H W

# img = transform_invert(img_tensor, train_transform)

# plt.imshow(img)

# plt.show()

# plt.pause(1)

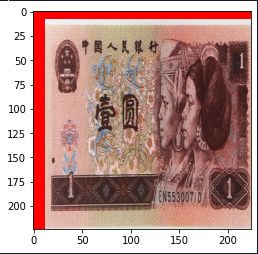

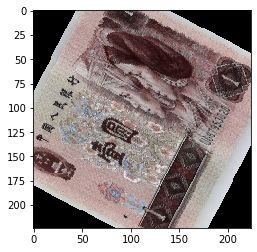

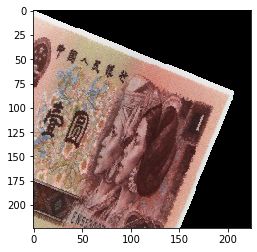

当注释掉 **#transforms.CenterCrop(196)**时,输出:

不注释,输出:

当设置成 transforms.CenterCrop(512) 时,输出:

2.transforms.RandomCrop

功能:从图片中随机裁剪出尺寸为size的图片

- size:所需裁剪图片尺寸

- padding:设置填充大小

当为a时上下左右均填充a个像素。

当为(a, b)时,上下填充b个像素,左右填充a个像素。

当为(a, b, c, d)时,左、上、右、下分别填充a,b,c,d - pad_if_need:若图像小于设定size,则填充

- padding_mpde:填充模式,有4种模式。

1、constant:像素值由fill设定。

2、edge:像素值由图像边缘像素决定。

3、reflect:镜像填充,最后一个像素不镜像,eg:[1, 2, 3, 4] -->[ 3, 2, 1, 2, 3, 4, 3, 2](2个像素填充)

4、symmetric:镜像填充,最后一个像素镜像。eg:[1, 2, 3, 4] -->[ 2, 1, 1, 2, 3, 4, 4, 3](2个像素填充) - fill: constant时,设置填充的像数值。

在上述代码基础下做测试:

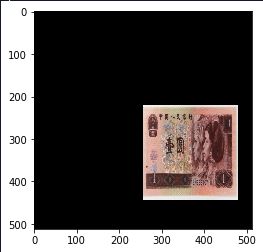

当设置成 transforms.RandomCrop(224, padding=16) 时:

为什么只有左上角是黑色的?

因为pandding操作,使得图像尺寸扩大32(16 + 16)个像素点,大于原来的像素点(224),然后随机选择一个224*224大小的图像,如图所示,是选择图像的左上角。

当设置成 transforms.RandomCrop(224, padding=(16, 64)) 时:

左右填充区域小,上下填充区域大。

当设置成 transforms.RandomCrop(224, padding=16, fill=(255, 0, 0)) 时:

填充区域的RGB值为(255, 0, 0)

当设置 transforms.RandomCrop(512, pad_if_needed=True) 时:

当size(512) 大于图像的尺寸(224)时,要设置 pad_if_needed=True,否则会报错。

当设置 transforms.RandomCrop(224, padding=64, padding_mode=‘edge’) 时:

扩充的像素点,是边缘像素点值。

当设置 **transforms.RandomCrop(224, padding=64, padding_mode=‘reflect’)**时:

进行镜像填充。

当设置 transforms.RandomCrop(1024, padding=1024, padding_mode=‘symmetric’) 时:

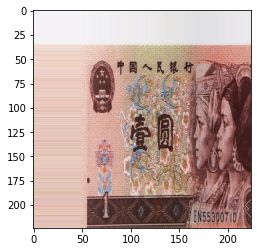

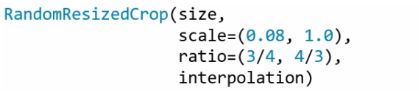

3.RandomResizedCrop

功能:随机大小、长宽比裁剪图片

- size:所需裁剪图片尺寸

- scale:随机裁剪面积比例,默认(0.08,1)

- ratio:随机长宽比,默认(3/4,4/3)

- interpolation:插值方法

PIL.Image.NEAREST

PIL.Image.BILINEAR

PIL.Image.BICUBIC

设置 transforms.RandomResizedCrop(size=224, scale=(0.5, 0.5)) 时:

4.FiveCrop

5.TenCrop

功能:在图像的上下左右及中心裁剪出尺寸为size的图片,TenCrop对这5张图片进行水平或垂直镜像获得10张图片。

- size:所需裁剪图片尺寸

- vertical_flip:是否垂直翻转

设置 transforms.FiveCrop(112)

会报错:TypeError: pic should be PIL Image or ndarray. Got

设置:

transforms.Lambda(lambda crops: torch.stack([(transforms.ToTensor()(crop)) for crop in crops]))

#transforms.ToTensor(),

#transforms.Normalize(norm_mean, norm_std),

且:

bs, ncrops, c, h, w = inputs.shape

for n in range(ncrops):

img_tensor = inputs[0, n, ...] # C H W

img = transform_invert(img_tensor, train_transform)

plt.imshow(img)

plt.show()

plt.pause(1)

FiveCrop对图像进行左上角、右上角、左下角、右下角以及中心进行裁剪。

设置:

transforms.TenCrop(112, vertical_flip=False),

transforms.Lambda(lambda crops: torch.stack([(transforms.ToTensor()(crop)) for crop in crops])),

TenCrop是在FiveCrop的基础上进行水平翻转(vertical_flip=False),vertical_flip=True为垂直翻转。

三.transforms——翻转和旋转

1.RandomHorizontalFlip

2.RandomVerticalFlip

功能:依概率水平(左右)或垂直(上下)翻转图片

- p:翻转概率

设置 transforms.RandomHorizontalFlip(p=1) 时:

水平翻转

设置 transforms.RandomVerticalFlip(p=0.5) 时

垂直翻转概率p=0.5

- degrees:选择角度

当为a时,在(-a,a)之间选择角度

当为b时,在(a,b)之间选择旋转角度 - resample:重采样方法

- expand:是否扩大图片,以保持原图信息

- center:旋转点设置,默认中心旋转

当设置 transforms.RandomRotation(90) 时:

随机旋转。

当设置transforms.RandomRotation((90), expand=True) 时:

图像区域扩大,不丢失图像信息。

设置 transforms.RandomRotation(30, center=(0, 0)) :

在左上角进行旋转,会丢失信息。

当设置 transforms.RandomRotation(30, center=(0, 0), expand=True), # expand only for center rotation:

expand only for center rotation。中心旋转和左上角旋转需要扩大的长度是不一样的,计算方法也不一样,expand方法是不能找回左上角丢失的信息。

四.注意:torchvision.transforms.ToTensor

注意torchvision.transforms.ToTensor

是可以把PIL读取的图片(PILImage)或者numpy的ndarray转化成Tensor

直接进入(step into):t_out = transforms.ToTensor()(img1) 可以看到消息内容:

def to_tensor(pic):

"""Convert a ``PIL Image`` or ``numpy.ndarray`` to tensor.

See ``ToTensor`` for more details.

Args:

pic (PIL Image or numpy.ndarray): Image to be converted to tensor.

Returns:

Tensor: Converted image.

"""

if not(_is_pil_image(pic) or _is_numpy(pic)):

raise TypeError('pic should be PIL Image or ndarray. Got {}'.format(type(pic)))

if _is_numpy(pic) and not _is_numpy_image(pic):

raise ValueError('pic should be 2/3 dimensional. Got {} dimensions.'.format(pic.ndim))

if isinstance(pic, np.ndarray):

# handle numpy array

if pic.ndim == 2:

pic = pic[:, :, None]

img = torch.from_numpy(pic.transpose((2, 0, 1)))

# backward compatibility

if isinstance(img, torch.ByteTensor):

return img.float().div(255)

else:

return img

if accimage is not None and isinstance(pic, accimage.Image):

nppic = np.zeros([pic.channels, pic.height, pic.width], dtype=np.float32)

pic.copyto(nppic)

return torch.from_numpy(nppic)

# handle PIL Image

if pic.mode == 'I':

img = torch.from_numpy(np.array(pic, np.int32, copy=False))

elif pic.mode == 'I;16':

img = torch.from_numpy(np.array(pic, np.int16, copy=False))

elif pic.mode == 'F':

img = torch.from_numpy(np.array(pic, np.float32, copy=False))

elif pic.mode == '1':

img = 255 * torch.from_numpy(np.array(pic, np.uint8, copy=False))

else:

img = torch.ByteTensor(torch.ByteStorage.from_buffer(pic.tobytes()))

# PIL image mode: L, LA, P, I, F, RGB, YCbCr, RGBA, CMYK

if pic.mode == 'YCbCr':

nchannel = 3

elif pic.mode == 'I;16':

nchannel = 1

else:

nchannel = len(pic.mode)

img = img.view(pic.size[1], pic.size[0], nchannel)

# put it from HWC to CHW format

# yikes, this transpose takes 80% of the loading time/CPU

img = img.transpose(0, 1).transpose(0, 2).contiguous()

if isinstance(img, torch.ByteTensor):

return img.float().div(255)

else:

return img

详细内容可以查看 Pytorch之浅入torchvision.transforms.ToTensor与ToPILImage

为什么我要提这个呢?是因为有一次训练的时候使用了torchvision.transforms.ToTensor,对图片除以255进行归一化,但是发现模型基本上不收敛,参数也无法传递。

这个是因为torchvision.transforms.ToTensor中:

有一步已经进行除以255了,如果再除以255,实际上除的是2552,使得参数非常非常小,梯度自然也非常非常小,这也是为什么迭代很多次看不见结果的原因。