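Web scraping with the requests module

requests is an HTTP request library

requests is built on top of urllib3, which is a third-party package rather than part of Python's standard library. It is more convenient than the standard library's urllib, particularly for adding headers, sending POST requests, setting cookies, and routing requests through a proxy: each takes only a few lines with requests, whereas the equivalent urllib code is noticeably more verbose.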

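1. Basic usage of requests

Sending a simple GET request with requests and getting the response

Format: response = requests.<method>(url), where response is a variable name of your choosing (response by convention)

For example: response = requests.get(url)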

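Commonly used response attributes

response.text: the response body as a str (decoded using response.encoding)

response.content: the response body as bytes

response.status_code: the HTTP status code of the response

response.request.headers: the headers of the request that produced this response

response.headers: the response headers

response.cookies: the cookies set by the response

response.url: the final URL of the response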

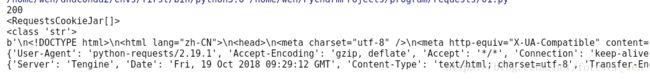

import requests  # third-party package; install it first, e.g. pip install requests

url = 'http://www.taobao.com'

response = requests.get(url)

print(response.status_code)

print(response.cookies)

print(response.text)

print(type(response.text))

print(response.content)

print(response.request.headers)

print(response.headers)

Other common request methods (POST and DELETE)

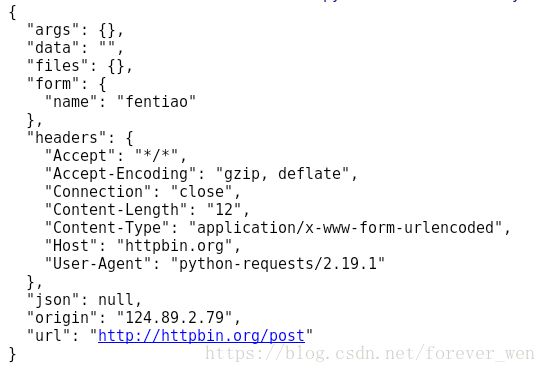

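requests can submit a form, as is typical for a website login that sends a username and password. Taking http://httpbin.org/post as an example: to send a POST request as form data, build a dictionary of the parameters and pass it to the data argument of requests.post().

import requests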

response = requests.post('http://httpbin.org/post',data={'name':'fentiao'})

print(response.text)

response = requests.delete('http://httpbin.org/delete',data={'name':'fentiao'})

print(response.text)

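httpbin.org echoes back the contents of the request you submitted. Note the "Content-Type": "application/x-www-form-urlencoded" entry in the output, which confirms that the data was submitted as a form.

This is the Content-Type typically sent when logging in to a website.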
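The form encoding can also be inspected without sending anything. The following is a minimal sketch, assuming only that requests is installed: it builds the same POST request with requests.Request and prepare(), then prints the header and body that would go on the wire.

```python
import requests

# Build the request without sending it; nothing here touches the network.
request = requests.Request(
    "POST",
    "http://httpbin.org/post",
    data={"name": "fentiao"},
)
prepared = request.prepare()

# Passing a dict to data= makes requests form-encode the body
# and set the matching Content-Type header.
print(prepared.headers["Content-Type"])
print(prepared.body)
```

Passing the dictionary through json= instead of data= would send a JSON body with a different Content-Type, which is why the argument name matters.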

GET requests with parameters

import requests

data = {

'start': 20,

'limit': 40,

'sort': 'new_score',

'status': 'P',

}

url = 'https://movie.douban.com/subject/4864908/comments'

response = requests.get(url, params=data)

print(response.url)
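To see how the params dictionary ends up in the URL without contacting the site, the request can be prepared but not sent. This is a small sketch under that assumption; it reuses the URL and parameters from the example above.

```python
import requests

params = {
    "start": 20,
    "limit": 40,
    "sort": "new_score",
    "status": "P",
}

# prepare() encodes the params into the query string but sends nothing.
prepared = requests.Request(
    "GET",
    "https://movie.douban.com/subject/4864908/comments",
    params=params,
).prepare()

print(prepared.url)
```

The keys appear in the query string in the same order as in the dictionary, and values are converted to strings and URL-encoded where needed.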

Parsing JSON responses

import requests

ip = input("Enter the IP address to look up: ")

url = "http://ip.taobao.com/service/getIpInfo.php?ip=%s" %(ip)

response = requests.get(url)

content = response.json()

print(content)

print(type(content))
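response.json() raises an error when the body is not valid JSON, for example when the server returns an HTML error page. One possible way to guard against that is sketched below; parse_json is a hypothetical helper name, not part of requests.

```python
import requests


def parse_json(response):
    """Return the decoded JSON body, or None if the body is not valid JSON."""
    # Raise requests.exceptions.HTTPError for 4xx/5xx status codes.
    response.raise_for_status()
    try:
        return response.json()
    except ValueError:
        # The decoding errors raised by response.json() are ValueError subclasses.
        return None
```

It could be used as content = parse_json(requests.get(url, timeout=10)), with a None result treated as "no usable data".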

Fetching binary data

import requests

url = 'https://gss0.bdstatic.com/-4o3dSag_xI4khGkpoWK1HF6hhy/baike/w%3D268%3Bg%3D0/sign=4f7bf38ac3fc1e17fdbf8b3772ab913e/d4628535e5dde7119c3d076aabefce1b9c1661ba.jpg'

response = requests.get(url)

print(response.text)

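with open('github.png', 'wb') as f:

    # response.text: the page content as a str

    # response.content: the page content as bytes

    f.write(response.content)

Because an image is binary data, printing response.text only produces garbled characters. Use the content attribute when writing the file.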

Downloading a video

import requests

# url = 'https://gss0.bdstatic.com/-4o3dSag_xI4khGkpoWK1HF6hhy/baike/w%3D268%3Bg%3D0/sign=4f7bf38ac3fc1e17fdbf8b3772ab913e/d4628535e5dde7119c3d076aabefce1b9c1661ba.jpg'

url = "http://gslb.miaopai.com/stream/sJvqGN6gdTP-sWKjALzuItr7mWMiva-zduKwuw__.mp4"

response = requests.get(url)

with open('/tmp/learn.mp4', 'wb') as f:

    # response.text: the page content as a str

    # response.content: the page content as bytes

    f.write(response.content)

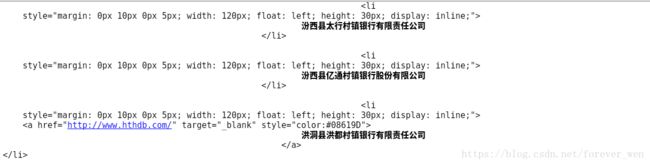
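Reading response.content loads the whole video into memory before anything is written. For large files, a streamed download is one alternative. The sketch below assumes a hypothetical helper named download_file; stream=True and iter_content() are the requests features it relies on, and the optional session argument is only there so a caller can supply their own session.

```python
import requests


def download_file(url, path, session=None, chunk_size=8192, timeout=10):
    """Stream url to path in chunks and return the number of bytes written."""
    http = requests if session is None else session
    written = 0
    # stream=True defers reading the body until iter_content() is consumed.
    with http.get(url, stream=True, timeout=timeout) as response:
        response.raise_for_status()
        with open(path, "wb") as f:
            for chunk in response.iter_content(chunk_size=chunk_size):
                f.write(chunk)
                written += len(chunk)
    return written
```

A call such as download_file(url, '/tmp/learn.mp4') would then write the file in pieces of at most chunk_size bytes.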

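Adding headers

Some sites use anti-scraping measures, so the request has to look like it comes from a browser, which means adding header information such as User-Agent.

import requests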

url = 'http://www.cbrc.gov.cn/chinese/jrjg/index.html'

user_agent = 'Mozilla/5.0 (X11; Linux x86_64; rv:45.0) Gecko/20100101 Firefox/45.0'

headers = {

'User-Agent': user_agent

}

response = requests.get(url, headers=headers)

print(response.status_code)

print(response.text)
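It helps to see what requests sends when no User-Agent is given, since that default is what such sites tend to reject. The sketch below prints the default and then sets the browser-like value once on a Session so that every request made through it carries the header; it prepares the request without sending it.

```python
import requests

# Without an explicit header, requests identifies itself as python-requests/<version>.
default_ua = requests.utils.default_user_agent()
print(default_ua)

session = requests.Session()
session.headers.update({
    "User-Agent": "Mozilla/5.0 (X11; Linux x86_64; rv:45.0) Gecko/20100101 Firefox/45.0",
})

# prepare_request() merges the session headers into the request without sending it.
prepared = session.prepare_request(
    requests.Request("GET", "http://www.cbrc.gov.cn/chinese/jrjg/index.html")
)
print(prepared.headers["User-Agent"])
```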

2. Advanced requests settings

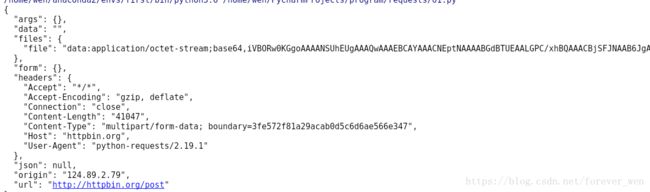

Uploading a file

import requests

# Open the file in binary mode; the with block closes it once the upload finishes

with open('github.png', 'rb') as f:

    response = requests.post('http://httpbin.org/post', files={'file': f})

print(response.text)
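The files argument makes requests send a multipart/form-data body. As a rough illustration that needs neither a file on disk nor a network connection, the sketch below uploads in-memory bytes under an assumed file name (hello.txt is a made-up example) and inspects the prepared request.

```python
import requests

# A (filename, content) tuple uploads in-memory bytes under a chosen file name.
files = {"file": ("hello.txt", b"hello requests")}

# prepare() builds the multipart body but sends nothing.
prepared = requests.Request(
    "POST",
    "http://httpbin.org/post",
    files=files,
).prepare()

print(prepared.headers["Content-Type"])
print(len(prepared.body))
```

The Content-Type includes a randomly generated boundary string, so its exact value differs from run to run.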

Getting cookie information

import requests

response = requests.get('http://www.csdn.net')

print(response.cookies)

for key, value in response.cookies.items():

    print(key + "=" + value)


Reusing existing cookies for later requests (session persistence)

import requests

# Set a cookie: name='westos'

s = requests.session()

response1 = s.get('http://httpbin.org/cookies/set/name/westos')

response2 = s.get('http://httpbin.org/cookies')

print(response2.text)

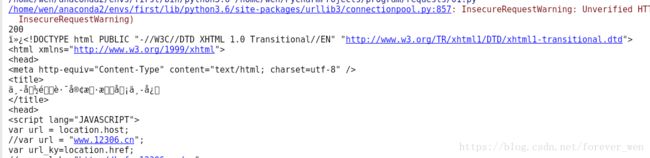
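What the session does can be sketched offline: cookies stored in the session's jar are attached to every later request made through that session. In the example below the cookie is placed in the jar by hand, which stands in for the Set-Cookie header the server would normally send; the request is prepared but not sent.

```python
import requests

session = requests.Session()

# Put a cookie in the session's jar by hand
# (a server would normally do this through a Set-Cookie header).
session.cookies.set("name", "westos")

# prepare_request() attaches the jar's cookies without sending anything.
prepared = session.prepare_request(
    requests.Request("GET", "http://httpbin.org/cookies")
)
print(prepared.headers.get("Cookie"))
```

Two separate requests.get() calls share no such jar, which is why the second call would not see the cookie set by the first.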

Skipping certificate verification

import requests

url = 'https://www.12306.cn'

response = requests.get(url, verify=False)

print(response.status_code)

print(response.text)

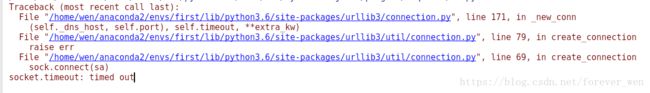

Proxy settings / setting a timeout

import requests

proxy = {

'https': '171.221.239.11:808',

'http': '218.14.115.211:3128'

}

response = requests.get('http://httpbin.org/get', proxies=proxy, timeout=10)

print(response.text)
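Free proxies like the ones above are often slow or dead, so the request may raise an exception instead of returning. One way to handle that is sketched below; fetch is a hypothetical helper, and the (3.05, 10) timeout values are arbitrary choices for illustration.

```python
import requests


def fetch(url, proxies=None, timeout=(3.05, 10), session=None):
    """Return the response text, or None if the proxy or network fails."""
    http = requests if session is None else session
    try:
        # timeout=(connect, read): seconds allowed to establish the connection
        # and to wait for the server to send data.
        response = http.get(url, proxies=proxies, timeout=timeout)
        response.raise_for_status()
    except requests.exceptions.Timeout:
        print("request timed out:", url)
    except requests.exceptions.ProxyError:
        print("proxy refused or failed:", proxies)
    except requests.exceptions.RequestException as exc:
        print("request failed:", exc)
    else:
        return response.text
    return None
```

It could be called as fetch('http://httpbin.org/get', proxies=proxy), trying another proxy whenever the result is None.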