WGAN and WGAN-GP: Wasserstein GAN and Improved Training of Wasserstein GANs


Papers:

WGAN: https://arxiv.org/pdf/1701.07875.pdf

WGAN-GP: https://arxiv.org/pdf/1704.00028.pdf

References:

https://lilianweng.github.io/lil-log/2017/08/20/from-GAN-to-WGAN.html

https://vincentherrmann.github.io/blog/wasserstein/

Recommended: https://www.depthfirstlearning.com/2019/WassersteinGAN

(Reading notes)

1. Intro

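- A target probability density is usually learned by maximum likelihood estimation, which asymptotically amounts to minimizing $\mathbf{KL}(\mathbb{P}_r\|\mathbb{P}_\theta)$; more generally, the gap between two distributions is measured with a divergence.

- Real data distributions are typically supported on low-dimensional manifolds, so the model distribution and the real distribution are unlikely to overlap: each lives on its own support with no shared region. The KL divergence is then undefined (or infinite), so an objective of this form is not ideal. It is then unlikely that…have a non-negligible intersection.

- Many works therefore add noise to the model distribution so that it covers all the examples, but the noise degrades the samples (they become blurry).

- A GAN instead passes a low-dimensional random variable through a generator that produces samples in the high-dimensional space directly, but GAN training is still known to be delicate and unstable.

- The main focus is how to measure the distance between distributions. we direct our attention on the various ways to measure how close the model distribution and the real distribution are, or equivalently.

- The paper studies the EM distance. we provide a comprehensive theoretical analysis of how the Earth Mover (EM) distance behaves in comparison to popular probability distances and divergences used in the context of learning distributions.

- The paper defines WGAN. we define a form of GAN called Wasserstein-GAN that minimizes a reasonable and efficient approximation of the EM distance, and we theoretically show that the corresponding optimization problem is sound.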

2. Distances

- Common distances (divergences) include $\mathbf{TV}$, $\mathbf{KL}$ and $\mathbf{JS}$ (see also the $f$-GAN paper). The Earth-Mover (EM) distance, also called the Wasserstein-1 distance, is defined as:

$$
\begin{aligned}
W(\mathbb{P}_{r},\mathbb{P}_{g})&=\inf_{\gamma \in \Pi(\mathbb{P}_{r},\mathbb{P}_{g})} \mathbb{E}_{(x,y) \sim \gamma}\left[\|x-y\|\right]\\
&=\inf_{\gamma \in \Pi(\mathbb{P}_{r},\mathbb{P}_{g})} \int\!\!\int \gamma(x,y)\,\|x-y\|\,\mathrm{d}x\,\mathrm{d}y
\end{aligned}
\tag{1}
$$

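Here $\Pi(\mathbb{P}_{r},\mathbb{P}_{g})$ is the set of all joint distributions whose marginals are $\mathbb{P}_{r}$ and $\mathbb{P}_{g}$, and $\gamma$ is one such joint distribution. Sample pairs $(x,y)$ from $\gamma$, measure their distance with the norm and take the mean; the infimum of this expectation over all $\gamma \in \Pi$ is the Earth-Mover (EM) distance.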

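Intuitively, $\gamma(x,y)$ says how much "earth" (probability mass) must be moved from $x$ to $y$ to turn $\mathbb{P}_{r}$ into $\mathbb{P}_{g}$. The EM distance is the cost of the cheapest such transport plan, i.e. the one that pairs up samples $(x,y)$ that are as close as possible.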
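A minimal sketch of the infimum in (1), assuming two empirical distributions with the same number $n$ of equally weighted points and Euclidean cost. In that case an optimal $\gamma$ can be taken to be a one-to-one matching (a permutation of the points), so for tiny $n$ the EM distance can be found by brute force; in one dimension it reduces to pairing the sorted samples. The function names are hypothetical, and the brute force is exponential in $n$, so it is only meant for intuition.

```python
import itertools
import math

def em_distance_bruteforce(xs, ys):
    """EM distance between two uniform empirical distributions of equal size.

    xs, ys: lists of points (tuples). Every point carries mass 1/n, so an
    optimal transport plan can be restricted to a permutation (Birkhoff-von
    Neumann). Exponential in n: tiny toy examples only.
    """
    n = len(xs)
    assert n == len(ys) and n > 0
    best = math.inf
    for perm in itertools.permutations(range(n)):
        cost = sum(math.dist(xs[i], ys[perm[i]]) for i in range(n)) / n
        best = min(best, cost)
    return best

def em_distance_1d_sorted(xs, ys):
    """Closed form in 1-D: match the i-th smallest x with the i-th smallest y."""
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)

xs = [0.0, 1.0, 3.0]
ys = [2.0, 0.5, 4.0]
print(em_distance_bruteforce([(x,) for x in xs], [(y,) for y in ys]))
print(em_distance_1d_sorted(xs, ys))
# both print 0.8333333333333334, i.e. 2.5 / 3
```

The optimal plan here moves $0 \to 0.5$, $1 \to 2$ and $3 \to 4$; every other matching costs more.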

-

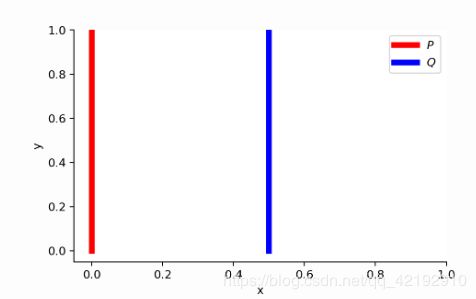

假设有均匀分布 Z ∼ U [ 0 , 1 ] Z \sim U[0,1] Z∼U[0,1],现有真实分布 P 0 ∼ ( 0 , Z ) ∈ R 2 P_0 \sim (0,Z)\in \mathbb{R}^2 P0∼(0,Z)∈R2,类似在二维坐标图中,点分布于 y y y轴 0 0 0到 1 1 1。而目标使分布 g θ ∼ ( θ , Z ) g_\theta \sim(\theta,Z) gθ∼(θ,Z)去拟合 P 0 P_0 P0。

$$
\begin{aligned}
&(x, y) \sim \mathbb{P}_0:\quad x = 0,\ y \sim U(0, 1)\\
&(x, y) \sim \mathbb{P}_\theta:\quad x = \theta\ (0 \leq \theta \leq 1 \text{ fixed}),\ y \sim U(0, 1)
\end{aligned}
\tag{2}
$$

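All four quantities below are $0$ when $\theta = 0$, but only $W$ changes continuously with $\theta$. For every $\theta \neq 0$, $\mathbf{JS}$, $\mathbf{KL}$ and $\mathbf{TV}$ are constant (or infinite), so they give no useful gradient with respect to $\theta$ and gradient descent cannot move $\theta$ toward $0$; $W = |\theta|$ still does: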

$$
\begin{aligned}
W(\mathbb{P}_{0},\mathbb{P}_{\theta})&=|\theta|\\
\mathbf{JS}(\mathbb{P}_{0},\mathbb{P}_{\theta})&=\begin{cases}\log 2 & \text{if } \theta \neq 0\\ 0 & \text{if } \theta = 0\end{cases}\\
\mathbf{KL}(\mathbb{P}_{0}\|\mathbb{P}_{\theta})=\mathbf{KL}(\mathbb{P}_{\theta}\|\mathbb{P}_{0})&=\begin{cases}+\infty & \text{if } \theta \neq 0\\ 0 & \text{if } \theta = 0\end{cases}\\
\mathbf{TV}(\mathbb{P}_{0},\mathbb{P}_{\theta})&=\begin{cases}1 & \text{if } \theta \neq 0\\ 0 & \text{if } \theta = 0\end{cases}\\[4pt]
\text{where, for } \theta \neq 0:\quad \mathbf{KL}(\mathbb{P}_{0}\|\mathbb{P}_{\theta})&=\int_{x=0,\ y \sim U(0,1)} 1\cdot\log\frac{1}{0}=+\infty\\
\mathbf{JS}(\mathbb{P}_{0},\mathbb{P}_{\theta})&=\frac{1}{2}\left(\int_{x=0,\ y \sim U(0,1)} 1\cdot\log\frac{1}{1/2}+\int_{x=\theta,\ y \sim U(0,1)} 1\cdot\log\frac{1}{1/2}\right)=\log 2\\
\mathbf{TV}(\mathbb{P}_{0},\mathbb{P}_{\theta})&=\sup_{A}\lvert\mathbb{P}_{0}(A)-\mathbb{P}_{\theta}(A)\rvert=\lvert 1-0\rvert=1\quad\left(A=\{x=0\}\right)
\end{aligned}
\tag{3}
$$

In the $\mathbf{JS}$ line, $1/2$ is the density of the mixture $\frac{1}{2}(\mathbb{P}_0+\mathbb{P}_\theta)$ on each line.
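The values in (3) can be checked numerically on a discretized version of the example. This sketch assumes $n = 5$ equally spaced points of mass $1/n$ on each line (a hypothetical discretization; what matters is that the supports stay disjoint for $\theta \neq 0$) and reuses a brute-force matching for $W$. The helpers `parallel_lines`, `kl`, `js`, `tv` and `wasserstein` are illustrative names.

```python
import itertools
import math

def parallel_lines(theta, n=5):
    """Discretized example: n points of mass 1/n on the line x = 0 and on x = theta."""
    ys = [k / (n - 1) for k in range(n)]
    p0 = {(0.0, y): 1.0 / n for y in ys}
    pt = {(theta, y): 1.0 / n for y in ys}
    return p0, pt

def kl(p, q):
    total = 0.0
    for x, px in p.items():
        qx = q.get(x, 0.0)
        if qx == 0.0:
            return math.inf  # p puts mass where q has none
        total += px * math.log(px / qx)
    return total

def js(p, q):
    support = set(p) | set(q)
    m = {x: 0.5 * (p.get(x, 0.0) + q.get(x, 0.0)) for x in support}  # mixture
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def tv(p, q):
    support = set(p) | set(q)
    return 0.5 * sum(abs(p.get(x, 0.0) - q.get(x, 0.0)) for x in support)

def wasserstein(p, q):
    """Equal masses 1/n on both sides, so an optimal plan is a permutation."""
    xs, ys = list(p), list(q)
    n = len(xs)
    return min(sum(math.dist(xs[i], ys[s[i]]) for i in range(n)) / n
               for s in itertools.permutations(range(n)))

for theta in [1.0, 0.5, 0.1, 0.0]:
    p0, pt = parallel_lines(theta)
    print(theta, wasserstein(p0, pt), js(p0, pt), kl(p0, pt), tv(p0, pt))
```

For each $\theta \neq 0$ the printed $\mathbf{JS}$, $\mathbf{KL}$ and $\mathbf{TV}$ values stay at (numerically) $\log 2$, $\infty$ and $1$, while $W$ shrinks with $\theta$; all four are $0$ at $\theta = 0$.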

- Why is the Wasserstein distance "weak"? (notes to be updated)

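The paper also explains in what sense the Wasserstein distance is "weak": it induces a weaker topology than $\mathbf{JS}$. Every sequence of distributions that converges under $\mathbf{KL}$, $\mathbf{JS}$ or $\mathbf{TV}$ also converges under $W$, but not conversely; in the parallel-lines example, $\mathbb{P}_\theta \to \mathbb{P}_0$ as $\theta \to 0$ only under $W$. A weaker distance makes it easier for a sequence of model distributions to converge, which is why the authors use it. The argument relies on some functional-analysis notions. Let $\mathcal{X}$ be a compact set, e.g. a compact subset of $\mathbb{R}^d$ (in the example above, of $\mathbb{R}^2$), written $\mathcal{X} \subseteq \mathbb{R}^d$. $C_b(\mathcal{X})$ is the space of continuous bounded functions from $\mathcal{X}$ to $\mathbb{R}$ (every element of $C_b(\mathcal{X})$ is a function, and $C_b(\mathcal{X})$ is the set of them):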

$$
C_b(\mathcal{X}) = \{\, f:\mathcal{X} \rightarrow \mathbb{R} \mid f \text{ is continuous and bounded} \,\} \tag{4}
$$

For $f \in C_b(\mathcal{X})$, the infinity norm is the largest absolute value that $f$ takes on $\mathcal{X}$ (the maximum exists because $f$ is continuous and $\mathcal{X}$ is compact):

$$
\|f\|_{\infty} = \max_{x \in \mathcal{X}} |f(x)| \tag{5}
$$
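As a small illustration of (5), the sketch below estimates $\|f\|_\infty$ for a continuous bounded function on $\mathcal{X} = [0,1]^2$ by taking the maximum of $|f|$ over a grid. The choice of $\mathcal{X}$, the test function $f(x,y) = \sin(3x)\,y$ and the grid size are assumptions for illustration; a grid maximum is only a lower bound on the true maximum.

```python
import math

def sup_norm_on_grid(f, n=501):
    """Approximate ||f||_inf = max over X of |f(x, y)| for X = [0, 1]^2.

    Only n * n grid points are checked, so the result is a lower bound on the
    true maximum that tightens as n grows (f continuous, X compact).
    """
    pts = [i / (n - 1) for i in range(n)]
    return max(abs(f(x, y)) for x in pts for y in pts)

def f(x, y):
    # continuous and bounded on [0, 1]^2, so f is an element of C_b(X)
    return math.sin(3 * x) * y

est = sup_norm_on_grid(f)
print(est)  # slightly below the exact value 1, attained at x = pi/6, y = 1
```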

Equipping $C_b(\mathcal{X})$ with this norm gives the normed vector space $(C_b(\mathcal{X}), \|\cdot\|_\infty)$. Like any norm on a vector space $E$ (here $E = C_b(\mathcal{X})$), $\|\cdot\|_\infty$ induces a metric, and hence the natural topology:

$$
d: E \times E \longrightarrow \mathbb{R},\qquad (x,y) \mapsto \|x-y\| \tag{6}
$$

3. WGAN

- The infimum in (1) is intractable, so the paper uses the Kantorovich-Rubinstein duality to rewrite the EM distance as follows (the proof is still to be studied). Here $K$ refers to $K$-Lipschitz continuity, $\lvert f(x_1) - f(x_2) \rvert \leq K \lVert x_1 - x_2 \rVert$, which keeps $f$ smooth: its slope can never exceed $K$:

$$
W(\mathbb{P}_{r},\mathbb{P}_{\theta})=\frac{1}{K}\sup_{\|f\|_L \leq K}\mathbb{E}_{x \sim \mathbb{P}_{r}}[f(x)]-\mathbb{E}_{x \sim \mathbb{P}_{\theta}}[f(x)] \tag{7}
$$
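A sketch of (7) on the parallel-lines example, with $\mathbb{P}_r = \mathbb{P}_0$. For any $K$-Lipschitz $f$ and any coupling $\gamma$, $\mathbb{E}_{\mathbb{P}_0}[f] - \mathbb{E}_{\mathbb{P}_\theta}[f] = \mathbb{E}_\gamma[f(x) - f(y)] \le K\,\mathbb{E}_\gamma\|x - y\|$, so the dual objective divided by $K$ never exceeds $W$. The critic $f(x,y) = -K\,\mathrm{sign}(\theta)\,x$ is $K$-Lipschitz and reaches $K|\theta|$, so it attains the supremum and recovers $W = |\theta|$; a critic that only looks at $y$ gives a value near 0. The values of $\theta$, $K$, the sample size and the helper names are arbitrary choices.

```python
import math
import random

def dual_objective(f, real, fake):
    """Sample estimate of E_{x~P_r}[f(x)] - E_{x~P_theta}[f(x)]."""
    return sum(map(f, real)) / len(real) - sum(map(f, fake)) / len(fake)

def lipschitz_lower_bound(f, pts):
    """max |f(a) - f(b)| / ||a - b|| over consecutive pairs: a lower bound on Lip(f)."""
    best = 0.0
    for a, b in zip(pts, pts[1:]):
        d = math.dist(a, b)
        if d > 0:
            best = max(best, abs(f(a) - f(b)) / d)
    return best

rng = random.Random(0)
theta, K, n = 0.7, 3.0, 1000
real = [(0.0, rng.random()) for _ in range(n)]    # samples of P_0: (0, Z)
fake = [(theta, rng.random()) for _ in range(n)]  # samples of P_theta: (theta, Z)

def f_opt(p):
    """K-Lipschitz critic that only looks at the x-coordinate."""
    return -K * math.copysign(1.0, theta) * p[0]

def f_y(p):
    """Also K-Lipschitz, but blind to the direction in which P_0 and P_theta differ."""
    return K * p[1]

w_opt = dual_objective(f_opt, real, fake) / K  # |theta| up to rounding: the supremum in (7)
w_y = dual_objective(f_y, real, fake) / K      # near 0: only a lower bound on W
print(w_opt, w_y, lipschitz_lower_bound(f_opt, real + fake))
```

The point of the $1/K$ factor is visible here: scaling a critic by $K$ scales the dual objective by $K$, so dividing by $K$ gives back the same $W$.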

So, given a parameterized family of $K$-Lipschitz functions $\{f_w\}_{w \in \mathcal{W}}$ (the critic, which takes the place of the discriminator), the critic has to learn a good $f$ by solving

$$
L(\mathbb{P}_{r},\mathbb{P}_{\theta})=\max_{w \in \mathcal{W}}\mathbb{E}_{x \sim \mathbb{P}_{r}}[f_w(x)]-\mathbb{E}_{z \sim p(z)}[f_w(g_\theta(z))]\;\approx\;K \cdot W(\mathbb{P}_{r},\mathbb{P}_{\theta}) \tag{8}
$$

with equality when the supremum in (7) is attained inside the family; the generator $g_\theta$ is then trained to minimize this estimate.

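As the algorithm shows, to keep the family $\{f_w\}$ $K$-Lipschitz the paper clamps the weights to a fixed box $[-c, c]$ (e.g. $c = 0.01$) after every gradient update. With bounded weights the critic cannot change too sharply, so it is $K$-Lipschitz for some $K$ that depends only on $c$ and the architecture (not necessarily $K = 1$); since $K$ only rescales the estimate in (8), gradient descent still works.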

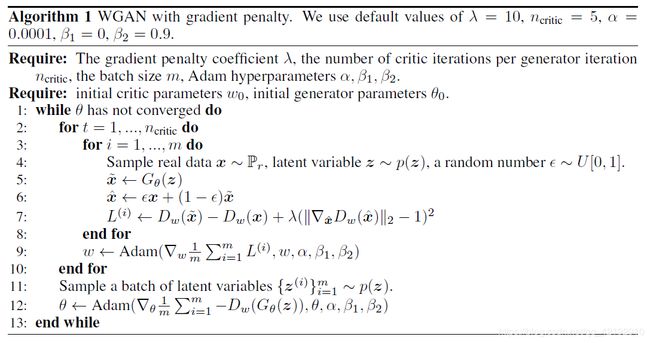
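The clipping loop can be sketched on the parallel-lines toy problem. This is a hypothetical minimal version, not the paper's implementation: the critic is linear, $f_w(x,y) = w_1 x + w_2 y$, the generator is $g_\theta(z) = (\theta, z)$, gradients are written out by hand, and plain SGD replaces RMSProp. $c = 0.01$, $n_{critic} = 5$ and the batch size 64 follow the paper's defaults; the learning rates are toy values chosen so the example converges in a few dozen steps.

```python
import random

def train_wgan_clipping(theta=1.0, c=0.01, n_critic=5, lr_critic=1.0,
                        lr_gen=5.0, n_iters=100, batch=64, seed=0):
    """Toy WGAN with weight clipping on the parallel-lines example (hypothetical setup).

    Real data:  (0, y),                y ~ U[0, 1]
    Generator:  g_theta(z) = (theta, z), z ~ U[0, 1]
    Critic:     f_w(x, y) = w[0] * x + w[1] * y, every weight clipped to [-c, c]
    """
    rng = random.Random(seed)
    w = [0.0, 0.0]
    for _ in range(n_iters):
        for _ in range(n_critic):
            real_y = [rng.random() for _ in range(batch)]  # y-coordinates of real samples
            fake_y = [rng.random() for _ in range(batch)]  # z values fed to the generator
            # Gradient of mean f_w(real) - mean f_w(fake) w.r.t. (w[0], w[1]);
            # the x-coordinates are 0 for real samples and theta for fake ones.
            grad = [0.0 - theta, (sum(real_y) - sum(fake_y)) / batch]
            # Gradient ascent step for the critic, then clip every weight to [-c, c].
            w = [min(max(wi + lr_critic * gi, -c), c) for wi, gi in zip(w, grad)]
        # Generator loss: -mean f_w(g_theta(z)) = -(w[0] * theta + w[1] * mean(z)),
        # so its derivative w.r.t. theta is -w[0] (z does not depend on theta).
        theta -= lr_gen * (-w[0])
    return theta, w

theta, w = train_wgan_clipping()
print(theta, w)  # theta ends near 0, so P_theta has moved onto P_0
```

Because every weight is clipped to $[-c, c]$, the critic's slope, and therefore each generator step, is bounded: here $\theta$ moves toward 0 in steps of $5 \cdot 0.01 = 0.05$ and then oscillates close to 0. This bounded slope is how clipping enforces the Lipschitz constraint, and it is also why the choice of $c$ directly affects how fast (or whether) training makes progress.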

4. WGAN-GP

Intro

- The WGAN-GP paper points out that weight clipping causes problems: WGAN may still produce only poor samples or fail to converge. but sometimes can still generate only poor samples or fail to converge.

- It proposes an alternative to weight clipping, the gradient penalty. We propose an alternative to clipping weights: penalize the norm of gradient of the critic with respect to its input.

Details

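- A differentiable function $f$ is 1-Lipschitz if and only if its gradient norm is at most 1 everywhere, and the optimal critic has gradient norm 1 almost everywhere on the straight lines between coupled points of $\mathbb{P}_{r}$ and $\mathbb{P}_{\theta}$. The gradient penalty therefore penalizes the deviation of the gradient norm from 1, and the critic loss becomes: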

$$
L=\mathbb{E}_{\tilde{x} \sim \mathbb{P}_{\theta}}\left[D(\tilde{x})\right]-\mathbb{E}_{x \sim \mathbb{P}_{r}}\left[D(x)\right]+\lambda\,\mathbb{E}_{\hat{x} \sim \mathbb{P}_{\hat{x}}}\left[\left(\|\nabla_{\hat{x}}D(\hat{x})\|_2-1\right)^2\right] \tag{9}
$$

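The first two terms are the original WGAN critic loss (the objective of (8) with its sign flipped, since the critic now minimizes $L$); the last term is the gradient penalty, weighted by the hyperparameter $\lambda$.

- In principle the constraint should hold at every point, but enforcing it everywhere is intractable. Instead, the penalty is applied only at points $\hat{x}$ sampled uniformly along straight lines between pairs of real and generated samples: $\hat{x} = \epsilon x + (1-\epsilon)\tilde{x}$ with $x \sim \mathbb{P}_{r}$, $\tilde{x} \sim \mathbb{P}_{\theta}$ and $\epsilon \sim U[0,1]$; this defines $\mathbb{P}_{\hat{x}}$.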

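- The critic uses no batch normalization: batch norm makes each output depend on the whole batch, whereas the penalty constrains the gradient with respect to each input independently (layer normalization is suggested instead). $\lambda$ is set to 10.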

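- Strictly, only gradient norms above 1 need to be penalized, and norms below 1 could be left alone (one-sided penalty, $\left(\max\{0,\ \|\nabla_{\hat{x}}D(\hat{x})\|_2-1\}\right)^2$). The paper instead pushes the norm toward 1 (two-sided penalty); this does not seem to constrain the critic much, likely because the optimal critic has gradient norm 1 almost everywhere anyway. We encourage the norm of the gradient to go towards 1 (two-sided penalty) instead of just staying below 1 (one-sided penalty).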
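A sketch of the critic loss (9) and of the one-sided versus two-sided penalty. Real implementations obtain $\nabla_{\hat{x}} D(\hat{x})$ with automatic differentiation (e.g. `torch.autograd.grad` with `create_graph=True` in PyTorch) so that the penalty itself can be backpropagated; to stay dependency-free, this version assumes a plain Python callable `D` and uses central finite differences instead. One $\epsilon \sim U[0,1]$ is drawn per (real, fake) pair. The linear test critics, the parallel-lines data and all function names are illustrative.

```python
import math
import random

def input_grad(D, x, h=1e-5):
    """Central finite-difference gradient of the critic D with respect to its input x."""
    g = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        g.append((D(xp) - D(xm)) / (2 * h))
    return g

def gradient_penalty(D, real, fake, one_sided=False, rng=None):
    """Mean penalty at x_hat = eps * x + (1 - eps) * x_tilde, eps ~ U[0, 1].

    two-sided: (||grad D(x_hat)||_2 - 1)^2        (the term in Eq. (9))
    one-sided: max(0, ||grad D(x_hat)||_2 - 1)^2
    """
    rng = rng or random.Random(0)
    total = 0.0
    for x_r, x_f in zip(real, fake):
        eps = rng.random()  # one interpolation coefficient per (real, fake) pair
        x_hat = [eps * a + (1 - eps) * b for a, b in zip(x_r, x_f)]
        norm = math.sqrt(sum(v * v for v in input_grad(D, x_hat)))
        gap = max(0.0, norm - 1.0) if one_sided else norm - 1.0
        total += gap ** 2
    return total / len(real)

def wgan_gp_critic_loss(D, real, fake, lam=10.0, rng=None):
    """Eq. (9): E[D(x_tilde)] - E[D(x)] + lam * two-sided gradient penalty."""
    wgan_term = sum(map(D, fake)) / len(fake) - sum(map(D, real)) / len(real)
    return wgan_term + lam * gradient_penalty(D, real, fake, rng=rng)

# Parallel-lines data: real samples on x = 0, generated samples on x = 0.5.
data_rng = random.Random(0)
real = [(0.0, data_rng.random()) for _ in range(256)]
fake = [(0.5, data_rng.random()) for _ in range(256)]

for s in (0.5, 1.0, 2.0):
    def D(p, s=s):
        # linear critic whose input gradient has norm exactly s
        return s * (0.6 * p[0] + 0.8 * p[1])
    print(s, gradient_penalty(D, real, fake),
          gradient_penalty(D, real, fake, one_sided=True))
```

For a linear critic with $\|\nabla D\| = s$, the two-sided penalty is $(s-1)^2$ and the one-sided penalty is $\max(0, s-1)^2$, so only the two-sided version also pushes a too-flat critic ($s < 1$) back toward slope 1.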