Multi-Label Classification in Caffe: Supporting Multi-Label LMDB Data Input

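Caffe's built-in image-to-LMDB tool only supports a single label per image. For multi-label tasks you can use the HDF5 format instead, or modify the Caffe source code. My earlier article "Multi-Label Classification in Caffe" showed how to modify ImageDataLayer for multi-label tasks; this article shows how to modify DataLayer so that a classification task can read multi-label data stored in LMDB format.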

1. Modify the code

Modify the following files:

- `$CAFFE_ROOT/src/caffe/proto/caffe.proto`
- `$CAFFE_ROOT/src/caffe/layers/data_layer.cpp`
- `$CAFFE_ROOT/src/caffe/util/io.cpp`
- `$CAFFE_ROOT/include/caffe/util/io.hpp`
- `$CAFFE_ROOT/tools/convert_imageset.cpp`

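(1) Modify caffe.proto

Inside `message Datum { }`, add a field to hold the labels (tag 8 is the first number not used by the existing Datum fields, which end at `encoded = 7`):

```protobuf
repeated float labels = 8;
```

If your labels are integers only, you can use `repeated int32 labels = 8;` instead.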

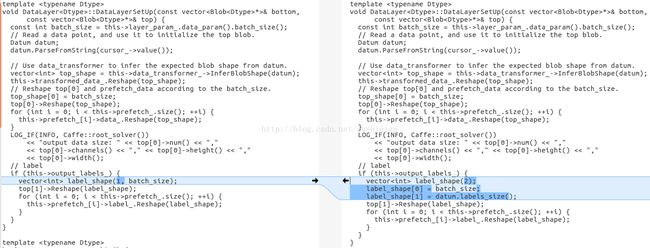
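Because `labels` is a repeated field, each Datum can in principle carry any number of labels, while the label blob that DataLayer produces is rectangular: step (2) below sizes it as batch_size × `labels_size()` and writes label `i` of item `item_id` at flat offset `item_id * labelSize + i`. The following Python sketch is not Caffe code; it only mirrors that row-major layout, and the function name `pack_labels` is hypothetical. It also checks that every item has the same number of labels, which the C++ loop assumes but does not verify.

```python
def pack_labels(batch_labels):
    """Flatten per-item label lists into one row-major buffer.

    Mirrors top_label[item_id * labelSize + i] = datum.labels(i) from the
    modified DataLayer::load_batch(); returns (flat_buffer, shape).
    """
    if not batch_labels:
        raise ValueError("empty batch")
    # Like label_shape[1] = datum.labels_size(), taken from the first item.
    label_size = len(batch_labels[0])
    flat = [0.0] * (len(batch_labels) * label_size)
    for item_id, labels in enumerate(batch_labels):
        if len(labels) != label_size:
            # The C++ loop would silently write outside this item's row here.
            raise ValueError("item %d has %d labels, expected %d"
                             % (item_id, len(labels), label_size))
        for i, value in enumerate(labels):
            flat[item_id * label_size + i] = float(value)
    return flat, (len(batch_labels), label_size)


if __name__ == "__main__":
    flat, shape = pack_labels([[4, 8, 2, 1], [0, 3, 3, 7]])
    print(shape)  # (2, 4)
    print(flat)
```

In practice this means every image in the LMDB should be written with the same number of labels (four for the captcha example).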

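(2) Modify data_layer.cpp

In `DataLayerSetUp()`, build the label shape from the number of labels in the Datum (this replaces the single-label `vector<int> label_shape(1, batch_size);`; the code that reshapes `top[1]` and the prefetch label blobs with `label_shape` stays as it is). The new code:

```cpp
// The label blob becomes batch_size x (number of labels per datum).
vector<int> label_shape(2);
label_shape[0] = batch_size;
label_shape[1] = datum.labels_size();
```

In `load_batch()`, copy all labels instead of `top_label[item_id] = datum.label();`. The new code:

```cpp
// Write the labels of this datum into row item_id of the label blob.
int labelSize = datum.labels_size();
for (int i = 0; i < labelSize; i++) {
  top_label[item_id * labelSize + i] = datum.labels(i);
}
```

(3) Modify io.hpp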

New code:

```cpp
bool ReadFileToDatum(const string& filename, const vector<float> label,
    Datum* datum);

inline bool ReadFileToDatum(const string& filename, Datum* datum) {
  return ReadFileToDatum(filename, vector<float>(), datum);
}

bool ReadImageToDatum(const string& filename, const vector<float> label,
    const int height, const int width, const bool is_color,
    const std::string & encoding, Datum* datum);

inline bool ReadImageToDatum(const string& filename, const vector<float> label,
    const int height, const int width, const bool is_color, Datum* datum) {
  return ReadImageToDatum(filename, label, height, width, is_color,
                          "", datum);
}

inline bool ReadImageToDatum(const string& filename, const vector<float> label,
    const int height, const int width, Datum* datum) {
  return ReadImageToDatum(filename, label, height, width, true, datum);
}

inline bool ReadImageToDatum(const string& filename, const vector<float> label,
    const bool is_color, Datum* datum) {
  return ReadImageToDatum(filename, label, 0, 0, is_color, datum);
}

inline bool ReadImageToDatum(const string& filename, const vector<float> label,
    Datum* datum) {
  return ReadImageToDatum(filename, label, 0, 0, true, datum);
}

inline bool ReadImageToDatum(const string& filename, const vector<float> label,
    const std::string & encoding, Datum* datum) {
  return ReadImageToDatum(filename, label, 0, 0, true, encoding, datum);
}
```

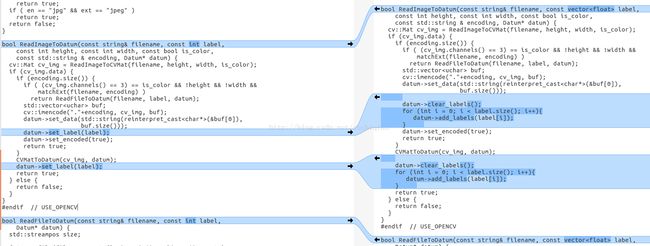

The code before and after the change; the modified version is on the right.

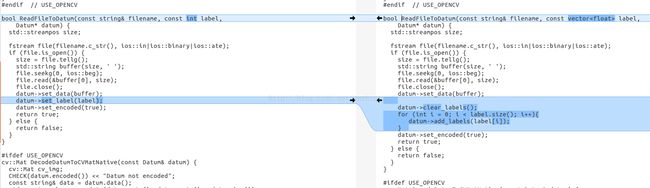

(4) Modify io.cpp

Modify the function `ReadImageToDatum()`. The modified code:

```cpp
bool ReadImageToDatum(const string& filename, const vector<float> label,
    const int height, const int width, const bool is_color,
    const std::string & encoding, Datum* datum) {
  cv::Mat cv_img = ReadImageToCVMat(filename, height, width, is_color);
  if (cv_img.data) {
    if (encoding.size()) {
      // File already has the requested encoding and needs no resize or
      // color conversion: store its raw bytes (labels are set there too).
      if ( (cv_img.channels() == 3) == is_color && !height && !width &&
          matchExt(filename, encoding) )
        return ReadFileToDatum(filename, label, datum);
      std::vector<uchar> buf;
      cv::imencode("."+encoding, cv_img, buf);
      datum->set_data(std::string(reinterpret_cast<char*>(&buf[0]),
                      buf.size()));
      datum->clear_labels();
      for (size_t i = 0; i < label.size(); i++) {
        datum->add_labels(label[i]);
      }
      datum->set_encoded(true);
      return true;
    }
    CVMatToDatum(cv_img, datum);
    datum->clear_labels();
    for (size_t i = 0; i < label.size(); i++) {
      datum->add_labels(label[i]);
    }
    return true;
  } else {
    return false;
  }
}
```
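The branch at the top of `ReadImageToDatum()` decides whether the original file bytes can be stored unchanged (via `ReadFileToDatum()`) or whether the decoded image has to be re-encoded; in both paths the labels are now copied into the Datum. Below is a Python sketch of that decision. It assumes Caffe's `matchExt()` helper in io.cpp compares the text after the last `.` with the encoding case-insensitively and accepts `jpeg` files for the `jpg` encoding; `match_ext` and `can_copy_raw_file` are hypothetical names used only for illustration.

```python
def match_ext(filename, encoding):
    """Sketch of io.cpp's matchExt(): does the file extension match the encoding?"""
    p = filename.rfind(".")
    # With no dot, matchExt compares the whole name.
    ext = filename[p + 1:] if p != -1 else filename
    ext, enc = ext.lower(), encoding.lower()
    return ext == enc or (enc == "jpg" and ext == "jpeg")


def can_copy_raw_file(filename, encoding, decoded_channels, is_color,
                      height=0, width=0):
    """True when ReadImageToDatum() would hand off to ReadFileToDatum()."""
    return (bool(encoding)
            and (decoded_channels == 3) == is_color  # no color conversion
            and not height and not width             # no resize requested
            and match_ext(filename, encoding))


if __name__ == "__main__":
    print(can_copy_raw_file("0001.png", "png", 3, True))          # True
    print(can_copy_raw_file("0001.png", "png", 3, True, 60, 160))  # False
```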

Modify the function `ReadFileToDatum()`. The modified code:

```cpp
bool ReadFileToDatum(const string& filename, const vector<float> label,
    Datum* datum) {
  std::streampos size;

  fstream file(filename.c_str(), ios::in|ios::binary|ios::ate);
  if (file.is_open()) {
    size = file.tellg();
    std::string buffer(size, ' ');
    file.seekg(0, ios::beg);
    file.read(&buffer[0], size);
    file.close();
    datum->set_data(buffer);
    datum->clear_labels();
    for (size_t i = 0; i < label.size(); i++) {
      datum->add_labels(label[i]);
    }
    datum->set_encoded(true);
    return true;
  } else {
    return false;
  }
}
```

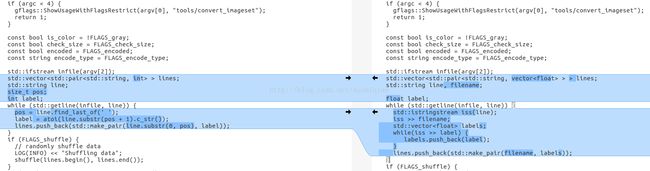

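(5) Modify convert_imageset.cpp

New code for the part that reads the list file:

```cpp
// Each line: file name followed by any number of labels.
std::vector<std::pair<std::string, std::vector<float> > > lines;
std::string line, filename;
float label;
while (std::getline(infile, line)) {
  std::istringstream iss(line);
  iss >> filename;
  std::vector<float> labels;
  while (iss >> label) {
    labels.push_back(label);
  }
  lines.push_back(std::make_pair(filename, labels));
}
```

Since `lines[line_id].second` is now a `std::vector<float>`, the existing `ReadImageToDatum(...)` call further down in this file, which passes it as the label argument, should resolve to the new overload without further changes. Note that `iss >> filename` stops at the first whitespace, so file names must not contain spaces, and `std::istringstream` requires `<sstream>` to be included.

2. Compile the code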

```shell
mark@ubuntu:~/caffe/build$ make all
mark@ubuntu:~/caffe/build$ sudo make install
```

3. Generate the LMDB files

After the build succeeds, use the newly built `convert_imageset` to convert the training images into an LMDB database:

```shell
mark@ubuntu:~/caffe$ sudo ./build/tools/convert_imageset -shuffle=true /home/mark/data/ /home/mark/data/train.txt ./examples/captcha/captcha_train_lmdb
```

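- `/home/mark/data/` is the root directory containing the training images.
- `/home/mark/data/train.txt` lists one training image per line: its file name followed by its labels, separated by whitespace (its contents are shown in the figure below). Appending a file name to `/home/mark/data/` gives the absolute path of that image.
- `./examples/captcha/captcha_train_lmdb` is the directory where the LMDB database is written.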

The test images can be converted to LMDB in the same way:

```shell
mark@ubuntu:~/caffe$ sudo ./build/tools/convert_imageset -shuffle=true /home/mark/data/ /home/mark/data/test.txt ./examples/captcha/captcha_test_lmdb
```
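The modified `convert_imageset` reads each line of `train.txt` / `test.txt` as a file name followed by whitespace-separated labels, and builds the image path by plain string concatenation with the root directory (which is why the root argument ends with `/`). The Python sketch below writes one such line and parses it back the same way. It assumes, purely for illustration, that the text of each captcha (4 digits) is already known; the helpers `format_list_line` and `parse_list_line` and the file name `0001.png` are hypothetical.

```python
def format_list_line(filename, text):
    """Build one list-file line: file name, then one numeric label per digit."""
    if any(c.isspace() for c in filename):
        raise ValueError("convert_imageset splits on whitespace; no spaces in names")
    return filename + " " + " ".join(str(int(ch)) for ch in text)


def parse_list_line(line):
    """Parse a line like the modified convert_imageset: the first token is
    the file name, every following token is a float label."""
    tokens = line.split()
    return tokens[0], [float(t) for t in tokens[1:]]


root_folder = "/home/mark/data/"
line = format_list_line("0001.png", "4821")   # "0001.png 4 8 2 1"
filename, labels = parse_list_line(line)
full_path = root_folder + filename             # plain concatenation
print(full_path, labels)
```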

4. Network structure and solver
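In the network file below, the Data layer outputs `label` as a batch_size × 4 blob, and the Slice layer with `axis: 1` and slice points 1, 2, 3 cuts it column-wise into `label_1` to `label_4`, each batch_size × 1, so that each of the four 10-way heads (`ip3_1` to `ip3_4`) gets its own SoftmaxWithLoss and Accuracy. A plain-Python sketch of that split; `slice_columns` is a hypothetical name, and it models Slice only for a 2-D blob along axis 1:

```python
def slice_columns(rows, slice_points):
    """Split a 2-D blob (list of rows) along axis 1 at the given slice points,
    as a Slice layer with axis: 1 does. Returns one 2-D blob per piece."""
    width = len(rows[0])
    bounds = [0] + list(slice_points) + [width]
    if any(a >= b for a, b in zip(bounds, bounds[1:])):
        raise ValueError("slice points must be strictly increasing and inside the row")
    return [[row[a:b] for row in rows] for a, b in zip(bounds, bounds[1:])]


label = [[4, 8, 2, 1],   # batch of 2 captchas, 4 labels each
         [0, 3, 3, 7]]
label_1, label_2, label_3, label_4 = slice_columns(label, [1, 2, 3])
print(label_1)  # [[4], [0]]
```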

Network definition file captcha_train_test_lmdb.prototxt:

```
name: "captcha"
layer {
  name: "Input"
  type: "Data"
  top: "data"
  top: "label"
  include { phase: TRAIN }
  # 0.00390625 = 1/256, maps pixel bytes into [0, 1)
  transform_param { scale: 0.00390625 }
  data_param {
    source: "examples/captcha/captcha_train_lmdb"
    batch_size: 50
    backend: LMDB
  }
}
layer {
  name: "Input"
  type: "Data"
  top: "data"
  top: "label"
  include { phase: TEST }
  transform_param { scale: 0.00390625 }
  data_param {
    source: "examples/captcha/captcha_test_lmdb"
    batch_size: 20
    backend: LMDB
  }
}
# Split the batch_size x 4 label blob into four batch_size x 1 blobs
layer {
  name: "slice"
  type: "Slice"
  bottom: "label"
  top: "label_1"
  top: "label_2"
  top: "label_3"
  top: "label_4"
  slice_param {
    axis: 1
    slice_point: 1
    slice_point: 2
    slice_point: 3
  }
}
layer {
  name: "conv1"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  convolution_param {
    num_output: 20
    kernel_size: 5
    stride: 1
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "pool1"
  type: "Pooling"
  bottom: "conv1"
  top: "pool1"
  pooling_param {
    pool: MAX
    kernel_size: 2
    stride: 2
  }
}
layer {
  name: "conv2"
  type: "Convolution"
  bottom: "pool1"
  top: "conv2"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  convolution_param {
    num_output: 50
    kernel_size: 5
    stride: 1
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "pool2"
  type: "Pooling"
  bottom: "conv2"
  top: "pool2"
  pooling_param {
    pool: MAX
    kernel_size: 2
    stride: 2
  }
}
layer {
  name: "ip1"
  type: "InnerProduct"
  bottom: "pool2"
  top: "ip1"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  inner_product_param {
    num_output: 500
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "relu1"
  type: "ReLU"
  bottom: "ip1"
  top: "ip1"
}
layer {
  name: "ip2"
  type: "InnerProduct"
  bottom: "ip1"
  top: "ip2"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  inner_product_param {
    num_output: 100
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
# One 10-way classifier head per character position
layer {
  name: "ip3_1"
  type: "InnerProduct"
  bottom: "ip2"
  top: "ip3_1"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  inner_product_param {
    num_output: 10
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "ip3_2"
  type: "InnerProduct"
  bottom: "ip2"
  top: "ip3_2"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  inner_product_param {
    num_output: 10
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "ip3_3"
  type: "InnerProduct"
  bottom: "ip2"
  top: "ip3_3"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  inner_product_param {
    num_output: 10
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "ip3_4"
  type: "InnerProduct"
  bottom: "ip2"
  top: "ip3_4"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  inner_product_param {
    num_output: 10
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "accuracy1"
  type: "Accuracy"
  bottom: "ip3_1"
  bottom: "label_1"
  top: "accuracy1"
  include { phase: TEST }
}
layer {
  name: "loss1"
  type: "SoftmaxWithLoss"
  bottom: "ip3_1"
  bottom: "label_1"
  top: "loss1"
}
layer {
  name: "accuracy2"
  type: "Accuracy"
  bottom: "ip3_2"
  bottom: "label_2"
  top: "accuracy2"
  include { phase: TEST }
}
layer {
  name: "loss2"
  type: "SoftmaxWithLoss"
  bottom: "ip3_2"
  bottom: "label_2"
  top: "loss2"
}
layer {
  name: "accuracy3"
  type: "Accuracy"
  bottom: "ip3_3"
  bottom: "label_3"
  top: "accuracy3"
  include { phase: TEST }
}
layer {
  name: "loss3"
  type: "SoftmaxWithLoss"
  bottom: "ip3_3"
  bottom: "label_3"
  top: "loss3"
}
layer {
  name: "accuracy4"
  type: "Accuracy"
  bottom: "ip3_4"
  bottom: "label_4"
  top: "accuracy4"
  include { phase: TEST }
}
layer {
  name: "loss4"
  type: "SoftmaxWithLoss"
  bottom: "ip3_4"
  bottom: "label_4"
  top: "loss4"
}
```

Solver file captcha_solver_lmdb.prototxt:

```
# The train/test net protocol buffer definition
net: "examples/captcha/captcha_train_test_lmdb.prototxt"
# test_iter specifies how many forward passes the test should carry out.
# With the TEST batch_size of 20, 200 passes cover 200 x 20 = 4,000 test images;
# covering a test set of 9,800 images would take test_iter: 490.
test_iter: 200
# Carry out testing every 200 training iterations.
test_interval: 200
# The base learning rate, momentum and the weight decay of the network.
base_lr: 0.001
momentum: 0.9
weight_decay: 0.0005
# The learning rate policy
lr_policy: "inv"
gamma: 0.0001
power: 0.75
# Display every 100 iterations
display: 100
# The maximum number of iterations
max_iter: 10000
# snapshot intermediate results
snapshot: 5000
snapshot_prefix: "examples/captcha/captcha"
# solver mode: CPU or GPU
solver_mode: GPU
```

5. Start training

```shell
mark@ubuntu:~/caffe$ sudo ./build/tools/caffe train --solver=examples/captcha/captcha_solver_lmdb.prototxt
```

When training finishes, it produces the model file captcha_iter_10000.caffemodel.

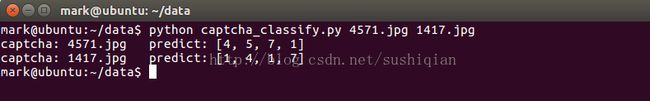
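The deploy file used for testing has to state the input size explicitly (1 × 3 × 60 × 160), and it must match what the trained `ip1` weights expect. As a sanity check, this Python sketch propagates a 60 × 160 input through the network's two convolution (kernel 5, stride 1, no padding) and max-pooling (kernel 2, stride 2) stages. It assumes Caffe's usual output-size rules, floor for convolution and ceil for pooling; the function names are hypothetical.

```python
import math


def conv_out(size, kernel, stride=1, pad=0):
    # Convolution output size (rounded down).
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size, kernel, stride, pad=0):
    # Pooling output size; Caffe's PoolingLayer rounds up.
    return int(math.ceil((size + 2 * pad - kernel) / float(stride))) + 1


def feature_shape(height, width):
    """C x H x W of pool2 for the captcha network."""
    h, w = height, width
    for channels in (20, 50):  # conv1 has 20 outputs, conv2 has 50
        h, w = conv_out(h, 5), conv_out(w, 5)
        h, w = pool_out(h, 2, 2), pool_out(w, 2, 2)
    return channels, h, w


c, h, w = feature_shape(60, 160)
print(c, h, w, c * h * w)  # 50 12 37 22200
```

So `ip1` sees 50 × 12 × 37 = 22,200 inputs; feeding images of a different size would change that number, and the trained `ip1` weights would no longer fit.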

6. Test with the generated model file

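First, you need a deploy file. Derive it from captcha_train_test_lmdb.prototxt (the Data layers are replaced by a fixed input of 1 × 3 × 60 × 160, the Slice, Accuracy and loss layers are dropped, and a Softmax layer is added on each of the four heads) and save it as captcha_deploy_lmdb.prototxt, with the following content: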

```
name: "captcha"
input: "data"
input_dim: 1    # batch size
input_dim: 3    # number of channels (color)
input_dim: 60   # height
input_dim: 160  # width
layer {
  name: "conv1"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  convolution_param {
    num_output: 20
    kernel_size: 5
    stride: 1
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "pool1"
  type: "Pooling"
  bottom: "conv1"
  top: "pool1"
  pooling_param {
    pool: MAX
    kernel_size: 2
    stride: 2
  }
}
layer {
  name: "conv2"
  type: "Convolution"
  bottom: "pool1"
  top: "conv2"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  convolution_param {
    num_output: 50
    kernel_size: 5
    stride: 1
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "pool2"
  type: "Pooling"
  bottom: "conv2"
  top: "pool2"
  pooling_param {
    pool: MAX
    kernel_size: 2
    stride: 2
  }
}
layer {
  name: "ip1"
  type: "InnerProduct"
  bottom: "pool2"
  top: "ip1"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  inner_product_param {
    num_output: 500
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "relu1"
  type: "ReLU"
  bottom: "ip1"
  top: "ip1"
}
layer {
  name: "ip2"
  type: "InnerProduct"
  bottom: "ip1"
  top: "ip2"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  inner_product_param {
    num_output: 100
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "ip3_1"
  type: "InnerProduct"
  bottom: "ip2"
  top: "ip3_1"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  inner_product_param {
    num_output: 10
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "ip3_2"
  type: "InnerProduct"
  bottom: "ip2"
  top: "ip3_2"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  inner_product_param {
    num_output: 10
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "ip3_3"
  type: "InnerProduct"
  bottom: "ip2"
  top: "ip3_3"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  inner_product_param {
    num_output: 10
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "ip3_4"
  type: "InnerProduct"
  bottom: "ip2"
  top: "ip3_4"
  param { lr_mult: 1 }
  param { lr_mult: 2 }
  inner_product_param {
    num_output: 10
    weight_filler { type: "xavier" }
    bias_filler { type: "constant" }
  }
}
layer {
  name: "prob1"
  type: "Softmax"
  bottom: "ip3_1"
  top: "prob1"
}
layer {
  name: "prob2"
  type: "Softmax"
  bottom: "ip3_2"
  top: "prob2"
}
layer {
  name: "prob3"
  type: "Softmax"
  bottom: "ip3_3"
  top: "prob3"
}
layer {
  name: "prob4"
  type: "Softmax"
  bottom: "ip3_4"
  top: "prob4"
}
```

Write the test code:

```python
from __future__ import print_function

import os
import sys

# Hide Caffe's glog messages; this must be set before caffe is imported.
os.environ['GLOG_minloglevel'] = '3'

import caffe

CAFFE_ROOT = '/home/mark/caffe'
deploy_file_name = 'captcha_deploy_lmdb.prototxt'
model_file_name = 'captcha_iter_10000.caffemodel'

IMAGE_HEIGHT = 60
IMAGE_WIDTH = 160
IMAGE_CHANNEL = 3


def load_net():
    deploy_file = CAFFE_ROOT + '/examples/captcha/' + deploy_file_name
    model_file = CAFFE_ROOT + '/examples/captcha/' + model_file_name
    # Initialize Caffe once; the same net is reused for every image
    net = caffe.Net(deploy_file, model_file, caffe.TEST)
    # Preprocessing
    transformer = caffe.io.Transformer({'data': net.blobs['data'].data.shape})
    # pycaffe loads images as H x W x C; Caffe expects C x H x W
    transformer.set_transpose('data', (2, 0, 1))
    # pycaffe loads pixels in [0, 1]. A model trained on raw 0-255 input would also need
    # transformer.set_raw_scale('data', 255); this one was trained with scale 1/256.
    # Caffe images are BGR while load_image returns RGB, so swap the channels
    transformer.set_channel_swap('data', (2, 1, 0))
    # Make the input blob match the deploy file: 1 x 3 x 60 x 160
    net.blobs['data'].reshape(1, IMAGE_CHANNEL, IMAGE_HEIGHT, IMAGE_WIDTH)
    return net, transformer


def classify(net, transformer, imageFileName):
    # color=True (default) loads a color image, False loads grayscale
    img = caffe.io.load_image(imageFileName, color=True)
    # Feed the preprocessed image into the input blob
    net.blobs['data'].data[...] = transformer.preprocess('data', img)
    # Forward pass, i.e. classification
    out = net.forward()
    # For each character position, take the index of the highest probability
    return [int(out['prob%d' % k][0].argmax()) for k in range(1, 5)]


if __name__ == '__main__':
    net, transformer = load_net()
    for captcha in sys.argv[1:]:
        predict = classify(net, transformer, captcha)
        print("captcha:", captcha, " predict:", predict)
```
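The four Accuracy layers in the training net report accuracy per character position, while a captcha only counts as solved when all four predictions are right. Given the lists that `classify()` returns and the true labels (for example read from `test.txt`), a small sketch like the following can compute both numbers and turn a prediction into a string. `evaluate` and `to_text` are hypothetical helpers, not part of pycaffe.

```python
def to_text(predict):
    """Join per-position class indices (0-9) into the captcha string."""
    return "".join(str(int(d)) for d in predict)


def evaluate(predictions, truths):
    """Return (per-position accuracy list, whole-captcha accuracy)."""
    if not predictions or len(predictions) != len(truths):
        raise ValueError("need equally many, non-empty predictions and labels")
    n_pos = len(truths[0])
    correct = [0] * n_pos
    solved = 0
    for pred, truth in zip(predictions, truths):
        if len(pred) != n_pos or len(truth) != n_pos:
            raise ValueError("every captcha must have %d labels" % n_pos)
        hits = [int(p) == int(t) for p, t in zip(pred, truth)]
        for k, hit in enumerate(hits):
            correct[k] += hit
        solved += all(hits)  # counts only fully correct captchas
    n = float(len(truths))
    return [c / n for c in correct], solved / n


per_position, whole = evaluate([[4, 8, 2, 1], [0, 3, 3, 1]],
                               [[4, 8, 2, 1], [0, 3, 3, 7]])
print(per_position, whole)  # [1.0, 1.0, 1.0, 0.5] 0.5
print(to_text([4, 8, 2, 1]))  # 4821
```

Whole-captcha accuracy is at most the lowest per-position accuracy, so it is the stricter number to watch.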