[MNN Study Notes 1] Building and installing MNN on Linux, model conversion, and benchmark testing

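Contents

I. Building and installing MNN (MNN build)

II. Building the model converter MNNConvert (MNNConvert)

III. Downloading models and converting them with MNNConvert (model download & conversion)

IV. Benchmark testing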

I. Building and installing MNN (MNN build)

1. Build options (excerpt from the top of MNN's CMakeLists.txt)

```cmake
cmake_minimum_required(VERSION 2.8)
project(MNN)

# complier options
set(CMAKE_C_STANDARD 99)
set(CMAKE_CXX_STANDARD 11)
enable_language(ASM)
# set(CMAKE_C_COMPILER gcc)
# set(CMAKE_CXX_COMPILER g++)
option(MNN_USE_CPP11 "Enable MNN use c++11" ON)
if (NOT MSVC)
    if(MNN_USE_CPP11)
        set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -std=gnu99")
        set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11")
    else()
        set(CMAKE_C_FLAGS "${CMAKE_C_FLAGS} -std=gnu99")
        set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++0x")
    endif()
endif()

# build options
option(MNN_BUILD_HARD "Build -mfloat-abi=hard or not" OFF)
option(MNN_BUILD_SHARED_LIBS "MNN build shared or static lib" ON)
option(MNN_FORBID_MULTI_THREAD "Disable Multi Thread" OFF)
option(MNN_OPENMP "Enable Multiple Thread Linux|Android" ON)
option(MNN_USE_THREAD_POOL "Use Multiple Thread by Self ThreadPool" OFF)
option(MNN_SUPPORT_TRAIN "Enable Train Ops" ON)
option(MNN_BUILD_DEMO "Build demo/exec or not" ON)
option(MNN_BUILD_QUANTOOLS "Build Quantized Tools or not" ON)
option(MNN_USE_INT8_FAST "Build int8 or not" ON)
if (MNN_USE_THREAD_POOL)
    set(MNN_OPENMP OFF)
    add_definitions(-DMNN_USE_THREAD_POOL)
endif()
if(MNN_FORBID_MULTI_THREAD)
    add_definitions(-DMNN_FORBIT_MULTI_THREADS)
endif()
if(MNN_USE_INT8_FAST)
    add_definitions(-DMNN_USE_INT8_FAST)
endif()

# debug options
option(MNN_DEBUG "Enable MNN DEBUG" OFF)
if(CMAKE_BUILD_TYPE MATCHES "Debug")
    set(MNN_DEBUG ON)
endif()
option(MNN_DEBUG_MEMORY "MNN Debug Memory Access" OFF)
option(MNN_DEBUG_TENSOR_SIZE "Enable Tensor Size" OFF )
option(MNN_GPU_TRACE "Enable MNN Gpu Debug" OFF)
if(MNN_DEBUG_MEMORY)
    add_definitions(-DMNN_DEBUG_MEMORY)
endif()
if(MNN_DEBUG_TENSOR_SIZE)
    add_definitions(-DMNN_DEBUG_TENSOR_SIZE)
endif()
if(MNN_GPU_TRACE)
    add_definitions(-DMNN_GPU_FORCE_FINISH)
endif()

# backend options
option(MNN_METAL "Enable Metal" OFF)
option(MNN_OPENCL "Enable OpenCL" ON)
option(MNN_OPENGL "Enable OpenGL" OFF)
option(MNN_VULKAN "Enable Vulkan" OFF)
option(MNN_ARM82 "Enable ARM82" ON)

# codegen register ops
if (MNN_METAL)
    add_definitions(-DMNN_CODEGEN_REGISTER)
endif()

# target options
option(MNN_BUILD_BENCHMARK "Build benchmark or not" ON)
option(MNN_BUILD_TEST "Build tests or not" ON)
option(MNN_BUILD_FOR_ANDROID_COMMAND "Build from command" OFF)
option(MNN_BUILD_FOR_IOS "Build for ios" OFF)
set (MNN_HIDDEN FALSE)
if (NOT MNN_BUILD_TEST)
    if (NOT MNN_DEBUG)
        set (MNN_HIDDEN TRUE)
    endif()
endif()
include(cmake/macros.cmake)
message(STATUS ">>>>>>>>>>>>>")
message(STATUS "MNN BUILD INFO:")
message(STATUS "\tSystem: ${CMAKE_SYSTEM_NAME}")
message(STATUS "\tProcessor: ${CMAKE_SYSTEM_PROCESSOR}")
message(STATUS "\tDEBUG: ${MNN_DEBUG}")
message(STATUS "\tMetal: ${MNN_METAL}")
message(STATUS "\tOpenCL: ${MNN_OPENCL}")
message(STATUS "\tOpenGL: ${MNN_OPENGL}")
message(STATUS "\tVulkan: ${MNN_VULKAN}")
message(STATUS "\tOpenMP: ${MNN_OPENMP}")
message(STATUS "\tHideen: ${MNN_HIDDEN}")
# ...
```

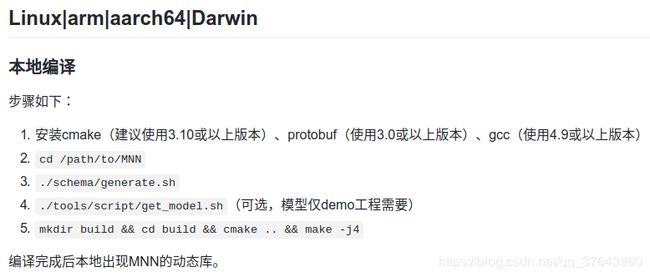
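Every `option()` above can be overridden when configuring, e.g. `cmake .. -DMNN_OPENCL=ON`, and the value CMake actually used ends up in the build directory's `CMakeCache.txt` as a `NAME:BOOL=VALUE` line. A small sketch for reading it back, handy for confirming that OpenCL really was enabled; the function name `cmake_option_value` is my own, not part of MNN or CMake:

```bash
#!/bin/sh
# cmake_option_value BUILD_DIR NAME
# Print the cached value (e.g. ON/OFF) of a boolean option such as MNN_OPENCL.
# option() entries are stored in BUILD_DIR/CMakeCache.txt as NAME:BOOL=VALUE;
# nothing is printed if the option is not in the cache.
cmake_option_value() {
  local build_dir name
  build_dir=${1:?usage: cmake_option_value BUILD_DIR NAME}
  name=${2:?usage: cmake_option_value BUILD_DIR NAME}
  # -m1 stops at the first match; cut keeps everything after the first '='.
  grep -m1 "^${name}:BOOL=" "${build_dir}/CMakeCache.txt" | cut -d= -f2-
}
```

For example, after configuring in MNN/build with `cmake .. -DMNN_OPENCL=ON`, `cmake_option_value MNN/build MNN_OPENCL` should print `ON`.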

2. Local build

In this step, skip sub-step 4 for now: run ./tools/script/get_model.sh only after MNNConvert has been built.
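The local build boils down to a handful of commands. Below is a sketch under some assumptions: the schema is generated by `./schema/generate.sh` (the script name in the MNN tree of that time; check your checkout), the build goes into `MNN/build`, and `get_model.sh` is left out as described above. `mnn_local_build`, `run` and the `DRY_RUN` switch are hypothetical helpers of mine; with `DRY_RUN=1` the commands are only printed.

```bash
#!/bin/sh
# run CMD...: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# mnn_local_build MNN_DIR [JOBS]
# Generate the schema, then configure and build MNN in MNN_DIR/build.
# tools/script/get_model.sh is deliberately skipped; run it after MNNConvert
# has been built.
mnn_local_build() {
  local src jobs
  src=${1:?usage: mnn_local_build MNN_DIR [JOBS]}
  jobs=${2:-4}
  run cd "$src" || return 1
  run ./schema/generate.sh || return 1   # assumed schema script path
  run mkdir -p build || return 1
  run cd build || return 1
  run cmake .. || return 1
  run make -j"$jobs"
}
```

Preview with `DRY_RUN=1 mnn_local_build ~/MNN`, then build for real in a subshell, `(mnn_local_build ~/MNN 4)`, so the `cd` calls do not change your current directory.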

II. Building the model converter MNNConvert (MNNConvert)

1. Install protobuf 3.0 or later (Protocol Buffers - Google's data interchange format)
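Before building protobuf from source, it is worth checking whether an installed `protoc` already meets the 3.0 requirement. `protoc --version` prints a line such as `libprotoc 3.6.1`; the sketch below parses it (the function names are hypothetical). Also note that the autogen.sh/configure route assumes a 3.x-era checkout; recent protobuf releases dropped the autotools build, so on a fresh clone you may need to check out a 3.x release tag first.

```bash
#!/bin/sh
# protoc_major VERSION_LINE
# Extract the major version from protoc output such as "libprotoc 3.6.1".
protoc_major() {
  local v
  v=${1#libprotoc }   # drop the "libprotoc " prefix
  echo "${v%%.*}"     # keep everything before the first '.'
}

# protoc_ok [VERSION_LINE]
# Succeed if protoc is version 3 or later. Without an argument, query the
# installed protoc; fail if it is not on PATH or prints something unexpected.
protoc_ok() {
  local line major
  if [ $# -gt 0 ]; then
    line=$1
  else
    line=$(protoc --version 2>/dev/null) || return 1
  fi
  major=$(protoc_major "$line")
  case $major in
    ''|*[!0-9]*) return 1 ;;   # not a plain number
  esac
  [ "$major" -ge 3 ]
}
```

Usage: `protoc_ok && echo "protoc >= 3 is already installed"`.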

```bash
sudo apt-get install autoconf automake libtool curl make g++ unzip
git clone https://github.com/protocolbuffers/protobuf.git
cd protobuf
git submodule update --init --recursive
./autogen.sh
./configure
make
make check
sudo make install
sudo ldconfig
```

2. Build MNNConvert

Either of the following two methods works:

(1) Run the following commands:

```bash
cd MNN/tools/converter
./generate_schema.sh
mkdir build
cd build && cmake .. && make -j4
```

(2) When building natively on the RK3399 board, first change `make -j16` in build_tool.sh to `make -j4`.
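The `make -j16` to `make -j4` edit can also be scripted. A sketch, assuming build_tool.sh contains a literal `make -j<number>` as described; the helper name `set_make_jobs` is my own:

```bash
#!/bin/sh
# set_make_jobs FILE JOBS
# Rewrite every "make -jN" in FILE to "make -jJOBS" (e.g. -j16 -> -j4), in place.
set_make_jobs() {
  local file jobs tmp
  file=${1:?usage: set_make_jobs FILE JOBS}
  jobs=${2:?usage: set_make_jobs FILE JOBS}
  case $jobs in
    *[!0-9]*) echo "JOBS must be a number" >&2; return 1 ;;
  esac
  tmp=$(mktemp) || return 1
  # Edit into a temp file, then copy back with cat so FILE keeps its
  # permissions (build_tool.sh stays executable).
  if sed "s/make -j[0-9][0-9]*/make -j$jobs/g" "$file" > "$tmp" && cat "$tmp" > "$file"; then
    rm -f "$tmp"
    return 0
  fi
  rm -f "$tmp"
  return 1
}
```

Run `set_make_jobs build_tool.sh 4` inside MNN/tools/converter; passing `"$(nproc)"` instead of 4 is an alternative that matches the job count to the machine's cores.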

build_tool.sh after the change:

```bash
#!/bin/bash
if [ -d "build" ]; then
    rm -rf build
fi
./generate_schema.sh
mkdir build
cd build
cmake ..
make clean
make -j4
```

3. Test MNNConvert (using the model converter)

```bash
cd MNN/tools/converter/build
./MNNConvert -h
```

4. Test MNNDump2Json (MNNDump2Json)

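MNNDump2Json dumps a binary MNN model file into a readable JSON-like text file, which makes it easy to compare against the original model's parameters.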

```bash
cd MNN/tools/converter/build
./MNNDump2Json.out ../../../benchmark/models/mobilenet_v1.caffe.mnn mobilenet_v1.caffe.json
```

III. Downloading models and converting them with MNNConvert (model download & conversion)

1. Download the models and convert them with the MNNConvert built in part II

```bash
cd MNN
./tools/script/get_model.sh
```

The downloaded models end up in MNN/resource/model/ (if I remember correctly ^_^).

2. Using MNNConvert

(1) Caffe2MNN

```bash
cd MNN/resource/build
./MNNConvert -f CAFFE --modelFile mobilenet_v1.caffe.caffemodel --prototxt mobilenet_v1.caffe.prototxt --MNNModel mobilenet_v1.caffe.mnn --bizCode MNN
```

(2) TFLite2MNN

```bash
cd MNN/resource/build
./MNNConvert -f TFLITE --modelFile mobilenet_v2_1.0_224_quant.tflite --MNNModel mobilenet_v2_1.0_224_quant.tflite.mnn --bizCode MNN
```

Copy mobilenet_v1.caffe.mnn and mobilenet_v2_1.0_224_quant.tflite.mnn into MNN/benchmark/models/ for the tests below.
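With more than a couple of models, the MNNConvert command line can be derived from the file extension. A sketch, assuming the `-f` framework names CAFFE, TFLITE, TF and ONNX (check `./MNNConvert -h` for your version), that each `.caffemodel` has a `.prototxt` with the same base name next to it, and keeping the `--bizCode MNN` and output naming used in the two commands above; `mnn_convert_cmd` is a hypothetical helper that only prints the command:

```bash
#!/bin/sh
# mnn_convert_cmd MODEL_FILE
# Print the MNNConvert command for MODEL_FILE, chosen by its extension.
# Output naming follows the commands above: x.caffemodel -> x.mnn,
# everything else -> <file>.mnn (e.g. model.tflite -> model.tflite.mnn).
mnn_convert_cmd() {
  local model fw base
  model=${1:?usage: mnn_convert_cmd MODEL_FILE}
  case $model in
    *.caffemodel)
      base=${model%.caffemodel}
      echo "./MNNConvert -f CAFFE --modelFile $model --prototxt $base.prototxt --MNNModel $base.mnn --bizCode MNN"
      return 0 ;;
    *.tflite) fw=TFLITE ;;
    *.pb)     fw=TF ;;
    *.onnx)   fw=ONNX ;;
    *) echo "unsupported model file: $model" >&2; return 1 ;;
  esac
  echo "./MNNConvert -f $fw --modelFile $model --MNNModel $model.mnn --bizCode MNN"
}
```

Review the printed line, then run it, e.g. `mnn_convert_cmd mobilenet_v1.caffe.caffemodel | sh`. The printed paths are not quoted, so this only suits file names without spaces.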

IV. Benchmark testing
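`benchmark.out` is called here with the model folder, the loop count and a numeric forward type. The numbers come from MNN's `MNNForwardType` enum; to my knowledge CPU is 0, OpenCL is 3 (consistent with the `type=3` in the OpenCL run's warnings), OpenGL is 6 and Vulkan is 7, but verify against MNNForwardType.h under `include/` in your checkout. A hypothetical wrapper that maps a backend name to that number and prints the command:

```bash
#!/bin/sh
# mnn_forward_type NAME: print the MNNForwardType number for a backend name.
# Values are assumed from MNNForwardType.h; verify for your MNN version.
mnn_forward_type() {
  case $1 in
    cpu)    echo 0 ;;
    opencl) echo 3 ;;
    opengl) echo 6 ;;
    vulkan) echo 7 ;;
    *) echo "unknown backend: $1" >&2; return 1 ;;
  esac
}

# mnn_bench_cmd BACKEND [LOOPS] [MODEL_DIR]
# Print the benchmark.out command line for the given backend name.
mnn_bench_cmd() {
  local fwd
  fwd=$(mnn_forward_type "${1:?usage: mnn_bench_cmd BACKEND [LOOPS] [MODEL_DIR]}") || return 1
  echo "./benchmark.out ${3:-../benchmark/models/} ${2:-10} $fwd"
}
```

From MNN/build, `eval "$(mnn_bench_cmd opencl)"` runs the OpenCL benchmark with 10 loops.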

Run on the CPU:

```bash
cd MNN/build
./benchmark.out ../benchmark/models/ 10 0
```

Output:

```
MNN benchmark
Forward type: **CPU** thread=4** precision=2
--------> Benchmarking... loop = 10
[ - ] MobileNetV2_224.mnn max = 92.516ms min = 88.733ms avg = 90.227ms
[ - ] mobilenet_v2_1.0_224_quant.tflite.mnn max = 87.738ms min = 84.411ms avg = 85.817ms
[ - ] mobilenet_v1_quant.caffe.mnn max = 75.278ms min = 74.261ms avg = 74.763ms
[ - ] inception-v3.mnn max = 996.025ms min = 807.543ms avg = 863.333ms
[ - ] resnet-v2-50.mnn max = 531.352ms min = 445.743ms avg = 473.887ms
[ - ] mobilenet-v1-1.0.mnn max = 103.705ms min = 93.981ms avg = 96.573ms
[ - ] SqueezeNetV1.0.mnn max = 193.273ms min = 115.615ms avg = 127.908ms
[ - ] mobilenet_v1.caffe.mnn max = 107.799ms min = 94.063ms avg = 98.914ms
```

Run again, requesting the OpenCL backend:

```bash
cd MNN/build
./benchmark.out ../benchmark/models/ 10 3
```

Output:

```
MNN benchmark
Forward type: **OpenCL** thread=4** precision=2
--------> Benchmarking... loop = 10
Can't Find type=3 backend, use 0 instead
[ - ] MobileNetV2_224.mnn max = 92.939ms min = 88.632ms avg = 89.630ms
Can't Find type=3 backend, use 0 instead
[ - ] mobilenet_v2_1.0_224_quant.tflite.mnn max = 89.197ms min = 84.408ms avg = 86.093ms
Can't Find type=3 backend, use 0 instead
[ - ] inception-v3.mnn max = 870.511ms min = 817.133ms avg = 839.259ms
Can't Find type=3 backend, use 0 instead
[ - ] resnet-v2-50.mnn max = 501.031ms min = 448.233ms avg = 462.990ms
Can't Find type=3 backend, use 0 instead
[ - ] mobilenet-v1-1.0.mnn max = 107.135ms min = 94.491ms avg = 98.316ms
Can't Find type=3 backend, use 0 instead
[ - ] SqueezeNetV1.0.mnn max = 124.856ms min = 113.669ms avg = 118.974ms
Can't Find type=3 backend, use 0 instead
[ - ] mobilenet_v1.caffe.mnn max = 107.162ms min = 94.707ms avg = 98.140ms
```

Every model logs `Can't Find type=3 backend, use 0 instead`: the OpenCL backend (type 3) could not be found, so each run fell back to the CPU (type 0), which is why the timings are essentially the same as in the CPU run. I set option(MNN_OPENCL "Enable OpenCL" ON) when compiling MNN, so why is OpenCL unavailable?!
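To spot this kind of silent fallback in a saved benchmark log, here is a small sketch (the function names and `bench.log` are hypothetical): it counts `Can't Find type=N backend` warnings and lists the models by average latency. It does not explain why OpenCL is missing; one possible first check, an assumption rather than a confirmed diagnosis, is whether the board has an OpenCL runtime library at all, e.g. `ldconfig -p | grep -i opencl`.

```bash
#!/bin/sh
# count_backend_fallbacks LOG: print how many "Can't Find type=N backend"
# warnings LOG contains (0 if none).
count_backend_fallbacks() {
  # grep -c prints 0 but exits 1 when nothing matches; keep the status at 0.
  grep -c "Can't Find type=[0-9]* backend" "${1:?usage: count_backend_fallbacks LOG}" || true
}

# avg_latencies LOG: print "avg_ms model" for each "[ - ] model ..." result
# line, fastest first.
avg_latencies() {
  awk '$1 == "[" && $3 == "]" {
         for (i = 5; i < NF; i++)
           if ($i == "avg") { v = $(i + 2); sub(/ms$/, "", v); print v, $4 }
       }' "${1:?usage: avg_latencies LOG}" | LC_ALL=C sort -n
}
```

Capture a run with `./benchmark.out ../benchmark/models/ 10 3 | tee bench.log`; a non-zero `count_backend_fallbacks bench.log` means the numbers from `avg_latencies bench.log` are really CPU timings.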

Reference: https://github.com/alibaba/MNN