Resnet原理&源码简单分析

Resnet原理&源码简单分析

- 原理

- 源码

嗨,小伙伴们,今天让我们来了解一下Resnet的原理以及Resnet18网络在Pytorch的实现。

原理

Resnet想必大家都很熟悉了,它的中文名为残差网络,是由何恺明大佬提出的一种网络结构。

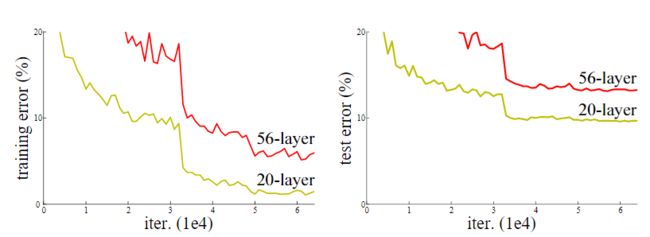

在论文的开篇,提出了一个问题,神经网络越深,性能就越好吗?答案是否定的,如越深的神经网络可能造成著名的梯度消失、爆炸问题,但这个问题已经通过Batch normalization解决。

但更深的网络呢?

会出现网络层数增加但loss不降反升的情况(如下图),而且这种下降并不是通过过拟合引起的。

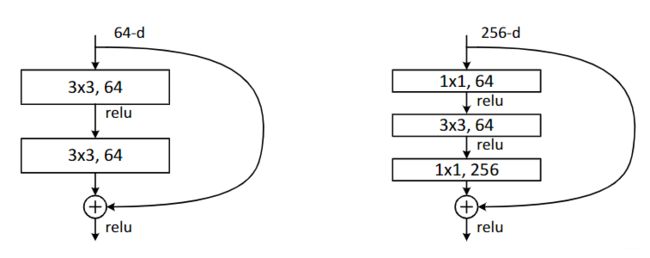

为了能够实现更深的网络同时不出现退化的现象,论文提出了一个假设:当把浅层网络特征传到深层网络时,深层网络的效果一定会比浅层网络好(至少不会差),只要保证输出的特征参数一致,那么就可以使用Identity mapping(恒等映射),来传递特征。

那么,恒等映射是一个什么样的东西呢?如下图,非常简单,其实就是把浅层网络的输出X加到两层或三层卷积层后(较深层)的输出,在这个过程中,两层神经网络的输出参数个数是一样的,这也保证了不同深度的网络参数可以进行直接相加。

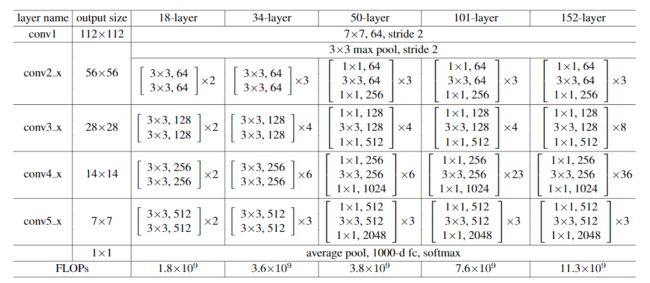

我们把上面的Block,称之为A Building Block,在论文中,提出了resnet18,resnet34,resnet50,resnet101,resnet152,resnet后面的尾数分别代表了网络的层数。在18、34的网络里,使用到的A Building Block是上图左边的形式。而在50、101、152网络里,使用的是右边的形式。

提出的五个网络结构如下所示:

这个图是怎么看的呢?我们以18层网络Resnet18来看。

Resnet18要经过一个卷积层、Pooling层,然后是四个“小方块”,一个方块由两个A Build Block组成,一个A Build Block又由两个卷积层组成,四个“小方块”即16层,最后是average pool、全连接层。由于Pooling层不需要参数学习,故去除Pooling层,整个resnet18网络由18层组成。

源码

为了更好地帮助小伙伴理解,我把resnet在pytorch的源码缩减了,目前这个代码只实现了resnet18和resnet34。

如有需要可自行查看pytorch的resnet源码。

一开始图像的输入是224*224,经过第一次卷积之后,由于stride为2,output输出变为112x112,然后经过一个max pool,size又缩小一半变为56x56。这两个都是正常的CNN操作。

![]()

不难在__init__定义以下组件,

self.conv1 = nn.Conv2d(1, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)

self.bn1 = nn.BatchNorm2d(self.inplanes)

self.relu = nn.ReLU(inplace=True)

self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

同时在forward()前向传播写上:

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

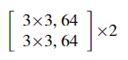

然后再看到这个小方块,它表示的就是刚刚所说的A Building Block,两个Building Block串在一起,相当于这里有四层卷积层。由于Resnet18有四个这样的方块,每一个方块有四层卷积层,每一个方块不同的地方就在于卷积通道分辨率和卷积核数量不同,一个简单的想法就是定义一个A Building Block类,然后将2个A Building Block串在一起当成“一层”,然后在前向传播中调用“四层”即可。

我们把把一个A Building Block定义为BasicBlock,由于输入输出尺度等参数可能不同,所以在调用时需要传参。比较需要注意的是恒等映射,其实在代码实现就是在前向传播之前保存输入的所有参数identity,当进行两次卷积后,就将输出+identity得到新的输出,在相加之前还得判断是否需要先进行下采样,保证输入和输出的参数是一样的。

class BasicBlock(nn.Module):

# OutChannal represents kernal size.

def __init__(self, InChannal, OutChannal, stride=1, downsample=None):

super(BasicBlock, self).__init__()

self.conv1 = nn.Conv2d(InChannal, OutChannal, kernel_size=3, stride=stride, bias=False, padding=1)

self.bn1 = nn.BatchNorm2d(OutChannal)

self.relu = nn.ReLU(inplace=True)

self.conv2 = nn.Conv2d(OutChannal, OutChannal, kernel_size=3, bias=False, padding=1)

self.bn2 = nn.BatchNorm2d(OutChannal)

self.downsample = downsample

self.stride = stride

def forward(self, x):

identity = x

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

if self.downsample is not None:

identity = self.downsample(x)

out += identity # Resnet`s essence

out = self.relu(out)

return out

到这里大家应该就逐渐清晰了,我们只需要定义两个类,一个是BasicBlock类,另一个是ResNet的类。BasicBlock就是一个基础的模块给Resnet调用。在基础模块BasicBlock写好之后,我们继续完善Resnet。现在要做的事情就是把两个BasicBlock拼接起来,形成刚刚的“小方块”。

在ResNet类里,定义了一个拼接函数,当需要下采样的时候,也即是stride步长为2时,进行下采样,然后再将剩余的Block拼接起来(根据block数量而定),使用了Sequential函数。由代码可知,在每一个小方块运行前(除了第一个),都会先进行下采样。

def _make_layer(self, Basicblock, planes, block_num, stride=1):

downsample = None

# Change channal size

if stride != 1 or self.inplanes != planes:

downsample = nn.Sequential(

nn.Conv2d(self.inplanes, planes, kernel_size=1, stride=stride, bias=False),

nn.BatchNorm2d(planes),

)

# Initial layers

layers = []

# 1.original layer, downsamples firstly.

layers.append(Basicblock(self.inplanes, planes, stride, downsample))

self.inplanes = planes

# 2.add layer

for i in range(1, block_num):

layers.append(Basicblock(self.inplanes, planes))

return nn.Sequential(*layers)

于是,resnet18中的由resnet组成的“小方块”,就由上面的_make_layer函数封装好了。

最后,你只需要在Resnet里“搭积木”就好了。比如,resnet18要经过一个卷积层、BN层、ReLU层,Pooling层,然后是四个“小方块”,最后是average pool、全连接层以及softmax。

先在__init__里定义:

self.conv1 = nn.Conv2d(1, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)

self.bn1 = nn.BatchNorm2d(self.inplanes)

self.relu = nn.ReLU(inplace=True)

self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

self.layer1 = self._make_layer(Basicblock, 64, block_num=layers[0], stride=1)

self.layer2 = self._make_layer(Basicblock, 128, block_num=layers[1], stride=2)

self.layer3 = self._make_layer(Basicblock, 256, block_num=layers[2], stride=2)

self.layer4 = self._make_layer(Basicblock, 512, block_num=layers[3], stride=2)

self.avgpool = nn.AdaptiveAvgPool2d((1, 1))

self.fc = nn.Linear(512, num_classes)

再在forward前向传播中写下:

def forward(self, x):

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

x = self.avgpool(x)

x = torch.flatten(x, 1)

x = self.fc(x)

return x

然后我们定义函数来进行调用。这里由于Resnet34也是采用了一样的BasicBlock,所以34也能用。

def _resnet(block, layers, **kwargs):

model = ResNet(block, layers, **kwargs)

return model

def resnet18():

return _resnet(BasicBlock, [2, 2, 2, 2])

def resnet34():

return _resnet(BasicBlock, [3, 4, 6, 3])

最后,只需要调用函数:

net = resnet18()

一个基本的ResNet就这样搭建好了,有兴趣的小伙伴可以自己写一个resnet50哈~

下面是完整的代码:

# A BasicBlock has two convolution layer.

class BasicBlock(nn.Module):

# OutChannal represents kernal size.

def __init__(self, InChannal, OutChannal, stride=1, downsample=None):

super(BasicBlock, self).__init__()

self.conv1 = nn.Conv2d(InChannal, OutChannal, kernel_size=3, stride=stride, bias=False, padding=1)

self.bn1 = nn.BatchNorm2d(OutChannal)

self.relu = nn.ReLU(inplace=True)

self.conv2 = nn.Conv2d(OutChannal, OutChannal, kernel_size=3, bias=False, padding=1)

self.bn2 = nn.BatchNorm2d(OutChannal)

self.downsample = downsample

self.stride = stride

def forward(self, x):

identity = x

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

if self.downsample is not None:

identity = self.downsample(x)

out += identity # Resnet`s essence

out = self.relu(out)

return out

class ResNet(nn.Module):

def __init__(self, Basicblock, layers, num_classes=Config.NUM_CLASSES):

super(ResNet, self).__init__()

self.inplanes = 64

self.dilation = 1

self.conv1 = nn.Conv2d(1, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)

self.bn1 = nn.BatchNorm2d(self.inplanes)

self.relu = nn.ReLU(inplace=True)

self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

self.layer1 = self._make_layer(Basicblock, 64, block_num=layers[0], stride=1)

self.layer2 = self._make_layer(Basicblock, 128, block_num=layers[1], stride=2)

self.layer3 = self._make_layer(Basicblock, 256, block_num=layers[2], stride=2)

self.layer4 = self._make_layer(Basicblock, 512, block_num=layers[3], stride=2)

self.avgpool = nn.AdaptiveAvgPool2d((1, 1))

self.fc = nn.Linear(512, num_classes)

# Judge layer`s type and initialize. Conv2d and BN initialization in a different way

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

elif isinstance(m, nn.BatchNorm2d):

nn.init.constant_(m.weight, 1)

nn.init.constant_(m.bias, 0)

# Zero-initialize the last BN in each residual branch, it can improves performance

for m in self.modules():

if isinstance(m, BasicBlock):

nn.init.constant_(m.bn2.weight, 0)

def _make_layer(self, Basicblock, planes, block_num, stride=1):

downsample = None

# Change channal size

if stride != 1 or self.inplanes != planes:

downsample = nn.Sequential(

nn.Conv2d(self.inplanes, planes, kernel_size=1, stride=stride, bias=False),

nn.BatchNorm2d(planes),

)

# Initial layers

layers = []

# 1.original layer, downsamples firstly.

layers.append(Basicblock(self.inplanes, planes, stride, downsample))

self.inplanes = planes

# 2.add layer

for i in range(1, block_num):

layers.append(Basicblock(self.inplanes, planes))

return nn.Sequential(*layers)

def forward(self, x):

x = self.conv1(x)

x = self.bn1(x)

x = self.relu(x)

x = self.maxpool(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

x = self.avgpool(x)

x = torch.flatten(x, 1)

x = self.fc(x)

return x

def _resnet(block, layers, **kwargs):

model = ResNet(block, layers, **kwargs)

return model

def resnet18():

return _resnet(BasicBlock, [2, 2, 2, 2])

def resnet34():

return _resnet(BasicBlock, [3, 4, 6, 3])