MySQL Master-Slave Failover

I. Introduction to MHA

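MHA (Master High Availability) is a relatively mature high-availability solution for MySQL. It was developed by Yoshinori Matsunobu of DeNA in Japan, who later joined Facebook. It handles failover and slave promotion in MySQL high-availability environments. MHA can complete a database failover automatically within 0 to 30 seconds, and during the failover it preserves data consistency as far as possible.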

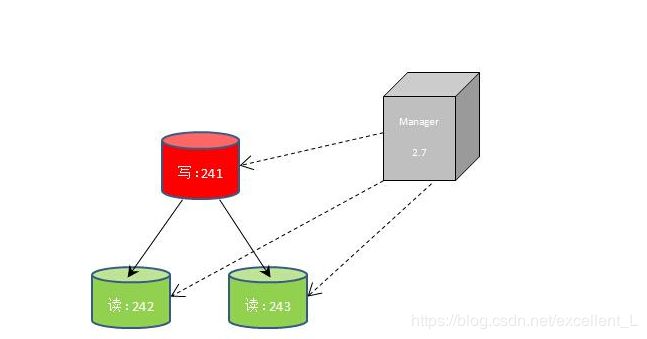

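MHA has two components: MHA Manager (the management node) and MHA Node (the data node). MHA Manager can run on a dedicated machine and manage several master-slave clusters, or it can run on one of the slaves. MHA Node runs on every MySQL server. MHA Manager periodically probes the master of each cluster. When the master fails, the manager promotes the slave with the most recent data to be the new master and then repoints all other slaves to it. The failover is transparent to applications, provided they reach the master through an address that moves with it, such as the floating VIP configured later in this post.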

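During automatic failover, MHA tries to save the binary logs from the crashed master to minimize data loss, but this is not always possible. For example, if the master has a hardware failure or cannot be reached over SSH, MHA cannot save its binary logs. In that case it still performs the failover, and the most recent data is lost. Semi-synchronous replication, available since MySQL 5.5, greatly reduces this risk, and MHA can be combined with it. As long as at least one slave has received the latest binary log events, MHA can apply those events to all the other slaves, which keeps every node consistent.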
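The lab below enables semi-synchronous replication by hand after logging in to MySQL. The following is a minimal sketch of the statements involved. It assumes MySQL 5.7 with its bundled `semisync_master.so` / `semisync_slave.so` plugins. `semisync_sql` is a hypothetical helper that only prints the SQL for a given role, so you can review it before piping it into the `mysql` client.

```shell
# semisync_sql master|slave
# Hypothetical helper: prints the SQL that enables semi-synchronous
# replication on a MySQL 5.7 master or slave. It does not run anything.
semisync_sql() {
  case "$1" in
    master)
      echo "INSTALL PLUGIN rpl_semi_sync_master SONAME 'semisync_master.so';"
      echo "SET GLOBAL rpl_semi_sync_master_enabled = 1;"
      ;;
    slave)
      echo "INSTALL PLUGIN rpl_semi_sync_slave SONAME 'semisync_slave.so';"
      echo "SET GLOBAL rpl_semi_sync_slave_enabled = 1;"
      # Restart the IO thread so the slave reconnects as a semi-sync slave.
      echo "STOP SLAVE IO_THREAD;"
      echo "START SLAVE IO_THREAD;"
      ;;
    *)
      echo "usage: semisync_sql master|slave" >&2
      return 1
      ;;
  esac
}
```

For example, run `semisync_sql master | mysql -uroot -p` on server1 and `semisync_sql slave | mysql -uroot -p` on server2 and server3. `INSTALL PLUGIN` fails if the plugin is already installed, so run it only once per server.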

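MHA architecture diagram

MHA's failover workflow can be summarized as follows:

- Save the binary log events from the crashed master.
- Identify the slave with the most recent updates.
- Apply the differential relay logs to the other slaves.
- Apply the binary log events saved from the master.
- Promote one slave to be the new master.
- Point the other slaves at the new master and resume replication.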
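The "identify the slave with the most recent updates" step can be illustrated with a simplified sketch. This is not MHA's actual code. It assumes you have collected, for each slave, the host plus `Master_Log_File` and `Read_Master_Log_Pos` from `SHOW SLAVE STATUS`, and it picks the slave that has read furthest into the master's binary log. The function name `newest_slave` and its input format are hypothetical.

```shell
# newest_slave
# Hypothetical helper. Reads lines "HOST MASTER_LOG_FILE READ_MASTER_LOG_POS"
# on stdin and prints the host that has read furthest into the master's
# binary log. Binlog names share a prefix and a zero-padded sequence number,
# so a byte-wise sort on the file name followed by a numeric sort on the
# position puts the most advanced slave last.
newest_slave() {
  LC_ALL=C sort -k2,2 -k3,3n | tail -n 1 | awk '{ print $1 }'
}
```

Sorting the position numerically matters: as strings, `1200` would sort before `900`.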

II. Installing and Configuring MHA

Lab environment: master-slave replication with one master and two slaves

server1: master

server2: slave

server3: slave

server4: MHA manager

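First, configure the databases for GTID-based replication with one master, two slaves, and semi-synchronous replication. Note: do not put the semi-sync settings in the configuration file. Enable them manually after logging in to MySQL. Master-slave replication setup is covered in an earlier post and is not repeated here.

The three servers' configuration files contain the settings below. They include no semi-sync options, and each server has a different server_id.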
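The actual file contents are only in the screenshots. As a stand-in, here is a minimal sketch of a GTID-enabled `[mysqld]` fragment for MySQL 5.7. The binlog base name `mysql-bin` and the helper name `gtid_cnf` are assumptions. The helper only prints the fragment, which you would merge into each server's `/etc/my.cnf`.

```shell
# gtid_cnf SERVER_ID
# Hypothetical helper: prints a minimal [mysqld] fragment for GTID-based
# replication. Each server needs a unique SERVER_ID. Semi-sync options are
# intentionally absent; they are enabled at runtime instead.
gtid_cnf() {
  [ -n "$1" ] || { echo "usage: gtid_cnf SERVER_ID" >&2; return 1; }
  cat <<EOF
[mysqld]
server_id=$1
gtid_mode=ON
enforce_gtid_consistency=ON
log_bin=mysql-bin
# Slaves also write a binlog so that any of them can be promoted.
log_slave_updates=ON
EOF
}
```

For example, `gtid_cnf 1` for server1, `gtid_cnf 2` for server2 and `gtid_cnf 3` for server3, followed by a MySQL restart.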

MHA configuration

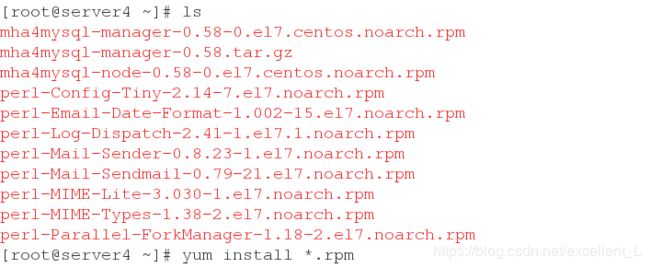

Install the MHA packages on server4.

Install the node package on each of the three database servers.


Edit the configuration file on the manager (server4).

First create its directory, /etc/masterha.
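The post shows the configuration file only as a screenshot. The sketch below is a sample `app1.cnf` built from what the later `masterha_check_repl` output confirms: the three host IPs, port 3306, `candidate_master` on server2, `no_master` on server3, and no `master_ip_failover_script` yet. Everything else is an assumption to adapt: user names, passwords, `master_binlog_dir`, the work directories, and the commented-out VIP script paths. `write_app1_cnf` is a hypothetical helper.

```shell
# write_app1_cnf DIR
# Hypothetical helper: creates DIR (e.g. /etc/masterha) and writes a sample
# MHA application config to DIR/app1.cnf. Passwords are placeholders.
write_app1_cnf() {
  [ -n "$1" ] || { echo "usage: write_app1_cnf DIR" >&2; return 1; }
  mkdir -p "$1" || return 1
  cat > "$1/app1.cnf" <<'EOF'
[server default]
manager_workdir=/etc/masterha
manager_log=/etc/masterha/mha.log
master_binlog_dir=/var/lib/mysql
user=root
password=CHANGE_ME
repl_user=repl
repl_password=CHANGE_ME
ssh_user=root
ping_interval=1
remote_workdir=/tmp
# Uncomment once the VIP scripts exist in /usr/local/bin:
#master_ip_failover_script=/usr/local/bin/master_ip_failover
#master_ip_online_change_script=/usr/local/bin/master_ip_online_change

[server1]
hostname=172.25.254.1
port=3306

[server2]
hostname=172.25.254.2
port=3306
candidate_master=1
check_repl_delay=0

[server3]
hostname=172.25.254.3
port=3306
no_master=1
EOF
}
```

On server4 this would be `write_app1_cnf /etc/masterha`. `user`/`password` must match the account granted on the master, and `repl_user`/`repl_password` must match the existing replication account.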

Set up passwordless SSH between all nodes.

On server4 (the manager node), generate a key pair and distribute it to all hosts:

[root@server4 ~]# cd .ssh/

[root@server4 .ssh]# ls

known_hosts

[root@server4 .ssh]# ssh-keygen

[root@server4 .ssh]# ls

id_rsa id_rsa.pub known_hosts

[root@server4 .ssh]# cd

[root@server4 ~]# scp -r .ssh/ server1:

root@server1's password:

known_hosts 100% 540 0.5KB/s 00:00

id_rsa 100% 1679 1.6KB/s 00:00

id_rsa.pub 100% 394 0.4KB/s 00:00

[root@server4 ~]# scp -r .ssh/ server2:

root@server2's password:

known_hosts 100% 540 0.5KB/s 00:00

id_rsa 100% 1679 1.6KB/s 00:00

id_rsa.pub 100% 394 0.4KB/s 00:00

[root@server4 ~]# scp -r .ssh/ server3:

root@server3's password:

known_hosts 100% 540 0.5KB/s 00:00

id_rsa 100% 1679 1.6KB/s 00:00

id_rsa.pub 100% 394 0.4KB/s 00:00

[root@server4 ~]# ssh-copy-id server1

[root@server4 ~]# ssh-copy-id server2

[root@server4 ~]# ssh-copy-id server3

Check:

The SSH check succeeded with no errors.

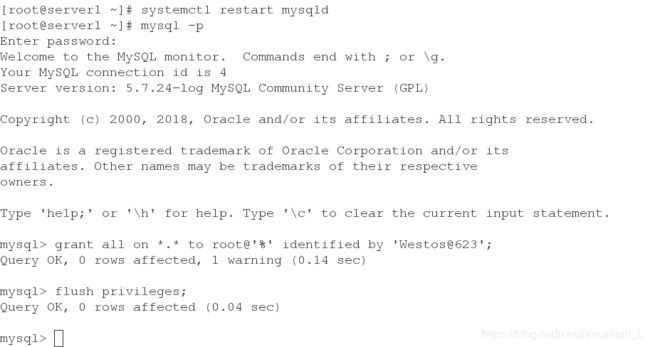

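Restart MySQL.

Log in to the master, grant privileges, and flush the privilege tables.

Log in to both slaves and set them to read-only.

On the manager, the replication check (masterha_check_repl) also passes with no errors: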
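The exact statements are not shown in the post. The sketch below prints SQL for both roles. The account name and password come from the hypothetical environment variables `MHA_USER` / `MHA_PASS` and must match `user=` / `password=` in app1.cnf. `mha_prepare_sql` is likewise a hypothetical helper. `CREATE USER IF NOT EXISTS` requires MySQL 5.7.6 or later, which covers the 5.7.24 servers used here.

```shell
# mha_prepare_sql master|slave
# Hypothetical helper: prints the SQL to run before masterha_check_repl.
#   master: create the account MHA connects with and grant it privileges
#   slave:  make the server read-only
mha_prepare_sql() {
  user=${MHA_USER:-root}
  pass=${MHA_PASS:-CHANGE_ME}
  case "$1" in
    master)
      printf "CREATE USER IF NOT EXISTS '%s'@'%%' IDENTIFIED BY '%s';\n" "$user" "$pass"
      printf "GRANT ALL PRIVILEGES ON *.* TO '%s'@'%%';\n" "$user"
      printf 'FLUSH PRIVILEGES;\n'
      ;;
    slave)
      printf 'SET GLOBAL read_only = 1;\n'
      ;;
    *)
      echo "usage: mha_prepare_sql master|slave" >&2
      return 1
      ;;
  esac
}
```

`SET GLOBAL read_only` does not survive a restart. To keep the slaves read-only after a reboot, also add `read_only=1` to their `[mysqld]` section.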


[root@server4 ~]# masterha_check_ssh --conf=/etc/masterha/app1.cnf

Wed Feb 27 11:05:24 2019 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Wed Feb 27 11:05:24 2019 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Wed Feb 27 11:05:24 2019 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Wed Feb 27 11:05:24 2019 - [info] Starting SSH connection tests..

Wed Feb 27 11:05:24 2019 - [debug]

Wed Feb 27 11:05:24 2019 - [debug] Connecting via SSH from root@172.25.254.1(172.25.254.1:22) to root@172.25.254.2(172.25.254.2:22)..

Wed Feb 27 11:05:24 2019 - [debug] ok.

Wed Feb 27 11:05:24 2019 - [debug] Connecting via SSH from root@172.25.254.1(172.25.254.1:22) to root@172.25.254.3(172.25.254.3:22)..

Warning: Permanently added '172.25.254.3' (ECDSA) to the list of known hosts.

Wed Feb 27 11:05:24 2019 - [debug] ok.

Wed Feb 27 11:05:25 2019 - [debug]

Wed Feb 27 11:05:24 2019 - [debug] Connecting via SSH from root@172.25.254.2(172.25.254.2:22) to root@172.25.254.1(172.25.254.1:22)..

Wed Feb 27 11:05:25 2019 - [debug] ok.

Wed Feb 27 11:05:25 2019 - [debug] Connecting via SSH from root@172.25.254.2(172.25.254.2:22) to root@172.25.254.3(172.25.254.3:22)..

Warning: Permanently added '172.25.254.3' (ECDSA) to the list of known hosts.

Wed Feb 27 11:05:25 2019 - [debug] ok.

Wed Feb 27 11:05:25 2019 - [debug]

Wed Feb 27 11:05:25 2019 - [debug] Connecting via SSH from root@172.25.254.3(172.25.254.3:22) to root@172.25.254.1(172.25.254.1:22)..

Wed Feb 27 11:05:25 2019 - [debug] ok.

Wed Feb 27 11:05:25 2019 - [debug] Connecting via SSH from root@172.25.254.3(172.25.254.3:22) to root@172.25.254.2(172.25.254.2:22)..

Wed Feb 27 11:05:25 2019 - [debug] ok.

Wed Feb 27 11:05:25 2019 - [info] All SSH connection tests passed successfully.

[root@server4 ~]# masterha_check_repl --conf=/etc/masterha/app1.cnf

Wed Feb 27 11:13:44 2019 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Wed Feb 27 11:13:44 2019 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Wed Feb 27 11:13:44 2019 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Wed Feb 27 11:13:44 2019 - [info] MHA::MasterMonitor version 0.58.

Wed Feb 27 11:13:45 2019 - [info] GTID failover mode = 1

Wed Feb 27 11:13:45 2019 - [info] Dead Servers:

Wed Feb 27 11:13:45 2019 - [info] Alive Servers:

Wed Feb 27 11:13:45 2019 - [info] 172.25.254.1(172.25.254.1:3306)

Wed Feb 27 11:13:45 2019 - [info] 172.25.254.2(172.25.254.2:3306)

Wed Feb 27 11:13:45 2019 - [info] 172.25.254.3(172.25.254.3:3306)

Wed Feb 27 11:13:45 2019 - [info] Alive Slaves:

Wed Feb 27 11:13:45 2019 - [info] 172.25.254.2(172.25.254.2:3306) Version=5.7.24-log (oldest major version between slaves) log-bin:enabled

Wed Feb 27 11:13:45 2019 - [info] GTID ON

Wed Feb 27 11:13:45 2019 - [info] Replicating from 172.25.254.1(172.25.254.1:3306)

Wed Feb 27 11:13:45 2019 - [info] Primary candidate for the new Master (candidate_master is set)

Wed Feb 27 11:13:45 2019 - [info] 172.25.254.3(172.25.254.3:3306) Version=5.7.24-log (oldest major version between slaves) log-bin:enabled

Wed Feb 27 11:13:45 2019 - [info] GTID ON

Wed Feb 27 11:13:45 2019 - [info] Replicating from 172.25.254.1(172.25.254.1:3306)

Wed Feb 27 11:13:45 2019 - [info] Not candidate for the new Master (no_master is set)

Wed Feb 27 11:13:45 2019 - [info] Current Alive Master: 172.25.254.1(172.25.254.1:3306)

Wed Feb 27 11:13:45 2019 - [info] Checking slave configurations..

Wed Feb 27 11:13:45 2019 - [info] Checking replication filtering settings..

Wed Feb 27 11:13:45 2019 - [info] binlog_do_db= , binlog_ignore_db=

Wed Feb 27 11:13:45 2019 - [info] Replication filtering check ok.

Wed Feb 27 11:13:45 2019 - [info] GTID (with auto-pos) is supported. Skipping all SSH and Node package checking.

Wed Feb 27 11:13:45 2019 - [info] Checking SSH publickey authentication settings on the current master..

Wed Feb 27 11:13:45 2019 - [info] HealthCheck: SSH to 172.25.254.1 is reachable.

Wed Feb 27 11:13:45 2019 - [info]

172.25.254.1(172.25.254.1:3306) (current master)

+--172.25.254.2(172.25.254.2:3306)

+--172.25.254.3(172.25.254.3:3306)

Wed Feb 27 11:13:45 2019 - [info] Checking replication health on 172.25.254.2..

Wed Feb 27 11:13:45 2019 - [info] ok.

Wed Feb 27 11:13:45 2019 - [info] Checking replication health on 172.25.254.3..

Wed Feb 27 11:13:45 2019 - [info] ok.

Wed Feb 27 11:13:45 2019 - [warning] master_ip_failover_script is not defined.

Wed Feb 27 11:13:45 2019 - [warning] shutdown_script is not defined.

Wed Feb 27 11:13:45 2019 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.
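The two checks above can be wrapped so that the second one only runs if the first succeeds. The sketch below assumes that `masterha_check_ssh` and `masterha_check_repl` exit non-zero when a check fails. `mha_precheck` is a hypothetical name.

```shell
# mha_precheck [CONF]
# Hypothetical wrapper: runs MHA's SSH check, then its replication check,
# and stops at the first failure (relies on the tools' exit codes).
mha_precheck() {
  conf=${1:-/etc/masterha/app1.cnf}
  masterha_check_ssh --conf="$conf" || { echo "SSH check failed" >&2; return 1; }
  masterha_check_repl --conf="$conf" || { echo "replication check failed" >&2; return 1; }
  echo "pre-checks passed for $conf"
}
```

Run `mha_precheck` on server4 before starting any manual or automatic failover.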

Testing:

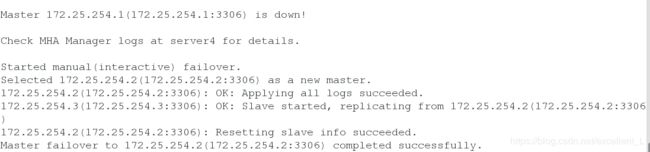

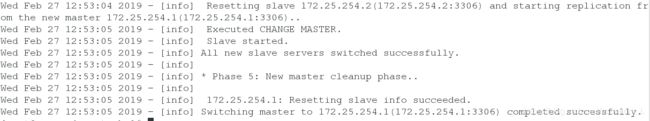

Manual test: after the master (server1) goes down, perform a manual failover from server4.

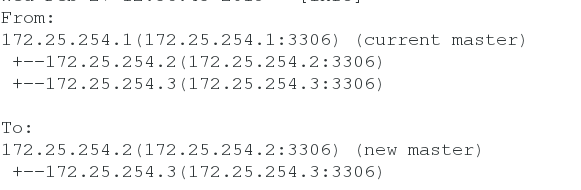

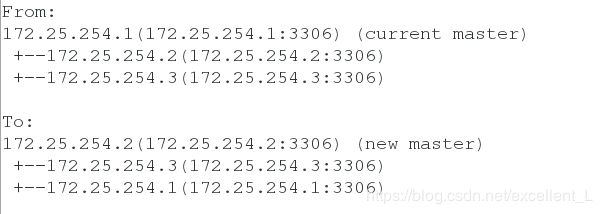

Manual failover on server4: the dead master is 172.25.254.1 and the new master is 172.25.254.2.

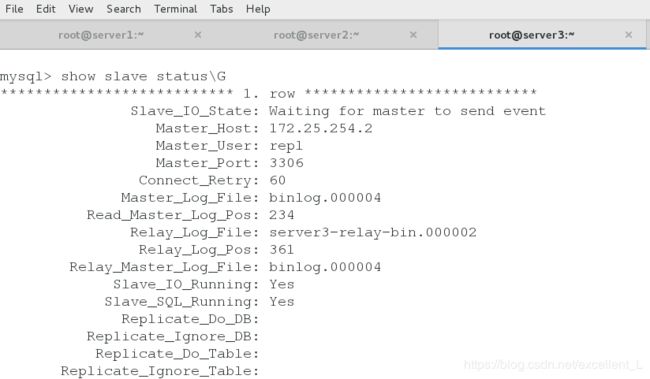

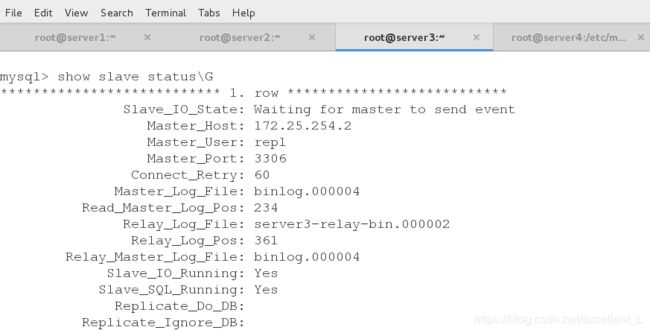
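The command itself is only visible in the screenshots. The following is a minimal sketch of a dead-master switch with `masterha_master_switch`, assuming MySQL port 3306 on both hosts. `mha_dead_switch` is a hypothetical wrapper. The tool asks for confirmation before it proceeds.

```shell
# mha_dead_switch DEAD_HOST NEW_HOST [CONF]
# Hypothetical wrapper around masterha_master_switch for the case where the
# current master is already down. Port 3306 is assumed for both hosts.
mha_dead_switch() {
  if [ $# -lt 2 ]; then
    echo "usage: mha_dead_switch DEAD_HOST NEW_HOST [CONF]" >&2
    return 1
  fi
  masterha_master_switch --master_state=dead \
    --conf="${3:-/etc/masterha/app1.cnf}" \
    --dead_master_host="$1" --dead_master_port=3306 \
    --new_master_host="$2" --new_master_port=3306
}
```

For this test, that is `mha_dead_switch 172.25.254.1 172.25.254.2`.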

Checking on the slave server3 shows that the new master is 172.25.254.2.

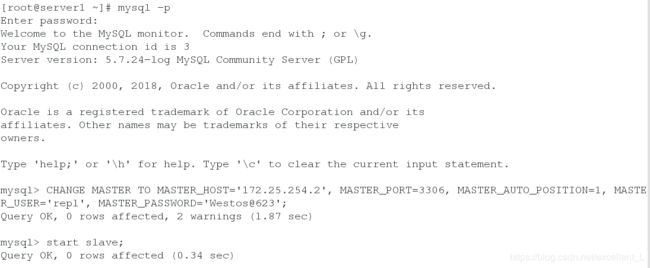
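This check can also be scripted. The sketch below parses `SHOW SLAVE STATUS\G` output and prints `Master_Host`, but only when both replication threads report `Yes`. `current_master` is a hypothetical helper.

```shell
# current_master
# Hypothetical helper: reads `SHOW SLAVE STATUS\G` output on stdin and prints
# Master_Host if Slave_IO_Running and Slave_SQL_Running are both "Yes";
# otherwise prints nothing and returns 1.
current_master() {
  awk -F': ' '
    $1 ~ /^ *Master_Host$/       { host = $2 }
    $1 ~ /^ *Slave_IO_Running$/  { io = $2 }
    $1 ~ /^ *Slave_SQL_Running$/ { sql = $2 }
    END {
      if (host != "" && io == "Yes" && sql == "Yes") { print host; exit 0 }
      exit 1
    }'
}
```

For example, on server3: `mysql -uroot -p -e 'SHOW SLAVE STATUS\G' | current_master`.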

Restart server1 and add it back to the cluster as a slave.


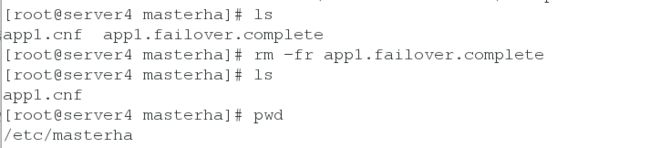
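With GTID enabled, the recovered server1 can rejoin without a binlog file/position by using auto-positioning. The following is a minimal sketch. The replication account comes from the hypothetical variables `REPL_USER` / `REPL_PASS`, and `rejoin_sql` is a hypothetical helper.

```shell
# rejoin_sql NEW_MASTER_HOST
# Hypothetical helper: prints the SQL that turns a recovered former master
# into a read-only slave of NEW_MASTER_HOST using GTID auto-positioning.
rejoin_sql() {
  [ -n "$1" ] || { echo "usage: rejoin_sql NEW_MASTER_HOST" >&2; return 1; }
  printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_USER='%s', MASTER_PASSWORD='%s', MASTER_AUTO_POSITION=1;\n" \
    "$1" "${REPL_USER:-repl}" "${REPL_PASS:-CHANGE_ME}"
  printf 'START SLAVE;\n'
  printf 'SET GLOBAL read_only = 1;\n'
}
```

On server1: `rejoin_sql 172.25.254.2 | mysql -uroot -p`.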

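III. Switching Over While the Master Is Still Running

First, check /etc/masterha for an app1.failover.complete file. If the previous failover left one behind, delete it, because a leftover flag can make MHA refuse the next switch.

Perform the online switchover (with the master still running) from server4.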

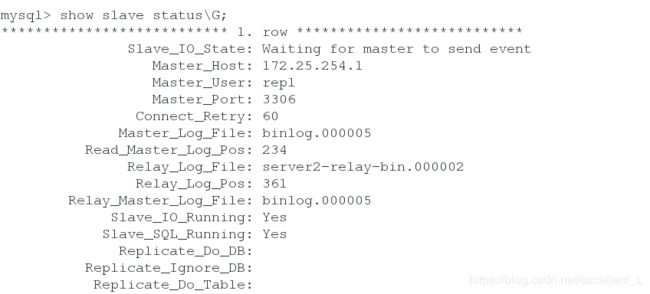
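The switch command appears only in the screenshot. The following is a minimal sketch of both steps, assuming the manager's work directory is /etc/masterha and port 3306. `mha_online_switch` is a hypothetical wrapper. `--orig_master_is_new_slave` makes the old master a slave of the new one. `--running_updates_limit=10000` is a lenient value often used in tutorials (the default is 1 second) and should be tuned before use in production.

```shell
# mha_online_switch NEW_HOST [WORKDIR]
# Hypothetical wrapper: removes a leftover app1.failover.complete flag from
# WORKDIR (default /etc/masterha), then moves the master role to NEW_HOST
# while the current master is still alive.
mha_online_switch() {
  if [ -z "$1" ]; then
    echo "usage: mha_online_switch NEW_HOST [WORKDIR]" >&2
    return 1
  fi
  workdir=${2:-/etc/masterha}
  rm -f "$workdir/app1.failover.complete"
  masterha_master_switch --conf="$workdir/app1.cnf" --master_state=alive \
    --new_master_host="$1" --new_master_port=3306 \
    --orig_master_is_new_slave --running_updates_limit=10000
}
```

In this step the master role moves back to server1: `mha_online_switch 172.25.254.1`.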

On server2, the new master is now server1.

On server3, the master is also server1.

Master-slave switchover with a floating VIP

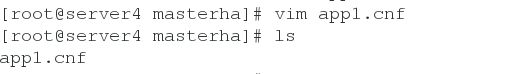

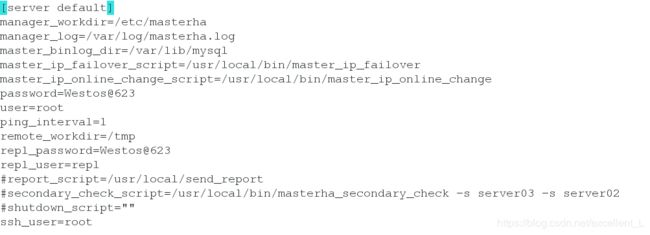

Edit the configuration file and uncomment the VIP script settings.

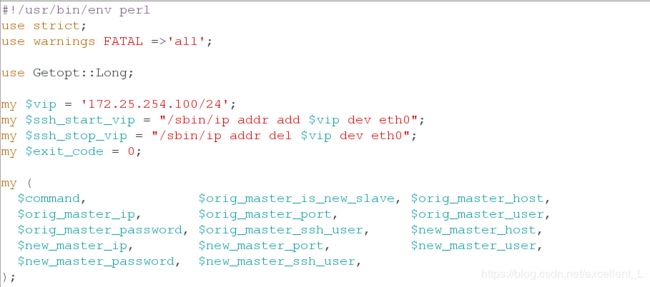
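The uncommenting can be done with `sed`. The following is a minimal sketch. It assumes the commented lines are `master_ip_failover_script` and `master_ip_online_change_script`, the setting names MHA uses for these hooks. The instructor's file may differ. `enable_vip_scripts` is a hypothetical helper. It writes to a temporary file and then renames it, so it does not depend on GNU `sed -i`.

```shell
# enable_vip_scripts CONF
# Hypothetical helper: removes the leading "#" from the
# master_ip_failover_script and master_ip_online_change_script lines
# in an MHA config file, leaving every other line untouched.
enable_vip_scripts() {
  conf=$1
  [ -f "$conf" ] || { echo "no such file: $conf" >&2; return 1; }
  sed -E 's/^#[[:space:]]*(master_ip_(failover|online_change)_script)/\1/' "$conf" > "$conf.tmp" &&
    mv "$conf.tmp" "$conf"
}
```

For example: `enable_vip_scripts /etc/masterha/app1.cnf`.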

Copy the failover scripts provided by the instructor into /usr/local/bin/.

Edit both scripts to match this environment.


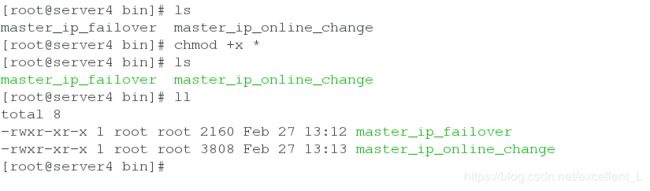

Make the scripts executable.

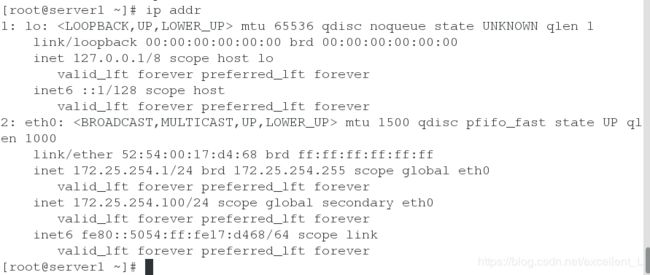

Add the virtual IP on the master (currently server1).

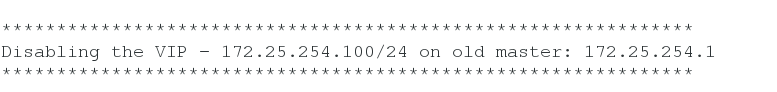

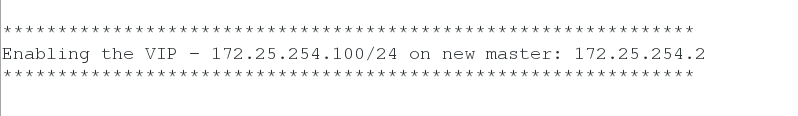
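The VIP address and interface are visible only in the screenshots. The sketch below uses `172.25.254.100/24` on `eth0` purely as hypothetical values, and they must match the VIP set in the scripts. `vip_cmd` is a hypothetical helper that prints the iproute2 command instead of running it, so you can review it first or pipe it to a remote shell.

```shell
# vip_cmd add|del VIP/PREFIX DEV
# Hypothetical helper: prints the iproute2 command that adds or removes a
# floating VIP on DEV. It does not execute the command.
vip_cmd() {
  case "$1" in
    add|del) ;;
    *) echo "usage: vip_cmd add|del VIP/PREFIX DEV" >&2; return 1 ;;
  esac
  [ -n "$2" ] && [ -n "$3" ] || { echo "usage: vip_cmd add|del VIP/PREFIX DEV" >&2; return 1; }
  echo "ip addr $1 $2 dev $3"
}
```

For example: `vip_cmd add 172.25.254.100/24 eth0 | ssh root@172.25.254.1 sh`.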

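Run the switchover manually from the manager.

The master moves from 172.25.254.1 to 172.25.254.2.

Check the floating IP on 172.25.254.1 and 172.25.254.2:

The virtual IP is gone from server1.

The virtual IP now appears on server2.

server3 shows that the current master is 172.25.254.2.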

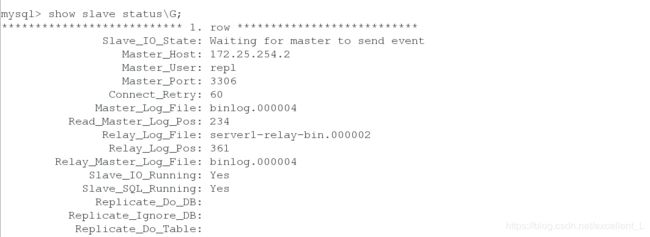
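The VIP check can also be scripted. The following is a minimal sketch that reads `ip -o -4 addr show` output and succeeds if a given address is assigned. `has_vip` is a hypothetical helper, and the VIP in the usage example is a placeholder.

```shell
# has_vip VIP
# Hypothetical helper: reads `ip -o -4 addr show` output on stdin and
# returns 0 if VIP (without prefix length) is among the inet addresses.
has_vip() {
  awk -v vip="$1" '
    {
      for (i = 1; i < NF; i++)
        if ($i == "inet") {
          split($(i + 1), a, "/")
          if (a[1] == vip) found = 1
        }
    }
    END { exit (found ? 0 : 1) }'
}
```

For example: `ssh root@172.25.254.2 ip -o -4 addr show | has_vip 172.25.254.100 && echo "VIP is on server2"`.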

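Automatic failover

Start automatic failover monitoring on server4.

The master is currently server2.

When the master is stopped, MHA fails over automatically and server1 becomes the new master.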
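The start command is only in a screenshot. The following is a minimal sketch of running `masterha_manager` in the background, assuming the log goes to `manager.log` next to the config file. `start_mha_manager` is a hypothetical wrapper. `masterha_manager` exits after it completes a failover, so it has to be started again before it can handle the next one.

```shell
# start_mha_manager [CONF]
# Hypothetical wrapper: starts masterha_manager detached from the terminal,
# logging to manager.log in the same directory as CONF.
start_mha_manager() {
  conf=${1:-/etc/masterha/app1.cnf}
  log="$(dirname "$conf")/manager.log"
  # --remove_dead_master_conf: drop the failed master's block from CONF
  # --ignore_last_failover: do not refuse to act because of a recent failover
  nohup masterha_manager --conf="$conf" \
    --remove_dead_master_conf --ignore_last_failover \
    < /dev/null > "$log" 2>&1 &
}
```

After `start_mha_manager`, run `masterha_check_status --conf=/etc/masterha/app1.cnf` to confirm that the manager is monitoring the current master.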


On server1, check that the virtual IP has floated over to it.

On server2, the virtual IP is gone, confirming the switchover.

Restore server2 as a slave.