This article comes from my colleague Boss Jia; I was responsible for editing it.

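1. Preparation

Prepare the installation packages

NVIDIA-Linux-x86_64-381.22.run # latest GPU driver at the time of writing

cuda_8.0.61_375.26_linux.run # latest CUDA installer at the time of writing

cudnn-8.0-linux-x64-v6.0.tgz # cuDNN library v6.0

cudnn-8.0-linux-x64-v5.1.tgz # cuDNN library v5.1 (backup)

Install the base environment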

# Check the GPU

$ lspci | grep -i vga

04:00.0 VGA compatible controller: NVIDIA Corporation Device 1b00 (rev a1)

# Check the OS version and make sure it is supported (a 64-bit Linux system is required)

$ uname -m && cat /etc/*release

x86_64

CentOS Linux release 7.2.1511 (Core)

# Install GCC

$ yum install gcc gcc-c++

# Install the kernel headers packages

$ yum install kernel-devel-$(uname -r) kernel-headers-$(uname -r)
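The two checks above can also be scripted. The following is a minimal sketch, not part of the original procedure: the helper names `find_nvidia_gpus` and `is_supported_arch` are hypothetical, and the functions only parse text that you pass in from `lspci` and `uname -m`.

```python
import re


def find_nvidia_gpus(lspci_output):
    """Return the lspci lines that describe an NVIDIA VGA controller."""
    return [
        line.strip()
        for line in lspci_output.splitlines()
        if re.search(r"vga", line, re.IGNORECASE) and "NVIDIA" in line
    ]


def is_supported_arch(uname_m_output):
    """The guide requires 64-bit Linux, i.e. `uname -m` prints x86_64."""
    return uname_m_output.strip() == "x86_64"


# Sample text copied from the command output shown above.
sample_lspci = (
    "04:00.0 VGA compatible controller: "
    "NVIDIA Corporation Device 1b00 (rev a1)"
)
print(find_nvidia_gpus(sample_lspci))
print(is_supported_arch("x86_64\n"))
```

On a real machine the input strings would come from running the two commands, for example through `subprocess.check_output`.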

2. Install the GPU driver

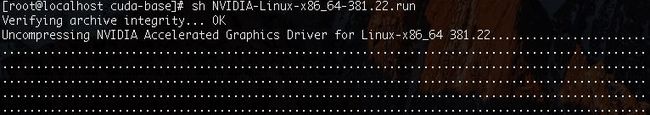

$ sh NVIDIA-Linux-x86_64-381.22.run

- Start the installation

- Choose Accept

- Building kernel modules: the installation proceeds

-

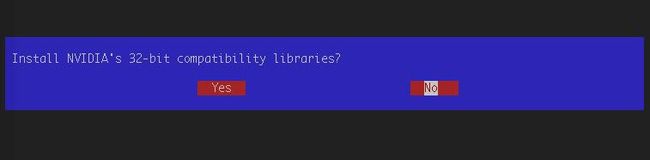

32bit兼容包选择, 这里要注意选择 No,不然后面就会出错。

-

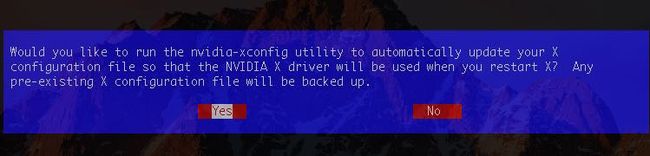

X- configuration 的选择页为 Yes

后面的都选择默认即可

3. Install CUDA

- Start the installation

$ sh cuda_8.0.61_375.26_linux.run

# accept

-------------------------------------------------------------

Do you accept the previously read EULA?

accept/decline/quit: accept

# no

Install NVIDIA Accelerated Graphics Driver for Linux-x86_64 375.26?

(y)es/(n)o/(q)uit: n

-------------------------------------------------------------

# For the remaining prompts, choose yes or accept the default

Do you want to install the OpenGL libraries?

(y)es/(n)o/(q)uit [ default is yes ]:

Do you want to run nvidia-xconfig?

This will update the system X configuration file so that the NVIDIA X driver

is used. The pre-existing X configuration file will be backed up.

This option should not be used on systems that require a custom

X configuration, such as systems with multiple GPU vendors.

(y)es/(n)o/(q)uit [ default is no ]: y

Install the CUDA 8.0 Toolkit?

(y)es/(n)o/(q)uit: y

Enter Toolkit Location

[ default is /usr/local/cuda-8.0 ]:

Do you want to install a symbolic link at /usr/local/cuda?

(y)es/(n)o/(q)uit: y

Install the CUDA 8.0 Samples?

(y)es/(n)o/(q)uit: y

Enter CUDA Samples Location

[ default is /root ]:

Installing the NVIDIA display driver...

The driver installation has failed due to an unknown error. Please consult the driver installation log located at /var/log/nvidia-installer.log.

===========

= Summary =

===========

Driver: Not Selected

Toolkit: Installed in /usr/local/cuda-8.0

Samples: Installed in /root, but missing recommended libraries

Please make sure that

- PATH includes /usr/local/cuda-8.0/bin

- LD_LIBRARY_PATH includes /usr/local/cuda-8.0/lib64, or, add /usr/local/cuda-8.0/lib64 to /etc/ld.so.conf and run ldconfig as root

To uninstall the CUDA Toolkit, run the uninstall script in /usr/local/cuda-8.0/bin

Please see CUDA_Installation_Guide_Linux.pdf in /usr/local/cuda-8.0/doc/pdf for detailed information on setting up CUDA.

***WARNING: Incomplete installation! This installation did not install the CUDA Driver. A driver of version at least 361.00 is required for CUDA 8.0 functionality to work.

To install the driver using this installer, run the following command, replacing <CudaInstaller> with the name of this run file:

sudo <CudaInstaller>.run -silent -driver

Logfile is /tmp/cuda_install_192.log
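The warning in the log says CUDA 8.0 needs a driver of version at least 361.00. Since the bundled 375.26 driver was skipped in favor of the 381.22 driver from section 2, a quick comparison confirms that the requirement is still met. This is a minimal sketch; the helper names `parse_version` and `driver_is_new_enough` are hypothetical.

```python
def parse_version(text):
    """Turn a dotted version such as '381.22' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def driver_is_new_enough(installed, required="361.00"):
    """Compare versions numerically, component by component."""
    return parse_version(installed) >= parse_version(required)


# 381.22 is the driver installed in section 2 of this guide.
print(driver_is_new_enough("381.22"))
```

Comparing tuples of integers avoids the pitfalls of comparing version strings lexically.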

- Verify the installation

# Add environment variables

# Append the following two lines to the end of ~/.bashrc

export PATH=/usr/local/cuda-8.0/bin:$PATH

export LD_LIBRARY_PATH=/usr/local/cuda-8.0/lib64:/usr/local/cuda-8.0/extras/CUPTI/lib64:$LD_LIBRARY_PATH

# Apply the changes

$ source ~/.bashrc
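To confirm that the two `export` lines took effect, one option is to check the environment from Python. The sketch below assumes the default toolkit location `/usr/local/cuda-8.0` used in this guide; `missing_cuda_entries` is a hypothetical helper name.

```python
import os

CUDA_HOME = "/usr/local/cuda-8.0"  # default toolkit location from the installer
REQUIRED = {
    "PATH": [CUDA_HOME + "/bin"],
    "LD_LIBRARY_PATH": [
        CUDA_HOME + "/lib64",
        CUDA_HOME + "/extras/CUPTI/lib64",
    ],
}


def missing_cuda_entries(env):
    """Return (variable, path) pairs that the environment still lacks."""
    missing = []
    for var, needed in REQUIRED.items():
        present = env.get(var, "").split(":")
        missing.extend((var, path) for path in needed if path not in present)
    return missing


# An empty list means both variables contain the CUDA directories.
print(missing_cuda_entries(dict(os.environ)))
```

The comparison is an exact string match, so a path written with a trailing slash would be reported as missing.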

# Verify the installation

$ nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2016 NVIDIA Corporation

Built on Tue_Jan_10_13:22:03_CST_2017

Cuda compilation tools, release 8.0, V8.0.61

$ nvidia-smi

+-----------------------------------------------------------------------------+
| NVIDIA-SMI 381.22                 Driver Version: 381.22                    |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Graphics Device     Off  | 0000:02:00.0     Off |                  N/A |
| 21%   50C    P8    33W / 265W |      8MiB / 11172MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID  Type  Process name                               Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
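If the verification needs to run unattended, the version numbers can be pulled out of the two command outputs. This is a minimal sketch that assumes the output formats shown above; `cuda_release` and `driver_version` are hypothetical helper names.

```python
import re


def cuda_release(nvcc_output):
    """Return (release, build) from `nvcc -V` output, or None if absent."""
    match = re.search(r"release (\d+\.\d+), V([\d.]+)", nvcc_output)
    return match.groups() if match else None


def driver_version(smi_output):
    """Return the driver version from the `nvidia-smi` header, or None."""
    match = re.search(r"Driver Version:\s*([\d.]+)", smi_output)
    return match.group(1) if match else None


# Sample lines copied from the outputs shown above.
nvcc_sample = "Cuda compilation tools, release 8.0, V8.0.61"
smi_sample = "| NVIDIA-SMI 381.22 Driver Version: 381.22 |"
print(cuda_release(nvcc_sample))
print(driver_version(smi_sample))
```

Both functions return `None` when the expected text is missing, which is a reasonable signal that the tool is not installed or its output format differs from the one assumed here.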

4. Install the cuDNN library

$ tar -xvzf cudnn-8.0-linux-x64-v6.0.tgz

$ cp -P cuda/include/cudnn.h /usr/local/cuda-8.0/include

$ cp -P cuda/lib64/libcudnn* /usr/local/cuda-8.0/lib64

$ chmod a+r /usr/local/cuda-8.0/include/cudnn.h /usr/local/cuda-8.0/lib64/libcudnn*
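Because two cuDNN archives (v6.0 and v5.1) are kept on hand, it helps to confirm which one ended up in the toolkit directory. The sketch below assumes that `cudnn.h` defines the macros `CUDNN_MAJOR`, `CUDNN_MINOR` and `CUDNN_PATCHLEVEL`; the helper name `cudnn_version` is hypothetical, and the sample header text (including its patch level) is made up for illustration.

```python
import re


def cudnn_version(header_text):
    """Extract (major, minor, patch) from the text of cudnn.h, or None."""
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(r"#define\s+" + name + r"\s+(\d+)", header_text)
        if match is None:
            return None
        parts.append(int(match.group(1)))
    return tuple(parts)


# Illustrative header fragment; the patch level is an invented value.
sample_header = """
#define CUDNN_MAJOR 6
#define CUDNN_MINOR 0
#define CUDNN_PATCHLEVEL 21
"""
print(cudnn_version(sample_header))
```

In practice the input would be the contents of `/usr/local/cuda-8.0/include/cudnn.h`, the file copied in the step above.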

5. Using CUDA in Docker

This section describes how to use CUDA inside Docker, mainly through nvidia-docker.

- Install Docker

$ yum install docker

# Start the Docker service and enable it at boot

$ systemctl start docker.service

$ systemctl enable docker.service

- Install nvidia-docker

# 1. Install nvidia-docker and nvidia-docker-plugin

$ wget -P /tmp https://github.com/NVIDIA/nvidia-docker/releases/download/v1.0.1/nvidia-docker-1.0.1-1.x86_64.rpm

$ sudo rpm -i /tmp/nvidia-docker*.rpm && rm /tmp/nvidia-docker*.rpm

$ sudo systemctl start nvidia-docker

# 2. Start a container with nvidia-docker

$ nvidia-docker run -it --name=CONTAINER_NAME -d DOCKER_IMAGE_NAME /bin/bash

# 3. Enter the container

$ docker attach CONTAINER_NAME

Note on step 2 (starting a container with nvidia-docker):

The image must be rebuilt with the label that nvidia-docker requires; otherwise the resulting container cannot use the GPU.
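The article does not say which label is meant. As an assumption, the sketch below uses `com.nvidia.volumes.needed="nvidia_driver"`, the label that the official `nvidia/cuda` images carried for nvidia-docker 1.0; verify it against the nvidia-docker documentation for your version before relying on it. The helper name `dockerfile_with_gpu_label` is hypothetical, and `DOCKER_IMAGE_NAME` is the same placeholder used in the commands above.

```python
def dockerfile_with_gpu_label(base_image):
    """Build Dockerfile text that derives from base_image and adds the label."""
    lines = [
        "FROM " + base_image,
        # Assumed label: the one nvidia-docker 1.0 is believed to look for.
        'LABEL com.nvidia.volumes.needed="nvidia_driver"',
    ]
    return "\n".join(lines) + "\n"


# DOCKER_IMAGE_NAME is a placeholder; substitute your own image name.
print(dockerfile_with_gpu_label("DOCKER_IMAGE_NAME"))
```

Saving the printed text as a `Dockerfile` and running `docker build` on it produces a derived image that differs from the base image only by the added label.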

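6. Using the GPU with TensorFlow

Download and install the GPU build of TensorFlow, then run the following code as a test. If it runs without errors, CUDA is installed correctly.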

import tensorflow as tf

# Build a graph.

a = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[2, 3], name='a')

b = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[3, 2], name='b')

c = tf.matmul(a, b)

# Create a session with log_device_placement set to True (TensorFlow 1.x API).

sess = tf.Session(config=tf.ConfigProto(log_device_placement=True))

# Run the op. The function-call form of print works in both Python 2 and 3.

print(sess.run(c))
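To know what a correct run should print, the same 2x3 by 3x2 product can be computed in plain Python without TensorFlow. This is only a reference calculation; the helper `matmul` is a hypothetical name and is unrelated to `tf.matmul`.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
        for row in a
    ]


# Same values as the tf.constant tensors in the test script.
a = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
b = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(matmul(a, b))  # [[22.0, 28.0], [49.0, 64.0]]
```

The TensorFlow script should report the same four values. With `log_device_placement=True` it also logs which device each op ran on, which is the place to check that the GPU was actually used.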

7. Using the GPU with OpenFace

# Start the prebuilt OpenFace image chinapnr/openface:0.3

$ nvidia-docker run -it -v /local-folder/:/root/openface_file/ --name=openface -d chinapnr/openface:0.3 /bin/bash

# Enter the container

$ docker exec -it openface /bin/bash

# Train the model

$ cd /root/openface/training

$ ./main.lua -data /root/cuda-base/openface/CASPEAL-align-folder/ -device 1 -nGPU 1 -testing -alpha 0.2 -nEpochs 100 -epochSize 160 -peoplePerBatch 35 -imagesPerPerson 20 -retrain /root/openface/models/openface/nn4.small2.v1.t7 -modelDef /root/openface/models/openface/nn4.small2.def.lua -cache ../../cuda-base/openface/work
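For a rough sense of how much work the training command requests, the flag values can be multiplied out. This sketch assumes that `-epochSize` counts mini-batches per epoch and that each mini-batch samples up to `-imagesPerPerson` images for each of `-peoplePerBatch` people; check these meanings against the OpenFace training options before relying on the numbers. The helper name `training_budget` is hypothetical.

```python
def training_budget(n_epochs, epoch_size, people_per_batch, images_per_person):
    """Return (total mini-batches, upper bound on images per mini-batch)."""
    total_batches = n_epochs * epoch_size
    max_images_per_batch = people_per_batch * images_per_person
    return total_batches, max_images_per_batch


# Values taken from the command line above.
print(training_budget(100, 160, 35, 20))  # (16000, 700)
```

Under those assumptions the run covers 16000 mini-batches of at most 700 images each, which is useful when estimating GPU memory needs and training time.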