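In today's deep learning exercise, we'll teach the computer to recognize handwritten digits. Although it's called deep learning, our network has only two layers: a 32-unit hidden layer that takes the 784-pixel input, and a 10-unit output layer. But don't underestimate two layers: our accuracy already exceeds 90%.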

Without further ado, here's the code:

from keras.models import Sequential

from keras.layers import Dense, Activation

from keras import optimizers

from keras.datasets import mnist

from keras.utils import to_categorical

import matplotlib.pyplot as plt

# collecting the data

(x_train, y_train), (x_test, y_test) = mnist.load_data('mnist.pkl.gz')

x = x_test[6].astype('float32') / 125

x_train = x_train.reshape(60000, 784).astype('float32') / 125

x_test = x_test.reshape(x_test.shape[0], 784).astype('float32') / 125

y_train = to_categorical(y_train, num_classes=10)

y_test = to_categorical(y_test, num_classes=10)

# design the model

model = Sequential()

model.add(Dense(32, input_dim=784))

model.add(Activation('relu'))

model.add(Dense(10))

model.add(Activation('softmax'))

# compile the model and pick the loss function and the optimizer

rms = optimizers.RMSprop(learning_rate=0.01, epsilon=1e-8, rho=0.9)

model.compile(optimizer=rms, loss='categorical_crossentropy', metrics=['accuracy'])

# training the model

model.fit(x_train, y_train, batch_size=20, epochs=2, shuffle=True)

# test the model

score = model.evaluate(x_test, y_test, batch_size=500)

h = model.predict(x.reshape(1, -1), batch_size=1).argmax(axis=1)

print('loss:\t', score[0], '\naccuracy:\t', score[1])

print('\nclass:\t', h)

plt.imshow(x)

plt.show()

OK, as usual, let's start with the first block of code.

from keras.models import Sequential

from keras.layers import Dense, Activation

from keras import optimizers

from keras.datasets import mnist

from keras.utils import to_categorical

import matplotlib.pyplot as plt

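- Modules covered in earlier articles won't be explained again, since those articles went through them in detail. If something I don't explain here is unclear, see the earlier posts: Linear regression | Keras Day 1, Classification | Keras Day 2, Multi-class classification | Keras Day 3.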

- The first new module today is mnist, one of the datasets bundled with Keras. We import it to get the MNIST training and test data; the code below explains the details.

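- Another new import is to_categorical, a function that converts integer class labels into one-hot vectors (an array of labels becomes a 2D matrix with one row per label). For example, with 5 classes labeled 0, 1, 2, 3, 4, to_categorical turns label 4 into [0, 0, 0, 0, 1].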

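- The last new module is matplotlib.pyplot. matplotlib is a Python package for drawing charts and figures; today we use it to visualize an image from the dataset. I recommend installing it through Anaconda, a package and environment manager that handles dependencies well, especially on Windows. Installation guides are easy to find on Google or Baidu, or on the official site. Installing matplotlib with pip also works.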
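To make the one-hot idea concrete, here is a minimal NumPy sketch of what to_categorical does for integer labels. The helper name one_hot is hypothetical (it is not part of Keras), and it assumes the labels are already integers from 0 to num_classes - 1.

```python
import numpy as np


def one_hot(labels, num_classes):
    """Hypothetical helper: one-hot encode integer labels like to_categorical."""
    labels = np.asarray(labels, dtype=int)
    # One row per label, one column per class, all zeros to start
    encoded = np.zeros((labels.shape[0], num_classes), dtype='float32')
    # Set a 1 in the column matching each label
    encoded[np.arange(labels.shape[0]), labels] = 1.0
    return encoded


encoded = one_hot([0, 4, 2], num_classes=5)
print(encoded)  # label 4 becomes the row [0, 0, 0, 0, 1]
```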

Collecting the data

# collecting the data

(x_train, y_train), (x_test, y_test) = mnist.load_data('mnist.pkl.gz')

x = x_test[6].astype('float32') / 125

x_train = x_train.reshape(60000, 784).astype('float32') / 125

x_test = x_test.reshape(x_test.shape[0], 784).astype('float32') / 125

y_train = to_categorical(y_train, num_classes=10)

y_test = to_categorical(y_test, num_classes=10)

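- mnist.load_data() gives us the training and test data. One thing to note: the argument in the parentheses is optional. My first download failed and left a corrupted file, so I passed a different file name. In Keras 2.x this argument is the name of the local cache file (relative to ~/.keras/datasets, default 'mnist.npz'), so passing a new name such as 'mnist.pkl.gz' simply forces a fresh download.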

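- x_train as loaded has shape (60000, 28, 28), so we reshape it into a form suitable for training: each 28×28 image becomes a 784-dimensional vector. In reshape(60000, 784), 60000 is the number of samples and 784 is the dimension of each sample. x_test is handled the same way; x_test.shape[0] is its number of samples (10000).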

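- Why divide by 125? Honestly, whether and by how much you scale is up to you. Each element of x_train is an integer between 0 and 255; dividing by 125 maps it to roughly 0 to 2 (at most 255/125 = 2.04), which makes training easier. Dividing by 255 to get values in [0, 1] is the more common choice. Convert to float first (for example with astype('float32')): under Python 2, / on an integer NumPy array is integer division and would round every pixel down to 0, 1 or 2.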

- Next comes to_categorical, which was already explained above, so I won't repeat it.
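The preprocessing steps above (flatten, convert to float, scale) can be sketched in NumPy on fake data. The random images are only a stand-in for MNIST, assumed to have the same 28×28 shape and uint8 dtype; the last step shows what integer division would do to the pixels.

```python
import numpy as np

# Fake stand-in for MNIST: 5 random 28x28 grayscale images with uint8 pixels (0-255)
rng = np.random.RandomState(0)
images = rng.randint(0, 256, size=(5, 28, 28)).astype(np.uint8)

# Flatten every 28x28 image into a 784-dimensional vector
flat = images.reshape(images.shape[0], 784)

# Convert to float first, then scale: values end up in [0, 255/125] = [0, 2.04]
scaled = flat.astype('float32') / 125

# Integer division (what "/" did on integer arrays in Python 2) keeps only 0, 1 or 2
truncated = flat // 125

print(flat.shape)  # (5, 784)
print(scaled.min(), scaled.max())
print(np.unique(truncated))
```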

Designing the model

# design the model

model = Sequential()

model.add(Dense(32, input_dim=784))

model.add(Activation('relu'))

model.add(Dense(10))

model.add(Activation('softmax'))

Nothing new here; the previous few articles covered this in detail.
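For readers who want to see what the two Dense layers compute, here is a rough NumPy sketch of the forward pass for a batch. The weights are random and untrained, and their initialization is an arbitrary assumption (Keras uses its own initializers); only the shapes and the relu/softmax steps mirror the model above. It also counts the parameters: 784×32 + 32 for the first layer and 32×10 + 10 for the second.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def softmax(z):
    # Subtract each row's max for numerical stability; rows then sum to 1
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


rng = np.random.RandomState(0)
# Random (untrained) weights with the shapes of Dense(32, input_dim=784) and Dense(10)
W1, b1 = rng.normal(0.0, 0.05, size=(784, 32)), np.zeros(32)
W2, b2 = rng.normal(0.0, 0.05, size=(32, 10)), np.zeros(10)


def forward(x):
    hidden = relu(x @ W1 + b1)        # (batch, 32)
    return softmax(hidden @ W2 + b2)  # (batch, 10)


batch = rng.rand(4, 784)  # 4 fake flattened images
probs = forward(batch)
print(probs.shape)          # (4, 10)
print(probs.argmax(axis=1))  # predicted class per image

n_params = W1.size + b1.size + W2.size + b2.size
print(n_params)  # 25120 + 330 = 25450
```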

Choosing the optimizer and loss function, and compiling the model

# compile the model and pick the loss function and the optimizer

rms = optimizers.RMSprop(learning_rate=0.01, epsilon=1e-8, rho=0.9)

model.compile(optimizer=rms, loss='categorical_crossentropy', metrics=['accuracy'])

- The only new thing here is the RMSprop optimizer. For details, see the optimizer comparison article.
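As a rough idea of what RMSprop does with rho and epsilon, here is a textbook-style sketch of one update step, applied to a toy one-dimensional problem. It is not Keras's code, and details such as where epsilon enters the denominator have varied between implementations; the function name rmsprop_step and the toy objective (w - 3)^2 are made up for illustration.

```python
import numpy as np


def rmsprop_step(param, grad, cache, lr=0.01, rho=0.9, epsilon=1e-8):
    """One textbook RMSprop update; returns (new_param, new_cache)."""
    # Moving average of squared gradients, decayed by rho
    cache = rho * cache + (1.0 - rho) * grad ** 2
    # Divide the step by the root of that average, so its size adapts per parameter
    param = param - lr * grad / (np.sqrt(cache) + epsilon)
    return param, cache


# Toy problem: minimise f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
w, cache = 0.0, 0.0
for _ in range(2000):
    w, cache = rmsprop_step(w, 2.0 * (w - 3.0), cache)
print(w)  # ends up close to 3
```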

Training the model

# training the model

model.fit(x_train, y_train, batch_size=20, epochs=2, shuffle=True)

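Again, nothing different from the previous articles. A word on batch_size: it is the number of samples used for each gradient update. With 60000 training samples and batch_size=20, each epoch performs 3000 updates. Smaller batches mean more updates per epoch; larger batches make better use of a GPU's parallelism and speed up each epoch.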
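The batch arithmetic can be checked with a small sketch that cuts one shuffled epoch into index batches. iterate_minibatches is a hypothetical helper that only reproduces the bookkeeping, not how Keras implements fit() internally.

```python
import numpy as np


def iterate_minibatches(n_samples, batch_size, shuffle=True, seed=0):
    """Hypothetical helper: yield one index array per gradient update in an epoch."""
    indices = np.arange(n_samples)
    if shuffle:
        np.random.RandomState(seed).shuffle(indices)
    for start in range(0, n_samples, batch_size):
        yield indices[start:start + batch_size]


batches = list(iterate_minibatches(60000, 20))
print(len(batches))     # 60000 / 20 = 3000 updates per epoch
print(len(batches[0]))  # 20 samples per update

# A larger batch, e.g. batch_size=500, gives only 120 updates per epoch
print(len(list(iterate_minibatches(60000, 500))))
```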

Testing the model

# test the model

score = model.evaluate(x_test, y_test, batch_size=500)

h = model.predict(x.reshape(1, -1), batch_size=1).argmax(axis=1)

print('loss:\t', score[0], '\naccuracy:\t', score[1])

print('\nclass:\t', h)

plt.imshow(x)

plt.show()

- First we evaluate the trained model on the test set, which gives us the loss and the accuracy.

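- Then we use predict_classes() (removed in newer Keras versions; there, model.predict(...).argmax(axis=1) does the same job) on a single image. The image must go through the same preprocessing as the training data and be reshaped into a matrix the model accepts. Note that one argument of reshape here is -1. What does that mean? It tells NumPy to work out that dimension from the total size of the array: reshape(1, -1) means one row, with as many columns as needed (784 for a 28×28 image). Still not clear? Here's an example: data below holds 60 numbers, so data.reshape(20, -1) infers 3 columns and gives exactly the same result as data.reshape(20, 3).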

>>> data

array([[ 0.21033279, 0.50292265, 0.71271164, 0.47520358, 0.73701694,

0.82117798, 0.44008419, 0.59581689, 0.07051911, 0.57959824,

0.76565127, 0.51984695, 0.27255042, 0.59663595, 0.48586599,

0.234282 , 0.71937941, 0.99956487, 0.46895412, 0.37871936,

0.81054667, 0.09787709, 0.14693726, 0.81571586, 0.08852998,

0.73211671, 0.29407735, 0.37332085, 0.00451808, 0.60411745,

0.20248406, 0.36436494, 0.25961514, 0.22623853, 0.66947677,

0.54229594, 0.49394167, 0.47603329, 0.90753314, 0.04755629,

0.22703817, 0.69693293, 0.07821929, 0.14584769, 0.69374338,

0.22148599, 0.64267874, 0.79070401, 0.91767048, 0.95359906,

0.17062022, 0.64134807, 0.35871884, 0.86993997, 0.63867876,

0.39333417, 0.06902379, 0.68998664, 0.78482029, 0.94321673]])

>>> data.reshape(20,-1)

array([[ 0.21033279, 0.50292265, 0.71271164],

[ 0.47520358, 0.73701694, 0.82117798],

[ 0.44008419, 0.59581689, 0.07051911],

[ 0.57959824, 0.76565127, 0.51984695],

[ 0.27255042, 0.59663595, 0.48586599],

[ 0.234282 , 0.71937941, 0.99956487],

[ 0.46895412, 0.37871936, 0.81054667],

[ 0.09787709, 0.14693726, 0.81571586],

[ 0.08852998, 0.73211671, 0.29407735],

[ 0.37332085, 0.00451808, 0.60411745],

[ 0.20248406, 0.36436494, 0.25961514],

[ 0.22623853, 0.66947677, 0.54229594],

[ 0.49394167, 0.47603329, 0.90753314],

[ 0.04755629, 0.22703817, 0.69693293],

[ 0.07821929, 0.14584769, 0.69374338],

[ 0.22148599, 0.64267874, 0.79070401],

[ 0.91767048, 0.95359906, 0.17062022],

[ 0.64134807, 0.35871884, 0.86993997],

[ 0.63867876, 0.39333417, 0.06902379],

[ 0.68998664, 0.78482029, 0.94321673]])

>>> data.reshape(20,3)

array([[ 0.21033279, 0.50292265, 0.71271164],

[ 0.47520358, 0.73701694, 0.82117798],

[ 0.44008419, 0.59581689, 0.07051911],

[ 0.57959824, 0.76565127, 0.51984695],

[ 0.27255042, 0.59663595, 0.48586599],

[ 0.234282 , 0.71937941, 0.99956487],

[ 0.46895412, 0.37871936, 0.81054667],

[ 0.09787709, 0.14693726, 0.81571586],

[ 0.08852998, 0.73211671, 0.29407735],

[ 0.37332085, 0.00451808, 0.60411745],

[ 0.20248406, 0.36436494, 0.25961514],

[ 0.22623853, 0.66947677, 0.54229594],

[ 0.49394167, 0.47603329, 0.90753314],

[ 0.04755629, 0.22703817, 0.69693293],

[ 0.07821929, 0.14584769, 0.69374338],

[ 0.22148599, 0.64267874, 0.79070401],

[ 0.91767048, 0.95359906, 0.17062022],

[ 0.64134807, 0.35871884, 0.86993997],

[ 0.63867876, 0.39333417, 0.06902379],

[ 0.68998664, 0.78482029, 0.94321673]])
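To tie evaluate() and prediction together, here is a small NumPy sketch of the two numbers evaluate() reports for this model (mean categorical cross-entropy and accuracy), plus the argmax step that turns a softmax output into a class and the reshape(1, -1) step for a single image. The toy labels and probabilities are made up; Keras also clips probabilities with a small epsilon before taking the log, but its exact batching and averaging may differ from this sketch.

```python
import numpy as np


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # Clip to avoid log(0), then average -sum(y * log(p)) over the samples
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))


def accuracy(y_true, y_pred):
    # Correct when the most probable class equals the one-hot label's class
    return float(np.mean(y_pred.argmax(axis=1) == y_true.argmax(axis=1)))


# Made-up labels and softmax outputs: 3 samples, 3 classes
y_true = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
y_pred = np.array([[0.7, 0.2, 0.1], [0.3, 0.4, 0.3], [0.5, 0.1, 0.4]])
print(categorical_crossentropy(y_true, y_pred))
print(accuracy(y_true, y_pred))  # 2 of the 3 argmaxes match

# A single 28x28 image becomes one 784-column row via reshape(1, -1)
image = np.zeros((28, 28))
row = image.reshape(1, -1)
print(row.shape)  # (1, 784)
```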

- 然后下面就是把我们的数据打印出来。

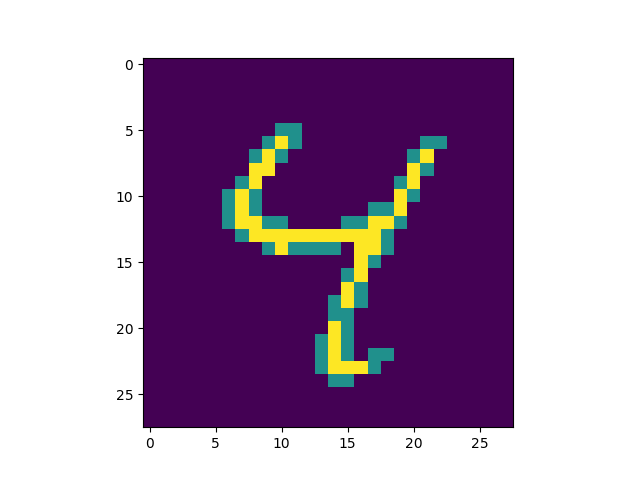

- plt 是先用plt.imshow()把我们的图片传进取,然后通过plt.show()展示出来。

效果如下:

好了,看看运行的结果

/home/kroossun/miniconda2/bin/python /home/kroossun/PycharmProjects/ML/matp.py

Using TensorFlow backend.

Epoch 1/2

2017-09-07 12:57:55.376184: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.1 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-07 12:57:55.376250: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-07 12:57:55.376256: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.

2017-09-07 12:57:55.376260: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.

2017-09-07 12:57:55.376265: W tensorflow/core/platform/cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.


60000/60000 [==============================] - 3s - loss: 0.4007 - acc: 0.9025

Epoch 2/2


60000/60000 [==============================] - 3s - loss: 0.3243 - acc: 0.9366

500/10000 [>.............................] - ETA: 0s

1/1 [==============================] - 0s

loss: 0.348073045909

accuracy: 0.941699999571

class: [4]

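As you can see, the image is a 4 and the model also predicts 4. Accuracy on the test set is 0.94, which is quite good considering the network has only two layers.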