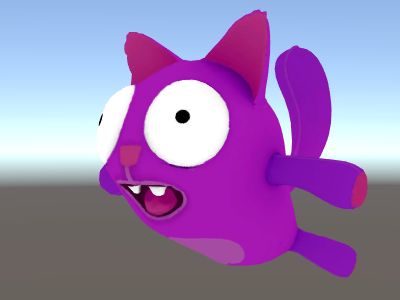

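Shader Study Notes (2): Vertex/Fragment Shader

This article discusses how to write vertex and fragment shaders (Vertex/Fragment Shader).

Concepts

Let's start with a complete example, with comments marking the key parts. Get familiar with the overall structure first; the details are explained afterwards: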

    Shader "Unlit/NewUnlitShader"
    {
        Properties
        {
            _MainTex ("Texture", 2D) = "white" {}
        }
        SubShader
        {
            Tags { "RenderType"="Opaque" }
            LOD 100

            Pass
            {
                // Start of the CG program
                CGPROGRAM
                // Compile directive: use "vert" as the vertex shader
                #pragma vertex vert
                // Compile directive: use "frag" as the fragment shader
                #pragma fragment frag
                #pragma multi_compile_fog

                #include "UnityCG.cginc"

                // Custom struct: vertex shader input
                struct appdata
                {
                    float4 vertex : POSITION;
                    float2 uv : TEXCOORD0;
                };

                // Custom struct: vertex shader output, fragment shader input
                struct v2f
                {
                    float2 uv : TEXCOORD0;
                    UNITY_FOG_COORDS(1)
                    float4 vertex : SV_POSITION;
                };

                sampler2D _MainTex;
                float4 _MainTex_ST;

                // Vertex shader function
                v2f vert (appdata v)
                {
                    v2f o;
                    o.vertex = UnityObjectToClipPos(v.vertex);
                    o.uv = TRANSFORM_TEX(v.uv, _MainTex);
                    UNITY_TRANSFER_FOG(o,o.vertex);
                    return o;
                }

                // Fragment shader function
                fixed4 frag (v2f i) : SV_Target
                {
                    fixed4 col = tex2D(_MainTex, i.uv);
                    UNITY_APPLY_FOG(i.fogCoord, col);
                    return col;
                }
                ENDCG
            }
        }
    }

Understanding the key concepts

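- Programmable rendering pipeline: unlike the fixed-function pipeline, it lets the programmer control part of the pipeline. The programmable part is split into the Vertex Shader and the Fragment Shader.

- Vertex Shader: runs once per vertex. It typically transforms vertex positions from object (model) space to clip space, from which the GPU later derives screen coordinates. Rasterization follows immediately after it.

- Fragment Shader: runs once for every fragment on screen (a fragment can be thought of roughly as a pixel here). It typically computes the color.

- ShaderLab: Unity's own shader description language. Both Surface Shaders and Vertex/Fragment Shaders are written as CG/HLSL code embedded in ShaderLab; for a Surface Shader, Unity additionally generates the vertex and fragment code.

- Shading Language: there are three mainstream shader languages, and all of them can be embedded in ShaderLab. The official recommendation is CG/HLSL (Microsoft and Nvidia developed the two languages in collaboration, so they are essentially equivalent). See the table below: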

| Name | Full name | Developer | Embedding keyword |
|---|---|---|---|
| CG | C for Graphics | Nvidia | CGPROGRAM |
| HLSL | High Level Shading Language | Microsoft | CGPROGRAM (or HLSLPROGRAM) |
| GLSL | OpenGL Shading Language | OpenGL ARB (now Khronos Group) | GLSLPROGRAM |

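DirectX dominates on Windows and in games, while OpenGL has won out on mobile.

There are countless debates about which of the two is better.

Here are some of the reasons DirectX won on the Windows platform:

1. DirectX introduced the programmable rendering pipeline earlier.

2. The OpenGL standards body has too many members, so new standards move forward slowly.

3. DirectX is not just a graphics library; it is a full game development solution that also covers sound, networking, and more.

4. DirectX does not maintain backward compatibility, whereas OpenGL keeps many outdated designs for the sake of compatibility.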
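To make the division of labor between the two programmable stages more concrete, here is a minimal sketch that spells out the vertex transform in two steps instead of calling UnityObjectToClipPos. The shader name and the worldPos field are made up for illustration; unity_ObjectToWorld and UNITY_MATRIX_VP are Unity's built-in matrices.

```shaderlab
Shader "Unlit/ExplicitTransformSketch" // hypothetical name, for illustration only
{
    SubShader
    {
        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            struct v2f
            {
                float3 worldPos : TEXCOORD0; // interpolated across the triangle
                float4 pos : SV_POSITION;    // clip space position
            };

            // Runs once per vertex
            v2f vert (float4 vertex : POSITION)
            {
                v2f o;
                // Step 1: object space -> world space
                float4 world = mul(unity_ObjectToWorld, vertex);
                // Step 2: world space -> clip space (view * projection)
                o.pos = mul(UNITY_MATRIX_VP, world);
                o.worldPos = world.xyz;
                return o;
            }

            // Runs once per fragment produced by rasterization
            fixed4 frag (v2f i) : SV_Target
            {
                // Show the fractional part of the world position as a color
                return fixed4(frac(i.worldPos), 1);
            }
            ENDCG
        }
    }
}
```

Applied to a mesh, this should tint the surface in repeating bands one world unit wide, which makes it easy to see that the value written per vertex reaches the fragment stage interpolated.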

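Vertex/Fragment syntax

- SubShader: provides different implementations for different hardware. At run time Unity checks the SubShaders in order and uses the first one the hardware supports; if all of them fail, it uses the shader named by FallBack.

- Pass: a SubShader contains one or more Passes, and usually one is enough. Multiple Passes can be used to support multiple rendering paths (Rendering Path), with the one to execute chosen at run time; this is common for shadow handling in custom lighting models.

- Shader Semantics: in float4 vertex : POSITION, the part after the colon is the variable's semantic, which is used when exchanging data with the GPU.

- Vertex Shader input and output

  - Input: one of the following three forms:

    - Variables defined by basic semantics: POSITION, NORMAL, TEXCOORD0, TEXCOORD1, …, TANGENT, COLOR.

    - Built-in structs: appdata_base, appdata_tan, appdata_full.

    - Custom structs.

  - Output: always includes SV_POSITION, plus any other varying variables that are needed. For those, the semantic mostly does not matter; TEXCOORD0, TEXCOORD1, and so on will do, as long as each variable gets its own index.

- Fragment Shader input and output

  - Input: the same as the Vertex Shader's output.

  - Output: SV_Target, usually a single RGBA value.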
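The syntax elements listed above can be put together in one small sketch. It takes the built-in appdata_base struct as vertex input, passes two varying variables through separate TEXCOORDn semantics, and names a FallBack shader. The shader name and the color formula are invented for illustration, and "VertexLit" is just one possible fallback.

```shaderlab
Shader "Unlit/SyntaxSketch" // hypothetical name, for illustration only
{
    SubShader
    {
        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc" // defines appdata_base

            struct v2f
            {
                float4 pos : SV_POSITION;      // required output
                float2 uv : TEXCOORD0;         // varying: texture coordinate
                half3 worldNormal : TEXCOORD1; // varying: uses its own TEXCOORDn slot
            };

            // Input is the built-in appdata_base struct (vertex, normal, texcoord)
            v2f vert (appdata_base v)
            {
                v2f o;
                o.pos = UnityObjectToClipPos(v.vertex);
                o.uv = v.texcoord.xy;
                o.worldNormal = UnityObjectToWorldNormal(v.normal);
                return o;
            }

            // Input matches the vertex shader output; output is one RGBA color
            fixed4 frag (v2f i) : SV_Target
            {
                return fixed4(i.uv, i.worldNormal.y * 0.5 + 0.5, 1);
            }
            ENDCG
        }
    }
    // Used when no SubShader above can run on the current hardware
    FallBack "VertexLit"
}
```

With a single SubShader the fallback rarely triggers; it is included only to show where the declaration goes.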

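Worked examples

Here are a few examples from the official Unity manual to deepen understanding:

- SimpleUnlitTextureShader: a simple texture mapping. It transforms the vertex positions and looks up the color in the texture by uv.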

Shader "Unlit/SimpleUnlitTexturedShader"

{

Properties

{

// we have removed support for texture tiling/offset,

// so make them not be displayed in material inspector

[NoScaleOffset] _MainTex ("Texture", 2D) = "white" {}

}

SubShader

{

Pass

{

CGPROGRAM

// use "vert" function as the vertex shader

#pragma vertex vert

// use "frag" function as the pixel (fragment) shader

#pragma fragment frag

// vertex shader inputs

struct appdata

{

float4 vertex : POSITION; // vertex position

float2 uv : TEXCOORD0; // texture coordinate

};

// vertex shader outputs ("vertex to fragment")

struct v2f

{

float2 uv : TEXCOORD0; // texture coordinate

float4 vertex : SV_POSITION; // clip space position

};

// vertex shader

v2f vert (appdata v)

{

v2f o;

// transform position to clip space

// (multiply with model*view*projection matrix)

o.vertex = mul(UNITY_MATRIX_MVP, v.vertex);

// just pass the texture coordinate

o.uv = v.uv;

return o;

}

// texture we will sample

sampler2D _MainTex;

// pixel shader; returns low precision ("fixed4" type)

// color ("SV_Target" semantic)

fixed4 frag (v2f i) : SV_Target

{

// sample texture and return it

fixed4 col = tex2D(_MainTex, i.uv);

return col;

}

ENDCG

}

}

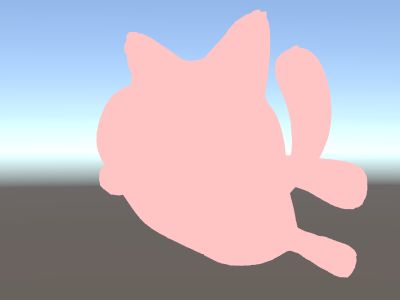

}- SingleColor:给模型赋予单色。vertex只传出SV_POSITION,fragment不传参。CG中直接引用了ShaderLab的Properties参数_Color(ShaderLab和CG的类型存在对应关系)。

Shader "Unlit/SingleColor"

{

Properties

{

// Color property for material inspector, default to white

_Color ("Main Color", Color) = (1,1,1,1)

}

SubShader

{

Pass

{

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

// vertex shader

// this time instead of using "appdata" struct, just spell inputs manually,

// and instead of returning v2f struct, also just return a single output

// float4 clip position

float4 vert (float4 vertex : POSITION) : SV_POSITION

{

return mul(UNITY_MATRIX_MVP, vertex);

}

// color from the material

fixed4 _Color;

// pixel shader, no inputs needed

fixed4 frag () : SV_Target

{

return _Color; // just return it

}

ENDCG

}

}

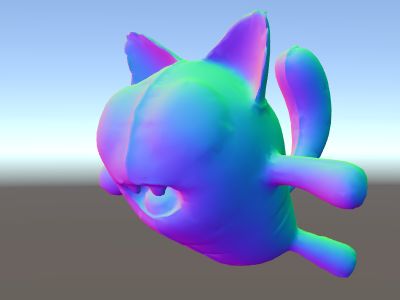

}- WorldSpaceNormal:根据法向量来变色。worldNormal作为varying变量传给fragment。

Shader "Unlit/WorldSpaceNormals"

{

// no Properties block this time!

SubShader

{

Pass

{

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

// include file that contains UnityObjectToWorldNormal helper function

#include "UnityCG.cginc"

struct v2f {

// we'll output world space normal as one of regular ("texcoord") interpolators

half3 worldNormal : TEXCOORD0;

float4 pos : SV_POSITION;

};

// vertex shader: takes object space normal as input too

v2f vert (float4 vertex : POSITION, float3 normal : NORMAL)

{

v2f o;

o.pos = UnityObjectToClipPos(vertex);

// UnityCG.cginc file contains function to transform

// normal from object to world space, use that

o.worldNormal = UnityObjectToWorldNormal(normal);

return o;

}

fixed4 frag (v2f i) : SV_Target

{

fixed4 c = 0;

// normal is a 3D vector with xyz components; in -1..1

// range. To display it as color, bring the range into 0..1

// and put into red, green, blue components

c.rgb = i.worldNormal*0.5+0.5;

return c;

}

ENDCG

}

}

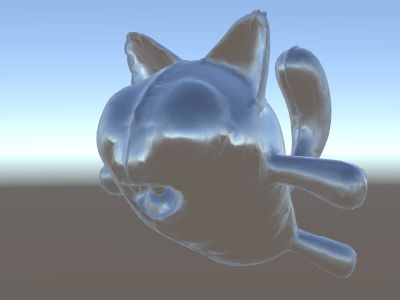

}- SkyReflection:反射环境。对比由Surface Shader的实现,可发现简洁很多。float4表示点,float3表示向量。

      Shader "Unlit/SkyReflection"
      {
          SubShader
          {
              Pass
              {
                  CGPROGRAM
                  #pragma vertex vert
                  #pragma fragment frag
                  #include "UnityCG.cginc"

                  struct v2f {
                      half3 worldRefl : TEXCOORD0;
                      float4 pos : SV_POSITION;
                  };

                  v2f vert (float4 vertex : POSITION, float3 normal : NORMAL)
                  {
                      v2f o;
                      o.pos = UnityObjectToClipPos(vertex);
                      // compute world space position of the vertex
                      // (unity_ObjectToWorld was named _Object2World in older Unity versions)
                      float3 worldPos = mul(unity_ObjectToWorld, vertex).xyz;
                      // compute world space view direction
                      float3 worldViewDir = normalize(UnityWorldSpaceViewDir(worldPos));
                      // world space normal
                      float3 worldNormal = UnityObjectToWorldNormal(normal);
                      // world space reflection vector
                      o.worldRefl = reflect(-worldViewDir, worldNormal);
                      return o;
                  }

                  fixed4 frag (v2f i) : SV_Target
                  {
                      // sample the default reflection cubemap, using the reflection vector
                      half4 skyData = UNITY_SAMPLE_TEXCUBE(unity_SpecCube0, i.worldRefl);
                      // decode cubemap data into actual color
                      half3 skyColor = DecodeHDR (skyData, unity_SpecCube0_HDR);
                      // output it!
                      fixed4 c = 0;
                      c.rgb = skyColor;
                      return c;
                  }
                  ENDCG
              }
          }
      }

More on the relationship with Surface Shaders

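The previous article described Surface Shaders as a simplified tool for writing shaders that reduces repetitive work through encapsulation.

So how is a Surface Shader compiled into Vertex/Fragment Shaders? And in what order do the three run?

My understanding is that the Surface Shader's surf function is called at the start of the fragment shader stage, and that Unity generates the surrounding Vertex/Fragment Shader code at compile time. You can check this by viewing the generated code in the shader inspector built into Unity. The discussion on Zhihu covers this in detail, so I won't expand on it here.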
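For comparison with the Vertex/Fragment examples above, here is a minimal Surface Shader sketch, modeled on the simplest diffuse example in the Unity manual. The shader name and the _Color property are chosen for illustration. Note that there is no Pass block and no vert or frag function; only surf is written by hand.

```shaderlab
Shader "Custom/MinimalSurfaceSketch" // hypothetical name, for illustration only
{
    Properties
    {
        _Color ("Main Color", Color) = (1,1,1,1)
    }
    SubShader
    {
        Tags { "RenderType"="Opaque" }

        CGPROGRAM
        // "surf" is the surface function, "Lambert" the built-in lighting model
        #pragma surface surf Lambert

        // The Input struct must not be empty, so request the vertex color
        struct Input
        {
            float4 color : COLOR;
        };

        fixed4 _Color;

        // Only describes the surface; lighting is applied by generated code
        void surf (Input IN, inout SurfaceOutput o)
        {
            o.Albedo = _Color.rgb;
        }
        ENDCG
    }
    FallBack "Diffuse"
}
```

Selecting a shader like this in the editor and viewing its generated code should show vertex and fragment functions that Unity wrote around surf, which is one way to check the relationship described above.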