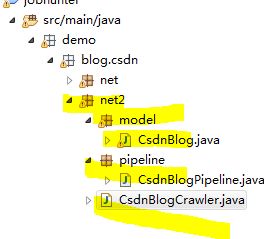

Teaching Myself the webmagic Crawler (Part 4): Crawling CSDN Pages with the Basic "List + Detail" Page Combination

1. For the code that sets up the webmagic project environment, see:

https://blog.csdn.net/qq_29914837/article/details/89309298

2. Crawling CSDN pages with the basic "list + detail" page combination

```java
package demo.blog.csdn.net2.model;

import java.util.Date;
import java.util.List;

import us.codecraft.webmagic.model.annotation.ExtractByUrl;

public class CsdnBlog {

    // Title
    private String article = "";

    // Publish date
    private String time;

    // Author
    private String nick_name = "";

    // Read count
    private int read_count;

    // Tags: the extracted list, plus the list joined into one string
    private List<String> labelList;
    private String label = "";

    // Categories: the extracted list, plus the list joined into one string
    private List<String> categoryList;
    private String category = "";

    // Article body (HTML)
    private String content = "";

    // Link
    @ExtractByUrl
    private String url = "";

    // Collection time
    private Date collect_time;

    public String getUrl() {
        return url;
    }

    public void setUrl(String url) {
        this.url = url;
    }

    public Date getCollect_time() {
        return collect_time;
    }

    public void setCollect_time(Date collect_time) {
        this.collect_time = collect_time;
    }

    public String getArticle() {
        return article;
    }

    public void setArticle(String article) {
        this.article = article;
    }

    public String getTime() {
        return time;
    }

    public void setTime(String time) {
        this.time = time;
    }

    public String getNick_name() {
        return nick_name;
    }

    public void setNick_name(String nick_name) {
        this.nick_name = nick_name;
    }

    public int getRead_count() {
        return read_count;
    }

    public void setRead_count(int read_count) {
        this.read_count = read_count;
    }

    public List<String> getLabelList() {
        return labelList;
    }

    public void setLabelList(List<String> labelList) {
        this.labelList = labelList;
    }

    public List<String> getCategoryList() {
        return categoryList;
    }

    public void setCategoryList(List<String> categoryList) {
        this.categoryList = categoryList;
    }

    public String getContent() {
        return content;
    }

    public void setContent(String content) {
        this.content = content;
    }

    public String getLabel() {
        return label;
    }

    public void setLabel(String label) {
        this.label = label;
    }

    public String getCategory() {
        return category;
    }

    public void setCategory(String category) {
        this.category = category;
    }
}
```
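The model stores each multi-valued field twice: `labelList`/`categoryList` hold the extracted list, and `label`/`category` hold a single string that fits in one database column. The small stand-alone sketch below flattens a list using the same "|" separator as the crawler's `setValue()` and shows how a stored value could be split back into a list. The names `LabelJoinDemo`, `join` and `split` are hypothetical and are not part of webmagic or the original project.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical helper for illustration; not part of webmagic or the original project.
class LabelJoinDemo {

    // Flatten a list of tag names into one "|"-separated string ("" for null or empty)
    static String join(List<String> values) {
        if (values == null || values.isEmpty()) {
            return "";
        }
        return String.join("|", values);
    }

    // Split a stored string back into a list; "|" is escaped because split() takes a regex
    static List<String> split(String joined) {
        if (joined == null || joined.isEmpty()) {
            return Collections.emptyList();
        }
        return Arrays.asList(joined.split("\\|"));
    }

    public static void main(String[] args) {
        String label = join(Arrays.asList("java", "webmagic", "crawler"));
        System.out.println(label);        // java|webmagic|crawler
        System.out.println(split(label)); // [java, webmagic, crawler]
    }
}
```

A tag that itself contains "|" would not survive this round trip. If that can happen, a different separator or a separate tag table may be safer.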

```java
package demo.blog.csdn.net2.pipeline;

import java.sql.Connection;
import java.sql.Date;
import java.sql.PreparedStatement;
import java.sql.SQLException;

import us.codecraft.webmagic.ResultItems;
import us.codecraft.webmagic.Task;
import us.codecraft.webmagic.pipeline.Pipeline;
import util.JdbcUtil;

public class CsdnBlogPipeline implements Pipeline {

    private static final String SQL = "INSERT INTO csdn (article, time, nick_name, read_count, label, category, content, url, collect_time)"
            + " VALUES (?,?,?,?,?,?,?,?,?)";

    @Override
    public void process(ResultItems resultItems, Task task) {
        // List pages put no fields, so only article pages get past this check
        if (resultItems.get("article") == null) {
            return;
        }
        String article = str(resultItems, "article");
        String time = str(resultItems, "time");
        String nick_name = str(resultItems, "nick_name");
        // A missing or non-numeric read count is stored as 0 instead of throwing NumberFormatException
        String readCount = str(resultItems, "read_count");
        int read_count = (readCount != null && readCount.matches("\\d+")) ? Integer.parseInt(readCount) : 0;
        String label = str(resultItems, "label");
        String category = str(resultItems, "category");
        String content = str(resultItems, "content");
        String url = str(resultItems, "url");

        System.out.println(article);

        Connection conn = JdbcUtil.getConnection();
        // try-with-resources closes the statement; the connection is left open as in the original,
        // because how JdbcUtil manages its connections is not shown here
        try (PreparedStatement ptmt = conn.prepareStatement(SQL)) {
            ptmt.setString(1, article);
            ptmt.setString(2, time);
            ptmt.setString(3, nick_name);
            ptmt.setInt(4, read_count);
            ptmt.setString(5, label);
            ptmt.setString(6, category);
            ptmt.setString(7, content);
            ptmt.setString(8, url);
            // java.sql.Date carries only the date part of the collection time
            ptmt.setDate(9, new Date(System.currentTimeMillis()));
            ptmt.executeUpdate();
        } catch (SQLException e) {
            e.printStackTrace();
        }
    }

    // Null-safe toString(): the processor may store Selectable objects or plain Strings
    private static String str(ResultItems items, String key) {
        Object value = items.get(key);
        return value == null ? null : value.toString();
    }
}
```
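`Integer.parseInt` throws `NumberFormatException` when the extracted read count is null or contains anything other than digits, for example when the read-count span is missing from a page. A defensive alternative extracts the first run of digits from the text and falls back to a default. This is a minimal stand-alone sketch. `ReadCountParser` and `parseReadCount` are hypothetical names, and the sample strings are invented rather than copied from CSDN.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration; not part of webmagic or the original project.
class ReadCountParser {

    private static final Pattern DIGITS = Pattern.compile("\\d+");

    // Returns the first run of digits in the text, or the fallback if there is none
    static int parseReadCount(String text, int fallback) {
        if (text == null) {
            return fallback;
        }
        Matcher m = DIGITS.matcher(text);
        if (!m.find()) {
            return fallback;
        }
        try {
            return Integer.parseInt(m.group());
        } catch (NumberFormatException e) {
            // The digit run is too large for an int
            return fallback;
        }
    }

    public static void main(String[] args) {
        System.out.println(parseReadCount("reads 1234", 0)); // 1234
        System.out.println(parseReadCount(null, 0));         // 0
        System.out.println(parseReadCount("n/a", 0));        // 0
    }
}
```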

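The page processor below parses the MVC-style "list + detail" page combination.

webmagic does not automatically discover the next-page URL of a paginated list, so the developer has to add the next-page URL to the page manually. Before adding it, check whether the current list page actually contains article URLs. Otherwise the crawler would keep requesting empty list pages indefinitely.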
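The pagination rule above can be sketched in plain Java without webmagic. The sketch reads the page number from the current list URL and produces the next URL only when the current page yielded article links. `ListPagination` and `nextListUrl` are hypothetical names, and the URL layout `.../article/list/<n>` is assumed from the URLs used in this article.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration; not part of webmagic or the original project.
class ListPagination {

    // Captures the page number in ".../article/list/<n>" (assumed URL layout)
    private static final Pattern PAGE_NO = Pattern.compile("/article/list/(\\d+)");

    // Returns the next list-page URL, or null when pagination should stop:
    // either the current page yielded no article links, or the URL has no page number.
    static String nextListUrl(String currentUrl, List<String> articleLinks) {
        if (articleLinks == null || articleLinks.isEmpty()) {
            return null; // past the last page: stop instead of requesting empty pages
        }
        Matcher m = PAGE_NO.matcher(currentUrl);
        if (!m.find()) {
            return null;
        }
        int next = Integer.parseInt(m.group(1)) + 1;
        // Keep everything before the number and drop anything after it (e.g. a trailing "?")
        return currentUrl.substring(0, m.start(1)) + next;
    }

    public static void main(String[] args) {
        String page1 = "https://blog.csdn.net/qq_29914837/article/list/1";
        List<String> found = Arrays.asList("https://blog.csdn.net/qq_29914837/article/details/89309298");
        System.out.println(nextListUrl(page1, found));                   // https://blog.csdn.net/qq_29914837/article/list/2
        System.out.println(nextListUrl(page1, Collections.emptyList())); // null
    }
}
```

Returning `null` as the stop signal keeps the decision in one place. The caller adds the next request only when the result is non-null.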

```java
package demo.blog.csdn.net2;

import java.util.List;

import org.apache.log4j.Logger;

import demo.blog.csdn.net2.pipeline.CsdnBlogPipeline;
import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.Spider;
import us.codecraft.webmagic.processor.PageProcessor;
import us.codecraft.webmagic.selector.Selectable;

/**
 * Target: https://blog.csdn.net/qq_29914837/article/list/1
 * Parses the MVC-style "list + detail" page combination.
 *
 * @author yl
 */
public class CsdnBlogCrawler implements PageProcessor {

    private Logger logger = Logger.getLogger(CsdnBlogCrawler.class);

    public static final String csdn_name = "qq_29914837";

    // List page, e.g. .../article/list/2 ([0-9]+ because the lazy [0-9]*? can match zero digits)
    public static final String URL_LIST = "https://blog\\.csdn\\.net/" + csdn_name + "/article/list/[0-9]+";

    // Article (detail) page, e.g. .../article/details/89309298
    public static final String URL_POST = "https://blog\\.csdn\\.net/" + csdn_name + "/article/details/[0-9]+";

    private Site site = Site.me().setDomain("blog.csdn.net").setSleepTime(3000).setUserAgent(
            "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.86 Safari/537.36");

    @Override
    public void process(Page page) {
        try {
            if (page.getUrl().regex(URL_LIST).match()) {
                // List page: read the current page number from the URL
                int i = Integer.parseInt(page.getUrl().regex("/article/list/([0-9]+)").get());
                List<String> postUrls = page.getHtml().xpath("//div[@id=\"mainBox\"]/main/div[2]")
                        .links().regex(URL_POST).all();
                // webmagic does not discover the next list page by itself, so it is added manually,
                // but only while the current list page still contains article links
                if (!postUrls.isEmpty()) {
                    page.addTargetRequests(postUrls);
                    page.addTargetRequests(page.getHtml().links().regex(URL_LIST).all());
                    page.addTargetRequest("https://blog.csdn.net/" + csdn_name + "/article/list/" + (i + 1));
                }
            } else {
                // Article page
                Selectable title = page.getHtml().xpath("//h1[@class='title-article']/text()");
                // putField() stores a Selectable, which is never null, so check the extracted text instead
                if (title.get() == null) {
                    page.setSkip(true);
                    return;
                }
                page.putField("article", title);
                page.putField("time", page.getHtml().xpath("//span[@class='time']/text()"));
                page.putField("nick_name", page.getHtml().xpath("//a[@class='follow-nickName']/text()"));
                page.putField("read_count", page.getHtml().xpath("//span[@class='read-count']/regex('\\d+')"));
                // all() returns every match as a List; setValue() joins the list into one string
                page.putField("label", setValue(page.getHtml()
                        .xpath("//span[@class='tags-box artic-tag-box']//a[@class='tag-link']/text()").all()));
                page.putField("category", setValue(page.getHtml()
                        .xpath("//div[@class='tags-box space']//a[@class='tag-link']/text()").all()));
                page.putField("content", page.getHtml().xpath("//div[@id='content_views']/html()"));
                page.putField("url", page.getUrl());
            }
        } catch (Exception e) {
            logger.error("Failed to parse CSDN page: " + page.getUrl(), e);
        }
    }

    @Override
    public Site getSite() {
        return site;
    }

    // setValue() joins the extracted list into one "|"-separated string ("" for an empty list)
    private static String setValue(List<String> list) {
        if (list == null || list.isEmpty()) {
            return "";
        }
        return String.join("|", list);
    }

    public static void main(String[] args) {
        Spider.create(new CsdnBlogCrawler())
                .addUrl("https://blog.csdn.net/" + csdn_name + "/article/list/1")
                .addPipeline(new CsdnBlogPipeline())
                .thread(5)
                .run();
    }
}
```
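The processor classifies a page using only its URL. A URL matching `URL_LIST` is treated as a list page, and every other URL as an article page. The sketch below reproduces that routing with plain `java.util.regex` (it is not webmagic's own matching code) and adds an explicit third case for URLs that match neither pattern. It also shows why a trailing reluctant quantifier such as `[0-9]*?` is risky: with `Matcher.find()` it matches zero digits, so the matched text stops just before the page number. `UrlRouter`, `classify` and `lazyMatch` are hypothetical names.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration; not part of webmagic or the original project.
class UrlRouter {

    static final String CSDN_NAME = "qq_29914837";

    // List and article URL patterns, each requiring at least one digit
    static final Pattern LIST_PATTERN = Pattern.compile(
            "https://blog\\.csdn\\.net/" + CSDN_NAME + "/article/list/[0-9]+");
    static final Pattern POST_PATTERN = Pattern.compile(
            "https://blog\\.csdn\\.net/" + CSDN_NAME + "/article/details/[0-9]+");

    // Same shape as a list pattern ending in the reluctant quantifier [0-9]*?
    static final Pattern LAZY_LIST_PATTERN = Pattern.compile(
            "https://blog\\.csdn\\.net/" + CSDN_NAME + "/article/list/[0-9]*?");

    enum PageType { LIST, POST, OTHER }

    // find() succeeds when the pattern occurs anywhere in the URL
    static PageType classify(String url) {
        if (LIST_PATTERN.matcher(url).find()) {
            return PageType.LIST;
        }
        if (POST_PATTERN.matcher(url).find()) {
            return PageType.POST;
        }
        return PageType.OTHER;
    }

    // Returns the text the reluctant pattern actually matched, or null if it did not match
    static String lazyMatch(String url) {
        Matcher m = LAZY_LIST_PATTERN.matcher(url);
        return m.find() ? m.group() : null;
    }

    public static void main(String[] args) {
        System.out.println(classify("https://blog.csdn.net/qq_29914837/article/list/2"));           // LIST
        System.out.println(classify("https://blog.csdn.net/qq_29914837/article/details/89309298")); // POST
        System.out.println(classify("https://blog.csdn.net/other_user/article/list/2"));            // OTHER
        // Prints https://blog.csdn.net/qq_29914837/article/list/ (the page number is not matched)
        System.out.println(lazyMatch("https://blog.csdn.net/qq_29914837/article/list/12"));
    }
}
```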

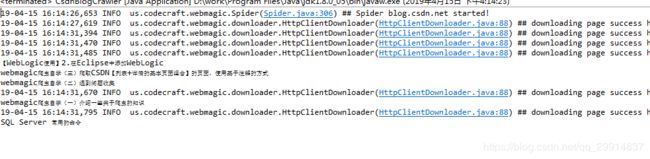

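Run the main method (via "Run As" in the IDE). If article titles appear in the console output, the crawler is working. Check the database to confirm that the crawled articles were saved.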

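If you found this article helpful, please click the Follow button to the right of my avatar. Thanks!

Technology advances through exchange; knowledge spreads through sharing.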