python, numpy, pandas 中对文件数据的处理

在数据分析中,我们需要把磁盘中的数据导入导出到系统,python,numpy,pandas中都提供了对数据的读取操作。

pandas中的解析函数:

| read_csv | Load delimited data from a file, URL, or file-like object. Use comma as default delimiter |

| read_table | Load delimited data from a file, URL, or file-like object. Use tab ('\t') as default delimiter |

| read_fwf | Read data in fixed-width column format (that is, no delimiters) |

| read_clipboard | Version ofread_table that reads data from the clipboard. Useful for converting tables from web pages |

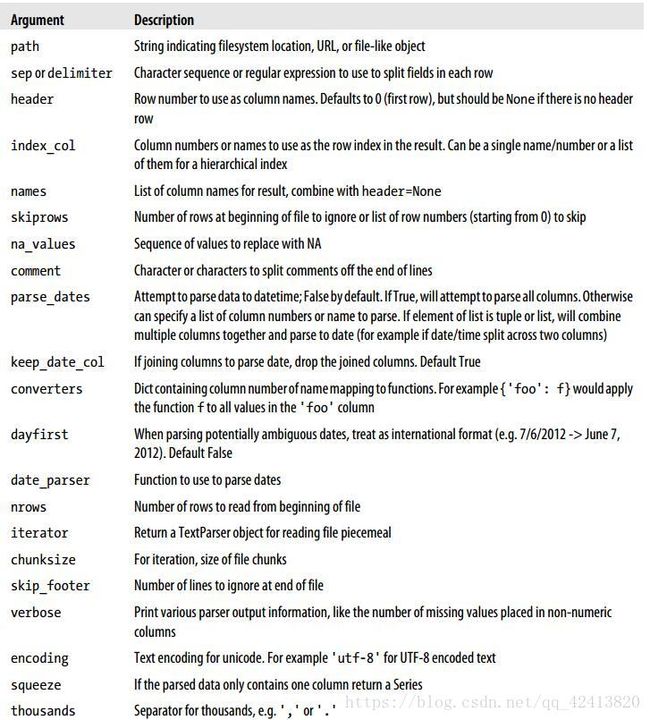

read_csv()从磁盘或者缓存中读取数据,函数参数如下:

read_csv(filepath_or_buffer, sep=',', delimiter=None, header='infer', names=None, index_col=None, usecols=None, squeeze=False, prefix=None, mangle_dupe_cols=True, dtype=None, engine=None, converters=None, true_values=None, false_values=None, skipinitialspace=False, skiprows=None, nrows=None, na_values=None, keep_default_na=True, na_filter=True, verbose=False, skip_blank_lines=True, parse_dates=False, infer_datetime_format=False, keep_date_col=False, date_parser=None, dayfirst=False, iterator=False, chunksize=None, compression='infer', thousands=None, decimal=b'.', lineterminator=None, quotechar='"', quoting=0, escapechar=None, comment=None, encoding=None, dialect=None, tupleize_cols=None, error_bad_lines=True, warn_bad_lines=True, skipfooter=0, doublequote=True, delim_whitespace=False, low_memory=True, memory_map=False, float_precision=None)

默认打开一个以 ' , ' 分割的CSV文件:

In [69]: !type D:\workspaces\datatest\ex1.csv # linux 中用 !cat a,b,c,d,message 1,2,3,4,hello 5,6,7,8,world 9,10,11,12,foo

将数据读取为Dataframe 对象:

In [70]: df = pd.read_csv('D:\workspaces\datatest\ex1.csv') # 用read_table也可以,要指定分隔符

# df = pd.read_table('D:\workspaces\datatest\ex1.csv',sep=',')

In [71]: df

Out[71]:

a b c d message

0 1 2 3 4 hello

1 5 6 7 8 world

2 9 10 11 12 foo

再实际中,并不是所有的文件都有标题行:

In [72]: !type D:\workspaces\datatest\ex2.csv 1,2,3,4,hello 5,6,7,8,world 9,10,11,12,foo

此时导入有两种方式:1)默认pandas分配的列名、 2)自己指定

In [76]: df = pd.read_csv('D:\workspaces\datatest\ex2.csv',header=None) # header=None 一定记得带 In [77]: df Out[77]: 0 1 2 3 4 0 1 2 3 4 hello 1 5 6 7 8 world 2 9 10 11 12 foo In [78]: names = ['a','b','c','d','message'] In [79]: df = pd.read_csv('D:\workspaces\datatest\ex2.csv',names=names) In [80]: df Out[80]: a b c d message 0 1 2 3 4 hello 1 5 6 7 8 world 2 9 10 11 12 foo

将 'message' 列指定为 Dataframe 的索引:

In [81]: df = pd.read_csv('D:\workspaces\datatest\ex2.csv',names=names,index_col='message')

In [82]: df

Out[82]:

a b c d

message

hello 1 2 3 4

world 5 6 7 8

foo 9 10 11 12

还可以将多个列做成一个层次化的索引:

In [83]: !type D:\workspaces\datatest\ex3.csv

key1,key2,value1,value2

one,a,1,2

one,b,3,4

one,c,5,6

one,d,7,8

two,a,9,10

two,b,11,12

two,c,13,14

two,d,15,16

In [85]: df = pd.read_csv('D:\workspaces\datatest\ex3.csv',index_col=['key1','key2'])

In [86]: df

Out[86]:

value1 value2

key1 key2

one a 1 2

b 3 4

c 5 6

d 7 8

two a 9 10

b 11 12

c 13 14

d 15 16

如果你想跳过文件中的某些行则可以用 skiprows :

In [88]: df = pd.read_csv('D:\workspaces\datatest\ex3.csv',skiprows=[1,4])

In [89]: df

Out[89]:

key1 key2 value1 value2

0 one b 3 4

1 one c 5 6

2 two a 9 10

3 two b 11 12

4 two c 13 14

5 two d 15 16

In [90]: df = pd.read_csv('D:\workspaces\datatest\ex3.csv',skiprows=[1,5])

In [91]: df

Out[91]:

key1 key2 value1 value2

0 one b 3 4

1 one c 5 6

2 one d 7 8

3 two b 11 12

4 two c 13 14

5 two d 15 16

缺失值:缺失值在实际中要么用空字符串表示,要么用某个标记值标记。

In [93]: !type D:\workspaces\datatest\ex1.csv

something,a,b,c,d,message

one,1,2,3,4,NA

two,5,6,,8,world

three,9,10,11,12,foo

In [94]: result = pd.read_csv('D:\workspaces\datatest\ex1.csv')

In [95]: result

Out[95]:

something a b c d message

0 one 1 2 3.0 4 NaN

1 two 5 6 NaN 8 world

2 three 9 10 11.0 12 foo

na_values 可以接受一组用于表示缺失值的字符串:

In [96]: result = pd.read_csv('D:\workspaces\datatest\ex1.csv',na_values= ['NULL'])

In [97]: result

Out[97]:

something a b c d message

0 one 1 2 3.0 4 NaN

1 two 5 6 NaN 8 world

2 three 9 10 11.0 12 foo用一个字典为各列指定不同的NA标记值:

In [98]: sentinels = {'message': ['foo', 'NA'], 'something': ['two']}

In [99]: result = pd.read_csv('D:\workspaces\datatest\ex1.csv',na_values=sentinels)

In [100]: result

Out[100]:

something a b c d message

0 one 1 2 3.0 4 NaN

1 NaN 5 6 NaN 8 world

2 three 9 10 11.0 12 NaN

read_csv、read_table的参数:

将文件中的数据逐块读取:如果要读取的文件太大,一次读取到内存会导致资源的严重占用,这时你希望读取一部分或者逐块读取:

nrows : 设置读取的行数;

In [122]: result = pd.read_csv('D:\workspaces\datatest\ex6.csv',nrows=5)

In [123]: result

Out[123]:

one two three four key

0 0.467976 -0.038649 -0.295344 -1.824726 L

1 -0.358893 1.404453 0.704965 -0.200638 B

2 -0.501840 0.659254 -0.421691 -0.057688 G

3 0.204886 1.074134 1.388361 -0.982404 R

4 0.354628 -0.133116 0.283763 -0.837063 Q

chunksize : 设置每块的行数

In [128]: chunk = pd.read_csv('D:\workspaces\datatest\ex6.csv',chunksize=

...: 1000)

In [129]: count = pd.Series([])

In [130]: for pie in chunk:

...: count = count.add(pie['key'].value_counts(),fill_value=0)

In [131]: count # sort_index() sort_values()

Out[131]:

0 151.0

1 146.0

2 152.0

3 162.0

4 171.0

5 157.0

6 166.0

7 164.0

8 162.0

9 150.0

A 320.0

B 302.0

C 286.0

D 320.0

E 368.0

dtype: float64

将数据写入到文件:to_csv() 默认使用 ',' 分割,也可以自己指定。

to_csv(path_or_buf=None, sep=',', na_rep='', float_format=None, columns=None, header=True, index=True, index_label=None, mode='w', encoding=None, co mpression=None, quoting=None, quotechar='"', line_terminator='\n', chunksize=None, tupleize_cols=None, date_format=None, doublequote=True, escapechar=None, decimal='.')

In [159]: data = pd.read_csv('D:\workspaces\datatest\ex1.csv')

In [160]: data

Out[160]:

something a b c d message

0 one 1 2 3.0 4 NaN

1 two 5 6 NaN 8 world

2 three 9 10 11.0 12 foo

In [161]: data.to_csv('D:\workspaces\datatest\out.csv')

In [162]: !type D:\workspaces\datatest\out.csv

,something,a,b,c,d,message

0,one,1,2,3.0,4,

1,two,5,6,,8,world

2,three,9,10,11.0,12,foo

In [163]: data.to_csv('D:\workspaces\datatest\out.csv',sep='_')

In [164]: !type D:\workspaces\datatest\out.csv

_something_a_b_c_d_message

0_one_1_2_3.0_4_

1_two_5_6__8_world

2_three_9_10_11.0_12_foo

在输出的时候,缺失值会被表示为空字符串,若想用其他值表示可用 na_rep:

In [169]: data = pd.read_csv('D:\workspaces\datatest\ex1.csv')

In [170]: data

Out[170]:

something a b c d message

0 one 1 2 3.0 4 NaN

1 two 5 6 NaN 8 world

2 three 9 10 11.0 12 foo

In [171]: data.to_csv('D:\workspaces\datatest\out.csv',na_rep='QS')

In [172]: !type D:\workspaces\datatest\out.csv

,something,a,b,c,d,message

0,one,1,2,3.0,4,QS

1,two,5,6,QS,8,world

2,three,9,10,11.0,12,foo

默认情况下会把行和列的标签都写入文件,可以指定不写入,也可以指定写入某些列:

In [183]: data.to_csv('D:\workspaces\datatest\out.csv',index=False,columns=['a','b','c'])

In [184]: !type D:\workspaces\datatest\out.csv

a,b,c

1,2,3.0

5,6,

9,10,11.0

Series也有 to_csv, pandas.read_csv (Series.from_csv 过时的方法) :

In [209]: dates = pd.date_range('6/18/2018', periods=7)

In [210]: dates

Out[210]:

DatetimeIndex(['2018-06-18', '2018-06-19', '2018-06-20', '2018-06-21',

'2018-06-22', '2018-06-23', '2018-06-24'],

dtype='datetime64[ns]', freq='D')

In [211]: ts = pd.Series(np.arange(7),index=dates)

In [213]: ts.to_csv('D:\workspaces\datatest\out_ts.csv')

In [214]: ts.from_csv('D:\workspaces\datatest\out_ts.csv')

D:\projects\env\Lib\site-packages\pandas\core\series.py:3726: FutureWarnin

g: from_csv is deprecated. Please use read_csv(...) instead. Note that som

e of the default arguments are different, so please refer to the documenta

tion for from_csv when changing your function calls

infer_datetime_format=infer_datetime_format)

Out[214]:

2018-06-18 0

2018-06-19 1

2018-06-20 2

2018-06-21 3

2018-06-22 4

2018-06-23 5

2018-06-24 6

dtype: int64

In [215]: pd.read_csv('D:\workspaces\datatest\out_ts.csv')

Out[215]:

2018-06-18 0

0 2018-06-19 1

1 2018-06-20 2

2 2018-06-21 3

3 2018-06-22 4

4 2018-06-23 5

5 2018-06-24 6

手工处理分隔符:

pandas提供的方法并不是通用的,有的时候还是需要我们收工处理,对于任何单字符分割的文件,可以直接使用python内置的CSV模块:

reader(...)

csv_reader = reader(iterable [, dialect='excel']

[optional keyword args])

for row in csv_reader:

process(row)

The "iterable" argument can be any object that returns a line

of input for each iteration, such as a file object or a list. The

optional "dialect" parameter is discussed below. The function

also accepts optional keyword arguments which override settings

provided by the dialect.

In [217]: !type D:\workspaces\datatest\ex1.csv

"w","f","c"

"4","5","6"

"4","3","2","1"

In [218]: import csv

In [219]: file = open('D:\workspaces\datatest\ex1.csv')

In [220]: reader = csv.reader(file)

In [221]: for line in reader:

...: print(line)

...:

['w', 'f', 'c']

['4', '5', '6']

['4', '3', '2', '1']

In [238]: file = open('D:\workspaces\datatest\ex1.csv')

In [239]: reader = csv.reader(file)

In [240]: lines = list(reader)

In [241]: header,values = lines[0],lines[1:]

In [242]: data = {header:val for header,val in zip(header,zip(*values))}

In [243]: data

Out[243]: {'c': ('6', '2'), 'f': ('5', '3'), 'w': ('4', '4')}

csv文件的形式有很多,只需要定义csv.Dialect的子类即可定义新格式:

class dialect(csv.Dialect): lineterminator = '\n' delimiter = ';' quotechar = '"' reader = csv.reader(f, dialect=dialect)

csv.reader参数的选项:

| delimiter | One-character string to separate fields. Defaults to ','. |

| lineterminator | Line terminator for writing, defaults to '\r\n'. Reader ignores this and recognizes cross-platform line terminators. |

| quotechar | Quote character for fields with special characters (like a delimiter). Default is '"'. |

| quoting | Quoting convention. Options include csv.QUOTE_ALL (quote all fields), csv.QUOTE_MINIMAL (only fields with special characters like the delimiter), csv.QUOTE_NONNUMERIC, and csv.QUOTE_NON (no quoting). See Python’s documentation for full details. Defaults to QUOTE_MINIMAL. |

| skipinitialspace | Ignore whitespace after each delimiter. Default False. |

| doublequote | How to handle quoting character inside a field. If True, it is doubled. See online documentation for full detail and behavior. |

| escapechar | String to escape the delimiter if quoting is set to csv.QUOTE_NONE. Disabled by default |

手工输出分隔符文件:

with open('data.csv', 'w') as f:

writer = csv.writer(f, dialect=dialect)

writer.writerow(('w', 'f', 'c'))

writer.writerow(('1', '2', '3'))

writer.writerow(('4', '5', '6'))

writer.writerow(('7', '8', '9'))

处理JSON 数据

JSON(JavaScript Object Natation)一种比较灵活的数据格式,现在网络传输数据多用JSON。

python 标准库中,就有对 json 的处理模块 json。

一个简单的JSON格式的数据:

obj = """ { "name": "Wes", "places_lived": ["United States", "Spain", "Germany"], "pet": null, "siblings": [{"name": "Scott", "age": 25, "pet": "Zuko"}, {"name": "Katie", "age": 33, "pet": "Cisco"}] } """

将JSON格式的数据转换成python对象形式 json.loads:

In [2]: import json

In [6]: result = json.loads(obj)

In [7]: result

Out[7]:

{'name': 'Wes',

'pet': None,

'places_lived': ['United States', 'Spain', 'Germany'],

'siblings': [{'age': 25, 'name': 'Scott', 'pet': 'Zuko'},

{'age': 33, 'name': 'Katie', 'pet': 'Cisco'}]

}

json.dumps 则相反,把python对象转换成JSON格式:

In [8]: toJson = json.dumps(result)

In [9]: toJson

Out[9]: '{

"pet": null,

"name": "Wes",

"siblings": [{"age": 25, "name": "Scott", "pet": "Zuko"}, {"age": 33, "name": "Katie", "pet": "Cisco"}],

"places_lived": ["United States", "Spain", "Germany"]

}'

JSON数据格式转换为python对象后,既可以转换为 Dataframe 了:

In [14]: df = pd.DataFrame(result['siblings'],columns=['name','age','pet']) In [15]: df Out[15]: name age pet 0 Scott 25 Zuko 1 Katie 33 Cisco

处理的数据还可以是 html:

这里我自己写了一个简单爬虫,从 https://finance.yahoo.com/quote/AAPL/options?ltr=1 收集了部分数据,感兴趣的话,也可以自己改改代码,自己玩玩。

from urllib import request

from bs4 import BeautifulSoup

import pandas as pd

class ParseHtml(object):

def __init__(self):

self.title = []

self.contexts = []

def _download(self, url):

if url is None:

return None

result = request.urlopen(url)

if result.getcode() != 200: # 判断返回的状态码 200 表示正常返回

return None

return result.read()

def _parser(self, context):

soup = BeautifulSoup(context, 'html.parser', from_encoding='utf-8') # 用 BeautifulSoup 根据下载的内容解析内容

table = soup.find('table', class_='puts table-bordered W(100%) Pos(r) list-options')

heads = table.find('tr').find_all('th') # 获取表头(所有的 th)

t_body_trs = table.find('tbody').find_all('tr') # 获取表格的所有行(除去表头tr外,所有的tr)

for head in heads:

self.title.append(head.get_text()) # 获取所有的表头值

for bt in t_body_trs:

tds = bt.find_all('td') # 获取每行的所有单元格

temp = []

for td in tds:

temp.append(td.get_text()) # 获取每行单元格的内容

self.contexts.append(temp)

return self.title, self.contexts

def gen_data_frame(self):

html = self._download('https://finance.yahoo.com/quote/AAPL/options?ltr=1')

t, c = self._parser(html)

data_frame = pd.DataFrame(c, columns=t) # 根据提取的内容生成 pd.Dataframe

return data_frame

if __name__ == '__main__':

ph = ParseHtml()

df = ph.gen_data_frame()

print(df)

pandas 中提供了一个 to_pickle方法用于保存二进制数据和read_pickle方法把数据读回python,

numpy中提供了一个 save方法用于保存二进制数据和load方法把数据读回python,

python中对二进制进行存储是使用内置的 pickle序列化。

In [13]: frame = pd.read_csv('D:\workspaces\datatest\ex2.csv',header=None)

In [14]: frame

Out[14]:

0 1 2 3 4

0 1 2 3 4 hello

1 5 6 7 8 world

2 9 10 11 12 foo

In [15]: frame.to_pickle('D:\workspaces\datatest\_frame_pickle')

In [16]: pd.read_pickle('D:\workspaces\datatest\_frame_pickle')

Out[16]:

0 1 2 3 4

0 1 2 3 4 hello

1 5 6 7 8 world

2 9 10 11 12 foo

使用 HDF5 格式:

HDF5的是层次性数据格式(hierarchical Data Format),能高效的读取磁盘上的二进制文件,是一个流行的工业级 C 语言库。每个HDF5文件都含有一个文件系统式的节点结构,能够存储多个数据集,并支持元数据。HDF5支持多种压缩器的及时压缩,高效的存储重复模式数据。

Python中的HDF5库有两个接口 PyTables 和 h5py。h5py提供了一种直接而高级的HDF5 API访问接口。而PyTables 则抽象HDF5法人许多细节已提供多种灵活的数据容器,表索引,查询功能以及对核外计算技术的某些支持。

pandas 有一个最小化的类似于字典的 HDFstore类,他通过PyTables 存储pandas对象:

若出现:ImportError: HDFStore requires PyTables, "No module named 'tables'" problem importing 安装 tables。pip install tables

In [4]: store = pd.HDFStore('mydata.h5')

In [5]: frame = pd.read_csv('D:\workspaces\datatest\ex2.csv',header=None)

In [6]: store['obj1'] = frame

In [7]: store

Out[7]:

File path: mydata.h5

In [8]: store['obj1']

Out[8]:

0 1 2 3 4

0 1 2 3 4 hello

1 5 6 7 8 world

2 9 10 11 12 foo

还可以处理 Microsoft Excel 文件 和 数据库中的数据,这里就不介绍了,有机会后面介绍有关 python数据库相关的知识。