Spring Boot与Mongo组合分页查询处理亿级级别数据

文章目录

- Spring Boot与Mongo组合分页查询处理亿级级别数据

- 0. 项目实施场景

- 1. 为什么不用`skip`和`limit`组合方式:

- 2. 主键`_id`查询方式:

- 3. 代码实现:

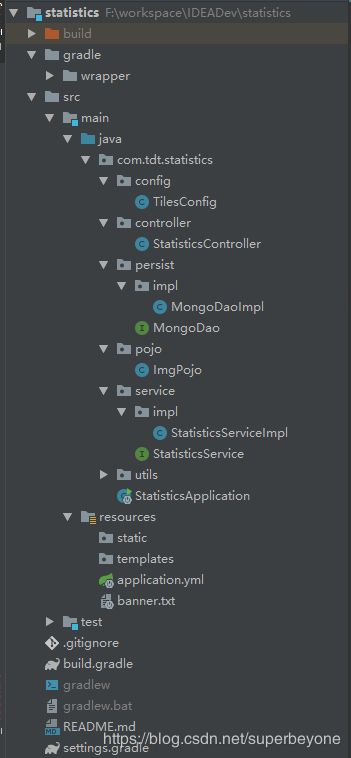

- 3.0 目录结构

- 3.1 application.yml

- 3.2 build.gradle

- 3.3 启动入口类

- 3.4 Config

- 3.5 POJO

- 3.6 Dao

- 3.7 Service

- 3.8 Controller

- 4. 备注

- 4.1 Mongo数据库连接池更多配置

- 4.2 国内镜像源配置

- 4.3 特点

- 4.4 缺点

Spring Boot与Mongo组合分页查询处理亿级级别数据

0. 项目实施场景

最近公司领导提出了一个需求,要求查询获取Mongo库内指定字段的数据,并写出到文件内,数据库内最少的数据量有 3亿 多条,如此庞大的数据量,单单是用一个jar来跑的话,的确很吃力,不过也没有办法,既然任务安排下来了,只能努力完成了。

1. 为什么不用skip和limit组合方式:

如果Mongo数据量过大的话,skip和limit组合查询方式,越往后查询,查询效率越低。

其实一开始是按照这种方式来写的,不过因为数据量太大了,后面的查询就渐渐吃不消了,无奈只能改版。

2. 主键_id查询方式:

思路:Mongo自维护的主键_id的 生成策略 12字节的ObjectId 值包括:

- 一个4字节的值,表示自Unix纪元以来的秒数

- 一个5字节的随机值

- 一个3字节的计数器,以随机值开始

ObjectId值共12字节,24位,前8位为入库的时间秒值,这是解决问题的关键。

本项目主要查询Mongo里面的filename字段,根据后缀名分别筛选出png和jpg格式的文件名并保存到文件中。

3. 代码实现:

3.0 目录结构

3.1 application.yml

spring:

profiles:

active: devMongo

---

server:

port: 9999

spring:

profiles: devMongo

devtools:

restart:

enabled: false

data:

mongodb:

#uri: mongodb://username:password@ip:port/admin

uri: mongodb://ip:port/dbName #Mongo连接地址

option:

min-connection-per-host: 50

max-connection-per-host: 150

threads-allowed-to-block-for-connection-multiplier: 5

tdt:

tiles:

collectionName: collecionName #collection name

execThreadCount: 50 #并行开启线程数

savePath: F:/data/ # 文件保存路径

everyThreadExecSeconds: 120 # 每个线程一次执行多少秒内的数据,即主键查询的时间跨度区间

readTimeOutTime: 10 # 为防止循环太快,每执行execThreadCount次,主线程会sleep该值,单位毫秒

parentDirectoryCount: 1000 # 文件保存散列策略即父文件夹数

minPKVal: 584f63554b2bdb19e0ec9d2c

maxPkVal: 5b3090a688eb6f3f0879acfa

#logging

logging:

level:

root: debug

3.2 build.gradle

buildscript {

ext {

springBootVersion = '2.1.1.RELEASE'

}

repositories {

maven { url 'http://maven.aliyun.com/nexus/content/groups/public/' }

maven { url 'http://maven.aliyun.com/nexus/content/repositories/jcenter' }

mavenCentral()

}

dependencies {

classpath("org.springframework.boot:spring-boot-gradle-plugin:${springBootVersion}")

}

}

apply plugin: 'java'

apply plugin: 'eclipse'

apply plugin: 'org.springframework.boot'

apply plugin: 'io.spring.dependency-management'

group = 'com.tdt'

version = '0.0.2'

sourceCompatibility = '1.8'

repositories {

maven { url 'http://maven.aliyun.com/nexus/content/groups/public/' }

maven { url 'http://maven.aliyun.com/nexus/content/repositories/jcenter' }

mavenCentral()

}

dependencies {

implementation('org.springframework.boot:spring-boot-starter-data-mongodb')

implementation('org.springframework.boot:spring-boot-starter-web')

testImplementation('org.springframework.boot:spring-boot-starter-test')

annotationProcessor "org.springframework.boot:spring-boot-configuration-processor"

compile group: 'org.slf4j', name: 'slf4j-api', version: '1.7.25'

compile group: 'com.spring4all', name: 'mongodb-plus-spring-boot-starter', version: '1.0.0.RELEASE'

}

configurations {

all*.exclude group: 'spring-boot-starter-logging'

}

jar {

manifest { //incubating版本,以后版本可能会改API

attributes("Main-Class": "com.tdt.statistics.StatisticsApplication",//启动程序入口全路径

"Implementation-Title": "Gradle")

}

into('lib') {

from configurations.runtime

}

}

3.3 启动入口类

import com.spring4all.mongodb.EnableMongoPlus;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

/**

* @author superbeyone

*/

@SpringBootApplication

@EnableMongoPlus

public class StatisticsApplication {

public static void main(String[] args) {

SpringApplication.run(StatisticsApplication.class, args);

}

}

3.4 Config

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.stereotype.Component;

/**

* @author Mr.superbeyone

* @project statistics

* @className TilesConfig

* @description 自定义配置类

* @create 2019-01-08 15:55

**/

@Component

@ConfigurationProperties(prefix = "tdt.tiles")

public class TilesConfig {

private int execThreadCount = 1;

private String collectionName;

private String savePath;

private int everyThreadExecSeconds = 10000;

private int readTimeOutTime = 1000;

private int parentDirectoryCount = 1000;

private String minPKVal;

private String maxPKVal;

//Get Set

}

3.5 POJO

/**

* @author Mr.superbeyone

* @project statistics

* @className ImgPojo

* @description

* @create 2019-01-08 10:55

**/

@Document(collection = "tiles")

public class ImgPojo implements Serializable {

private String filename;

//Get Set

}

3.6 Dao

/**

* @author Mr.superbeyone

* @project statistics

* @className StatisticsDao

* @description Dao

* @create 2019-01-08 10:50

**/

public interface MongoDao {

FindIterable<Document> getTilesResultByPrimaryKeyRegionDoc(ObjectId startId, ObjectId endId);

}

/**

* @author Mr.superbeyone

* @project statistics

* @className MongoDaoImpl

* @description

* @create 2019-01-08 10:51

**/

@Repository

public class MongoDaoImpl implements MongoDao {

@Autowired

MongoTemplate mongoTemplate;

@Autowired

TilesConfig tilesConfig;

private Logger logger = LoggerFactory.getLogger(getClass());

@Override

public FindIterable<Document> getTilesResultByPrimaryKeyRegionDoc(ObjectId startId, ObjectId endId) {

MongoCollection<Document> collection = mongoTemplate.getCollection(tilesConfig.getCollectionName());

Document document = new Document();

document.append("_id", new Document("$gte", startId).append("$lt", endId));

return collection.find(document).projection(new BasicDBObject().append("_id", 0).append("filename", 1));

}

}

3.7 Service

/**

* @author Mr.superbeyone

* @project statistics

* @className StatisticsService

* @description Service

* @create 2019-01-08 10:49

**/

public interface StatisticsService {

FindIterable<Document> getTilesResultByPrimaryKeyRegionDoc(ObjectId start, ObjectId end);

}

/**

* @author Mr.superbeyone

* @project statistics

* @className StatisticsServiceImpl

* @description

* @create 2019-01-08 10:50

**/

@Service

public class StatisticsServiceImpl implements StatisticsService {

@Autowired

MongoDao mongoDao;

private Logger logger = LoggerFactory.getLogger(getClass());

@Override

public FindIterable<Document> getTilesResultByPrimaryKeyRegionDoc(ObjectId start, ObjectId end) {

return mongoDao.getTilesResultByPrimaryKeyRegionDoc(start, end);

}

}

3.8 Controller

/**

* @author Mr.superbeyone

* @project statistics

* @className StatisticsController

* @description Controller

* @create 2019-01-08 10:49

**/

@RestController

public class StatisticsController {

@Autowired

StatisticsService statisticsService;

@Autowired

TilesConfig tilesConfig;

private Logger logger = LoggerFactory.getLogger(getClass());

@RequestMapping("/exec")

public String exec() {

String minPKVal = tilesConfig.getMinPKVal();

String maxPKVal = tilesConfig.getMaxPKVal();

ObjectId startId = new ObjectId(minPKVal);

ObjectId endId = new ObjectId(maxPKVal);

int startIdTimestamp = startId.getTimestamp();

int endIdTimestamp = endId.getTimestamp();

int everyThreadExecCount = tilesConfig.getEveryThreadExecSeconds();

ThreadPoolExecutor executor = new ThreadPoolExecutor(tilesConfig.getExecThreadCount(), 3 * tilesConfig.getExecThreadCount(), Integer.MAX_VALUE, TimeUnit.SECONDS, new LinkedTransferQueue<>());

ThreadPoolExecutor saveExecutor = new ThreadPoolExecutor(tilesConfig.getExecThreadCount(), 3 * tilesConfig.getExecThreadCount(), Integer.MAX_VALUE, TimeUnit.SECONDS, new LinkedTransferQueue<>());

ThreadPoolExecutor pngExecutor = new ThreadPoolExecutor(tilesConfig.getExecThreadCount(), 3 * tilesConfig.getExecThreadCount(), Integer.MAX_VALUE, TimeUnit.SECONDS, new LinkedTransferQueue<>());

ThreadPoolExecutor jpgExecutor = new ThreadPoolExecutor(tilesConfig.getExecThreadCount(), 3 * tilesConfig.getExecThreadCount(), Integer.MAX_VALUE, TimeUnit.SECONDS, new LinkedTransferQueue<>());

File savePath = new File(tilesConfig.getSavePath());

long taskCount = (endIdTimestamp - startIdTimestamp) / everyThreadExecCount + 1;

for (long i = 0; i < taskCount; i++) {

if (i % tilesConfig.getExecThreadCount() == 0) {

try {

Thread.sleep(tilesConfig.getReadTimeOutTime());

} catch (InterruptedException e) {

e.printStackTrace();

}

}

final long finalI = i;

executor.submit(() -> {

logger.debug("开始执行第[ {} ]个任务,共[ {} ]个任务", (finalI + 1), taskCount);

int ai = Integer.valueOf(String.valueOf(startIdTimestamp + finalI * everyThreadExecCount));

int bi = Integer.valueOf(String.valueOf(startIdTimestamp + (finalI + 1) * everyThreadExecCount));

ObjectId start = new ObjectId(Integer.toHexString(ai) + "0000000000000000");

ObjectId end = new ObjectId(Integer.toHexString(bi) + "0000000000000000");

FindIterable<Document> doc = statisticsService.getTilesResultByPrimaryKeyRegionDoc(start, end);

saveExecutor.submit(() -> {

MongoCursor<Document> iterator = doc.iterator();

StringBuilder pngBuilder = new StringBuilder();

StringBuilder jpgBuilder = new StringBuilder();

HashMap<String, String> map = new HashMap<>();

while (iterator.hasNext()) {

String filename = (String) iterator.next().get("filename");

if (filename.endsWith("png")) {

pngBuilder.append(filename).append(System.lineSeparator());

} else if (filename.endsWith("jpg")) {

jpgBuilder.append(filename).append(System.lineSeparator());

}

}

boolean flag = true;

if (pngBuilder.toString().length() > 0) {

pngExecutor.submit(() -> {

saveFile0(savePath, finalI, pngBuilder.toString(), "png");

});

flag = false;

}

if (jpgBuilder.toString().length() > 0) {

jpgExecutor.submit(() -> {

saveFile0(savePath, finalI, jpgBuilder.toString(), "jpg");

});

if (flag) {

flag = false;

}

}

if (flag) {

logger.debug("结束执行第[ {} ]个任务,该任务数据为空,共[ {} ]个任务", (finalI + 1), taskCount);

}

});

});

}

return "exec";

}

private void saveFile0(File savePath, long finalI, String png, String type) {

File saveFile = new File(savePath, type + File.separator + (finalI % tilesConfig.getParentDirectoryCount()) + File.separator + type + "_" + finalI + ".txt");

if (!saveFile.getParentFile().exists()) {

saveFile.getParentFile().mkdirs();

}

try (BufferedOutputStream bufferedOutputStream = new BufferedOutputStream(new FileOutputStream(saveFile))) {

bufferedOutputStream.write(png.getBytes());

} catch (Exception e) {

e.printStackTrace();

}

logger.debug("结束执行第[ {} ]个任务的[ {} ]类型文件保存操作,文件名:[ {} ]", (finalI + 1), type, saveFile.getName());

}

}

4. 备注

4.1 Mongo数据库连接池更多配置

Mongo数据库连接池

4.2 国内镜像源配置

国内镜像源配置

4.3 特点

根据配置选项的minPKVal和maxPkVal,可以多个jar同时查询,只需要配置不同的端口就行,不过,这样处理的话,可能会有一部分数据重复,重复的数据数量与大于maxPkVal(时间戳)在everyThreadExecSeconds时间段内的数据插入量有关。

根据此特点,也可以实现类似于端点续传的功能,不过略显不足的上述中数据重复问题是极有可能出现的。

其实上述问题也很好解决:

只需要判断一下当前传值给查询语句的最大值是不是大于想要终止的数据库最大值就行了,如果是的话,就用配置项的maxPkVal所获取的秒值加1即可。

4.4 缺点

- 如果数据库导入的时间跨度非常大,会有很多空轮询

- 如果数据库导入时间跨度小,而数据量巨大,只能把

everyThreadExecSeconds参数调小,来避免数据循环过多 - I/O阻塞 与 数据结果组合也会对效率有所影响