Scrapy in Practice: Several Ways to Spoof Request Headers

- Creating the spider
  - ip138.py
- Default headers
- Default User-Agent
  - settings.py
- Default request headers
  - settings.py
- custom_settings
- headers=headers
- Setting headers in a downloader middleware
  - middlewares.py
  - settings.py
- Simple dynamic User-Agent
  - settings.py
  - middlewares.py
- Switching User-Agent with fake-useragent
  - Installation
  - middlewares.py
  - settings.py

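Creating the spider

To check whether the spoofed headers actually take effect, we first create a spider. Any target will do; here I simply reuse the spider needed for the next topic.

    # Enter the virtual environment
    cd /data/code/python/venv/venv_Scrapy/tutorial

    # Create the spider
    ../bin/python3 ../bin/scrapy genspider -t basic ip138 www.ip138.com

ip138.py

Edit ip138.py. Its job is simple: print all headers, meta and cookies. For now we only care about the request headers.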

    # -*- coding: utf-8 -*-
    import scrapy


    class Ip138Spider(scrapy.Spider):
        name = 'ip138'
        allowed_domains = ['www.ip138.com', '2018.ip138.com']
        start_urls = ['http://2018.ip138.com/ic.asp']

        def parse(self, response):
            print("*" * 40)
            print("response text: %s" % response.text)
            print("response headers: %s" % response.headers)
            print("response meta: %s" % response.meta)
            print("request headers: %s" % response.request.headers)
            print("request cookies: %s" % response.request.cookies)
            print("request meta: %s" % response.request.meta)

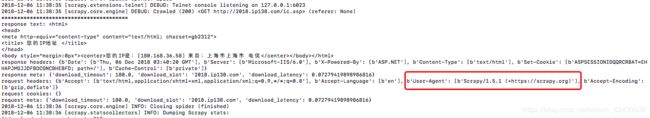

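Default headers

Run the spider:

    ../bin/python3 ../bin/scrapy crawl ip138

Default request headers like these are easy to block: unless configured otherwise, the User-Agent openly announces Scrapy. We need to disguise the headers.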

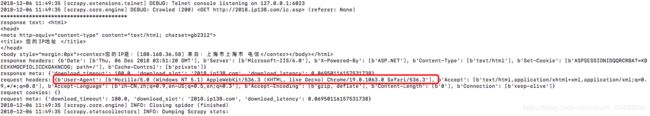
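To see why the defaults stand out: unless USER_AGENT is overridden, Scrapy identifies itself with a User-Agent of the form `Scrapy/<version> (+https://scrapy.org)`. The sketch below is a plain-Python illustration, not part of the project, of how easily a server-side filter could flag such a request. `looks_like_default_scrapy` is a hypothetical helper name, and the version number in the sample header is only an example.

```python
def looks_like_default_scrapy(headers):
    """Return True if the User-Agent header starts with 'Scrapy/'.

    `headers` is a plain dict of header name -> value. The lookup is
    case-insensitive because HTTP header names are case-insensitive.
    """
    for name, value in headers.items():
        if name.lower() == "user-agent":
            return value.startswith("Scrapy/")
    # A missing User-Agent is not treated as Scrapy in this sketch.
    return False


# Example version number only; the real value depends on the installed Scrapy.
default_headers = {"User-Agent": "Scrapy/1.5.0 (+https://scrapy.org)"}
browser_headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
                  "AppleWebKit/537.36 (KHTML, like Gecko) "
                  "Chrome/62.0.3202.89 Safari/537.36"
}

print(looks_like_default_scrapy(default_headers))  # True
print(looks_like_default_scrapy(browser_headers))  # False
```

Real anti-crawler checks are usually more involved, but a one-line prefix test like this is already enough to reject an unconfigured spider.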

Default User-Agent

Let's first try changing the default User-Agent.

settings.py

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = 'tutorial (+http://www.yourdomain.com)'
    USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1'

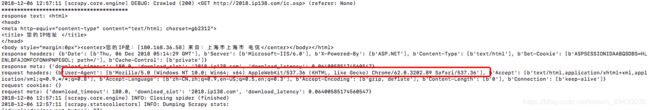

Default request headers

settings.py

    # Override the default request headers:
    DEFAULT_REQUEST_HEADERS = {
        # 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        # 'Accept-Language': 'en',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.89 Safari/537.36',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        "Accept-Language": "zh-CN,zh;q=0.9,en-US;q=0.5,en;q=0.3",
        "Accept-Encoding": "gzip, deflate",
        'Content-Length': '0',
        "Connection": "keep-alive"
    }

custom_settings

Besides settings.py, we can also set headers for a single spider through its custom_settings, for example:

    # Class attribute defined inside the spider class (e.g. Ip138Spider)
    custom_settings = {
        'DEFAULT_REQUEST_HEADERS': {
            'User-Agent': 'Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
            "Accept-Language": "zh-CN,zh;q=0.9,en-US;q=0.5,en;q=0.3",
            "Accept-Encoding": "gzip, deflate",
            'Content-Length': '0',
            "Connection": "keep-alive"
        }
    }

headers=headers

    # -*- coding: utf-8 -*-
    import scrapy


    class Ip138Spider(scrapy.Spider):
        name = 'ip138'
        allowed_domains = ['www.ip138.com', '2018.ip138.com']
        start_urls = ['http://2018.ip138.com/ic.asp']

        headers = {
            'User-Agent': 'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; 360SE)',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
            "Accept-Language": "zh-CN,zh;q=0.9,en-US;q=0.5,en;q=0.3",
            "Accept-Encoding": "gzip, deflate",
            'Content-Length': '0',
            "Connection": "keep-alive"
        }

        def start_requests(self):
            for url in self.start_urls:
                yield scrapy.Request(url, headers=self.headers, callback=self.parse)

        def parse(self, response):
            print("*" * 40)
            print("response text: %s" % response.text)
            print("response headers: %s" % response.headers)
            print("response meta: %s" % response.meta)
            print("request headers: %s" % response.request.headers)
            print("request cookies: %s" % response.request.cookies)
            print("request meta: %s" % response.request.meta)
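The approaches above can overlap, so it helps to know which one wins. Scrapy's built-in DefaultHeadersMiddleware applies DEFAULT_REQUEST_HEADERS with setdefault, so it only fills in headers the request does not already carry, and a spider's custom_settings takes priority over the project-wide settings.py. The plain-Python model below sketches that precedence without importing Scrapy. `effective_headers` is a hypothetical helper, the header values are placeholders, and the model assumes (as I understand the settings machinery) that a DEFAULT_REQUEST_HEADERS dict in custom_settings replaces the one from settings.py as a whole instead of being merged key by key.

```python
def effective_headers(request_headers, project_defaults, spider_defaults=None):
    """Model which header values end up on the outgoing request.

    request_headers  -- headers passed as scrapy.Request(..., headers=...)
    project_defaults -- DEFAULT_REQUEST_HEADERS from settings.py
    spider_defaults  -- DEFAULT_REQUEST_HEADERS from custom_settings, if any
    """
    # Spider-level settings take priority over project-level settings.
    defaults = spider_defaults if spider_defaults is not None else project_defaults
    merged = dict(request_headers)
    for name, value in defaults.items():
        # Defaults only fill in headers the request does not already have.
        merged.setdefault(name, value)
    return merged


project = {"User-Agent": "UA-from-settings", "Accept-Language": "zh-CN"}
spider = {"User-Agent": "UA-from-custom-settings"}

print(effective_headers({}, project))
# {'User-Agent': 'UA-from-settings', 'Accept-Language': 'zh-CN'}
print(effective_headers({}, project, spider))
# {'User-Agent': 'UA-from-custom-settings'}
print(effective_headers({"User-Agent": "UA-from-request"}, project, spider))
# {'User-Agent': 'UA-from-request'}
```

In short: headers passed to an individual request beat custom_settings, which in turn beats settings.py. Note that plain dict keys are case-sensitive, whereas Scrapy's own Headers class is not.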

Setting headers in a downloader middleware

When we created the project with startproject, Scrapy already generated a middlewares.py file for us.

We can set headers in the downloader middleware.

middlewares.py

    class TutorialDownloaderMiddleware(object):
        def process_request(self, request, spider):
            # setdefault() only sets the header if the request has none yet
            request.headers.setdefault('User-Agent', 'Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5')

Of course, we can also set a complete set of headers:

    from scrapy.http.headers import Headers

    headers = {
        'User-Agent': 'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; 360SE)',
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        "Accept-Language": "zh-CN,zh;q=0.9,en-US;q=0.5,en;q=0.3",
        "Accept-Encoding": "gzip, deflate",
        'Content-Length': '0',
        "Connection": "keep-alive"
    }


    class TutorialDownloaderMiddleware(object):
        def process_request(self, request, spider):
            # Replaces every header already on the request, including any
            # passed via scrapy.Request(..., headers=...)
            request.headers = Headers(headers)

settings.py

Enable the middleware:

    # Enable or disable downloader middlewares
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    DOWNLOADER_MIDDLEWARES = {
        'tutorial.middlewares.TutorialDownloaderMiddleware': 1,
    }
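The priority number matters here. Downloader middlewares run process_request in ascending priority order, and Scrapy's built-in DefaultHeadersMiddleware (400) and UserAgentMiddleware (500) both use setdefault. A custom middleware that also uses setdefault therefore only wins if it runs before them, which is why priority 1 works. The plain-Python model below sketches the effect without importing Scrapy; `run_chain` and the header values are illustrative only.

```python
def run_chain(middlewares):
    """Apply (priority, header_name, value) steps in ascending priority.

    Each step mimics request.headers.setdefault(): it only sets the
    header if no earlier step has set it.
    """
    headers = {}
    for _priority, name, value in sorted(middlewares):
        headers.setdefault(name, value)
    return headers


BUILTIN = [
    (400, "User-Agent", "UA from DEFAULT_REQUEST_HEADERS"),  # DefaultHeadersMiddleware
    (500, "User-Agent", "UA from USER_AGENT"),               # UserAgentMiddleware
]

early = run_chain(BUILTIN + [(1, "User-Agent", "UA from custom middleware")])
late = run_chain(BUILTIN + [(543, "User-Agent", "UA from custom middleware")])

print(early["User-Agent"])  # UA from custom middleware
print(late["User-Agent"])   # UA from DEFAULT_REQUEST_HEADERS
```

If a custom middleware has to run at a priority above 500, assigning `request.headers['User-Agent']` directly instead of calling setdefault avoids the problem.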

Simple dynamic User-Agent

Add a list of User-Agent strings to the settings file.

settings.py

    USER_AGENT_LIST = [
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
        "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; SE 2.X MetaSr 1.0; SE 2.X MetaSr 1.0; .NET CLR 2.0.50727; SE 2.X MetaSr 1.0)",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; 360SE)",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24"
    ]

middlewares.py

    import random

    from tutorial.settings import USER_AGENT_LIST


    class TutorialDownloaderMiddleware(object):
        def process_request(self, request, spider):
            # Pick a different User-Agent at random for each request
            request.headers.setdefault('User-Agent', random.choice(USER_AGENT_LIST))
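Importing tutorial.settings directly ties the middleware to one project and bypasses custom_settings or command-line overrides. A variant that reads the list through the crawler's settings object could look like the sketch below. The class name `SettingsUserAgentMiddleware` is hypothetical; `from_crawler` and `settings.getlist` are standard Scrapy hooks, and the small stand-in objects at the bottom exist only so the snippet runs without Scrapy installed.

```python
import random
from types import SimpleNamespace


class SettingsUserAgentMiddleware:
    """Pick a random User-Agent from the USER_AGENT_LIST setting."""

    def __init__(self, user_agents):
        if not user_agents:
            raise ValueError("USER_AGENT_LIST is empty or missing")
        self.user_agents = list(user_agents)

    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy calls this hook and passes the running crawler.
        return cls(crawler.settings.getlist("USER_AGENT_LIST"))

    def process_request(self, request, spider):
        # Direct assignment replaces any User-Agent set earlier.
        request.headers["User-Agent"] = random.choice(self.user_agents)
        return None  # let the request continue through the chain


# --- stand-ins so the sketch can be run without Scrapy ---
class FakeSettings(dict):
    def getlist(self, name, default=None):
        return list(self.get(name, default or []))


crawler = SimpleNamespace(settings=FakeSettings(USER_AGENT_LIST=["UA-1", "UA-2"]))
request = SimpleNamespace(headers={"User-Agent": "Scrapy/1.5.0 (+https://scrapy.org)"})

middleware = SettingsUserAgentMiddleware.from_crawler(crawler)
middleware.process_request(request, spider=None)
print(request.headers["User-Agent"])  # either UA-1 or UA-2
```

Because this version assigns the header directly, it does not depend on running before the built-in middlewares; the trade-off is that it also overrides a User-Agent set explicitly on an individual request.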

Switching User-Agent with fake-useragent

Installation

    ../bin/pip3 install fake-useragent

middlewares.py

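    from fake_useragent import UserAgent


    class RandomUserAgentMiddleware(object):
        def __init__(self, crawler):
            super(RandomUserAgentMiddleware, self).__init__()
            self.ua = UserAgent()
            # RANDOM_UA_TYPE names the fake-useragent attribute to read,
            # e.g. 'random', 'chrome' or 'firefox'
            self.ua_type = crawler.settings.get('RANDOM_UA_TYPE', 'random')

        @classmethod
        def from_crawler(cls, crawler):
            return cls(crawler)

        def process_request(self, request, spider):
            # At priority 543 this runs after Scrapy's built-in
            # UserAgentMiddleware (500), which by default has already set a
            # User-Agent, so setdefault() would have no effect here.
            # Assign the header directly instead.
            request.headers['User-Agent'] = getattr(self.ua, self.ua_type)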

settings.py

Enable the middleware in settings.py:

    RANDOM_UA_TYPE = 'random'

    # Enable or disable downloader middlewares
    # See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
    DOWNLOADER_MIDDLEWARES = {
        #'tutorial.middlewares.TutorialDownloaderMiddleware': 1,
        'tutorial.middlewares.RandomUserAgentMiddleware': 543,
    }
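fake-useragent relies on a browser-data source (older releases, as far as I know, fetch it over the network), so creating UserAgent() can fail. A defensive variant with a static fallback might look like the sketch below. `FALLBACK_USER_AGENTS` and `make_ua_picker` are hypothetical names, and the broad except clause is a deliberate simplification.

```python
import random

# Static fallback pool (hypothetical name); both strings come from the
# USER_AGENT_LIST shown earlier in this article.
FALLBACK_USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) "
    "Chrome/22.0.1207.1 Safari/537.1",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) "
    "Chrome/19.0.1084.9 Safari/536.5",
]


def make_ua_picker(ua_type="random"):
    """Return a zero-argument function that yields a User-Agent string."""
    try:
        from fake_useragent import UserAgent

        ua = UserAgent()
        getattr(ua, ua_type)  # probe once so failures surface here
    except Exception:  # broad on purpose: missing package, no data, bad type
        return lambda: random.choice(FALLBACK_USER_AGENTS)
    return lambda: str(getattr(ua, ua_type))


pick_user_agent = make_ua_picker()
print(pick_user_agent())
```

In a middleware, the picker would be created once in `__init__` and called from `process_request`, so the fake-useragent data is loaded only once per crawl.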

Source code on GitHub