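Python-OpenCV: Panoramic Image Stitching and Blending, and Image Alignment

Cropping and Aligning Images with OpenCV

The original text below is reposted from: (author: Wimb)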

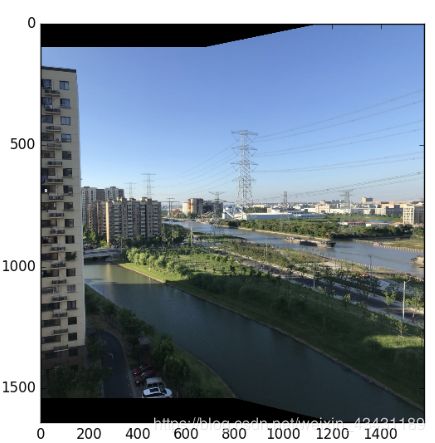

Python-OpenCV Basics: Panoramic Image Stitching

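import numpy as np
import cv2 as cv
from matplotlib import pyplot as plt

if __name__ == '__main__':
    # Border widths (pixels) added around both images so the warped image has room
    top, bot, left, right = 100, 100, 0, 500
    img1 = cv.imread('2.png')
    img2 = cv.imread('1.png')
    if img1 is None or img2 is None:
        raise FileNotFoundError("Could not read '2.png' or '1.png'")
    srcImg = cv.copyMakeBorder(img1, top, bot, left, right, cv.BORDER_CONSTANT, value=(0, 0, 0))
    testImg = cv.copyMakeBorder(img2, top, bot, left, right, cv.BORDER_CONSTANT, value=(0, 0, 0))
    img1gray = cv.cvtColor(srcImg, cv.COLOR_BGR2GRAY)
    img2gray = cv.cvtColor(testImg, cv.COLOR_BGR2GRAY)
    # SIFT is in the main module since OpenCV 4.4; older opencv-contrib
    # builds expose it as cv.xfeatures2d.SIFT_create()
    sift = cv.SIFT_create()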

    # Find the keypoints and descriptors with SIFT
    kp1, des1 = sift.detectAndCompute(img1gray, None)
    kp2, des2 = sift.detectAndCompute(img2gray, None)

    # FLANN parameters
    FLANN_INDEX_KDTREE = 1
    index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    search_params = dict(checks=50)
    flann = cv.FlannBasedMatcher(index_params, search_params)
    matches = flann.knnMatch(des1, des2, k=2)

    # Only the good matches should be drawn, so create a mask
    matchesMask = [[0, 0] for _ in range(len(matches))]
    good = []
    pts1 = []
    pts2 = []
    # Ratio test as per Lowe's paper
    for i, (m, n) in enumerate(matches):
        if m.distance < 0.7 * n.distance:
            good.append(m)
            pts2.append(kp2[m.trainIdx].pt)
            pts1.append(kp1[m.queryIdx].pt)
            matchesMask[i] = [1, 0]

    draw_params = dict(matchColor=(0, 255, 0),
                       singlePointColor=(255, 0, 0),
                       matchesMask=matchesMask,
                       flags=0)
    img3 = cv.drawMatchesKnn(img1gray, kp1, img2gray, kp2, matches, None, **draw_params)
    plt.imshow(img3)
    plt.show()

    rows, cols = srcImg.shape[:2]
    MIN_MATCH_COUNT = 10
    if len(good) > MIN_MATCH_COUNT:
        src_pts = np.float32([kp1[m.queryIdx].pt for m in good]).reshape(-1, 1, 2)
        dst_pts = np.float32([kp2[m.trainIdx].pt for m in good]).reshape(-1, 1, 2)
        # M maps srcImg coordinates to testImg coordinates
        M, mask = cv.findHomography(src_pts, dst_pts, cv.RANSAC, 5.0)
        # With WARP_INVERSE_MAP, M is used as the output-to-input mapping,
        # so testImg is resampled into the coordinate frame of srcImg
        warpImg = cv.warpPerspective(testImg, np.array(M), (testImg.shape[1], testImg.shape[0]),
                                     flags=cv.WARP_INVERSE_MAP)

        # Leftmost column in which both images have content
        for col in range(0, cols):
            if srcImg[:, col].any() and warpImg[:, col].any():
                left = col
                break
        # Rightmost column in which both images have content
        for col in range(cols - 1, 0, -1):
            if srcImg[:, col].any() and warpImg[:, col].any():
                right = col
                break

        res = np.zeros([rows, cols, 3], np.uint8)
        for row in range(0, rows):
            for col in range(0, cols):
                if not srcImg[row, col].any():
                    # Only the warped image has content here
                    res[row, col] = warpImg[row, col]
                elif not warpImg[row, col].any():
                    # Only the source image has content here
                    res[row, col] = srcImg[row, col]
                else:
                    # Overlap: blend linearly by distance to the overlap edges
                    srcImgLen = float(abs(col - left))
                    testImgLen = float(abs(col - right))
                    alpha = srcImgLen / (srcImgLen + testImgLen)
                    res[row, col] = np.clip(srcImg[row, col] * (1 - alpha) + warpImg[row, col] * alpha, 0, 255)

        # OpenCV is BGR, matplotlib is RGB
        res = cv.cvtColor(res, cv.COLOR_BGR2RGB)
        # Show the result
        plt.figure()
        plt.imshow(res)
        plt.show()
    else:
        print("Not enough matches are found - {}/{}".format(len(good), MIN_MATCH_COUNT))
        matchesMask = None
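The per-pixel blending loop above is easy to follow but slow on large images. The following is a minimal sketch of the same linear blend written with NumPy array operations. The function name `blend_overlap` and its signature are assumptions made for illustration and are not part of the original script. It assumes, as the script does, that both images have the same size and that empty pixels are pure black.

```python
import numpy as np


def blend_overlap(src, warped, left, right):
    """Blend two same-sized BGR images whose empty pixels are black.

    left and right are the first and last columns of the overlap region.
    (Hypothetical helper, not part of the original script.)
    """
    src_f = src.astype(np.float32)
    warped_f = warped.astype(np.float32)

    # A pixel counts as filled if any channel is non-zero (same test as the loop)
    src_has = src.any(axis=2)[..., np.newaxis]
    warped_has = warped.any(axis=2)[..., np.newaxis]

    # Weight of the warped image grows linearly from 0 at `left` to 1 at `right`
    cols = np.arange(src.shape[1], dtype=np.float32)
    d_left = np.abs(cols - left)
    d_right = np.abs(cols - right)
    alpha = d_left / np.maximum(d_left + d_right, 1e-6)  # guard against left == right
    alpha = alpha[np.newaxis, :, np.newaxis]

    blended = src_f * (1.0 - alpha) + warped_f * alpha
    # Both filled: blend. Only src filled: src. Otherwise: warped.
    out = np.where(src_has & warped_has, blended,
                   np.where(src_has, src_f, warped_f))
    return np.clip(out, 0, 255).astype(np.uint8)
```

In the script, a call such as `res = blend_overlap(srcImg, warpImg, left, right)` would stand in for the nested `for row` / `for col` loops. The small epsilon in the denominator is an addition that avoids a division by zero when the overlap is a single column.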

#!/usr/bin/python3
# -*- coding: utf-8 -*-
from __future__ import print_function
import cv2
import numpy as np

# Maximum number of ORB features to detect (other values tried: 500, 10000, 15000)
MAX_MATCHES = 700
# Fraction of the best matches kept for estimating the homography
GOOD_MATCH_PERCENT = 0.25

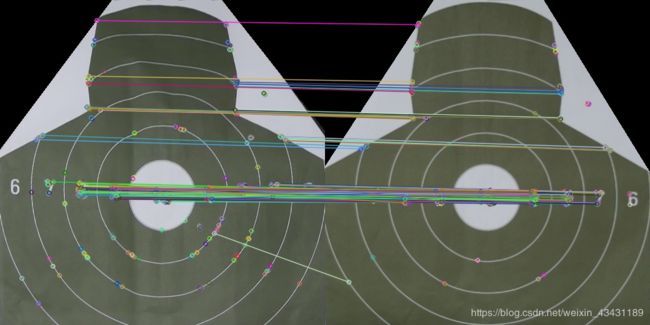

def alignImages(im1, im2):
    # Convert images to grayscale
    im1Gray = cv2.cvtColor(im1, cv2.COLOR_BGR2GRAY)
    im2Gray = cv2.cvtColor(im2, cv2.COLOR_BGR2GRAY)

    # Detect ORB features and compute descriptors.
    # (Alternatives the author tried: cv2.xfeatures2d.SURF_create(), cv2.AKAZE_create())
    orb = cv2.ORB_create(MAX_MATCHES)
    keypoints1, descriptors1 = orb.detectAndCompute(im1Gray, None)
    keypoints2, descriptors2 = orb.detectAndCompute(im2Gray, None)

    # Match features.
    matcher = cv2.DescriptorMatcher_create(cv2.DESCRIPTOR_MATCHER_BRUTEFORCE_HAMMING)
    matches = matcher.match(descriptors1, descriptors2, None)
    print("Number of matches:", len(matches))

    # Sort matches by score (smaller distance is better).
    # sorted() is used because newer OpenCV releases may return a tuple, which has no sort().
    matches = sorted(matches, key=lambda x: x.distance)

    # Keep only the best matches
    numGoodMatches = int(len(matches) * GOOD_MATCH_PERCENT)
    matches = matches[:numGoodMatches]

    # Draw top matches (the ./image_align directory must already exist)
    imMatches = cv2.drawMatches(im1, keypoints1, im2, keypoints2, matches, None)
    cv2.imwrite("./image_align/matches.jpg", imMatches)

    # Extract location of good matches
    points1 = np.zeros((len(matches), 2), dtype=np.float32)
    points2 = np.zeros((len(matches), 2), dtype=np.float32)
    for i, match in enumerate(matches):
        points1[i, :] = keypoints1[match.queryIdx].pt
        points2[i, :] = keypoints2[match.trainIdx].pt

    # Find homography (maps im1 coordinates to im2 coordinates)
    h, mask = cv2.findHomography(points1, points2, cv2.RANSAC)

    # Use homography to warp im1 into the frame of im2
    height, width, channels = im2.shape
    im1Reg = cv2.warpPerspective(im1, h, (width, height))
    return im1Reg, h

if __name__ == '__main__':
    # Read reference image
    # refFilename = "../success_image/good_img.jpg"
    # refFilename = "../staticimg/base.png"
    refFilename = "../success_images/001_success.jpg"
    # refFilename = "../staticimg/100_success.jpg"
    print("Reading reference image : ", refFilename)
    imReference = cv2.imread(refFilename, cv2.IMREAD_COLOR)

    # Read image to be aligned
    imFilename = "../staticimg/oldimg_04.jpg"
    # imFilename = "../cut_labels/cut_image.jpg"

    #
    # def point2area(points, img, color):
    #     """
    #     :param points: collection of points
    #     :param img: path to the image
    #     :param color: BGR color
    #     :return: fills the region enclosed by the points on the image with the color
    #     """
    #     img = cv2.imread(img)
    #     res = cv2.fillPoly(img, [np.array(points, dtype=np.int32)], color)
    #     cv2.imshow('fillpoly', res)
    #     cv2.waitKey(0)
    #     cv2.destroyAllWindows()
    #
    #
    # if __name__ == '__main__':
    #     points = [(20, 20), (70, 70), (120, 200)]
    #     img = 'lena.png'
    #     color = [255, 255, 255]
    #     point2area(points, img, color)

    # imFilename = "../staticimg/111.jpg"
    # imFilename = "../staticimg/100_success.jpg"
    print("Reading image to align : ", imFilename)
    im = cv2.imread(imFilename, cv2.IMREAD_COLOR)

    # Black out the top-right and top-left corner triangles of the image to align,
    # presumably to keep features in those regions out of the matching.
    # fillPoly expects int32 point arrays and draws in place.
    pointsone = [(350, 0), (512, 205), (512, 0)]
    pointstwo = [(0, 0), (175, 0), (0, 234)]
    # points = [(20, 20), (70, 70), (120, 200)]
    color = [0, 0, 0]
    res = cv2.fillPoly(im, [np.array(pointsone, dtype=np.int32)], color)
    res = cv2.fillPoly(im, [np.array(pointstwo, dtype=np.int32)], color)

    # Do the same for the reference image
    pointsthree = [(345, 0), (512, 270), (512, 0)]
    pointsfour = [(0, 0), (170, 0), (0, 260)]
    res = cv2.fillPoly(imReference, [np.array(pointsthree, dtype=np.int32)], color)
    res = cv2.fillPoly(imReference, [np.array(pointsfour, dtype=np.int32)], color)

    print("Aligning images ...")
    # The registered image will be stored in imReg.
    # The estimated homography will be stored in h.
    imReg, h = alignImages(im, imReference)

    # Write aligned image to disk (the ./image_align directory must already exist).
    outFilename = "./image_align/aligned.jpg"
    print("Saving aligned image : ", outFilename)
    cv2.imwrite(outFilename, imReg)

    # Print estimated homography
    print("Estimated homography : \n", h)
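The script ends by printing the estimated homography `h`, a 3x3 matrix. To make that output easier to interpret, here is a minimal sketch of how such a matrix maps pixel coordinates: each point is extended to homogeneous form, multiplied by the matrix, and divided by the third component. The helper `apply_homography` and the example matrix are hypothetical and chosen only for illustration. In OpenCV itself, `cv2.perspectiveTransform` performs the same mapping.

```python
import numpy as np


def apply_homography(h, points):
    """Map an (N, 2) sequence of (x, y) points through a 3x3 homography.

    (Hypothetical helper for illustration, not part of the original script.)
    """
    pts = np.asarray(points, dtype=np.float64)
    ones = np.ones((pts.shape[0], 1))
    homogeneous = np.hstack([pts, ones])               # (x, y) -> (x, y, 1)
    mapped = homogeneous @ np.asarray(h, dtype=np.float64).T
    return mapped[:, :2] / mapped[:, 2:3]              # divide by the w component


# Example matrix (an assumption): a pure translation by (+10, -5) pixels
h_example = np.array([[1.0, 0.0, 10.0],
                      [0.0, 1.0, -5.0],
                      [0.0, 0.0, 1.0]])
corners = [(0, 0), (512, 0), (512, 512), (0, 512)]
print(apply_homography(h_example, corners))
```

Passing the real `h` together with the four corners of `im` would show where the image to align lands in the reference frame, which is a quick sanity check on the estimate: corners that fly far outside the reference image usually point to poor matches.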