Analysis targets {historical data, daily incremental data}

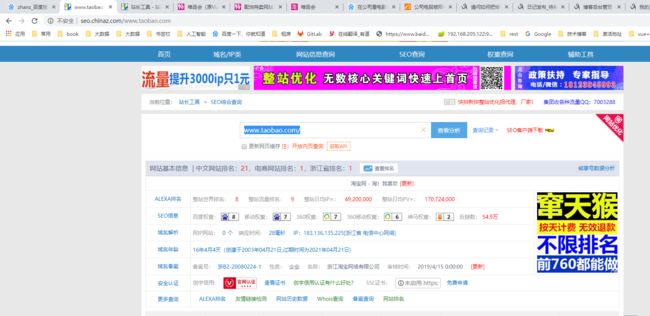

Webmaster tools (Chinaz): SEO optimization, PageRank

http://seo.chinaz.com/www.taobao.com

PV (page view) and UV (unique visitor): the number of page views and the number of distinct visitors.

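Analysis generally targets the incremental data. As a reference point, assume Taobao produces 300 GB of logs per day and Vipshop about 17 GB.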

When creating data tables, reserve two spare fields; likewise, reserve a module in the program for future features.

Analyses are generally expressed in terms of metrics and dimensions.

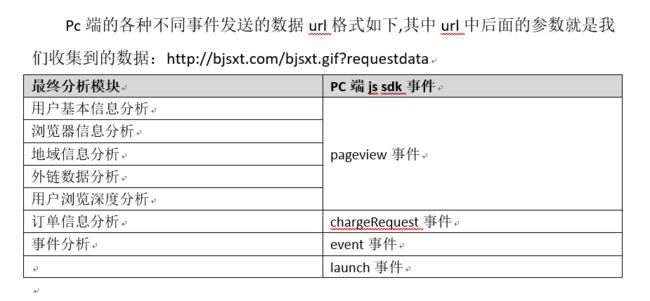

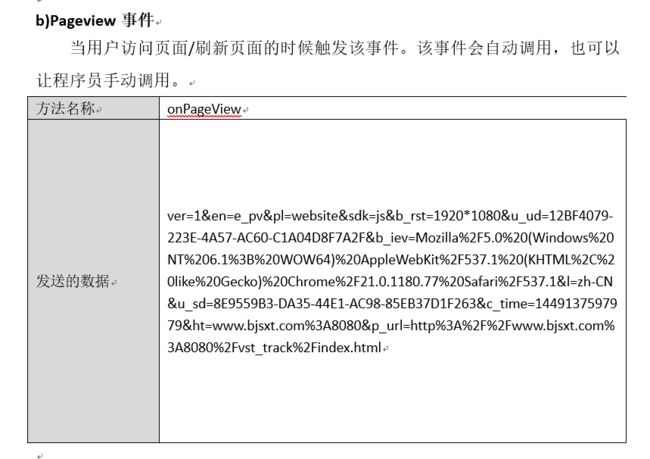
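To make "metrics" and "dimensions" concrete: a metric such as PV or UV is computed for each value of a dimension such as the browser. The following is a minimal Java sketch, assuming a hypothetical `PageEvent` type that carries only a visitor id (`u_ud`) and a browser name; PV counts every page-view event and UV counts distinct visitor ids.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical event type: only the two fields this example needs.
class PageEvent {
    final String uuid;    // visitor id (the u_ud column)
    final String browser; // dimension value, e.g. a browser name parsed from the user agent

    PageEvent(String uuid, String browser) {
        this.uuid = uuid;
        this.browser = browser;
    }
}

class PvUvByDimension {
    // Returns dimension value -> {PV, UV}: PV counts every event, UV counts distinct uuids.
    static Map<String, long[]> compute(List<PageEvent> events) {
        Map<String, Long> pv = new TreeMap<>();
        Map<String, Set<String>> visitors = new TreeMap<>();
        for (PageEvent e : events) {
            pv.merge(e.browser, 1L, Long::sum);
            visitors.computeIfAbsent(e.browser, k -> new HashSet<>()).add(e.uuid);
        }
        Map<String, long[]> result = new TreeMap<>();
        for (Map.Entry<String, Long> entry : pv.entrySet()) {
            long uv = visitors.get(entry.getKey()).size();
            result.put(entry.getKey(), new long[] {entry.getValue(), uv});
        }
        return result;
    }
}
```

Swapping `browser` for another field (platform, region, day) gives the same metrics along a different dimension.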

Tracking points (instrumentation): the client reports a request to the back-end big data platform.

Example of the event parameter format:

js-sdk,java-sdk

Draw the sequence diagrams with the StarUML tool.

Install nginx (Tengine) on node204:

```shell
cd software/
yum -y install gcc gcc-c++ openssl openssl-devel   ## install build dependencies first, otherwise ./configure fails with "checking for C compiler ... not found"
tar -zxvf tengine-2.1.0.tar.gz   ## unpack Tengine (nginx)
cd tengine-2.1.0
./configure
make && make install
whereis nginx
cd /usr/local/nginx/sbin/
./nginx   ## start nginx
cd ../conf/
ls
cp nginx.conf nginx.conf.bak
vi nginx.conf   ## configure nginx
```

Contents of `/usr/local/nginx/conf/nginx.conf` on node204:

```nginx
#user  nobody;
worker_processes  1;

#error_log  logs/error.log;
#error_log  logs/error.log  notice;
#error_log  logs/error.log  info;

#pid        logs/nginx.pid;

events {
    worker_connections  1024;
}

# load modules compiled as Dynamic Shared Object (DSO)
#
#dso {
#    load ngx_http_fastcgi_module.so;
#    load ngx_http_rewrite_module.so;
#}

http {
    include       mime.types;
    default_type  application/octet-stream;

    #log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
    #                  '$status $body_bytes_sent "$http_referer" '
    #                  '"$http_user_agent" "$http_x_forwarded_for"';

    # ^A is the single control character \x01 (typed in vi as Ctrl-V Ctrl-A), used as the field separator
    log_format  my_format  '$remote_addr^A$msec^A$http_host^A$request_uri';

    #access_log  logs/access.log  main;

    sendfile        on;
    #tcp_nopush     on;

    #keepalive_timeout  0;
    keepalive_timeout  65;

    #gzip  on;

    server {
        listen       80;
        server_name  localhost;

        #charset koi8-r;

        #access_log  logs/host.access.log  main;

        location / {
            root   html;
            index  index.html index.htm;
        }

        # tracking endpoint: every request for /log.gif is logged to /opt/data/access.log in my_format
        location = /log.gif {
            default_type image/gif;
            access_log /opt/data/access.log my_format;
        }

        #error_page  404              /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   html;
        }

        # proxy the PHP scripts to Apache listening on 127.0.0.1:80
        #
        #location ~ \.php$ {
        #    proxy_pass   http://127.0.0.1;
        #}

        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        #
        #location ~ \.php$ {
        #    root           html;
        #    fastcgi_pass   127.0.0.1:9000;
        #    fastcgi_index  index.php;
        #    fastcgi_param  SCRIPT_FILENAME  /scripts$fastcgi_script_name;
        #    include        fastcgi_params;
        #}

        # deny access to .htaccess files, if Apache's document root
        # concurs with nginx's one
        #
        #location ~ /\.ht {
        #    deny  all;
        #}
    }

    # another virtual host using mix of IP-, name-, and port-based configuration
    #
    #server {
    #    listen       8000;
    #    listen       somename:8080;
    #    server_name  somename  alias  another.alias;

    #    location / {
    #        root   html;
    #        index  index.html index.htm;
    #    }
    #}

    # HTTPS server
    #
    #server {
    #    listen       443 ssl;
    #    server_name  localhost;

    #    ssl_certificate      cert.pem;
    #    ssl_certificate_key  cert.key;

    #    ssl_session_cache    shared:SSL:1m;
    #    ssl_session_timeout  5m;

    #    ssl_ciphers  HIGH:!aNULL:!MD5;
    #    ssl_prefer_server_ciphers  on;

    #    location / {
    #        root   html;
    #        index  index.html index.htm;
    #    }
    #}
}
```
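Each hit on `/log.gif` becomes one line in `my_format`. As a sketch of how a downstream program might split such a line, the Java below assumes that `^A` in the config is the single control character `\u0001`, and converts `$msec` (seconds with millisecond resolution, e.g. `1566896460.537`) into epoch milliseconds. The class name `AccessLogLine` is hypothetical.

```java
import java.math.BigDecimal;

// Sketch: one access.log line written with
// log_format my_format '$remote_addr^A$msec^A$http_host^A$request_uri';
// Assumption: ^A is the single control character 0x01.
class AccessLogLine {
    final String ip;
    final long timeMillis;   // $msec converted to epoch milliseconds
    final String host;
    final String requestUri;

    private AccessLogLine(String ip, long timeMillis, String host, String requestUri) {
        this.ip = ip;
        this.timeMillis = timeMillis;
        this.host = host;
        this.requestUri = requestUri;
    }

    // Returns null for lines without exactly four fields or with a non-numeric time (dirty data).
    static AccessLogLine parse(String line) {
        if (line == null) {
            return null;
        }
        String[] f = line.split("\u0001", -1);
        if (f.length != 4) {
            return null;
        }
        try {
            long millis = new BigDecimal(f[1].trim()).movePointRight(3).longValue();
            return new AccessLogLine(f[0], millis, f[2], f[3]);
        } catch (NumberFormatException e) {
            return null;
        }
    }
}
```

Returning `null` instead of throwing lets a batch job simply skip malformed lines.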

```shell
mkdir -p /opt/data   ## create the directory that stores the log
vi /etc/init.d/nginx   ## create the "service nginx" script; its pid file and install paths must match nginx.conf, otherwise it fails
```

On CentOS 7, if an nginx service started through systemctl hangs on `start` and `stop` has no effect, see https://www.cnblogs.com/JaminXie/p/11322697.html

Contents of `/etc/init.d/nginx` on node204:

```shell
#!/bin/sh
#
# nginx - this script starts and stops the nginx daemon
#
# chkconfig:   - 85 15
# description: Nginx is an HTTP(S) server, HTTP(S) reverse \
#              proxy and IMAP/POP3 proxy server
# processname: nginx
# config:      /usr/local/nginx/conf/nginx.conf
# config:      /etc/sysconfig/nginx
# pidfile:     /usr/local/nginx/logs/nginx.pid

# Source function library.
. /etc/rc.d/init.d/functions

# Source networking configuration.
. /etc/sysconfig/network

# Check that networking is up.
[ "$NETWORKING" = "no" ] && exit 0

nginx="/usr/local/nginx/sbin/nginx"
prog=$(basename $nginx)

NGINX_CONF_FILE="/usr/local/nginx/conf/nginx.conf"

[ -f /etc/sysconfig/nginx ] && . /etc/sysconfig/nginx

lockfile=/var/lock/subsys/nginx

make_dirs() {
    # make required directories
    # call $nginx by its full path: /usr/local/nginx/sbin is usually not on PATH
    user=`$nginx -V 2>&1 | grep "configure arguments:" | sed 's/[^*]*--user=\([^ ]*\).*/\1/g' -`
    options=`$nginx -V 2>&1 | grep 'configure arguments:'`
    for opt in $options; do
        if [ `echo $opt | grep '.*-temp-path'` ]; then
            value=`echo $opt | cut -d "=" -f 2`
            if [ ! -d "$value" ]; then
                # echo "creating" $value
                mkdir -p $value && chown -R $user $value
            fi
        fi
    done
}

start() {
    [ -x $nginx ] || exit 5
    [ -f $NGINX_CONF_FILE ] || exit 6
    make_dirs
    echo -n $"Starting $prog: "
    daemon $nginx -c $NGINX_CONF_FILE
    retval=$?
    echo
    [ $retval -eq 0 ] && touch $lockfile
    return $retval
}

stop() {
    echo -n $"Stopping $prog: "
    killproc $prog -QUIT
    retval=$?
    echo
    [ $retval -eq 0 ] && rm -f $lockfile
    return $retval
}

restart() {
    configtest || return $?
    stop
    sleep 1
    start
}

reload() {
    configtest || return $?
    echo -n $"Reloading $prog: "
    killproc $nginx -HUP
    RETVAL=$?
    echo
}

force_reload() {
    restart
}

configtest() {
    $nginx -t -c $NGINX_CONF_FILE
}

rh_status() {
    status $prog
}

rh_status_q() {
    rh_status >/dev/null 2>&1
}

case "$1" in
    start)
        rh_status_q && exit 0
        $1
        ;;
    stop)
        rh_status_q || exit 0
        $1
        ;;
    restart|configtest)
        $1
        ;;
    reload)
        rh_status_q || exit 7
        $1
        ;;
    force-reload)
        force_reload
        ;;
    status)
        rh_status
        ;;
    condrestart|try-restart)
        # restart only if nginx is already running
        rh_status_q || exit 0
        restart
        ;;
    *)
        echo $"Usage: $0 {start|stop|status|restart|condrestart|try-restart|reload|force-reload|configtest}"
        exit 2
esac
```

```shell
chmod +x /etc/init.d/nginx
service nginx restart
systemctl daemon-reload
service nginx stop
service nginx start
## upload an image log.gif from Windows to /usr/local/nginx/html/
cd /opt/data/
tail -f access.log
## requesting http://node204/log.gif?name=zhangsan&age=19 produces a log entry
```
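The parameters after `?` in such a request (for example `name=zhangsan&age=19`) are what the later ETL step has to recover. A minimal Java sketch of that decoding using only `java.net.URLDecoder`; the helper name `QueryStringParser` is hypothetical, and keeping a key without `=` with an empty value is an assumption rather than something the original specifies.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLDecoder;
import java.util.LinkedHashMap;
import java.util.Map;

class QueryStringParser {
    // Decodes the part of a request URI after '?' into an ordered key -> value map.
    static Map<String, String> parse(String requestUri) {
        Map<String, String> params = new LinkedHashMap<>();
        int q = requestUri == null ? -1 : requestUri.indexOf('?');
        if (q < 0) {
            return params; // no query string at all
        }
        for (String pair : requestUri.substring(q + 1).split("&")) {
            if (pair.isEmpty()) {
                continue;
            }
            int eq = pair.indexOf('=');
            String key = eq < 0 ? pair : pair.substring(0, eq);
            String value = eq < 0 ? "" : pair.substring(eq + 1);
            try {
                params.put(URLDecoder.decode(key, "UTF-8"), URLDecoder.decode(value, "UTF-8"));
            } catch (UnsupportedEncodingException | IllegalArgumentException e) {
                // skip a pair with a malformed %-escape
            }
        }
        return params;
    }
}
```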

How the js-sdk and java-sdk report data:

analytics.js

(function() {

var CookieUtil = {

// get the cookie of the key is name

get : function(name) {

var cookieName = encodeURIComponent(name) + "=", cookieStart = document.cookie

.indexOf(cookieName), cookieValue = null;

if (cookieStart > -1) {

var cookieEnd = document.cookie.indexOf(";", cookieStart);

if (cookieEnd == -1) {

cookieEnd = document.cookie.length;

}

cookieValue = decodeURIComponent(document.cookie.substring(

cookieStart + cookieName.length, cookieEnd));

}

return cookieValue;

},

// set the name/value pair to browser cookie

set : function(name, value, expires, path, domain, secure) {

var cookieText = encodeURIComponent(name) + "="

+ encodeURIComponent(value);

if (expires) {

// set the expires time

var expiresTime = new Date();

expiresTime.setTime(expires);

cookieText += ";expires=" + expiresTime.toGMTString();

}

if (path) {

cookieText += ";path=" + path;

}

if (domain) {

cookieText += ";domain=" + domain;

}

if (secure) {

cookieText += ";secure";

}

document.cookie = cookieText;

},

setExt : function(name, value) {

this.set(name, value, new Date().getTime() + 315360000000, "/");

}

};

// Main body: this is the tracker itself

var tracker = {

// config

clientConfig : {

serverUrl : "http://node204/log.gif",

sessionTimeout : 360, // 360s -> 6min

maxWaitTime : 3600, // 3600s -> 60min -> 1h

ver : "1"

},

cookieExpiresTime : 315360000000, // cookie expiration time: 10 years

columns : {

// column names sent to the server

eventName : "en",

version : "ver",

platform : "pl",

sdk : "sdk",

uuid : "u_ud",

memberId : "u_mid",

sessionId : "u_sd",

clientTime : "c_time",

language : "l",

userAgent : "b_iev",

resolution : "b_rst",

currentUrl : "p_url",

referrerUrl : "p_ref",

title : "tt",

orderId : "oid",

orderName : "on",

currencyAmount : "cua",

currencyType : "cut",

paymentType : "pt",

category : "ca",

action : "ac",

kv : "kv_",

duration : "du"

},

keys : {

pageView : "e_pv",

chargeRequestEvent : "e_crt",

launch : "e_l",

eventDurationEvent : "e_e",

sid : "bftrack_sid",

uuid : "bftrack_uuid",

mid : "bftrack_mid",

preVisitTime : "bftrack_previsit",

},

/**

* Get the session id

*/

getSid : function() {

return CookieUtil.get(this.keys.sid);

},

/**

* Save the session id to a cookie

*/

setSid : function(sid) {

if (sid) {

CookieUtil.setExt(this.keys.sid, sid);

}

},

/**

* Get the uuid from the cookie

*/

getUuid : function() {

return CookieUtil.get(this.keys.uuid);

},

/**

* Save the uuid to a cookie

*/

setUuid : function(uuid) {

if (uuid) {

CookieUtil.setExt(this.keys.uuid, uuid);

}

},

/**

* Get the member id

*/

getMemberId : function() {

return CookieUtil.get(this.keys.mid);

},

/**

* Set the member id (mid)

*/

setMemberId : function(mid) {

if (mid) {

CookieUtil.setExt(this.keys.mid, mid);

}

},

startSession : function() {

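// called as soon as the js is loaded

if (this.getSid()) {

// a session id exists, which means the uuid exists too

if (this.isSessionTimeout()) {

// the session has expired: start a new session

this.createNewSession();

} else {

// the session is still valid: update the last visit time

this.updatePreVisitTime(new Date().getTime());

}

} else {

// no session id, which means no uuid either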

this.createNewSession();

}

this.onPageView();

},

onLaunch : function() {

// fire the launch event

var launch = {};

launch[this.columns.eventName] = this.keys.launch; // set the event name

this.setCommonColumns(launch); // set the common columns

this.sendDataToServer(this.parseParam(launch)); // send the encoded data

},

onPageView : function() {

// fire the page view event

if (this.preCallApi()) {

var time = new Date().getTime();

var pageviewEvent = {};

pageviewEvent[this.columns.eventName] = this.keys.pageView;

pageviewEvent[this.columns.currentUrl] = window.location.href; // current url

pageviewEvent[this.columns.referrerUrl] = document.referrer; // url of the previous page

pageviewEvent[this.columns.title] = document.title; // page title

this.setCommonColumns(pageviewEvent); // set the common columns

this.sendDataToServer(this.parseParam(pageviewEvent)); // send the encoded data

this.updatePreVisitTime(time);

}

},

onChargeRequest : function(orderId, name, currencyAmount, currencyType, paymentType) {

// fire the order-created (charge request) event

if (this.preCallApi()) {

if (!orderId || !currencyType || !paymentType) {

this.log("orderId, currencyType and paymentType must not be empty");

return;

}

if (typeof (currencyAmount) == "number") {

// the amount must be a number

var time = new Date().getTime();

var chargeRequestEvent = {};

chargeRequestEvent[this.columns.eventName] = this.keys.chargeRequestEvent;

chargeRequestEvent[this.columns.orderId] = orderId;

chargeRequestEvent[this.columns.orderName] = name;

chargeRequestEvent[this.columns.currencyAmount] = currencyAmount;

chargeRequestEvent[this.columns.currencyType] = currencyType;

chargeRequestEvent[this.columns.paymentType] = paymentType;

this.setCommonColumns(chargeRequestEvent); // set the common columns

this.sendDataToServer(this.parseParam(chargeRequestEvent)); // send the encoded data

this.updatePreVisitTime(time);

} else {

this.log("the order amount must be a number");

return;

}

}

},

onEventDuration : function(category, action, map, duration) {

// fire a custom event (category/action with optional key-value map and duration)

if (this.preCallApi()) {

if (category && action) {

var time = new Date().getTime();

var event = {};

event[this.columns.eventName] = this.keys.eventDurationEvent;

event[this.columns.category] = category;

event[this.columns.action] = action;

if (map) {

for ( var k in map) {

if (k && map[k]) {

event[this.columns.kv + k] = map[k];

}

}

}

if (duration) {

event[this.columns.duration] = duration;

}

this.setCommonColumns(event); // set the common columns

this.sendDataToServer(this.parseParam(event)); // send the encoded data

this.updatePreVisitTime(time);

} else {

this.log("category and action must not be empty");

}

}

},

/**

* Must run before any public API method

*/

preCallApi : function() {

if (this.isSessionTimeout()) {

// true means a new session must be started

this.startSession();

} else {

this.updatePreVisitTime(new Date().getTime());

}

return true;

},

sendDataToServer : function(data) {

// alert(data); // debugging only: uncomment to see each payload in a popup

// send data to the server; data is an already encoded query string

var that = this;

var i2 = new Image(1, 1); // a 1x1 image request carries the data as a query string

i2.onerror = function() {

// a retry could be done here

};

i2.src = this.clientConfig.serverUrl + "?" + data;

},

/**

* Add the common fields sent to the log collection server to data

*/

setCommonColumns : function(data) {

data[this.columns.version] = this.clientConfig.ver;

data[this.columns.platform] = "website";

data[this.columns.sdk] = "js";

data[this.columns.uuid] = this.getUuid(); // user id

data[this.columns.memberId] = this.getMemberId(); // member id

data[this.columns.sessionId] = this.getSid(); // session id

data[this.columns.clientTime] = new Date().getTime(); // client time

data[this.columns.language] = window.navigator.language; // browser language

data[this.columns.userAgent] = window.navigator.userAgent; // browser type (user agent)

data[this.columns.resolution] = screen.width + "*" + screen.height; // screen resolution

},

/**

* Create a new session and check whether this is the first visit; if so, send a launch event.

*/

createNewSession : function() {

var time = new Date().getTime(); // current operation time

// 1. update the session

var sid = this.generateId(); // generate a session id

this.setSid(sid);

this.updatePreVisitTime(time); // update the last visit time

// 2. check the uuid

if (!this.getUuid()) {

// no uuid yet: create one, save it to the cookie, then fire the launch event

var uuid = this.generateId(); // generate the uuid

this.setUuid(uuid);

this.onLaunch();

}

},

/**

* Encode the parameters into a query string

*/

parseParam : function(data) {

var params = "";

for ( var e in data) {

if (e && data[e]) {

params += encodeURIComponent(e) + "="

+ encodeURIComponent(data[e]) + "&";

}

}

if (params) {

return params.substring(0, params.length - 1);

} else {

return params;

}

},

/**

* Generate a uuid

*/

generateId : function() {

var chars = '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz';

var tmpid = [];

var r;

tmpid[8] = tmpid[13] = tmpid[18] = tmpid[23] = '-';

tmpid[14] = '4';

for (var i = 0; i < 36; i++) { // declare i locally instead of leaking a global

if (!tmpid[i]) {

r = 0 | Math.random() * 16;

tmpid[i] = chars[(i == 19) ? (r & 0x3) | 0x8 : r];

}

}

return tmpid.join('');

},

/**

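* Check whether the session has expired by comparing the time since the last visit with this.clientConfig.sessionTimeout (seconds).

* Returns false if the interval is within the timeout; otherwise true (also true when no last visit time is recorded).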

*/

isSessionTimeout : function() {

var time = new Date().getTime();

var preTime = CookieUtil.get(this.keys.preVisitTime);

if (preTime) {

// a last visit time exists: compare the interval

return time - preTime > this.clientConfig.sessionTimeout * 1000;

}

return true;

},

/**

* Update the last visit time

*/

updatePreVisitTime : function(time) {

CookieUtil.setExt(this.keys.preVisitTime, time);

},

/**

* Print a log message

*/

log : function(msg) {

console.log(msg);

},

};

// Methods exposed to the page

window.__AE__ = {

startSession : function() {

tracker.startSession();

},

onPageView : function() {

tracker.onPageView();

},

onChargeRequest : function(orderId, name, currencyAmount, currencyType, paymentType) {

tracker.onChargeRequest(orderId, name, currencyAmount, currencyType, paymentType);

},

onEventDuration : function(category, action, map, duration) {

tracker.onEventDuration(category, action, map, duration);

},

setMemberId : function(mid) {

tracker.setMemberId(mid);

}

};

// Auto-load function

var autoLoad = function() {

// read the configuration parameters

var _aelog_ = _aelog_ || window._aelog_ || [];

var memberId = null;

for (var i = 0; i < _aelog_.length; i++) { // declare i locally instead of leaking a global

_aelog_[i][0] === "memberId" && (memberId = _aelog_[i][1]);

}

// if a memberId was given, set it

memberId && __AE__.setMemberId(memberId);

// start the session

__AE__.startSession();

};

autoLoad();

})();
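The session rule in `analytics.js` can be restated in Java, for example to reproduce it on the server side when checking collected data. This is a sketch that assumes times are epoch milliseconds, as in the tracker's cookies; `SessionRule` is a hypothetical name. `isSessionTimeout` mirrors the JS logic (no previous visit time, or more than `sessionTimeout` = 360 s since it), and `newId` uses `java.util.UUID` to produce the same uppercase 8-4-4-4-12 version-4 layout that `generateId()` builds by hand.

```java
import java.util.UUID;

class SessionRule {
    static final long SESSION_TIMEOUT_SECONDS = 360; // clientConfig.sessionTimeout in analytics.js

    // true when there is no previous visit time, or more than the timeout has passed since it
    static boolean isSessionTimeout(long nowMillis, Long preVisitMillis) {
        if (preVisitMillis == null) {
            return true;
        }
        return nowMillis - preVisitMillis > SESSION_TIMEOUT_SECONDS * 1000;
    }

    // random version-4 UUID in uppercase, like the JS generateId()
    static String newId() {
        return UUID.randomUUID().toString().toUpperCase();
    }
}
```

Exactly 360 s after the last visit still counts as the same session, because the JS compares with a strict `>`.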

demo.jsp

<%@ page contentType="text/html; charset=utf-8" pageEncoding="utf-8"%>

Test page 1

Jump to:

demo

demo2

demo3

demo4

demo2.jsp

<%@ page contentType="text/html; charset=utf-8" pageEncoding="utf-8"%>

Test page 2

Jump to:

demo

demo2

demo3

demo4

demo3.jsp

<%@ page contentType="text/html; charset=utf-8" pageEncoding="utf-8"%>

Test page 3

Jump to:

demo

demo2

demo3

demo4

demo4.jsp

<%@ page contentType="text/html; charset=utf-8" pageEncoding="utf-8"%>

Test page 4

This page sets memberid to zhangsan.

Jump to:

demo

demo2

demo3

demo4

package com.sxt.client;

import java.io.UnsupportedEncodingException;

import java.net.URLEncoder;

import java.util.HashMap;

import java.util.Map;

import java.util.logging.Level;

import java.util.logging.Logger;

/**

* Analytics engine SDK: server-side data collection in Java

*

* @author root

* @version 1.0

*

*/

public class AnalyticsEngineSDK {

// logger

private static final Logger log = Logger.getGlobal();

// base part of the request url

public static final String accessUrl = "http://node204/log.gif";

private static final String platformName = "java_server";

private static final String sdkName = "jdk";

private static final String version = "1";

/**

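* Fire the order-paid event and send the event data to the server

*

* @param orderId

* id of the paid order

* @param memberId

* id of the member who paid

* @return true if the data was sent (added to the send queue); otherwise false (invalid arguments or failure adding to the queue).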

*/

public static boolean onChargeSuccess(String orderId, String memberId) {

try {

if (isEmpty(orderId) || isEmpty(memberId)) {

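// order id or member id is empty

log.log(Level.WARNING, "orderId and memberId must not be empty");

return false;

}

// at this point both the order id and the member id are non-empty

Map<String, String> data = new HashMap<String, String>();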

data.put("u_mid", memberId);

data.put("oid", orderId);

data.put("c_time", String.valueOf(System.currentTimeMillis()));

data.put("ver", version);

data.put("en", "e_cs");

data.put("pl", platformName);

data.put("sdk", sdkName);

// build the url

String url = buildUrl(data);

// send the url by adding it to the queue

SendDataMonitor.addSendUrl(url);

return true;

} catch (Throwable e) {

log.log(Level.WARNING, "failed to send data", e);

}

return false;

}

/**

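* Fire the order refund event and send the refund data to the server

*

* @param orderId

* id of the refunded order

* @param memberId

* id of the refunded member

* @return true if the data was sent; otherwise false.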

*/

public static boolean onChargeRefund(String orderId, String memberId) {

try {

if (isEmpty(orderId) || isEmpty(memberId)) {

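// order id or member id is empty

log.log(Level.WARNING, "orderId and memberId must not be empty");

return false;

}

// at this point both the order id and the member id are non-empty

Map<String, String> data = new HashMap<String, String>();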

data.put("u_mid", memberId);

data.put("oid", orderId);

data.put("c_time", String.valueOf(System.currentTimeMillis()));

data.put("ver", version);

data.put("en", "e_cr");

data.put("pl", platformName);

data.put("sdk", sdkName);

// build the url

String url = buildUrl(data);

// send the url by adding it to the queue

SendDataMonitor.addSendUrl(url);

return true;

} catch (Throwable e) {

log.log(Level.WARNING, "failed to send data", e);

}

return false;

}

/**

* Build the url from the given parameters

*

* @param data

* @return

* @throws UnsupportedEncodingException

*/

private static String buildUrl(Map<String, String> data)

throws UnsupportedEncodingException {

StringBuilder sb = new StringBuilder();

sb.append(accessUrl).append("?");

for (Map.Entry<String, String> entry : data.entrySet()) {

if (isNotEmpty(entry.getKey()) && isNotEmpty(entry.getValue())) {

sb.append(entry.getKey().trim())

.append("=")

.append(URLEncoder.encode(entry.getValue().trim(), "utf-8"))

.append("&");

}

}

return sb.substring(0, sb.length() - 1); // drop the trailing &

}

/**

* Check whether a string is empty (null or only whitespace): returns true if it is, otherwise false.

*

* @param value

* @return

*/

private static boolean isEmpty(String value) {

return value == null || value.trim().isEmpty();

}

/**

* Check whether a string is non-empty: returns true if it is not empty, otherwise false.

*

* @param value

* @return

*/

private static boolean isNotEmpty(String value) {

return !isEmpty(value);

}

}

package com.sxt.client;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import java.net.HttpURLConnection;

import java.net.URL;

import java.util.concurrent.BlockingQueue;

import java.util.concurrent.LinkedBlockingQueue;

import java.util.logging.Level;

import java.util.logging.Logger;

/**

* Monitor that sends url data: starts a separate thread to do the sending

*

* @author root

*

*/

public class SendDataMonitor {

// logger

private static final Logger log = Logger.getGlobal();

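// queue that stores the urls to send

private BlockingQueue<String> queue = new LinkedBlockingQueue<String>();

// the singleton instance; volatile so that double-checked locking publishes it safely

private static volatile SendDataMonitor monitor = null;

private SendDataMonitor() {

// private constructor for the singleton pattern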

}

/**

* Get the singleton monitor instance

*

* @return

*/

public static SendDataMonitor getSendDataMonitor() {

if (monitor == null) {

synchronized (SendDataMonitor.class) {

if (monitor == null) {

monitor = new SendDataMonitor();

Thread thread = new Thread(new Runnable() {

@Override

public void run() {

// the thread calls the actual processing method

SendDataMonitor.monitor.run();

}

});

// not a daemon thread while testing

// thread.setDaemon(true);

thread.start();

}

}

}

return monitor;

}

/**

* Add a url to the queue

*

* @param url

* @throws InterruptedException

*/

public static void addSendUrl(String url) throws InterruptedException {

getSendDataMonitor().queue.put(url);

}

/**

* Send the queued urls (loops forever in the worker thread)

*

*/

private void run() {

while (true) {

try {

String url = this.queue.take();

// actually send the url

HttpRequestUtil.sendData(url);

} catch (Throwable e) {

log.log(Level.WARNING, "failed to send url", e);

}

}

}

/**

* Inner class: http utility for sending data

*

* @author root

*

*/

public static class HttpRequestUtil {

/**

* Send a single url

*

* @param url

* @throws IOException

*/

public static void sendData(String url) throws IOException {

HttpURLConnection con = null;

BufferedReader in = null;

try {

URL obj = new URL(url); // create the url object

con = (HttpURLConnection) obj.openConnection(); // open the connection

// connection settings

con.setConnectTimeout(5000); // connect timeout (ms)

con.setReadTimeout(5000); // read timeout (ms)

con.setRequestMethod("GET"); // use a GET request

System.out.println("Sending url: " + url);

// send the request (getInputStream() actually performs it)

in = new BufferedReader(new InputStreamReader(

con.getInputStream()));

// TODO: consider here whether ...

} finally {

try {

if (in != null) {

in.close();

}

} catch (Throwable e) {

// nothing

}

try {

con.disconnect();

} catch (Throwable e) {

// nothing

}

}

}

}

}
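`SendDataMonitor` decouples the caller from the HTTP request with a `BlockingQueue` drained by one worker thread. A minimal sketch of the same pattern with the send step passed in as a `java.util.function.Consumer`, so it can be exercised without a running nginx; `AsyncUrlSender` is a hypothetical name, and the worker here is a daemon thread so it does not keep the JVM alive (the original deliberately leaves it non-daemon while testing).

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

// Sketch: queue + single worker thread; the actual send step is pluggable.
class AsyncUrlSender {
    private final BlockingQueue<String> queue = new LinkedBlockingQueue<>();
    private final Consumer<String> sender; // in real use, something that performs the HTTP GET

    AsyncUrlSender(Consumer<String> sender) {
        this.sender = sender;
        Thread worker = new Thread(this::drain, "send-data-worker");
        worker.setDaemon(true); // do not keep the JVM alive only for this thread
        worker.start();
    }

    // Called by the SDK methods: enqueue and return immediately.
    void add(String url) throws InterruptedException {
        queue.put(url);
    }

    // Worker loop: take urls in FIFO order and hand them to the sender.
    private void drain() {
        while (true) {
            try {
                sender.accept(queue.take());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return; // stop when interrupted
            } catch (RuntimeException e) {
                // one failed send must not kill the worker; real code would log it
            }
        }
    }
}
```

Because the object is created once and its fields are final, it can simply be stored in a `static final` field instead of using double-checked locking.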

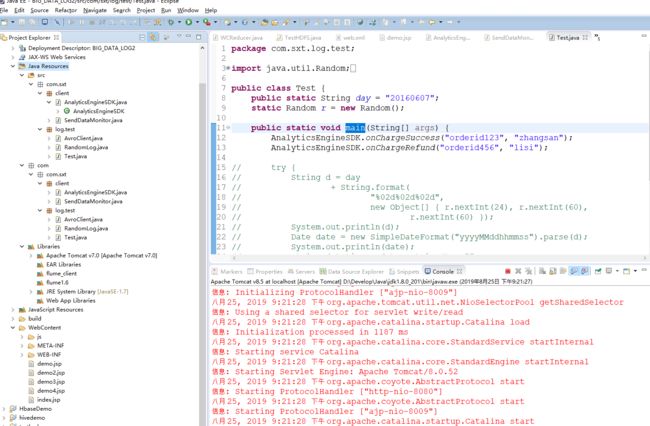

package com.sxt.log.test;

import java.util.Random;

import com.sxt.client.AnalyticsEngineSDK;

public class Test {

public static String day = "20160607";

static Random r = new Random();

public static void main(String[] args) {

AnalyticsEngineSDK.onChargeSuccess("orderid123", "zhangsan");

AnalyticsEngineSDK.onChargeRefund("orderid456", "lisi");

// try {

// String d = day

// + String.format(

// "%02d%02d%02d",

// new Object[] { r.nextInt(24), r.nextInt(60),

// r.nextInt(60) });

// System.out.println(d);

// Date date = new SimpleDateFormat("yyyyMMddhhmmss").parse(d);

// System.out.println(date);

// String datetime = date.getTime() + "";

// System.out.println(datetime);

// String prefix = datetime.substring(0, datetime.length() - 3);

// System.out.println(prefix + "." + r.nextInt(1000));

// System.out.println(new Date(1423634643000l));

// } catch (ParseException e) {

// e.printStackTrace();

// }

}

}

Run the project with Tomcat 8 and open the JSP pages; the requests show up in the log.

flume http://flume.apache.org/index.html

Parameters can also be supplied on the command line at run time.

Single-node configuration

Install Flume on node204. The official documentation is very detailed and worth consulting.

```shell
tar -zxvf apache-flume-1.6.0-bin.tar.gz -C /opt/sxt/
cd /opt/sxt/apache-flume-1.6.0-bin/conf/
cp flume-env.sh.template flume-env.sh
vi flume-env.sh
##   in flume-env.sh:
##   export JAVA_HOME=/usr/java/jdk1.8.0_221
vi /etc/profile
##   in /etc/profile:
##   export FLUME_HOME=/opt/sxt/apache-flume-1.6.0-bin
##   export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin:$HBASE_HOME/bin:$FLUME_HOME/bin
source /etc/profile
flume-ng
flume-ng version   ## check the version
cd /opt/
mkdir flumedir   ## directory for the Flume configuration files
cd flumedir/
vi option
```

Contents of `/opt/flumedir/option`:

```properties
# example.conf: A single-node Flume configuration

# Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source
# netcat source: receive lines over a TCP socket
# (comments must be on their own line; a trailing "## ..." would become part of the value)
a1.sources.r1.type = netcat
a1.sources.r1.bind = node204
a1.sources.r1.port = 44444

# Describe the sink
a1.sinks.k1.type = logger

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

```shell
flume-ng agent --conf-file option --name a1 -Dflume.root.logger=INFO,console   ## start Flume
```

The console shows the netcat source starting:

```text
2019-08-27 04:41:00,537 INFO node.Application: Starting Source r1
2019-08-27 04:41:00,542 INFO source.NetcatSource: Source starting
2019-08-27 04:41:00,650 INFO source.NetcatSource: Created serverSocket:sun.nio.ch.ServerSocketChannelImpl[/192.168.112.204:44444]
```

On node205, send socket data with telnet and check that the Flume agent on node204 receives it:

```shell
yum install -y telnet
[root@node205 ~]# telnet node204 44444
Trying 192.168.112.204...
Connected to node204.
Escape character is '^]'.   ## escape character: Ctrl-]
hello
OK
hi
OK
sxt
OK
nihao
OK
```

The lines sent through telnet appear in the Flume console on node204.

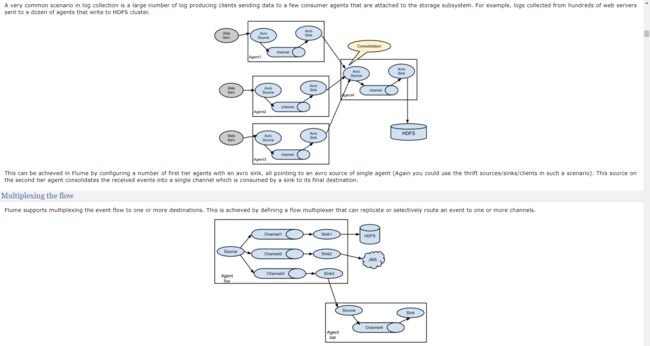
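The telnet test can also be scripted. A minimal Java sketch that opens a TCP connection to the netcat source, writes each message as one newline-terminated line and reads one reply per line; it assumes the source's default `ack-every-event = true`, under which Flume answers `OK` for every event. `NetcatSourceClient` is a hypothetical name.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.Writer;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Sketch: scripted version of "telnet node204 44444".
class NetcatSourceClient {
    // Sends each message as one line and collects one reply line per message.
    static List<String> send(String host, int port, List<String> messages) throws IOException {
        List<String> replies = new ArrayList<>();
        try (Socket socket = new Socket(host, port)) {
            socket.setSoTimeout(5000); // do not wait forever for an ack
            Writer out = new OutputStreamWriter(socket.getOutputStream(), StandardCharsets.UTF_8);
            BufferedReader in = new BufferedReader(
                    new InputStreamReader(socket.getInputStream(), StandardCharsets.UTF_8));
            for (String message : messages) {
                out.write(message + "\n");
                out.flush();
                replies.add(in.readLine()); // "OK" from Flume, assuming ack-every-event=true
            }
        }
        return replies;
    }
}
```

Usage against the agent above: `NetcatSourceClient.send("node204", 44444, Arrays.asList("hello", "hi"))`.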

Multi-node configuration

Avro (data is sent via RPC)

node204 (left), node205 (right)

On node204:

```shell
scp -r apache-flume-1.6.0-bin root@node205:`pwd`   ## copy Flume to node205 (run from /opt/sxt)
cd /opt/flumedir/
vi option
```

```properties
# example.conf: A single-node Flume configuration

# Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = node204
a1.sources.r1.port = 44444

# Describe the sink
a1.sinks.k1.type = avro
a1.sinks.k1.hostname = node205
a1.sinks.k1.port = 10086

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

On node205, configure the Flume environment variables, then create `option2`:

```shell
cd /opt/flumedir/
vi option2
```

```properties
# example.conf: A single-node Flume configuration

# Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source
a1.sources.r1.type = avro
a1.sources.r1.bind = node205
a1.sources.r1.port = 10086

# Describe the sink
a1.sinks.k1.type = logger

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

`a1.sources.r1.bind = node205` means the agent listens on a port of the local machine (node205) to receive data.

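Start the server side (node205) first:

```shell
flume-ng agent --conf-file option2 --name a1 -Dflume.root.logger=INFO,console
```

Then start the client side (node204):

```shell
flume-ng agent --conf-file option --name a1 -Dflume.root.logger=INFO,console
```

Use telnet on node205 to send messages to node204:44444; the messages show up in the Flume console on node205: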

```text
2019-08-27 05:16:11,893 INFO sink.LoggerSink: Event: { headers:{} body: 68 65 6C 6C 6F 0D hello. }
2019-08-27 05:16:11,893 INFO sink.LoggerSink: Event: { headers:{} body: 6E 69 6E 68 61 6F 08 08 08 0D ninhao.... }
2019-08-27 05:16:16,169 INFO sink.LoggerSink: Event: { headers:{} body: 77 6F 6D 65 6E 0D women. }
```

Exec source that reads the end of a file: `tail -F` monitors what is appended to it. Contents of `/opt/flumedir/option3` on node204:

```properties
# Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source
a1.sources.r1.type = exec
a1.sources.r1.command = tail -F /root/sxt.log

# Describe the sink
a1.sinks.k1.type = avro
a1.sinks.k1.hostname = node205
a1.sinks.k1.port = 10086

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

Start the `option2` agent on node205 as before, then on node204:

```shell
[root@node204 ~]# vi sxt.log
[root@node204 flumedir]# flume-ng agent --conf-file option3 --name a1 -Dflume.root.logger=INFO,console
## append to the file: each appended line shows up as "sxt" in the node205 console
[root@node204 ~]# echo 'sxt' >> sxt.log
[root@node204 ~]# echo 'sxt' >> sxt.log
[root@node204 ~]# echo 'sxt' >> sxt.log
[root@node204 ~]# echo 'sxt' >> sxt.log
```

vi tip: inside `vi sxt.log`, `:.,$y` yanks (copies) everything from the current line to the last line.
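What the exec source receives from `tail -F` is only the data appended since the last read. A rough Java sketch of that behaviour, assuming UTF-8 text and small files, and treating a file that has shrunk as truncated (restart from offset 0, a simplification of how `tail -F` follows rotated files); an incomplete trailing line is held back until its newline arrives. `IncrementalReader` is hypothetical and not part of Flume.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Sketch of "only the newly appended lines", roughly what tail -F feeds to the exec source.
class IncrementalReader {
    private long offset = 0; // bytes already consumed

    List<String> readNewLines(String path) throws IOException {
        List<String> lines = new ArrayList<>();
        try (RandomAccessFile file = new RandomAccessFile(path, "r")) {
            long length = file.length();
            if (length < offset) {
                offset = 0; // file shrank: assume it was truncated or replaced and start over
            }
            byte[] buf = new byte[(int) (length - offset)];
            file.seek(offset);
            file.readFully(buf);
            int end = -1; // index of the last '\n' in the new bytes
            for (int i = buf.length - 1; i >= 0; i--) {
                if (buf[i] == '\n') {
                    end = i;
                    break;
                }
            }
            if (end < 0) {
                return lines; // no complete line yet
            }
            String complete = new String(buf, 0, end, StandardCharsets.UTF_8);
            for (String line : complete.split("\n", -1)) {
                lines.add(line);
            }
            offset += end + 1;
        }
        return lines;
    }
}
```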

Using a directory as the source (spooling directory). Contents of `/opt/flumedir/option4`:

```properties
# Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source
a1.sources.r1.type = spooldir
a1.sources.r1.spoolDir = /root/abc/
# add a header holding the absolute path of the file each event came from
a1.sources.r1.fileHeader = true

# Describe the sink
a1.sinks.k1.type = logger

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

Create the spool directory before starting the agent (the source expects it to exist), then copy files into it:

```shell
[root@node204 ~]# mkdir abc
[root@node204 flumedir]# flume-ng agent --conf-file option4 --name a1 -Dflume.root.logger=INFO,console
[root@node204 ~]# echo 1111 >> aaa
[root@node204 ~]# echo 2222 >> aaa
[root@node204 ~]# echo 3333 >> bbb
[root@node204 ~]# echo 4444 >> bbb
[root@node204 ~]# cp aaa abc/
[root@node204 ~]# cp bbb abc/   ## the file contents now appear in the option4 agent's console
[root@node204 ~]# cd abc/
[root@node204 abc]# ls
aaa.COMPLETED  bbb.COMPLETED
```
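The `.COMPLETED` suffix in the listing above comes from the spooling directory source renaming each file once it has been fully ingested. A simplified Java sketch of that cycle, assuming each file is complete and no longer modified when it lands in the directory (Flume expects this as well); `SpoolDirSketch` is hypothetical and ignores Flume's other options such as deleting processed files or ignore patterns.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified spooling-directory cycle: read each new file once, then rename it with ".COMPLETED".
class SpoolDirSketch {
    static final String COMPLETED_SUFFIX = ".COMPLETED";

    // Returns file name -> lines (one event per line) for the files handled in this pass.
    static Map<String, List<String>> processOnce(Path dir) throws IOException {
        List<Path> pending = new ArrayList<>();
        try (DirectoryStream<Path> entries = Files.newDirectoryStream(dir)) {
            for (Path p : entries) {
                if (Files.isRegularFile(p) && !p.getFileName().toString().endsWith(COMPLETED_SUFFIX)) {
                    pending.add(p);
                }
            }
        }
        Collections.sort(pending); // deterministic order for the sketch
        Map<String, List<String>> events = new TreeMap<>();
        for (Path p : pending) {
            events.put(p.getFileName().toString(), Files.readAllLines(p, StandardCharsets.UTF_8));
            Files.move(p, p.resolveSibling(p.getFileName() + COMPLETED_SUFFIX));
        }
        return events;
    }
}
```

A second pass finds nothing new, which is why re-running the agent does not re-send the same files.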

HDFS as the sink. Contents of `/opt/flumedir/option5`:

```properties
# Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source
a1.sources.r1.type = exec
a1.sources.r1.command = tail -F /root/sxt.log

# Describe the sink
a1.sinks.k1.type = hdfs
a1.sinks.k1.hdfs.path = /flume/events/%y-%m-%d/%H%M/%S
a1.sinks.k1.hdfs.filePrefix = events-
a1.sinks.k1.hdfs.round = true
a1.sinks.k1.hdfs.roundValue = 10
a1.sinks.k1.hdfs.roundUnit = minute
a1.sinks.k1.hdfs.useLocalTimeStamp = true

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

```shell
[root@node204 flumedir]# flume-ng agent --conf-file option5 --name a1 -Dflume.root.logger=INFO,console   ## creates directories and files on HDFS (HDFS must be running)
## keep appending to the file; the data on HDFS grows
[root@node204 ~]# echo 'hello sxt ni hao' >> sxt.log
[root@node204 ~]# echo 'hello sxt ni hao' >> sxt.log
[root@node204 ~]# echo 'hello sxt ni hao' >> sxt.log
[root@node204 ~]# echo 'hello sxt ni hao' >> sxt.log
```
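With `round = true`, `roundValue = 10` and `roundUnit = minute`, the time escapes in `hdfs.path` are computed from a timestamp rounded down to a 10-minute boundary, so many events share one directory. A sketch of the resulting directory name in Java, assuming that rounding down also zeroes the seconds (so `%S` is always `00`) and that `useLocalTimeStamp = true` means the agent's local clock is used; `HdfsBucketPath` is hypothetical.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Sketch: directory for an event under
// hdfs.path = /flume/events/%y-%m-%d/%H%M/%S, round = true, roundValue = 10, roundUnit = minute.
class HdfsBucketPath {
    // %y-%m-%d/%H%M/%S expressed as a java.time pattern
    private static final DateTimeFormatter FORMAT = DateTimeFormatter.ofPattern("yy-MM-dd/HHmm/ss");

    static String pathFor(LocalDateTime eventTime) {
        // assumption: round down to the 10-minute boundary, dropping seconds and nanos
        LocalDateTime rounded = eventTime
                .withSecond(0)
                .withNano(0)
                .withMinute(eventTime.getMinute() - eventTime.getMinute() % 10);
        return "/flume/events/" + rounded.format(FORMAT);
    }
}
```

For an event at 2019-08-27 05:16:11 this gives `/flume/events/19-08-27/0510/00`.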

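One source with multiple sinks follows the same idea as Linux `tee`, which sends its input both to a file and to stdout:

```shell
[root@node204 ~]# cat aaa | tee a.log
1111
2222
[root@node204 ~]# cat a.log
1111
2222
```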

Kafka and Flume are usually used together.

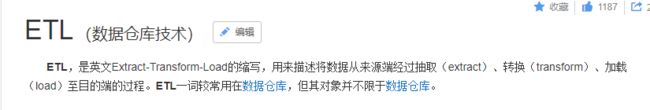

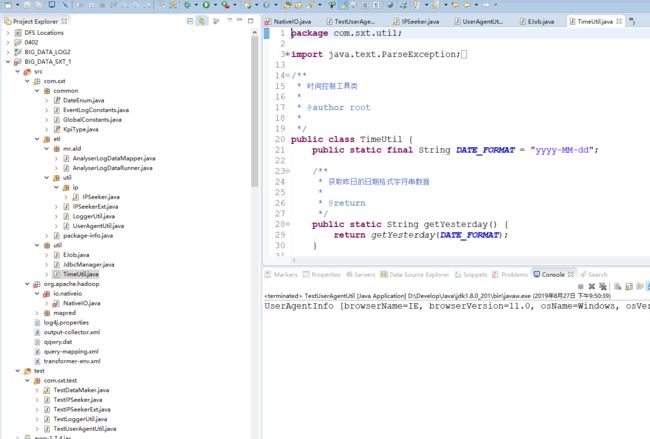

ETL program and operations

node204 configuration:

```shell
[root@node204 ~]# service nginx start
```

Contents of `/opt/flumedir/option6`:

```properties
a1.sources = r1
a1.sinks = k1
a1.channels = c1

a1.sources.r1.type = exec
a1.sources.r1.command = tail -F /opt/data/access.log

a1.sinks.k1.type=hdfs
a1.sinks.k1.hdfs.path=hdfs://mycluster/log/%Y%m%d
# 0 disables rolling by event count and by time interval; roll when a file reaches 10240 bytes
a1.sinks.k1.hdfs.rollCount=0
a1.sinks.k1.hdfs.rollInterval=0
a1.sinks.k1.hdfs.rollSize=10240
# close a file that has received no events for 5 seconds
a1.sinks.k1.hdfs.idleTimeout=5
# write plain text instead of SequenceFiles
a1.sinks.k1.hdfs.fileType=DataStream
a1.sinks.k1.hdfs.useLocalTimeStamp=true
# timeout (ms) for HDFS operations
a1.sinks.k1.hdfs.callTimeout=40000

a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```

Run the BIG_DATA_LOG2 project in Eclipse and open the JSP pages: the tracking code reports to nginx, and the data is uploaded to HDFS. Use `tail -f /opt/data/access.log` to watch the incoming requests.
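The roll settings in `option6` decide when the HDFS sink closes the current file and starts a new one. A simplified Java sketch of that decision, assuming the documented meanings: `0` disables the time-based (`rollInterval`) and count-based (`rollCount`) triggers, `rollSize` is in bytes, and `idleTimeout` closes a file after that many seconds without events. `RollPolicy` is hypothetical; Flume performs these checks in its own sink code, partly with timers.

```java
// Simplified roll decision for the HDFS sink settings in option6.
class RollPolicy {
    final long rollIntervalSeconds; // 0 = never roll based on time
    final long rollCount;           // 0 = never roll based on number of events
    final long rollSizeBytes;       // 0 = never roll based on file size
    final long idleTimeoutSeconds;  // 0 = never close idle files

    RollPolicy(long rollIntervalSeconds, long rollCount, long rollSizeBytes, long idleTimeoutSeconds) {
        this.rollIntervalSeconds = rollIntervalSeconds;
        this.rollCount = rollCount;
        this.rollSizeBytes = rollSizeBytes;
        this.idleTimeoutSeconds = idleTimeoutSeconds;
    }

    // Any enabled trigger that is reached starts a new file.
    boolean shouldRoll(long secondsSinceOpen, long eventsWritten, long bytesWritten) {
        if (rollIntervalSeconds > 0 && secondsSinceOpen >= rollIntervalSeconds) {
            return true;
        }
        if (rollCount > 0 && eventsWritten >= rollCount) {
            return true;
        }
        return rollSizeBytes > 0 && bytesWritten >= rollSizeBytes;
    }

    boolean shouldCloseIdle(long secondsSinceLastEvent) {
        return idleTimeoutSeconds > 0 && secondsSinceLastEvent >= idleTimeoutSeconds;
    }
}
```

With `new RollPolicy(0, 0, 10240, 5)`, only file size and idleness ever close a file, which keeps the number of small HDFS files down while still flushing quiet periods quickly.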

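Role of ETL here: filter out dirty data (drop requests that have no ? or no en parameter); resolve the IP to a region; parse the browser (user agent); store the result in HBase, with the row key (rowid) built using CRC32 encoding (incorporating the timestamp, event and user information).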
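A minimal sketch of the filtering and row-key steps, assuming each line follows the nginx my_format log format configured earlier ($remote_addr^A$msec^A$http_host^A$request_uri, fields separated by the ^A control character U+0001). The notes do not show how the real project combines timestamp, event and user info, so the key layout here (timestamp + "_" + CRC32 of event name and user id), the uid parameter, the e_pv value and the class name EtlSketch are all hypothetical; only the en parameter comes from the notes.

```java
import java.math.BigDecimal;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;
import java.util.zip.CRC32;

public class EtlSketch {
    // Field separator of the nginx my_format log line (^A, code point U+0001)
    static final String SEP = "\u0001";

    /** Parses one log line; returns null for dirty data (no '?' or no "en" parameter). */
    static Map<String, String> parse(String line) {
        String[] fields = line.split(SEP);
        if (fields.length < 4) return null;         // remote_addr, msec, http_host, request_uri
        String uri = fields[3];
        int q = uri.indexOf('?');
        if (q < 0) return null;                     // no query string: filtered out
        Map<String, String> params = new HashMap<>();
        for (String kv : uri.substring(q + 1).split("&")) {
            int eq = kv.indexOf('=');
            if (eq > 0) params.put(kv.substring(0, eq), kv.substring(eq + 1)); // no URL decoding here
        }
        if (!params.containsKey("en")) return null; // no event name: filtered out
        params.put("ip", fields[0]);                // to be resolved to a region, e.g. with IPSeeker
        params.put("msec", fields[1]);
        return params;
    }

    /** nginx $msec ("seconds.millis") to epoch milliseconds, without floating-point rounding. */
    static long toMillis(String msec) {
        return new BigDecimal(msec).movePointRight(3).longValue();
    }

    /** Hypothetical row key: timestamp + "_" + CRC32(event name + user id). */
    static String rowKey(long timestampMs, String event, String userId) {
        CRC32 crc = new CRC32();
        crc.update((event + userId).getBytes(StandardCharsets.UTF_8));
        return timestampMs + "_" + crc.getValue();
    }

    public static void main(String[] args) {
        String line = "192.168.1.10" + SEP + "1566961474.123" + SEP + "node204" + SEP + "/log.gif?en=e_pv&uid=u1";
        Map<String, String> p = parse(line);
        if (p != null) {
            System.out.println(rowKey(toMillis(p.get("msec")), p.get("en"), p.get("uid")));
        }
    }
}
```

Putting the timestamp first keeps one day's rows together in HBase, while the CRC32 suffix separates different events and users that share the same millisecond.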

Utility class: parsing the browser user agent

package com.sxt.etl.util;

import java.io.IOException;

import cz.mallat.uasparser.OnlineUpdater;

import cz.mallat.uasparser.UASparser;

/**

* Utility class for parsing a browser's user agent string; internally it simply calls the uasparser jar.

*

* @author root

*

*/

public class UserAgentUtil {

static UASparser uasParser = null;

// static block: initialize the uasParser object

static {

try {

uasParser = new UASparser(OnlineUpdater.getVendoredInputStream());

} catch (IOException e) {

e.printStackTrace();

}

}

/**

* Parses a browser user agent string and returns a UserAgentInfo object.

* Returns null if the user agent is empty, and also if parsing fails.

*

* @param userAgent

* the user agent string to parse

* @return the parsed information, or null

*/

public static UserAgentInfo analyticUserAgent(String userAgent) {

UserAgentInfo result = null;

if (uasParser != null && !(userAgent == null || userAgent.trim().isEmpty())) {

// Here the parser was initialized, and userAgent is non-null and not all whitespace

try {

cz.mallat.uasparser.UserAgentInfo info = null;

info = uasParser.parse(userAgent);

result = new UserAgentInfo();

result.setBrowserName(info.getUaFamily());

result.setBrowserVersion(info.getBrowserVersionInfo());

result.setOsName(info.getOsFamily());

result.setOsVersion(info.getOsName());

} catch (IOException e) {

// On an exception, return null

result = null;

}

}

return result;

}

/**

* Model object holding the parsed browser information

*

* @author root

*

*/

public static class UserAgentInfo {

private String browserName; // browser name

private String browserVersion; // browser version

private String osName; // operating system name

private String osVersion; // operating system version

public String getBrowserName() {

return browserName;

}

public void setBrowserName(String browserName) {

this.browserName = browserName;

}

public String getBrowserVersion() {

return browserVersion;

}

public void setBrowserVersion(String browserVersion) {

this.browserVersion = browserVersion;

}

public String getOsName() {

return osName;

}

public void setOsName(String osName) {

this.osName = osName;

}

public String getOsVersion() {

return osVersion;

}

public void setOsVersion(String osVersion) {

this.osVersion = osVersion;

}

@Override

public String toString() {

return "UserAgentInfo [browserName=" + browserName + ", browserVersion=" + browserVersion + ", osName="

+ osName + ", osVersion=" + osVersion + "]";

}

}

}

IP address lookup with qqwry.dat (the CZ88 IP database)

package com.sxt.etl.util.ip;

import java.io.FileNotFoundException;

import java.io.IOException;

import java.io.RandomAccessFile;

import java.io.UnsupportedEncodingException;

import java.nio.ByteOrder;

import java.nio.MappedByteBuffer;

import java.nio.channels.FileChannel;

import java.util.ArrayList;

import java.util.Hashtable;

import java.util.List;

/**

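* Reads the QQwry.dat file to look up the location of an IP. QQwry.dat is laid out as follows:

* I. File header, 8 bytes: 1. absolute offset of the first start IP, 4 bytes; 2. absolute offset of the last start IP, 4 bytes.

* II. "End address / country / area" record area: each record is a 4-byte IP address followed by two parts, 1. the country record and 2. the area record; the area record is optional.

* Both the country record and the area record come in two forms: 1. a 0-terminated string; 2. 4 bytes, whose first byte may be 0x1 or 0x2:

* a. 0x1 means an area record follows the absolute offset (note: after the absolute offset, not after these four bytes);

* b. 0x2 means no area record follows the absolute offset.

* For both 0x1 and 0x2, the remaining three bytes are the absolute in-file offset of the actual country name.

* In an area record the meaning of 0x1 and 0x2 is unclear, but if either appears it is always followed by a 3-byte offset; otherwise the record is a 0-terminated string.

* III. "Start address / end address offset" index area: each record is 7 bytes, sorted by start address in ascending order: a. start IP address, 4 bytes; b. absolute offset of the end IP record, 3 bytes.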

*

* Note: all IP addresses and offsets in this file are little-endian, whereas Java uses big-endian, so they must be converted.

*

* @author root

*/

public class IPSeeker {

// Fixed constants, such as the record length

private static final int IP_RECORD_LENGTH = 7;

private static final byte AREA_FOLLOWED = 0x01;

private static final byte NO_AREA = 0x2;

// Cache: when querying an IP, check the cache first to avoid redundant lookups

private Hashtable ipCache;

// Random-access file handle

private RandomAccessFile ipFile;

// Memory-mapped file

private MappedByteBuffer mbb;

// Singleton instance

private static IPSeeker instance = null;

// Absolute offsets of the first and last records in the start-IP index area

private long ipBegin, ipEnd;

// Temporary buffers reused for efficiency

private IPLocation loc;

private byte[] buf;

private byte[] b4;

private byte[] b3;

/**

* Non-public constructor; use getInstance() to obtain the singleton

*/

protected IPSeeker() {

ipCache = new Hashtable();

loc = new IPLocation();

buf = new byte[100];

b4 = new byte[4];

b3 = new byte[3];

try {

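java.net.URL ipFileUrl = IPSeeker.class.getResource("/qqwry.dat");

// getResource returns null (not an exception) when qqwry.dat is not on the classpath
if (ipFileUrl == null)
throw new FileNotFoundException("/qqwry.dat");

ipFile = new RandomAccessFile(ipFileUrl.getFile(), "r");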

} catch (FileNotFoundException e) {

System.out.println("IP database file not found; IP lookup will be unavailable");

ipFile = null;

}

// If the file was opened successfully, read the header

if (ipFile != null) {

try {

ipBegin = readLong4(0);

ipEnd = readLong4(4);

if (ipBegin == -1 || ipEnd == -1) {

ipFile.close();

ipFile = null;

}

} catch (IOException e) {

System.out.println("IP database file is malformed; IP lookup will be unavailable");

ipFile = null;

}

}

}

/**

* @return the singleton instance

*/

public static synchronized IPSeeker getInstance() {

if (instance == null) {

instance = new IPSeeker();

}

return instance;

}

/**

* Given a partial place name, returns all IP range records whose location contains the substring s

*

* @param s

* the place-name substring

* @return a List of IPEntry

*/

public List getIPEntriesDebug(String s) {

List ret = new ArrayList();

long endOffset = ipEnd + 4;

for (long offset = ipBegin + 4; offset <= endOffset; offset += IP_RECORD_LENGTH) {

// Read the offset of the end IP

long temp = readLong3(offset);

// If temp is not -1, read the location info for this IP

if (temp != -1) {

IPLocation loc = getIPLocation(temp);

// If this location contains the substring s, add the record to the List; otherwise continue

if (loc.country.indexOf(s) != -1 || loc.area.indexOf(s) != -1) {

IPEntry entry = new IPEntry();

entry.country = loc.country;

entry.area = loc.area;

// Get the start IP

readIP(offset - 4, b4);

entry.beginIp = IPSeekerUtils.getIpStringFromBytes(b4);

// Get the end IP

readIP(temp, b4);

entry.endIp = IPSeekerUtils.getIpStringFromBytes(b4);

// Add the record

ret.add(entry);

}

}

}

return ret;

}

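/**

* Given a partial place name, returns all IP range records whose location contains the substring s (memory-mapped version)

*

* @param s

* the place-name substring

* @return a List of IPEntry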

*/

public List getIPEntries(String s) {

List ret = new ArrayList();

try {

// Map the IP database file into memory

if (mbb == null) {

FileChannel fc = ipFile.getChannel();

mbb = fc.map(FileChannel.MapMode.READ_ONLY, 0, ipFile.length());

mbb.order(ByteOrder.LITTLE_ENDIAN);

}

int endOffset = (int) ipEnd;

for (int offset = (int) ipBegin + 4; offset <= endOffset; offset += IP_RECORD_LENGTH) {

int temp = readInt3(offset);

if (temp != -1) {

IPLocation loc = getIPLocation(temp);

// If this location contains the substring s, add the record to the List; otherwise continue

if (loc.country.indexOf(s) != -1

|| loc.area.indexOf(s) != -1) {

IPEntry entry = new IPEntry();

entry.country = loc.country;

entry.area = loc.area;

// Get the start IP

readIP(offset - 4, b4);

entry.beginIp = IPSeekerUtils.getIpStringFromBytes(b4);

// Get the end IP

readIP(temp, b4);

entry.endIp = IPSeekerUtils.getIpStringFromBytes(b4);

// Add the record

ret.add(entry);

}

}

}

} catch (IOException e) {

System.out.println(e.getMessage());

}

return ret;

}

/**

* Reads the 3-byte little-endian value at offset in the memory-mapped file (reads 4 bytes and keeps the low 3)

*

* @param offset

* @return

*/

private int readInt3(int offset) {

mbb.position(offset);

return mbb.getInt() & 0x00FFFFFF;

}

/**

* Reads the 3-byte value at the current position of the memory-mapped file (reads 4 bytes, so the position advances by 4)

*

* @return

*/

private int readInt3() {

return mbb.getInt() & 0x00FFFFFF;

}

/**

* Gets the country name for an IP

*

* @param ip

* the IP as a byte array

* @return the country name

*/

public String getCountry(byte[] ip) {

// Check that the IP database file is usable

if (ipFile == null)

return "错误的IP数据库文件";

// Convert the IP byte array to its string form (used as the cache key)

String ipStr = IPSeekerUtils.getIpStringFromBytes(ip);

// Check the cache first; search the file only on a cache miss

if (ipCache.containsKey(ipStr)) {

IPLocation loc = (IPLocation) ipCache.get(ipStr);

return loc.country;

} else {

IPLocation loc = getIPLocation(ip);

ipCache.put(ipStr, loc.getCopy());

return loc.country;

}

}

/**

* Gets the country name for an IP

*

* @param ip

* the IP in string form

* @return the country name

*/

public String getCountry(String ip) {

return getCountry(IPSeekerUtils.getIpByteArrayFromString(ip));

}

/**

* Gets the area name for an IP

*

* @param ip

* the IP as a byte array

* @return the area name

*/

public String getArea(byte[] ip) {

// Check that the IP database file is usable

if (ipFile == null)

return "错误的IP数据库文件";

// Convert the IP byte array to its string form (used as the cache key)

String ipStr = IPSeekerUtils.getIpStringFromBytes(ip);

// Check the cache first; search the file only on a cache miss

if (ipCache.containsKey(ipStr)) {

IPLocation loc = (IPLocation) ipCache.get(ipStr);

return loc.area;

} else {

IPLocation loc = getIPLocation(ip);

ipCache.put(ipStr, loc.getCopy());

return loc.area;

}

}

/**

* Gets the area name for an IP

*

* @param ip

* the IP in string form

* @return the area name

*/

public String getArea(String ip) {

return getArea(IPSeekerUtils.getIpByteArrayFromString(ip));

}

/**

* Searches the IP database for ip and returns an IPLocation; if the IP is not found, country and area are set to the "unknown" placeholders

*

* @param ip

* the IP to look up

* @return the IPLocation

*/

public IPLocation getIPLocation(byte[] ip) {

IPLocation info = null;

long offset = locateIP(ip);

if (offset != -1)

info = getIPLocation(offset);

if (info == null) {

info = new IPLocation();

info.country = "未知国家";

info.area = "未知地区";

}

return info;

}

/**

* Reads 4 bytes at offset as a long. The file is little-endian and Java is big-endian, so this helper does the conversion by hand.

*

* @param offset

* @return the value read, or -1 if reading the file failed

*/

private long readLong4(long offset) {

long ret = 0;

try {

ipFile.seek(offset);

ret |= (ipFile.readByte() & 0xFF);

ret |= ((ipFile.readByte() << 8) & 0xFF00);

ret |= ((ipFile.readByte() << 16) & 0xFF0000);

ret |= ((ipFile.readByte() << 24) & 0xFF000000L); // long mask, so a high byte >= 0x80 does not sign-extend

return ret;

} catch (IOException e) {

return -1;

}

}

/**

* Reads 3 bytes at offset as a long. The file is little-endian and Java is big-endian, so this helper does the conversion by hand.

*

* @param offset

* @return the value read, or -1 if reading the file failed

*/

private long readLong3(long offset) {

long ret = 0;

try {

ipFile.seek(offset);

ipFile.readFully(b3);

ret |= (b3[0] & 0xFF);

ret |= ((b3[1] << 8) & 0xFF00);

ret |= ((b3[2] << 16) & 0xFF0000);

return ret;

} catch (IOException e) {

return -1;

}

}

/**

* Reads 3 bytes at the current position and converts them to a long

*

* @return

*/

private long readLong3() {

long ret = 0;

try {

ipFile.readFully(b3);

ret |= (b3[0] & 0xFF);

ret |= ((b3[1] << 8) & 0xFF00);

ret |= ((b3[2] << 16) & 0xFF0000);

return ret;

} catch (IOException e) {

return -1;

}

}

/**

* Reads the 4-byte IP address at offset into the ip array. The file stores it little-endian;

* this method converts it to big-endian.

*

* @param offset

* @param ip

*/

private void readIP(long offset, byte[] ip) {

try {

ipFile.seek(offset);

ipFile.readFully(ip);

byte temp = ip[0];

ip[0] = ip[3];

ip[3] = temp;

temp = ip[1];

ip[1] = ip[2];

ip[2] = temp;

} catch (IOException e) {

System.out.println(e.getMessage());

}

}

/**

* Reads the 4-byte IP address at offset in the memory-mapped file into the ip array. The file stores it little-endian;

* this method converts it to big-endian.

*

* @param offset

* @param ip

*/

private void readIP(int offset, byte[] ip) {

mbb.position(offset);

mbb.get(ip);

byte temp = ip[0];

ip[0] = ip[3];

ip[3] = temp;

temp = ip[1];

ip[1] = ip[2];

ip[2] = temp;

}

/**

* Compares ip with beginIp; note that beginIp is big-endian

*

* @param ip

* the IP to look up

* @param beginIp

* the IP to compare against

* @return 0 if equal, 1 if ip is greater than beginIp, -1 if it is less.

*/

private int compareIP(byte[] ip, byte[] beginIp) {

for (int i = 0; i < 4; i++) {

int r = compareByte(ip[i], beginIp[i]);

if (r != 0)

return r;

}

return 0;

}

/**

* Compares two bytes as unsigned values

*

* @param b1

* @param b2

* @return 1 if b1 is greater than b2, 0 if equal, -1 if less

*/

private int compareByte(byte b1, byte b2) {

if ((b1 & 0xFF) > (b2 & 0xFF)) // greater than?

return 1;

else if ((b1 ^ b2) == 0)// equal?

return 0;

else

return -1;

}

/**

* Locates the record holding the country/area for ip and returns its absolute offset, using binary search.

*

* @param ip

* the IP to look up

* @return the offset of the end-IP record if found, otherwise -1

*/

private long locateIP(byte[] ip) {

long m = 0;

int r;

// Compare with the first IP record

readIP(ipBegin, b4);

r = compareIP(ip, b4);

if (r == 0)

return readLong3(ipBegin + 4); // offset of the end-IP record, as returned inside the loop

else if (r < 0)

return -1;

// Start the binary search

for (long i = ipBegin, j = ipEnd; i < j;) {

m = getMiddleOffset(i, j);

readIP(m, b4);

r = compareIP(ip, b4);

// log.debug(Utils.getIpStringFromBytes(b));

if (r > 0)

i = m;

else if (r < 0) {

if (m == j) {

j -= IP_RECORD_LENGTH;

m = j;

} else

j = m;

} else

return readLong3(m + 4);

}

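// When the loop ends, i and j must be equal. That record is the most likely match but not

// necessarily the right one, so check it; if it matches, return the absolute offset in the end-address area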

m = readLong3(m + 4);

readIP(m, b4);

r = compareIP(ip, b4);

if (r <= 0)

return m;

else

return -1;

}

/**

* Gets the offset of the record midway between the begin and end offsets

*

* @param begin

* @param end

* @return

*/

private long getMiddleOffset(long begin, long end) {

long records = (end - begin) / IP_RECORD_LENGTH;

records >>= 1;

if (records == 0)

records = 1;

return begin + records * IP_RECORD_LENGTH;

}

/**

* Given the offset of an IP country/area record, returns an IPLocation

*

* @param offset

* @return

*/

private IPLocation getIPLocation(long offset) {

try {

// Skip the 4-byte IP

ipFile.seek(offset + 4);

// Read the first byte to check whether it is a flag byte

byte b = ipFile.readByte();

if (b == AREA_FOLLOWED) {

// Read the country offset

long countryOffset = readLong3();

// Jump to that offset

ipFile.seek(countryOffset);

// Check the flag byte again, since this location may itself still be a redirect

b = ipFile.readByte();

if (b == NO_AREA) {

loc.country = readString(readLong3());

ipFile.seek(countryOffset + 4);

} else

loc.country = readString(countryOffset);

// Read the area record

loc.area = readArea(ipFile.getFilePointer());

} else if (b == NO_AREA) {

loc.country = readString(readLong3());

loc.area = readArea(offset + 8);

} else {

loc.country = readString(ipFile.getFilePointer() - 1);

loc.area = readArea(ipFile.getFilePointer());

}

return loc;

} catch (IOException e) {

return null;

}

}

/**

* @param offset

* @return

*/

private IPLocation getIPLocation(int offset) {

// Skip the 4-byte IP

mbb.position(offset + 4);

// Read the first byte to check whether it is a flag byte

byte b = mbb.get();

if (b == AREA_FOLLOWED) {

// Read the country offset

int countryOffset = readInt3();

// Jump to that offset

mbb.position(countryOffset);

// Check the flag byte again, since this location may itself still be a redirect

b = mbb.get();

if (b == NO_AREA) {

loc.country = readString(readInt3());

mbb.position(countryOffset + 4);

} else

loc.country = readString(countryOffset);

// Read the area record

loc.area = readArea(mbb.position());

} else if (b == NO_AREA) {

loc.country = readString(readInt3());

loc.area = readArea(offset + 8);

} else {

loc.country = readString(mbb.position() - 1);

loc.area = readArea(mbb.position());

}

return loc;

}

/**

* Parses the bytes starting at offset and reads an area name

*

* @param offset

* @return the area name

* @throws IOException

*/

private String readArea(long offset) throws IOException {

ipFile.seek(offset);

byte b = ipFile.readByte();

if (b == 0x01 || b == 0x02) {

long areaOffset = readLong3(offset + 1);

if (areaOffset == 0)

return "未知地区";

else

return readString(areaOffset);

} else

return readString(offset);

}

/**

* @param offset

* @return

*/

private String readArea(int offset) {

mbb.position(offset);

byte b = mbb.get();

if (b == 0x01 || b == 0x02) {

int areaOffset = readInt3();

if (areaOffset == 0)

return "未知地区";

else

return readString(areaOffset);

} else

return readString(offset);

}

/**

* Reads a 0-terminated string at offset

*

* @param offset

* @return the string read, or an empty string on error

*/

private String readString(long offset) {

try {

ipFile.seek(offset);

int i;

for (i = 0, buf[i] = ipFile.readByte(); buf[i] != 0; buf[++i] = ipFile

.readByte())

;

if (i != 0)

return IPSeekerUtils.getString(buf, 0, i, "GBK");

} catch (IOException e) {

System.out.println(e.getMessage());

}

return "";

}

/**

* Reads a 0-terminated string at offset in the memory-mapped file

*

* @param offset

* @return

*/

private String readString(int offset) {

try {

mbb.position(offset);

int i;

for (i = 0, buf[i] = mbb.get(); buf[i] != 0; buf[++i] = mbb.get())

;

if (i != 0)

return IPSeekerUtils.getString(buf, 0, i, "GBK");

} catch (IllegalArgumentException e) {

System.out.println(e.getMessage());

}

return "";

}

public String getAddress(String ip) {

// " CZ88.NET" is placeholder text in qqwry.dat; treat it as empty
String country = getCountry(ip);
if (" CZ88.NET".equals(country))
country = "";

String area = getArea(ip);
if (" CZ88.NET".equals(area))
area = "";

String address = country + " " + area;

return address.trim();

}

/**

* Holds information about an IP; currently just two fields, the country and the area where the IP is located

*

*

* @author swallow

*/

public class IPLocation {

public String country;

public String area;

public IPLocation() {

country = area = "";

}

public IPLocation getCopy() {

IPLocation ret = new IPLocation();

ret.country = country;

ret.area = area;

return ret;

}

}

/**

* One IP range record: the country and area plus the start IP and end IP

*

*

* @author root

*/

public class IPEntry {

public String beginIp;

public String endIp;

public String country;

public String area;

public IPEntry() {

beginIp = endIp = country = area = "";

}

public String toString() {

return this.area + " " + this.country + " IP range: " + this.beginIp

+ "-" + this.endIp;

}

}

/**

* Helper utilities

*

* @author root

*

*/

public static class IPSeekerUtils {

/**

* Converts an IP from its string form to its byte-array form

*

* @param ip

* the IP as a string

* @return the IP as a byte array

*/

public static byte[] getIpByteArrayFromString(String ip) {

byte[] ret = new byte[4];

java.util.StringTokenizer st = new java.util.StringTokenizer(ip,

".");

try {

ret[0] = (byte) (Integer.parseInt(st.nextToken()) & 0xFF);

ret[1] = (byte) (Integer.parseInt(st.nextToken()) & 0xFF);

ret[2] = (byte) (Integer.parseInt(st.nextToken()) & 0xFF);

ret[3] = (byte) (Integer.parseInt(st.nextToken()) & 0xFF);

} catch (Exception e) {

System.out.println(e.getMessage());

}

return ret;

}

/**

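* Converts a string between encodings; on failure, returns the original string

*

* @param s

* the original string

* @param srcEncoding

* the source encoding

* @param destEncoding

* the target encoding

* @return the converted string, or the original string on failure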

*/

public static String getString(String s, String srcEncoding,

String destEncoding) {

try {

return new String(s.getBytes(srcEncoding), destEncoding);

} catch (UnsupportedEncodingException e) {

return s;

}

}

/**

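* Converts a byte array to a string using the given encoding

*

* @param b

* the byte array

* @param encoding

* the encoding

* @return the decoded string; if encoding is unsupported, the platform default encoding is used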

*/

public static String getString(byte[] b, String encoding) {

try {

return new String(b, encoding);

} catch (UnsupportedEncodingException e) {

return new String(b);

}

}

/**

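* Converts part of a byte array to a string using the given encoding

*

* @param b

* the byte array

* @param offset

* the start position to convert

* @param len

* the number of bytes to convert

* @param encoding

* the encoding

* @return the decoded string; if encoding is unsupported, the platform default encoding is used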

*/

public static String getString(byte[] b, int offset, int len,

String encoding) {

try {

return new String(b, offset, len, encoding);

} catch (UnsupportedEncodingException e) {

return new String(b, offset, len);

}

}

/**

* @param ip

* the IP as a byte array

* @return the IP as a string

*/

public static String getIpStringFromBytes(byte[] ip) {

StringBuffer sb = new StringBuffer();

sb.append(ip[0] & 0xFF);

sb.append('.');

sb.append(ip[1] & 0xFF);

sb.append('.');

sb.append(ip[2] & 0xFF);

sb.append('.');

sb.append(ip[3] & 0xFF);

return sb.toString();

}

}

/**

* Returns a list of IP addresses, one per index record (the end IP of each range)

*

* @return

*/

public List getAllIp() {

List list = new ArrayList();

byte[] buf = new byte[4];

for (long i = ipBegin; i < ipEnd; i += IP_RECORD_LENGTH) {

try {

this.readIP(this.readLong3(i + 4), buf); // read this record's end IP into buf

String ip = IPSeekerUtils.getIpStringFromBytes(buf);

list.add(ip);

} catch (Exception e) {

// skip records that cannot be read

}

}

return list;

}

}
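readLong4, readLong3 and readIP convert little-endian values byte by byte, and locateIP binary-searches the sorted start IPs. For comparison, here is a minimal sketch of the same conversions using java.nio.ByteBuffer with ByteOrder.LITTLE_ENDIAN (which getIPEntries already uses for the mapped buffer), plus a binary search for the last start IP not greater than the query. It works on in-memory arrays rather than qqwry.dat, and LittleEndianSketch with its methods is a hypothetical name.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

public class LittleEndianSketch {
    /** 4 little-endian bytes at offset as an unsigned value (what readLong4 is meant to compute). */
    static long readUInt32LE(byte[] data, int offset) {
        return ByteBuffer.wrap(data, offset, 4).order(ByteOrder.LITTLE_ENDIAN).getInt() & 0xFFFFFFFFL;
    }

    /** 3 little-endian bytes at offset as an unsigned value (what readLong3 computes). */
    static long readUInt24LE(byte[] data, int offset) {
        return (data[offset] & 0xFFL)
                | (data[offset + 1] & 0xFFL) << 8
                | (data[offset + 2] & 0xFFL) << 16;
    }

    /** A 4-byte little-endian IP as stored in the file, as a dotted string. */
    static String ipFromLE(byte[] data, int offset) {
        long v = readUInt32LE(data, offset);
        return ((v >> 24) & 0xFF) + "." + ((v >> 16) & 0xFF) + "." + ((v >> 8) & 0xFF) + "." + (v & 0xFF);
    }

    /** Dotted IP string to an unsigned 32-bit value held in a long. */
    static long ipToLong(String ip) {
        long v = 0;
        for (String part : ip.split("\\.")) {
            v = (v << 8) | Integer.parseInt(part);
        }
        return v;
    }

    /** Index of the last range whose start IP is <= ip, or -1: the same search idea as locateIP. */
    static int findRange(long[] sortedStarts, long ip) {
        int lo = 0, hi = sortedStarts.length - 1, found = -1;
        while (lo <= hi) {
            int mid = (lo + hi) >>> 1;
            if (sortedStarts[mid] <= ip) {
                found = mid;   // candidate; look for a later start that still fits
                lo = mid + 1;
            } else {
                hi = mid - 1;
            }
        }
        return found;
    }

    public static void main(String[] args) {
        byte[] le = {10, 1, (byte) 168, (byte) 192}; // 192.168.1.10 stored little-endian
        System.out.println(ipFromLE(le, 0));         // 192.168.1.10
        long[] starts = {ipToLong("1.0.0.0"), ipToLong("10.0.0.0"), ipToLong("192.168.0.0")};
        System.out.println(findRange(starts, ipToLong("192.168.1.10"))); // 2
    }
}
```

As in locateIP, a real lookup must still check that the IP is not greater than the end IP of the range found before trusting the match.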

For testing, choosing JDK 1.7 lets the instructor-provided source code run.

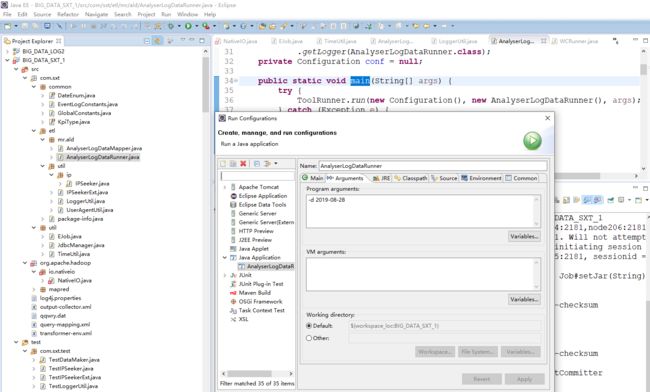

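Because the ETL step only filters and parses, with no aggregation, it writes directly to HBase; therefore no reduce phase is needed (a map-only job).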

[root@node204 ~]# hadoop-daemon.sh stop namenode   ## switch the active/standby NameNodes

Configure HDFS and ZooKeeper in the program:

@Override

public void setConf(Configuration conf) {

conf.set("fs.defaultFS", "hdfs://node203:8020");

// conf.set("yarn.resourcemanager.hostname", "node3");

conf.set("hbase.zookeeper.quorum", "node204,node205,node206");

this.conf = HBaseConfiguration.create(conf);

}

Run the main method, passing the parameter -d in Run Configurations to select the directory Flume created on HDFS, e.g. -d 2019-08-28 (a dash-separated date).
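Flume writes into hdfs://mycluster/log/%Y%m%d (option6), while the job is started with a dash-style date such as -d 2019-08-28, so the driver has to turn the argument into the compact directory name. A minimal sketch of that mapping, assuming the driver reads -d from args and builds the input path itself; DateArgSketch, dateArg and inputPathFor are hypothetical names, not the project's actual code.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

public class DateArgSketch {
    // Directory layout written by option6: hdfs.path=hdfs://mycluster/log/%Y%m%d
    private static final DateTimeFormatter DIR_FORMAT = DateTimeFormatter.ofPattern("yyyyMMdd");

    /** Returns the value following "-d" in args, or null if there is none. */
    static String dateArg(String[] args) {
        for (int i = 0; i + 1 < args.length; i++) {
            if ("-d".equals(args[i])) {
                return args[i + 1];
            }
        }
        return null;
    }

    /** "2019-08-28" to "/log/20190828"; LocalDate.parse expects the ISO yyyy-MM-dd form. */
    static String inputPathFor(String isoDate) {
        return "/log/" + LocalDate.parse(isoDate).format(DIR_FORMAT);
    }

    public static void main(String[] args) {
        String date = dateArg(args);
        if (date == null) {
            throw new IllegalArgumentException("usage: -d yyyy-MM-dd");
        }
        System.out.println(inputPathFor(date)); // with -d 2019-08-28 prints /log/20190828
    }
}
```

Parsing with LocalDate also rejects malformed dates early, before the job tries to read a non-existent HDFS directory.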