HBase is a database used for storing data, while MapReduce is a computation framework used for processing data.

Integration models:

HBase as the input of a MapReduce job

HBase as the output of a MapReduce job

HBase as both the input and the output
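As a rough map from each model to driver code, here is a small plain-Java summary with no HBase dependency. The enum and its names are hypothetical. It only records which `TableMapReduceUtil` helper each model typically calls: `initTableMapperJob` sets up a table as the input (TableInputFormat), and `initTableReducerJob` sets up a table as the output (TableOutputFormat).

```java
import java.util.Arrays;
import java.util.List;

/**
 * Plain-Java summary (no HBase jars needed) of the three integration models
 * and the TableMapReduceUtil helpers a driver typically calls for each.
 * The enum is a hypothetical illustration; the helper names are stored as strings.
 */
public class IntegrationModels {

    enum Model {
        // a table is read through TableInputFormat and fed to a TableMapper
        HBASE_AS_INPUT(Arrays.asList("TableMapReduceUtil.initTableMapperJob")),
        // Put/Delete mutations are written into a table through TableOutputFormat
        HBASE_AS_OUTPUT(Arrays.asList("TableMapReduceUtil.initTableReducerJob")),
        // read one table and write another, as in the student:stu_info -> t5 example
        HBASE_AS_INPUT_AND_OUTPUT(Arrays.asList(
                "TableMapReduceUtil.initTableMapperJob",
                "TableMapReduceUtil.initTableReducerJob"));

        final List<String> driverCalls;

        Model(List<String> driverCalls) {
            this.driverCalls = driverCalls;
        }
    }

    public static void main(String[] args) {
        for (Model m : Model.values()) {
            System.out.println(m + " -> " + m.driverCalls);
        }
    }
}
```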

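To make the HBase jars visible to MapReduce, you could copy them into Hadoop's lib directory, but this is generally avoided. Instead, set environment variables. `hbase mapredcp` prints the classpath of the HBase jars that a MapReduce job needs, and that classpath is appended to HADOOP_CLASSPATH:

```shell
export HBASE_HOME=/opt/modules/hbase-0.98.6-hadoop2
export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:`${HBASE_HOME}/bin/hbase mapredcp`
```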

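HBase ships several ready-made MapReduce programs in lib/hbase-server-0.98.6-hadoop2.jar. For example, rowcounter counts the rows of a table. The command below is run from ${HBASE_HOME}, because the jar path is relative: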

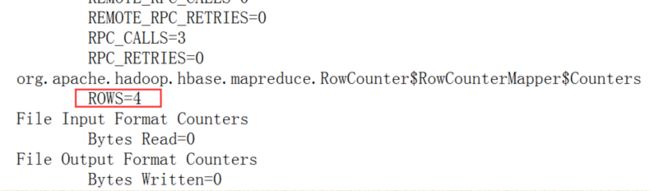

```shell
/opt/modules/hadoop-2.5.0/bin/yarn jar lib/hbase-server-0.98.6-hadoop2.jar rowcounter student:stu_info
```
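Note that rowcounter counts rows, not cells: a row with several columns is counted once. The sketch below uses plain Java with no HBase jars to illustrate that distinction. `SimpleCell`, `countRows` and the sample rowkeys and values are hypothetical. The real tool runs as a MapReduce job over the table, and this in-memory loop only mimics what it reports.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class RowCountSketch {

    /** Hypothetical stand-in for an HBase cell: rowkey, family, qualifier, value. */
    static final class SimpleCell {
        final String row;
        final String family;
        final String qualifier;
        final String value;

        SimpleCell(String row, String family, String qualifier, String value) {
            this.row = row;
            this.family = family;
            this.qualifier = qualifier;
            this.value = value;
        }
    }

    /** Counts distinct rowkeys: a row with many cells still counts as one row. */
    static long countRows(List<SimpleCell> cells) {
        Set<String> rows = new HashSet<>();
        for (SimpleCell c : cells) {
            rows.add(c.row);
        }
        return rows.size();
    }

    public static void main(String[] args) {
        List<SimpleCell> cells = Arrays.asList(
                new SimpleCell("10001", "info", "name", "zhangsan"),
                new SimpleCell("10001", "info", "age", "20"),
                new SimpleCell("10002", "info", "name", "lisi"));
        // prints cells=3, rows=2
        System.out.println("cells=" + cells.size() + ", rows=" + countRows(cells));
    }
}
```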

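Writing a custom HBase MapReduce job

Requirement: copy the name column of the info column family in table student:stu_info into table t5. The job below copies the source cells unchanged, so t5 must already exist and have an info column family.

Implementation order: map → reduce → driver

Data flow: input → map → shuffle → reduce → output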
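To make the five stages (input, map, shuffle, reduce, output) concrete for this requirement, here is a plain-Java simulation that runs without HBase or Hadoop. `SimpleCell`, `map`, `run` and the sample data are hypothetical, and in-memory maps stand in for the source and target tables. The TreeMap mimics how the shuffle hands keys to the reducer in sorted order. Rows without info:name are skipped, because HBase refuses to write a Put that has no columns.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class PipelineSketch {

    /** Hypothetical stand-in for an HBase cell: rowkey, family, qualifier, value. */
    static final class SimpleCell {
        final String row;
        final String family;
        final String qualifier;
        final String value;

        SimpleCell(String row, String family, String qualifier, String value) {
            this.row = row;
            this.family = family;
            this.qualifier = qualifier;
            this.value = value;
        }

        @Override
        public String toString() {
            return row + "/" + family + ":" + qualifier + "=" + value;
        }
    }

    /** map: keep only the info:name cells of one source row (one "Put"). */
    static List<SimpleCell> map(List<SimpleCell> rowCells) {
        List<SimpleCell> put = new ArrayList<>();
        for (SimpleCell c : rowCells) {
            if ("info".equals(c.family) && "name".equals(c.qualifier)) {
                put.add(c);
            }
        }
        return put;
    }

    /** input -> map -> shuffle -> reduce -> output over an in-memory "table". */
    static Map<String, List<SimpleCell>> run(Map<String, List<SimpleCell>> sourceTable) {
        // map + shuffle: group the non-empty map outputs by rowkey, sorted by key
        TreeMap<String, List<List<SimpleCell>>> shuffled = new TreeMap<>();
        for (Map.Entry<String, List<SimpleCell>> row : sourceTable.entrySet()) {
            List<SimpleCell> put = map(row.getValue());
            if (!put.isEmpty()) { // an empty Put would be rejected by HBase
                shuffled.computeIfAbsent(row.getKey(), k -> new ArrayList<>()).add(put);
            }
        }
        // reduce + output: write every Put of each key into the target table unchanged
        Map<String, List<SimpleCell>> targetTable = new LinkedHashMap<>();
        for (Map.Entry<String, List<List<SimpleCell>>> group : shuffled.entrySet()) {
            for (List<SimpleCell> put : group.getValue()) {
                targetTable.computeIfAbsent(group.getKey(), k -> new ArrayList<>()).addAll(put);
            }
        }
        return targetTable;
    }

    public static void main(String[] args) {
        Map<String, List<SimpleCell>> source = new LinkedHashMap<>();
        source.put("10002", Arrays.asList(new SimpleCell("10002", "info", "name", "lisi")));
        source.put("10001", Arrays.asList(
                new SimpleCell("10001", "info", "name", "zhangsan"),
                new SimpleCell("10001", "info", "age", "20")));
        source.put("10003", Arrays.asList(new SimpleCell("10003", "info", "age", "22")));
        // prints {10001=[10001/info:name=zhangsan], 10002=[10002/info:name=lisi]}
        System.out.println(run(source));
    }
}
```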

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
import org.apache.hadoop.hbase.mapreduce.TableMapper;
import org.apache.hadoop.hbase.mapreduce.TableReducer;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

public class HMr extends Configured implements Tool {

    /**
     * map
     * KEYIN = ImmutableBytesWritable (rowkey), VALUEIN = Result (the row),
     * KEYOUT = ImmutableBytesWritable, VALUEOUT = Put
     */
    public static class rdMapper extends TableMapper<ImmutableBytesWritable, Put> {

        @Override
        protected void map(ImmutableBytesWritable key, Result value, Context context)
                throws IOException, InterruptedException {
            Put put = new Put(key.get());
            for (Cell cell : value.rawCells()) {
                // keep only cells in family "info" with qualifier "name"
                if ("info".equals(Bytes.toString(CellUtil.cloneFamily(cell)))
                        && "name".equals(Bytes.toString(CellUtil.cloneQualifier(cell)))) {
                    put.add(cell);
                }
            }
            // HBase rejects a Put with no columns, so skip rows without info:name
            if (!put.isEmpty()) {
                context.write(key, put);
            }
        }
    }

    /**
     * reduce
     * KEYIN = ImmutableBytesWritable, VALUEIN = Put, KEYOUT = ImmutableBytesWritable;
     * the output value is a Mutation (here a Put) written into the output table
     */
    public static class wrReduce
            extends TableReducer<ImmutableBytesWritable, Put, ImmutableBytesWritable> {

        @Override
        protected void reduce(ImmutableBytesWritable key, Iterable<Put> values, Context context)
                throws IOException, InterruptedException {
            for (Put put : values) {
                // If t5's family were "base", a new Put would be built instead, e.g.:
                // List<Cell> cells = put.get(Bytes.toBytes("info"), Bytes.toBytes("name"));
                // Put put1 = new Put(key.get());
                // for (Cell cell : cells) {
                //     put1.add(Bytes.toBytes("base"), CellUtil.cloneQualifier(cell), CellUtil.cloneValue(cell));
                // }
                context.write(key, put);
            }
        }
    }

    /*
     * This MapReduce job only fits the current requirement.
     * Can the copied cells be inserted into the target table? Only if t5 has a
     * column family with the same name (info), because the cells are copied unchanged.
     * Suppose t5's column family were not called info but base: then you would take
     * the rowkey, qualifier and value of each source cell, build new cells under
     * base, and add them to a new Put instance.
     */

    /** driver */
    @Override
    public int run(String[] args) throws Exception {
        Configuration conf = super.getConf();
        Job job = Job.getInstance(conf, "mr-hbase");
        job.setJarByClass(HMr.class);

        // map and reduce: input and output
        Scan scan = new Scan();
        scan.addColumn(Bytes.toBytes("info"), Bytes.toBytes("name")); // read only what is needed
        scan.setCaching(500);       // larger scanner caching, as suggested for MapReduce scans
        scan.setCacheBlocks(false); // don't fill the block cache during a full-table scan

        TableMapReduceUtil.initTableMapperJob(
                "student:stu_info",           // input table
                scan,                         // Scan instance to control CF and attribute selection
                rdMapper.class,               // mapper class
                ImmutableBytesWritable.class, // mapper output key
                Put.class,                    // mapper output value
                job);

        TableMapReduceUtil.initTableReducerJob(
                "t5",           // output table
                wrReduce.class, // reducer class
                job);
        job.setNumReduceTasks(1);

        boolean isSuccess = job.waitForCompletion(true);
        return isSuccess ? 0 : 1;
    }

    public static void main(String[] args) throws Exception {
        // create an HBase configuration (reads hbase-site.xml from the classpath)
        Configuration conf = HBaseConfiguration.create();
        // run the job through ToolRunner
        int status = ToolRunner.run(conf, new HMr(), args);
        // exit with the job's status
        System.exit(status);
    }
}
```
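The comments in the reducer describe the case where t5's column family is base rather than info. The rebuild step is sketched here in plain Java, with no HBase jars, so it can be run on its own. `SimpleCell`, `rebuildUnderFamily` and the sample data are hypothetical. In the real 0.98 reducer, the equivalent would be roughly what the commented-out lines suggest: create `new Put(key.get())` and call `put1.add(Bytes.toBytes("base"), CellUtil.cloneQualifier(cell), CellUtil.cloneValue(cell))` for each source cell. t5 would then need a base family.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class RenameFamilySketch {

    /** Hypothetical stand-in for an HBase cell: rowkey, family, qualifier, value. */
    static final class SimpleCell {
        final String row;
        final String family;
        final String qualifier;
        final String value;

        SimpleCell(String row, String family, String qualifier, String value) {
            this.row = row;
            this.family = family;
            this.qualifier = qualifier;
            this.value = value;
        }

        @Override
        public String toString() {
            return row + "/" + family + ":" + qualifier + "=" + value;
        }
    }

    /**
     * Builds the cells of a "new Put": each source cell matching srcFamily:qualifier
     * is copied with the same rowkey, qualifier and value, but under targetFamily.
     */
    static List<SimpleCell> rebuildUnderFamily(List<SimpleCell> rowCells, String srcFamily,
                                               String qualifier, String targetFamily) {
        List<SimpleCell> newPut = new ArrayList<>();
        for (SimpleCell c : rowCells) {
            if (srcFamily.equals(c.family) && qualifier.equals(c.qualifier)) {
                newPut.add(new SimpleCell(c.row, targetFamily, c.qualifier, c.value));
            }
        }
        return newPut;
    }

    public static void main(String[] args) {
        List<SimpleCell> row = Arrays.asList(
                new SimpleCell("10001", "info", "name", "zhangsan"),
                new SimpleCell("10001", "info", "age", "20"));
        // prints [10001/base:name=zhangsan]
        System.out.println(rebuildUnderFamily(row, "info", "name", "base"));
    }
}
```

Keeping the source and target family names as parameters is what lifts the "only fits the current requirement" limitation noted in the code comments.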