Implementing AlexNet in TensorFlow

I. Introduction to AlexNet

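In 2012, Hinton's student Alex Krizhevsky proposed AlexNet, a deep convolutional neural network that can be regarded as a deeper and wider version of LeNet. AlexNet introduced several new techniques and was the first CNN to successfully apply ReLU, Dropout and LRN. It has 630 million connections, 60 million parameters and 650,000 neurons, with 5 convolutional layers (3 of which are followed by max pooling layers) and 3 fully connected layers at the end. AlexNet won the highly competitive ILSVRC 2012 by a wide margin, bringing the top-5 error rate down to 16.4%. As the first major success after the long low period of neural network research, AlexNet established the dominance of deep learning in computer vision and also drove its expansion into speech recognition, natural language processing, reinforcement learning and other fields.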

The main new techniques in AlexNet are:

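- Successfully used ReLU as the activation function of a CNN and verified that it outperforms sigmoid in deeper networks, alleviating the vanishing-gradient problem that sigmoid suffers from when the network gets deep.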

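- Used Dropout during training to randomly ignore a fraction of the neurons and so avoid overfitting. AlexNet applies dropout in its first two fully connected layers.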

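- Used overlapping max pooling. Earlier CNNs commonly used average pooling; AlexNet uses max pooling instead, avoiding the blurring effect of average pooling. AlexNet also makes the stride smaller than the pooling window (3×3 windows with stride 2), so the outputs of the pooling layer overlap and cover one another, which makes the features richer.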

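- Introduced the LRN (Local Response Normalization) layer, which creates a competition mechanism among the activities of neighboring neurons: relatively large responses become relatively stronger, while neurons with smaller responses are suppressed, improving the model's ability to generalize.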

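- Used CUDA to accelerate the training of the deep CNN, exploiting the parallel computing power of GPUs to handle the large number of matrix operations involved in training.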

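- Data augmentation: random 224×224 regions (and their horizontal reflections) are cropped from the 256×256 original images, which is equivalent to increasing the amount of data by a factor of 2048 ((256 - 224)² × 2 = 2048). The paper also performs PCA on the RGB values of the images and adds a Gaussian perturbation with standard deviation 0.1 to the principal components, i.e. some noise; this trick lowers the top-1 error rate by over 1%.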
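To make the LRN bullet concrete, here is a minimal pure-Python sketch of cross-channel LRN at a single spatial position. It follows the formula documented for TensorFlow's `tf.nn.lrn`: each channel is divided by `(bias + alpha * sqr_sum) ** beta`, where `sqr_sum` is the sum of squares over channels `d - depth_radius` to `d + depth_radius`. The defaults copy the values passed to `tf.nn.lrn` in the code later in this article (`depth_radius=4`, `bias=1.0`, `alpha=0.001/9`, `beta=0.75`); the helper name `lrn_channels` is hypothetical, and the paper's own constants (k = 2, n = 5, α = 1e-4, β = 0.75) differ.

```python
def lrn_channels(values, depth_radius=4, bias=1.0, alpha=0.001 / 9, beta=0.75):
    """Cross-channel LRN for the channel vector at one (row, col) position.

    Hypothetical helper that follows the formula in the tf.nn.lrn docs:
        sqr_sum[d] = sum(values[d - depth_radius : d + depth_radius + 1] ** 2)
        out[d]     = values[d] / (bias + alpha * sqr_sum[d]) ** beta
    """
    n = len(values)
    out = []
    for d, v in enumerate(values):
        lo = max(0, d - depth_radius)        # clip the window at the first channel
        hi = min(n, d + depth_radius + 1)    # ... and at the last channel
        sqr_sum = sum(x * x for x in values[lo:hi])
        out.append(v / (bias + alpha * sqr_sum) ** beta)
    return out


# A weak channel next to a strong one (index 0) is damped more
# than an equally weak channel with no strong neighbor (index 4).
acts = [1.0, 10.0, 1.0, 0.0, 1.0]
normed = lrn_channels(acts, depth_radius=1, alpha=0.01)
print(normed)
```

The strong response at channel 1 inflates `sqr_sum` for its neighbors, which is the "competition" between nearby neurons described above.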

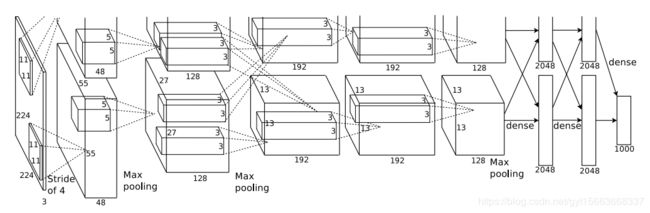

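AlexNet has 8 layers with trainable parameters (not counting pooling and LRN layers): the first 5 are convolutional layers and the last 3 are fully connected layers. The last layer is a 1000-way softmax used for classification. LRN layers follow the first and second convolutional layers, and max pooling layers follow the two LRN layers and the last convolutional layer. ReLU is applied after each of these layers except the last one, whose output goes to the softmax. The paper trained the network on two GPUs. The architecture is shown in the figure above.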
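The figure of 60 million parameters can be checked by counting weights and biases layer by layer. The sketch below is plain Python arithmetic, assuming the layer sizes of the original paper: 96, 256, 384, 384 and 256 convolution kernels, a 6×6×256 input to the first fully connected layer, 4096, 4096 and 1000 fully connected units, and the two-GPU split under which conv2, conv4 and conv5 only see the half of the previous layer's channels that lives on the same GPU. The helper names `conv_params` and `fc_params` are illustrative.

```python
def conv_params(kh, kw, in_channels, out_channels, groups=1):
    """Weights plus biases of a conv layer whose input channels are split into `groups`."""
    return kh * kw * (in_channels // groups) * out_channels + out_channels


def fc_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


# Layer sizes from the AlexNet paper; groups=2 models the two-GPU split.
conv_layers = {
    'conv1': conv_params(11, 11, 3, 96),
    'conv2': conv_params(5, 5, 96, 256, groups=2),
    'conv3': conv_params(3, 3, 256, 384),          # conv3 connects across both GPUs
    'conv4': conv_params(3, 3, 384, 384, groups=2),
    'conv5': conv_params(3, 3, 384, 256, groups=2),
}
fc_layers = {
    'fc6': fc_params(6 * 6 * 256, 4096),
    'fc7': fc_params(4096, 4096),
    'fc8': fc_params(4096, 1000),
}

conv_total = sum(conv_layers.values())
fc_total = sum(fc_layers.values())
print('conv layers: %d' % conv_total)               # 2334080
print('fc layers:   %d' % fc_total)                 # 58631144
print('total:       %d' % (conv_total + fc_total))  # 60965224
```

Over 96% of the parameters sit in the three fully connected layers, while the five convolutional layers hold only about 2.3 million.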

II. Implementing AlexNet

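In this section we implement the basic network architecture, but we do not train the network on a training set. Instead, we feed it random images and benchmark the forward and backward passes of AlexNet. Only the convolutional part (up to pool5) is built; the fully connected layers are omitted.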
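The shapes that `print_activations` reports in the code below can be predicted by hand. Under TensorFlow's padding rules, a `'SAME'` convolution or pooling produces ceil(n / stride) outputs per spatial dimension and a `'VALID'` one produces ceil((n - k + 1) / stride). This stdlib-only sketch (the `out_size` helper is hypothetical) walks a 224×224 input through the layers defined in `inference`.

```python
import math


def out_size(n, k, stride, padding):
    """Spatial output size under TensorFlow's 'SAME' / 'VALID' padding rules."""
    if padding == 'SAME':
        return math.ceil(n / stride)
    return math.ceil((n - k + 1) / stride)  # 'VALID'


# (name, kernel size, stride, padding, output channels) as used in inference().
# LRN layers keep the shape unchanged, so they are left out.
layers = [
    ('conv1', 11, 4, 'SAME', 64),
    ('pool1', 3, 2, 'VALID', 64),
    ('conv2', 5, 1, 'SAME', 192),
    ('pool2', 3, 2, 'VALID', 192),
    ('conv3', 3, 1, 'SAME', 384),
    ('conv4', 3, 1, 'SAME', 256),
    ('conv5', 3, 1, 'SAME', 256),
    ('pool5', 3, 2, 'VALID', 256),
]

size = 224
shapes = {}
for name, k, stride, padding, channels in layers:
    size = out_size(size, k, stride, padding)
    shapes[name] = [32, size, size, channels]  # batch_size = 32 as in the code
    print(name, shapes[name])
```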

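```python
from datetime import datetime
import math
import time

# The code uses the TF1 graph API (tf.Session, tf.gradients, ...).
# Importing it through tf.compat.v1 lets it also run under TensorFlow 2.x.
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

# Batch size and number of timed batches
batch_size = 32
num_batches = 100


# Show the structure of each layer: print the name and output shape
# of every convolutional or pooling layer
def print_activations(t):
    print(t.op.name, ' ', t.get_shape().as_list())
```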

```python
# AlexNet's network structure: inference builds the graph from images to pool5
def inference(images):
    """
    Input: a batch of images
    Output: the fifth (last) pooling layer and the list of parameters
    """
    parameters = []

    # Convolutional layer 1
    with tf.name_scope('conv1') as scope:  # ops created here are named conv1/xxx
        # 11x11 kernels, 3 -> 64 channels, initialized with stddev 0.1
        kernel = tf.Variable(tf.truncated_normal([11, 11, 3, 64], dtype=tf.float32, stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(images, kernel, [1, 4, 4, 1], padding='SAME')  # convolve with stride 4
        biases = tf.Variable(tf.constant(0.0, shape=[64], dtype=tf.float32), trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)  # add the bias
        conv1 = tf.nn.relu(bias, name=scope)  # ReLU activation
        print_activations(conv1)  # print the name and shape of conv1
        parameters += [kernel, biases]  # collect the kernel and bias

    # Local response normalization, then overlapping max pooling (3x3 window, stride 2)
    lrn1 = tf.nn.lrn(conv1, 4, bias=1.0, alpha=0.001 / 9, beta=0.75, name='lrn1')
    pool1 = tf.nn.max_pool(lrn1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='VALID', name='pool1')
    print_activations(pool1)  # print the name and shape of pool1

    # Convolutional layer 2: 5x5 kernels, 64 -> 192 channels
    with tf.name_scope('conv2') as scope:
        kernel = tf.Variable(tf.truncated_normal([5, 5, 64, 192], dtype=tf.float32, stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(pool1, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[192], dtype=tf.float32), trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv2 = tf.nn.relu(bias, name=scope)
        print_activations(conv2)
        parameters += [kernel, biases]

    lrn2 = tf.nn.lrn(conv2, 4, bias=1.0, alpha=0.001 / 9, beta=0.75, name='lrn2')
    pool2 = tf.nn.max_pool(lrn2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='VALID', name='pool2')
    print_activations(pool2)

    # Convolutional layer 3: 3x3 kernels, 192 -> 384 channels
    with tf.name_scope('conv3') as scope:
        kernel = tf.Variable(tf.truncated_normal([3, 3, 192, 384], dtype=tf.float32, stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(pool2, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[384], dtype=tf.float32), trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv3 = tf.nn.relu(bias, name=scope)
        print_activations(conv3)
        parameters += [kernel, biases]

    # Convolutional layer 4: 3x3 kernels, 384 -> 256 channels
    with tf.name_scope('conv4') as scope:
        kernel = tf.Variable(tf.truncated_normal([3, 3, 384, 256], dtype=tf.float32, stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(conv3, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[256], dtype=tf.float32), trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv4 = tf.nn.relu(bias, name=scope)
        print_activations(conv4)
        parameters += [kernel, biases]

    # Convolutional layer 5: 3x3 kernels, 256 -> 256 channels
    with tf.name_scope('conv5') as scope:
        kernel = tf.Variable(tf.truncated_normal([3, 3, 256, 256], dtype=tf.float32, stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(conv4, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[256], dtype=tf.float32), trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv5 = tf.nn.relu(bias, name=scope)
        print_activations(conv5)
        parameters += [kernel, biases]

    # Final overlapping max pooling (no LRN after conv5)
    pool5 = tf.nn.max_pool(conv5, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='VALID', name='pool5')
    print_activations(pool5)

    return pool5, parameters
```
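The `pool1`, `pool2` and `pool5` layers in `inference` all use a 3×3 window with stride 2, which is the overlapping max pooling described in the introduction. A pure-Python sketch on a 5×5 grid (the helper `max_pool_2d` is hypothetical and only handles `'VALID'` padding) shows that neighboring windows share a row or column: the center cell (2, 2) falls inside all four windows.

```python
def max_pool_2d(grid, k=3, stride=2):
    """'VALID' max pooling of a 2-D list with a k x k window."""
    rows, cols = len(grid), len(grid[0])
    out = []
    for r in range(0, rows - k + 1, stride):
        out.append([max(grid[r + i][c + j] for i in range(k) for j in range(k))
                    for c in range(0, cols - k + 1, stride)])
    return out


# 5x5 grid holding the values 0..24 row by row.
grid = [[5 * r + c for c in range(5)] for r in range(5)]

# Windows start at rows/cols 0 and 2, so with k=3 they overlap on row 2 and column 2.
pooled = max_pool_2d(grid)
print(pooled)
```

With stride equal to the window size (3) the windows would not overlap; choosing a stride smaller than the window is what makes AlexNet's pooling "overlapping".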

```python
# Measure the time of each batch and report mean and standard deviation
def time_tensorflow_run(session, target, info_string):
    num_steps_burn_in = 10  # warm-up iterations, not counted
    total_duration = 0.0  # sum of durations
    total_duration_squared = 0.0  # sum of squared durations
    for i in range(num_batches + num_steps_burn_in):
        start_time = time.time()
        _ = session.run(target)
        duration = time.time() - start_time
        if i >= num_steps_burn_in:
            if not i % 10:
                print('%s: step %d, duration = %.3f' % (datetime.now(), i - num_steps_burn_in, duration))
            total_duration += duration
            total_duration_squared += duration * duration
    mn = total_duration / num_batches  # mean time per batch
    vr = total_duration_squared / num_batches - mn * mn  # variance = E[t^2] - E[t]^2
    sd = math.sqrt(max(vr, 0.0))  # guard against a tiny negative value caused by rounding
    print('%s: %s across %d steps, %.3f +/- %.3f sec/batch' % (datetime.now(), info_string, num_batches, mn, sd))
```
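`time_tensorflow_run` computes the standard deviation without storing each duration, using the identity Var(t) = E[t^2] - (E[t])^2 on two running sums. This stdlib-only sketch applies the same one-pass update to a made-up list of durations (illustrative numbers, not measurements) and checks the result against `statistics.pstdev`.

```python
import math
import statistics

durations = [0.45, 0.46, 0.44, 0.47, 0.45]  # illustrative numbers, not measurements

total = 0.0
total_sq = 0.0
for d in durations:  # same running sums as time_tensorflow_run
    total += d
    total_sq += d * d

n = len(durations)
mean = total / n
var = total_sq / n - mean * mean  # E[t^2] - E[t]^2 (population variance)
sd = math.sqrt(max(var, 0.0))     # rounding can push var slightly below zero

print('%.3f +/- %.4f sec/batch' % (mean, sd))
print(statistics.pstdev(durations))  # reference value computed directly
```

The one-pass formula can lose precision when the variance is tiny compared with the squared mean, which is why the sketch clamps `var` at zero; when all durations can be kept in memory, `statistics.pstdev` is the more robust choice.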

# 主函数

def run_benchmark():

with tf.Graph().as_default():

image_size = 224

images = tf.Variable(tf.random_normal([batch_size,

image_size,

image_size, 3], dtype=tf.float32, stddev=1e-1))

pool5, parameters = inference(images)

init = tf.global_variables_initializer()

sess = tf.Session()

sess.run(init)

time_tensorflow_run(sess, pool5, 'Forword')

objective = tf.nn.l2_loss(pool5)

grad = tf.gradients(objective, parameters)

time_tensorflow_run(sess, grad, 'Forward-backward')

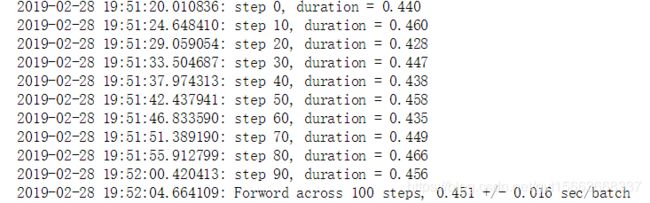

run_benchmark()上面是forward的计算时间。每轮大约是0.451秒,我们用的是CPU

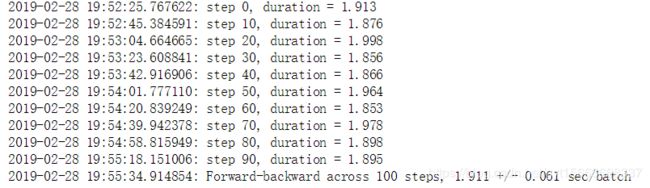

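The output above shows the forward-backward time per batch, about 1.911 seconds. With LRN layers the network takes about three times as long as without them, while LRN has little effect on the final accuracy. For this reason, networks after AlexNet rarely use LRN.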
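The per-batch timings can also be read as throughput. Using the figures reported above (batch size 32, about 0.451 s per forward pass and about 1.911 s per forward-backward pass on a CPU), a quick calculation gives images per second, plus a rough estimate of the backward pass alone under the assumption that the backward time is simply the difference of the two measurements.

```python
batch_size = 32
forward_s = 0.451           # reported forward time per batch (CPU)
forward_backward_s = 1.911  # reported forward-backward time per batch (CPU)

forward_ips = batch_size / forward_s
train_ips = batch_size / forward_backward_s
backward_s = forward_backward_s - forward_s  # rough estimate: assumes the two times simply add

print('forward:          %.1f images/s' % forward_ips)
print('forward-backward: %.1f images/s' % train_ips)
print('backward ~ %.2f s/batch, %.1fx the forward pass' % (backward_s, backward_s / forward_s))
```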