MATLAB Deep Learning: Getting Started

MATLAB & Deep Learning

1. Why MATLAB?

Python dominates deep learning today, but MATLAB can do deep learning too.

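- Curiosity: trying something new and learning

- Advantages of MATLAB:
  - Create, modify, and analyze deep learning architectures with apps and visualization tools
  - Preprocess data with apps and automatically label ground truth in image, video, and audio data
  - Accelerate algorithms on NVIDIA® GPUs, the cloud, and data center resources without specialized programming
  - Collaborate with peers who use frameworks such as TensorFlow, PyTorch, and MXNet
  - Simulate and train dynamic system behavior with reinforcement learning
  - Generate simulation-based training and test data from MATLAB and Simulink® models of physical systems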

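2. Getting Started: Handwritten Digit Recognition

2.0 Environment

- MATLAB R2019b

- NVIDIA RTX 2060

- GPU support (GPU training in MATLAB requires Parallel Computing Toolbox and a CUDA-capable NVIDIA GPU)

2.1 The Handwritten Digit Dataset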

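MNIST is a dataset of handwritten digit images containing 60,000 training samples and 10,000 test samples. It comes from the US National Institute of Standards and Technology (NIST); the "M" stands for "Modified". The training set consists of digits handwritten by 250 different people, 50% of them high school students and 50% employees of the Census Bureau. The test set contains handwritten digits in the same proportions. Each image is 28x28 pixels, and each pixel is a grayscale value from 0 to 255. In the code below, each image is kept as a 28x28 matrix scaled to [0, 1], and all images are stacked into a 28x28x1xN array, the format imageInputLayer expects. The labels are the digits 0-9, stored as a categorical array.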
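A small sketch of the pixel-layout issue the image reader has to handle: IDX files store each image row by row, while MATLAB fills arrays column by column, so a plain reshape would transpose every image. The snippet uses a hypothetical 12-byte file body holding two 2x3 images instead of real MNIST data, so it runs without the dataset.

```matlab
% Hypothetical file body: two 2x3 images, pixels stored row by row (as in IDX)
bytes = uint8(1:12)';
numRows = 2; numCols = 3; numImages = 2;

% MATLAB fills column-major, so each column of this array is one image row...
imgs = reshape(double(bytes), numCols, numRows, numImages);
% ...and swapping the first two dimensions restores the image orientation
imgs = permute(imgs, [2 1 3]);
imgs(:,:,1)                  % [1 2 3; 4 5 6]

% Scale to [0,1] and insert the singleton channel dimension:
% height x width x channels x numImages
X = reshape(imgs ./ 255, numRows, numCols, 1, numImages);
size(X)                      % [2 3 1 2]
```

The same reshape, permute, and reshape sequence with numRows = numCols = 28 turns the real MNIST file body into a 28x28x1xN array.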

2.2 Reading Images and Labels from the MNIST Dataset in MATLAB

    datapath = "./Mnist/";
    filenameImagesTrain = strcat(datapath, "train-images-idx3-ubyte");
    filenameLabelsTrain = strcat(datapath, "train-labels-idx1-ubyte");
    filenameImagesTest = strcat(datapath, "t10k-images-idx3-ubyte");
    filenameLabelsTest = strcat(datapath, "t10k-labels-idx1-ubyte");

    XTrain = processMNISTimages(filenameImagesTrain);
    YTrain = processMNISTlabels(filenameLabelsTrain);
    XTest = processMNISTimages(filenameImagesTest);
    YTest = processMNISTlabels(filenameLabelsTest);

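    % Read MNIST images (IDX format, big-endian)
    function X = processMNISTimages(filename)
        [fileID,errmsg] = fopen(filename,'r','b');
        if fileID < 0
            error(errmsg);
        end
        magicNum = fread(fileID,1,'int32',0,'b');
        if magicNum ~= 2051
            fclose(fileID);
            error('%s is not an MNIST image file (magic number %d).', filename, magicNum);
        end
        fprintf('\nRead MNIST image data...\n')
        numImages = fread(fileID,1,'int32',0,'b');
        fprintf('Number of images in the dataset: %6d ...\n',numImages);
        numRows = fread(fileID,1,'int32',0,'b');
        numCols = fread(fileID,1,'int32',0,'b');
        X = fread(fileID,inf,'unsigned char');
        fclose(fileID);
        % IDX stores pixels row by row, MATLAB fills columns first:
        % reshape, then transpose every image
        X = reshape(X,numCols,numRows,numImages);
        X = permute(X,[2 1 3]);
        % Scale to [0,1] and add the channel dimension (height x width x 1 x numImages).
        % trainNetwork needs a plain numeric array here; dlarray is only for custom training loops.
        X = reshape(X./255, [numRows,numCols,1,numImages]);
    end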

    % Read MNIST labels (IDX format, big-endian)
    function Y = processMNISTlabels(filename)
        [fileID,errmsg] = fopen(filename,'r','b');
        if fileID < 0
            error(errmsg);
        end
        magicNum = fread(fileID,1,'int32',0,'b');
        if magicNum ~= 2049
            fclose(fileID);
            error('%s is not an MNIST label file (magic number %d).', filename, magicNum);
        end
        fprintf('\nRead MNIST label data...\n')
        numItems = fread(fileID,1,'int32',0,'b');
        fprintf('Number of labels in the dataset: %6d ...\n',numItems);
        Y = fread(fileID,inf,'unsigned char');
        fclose(fileID);
        Y = categorical(Y);   % digits 0-9 as class labels, as classificationLayer requires
    end
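Both readers start by decoding big-endian 32-bit header fields: a magic number (2051 for image files, 2049 for label files), the item count and, for images, the number of rows and columns. This standalone sketch decodes such a header by hand from a byte vector; the helper be32 and the header bytes are hypothetical and only mirror the header layout, so run it on its own rather than inside the script above.

```matlab
% Hypothetical helper: decode 4 bytes as a big-endian unsigned integer
% (header values are small and positive, so int32 vs uint32 does not matter here)
be32 = @(b) sum(double(b(:)') .* 256.^(3:-1:0));

% Header of a training-image file: magic, count, rows, cols
imageHeader = uint8([0 0 8 3   0 0 234 96   0 0 0 28   0 0 0 28]);
magicNum  = be32(imageHeader(1:4))     % 2051 -> image file
numImages = be32(imageHeader(5:8))     % 60000
numRows   = be32(imageHeader(9:12))    % 28
numCols   = be32(imageHeader(13:16))   % 28

% Header of a test-label file: magic, count
labelHeader = uint8([0 0 8 1   0 0 39 16]);
labelMagic = be32(labelHeader(1:4))    % 2049 -> label file
numLabels  = be32(labelHeader(5:8))    % 10000
```

Opening the file with the 'b' machine format and reading 'int32' performs this same byte ordering, which is why the readers above can compare magicNum directly against 2051 and 2049.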

Output:

    Read MNIST image data...
    Number of images in the dataset: 60000 ...

    Read MNIST label data...
    Number of labels in the dataset: 60000 ...

    Read MNIST image data...
    Number of images in the dataset: 10000 ...

    Read MNIST label data...
    Number of labels in the dataset: 10000 ...

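2.3 Network Design: LeNet5

The LeNet-5 architecture is shown in the figure below.

Its layer parameters are listed in the following table.

| Layer | Kernel size | Stride | Padding | Output size |
|---|---|---|---|---|
| Input | | | | 32 * 32 * 1 |
| Convolution 1 | 5 * 5 | 1 | 0 | 28 * 28 * 6 |
| Max pooling 1 | 2 * 2 | 2 | 0 | 14 * 14 * 6 |
| Convolution 2 | 5 * 5 | 1 | 0 | 10 * 10 * 16 |
| Max pooling 2 | 2 * 2 | 2 | 0 | 5 * 5 * 16 |
| Fully connected 1 | | | | 1 * 1 * 120 |
| Fully connected 2 | | | | 1 * 1 * 84 |
| Fully connected 3 | | | | 1 * 1 * 10 |
| Softmax | | | | 1 * 1 * 10 |
| Classification | | | | 1 * 1 * 10 |

Because MNIST images are 28 * 28 rather than 32 * 32, the MATLAB version in section 2.4 takes a 28 * 28 * 1 input and uses "same" padding (2 pixels on each side) in the first convolutional layer, which produces the same 28 * 28 * 6 output.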

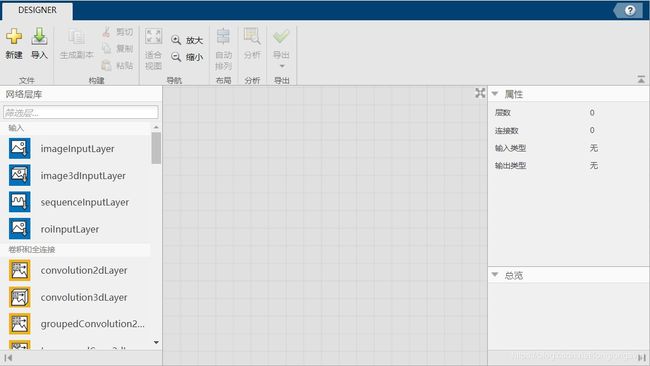
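The output sizes in the table follow from the standard formula out = floor((in + 2*pad - kernel) / stride) + 1, applied to height and width. This sketch recomputes the table's spatial sizes; outSize is a hypothetical helper name, and the channel count 16 for the flattening step is taken from the table.

```matlab
% Output side length of a convolution or pooling layer
outSize = @(in, k, stride, pad) floor((in + 2*pad - k) / stride) + 1;

% Classic LeNet-5: 32x32 input, no padding
s = 32;
s = outSize(s, 5, 1, 0)   % conv1    -> 28
s = outSize(s, 2, 2, 0)   % maxpool1 -> 14
s = outSize(s, 5, 1, 0)   % conv2    -> 10
s = outSize(s, 2, 2, 0)   % maxpool2 -> 5
flattened = s * s * 16    % 400 values feed the first fully connected layer

% MNIST variant: 28x28 input with 2 pixels of padding on conv1
conv1Out = outSize(28, 5, 1, 2)   % -> 28, same as the classic 32x32 case
```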

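2.4 Designing LeNet5 in MATLAB

MATLAB R2019b includes an app called Deep Network Designer. Open it from the APPS tab (or by running deepNetworkDesigner in the Command Window), and you can design a deep network by dragging and dropping layers.

The table below lists commonly used layers in Deep Network Designer.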

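| Layer | Description |
|---|---|
| imageInputLayer | Image input layer |
| sequenceInputLayer | Sequence input layer |
| convolution2dLayer | 2-D convolutional layer |
| fullyConnectedLayer | Fully connected layer |
| reluLayer | ReLU layer |
| leakyReluLayer | Leaky ReLU layer |
| tanhLayer | Tanh layer |
| eluLayer | ELU layer |
| batchNormalizationLayer | Batch normalization (BN) layer |
| dropoutLayer | Dropout layer |
| crossChannelNormalizationLayer | Channel-wise local response normalization layer |
| averagePooling2dLayer | Average pooling layer |
| globalAveragePooling2dLayer | Global average pooling layer |
| maxPooling2dLayer | Max pooling layer |
| additionLayer | Addition layer (element-wise sum of its inputs) |
| depthConcatenationLayer | Depth concatenation layer (concatenates along the channel dimension) |
| concatenationLayer | Concatenation layer (concatenates along a specified dimension) |
| softmaxLayer | Softmax layer |
| classificationLayer | Classification output layer |
| regressionLayer | Regression output layer |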
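Several of these layers just apply an element-wise formula. The sketch below writes out what the activation layers and softmaxLayer compute, plus the cross-entropy loss a classification layer evaluates against a one-hot target. The function names are hypothetical, and the values 0.01 (leaky ReLU scale) and 1 (ELU alpha) are assumed to match the layers' defaults.

```matlab
x = [-2 -0.5 0 1.5];

relu      = @(x) max(x, 0);
leakyRelu = @(x, scale) max(x, 0) + scale .* min(x, 0);          % small slope for x < 0
elu       = @(x, alpha) max(x, 0) + alpha .* (exp(min(x, 0)) - 1);

yRelu  = relu(x)              % [0 0 0 1.5]
yLeaky = leakyRelu(x, 0.01)   % [-0.02 -0.005 0 1.5]
yElu   = elu(x, 1)
yTanh  = tanh(x)

% Softmax turns the scores of the last fully connected layer into probabilities
% (subtracting the max first avoids overflow in exp)
softmaxFn = @(z) exp(z - max(z)) ./ sum(exp(z - max(z)));
p = softmaxFn([2 1 0.1])      % non-negative, sums to 1

% Cross-entropy loss against a one-hot target (true class = first entry)
target = [1 0 0];
loss = -sum(target .* log(p))
```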

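LeNet5 as designed in Deep Network Designer:

After designing the network, check it with the Analyze tool.

Once the analysis reports no errors, export the LeNet5 network code: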

    layers = [
        imageInputLayer([28 28 1],"Name","imageinput")
        convolution2dLayer([5 5],6,"Name","conv1","Padding","same")   % 28x28x6
        tanhLayer("Name","tanh1")
        maxPooling2dLayer([2 2],"Name","maxpool1","Stride",[2 2])     % 14x14x6
        convolution2dLayer([5 5],16,"Name","conv2")                   % 10x10x16
        tanhLayer("Name","tanh2")
        maxPooling2dLayer([2 2],"Name","maxpool2","Stride",[2 2])     % 5x5x16
        fullyConnectedLayer(120,"Name","fc1")
        tanhLayer("Name","tanh3")   % nonlinearity between FC layers, as in LeNet-5;
        fullyConnectedLayer(84,"Name","fc2")
        tanhLayer("Name","tanh4")   % without them the FC layers collapse into one linear map
        fullyConnectedLayer(10,"Name","fc3")                          % one score per digit
        softmaxLayer("Name","softmax")
        classificationLayer("Name","classoutput")];

That completes the LeNet5 network design in MATLAB.

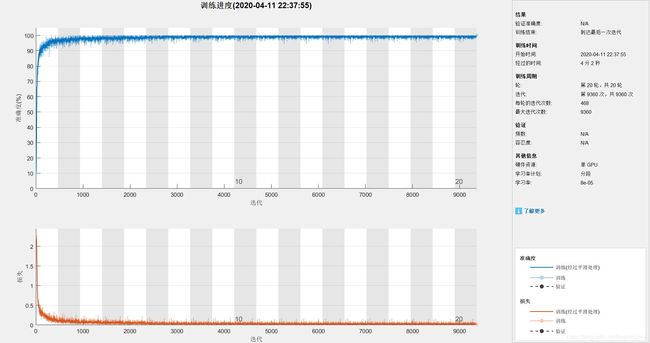
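As a cross-check for the Learnables sizes that the Analyze step reports, the sketch below counts the weights and biases of each layer with learnable parameters; activation and pooling layers have none. The helper names are hypothetical, and the layer sizes come from the table in section 2.3.

```matlab
% Learnable parameters = weights + biases
convParams = @(k, cIn, cOut) k*k*cIn*cOut + cOut;   % k x k kernels, one bias per filter
fcParams   = @(nIn, nOut) nIn*nOut + nOut;          % dense weight matrix plus biases

p = [convParams(5, 1, 6), ...    % conv1: 156
     convParams(5, 6, 16), ...   % conv2: 2416
     fcParams(5*5*16, 120), ...  % fc1: 48120
     fcParams(120, 84), ...      % fc2: 10164
     fcParams(84, 10)]           % fc3: 850
total = sum(p)                   % 61706
```

Almost 80% of the parameters sit in fc1, which is typical of LeNet-style networks where the first fully connected layer sees the whole flattened feature map.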

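2.5 Training the LeNet5 Network

The code below configures and runs training in MATLAB; see the comments for details. If a GPU is available, training finishes much faster (by default, trainNetwork uses a GPU automatically when one is available).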

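    options = trainingOptions('sgdm', ...        % optimizer: SGD with momentum
        'LearnRateSchedule','piecewise', ...     % lower the learning rate in steps
        'LearnRateDropFactor',0.2, ...           % multiply the learning rate by 0.2 at each drop
        'LearnRateDropPeriod',5, ...             % drop every 5 epochs
        'MaxEpochs',20, ...                      % number of full passes over the training set
        'MiniBatchSize',128, ...                 % samples per iteration
        'Plots','training-progress');            % plot training progress live

    doTraining = true;   % true: train now; false: load a previously saved model
    if doTraining
        % XTrain: training images, YTrain: training labels,
        % layers: network to train, options: training settings
        trainNet = trainNetwork(XTrain, YTrain, layers, options);
        save Minist_LeNet5 trainNet   % save the trained model
    else
        load Minist_LeNet5 trainNet
    end

    yTest = classify(trainNet, XTest);              % predict labels for the test set
    accuracy = sum(yTest == YTest)/numel(YTest);    % accuracy on the test set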
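To see what the piecewise schedule does, this sketch computes the learning rate used in each epoch and the number of iterations shown on the training-progress plot. It assumes an initial learning rate of 0.01 (believed to be the trainingOptions default for 'sgdm'; the code above does not set it) and that the incomplete final mini-batch of each epoch is discarded.

```matlab
initialLearnRate = 0.01;   % assumed default 'InitialLearnRate' for 'sgdm'
dropFactor = 0.2;          % 'LearnRateDropFactor'
dropPeriod = 5;            % 'LearnRateDropPeriod'
maxEpochs  = 20;           % 'MaxEpochs'

% Rate is multiplied by dropFactor after every dropPeriod epochs
epochs = 1:maxEpochs;
lr = initialLearnRate * dropFactor .^ floor((epochs - 1) / dropPeriod);
disp([epochs; lr]')        % epochs 1-5: 0.01, 6-10: 0.002, 11-15: 4e-4, 16-20: 8e-5

% Iterations, assuming the last incomplete mini-batch of each epoch is dropped
numTrain = 60000; miniBatchSize = 128;
itersPerEpoch = floor(numTrain / miniBatchSize)   % 468
totalIters = itersPerEpoch * maxEpochs            % 9360
```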

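2.6 Training Results and Testing

Training results

Testing the model

First, here is the sample test image:

The test code is below; see the comments for details.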

    test_image = imread('5.jpg');
    if size(test_image,3) == 3
        test_image = rgb2gray(test_image);        % convert RGB to grayscale
    end
    test_image = imresize(test_image, [28,28]);   % the network expects a 28x28 input
    test_image = imbinarize(test_image,0.5);      % binarize
    test_image = imcomplement(test_image);        % invert: like MNIST, the input must be
                                                  % a white digit on a black background
    test_image = double(test_image);              % numeric input in [0,1], like the training data
    imshow(test_image);

    load('Minist_LeNet5');                        % loads trainNet
    result = classify(trainNet, test_image);
    disp(result);
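The preprocessing matters because MNIST digits are light strokes on a dark background, while a photo of handwriting is usually dark ink on light paper. This toolbox-free sketch mimics the steps on a made-up 3x3 patch: a plain comparison stands in for imbinarize with a fixed threshold, logical NOT stands in for imcomplement, and the grayscale weights are the ones rgb2gray documents. The patch and pixel values are hypothetical.

```matlab
% Made-up 3x3 grayscale patch in [0,1]: dark vertical stroke (0.1) on white paper (0.9)
img = [0.9 0.1 0.9;
       0.9 0.1 0.9;
       0.9 0.1 0.9];

bw = img > 0.5;            % like imbinarize(img, 0.5): paper -> 1, ink -> 0
inverted = double(~bw)     % like imcomplement: ink -> 1, paper -> 0, as in MNIST

% Grayscale conversion of one RGB pixel with rgb2gray's luminance weights
rgbPixel = [200 150 100];
gray = round([0.2989 0.5870 0.1140] * rgbPixel')   % 159
```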

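Closing remarks

I will update this article from time to time. Feel free to study it, use it as a reference, and leave comments; if you run into problems, ask me in the comments. As for mysterious questions like "why can't my training use the GPU", I will try to help, but I can't guarantee I can solve your problem. You may be able to train the network on the CPU instead (for example by setting 'ExecutionEnvironment','cpu' in trainingOptions), though I haven't tried it myself. As you can see, MATLAB makes designing some networks quite convenient: just drag and drop, haha. I hope MATLAB adds more layer components so that we can design deep networks more easily.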