ShardingJDBC study notes


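- Transaction consistency issues (caused by cross-node operations after sharding)

- Cross-node updates

- Cross-node queries

- Vertical and horizontal splitting

- Basics

- Problems introduced by sharding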

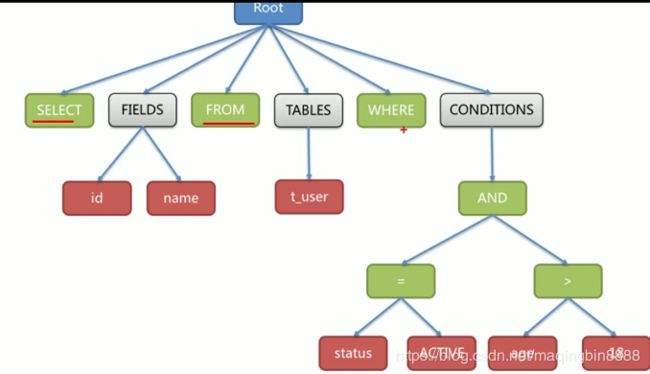

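- Parsing

- Routing

- bindingTable

- Binding configuration

- Binding tables do not produce a Cartesian product

- No Cartesian product

- The main table comes first and drives routing

- Without binding, a Cartesian product results

- The sharding condition can be IN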

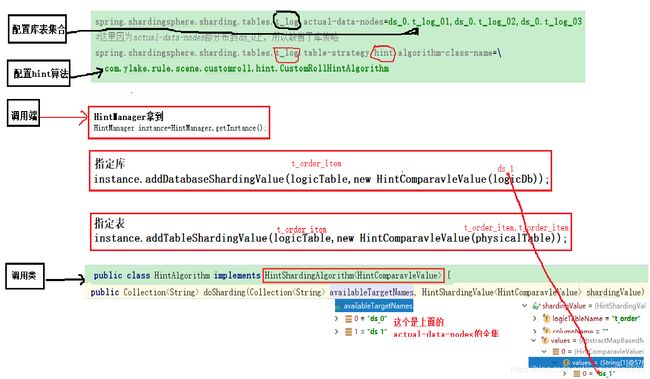

- Specifying database and table with hint

- Getting the table distribution

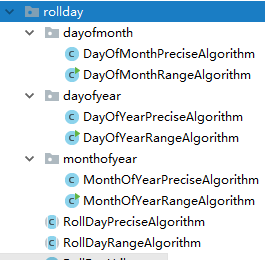

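- Time-based rolling

- Forms of time-based rolling

- Code structure

- Rewriting

- Correctness rewrites

- Optimization rewrites

- Execution: connection management

- Memory-limited mode (OLAP)

- Connection-limited mode (OLTP)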

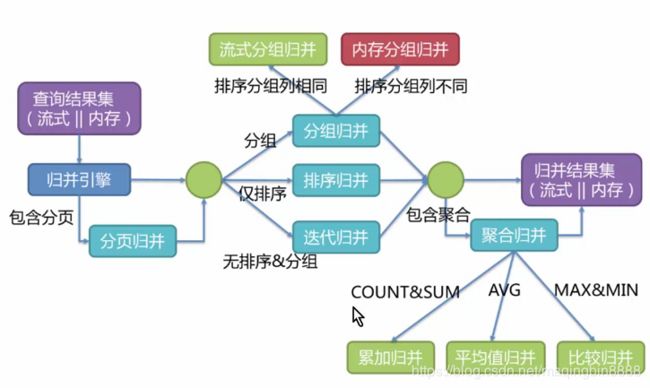

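- Result set merging

- Streaming result sets

- Decorator merge????

- GROUP BY merge

- Snowflake algorithm

- Configuration

- Configuring sharding algorithms

- Spring configuration for inline

- Spring configuration for standard

- Spring configuration for complex

- Spring configuration for hint

- Default configuration

- Logging SQL

- Configuring single and multiple tables

- Single database, single table

- Single database, multiple tables

- Multiple databases, multiple tables

- Multiple databases, multiple tables, with read/write splitting

- Unsupported SQL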

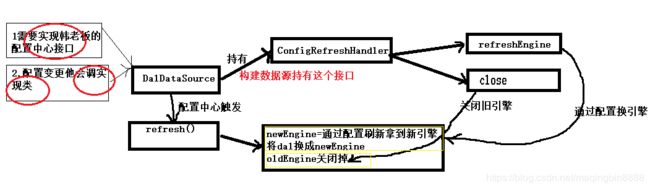

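- Switching data sources

- Points applications should note

- Do not use a schema in SQL statements

- Pagination performance optimization

- Unsupported SQL

- CASE WHEN, HAVING, UNION (ALL)

- Special subqueries

- Subqueries nested inside a subquery on a data table

- Aggregate functions on subqueries are not supported

- A sharding key inside a function or arithmetic expression causes a full scan

- About DISTINCT

- Problems encountered during adoption

- Slow startup caused by metadata loading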

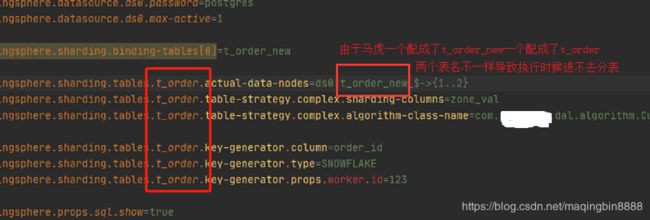

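- A wrong configuration keeps reporting that the sharded table cannot be found

- Data skew caused by applying %2 to both database and table

Sharding video tutorial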

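Transaction consistency issues (caused by cross-node operations after sharding)

Cause

At the database layer: operations that span physical data source nodes become distributed transactions.

Cross-node updates

Several DML statements in one piece of business code run inside a single transaction, but after routing they turn out to target different databases. The result is a cross-node operation, that is, one that spans physical data source nodes.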

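Solutions:

1. Avoid a poor choice of sharding dimension. Keep the data on the same shard wherever possible so that cross-node operations do not arise.

If it really cannot be avoided, use one of the following:

- Flexible transactions: best effort, eventual consistency

- XA two-phase commit (poor performance)

- Chained commit, mainly used for broadcast tables

2. Consider solving it in the application's Service layer, for example with TCC, Saga, or eventual consistency through message consumption.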

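Cross-node queries

1. Keep queries on a single shard wherever possible and avoid cross-node queries.

2. If it really cannot be avoided, split into microservices at the application's Service layer, query each data set separately, and merge the results.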

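Vertical and horizontal splitting

Basics

- Vertical database split: split by business function. Each database serves one purpose, and business modules are isolated from each other.

- Vertical table split: splits the columns of a table by access frequency, keeping frequently accessed columns together. Take a product list versus product details: the list is accessed often, the details rarely.

- Horizontal database split

- Horizontal table split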

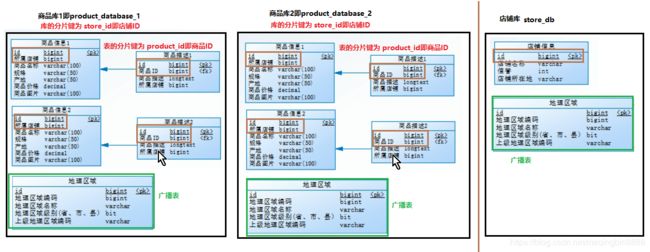

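As the figure shows, products and stores are split vertically into separate databases by functional module.

Products are then sharded across databases by store_id modulo, and across tables by product_id.

In other words, the database sharding key and the table sharding key can differ.

Vertical database splitting alone cannot relieve a heavily loaded single database and leaves data unevenly distributed, so vertical and horizontal splitting need to be combined.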

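Problems introduced by sharding

- Transaction consistency

- Cross-node joins

- Cross-node pagination, sorting, and functions; primary keys must be globally unique; common tables

Approach:

1. Start with vertical database splitting to decouple the businesses.

2. Do not rush into horizontal sharding. Start with caching, and if that is not enough, consider read/write splitting.

3. Only then consider horizontal sharding of databases and tables, keeping each table under 10 million rows.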

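Parsing

Routing

bindingTable

Binding configuration

Two things to watch out for:

- binding-tables[0] is an array. Even when there is only one group of binding tables, the index cannot be omitted. Writing it like this is wrong:

spring.shardingsphere.sharding.binding-tables = product_info,product_descript

- The application had been misconfigured as spring.shardingjdbc.sharding.binding-tables[0]. With the shardingjdbc prefix, auto-configuration cannot wire up the binding tables, yet startup reports no error; routing just runs a bit slower. I kept insisting that the binding tables were not taking effect, and only later found that I had misconfigured it myself.

# Set product_info and product_descript as binding tables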

spring.shardingsphere.sharding.binding-tables[0] = product_info,product_descript

spring.shardingsphere.sharding.binding-tables[1] = product_info,product_descript

Binding tables do not produce a Cartesian product

No Cartesian product

SELECT i.* FROM t_order o JOIN t_order_item i ON o.order_id=i.order_id WHERE o.order_id in (10, 11);

When t_order and t_order_item are configured as binding tables:

// Sharded according to t_order; no Cartesian product

SELECT i.* FROM t_order_0 o JOIN t_order_item_0 i ON o.order_id=i.order_id WHERE o.order_id in (10, 11);

SELECT i.* FROM t_order_1 o JOIN t_order_item_1 i ON o.order_id=i.order_id WHERE o.order_id in (10, 11);

The main table comes first and drives routing

SELECT i.* FROM t_order o JOIN t_order_item i ON o.order_id=i.order_id WHERE o.order_id in (10, 11);

- Routing follows the distribution of the main table t_order

- Even if t_order and t_order_item have different sharding IDs, the main table t_order still drives routing

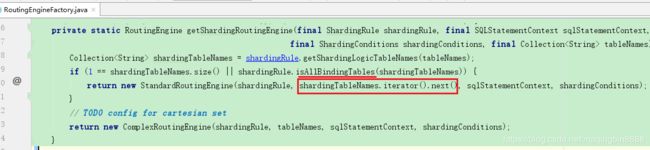

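- Binding table routing logic in the source code

When "there is only one logic table, or the tables are binding tables", the first logic table is used for routing: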

shardingTableNames.iterator().next()

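Without binding, a Cartesian product results

If the tables are not configured as binding tables, a Cartesian product is computed: every combination of the target nodes is generated.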

SELECT i.* FROM t_order_0 o JOIN t_order_item_0 i ON o.order_id=i.order_id WHERE o.order_id in (10, 11);

SELECT i.* FROM t_order_0 o JOIN t_order_item_1 i ON o.order_id=i.order_id WHERE o.order_id in (10, 11);

SELECT i.* FROM t_order_1 o JOIN t_order_item_0 i ON o.order_id=i.order_id WHERE o.order_id in (10, 11);

SELECT i.* FROM t_order_1 o JOIN t_order_item_1 i ON o.order_id=i.order_id WHERE o.order_id in (10, 11);
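The difference between the two routing results above can be sketched in a few lines of plain Java. This is only an illustration of the pairing idea, not ShardingSphere's routing code; the class and method names are made up for the example, and it assumes both logic tables have the same number of actual tables in the same order.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch: how many actual-table pairs a two-table JOIN expands to.
final class JoinRoutingSketch {

    // Binding tables: the i-th actual table of the main table is paired
    // only with the i-th actual table of the joined table.
    static List<String> bindingRoutes(List<String> mainTables, List<String> joinedTables) {
        List<String> routes = new ArrayList<>();
        for (int i = 0; i < mainTables.size(); i++) {
            routes.add(mainTables.get(i) + " JOIN " + joinedTables.get(i));
        }
        return routes;
    }

    // No binding: every actual table on the left is combined with every one on the right.
    static List<String> cartesianRoutes(List<String> left, List<String> right) {
        List<String> routes = new ArrayList<>();
        for (String l : left) {
            for (String r : right) {
                routes.add(l + " JOIN " + r);
            }
        }
        return routes;
    }

    public static void main(String[] args) {
        List<String> orders = Arrays.asList("t_order_0", "t_order_1");
        List<String> items = Arrays.asList("t_order_item_0", "t_order_item_1");
        System.out.println(bindingRoutes(orders, items));   // 2 statements
        System.out.println(cartesianRoutes(orders, items)); // 4 statements
    }
}
```

With n actual tables per logic table, binding keeps the number of routed statements at n, while the unbound case grows to n * n.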

The sharding condition can be IN

==> Preparing: select * from t_order t where t.order_id in (?, ? )

==> Parameters: 373897739357913088(Long), 373897037306920961 (Long)

select * from t_order t where t.order_id in (?)

Actual SQL: m1 ::: select * from t_order_1 t where t.order_id in (?)

Parameters: [373897739357913088, 373897037306920961]

Actual SQL: m1 ::: select * from t_order_2 t where t.order_id in (?)

Parameters: [373897739357913088, 373897037306920961]

After the modulo, both parameters map to database m1, and they hit t_order_1 and t_order_2.

Specifying database and table with hint

HintManager instance = currHintManager.get();

instance.addDatabaseShardingValue("t_order",new HintComparavleValue("ds_1"));

instance.addTableShardingValue("t_order",new HintComparavleValue("t_order_0","t_order_1"));

Getting the table distribution

Map<String, Set<String>> result = new HashMap<>();

ShardingRule shardingRule = ((ShardingDataSource) dataSource).getRuntimeContext().getRule();

TableRule tableRule = shardingRule.getTableRule(logicTableName);

List<DataNode> list = tableRule.getActualDataNodes();

for (DataNode node : list ){

if (result.containsKey(node.getDataSourceName())){

Set<String> tableSet = result.get(node.getDataSourceName());

tableSet.add(node.getTableName());

} else {

Set<String> tableSet = new HashSet<>();

tableSet.add(node.getTableName());

result.put(node.getDataSourceName(),tableSet);

}

}

return result;

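Time-based rolling

Forms of time-based rolling

Code structure

Rewriting

Correctness rewrites

Table name replacement; aggregate function rewrite: AVG is rewritten to SUM and COUNT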
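Why AVG has to be rewritten into SUM and COUNT can be seen from a tiny calculation. The sketch below is an illustration only: the class name and the {sum, count} array layout are assumptions made for the example, and Double.NaN stands in for the SQL NULL that AVG returns on an empty set.

```java
// Hypothetical sketch: merging AVG across shards from per-shard SUM and COUNT.
final class AvgRewriteSketch {

    // Each element is {sum, count}, as one shard would return for
    // "SELECT SUM(score), COUNT(score) FROM t_score_N".
    static double mergedAvg(long[][] sumAndCountPerShard) {
        long sum = 0;
        long count = 0;
        for (long[] sc : sumAndCountPerShard) {
            sum += sc[0];
            count += sc[1];
        }
        return count == 0 ? Double.NaN : (double) sum / count;
    }

    // The wrong approach: averaging the per-shard averages ignores shard sizes.
    static double naiveAvgOfAvgs(long[][] sumAndCountPerShard) {
        double total = 0;
        for (long[] sc : sumAndCountPerShard) {
            total += (double) sc[0] / sc[1];
        }
        return total / sumAndCountPerShard.length;
    }

    public static void main(String[] args) {
        // Shard 0 holds one row (10); shard 1 holds four rows summing to 20.
        long[][] shards = {{10, 1}, {20, 4}};
        System.out.println(mergedAvg(shards));      // 30 / 5
        System.out.println(naiveAvgOfAvgs(shards)); // (10 + 5) / 2
    }
}
```

The two results differ whenever the shards hold different numbers of rows, which is why each shard is asked for SUM and COUNT instead of AVG.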

Pagination rewrite

SELECT * FROM t_order ORDER BY id LIMIT 1000000, 10

After rewriting (this is what makes it slow):

SELECT * FROM t_order ORDER BY id LIMIT 0, 1000010

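Applications should preferably write it like this, using an ordered auto-increment id as the condition so that the preceding rows are skipped: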

SELECT * FROM t_order WHERE id > 100000 LIMIT 10

Or like this:

SELECT * FROM t_order WHERE id > 100000 AND id <= 100010 ORDER BY id
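The reason the offset cannot simply be pushed down to each shard is easier to see with data. The following is a minimal sketch under stated assumptions: each shard is modelled as an already sorted list of ids, the class and method names are invented for the example, and the merge uses an in-memory sort for brevity rather than the streaming merge the framework would use.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch of "LIMIT offset, rowCount" over several shards.
final class PaginationRewriteSketch {

    // Every shard runs the rewritten "ORDER BY id LIMIT 0, offset + rowCount";
    // the merged rows are then sorted, and the global offset is applied once.
    static List<Long> page(List<List<Long>> sortedShards, int offset, int rowCount) {
        List<Long> merged = new ArrayList<>();
        for (List<Long> shard : sortedShards) {
            int fetched = Math.min(shard.size(), offset + rowCount);
            merged.addAll(shard.subList(0, fetched));
        }
        Collections.sort(merged);
        int from = Math.min(offset, merged.size());
        int to = Math.min(offset + rowCount, merged.size());
        return new ArrayList<>(merged.subList(from, to));
    }

    public static void main(String[] args) {
        List<List<Long>> shards = Arrays.asList(
                Arrays.asList(1L, 3L, 5L, 7L),
                Arrays.asList(2L, 4L, 6L, 8L));
        // Global "LIMIT 2, 2" must return ids 3 and 4. Sending "LIMIT 2, 2" to
        // each shard unchanged would return 5, 7 and 6, 8, which is wrong.
        System.out.println(page(shards, 2, 2));
    }
}
```

The cost is visible in the loop: every shard returns offset + rowCount rows, so a deep offset such as 1000000 multiplies the transferred data, while a condition on an ordered id keeps each shard's result small.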

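Optimization rewrites

ORDER BY: a derived column is added for sorting

GROUP BY: a derived column is added for grouping, and an ORDER BY is added so that stream merging can be used

Auto-generated primary key: the key column and a ? placeholder are added by modifying the column nodes of the syntax tree and the parameter binding

Execution: connection management

Memory-limited mode (OLAP)

One connection is used per table, and the queries run concurrently on multiple threads with their result sets combined by stream merging. This mode usually serves statistics-style (OLAP) workloads and maximizes throughput.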

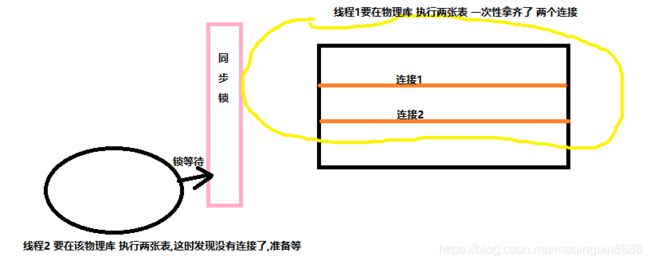

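Memory-limited mode can deadlock, and the Sharding team has resolved this. The deadlock scenario is:

The fix is to take a synchronized lock when acquiring connections.

Notes:

Locking reduces concurrency. Sharding takes this into account and does not lock in every scenario:

1. In an OLAP scenario with memory-limited mode: if the route result is a single table in a database, no lock is needed. The lock is taken only when the route hits two tables in the same database, because connections are created per table and, without the lock, two tables can run into the deadlock shown in the figure above.

2. In an OLTP scenario, connections are always created per database and then reused, so no lock is needed.

Connection-limited mode (OLTP)

If 20 tables in one database need to be accessed, only one connection is created and reused to process them serially (if several databases are targeted, it is still one connection per database, with the databases processed concurrently). This mode usually comes with a sharding key and strictly controls the number of database connections in order to guarantee local transactions.

Which mode is used depends on the number of connections in use, so as to strike a reasonable balance. There is a balancing strategy: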
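As far as I understand the ShardingSphere 4.x documentation, the balance is struck per data source by comparing the number of SQL statements routed to it with max.connections.size.per.query. The sketch below restates that rule in plain Java; the class, enum, and method names are made up for the example and this is not the framework's own code.

```java
// Hypothetical sketch of the connection mode decision for one data source.
final class ConnectionModeSketch {

    enum ConnectionMode { MEMORY_STRICTLY, CONNECTION_STRICTLY }

    // maxConnectionsSizePerQuery: the configured limit of connections per query.
    // sqlCountOnDataSource: how many routed SQL statements target this data source.
    static ConnectionMode choose(int maxConnectionsSizePerQuery, int sqlCountOnDataSource) {
        // Not enough connections for one per statement: reuse connections and
        // load results into memory (connection-limited mode).
        // Otherwise: one connection per statement with streaming results
        // (memory-limited mode).
        return maxConnectionsSizePerQuery < sqlCountOnDataSource
                ? ConnectionMode.CONNECTION_STRICTLY
                : ConnectionMode.MEMORY_STRICTLY;
    }

    public static void main(String[] args) {
        System.out.println(choose(1, 20));  // limit of 1, 20 tables routed
        System.out.println(choose(50, 20)); // limit raised to 50
    }
}
```

Under this reading, the default limit of 1 puts any multi-table route on a data source into connection-limited mode, and raising the limit moves such queries to memory-limited mode.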

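Result set merging

Streaming result sets

Sorting over streaming result sets: the rows under the cursors are compared and the winning cursor is advanced, so sorting happens while the rows are being consumed.

Characteristics:

The full result set is never loaded into memory at once, which greatly reduces memory consumption.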
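The cursor-compare-and-advance idea can be sketched as a k-way merge over a priority queue. This is a simplified illustration, not the framework's merger: each shard's sorted result set is modelled as a list of integers, the names are invented for the example, and the output is collected into a list only so the result can be inspected (a real merger would hand rows to the caller one at a time).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;
import java.util.PriorityQueue;

// Hypothetical sketch of stream merging sorted result sets.
final class StreamMergeSketch {

    // One shard's result set: an iterator plus the row currently under the cursor.
    private static final class Cursor implements Comparable<Cursor> {
        final Iterator<Integer> rows;
        int current;

        Cursor(Iterator<Integer> rows) {
            this.rows = rows;
            this.current = rows.next();
        }

        @Override
        public int compareTo(Cursor other) {
            return Integer.compare(current, other.current);
        }
    }

    static List<Integer> merge(List<List<Integer>> sortedShards) {
        PriorityQueue<Cursor> queue = new PriorityQueue<>();
        for (List<Integer> shard : sortedShards) {
            Iterator<Integer> it = shard.iterator();
            if (it.hasNext()) {
                queue.add(new Cursor(it)); // empty result sets are skipped
            }
        }
        List<Integer> out = new ArrayList<>();
        while (!queue.isEmpty()) {
            Cursor smallest = queue.poll(); // cursor with the smallest current row
            out.add(smallest.current);
            if (smallest.rows.hasNext()) {  // advance the cursor and re-queue it
                smallest.current = smallest.rows.next();
                queue.add(smallest);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(merge(Arrays.asList(
                Arrays.asList(1, 4, 7), Arrays.asList(2, 5), Arrays.asList(3, 6, 8))));
    }
}
```

At any moment the queue holds at most one row per shard, which is where the memory saving comes from.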

Decorator merge???

GROUP BY merge

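SELECT name, SUM(score) FROM t_score GROUP BY name ORDER BY name;

This can be handled by stream merging or by memory merging:

- Stream merging: requires the grouping columns and the sorting columns to be the same, so that every shard returns its rows already sorted. It works by comparing rows through a priority queue and then advancing the cursor. Stream merging also requires fetchSize to be enabled.

- Memory merging: used when the sorting columns are not the grouping columns (memory-hungry). When there is no ORDER BY at all, one is added by the rewrite, as in case (3) below.

Stream merging

group by name order by sum(score): memory merging (1)

group by name order by name: stream merging (2)

group by name: an ORDER BY is added by the rewrite, so stream merging (3)

So only case (1) uses memory merging.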
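Case (2) can be sketched as follows: because every shard returns its groups sorted by name, rows for the same name arrive next to each other in the merged stream and can be summed on the fly. This is a minimal illustration with invented names (GroupByStreamMergeSketch, Row), not the framework's implementation; shards are modelled as in-memory lists already sorted by name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

// Hypothetical sketch of a streaming GROUP BY merge for
// "SELECT name, SUM(score) ... GROUP BY name ORDER BY name".
final class GroupByStreamMergeSketch {

    static final class Row {
        final String name;
        final long score;

        Row(String name, long score) {
            this.name = name;
            this.score = score;
        }
    }

    static List<Row> merge(List<List<Row>> shardsSortedByName) {
        // Queue entries are {shardIndex, rowIndex}, ordered by the row's name.
        PriorityQueue<int[]> queue = new PriorityQueue<>(
                Comparator.comparing((int[] p) -> shardsSortedByName.get(p[0]).get(p[1]).name));
        for (int s = 0; s < shardsSortedByName.size(); s++) {
            if (!shardsSortedByName.get(s).isEmpty()) {
                queue.add(new int[] {s, 0});
            }
        }
        List<Row> out = new ArrayList<>();
        String currentName = null;
        long currentSum = 0;
        while (!queue.isEmpty()) {
            int[] pos = queue.poll();
            Row row = shardsSortedByName.get(pos[0]).get(pos[1]);
            if (currentName != null && !currentName.equals(row.name)) {
                out.add(new Row(currentName, currentSum)); // group finished, emit it
                currentSum = 0;
            }
            currentName = row.name;
            currentSum += row.score;
            if (pos[1] + 1 < shardsSortedByName.get(pos[0]).size()) {
                queue.add(new int[] {pos[0], pos[1] + 1}); // advance this shard's cursor
            }
        }
        if (currentName != null) {
            out.add(new Row(currentName, currentSum)); // emit the last group
        }
        return out;
    }

    public static void main(String[] args) {
        List<Row> merged = merge(Arrays.asList(
                Arrays.asList(new Row("amy", 10), new Row("bob", 5)),
                Arrays.asList(new Row("amy", 7), new Row("cat", 3))));
        for (Row r : merged) {
            System.out.println(r.name + " " + r.score);
        }
    }
}
```

In case (1) the shards are sorted by their local SUM(score), so rows of one name are not adjacent across shards and all groups must be held in memory before the final order is known.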

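ORDER BY, GROUP BY, and the LIMIT pagination rewrite can all use stream merging.

For pagination in particular, add a globally auto-incrementing ID as an indexed query condition to speed things up.

Decorator pattern

This refers to the handling of aggregate functions, of three types: comparison, sum, and average. It is also stream-based.

My questions:

1. What exactly is the decorator? Is it for handling aggregate functions? How can it be explained in plain terms?

2. Does "iteration" refer to memory merging?

3. With ORDER BY id, stream merging should not be much of a problem, since the sorting is also done on the database side. xx says this is unreliable: acceptable for OLAP, but not for OLTP.

Snowflake algorithm

Detailed notes on the Sharding snowflake algorithm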
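For orientation, here is a minimal sketch of the general snowflake layout: 41 bits of millisecond timestamp, 10 bits of worker id, and 12 bits of per-millisecond sequence. It is a generic illustration, not ShardingSphere's key generator; the class name, the epoch constant, and the clock-rollback handling are assumptions made for the example.

```java
// Hypothetical sketch of a snowflake-style ID generator.
final class SnowflakeSketch {

    private static final long EPOCH = 1477958400000L; // assumed custom epoch (2016-11-01 UTC)
    private static final long WORKER_ID_BITS = 10L;
    private static final long SEQUENCE_BITS = 12L;
    private static final long MAX_WORKER_ID = (1L << WORKER_ID_BITS) - 1;
    private static final long SEQUENCE_MASK = (1L << SEQUENCE_BITS) - 1;

    private final long workerId;
    private long lastMillis = -1L;
    private long sequence = 0L;

    SnowflakeSketch(long workerId) {
        if (workerId < 0 || workerId > MAX_WORKER_ID) {
            throw new IllegalArgumentException("workerId out of range: " + workerId);
        }
        this.workerId = workerId;
    }

    synchronized long nextId() {
        long now = System.currentTimeMillis();
        if (now < lastMillis) {
            // This sketch simply refuses to generate ids if the clock goes backwards.
            throw new IllegalStateException("clock moved backwards");
        }
        if (now == lastMillis) {
            sequence = (sequence + 1) & SEQUENCE_MASK;
            if (sequence == 0) {
                // Sequence exhausted for this millisecond: wait for the next one.
                while ((now = System.currentTimeMillis()) <= lastMillis) {
                    // busy-wait
                }
            }
        } else {
            sequence = 0L;
        }
        lastMillis = now;
        return ((now - EPOCH) << (WORKER_ID_BITS + SEQUENCE_BITS))
                | (workerId << SEQUENCE_BITS)
                | sequence;
    }

    // Extracts the worker id bits back out of a generated id.
    static long workerIdOf(long id) {
        return (id >> SEQUENCE_BITS) & MAX_WORKER_ID;
    }

    public static void main(String[] args) {
        SnowflakeSketch generator = new SnowflakeSketch(123);
        System.out.println(generator.nextId());
        System.out.println(generator.nextId());
    }
}
```

Because the timestamp occupies the high bits, ids from one worker are increasing over time, and the worker id keeps ids from different instances apart as long as each instance is given a distinct value.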

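Configuration

Configuring sharding algorithms

Spring configuration for inline

# xxx stands for the other table(s) in the binding group
spring.shardingsphere.sharding.binding-tables[0]=device,xxx

spring.shardingsphere.sharding.tables.device.actual-data-nodes=ds$->{0..1}.device_$->{0..1}

# device_0001, device_0002, and so on up to device_1024 under database ds0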

#spring.shardingsphere.sharding.tables.device.actual-data-nodes=ds0.device_$->{ def list = []; (1..1024).each{element-> list << String.format("%04d",element)}; list}

# Database rule

spring.shardingsphere.sharding.tables.device.database-strategy.inline.shardingColumn=id,device_name

#spring.shardingsphere.sharding.tables.device.database-strategy.inline.algorithmExpression=ds$->{id % 2}

spring.shardingsphere.sharding.tables.device.database-strategy.inline.algorithmExpression=ds$->{Math.abs(id.hashCode() + device_name.hashCode()) % 2}

# Table rule

spring.shardingsphere.sharding.tables.device.table-strategy.inline.sharding-column=id

spring.shardingsphere.sharding.tables.device.table-strategy.inline.algorithm-expression=device_$->{Math.abs(String.valueOf(id).hashCode()) % 2}

# Snowflake algorithm

spring.shardingsphere.sharding.tables.device.key-generator.column=id

spring.shardingsphere.sharding.tables.device.key-generator.type=SNOWFLAKE

spring.shardingsphere.sharding.tables.device.key-generator.props.worker.id=123

Spring configuration for standard

spring.shardingsphere.sharding.binding-tables[0]=xxxdevicexxx,xxx

spring.shardingsphere.sharding.tables.xxxdevicexxx.actual-data-nodes=ds$->{0..1}.xxxdevicexxx_$->{0..1}

# xxxdevicexxx_0001, xxxdevicexxx_0002, and so on up to xxxdevicexxx_1024 under database ds0

#spring.shardingsphere.sharding.tables.xxxdevicexxx.actual-data-nodes=ds0.xxxdevicexxx_$->{ def list = []; (1..1024).each{element-> list << String.format("%04d",element)}; list}

# Database sharding rule

spring.shardingsphere.sharding.tables.xxxdevicexxx.database-strategy.standard.sharding-column=id

spring.shardingsphere.sharding.tables.xxxdevicexxx.database-strategy.standard.precise-algorithm-class-name=com.xxx.StandardDbPreciseAlgorithm

spring.shardingsphere.sharding.tables.xxxdevicexxx.database-strategy.standard.range-algorithm-class-name=com.xxx.StandardDbRangeAlgorithm

# Table sharding rule

spring.shardingsphere.sharding.tables.xxxdevicexxx.table-strategy.standard.sharding-column=id

spring.shardingsphere.sharding.tables.xxxdevicexxx.table-strategy.standard.precise-algorithm-class-name=com.xxx.StandardTbPreciseAlgorithm

spring.shardingsphere.sharding.tables.xxxdevicexxx.table-strategy.standard.range-algorithm-class-name=com.xxx.StandardTbRangeAlgorithm

Spring configuration for complex

spring.shardingsphere.sharding.tables.xxxcomplex_ricexxx.actual-data-nodes=ds$->{0..1}.xxxcomplex_ricexxx_$->{0..1}

# xxxcomplex_ricexxx_0001, xxxcomplex_ricexxx_0002, and so on up to xxxcomplex_ricexxx_1024 under database ds0

#spring.shardingsphere.sharding.tables.xxxcomplex_ricexxx.actual-data-nodes=ds0.xxxcomplex_ricexxx_$->{ def list = []; (1..1024).each{element-> list << String.format("%04d",element)}; list}

# Database sharding algorithm

spring.shardingsphere.sharding.tables.xxxcomplex_ricexxx.database-strategy.complex.sharding-columns=id

spring.shardingsphere.sharding.tables.xxxcomplex_ricexxx.database-strategy.complex.algorithm-class-name=com.xxx.ComplexDbAlgorithm

# Table sharding algorithm

spring.shardingsphere.sharding.tables.xxxcomplex_ricexxx.table-strategy.complex.sharding-columns=id

spring.shardingsphere.sharding.tables.xxxcomplex_ricexxx.table-strategy.complex.algorithm-class-name=com.xxx.ComplexTbAlgorithm

Spring configuration for hint

Default configuration

# Default database and table sharding rules

spring.shardingsphere.sharding.default-database-strategy.inline.sharding-column=id

spring.shardingsphere.sharding.default-database-strategy.inline.algorithm-expression=ds$->{id % 2}

spring.shardingsphere.sharding.default-table-strategy.inline.sharding-column=id

spring.shardingsphere.sharding.default-table-strategy.inline.algorithm-expression=device_$->{id % 2}

# Default data source

spring.shardingsphere.sharding.default-data-source-name=ds0

# Default snowflake key generator

spring.shardingsphere.sharding.default-key-generator.column=id

spring.shardingsphere.sharding.default-key-generator.type=SNOWFLAKE

spring.shardingsphere.sharding.default-key-generator.props.worker.id=123

###########################################

# Default database sharding with the standard algorithm

spring.shardingsphere.sharding.default-database-strategy.standard.sharding-column=device_name,id

spring.shardingsphere.sharding.default-database-strategy.standard.precise-algorithm-class-name=com.psbc.dmf.shardingsample.common.algorithm.StandardDbPreciseAlgorithm

spring.shardingsphere.sharding.default-database-strategy.standard.range-algorithm-class-name=com.psbc.dmf.shardingsample.common.algorithm.StandardDbRangeAlgorithm

# Default table sharding with the standard algorithm

spring.shardingsphere.sharding.default-table-strategy.standard.sharding-column=id

spring.shardingsphere.sharding.default-table-strategy.standard.precise-algorithm-class-name=com.psbc.dmf.shardingsample.common.algorithm.StandardTbPreciseAlgorithm

spring.shardingsphere.sharding.default-table-strategy.standard.range-algorithm-class-name=com.psbc.dmf.shardingsample.common.algorithm.StandardTbRangeAlgorithm

# Default database sharding with the complex algorithm

spring.shardingsphere.sharding.default-database-strategy.complex.sharding-columns=id

spring.shardingsphere.sharding.default-database-strategy.complex.algorithm-class-name=com.psbc.dmf.shardingsample.common.algorithm.ComplexDbAlgorithm

# Default table sharding with the complex algorithm

spring.shardingsphere.sharding.default-table-strategy.complex.sharding-columns=id

spring.shardingsphere.sharding.default-table-strategy.complex.algorithm-class-name=com.psbc.dmf.shardingsample.common.algorithm.ComplexTbAlgorithm

Logging SQL

spring.shardingsphere.props.sql.show=true

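Configuring single and multiple tables

Single database, single table

Single database, multiple tables

Multiple databases, multiple tables

Multiple databases, multiple tables, with read/write splitting

See the link for details

Unsupported SQL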

| SQL | Supported? |
|---|---|
| CASE WHEN | Not supported |
| SELECT COUNT(*) FROM (SELECT * FROM t_order o) | Supported |
| SELECT COUNT(*) FROM (SELECT * FROM t_order o where o.id in(SELECT id FROM t_order WHERE status = ?)) | Not supported (nested subquery) |
| INSERT INTO tbl_name (col1, col2, …) VALUES(1+2, ?, …) | Not supported (operator inside VALUES) |
| INSERT … SELECT | Not supported |
| SELECT COUNT(col1) as count_alias FROM tbl_name GROUP BY col1 HAVING count_alias > ? | Not supported (HAVING) |
| SELECT * FROM tbl_name1 UNION SELECT * FROM tbl_name2 | Not supported (UNION) |
| SELECT * FROM tbl_name1 UNION ALL SELECT * FROM tbl_name2 | Not supported (UNION ALL) |
| SELECT * FROM ds.tbl_name1 | Not supported (must not contain the schema name) |
| sum(distinct column1), sum(column1) from tab | Not supported (plain and DISTINCT aggregate functions used together) |
| sum(distinct column1) from tab | Supported |

Rewriting DISTINCT as GROUP BY is also an option worth considering.

DISTINCT statements that are supported

SELECT DISTINCT * FROM tbl_name WHERE col1 = ?

SELECT DISTINCT col1 FROM tbl_name

SELECT DISTINCT col1, col2, col3 FROM tbl_name

SELECT DISTINCT col1 FROM tbl_name ORDER BY col1

SELECT DISTINCT col1 FROM tbl_name ORDER BY col2

SELECT DISTINCT(col1) FROM tbl_name

SELECT AVG(DISTINCT col1) FROM tbl_name

SELECT SUM(DISTINCT col1) FROM tbl_name

SELECT COUNT(DISTINCT col1) FROM tbl_name

SELECT COUNT(DISTINCT col1) FROM tbl_name GROUP BY col1

SELECT COUNT(DISTINCT col1 + col2) FROM tbl_name

SELECT COUNT(DISTINCT col1), SUM(DISTINCT col1) FROM tbl_name

SELECT COUNT(DISTINCT col1), col1 FROM tbl_name GROUP BY col1

SELECT col1, COUNT(DISTINCT col1) FROM tbl_name GROUP BY col1

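Switching data sources

When the configuration center changes, refresh() on DalDataSource is triggered.

Points applications should note

Do not use a schema in SQL statements

Using a schema in DQL and DML statements is not yet supported: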

select * from ds_0.t_order  # not supported

Pagination performance optimization

select * from t_order order by id limit 1000000,10

select * from t_order order by id limit 0,1000010 (after rewriting)

Recommended optimization: select * from t_order where id>1000000 and id<=1000010 order by id

Or: select * from t_order where id>1000000 limit 10

Unsupported SQL

CASE WHEN, HAVING, UNION (ALL)

Not supported

Special subqueries

Subqueries nested inside a subquery on a data table

select count(*)

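from (select * from order o where o.id in(select id from order where status=?)) -- not supported

Aggregate functions on subqueries are not supported

A sharding key inside a function or arithmetic expression causes a full scan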

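select * from t_order where to_date(create_time,'yyyy-mm-dd')='2019-01-01'

Because create_time is wrapped in a function, no specific shard can be determined, and every table is scanned.

About DISTINCT

Almost everything is supported now. Only one very specific case is not: using a plain aggregate function together with a DISTINCT aggregate function.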

select sum(distinct col1),sum(col2) from tb_name  -- this form is not supported

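Problems encountered during adoption

Slow startup caused by metadata loading

- Either cache the metadata; with direct caching, the connection is still held

- The specific connection

- Or set the check to false, that is, skip the metadata check

spring.shardingsphere.props.check.table.metadata.enabled=false

- Parallel execution defaults to 1; raise the number

spring.shardingsphere.props.max.connections.size.per.query=50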

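A wrong configuration keeps reporting that the sharded table cannot be found

Data skew caused by applying %2 to both database and table

Database ds0 has tables device_0, device_1

Database ds1 has tables device_0, device_1

1. The database sharding key and the table sharding key are the same, and so is the modulo rule.

If id%2=0, the data always lands on ds0.device_0, so ds0.device_1 is wasted.

If id%2=1, the data always lands on ds1.device_1, so ds1.device_0 is wasted.

The fix:

- Shard the database by id modulo directly, that is, id%2

- Shard the table by the hashCode of the id as a string, that is, String.valueOf(id).hashCode() % 2

hashCode can be negative, so wrap it in Math.abs() to take the absolute value.

The configuration is as follows:

spring.shardingsphere.sharding.tables.device.actual-data-nodes=ds$->{0..1}.device_$->{0..1}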

spring.shardingsphere.sharding.tables.device.database-strategy.inline.shardingColumn=id

spring.shardingsphere.sharding.tables.device.database-strategy.inline.algorithmExpression=ds$->{id % 2}

spring.shardingsphere.sharding.tables.device.table-strategy.inline.sharding-column=id

spring.shardingsphere.sharding.tables.device.table-strategy.inline.algorithm-expression=device_$->{Math.abs(String.valueOf(id).hashCode()) % 2}
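The skew and the effect of the fix can be checked with a few lines of Java that mirror the two inline expressions. This is a sketch for illustration only: the class and method names are invented, and it assumes non-negative numeric ids.

```java
import java.util.Set;
import java.util.TreeSet;
import java.util.function.LongFunction;

// Hypothetical sketch: which of the four data nodes actually receive rows.
final class ShardSkewSketch {

    // Database and table both use id % 2: the two indexes are always equal.
    static String sameRule(long id) {
        return "ds" + id % 2 + ".device_" + id % 2;
    }

    // Database uses id % 2, table uses the string hashCode, as in the configuration above.
    static String mixedRule(long id) {
        return "ds" + id % 2 + ".device_" + Math.abs(String.valueOf(id).hashCode()) % 2;
    }

    // Collects the distinct data nodes hit by ids in [fromId, toId).
    static Set<String> nodesHit(LongFunction<String> rule, long fromId, long toId) {
        Set<String> nodes = new TreeSet<>();
        for (long id = fromId; id < toId; id++) {
            nodes.add(rule.apply(id));
        }
        return nodes;
    }

    public static void main(String[] args) {
        System.out.println(nodesHit(ShardSkewSketch::sameRule, 0, 100));
        System.out.println(nodesHit(ShardSkewSketch::mixedRule, 0, 100));
    }
}
```

With the same rule on both levels only ds0.device_0 and ds1.device_1 are ever used; with the mixed rule all four nodes receive rows, because the parity of the string hash does not track the parity of the id.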