HBase High-Performance Pagination

- Preface
- Implementation
  - HbasePage
  - Partition
  - HbaseQueryConsumSettingByPage
  - HbasePageHelper
  - HbaseQueryHelper
- Summary

Preface

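Making HBase pagination work like MySQL pagination (jumping to an arbitrary page) is hard. If we only offer "previous page" and "next page", however, we can build high-performance pagination. This approach uses PageFilter but does not rely on it alone. PageFilter is evaluated separately in each region, so it can only guarantee that a single region returns at most pageSize rows, and the rows that reach the client can exceed the requested page size. The way around this is to make one region the unit of work: each scan queries only one region (in the implementation below, one rowkey partition).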
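The following toy model in plain Java shows why PageFilter on its own overshoots. It has no HBase dependency, and the class and method names are made up for illustration. Each region is a sorted map, and the page limit is counted separately inside each region, which is how the PageFilter documentation describes the filter's behaviour. With three regions and pageSize = 2, the client receives six rows.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

/** Toy model (not HBase code): the page limit is applied per region, like PageFilter. */
public class PageFilterOverflowDemo {

    /** Builds one sorted "region" per prefix, each holding rowsPerRegion rows. */
    static List<NavigableMap<String, String>> buildRegions(String[] prefixes, int rowsPerRegion) {
        List<NavigableMap<String, String>> regions = new ArrayList<>();
        for (String prefix : prefixes) {
            NavigableMap<String, String> region = new TreeMap<>();
            for (int i = 0; i < rowsPerRegion; i++) {
                region.put(prefix + "_row" + i, "v");
            }
            regions.add(region);
        }
        return regions;
    }

    /** Scans every region in order; each region stops on its own after pageSize rows. */
    static List<String> scanWithPerRegionLimit(List<NavigableMap<String, String>> regions, int pageSize) {
        List<String> rows = new ArrayList<>();
        for (NavigableMap<String, String> region : regions) {
            int returnedByThisRegion = 0; // the counter is local to the region
            for (String rowKey : region.keySet()) {
                if (returnedByThisRegion == pageSize) {
                    break;
                }
                rows.add(rowKey);
                returnedByThisRegion++;
            }
        }
        return rows;
    }

    public static void main(String[] args) {
        List<NavigableMap<String, String>> regions = buildRegions(new String[] {"001", "002", "003"}, 5);
        System.out.println(scanWithPerRegionLimit(regions, 2).size()); // 6, not 2
    }
}
```

This is why the client must either scan one region (partition) at a time or trim the result itself.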

Implementation

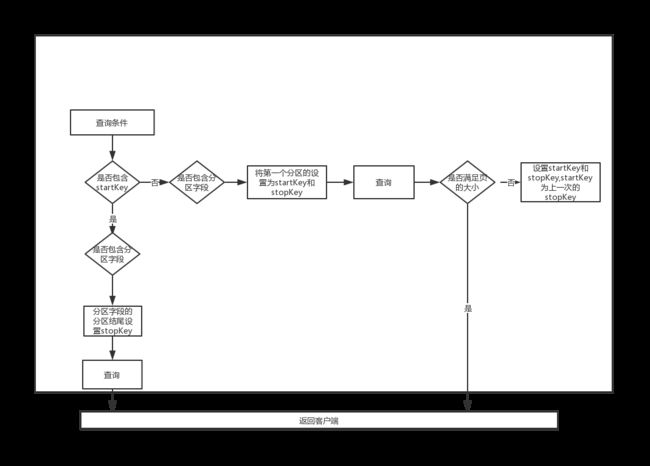

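- The front end sends no startKey on its first request. On later requests it passes the rowkey of the last record it received as startKey. The backend appends a suffix to that key so the next scan starts strictly after that row. The smallest key strictly greater than a rowkey is that rowkey followed by a 0x00 byte. The stopKey depends on whether a partition field is given:
  - If one is given, the stopKey is the stop key of that partition.
  - If none is given but a startKey is present, the stopKey is the partition number taken from the startKey, followed by "|".
  - If neither is given, the query uses the start and stop keys of the table's first partition.

- If the result fills the page, it is returned to the client. If it does not and a partition field was given, the partial result is returned as is. Otherwise the search continues into the next partition, and so on, until the page is full or no partitions remain.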
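The flow above can be sketched without HBase, under several assumptions made for illustration:

- A `TreeMap` stands in for the table. Its `String` ordering matches HBase's byte ordering for ASCII rowkeys.
- Partitions are fixed-width prefixes, and each is scanned as [prefix, prefix + "|").
- Rowkeys look like `prefix_rest`, with a `_` that sorts below `|`.
- All class and field names are hypothetical.

The real code takes its partitions from `PartitionEnum` and its stop suffix from `Constant.ROW_KEY_SPLITE`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.NavigableMap;
import java.util.TreeMap;

/** Toy model of "next page" pagination over rowkey partitions (hypothetical names, no HBase). */
public class PartitionPagingSketch {

    /** Assumed stop suffix; it must sort above the '_' used inside rowkeys. */
    static final String STOP_SUFFIX = "|";
    static final int PARTITION_LEN = 3;
    static final List<String> PARTITIONS = Arrays.asList("001", "002", "003");

    static NavigableMap<String, String> sampleTable() {
        NavigableMap<String, String> table = new TreeMap<>();
        for (String key : new String[] {"001_a", "001_b", "001_c", "003_a", "003_b", "003_c", "003_d"}) {
            table.put(key, "v");
        }
        return table;
    }

    /** Returns one page; lastRowKey is the last rowkey of the previous page, or null for the first page. */
    static List<String> queryPage(NavigableMap<String, String> table, List<String> partitions,
                                  String lastRowKey, int pageSize) {
        List<String> page = new ArrayList<>();
        if (partitions.isEmpty()) {
            return page;
        }
        int index;
        String start;
        if (lastRowKey == null || lastRowKey.isEmpty()) {
            index = 0;                      // first page: start at the first partition
            start = partitions.get(0);
        } else {
            index = partitions.indexOf(lastRowKey.substring(0, PARTITION_LEN));
            if (index < 0) {
                return page;                // unknown partition
            }
            start = lastRowKey + "\0";      // smallest key strictly after the last row
        }
        while (index < partitions.size() && page.size() < pageSize) {
            String stop = partitions.get(index) + STOP_SUFFIX;
            // One "scan" of [start, stop) that stops as soon as the page is full
            for (String rowKey : table.subMap(start, true, stop, false).keySet()) {
                if (page.size() == pageSize) {
                    break;
                }
                page.add(rowKey);
            }
            index++;                        // page not full: continue with the next partition
            if (index < partitions.size()) {
                start = partitions.get(index);
            }
        }
        return page;
    }

    public static void main(String[] args) {
        NavigableMap<String, String> table = sampleTable();
        List<String> page1 = queryPage(table, PARTITIONS, null, 4);
        List<String> page2 = queryPage(table, PARTITIONS, page1.get(page1.size() - 1), 4);
        System.out.println(page1); // [001_a, 001_b, 001_c, 003_a]
        System.out.println(page2); // [003_b, 003_c, 003_d]
    }
}
```

Only the last rowkey of a page travels between front end and back end. Each request therefore costs one bounded scan per partition it visits, and empty partitions (like "002" here) are simply skipped.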

HbasePage

Holds the set of request parameters.

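import lombok.Data;

/**
 * Description: pagination request parameters
 * Date: 2019/10/26
 * Author: 尹忠政
 */
@Data
public class HbasePage {

    /** Page size */
    private int pageSize;

    /** Start rowkey (inclusive) */
    private String startKey;

    /** Stop rowkey (exclusive) */
    private String stopKey;

    /** Table name */
    private String tableName;

    /**
     * Describes the query; used to build the Redis key of the cached partition list.
     * Subclasses that carry extra query conditions should include them here.
     */
    public String queryToString() {
        return String.valueOf(tableName);
    }
}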

Partition

The partition object: a [startKey, endKey) rowkey range.

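import lombok.AllArgsConstructor;
import lombok.Data;
import org.apache.hadoop.hbase.util.Bytes;

import java.io.Serializable;

/**
 * Description: a rowkey partition, scanned as [startKey, endKey)
 * Date: 2019/10/25
 * Author: 尹忠政
 */
@Data
@AllArgsConstructor
public class Partition implements Serializable {

    // Instances are cached in Redis as serialized bytes, so pin the version
    private static final long serialVersionUID = 1L;

    /** Inclusive start key (the partition prefix) */
    byte[] startKey;

    /** Exclusive end key */
    byte[] endKey;

    @Override
    public String toString() {
        return "Partition{" +
                "startKey=" + Bytes.toString(startKey) +
                ", endKey=" + Bytes.toString(endKey) +
                '}';
    }
}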
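The partitions built in `HbasePageHelper.getPartitions` use prefix + `Constant.ROW_KEY_SPLITE` as their exclusive end key. That range covers every row of the partition only if, in every rowkey, the byte right after the prefix sorts below the separator. A layout-independent alternative, and the idea behind HBase's `Scan.setRowPrefixFilter`, is to increment the last byte of the prefix that is not 0xFF. A minimal JDK-only sketch follows (the class and method names are hypothetical):

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

/** Exclusive stop key for a prefix scan (hypothetical helper, JDK only). */
public class PrefixStopKey {

    /**
     * Smallest key greater than every key that starts with prefix:
     * drop trailing 0xFF bytes, then add one to the last remaining byte.
     * An empty result means "no upper bound" (scan to the end of the table).
     */
    static byte[] stopKeyForPrefix(byte[] prefix) {
        for (int i = prefix.length - 1; i >= 0; i--) {
            if (prefix[i] != (byte) 0xFF) {
                byte[] stop = Arrays.copyOf(prefix, i + 1);
                stop[i]++; // unsigned increment; cannot wrap because the byte was not 0xFF
                return stop;
            }
        }
        return new byte[0];
    }

    public static void main(String[] args) {
        byte[] stop = stopKeyForPrefix("001".getBytes(StandardCharsets.UTF_8));
        System.out.println(new String(stop, StandardCharsets.UTF_8)); // 002
    }
}
```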

HbaseQueryConsumSettingByPage

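A callback object that applies the caller's custom query settings and adds the paging settings (start/stop row and PageFilter).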

import com.tbl.yth.commonutil.bigdata.query.inter.IHbaseQueryConsumSetting;
import org.apache.commons.lang3.StringUtils;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.filter.FilterList;
import org.apache.hadoop.hbase.filter.PageFilter;
import org.apache.hadoop.hbase.util.Bytes;

/**
 * Description: wraps the caller's scan settings and adds the paging settings
 * Date: 2019/10/26
 * Author: 尹忠政
 */
public class HbaseQueryConsumSettingByPage implements IHbaseQueryConsumSetting {

    private final IHbaseQueryConsumSetting iHbaseQueryConsumSetting;

    private final HbasePage hbasePage;

    public HbaseQueryConsumSettingByPage(IHbaseQueryConsumSetting iHbaseQueryConsumSetting, HbasePage hbasePage) {
        this.iHbaseQueryConsumSetting = iHbaseQueryConsumSetting;
        this.hbasePage = hbasePage;
    }

    @Override
    public void setting(Scan scan, FilterList filterList) throws Exception {
        // Restrict the scan to [startKey, stopKey)
        if (!StringUtils.isEmpty(hbasePage.getStartKey())) {
            scan.setStartRow(Bytes.toBytes(hbasePage.getStartKey()));
        }
        if (!StringUtils.isEmpty(hbasePage.getStopKey())) {
            scan.setStopRow(Bytes.toBytes(hbasePage.getStopKey()));
        }
        // The caller's own filters
        if (iHbaseQueryConsumSetting != null) {
            iHbaseQueryConsumSetting.setting(scan, filterList);
        }
        // Page limit, added after the caller's filters (enforced per region, not per request)
        filterList.addFilter(new PageFilter(hbasePage.getPageSize()));
    }
}

HbasePageHelper

The paged-query class.

import com.tbl.yth.commonutil.bigdata.conf.Constant;
import com.tbl.yth.commonutil.bigdata.conf.PartitionEnum;
import com.tbl.yth.commonutil.bigdata.query.HbaseQueryHelper;
import com.tbl.yth.commonutil.bigdata.query.inter.IHbaseQueryConsumSetting;
import com.tbl.yth.commonutil.bigdata.query.inter.IHbaseReader;
import com.tbl.yth.commonutil.bigdata.query.model.HbaseBaseModel;
import com.tbl.yth.commonutil.bigdata.util.HbaseHelper;
import com.tbl.yth.commonutil.common.util.common.Closer;
import org.apache.commons.lang3.StringUtils;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.filter.FilterList;
import org.apache.hadoop.hbase.filter.FirstKeyOnlyFilter;
import org.apache.hadoop.hbase.filter.PageFilter;
import org.apache.hadoop.hbase.util.Bytes;
import redis.clients.jedis.Jedis;

import java.io.*;
import java.util.ArrayList;
import java.util.Base64;
import java.util.Iterator;
import java.util.List;

/**
 * Description: paged HBase queries, one partition at a time
 * Date: 2019/10/25
 * Author: 尹忠政
 */
public class HbasePageHelper {

    /**
     * Paged query over HBase; moves on to the next partition while the page is not full.
     *
     * @param hbasePage                paging parameters
     * @param _class                   class mapped to the HBase table
     * @param iHbaseQueryConsumSetting callback that adds the caller's filters
     * @param iHbaseReader             row reader
     * @return the rows of the requested page (empty on error)
     */
    public static <T extends HbaseBaseModel> List<T> queryByPage(HbasePage hbasePage, Class<T> _class, IHbaseQueryConsumSetting iHbaseQueryConsumSetting, IHbaseReader<T> iHbaseReader) {
        List<T> result = new ArrayList<>();
        try {
            List<Partition> partitions = PartitionEnum.getPartitions(PartitionEnum.p(hbasePage.getTableName()));
            if (partitions.isEmpty()) {
                return result;
            }
            HbaseQueryHelper<T> hbaseQueryHelper = HbaseQueryHelper.getInstance(_class);
            if (StringUtils.isEmpty(hbasePage.getStartKey())) {
                // First page: start with the first partition
                Partition partition = partitions.get(0);
                hbasePage.setStartKey(Bytes.toString(partition.getStartKey()));
                hbasePage.setStopKey(Bytes.toString(partition.getEndKey()));
            } else {
                // Next page: startKey is the last rowkey of the previous page; find its partition
                Partition partition = getPartition(partitions, hbasePage.getStartKey().substring(0, PartitionEnum.getPartitionLen(hbasePage.getTableName())));
                if (null == partition) {
                    return result;
                }
                // Append a 0x00 byte: the smallest key strictly after the last row, so that row is not returned again
                hbasePage.setStartKey(hbasePage.getStartKey() + "\0");
                hbasePage.setStopKey(Bytes.toString(partition.getEndKey()));
            }
            doQuery(partitions, result, hbaseQueryHelper, hbasePage, iHbaseQueryConsumSetting, iHbaseReader);
        } catch (Exception e) {
            e.printStackTrace();
        }
        return result;
    }

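    /**
     * Paged query restricted to a single partition; the scan never leaves it.
     *
     * @param partition partition prefix (the partition field)
     */
    public static <T extends HbaseBaseModel> List<T> queryByPage(HbasePage hbasePage, Class<T> _class, String partition, IHbaseQueryConsumSetting iHbaseQueryConsumSetting, IHbaseReader<T> iHbaseReader) {
        List<T> result = new ArrayList<>();
        try {
            if (StringUtils.isEmpty(hbasePage.getStartKey())) {
                hbasePage.setStartKey(partition);
            } else {
                // Skip the last row of the previous page
                hbasePage.setStartKey(hbasePage.getStartKey() + "\0");
            }
            hbasePage.setStopKey(partition + Constant.ROW_KEY_SPLITE);
            HbaseQueryHelper<T> hbaseQueryHelper = HbaseQueryHelper.getInstance(_class);
            result = hbaseQueryHelper.queryByPage(hbasePage.getTableName(), new HbaseQueryConsumSettingByPage(iHbaseQueryConsumSetting, hbasePage), iHbaseReader);
            // PageFilter limits rows per region; trim any overflow on the client
            if (result.size() > hbasePage.getPageSize()) {
                result = new ArrayList<>(result.subList(0, hbasePage.getPageSize()));
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        return result;
    }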

    /**
     * Query HBase recursively, partition by partition, until the page is full.
     *
     * @param partitions               partition list, in rowkey order
     * @param result                   accumulated result
     * @param hbaseQueryHelper         query helper instance
     * @param hbasePage                paging parameters (startKey, stopKey and pageSize are updated as partitions are consumed)
     * @param iHbaseQueryConsumSetting callback that adds the caller's filters
     * @param iHbaseReader             row reader
     */
    private static <T extends HbaseBaseModel> void doQuery(List<Partition> partitions, List<T> result, HbaseQueryHelper<T> hbaseQueryHelper, HbasePage hbasePage, IHbaseQueryConsumSetting iHbaseQueryConsumSetting, IHbaseReader<T> iHbaseReader) {
        int remaining = hbasePage.getPageSize();
        List<T> ts = hbaseQueryHelper.queryByPage(hbasePage.getTableName(), new HbaseQueryConsumSettingByPage(iHbaseQueryConsumSetting, hbasePage), iHbaseReader);
        // A partition can span several regions and PageFilter counts per region, so trim on the client
        if (ts.size() > remaining) {
            ts = ts.subList(0, remaining);
        }
        result.addAll(ts);
        if (ts.size() < remaining) {
            // Page not full: move start/stop to the next partition
            Partition partition = getNextPartition(partitions, hbasePage.getStopKey());
            if (null == partition) {
                return;
            }
            hbasePage.setStartKey(Bytes.toString(partition.getStartKey()));
            hbasePage.setStopKey(Bytes.toString(partition.getEndKey()));
            hbasePage.setPageSize(remaining - ts.size());
            doQuery(partitions, result, hbaseQueryHelper, hbasePage, iHbaseQueryConsumSetting, iHbaseReader);
        }
    }

    /**
     * Discover the partitions that currently hold data. FirstKeyOnlyFilter plus PageFilter(1)
     * returns at most one row per region; the prefix of each row becomes a partition.
     *
     * @param table        HBase table
     * @param filterList   caller's filters (FirstKeyOnlyFilter and PageFilter(1) are appended to it)
     * @param partitionLen length of the partition prefix in the rowkey
     * @return the partitions found
     * @throws IOException
     */
    private static List<Partition> getPartitions(Table table, FilterList filterList, int partitionLen) throws IOException {
        List<Partition> partitions = new ArrayList<>();
        ResultScanner scanner = null;
        try {
            Scan scan = new Scan();
            filterList.addFilter(new FirstKeyOnlyFilter());
            filterList.addFilter(new PageFilter(1));
            scan.setFilter(filterList);
            scanner = table.getScanner(scan);
            Iterator<Result> iterator = scanner.iterator();
            while (iterator.hasNext()) {
                Result next = iterator.next();
                String row = Bytes.toString(next.getRow());
                // The rowkey prefix is the partition number
                String substring = row.substring(0, partitionLen);
                byte[] startKey = Bytes.toBytes(substring);
                partitions.add(new Partition(startKey, Bytes.add(startKey, Bytes.toBytes(Constant.ROW_KEY_SPLITE))));
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            Closer.close(scanner);
        }
        return partitions;
    }

    private static String getRedisKey(String query) {
        return "tbl:yth:hbase:query:" + Base64.getEncoder().encodeToString(query.getBytes());
    }

    /**
     * Serialize an object to bytes.
     *
     * @param o object to serialize (must be Serializable)
     * @return the serialized bytes, or null on error
     */
    private static byte[] getObjectBytes(Object o) {
        ByteArrayOutputStream byteArrayOutputStream = null;
        ObjectOutputStream objectOutputStream = null;
        try {
            byteArrayOutputStream = new ByteArrayOutputStream();
            objectOutputStream = new ObjectOutputStream(byteArrayOutputStream);
            objectOutputStream.writeObject(o);
            // Flush so everything ObjectOutputStream buffered is in the byte array before reading it
            objectOutputStream.flush();
            return byteArrayOutputStream.toByteArray();
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            Closer.close(objectOutputStream);
        }
        return null;
    }

    /**
     * Deserialize an object from bytes.
     *
     * @param bytes serialized bytes
     * @return the object, or null on error
     */
    private static Object getObject(byte[] bytes) {
        ObjectInputStream objectInputStream = null;
        try {
            objectInputStream = new ObjectInputStream(new ByteArrayInputStream(bytes));
            return objectInputStream.readObject();
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            Closer.close(objectInputStream);
        }
        return null;
    }

    /**
     * Get the partition list from Redis; on a cache miss, discover it in HBase and cache it for 10 minutes.
     *
     * @param hbasePage  paging parameters (queryToString() is used to build the cache key)
     * @param tableName  table name
     * @param filterList caller's filters
     * @param jedis      Redis client
     * @return the partition list
     * @throws IOException
     */
    @SuppressWarnings("unchecked")
    private static synchronized List<Partition> getPartitionFromRedis(HbasePage hbasePage, String tableName, FilterList filterList, Jedis jedis) throws IOException {
        String queryRedisKey = getRedisKey(hbasePage.queryToString());
        List<Partition> partitions = null;
        byte[] bytes = jedis.get(Bytes.toBytes(queryRedisKey));
        if (bytes != null) {
            partitions = (List<Partition>) getObject(bytes);
        }
        if (null == partitions) {
            Table table = HbaseHelper.getTable(tableName);
            partitions = getPartitions(table, filterList, PartitionEnum.getPartitionLen(tableName));
            jedis.set(Bytes.toBytes(queryRedisKey), getObjectBytes(partitions));
            jedis.expire(Bytes.toBytes(queryRedisKey), 10 * 60);
        }
        return partitions;
    }

    /**
     * Find the partition whose start key equals the given partition prefix.
     *
     * @param list     partition list
     * @param startKey partition prefix
     * @return the matching partition, or null if there is none
     */
    public static Partition getPartition(List<Partition> list, String startKey) {
        for (Partition partition : list) {
            if (Bytes.toString(partition.getStartKey()).equals(startKey)) {
                return partition;
            }
        }
        return null;
    }

    /**
     * Get the partition that follows the one whose end key is stopKey.
     *
     * @param list    partition list, in rowkey order
     * @param stopKey end key of the current partition
     * @return the next partition, or null if there is none
     */
    public static Partition getNextPartition(List<Partition> list, String stopKey) {
        for (int i = 0; i < list.size(); i++) {
            Partition partition = list.get(i);
            if (Bytes.equals(partition.getEndKey(), Bytes.toBytes(stopKey))) {
                if (i < list.size() - 1) {
                    return list.get(i + 1);
                }
                return null;
            }
        }
        return null;
    }
}
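`getPartitionFromRedis` caches the partition list in Redis as Java-serialized bytes. The serialize/deserialize round trip can be checked on its own with only the JDK. In this sketch, `Range` is a hypothetical stand-in for `Partition`, and the class names are made up. It uses try-with-resources, so closing the `ObjectOutputStream` flushes it before the byte array is read, and the streams are closed without a helper such as `Closer`.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Serialize / deserialize a cached partition list (hypothetical names, JDK only). */
public class PartitionCacheCodec {

    /** Stand-in for Partition: [startKey, endKey). */
    static final class Range implements Serializable {
        private static final long serialVersionUID = 1L;
        final byte[] startKey;
        final byte[] endKey;

        Range(byte[] startKey, byte[] endKey) {
            this.startKey = startKey;
            this.endKey = endKey;
        }
    }

    static byte[] toBytes(Serializable value) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
            out.writeObject(value);
        } // close() flushes everything into buffer
        return buffer.toByteArray();
    }

    static Object fromBytes(byte[] bytes) throws IOException, ClassNotFoundException {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            return in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        ArrayList<Range> partitions = new ArrayList<>();
        partitions.add(new Range("001".getBytes(StandardCharsets.UTF_8), "001|".getBytes(StandardCharsets.UTF_8)));
        @SuppressWarnings("unchecked")
        List<Range> restored = (List<Range>) fromBytes(toBytes(partitions));
        System.out.println(new String(restored.get(0).endKey, StandardCharsets.UTF_8)); // 001|
        System.out.println(Arrays.equals(partitions.get(0).startKey, restored.get(0).startKey)); // true
    }
}
```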

HbaseQueryHelper

A general-purpose query class: it runs the query and maps the results onto objects.

import com.tbl.yth.commonutil.bigdata.batch.common.HbaseFieldHelper;
import com.tbl.yth.commonutil.bigdata.query.common.QueryField;
import com.tbl.yth.commonutil.bigdata.query.inter.IHbaseQueryConsumSetting;
import com.tbl.yth.commonutil.bigdata.query.inter.IHbaseReader;
import com.tbl.yth.commonutil.bigdata.query.model.HbaseBaseModel;
import com.tbl.yth.commonutil.bigdata.util.HbaseHelper;
import org.apache.commons.lang.StringUtils;
import org.apache.hadoop.hbase.client.*;
import org.apache.hadoop.hbase.filter.FilterList;
import org.apache.hadoop.hbase.filter.PageFilter;
import org.apache.hadoop.hbase.util.Bytes;

import java.util.*;
import java.util.concurrent.ConcurrentHashMap;

/**
 * @author 尹忠政
 * @describe HBase query helper
 * @time 2019/9/21
 *
 * Simple example:
 *
 * import com.tbl.bigdata.common.query.annotation.HbaseQuery;
 * import com.tbl.bigdata.common.query.annotation.HbaseQueryField;
 * import com.tbl.bigdata.common.query.model.HbaseBaseModel;
 *
 * @HbaseQuery
 * public class RhkkVo extends HbaseBaseModel {
 *     @HbaseQueryField(field = "capture_time")
 *     private String captureTime = "";
 *     private long timestamp;
 *     @HbaseQueryField(field = "device_id")
 *     private String deviceId = "";
 *     @HbaseQueryField(field = "device_type")
 *     private String deviceType;
 *     private String imei = "";
 *     private String value = "";
 *     private String locationId = "";
 *     private short netSubtype;
 *     private short netType;
 * }
 *
 * try {
 *     HbaseQueryHelper instance = HbaseQueryHelper.getInstance(RhkkVo.class);
 *     // null start/stop rowkeys: scan the whole table, PageFilter(10) per region
 *     List query = instance.query(Constant.TABLE_RHKK, null, null, 10, new IHbaseQueryConsumSetting() {
 *         @Override
 *         public void setting(Scan scan, FilterList filterList) throws Exception {
 *             Filter filter = new RowFilter(CompareFilter.CompareOp.EQUAL, new SubstringComparator("|1|"));
 *             filterList.addFilter(filter);
 *         }
 *     });
 *     System.out.println(query.size());
 * } catch (Exception e) {
 *     e.printStackTrace();
 * }
 *
 * Keep the helper as a global: create only one instance per mapped class (normally one per table),
 * so the field mapping is built once and queries are faster.
 */
public class HbaseQueryHelper<T extends HbaseBaseModel> {

    /** One helper per mapped class, keyed by class name */
    private static final ConcurrentHashMap<String, HbaseQueryHelper<?>> concurrentHashMap = new ConcurrentHashMap<>();

    private final List<QueryField> queryFields = new ArrayList<>();

    private final Set<String> cfs = new HashSet<>();

    private final Class<T> _class;

    private HbaseQueryHelper(Class<T> _class) {
        this._class = _class;
        // Recursively collect all private fields (fills queryFields and cfs)
        HbaseFieldHelper.makeQueryField(_class, queryFields, cfs);
    }

    @SuppressWarnings("unchecked")
    public static <T extends HbaseBaseModel> HbaseQueryHelper<T> getInstance(Class<T> _class) throws Exception {
        return (HbaseQueryHelper<T>) concurrentHashMap.computeIfAbsent(_class.getName(), s -> new HbaseQueryHelper<>(_class));
    }

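    /**
     * Paged query.
     *
     * @param tableName               table name
     * @param startRowKey             start rowkey (inclusive), may be empty
     * @param stopRowKey              stop rowkey (exclusive), may be empty
     * @param pageSize                page size (enforced per region by PageFilter)
     * @param hbaseQueryConsumSetting callback for custom scan settings
     * @return matching rows
     * @throws Exception
     */
    public List<T> query(String tableName, String startRowKey, String stopRowKey, long pageSize, IHbaseQueryConsumSetting hbaseQueryConsumSetting) throws Exception {
        return query(tableName, startRowKey, stopRowKey, pageSize, hbaseQueryConsumSetting, null);
    }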

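    /**
     * Scan query without a page size.
     *
     * @param tableName               table name
     * @param startRowKey             start rowkey (inclusive), may be empty
     * @param stopRowKey              stop rowkey (exclusive), may be empty
     * @param hbaseQueryConsumSetting callback for custom scan settings
     * @param iHbaseReader            row reader
     * @return matching rows
     * @throws Exception
     */
    public List<T> query(String tableName, String startRowKey, String stopRowKey, IHbaseQueryConsumSetting hbaseQueryConsumSetting, IHbaseReader<T> iHbaseReader) throws Exception {
        return query(tableName, startRowKey, stopRowKey, 0, hbaseQueryConsumSetting, iHbaseReader);
    }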

    /**
     * Query an HBase table.
     *
     * @param tableName               table name
     * @param startRowKey             start rowkey (inclusive), may be empty
     * @param stopRowKey              stop rowkey (exclusive), may be empty
     * @param pageSize                page size; 0 means no PageFilter
     * @param hbaseQueryConsumSetting callback for custom scan settings
     * @param iHbaseReader            row reader
     * @return matching rows (empty on error)
     */
    public List<T> query(String tableName, String startRowKey, String stopRowKey, long pageSize, IHbaseQueryConsumSetting hbaseQueryConsumSetting, IHbaseReader<T> iHbaseReader) {
        ResultScanner scanner = null;
        try {
            Table table = HbaseHelper.getTable(tableName);
            Scan scan = new Scan();
            FilterList filterList = new FilterList();
            // Start rowkey (inclusive)
            if (!StringUtils.isEmpty(startRowKey)) {
                scan.setStartRow(Bytes.toBytes(startRowKey));
            }
            // Stop rowkey (exclusive)
            if (!StringUtils.isEmpty(stopRowKey)) {
                scan.setStopRow(Bytes.toBytes(stopRowKey));
            }
            // Column families of the mapped class
            for (String cf : cfs) {
                scan.addFamily(Bytes.toBytes(cf));
            }
            if (null != hbaseQueryConsumSetting) {
                hbaseQueryConsumSetting.setting(scan, filterList);
            }
            // Page size
            if (pageSize > 0) {
                filterList.addFilter(new PageFilter(pageSize));
            }
            if (!filterList.getFilters().isEmpty()) {
                scan.setFilter(filterList);
            }
            scanner = table.getScanner(scan);
            return read(scanner, iHbaseReader);
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            if (null != scanner) {
                scanner.close();
            }
        }
        return new ArrayList<>();
    }

    /**
     * Query an HBase table; the paging settings (start/stop row, PageFilter) come from the callback,
     * e.g. HbaseQueryConsumSettingByPage.
     *
     * @param tableName               table name
     * @param hbaseQueryConsumSetting callback for scan settings
     * @param iHbaseReader            row reader
     * @return matching rows (empty on error)
     */
    public List<T> queryByPage(String tableName, IHbaseQueryConsumSetting hbaseQueryConsumSetting, IHbaseReader<T> iHbaseReader) {
        ResultScanner scanner = null;
        try {
            Table table = HbaseHelper.getTable(tableName);
            Scan scan = new Scan();
            FilterList filterList = new FilterList();
            // Column families of the mapped class
            for (String cf : cfs) {
                scan.addFamily(Bytes.toBytes(cf));
            }
            if (null != hbaseQueryConsumSetting) {
                hbaseQueryConsumSetting.setting(scan, filterList);
            }
            if (!filterList.getFilters().isEmpty()) {
                scan.setFilter(filterList);
            }
            scanner = table.getScanner(scan);
            return read(scanner, iHbaseReader);
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            if (null != scanner) {
                scanner.close();
            }
        }
        return new ArrayList<>();
    }

    /**
     * Batch Get by rowkey.
     */
    public List<T> query(String tableName, List<String> rks, IHbaseReader<T> iHbaseReader) {
        List<T> ts = new ArrayList<>();
        try {
            Table table = HbaseHelper.getTable(tableName);
            List<Get> gets = new ArrayList<>();
            for (String rk : rks) {
                Get get = new Get(Bytes.toBytes(rk));
                // Column families of the mapped class
                for (String cf : cfs) {
                    get.addFamily(Bytes.toBytes(cf));
                }
                gets.add(get);
            }
            Result[] results = table.get(gets);
            for (Result result : results) {
                // Skip rowkeys that do not exist
                if (null == result.getRow()) {
                    continue;
                }
                T t = _class.newInstance();
                t.setRowKey(Bytes.toString(result.getRow()));
                for (QueryField queryField : queryFields) {
                    queryField.setValue(result, t);
                }
                if (null != iHbaseReader) {
                    iHbaseReader.reader(result, t);
                }
                ts.add(t);
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        return ts;
    }

    private List<T> read(ResultScanner scanner, IHbaseReader<T> iHbaseReader) throws IllegalAccessException, InstantiationException {
        List<T> ts = new ArrayList<>();
        Iterator<Result> iterator = scanner.iterator();
        while (iterator.hasNext()) {
            Result next = iterator.next();
            T t = _class.newInstance();
            t.setRowKey(Bytes.toString(next.getRow()));
            // Map the annotated columns onto the object
            for (QueryField queryField : queryFields) {
                queryField.setValue(next, t);
            }
            // Optional custom reader for anything the annotations do not cover
            if (null != iHbaseReader) {
                iHbaseReader.reader(next, t);
            }
            // Always collect the row: callers such as the paging code count the returned rows
            ts.add(t);
        }
        return ts;
    }
}

Summary

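This approach works around the limitation of PageFilter. Its precondition is that a startKey and a stopKey are always set. Given that, the rows returned to the client in one request mostly come from a single partition (region). Design the rowKey rules to fit the business, and query efficiency improves while the business requirements are still met.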
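One possible way to "adjust the rowKey rules" is sketched below. The layout is hypothetical, not the author's:

- A fixed-width bucket number derived from the entity id goes first, so all rows of one entity share a partition and a page rarely has to cross partitions.
- A reversed timestamp after the id makes the newest rows come first in a forward scan.

The bucket count, field order and `_` separator are all assumptions, and `epochMillis` is assumed to be non-negative.

```java
/** Hypothetical rowkey layout: bucket_entityId_reversedTimestamp. */
public class RowKeyDesign {

    static final String SEPARATOR = "_"; // assumed; sorts below the "|" used for stop keys

    /**
     * @param bucketCount number of partitions; must fit in bucketWidth digits
     * @param bucketWidth fixed width of the partition prefix (the partition length)
     */
    static String rowKey(String entityId, long epochMillis, int bucketCount, int bucketWidth) {
        int bucket = Math.floorMod(entityId.hashCode(), bucketCount);
        String prefix = String.format("%0" + bucketWidth + "d", bucket);
        // Newer rows get smaller values, so a forward scan returns them first
        long reversed = Long.MAX_VALUE - epochMillis;
        return prefix + SEPARATOR + entityId + SEPARATOR + String.format("%019d", reversed);
    }

    public static void main(String[] args) {
        String older = rowKey("device-42", 1_000L, 10, 3);
        String newer = rowKey("device-42", 2_000L, 10, 3);
        System.out.println(older.substring(0, 3).equals(newer.substring(0, 3))); // true: same partition
        System.out.println(newer.compareTo(older) < 0);                          // true: newer sorts first
    }
}
```

The trade-off is that a single hot entity still lands in a single partition, so the bucket count should be chosen with the expected data skew in mind.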