Notes: Coursera Machine Learning

These are my notes for the Machine Learning course on Coursera. I will keep updating this post.

------------------------------a not-so-fancy divider-----------------------------------------

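* A classmate shared a link that has this course's videos, slides (ppt) and PDFs.

* Since the slides already contain everything I was writing down, I'll just post the link and won't write this part up any further.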

* https://class.coursera.org/ml-005/lecture

----------------------------------------------------------------------------------------------

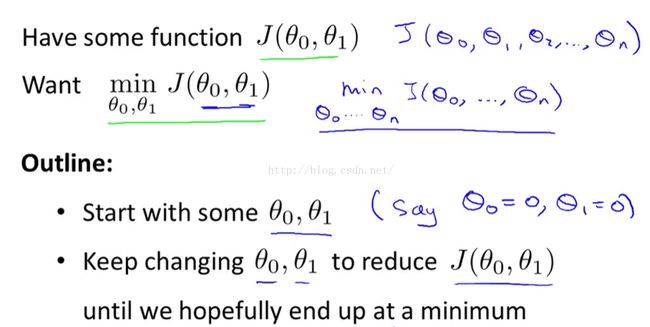

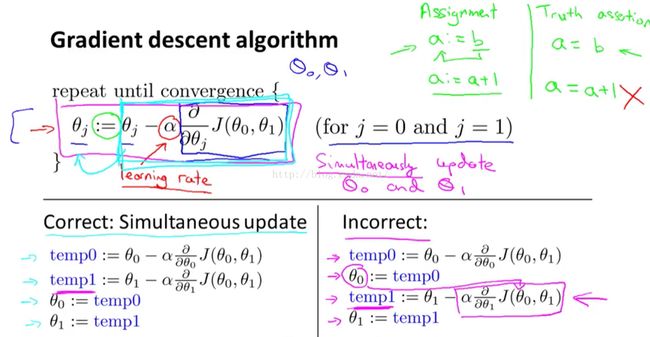

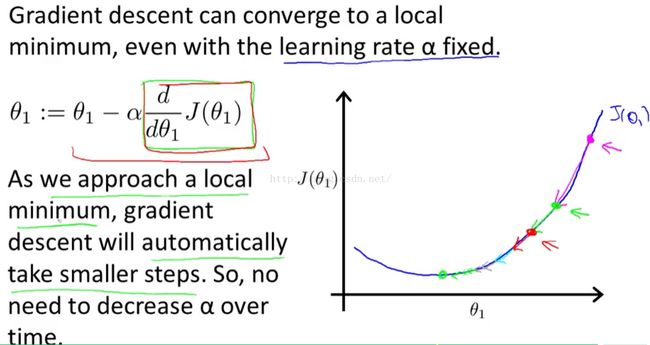

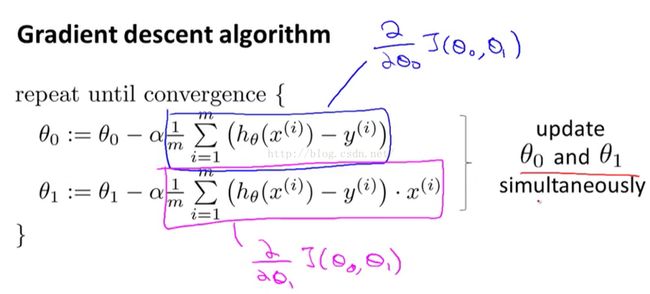

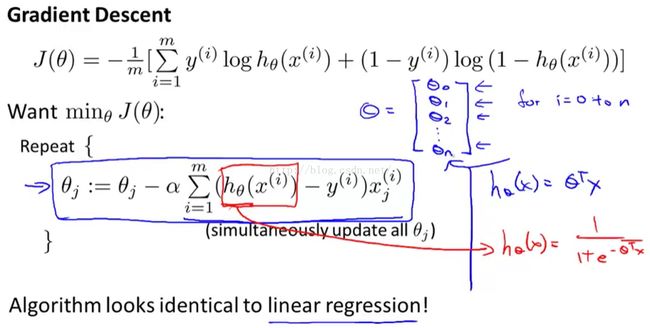

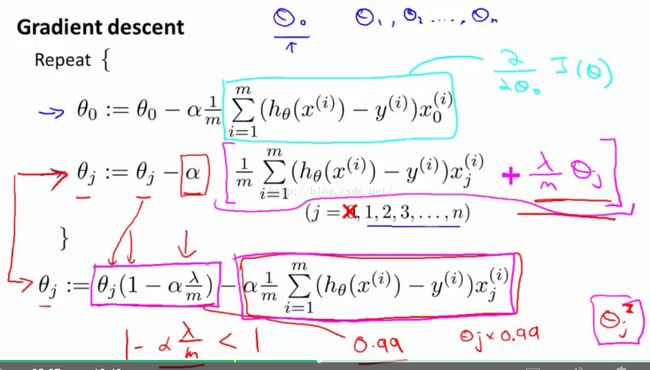

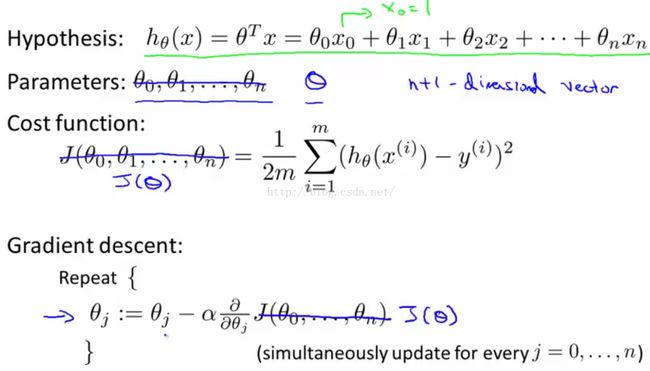

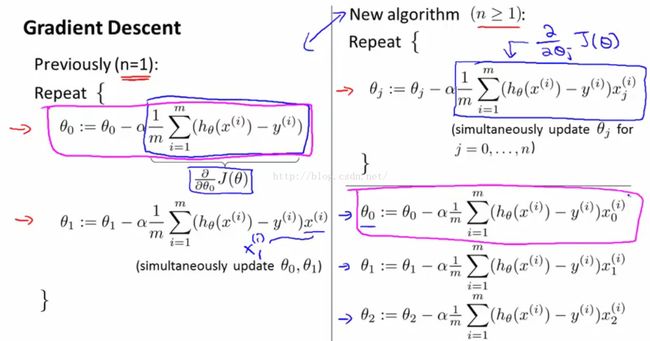

1. Gradient descent

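- Be careful of local optima: depending on where it starts, gradient descent can converge to a local minimum instead of the global one.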
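A minimal sketch of the local-optimum issue, assuming a made-up one-dimensional non-convex function f(x) = x⁴ - 3x² + x (not from the course) and the plain update x := x - α·f'(x). The function names and the step size are my own choices for illustration.

```python
def f(x):
    """Toy non-convex function with two local minima (chosen for illustration)."""
    return x**4 - 3 * x**2 + x


def df(x):
    """Derivative f'(x)."""
    return 4 * x**3 - 6 * x + 1


def gradient_descent(x0, alpha=0.01, iterations=1000):
    """Repeat x := x - alpha * f'(x), starting from x0."""
    x = x0
    for _ in range(iterations):
        x = x - alpha * df(x)
    return x


left = gradient_descent(-2.0)
right = gradient_descent(2.0)
print(f"start -2.0 -> x = {left:.3f}, f(x) = {f(left):.3f}")
print(f"start  2.0 -> x = {right:.3f}, f(x) = {f(right):.3f}")
```

The run starting at -2.0 settles near x ≈ -1.30 (the global minimum), while the run starting at 2.0 stops near x ≈ 1.13, a local minimum with a larger f(x). Same algorithm, same step size; only the starting point differs.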

-------------------------------------------***********************************---------------------------------------------------------------

-------------------------------------------------*******************************--------------------------------------------

2. Linear Algebra

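3. Multivariate Linear Regression

The key idea is to use vectors and matrices: with x_0 = 1, the hypothesis becomes h_θ(x) = θ^T x = θ_0 x_0 + θ_1 x_1 + ... + θ_n x_n.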
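A minimal pure-Python sketch of the vectorized hypothesis, the squared-error cost J(θ) = 1/(2m) Σ (h_θ(x^(i)) - y^(i))², and a simultaneous gradient-descent update of all θ_j. The function names and the toy data are hypothetical; plain lists stand in for vectors and matrices so the example needs no libraries.

```python
def hypothesis(theta, x):
    """h_theta(x) = theta^T x, where x already includes x_0 = 1."""
    return sum(t * xj for t, xj in zip(theta, x))


def cost(theta, X, y):
    """J(theta) = 1/(2m) * sum((h_theta(x^(i)) - y^(i))^2)."""
    m = len(y)
    return sum((hypothesis(theta, xi) - yi) ** 2 for xi, yi in zip(X, y)) / (2 * m)


def gradient_step(theta, X, y, alpha):
    """Update every theta_j at once, using errors computed with the old theta."""
    m = len(y)
    errors = [hypothesis(theta, xi) - yi for xi, yi in zip(X, y)]
    return [
        tj - alpha / m * sum(e * xi[j] for e, xi in zip(errors, X))
        for j, tj in enumerate(theta)
    ]


# Made-up data generated from y = 1 + 2*x1 + 3*x2; each row starts with x_0 = 1.
X = [[1, 0, 0], [1, 1, 0], [1, 0, 1], [1, 1, 1]]
y = [1, 3, 4, 6]
theta = [0.0, 0.0, 0.0]
for _ in range(2000):
    theta = gradient_step(theta, X, y, alpha=0.1)
print(theta, cost(theta, X, y))
```

Because the toy data fit a linear model exactly, θ should end up very close to [1, 2, 3] and the cost close to 0.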

---------------------#####################-------------------------------------------------------

------------------------------**************************-------------------------------

---------------------------------------******************-------------------------------------------

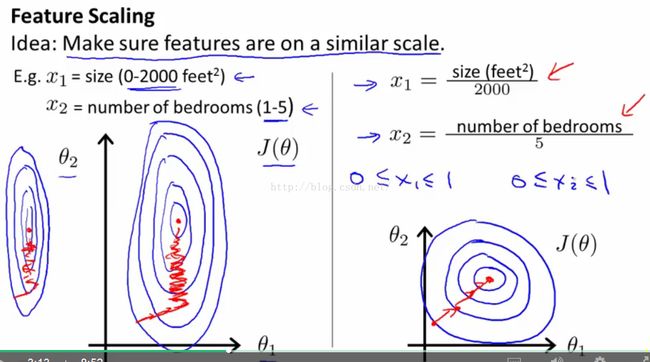

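Normalization (feature scaling)

Get every feature into approximately the range -1 <= x_i <= 1, e.g. with mean normalization x_i := (x_i - μ_i) / s_i, where μ_i is the feature's mean and s_i its range (max - min) or standard deviation.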
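A minimal sketch of mean normalization for one feature, assuming s_i = max - min (the standard deviation works as well). The function name is hypothetical and the sample house sizes are only example values.

```python
def scale_feature(values):
    """Mean normalization: (x - mean) / (max - min)."""
    mu = sum(values) / len(values)
    s = max(values) - min(values)
    if s == 0:
        # Constant feature: nothing to scale, so map every value to 0.
        return [0.0 for _ in values]
    return [(v - mu) / s for v in values]


sizes = [2104, 1416, 1534, 852]  # house sizes in square feet (example values)
scaled = scale_feature(sizes)
print(scaled)
```

After scaling, the values have mean 0 and all lie within [-1, 1]. Remember to store μ_i and s_i so that new inputs are scaled the same way at prediction time.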

About the learning rate α:

If gradient descent is not working (for example, J(θ) increases or oscillates), use a smaller learning rate.

For a sufficiently small learning rate, the cost function J(θ) should decrease on every iteration.

But if the learning rate is too small, gradient descent can be slow to converge.
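A small sketch of the three regimes, assuming the toy cost J(θ) = θ² (gradient 2θ) instead of a real data set. The function name `run` and the three α values are arbitrary choices for illustration.

```python
def run(alpha, theta=1.0, iterations=10):
    """Gradient descent on J(theta) = theta^2; return J after each step."""
    history = []
    for _ in range(iterations):
        theta = theta - alpha * 2 * theta  # theta := theta - alpha * dJ/dtheta
        history.append(theta ** 2)
    return history


for alpha in (0.001, 0.1, 1.1):
    costs = run(alpha)
    print(f"alpha={alpha}: J after 1 step = {costs[0]:.4f}, after 10 steps = {costs[-1]:.4f}")
```

With α = 0.001 the cost decreases but barely moves, with α = 0.1 it drops quickly, and with α = 1.1 each step overshoots the minimum so J grows on every iteration. Plotting J(θ) against the iteration number is a cheap way to spot which case you are in.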

Choosing features is an art: you can combine existing features, or add polynomial terms such as x², x³ (polynomial regression).
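A minimal sketch of turning one feature into polynomial features. The helper name is hypothetical. It also shows why feature scaling matters here: the ranges of x, x² and x³ differ by orders of magnitude.

```python
def polynomial_features(x, degree):
    """Map a single feature x to [x, x^2, ..., x^degree]."""
    return [x ** k for k in range(1, degree + 1)]


# A feature value of 1000 becomes 1e3, 1e6 and 1e9, so scale these before gradient descent.
print(polynomial_features(1000.0, 3))
```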

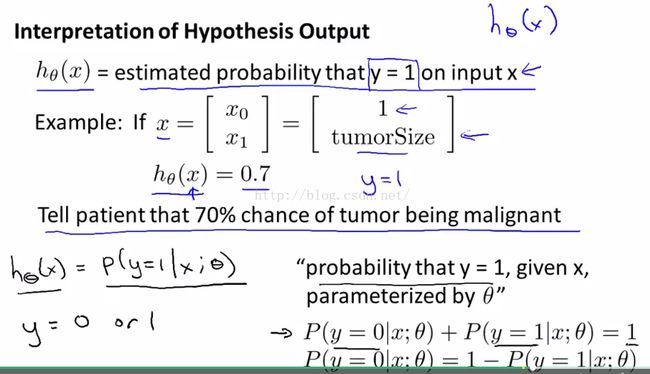

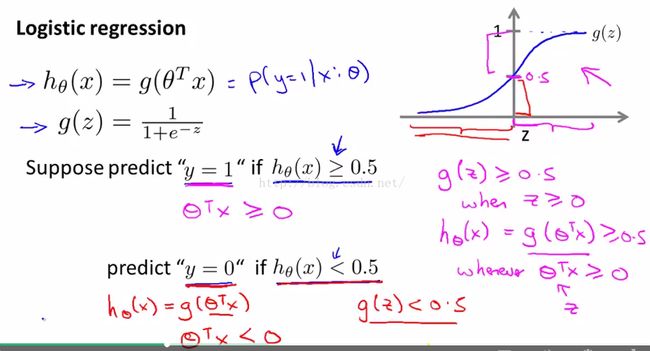

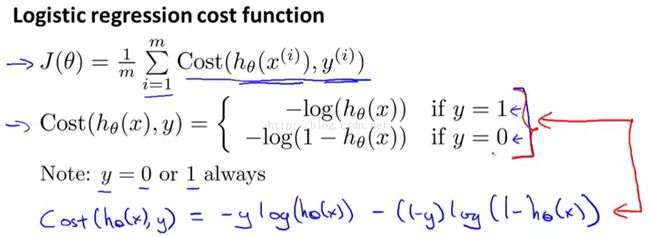

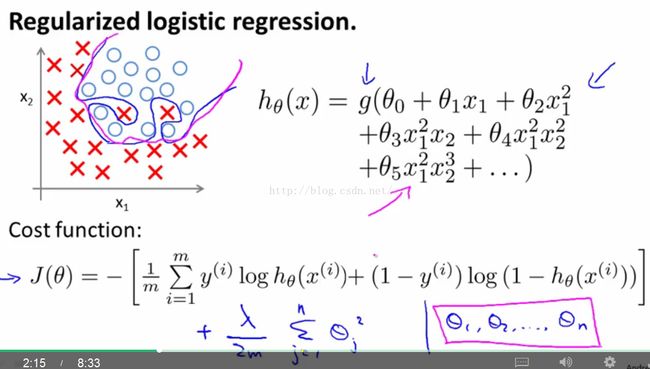

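The cost function for logistic regression is quite different from the one for linear regression: with the sigmoid hypothesis, squared error would make J(θ) non-convex, so it uses Cost(h_θ(x), y) = -log(h_θ(x)) if y = 1 and -log(1 - h_θ(x)) if y = 0.

This trick for handling the cost function, writing both cases as the single expression -y log(h_θ(x)) - (1 - y) log(1 - h_θ(x)), is used very often.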
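A minimal pure-Python sketch of the combined logistic regression cost, J(θ) = -1/m Σ [y log h_θ(x) + (1 - y) log(1 - h_θ(x))]. Function names and the toy data are hypothetical; a production version would also guard against log(0) when h saturates at exactly 0 or 1.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logistic_cost(theta, X, y):
    """J(theta) = -1/m * sum(y*log(h) + (1-y)*log(1-h)), with h = sigmoid(theta^T x)."""
    m = len(y)
    total = 0.0
    for xi, yi in zip(X, y):
        h = sigmoid(sum(t * xj for t, xj in zip(theta, xi)))
        # Only one of the two terms is non-zero for y in {0, 1}.
        total += -yi * math.log(h) - (1 - yi) * math.log(1 - h)
    return total / m


X = [[1, 0.5], [1, 2.0], [1, -1.0]]  # toy data, first column is x_0 = 1
y = [1, 1, 0]
print(logistic_cost([0.0, 0.0], X, y))  # h = 0.5 for every example, so J = log(2)
print(logistic_cost([0.0, 2.0], X, y))  # parameters that fit the labels better give a lower cost
```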

---------------------------------*****************-------------------------------------------

---------------------------------********************---------------------------------

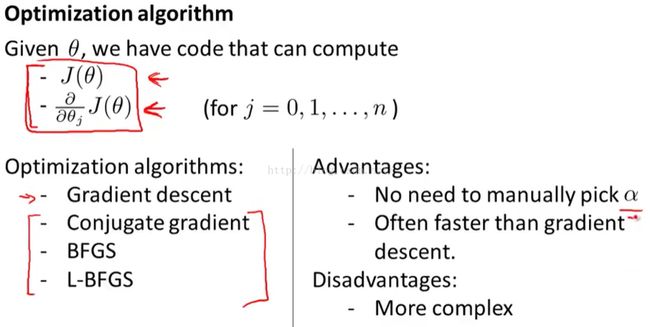

When dealing with large problems, these algorithms are often much faster than gradient descent.

--------------------------------------------*******************---------------------------------------

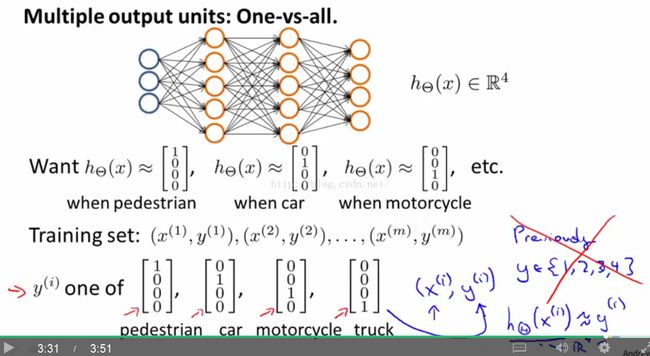

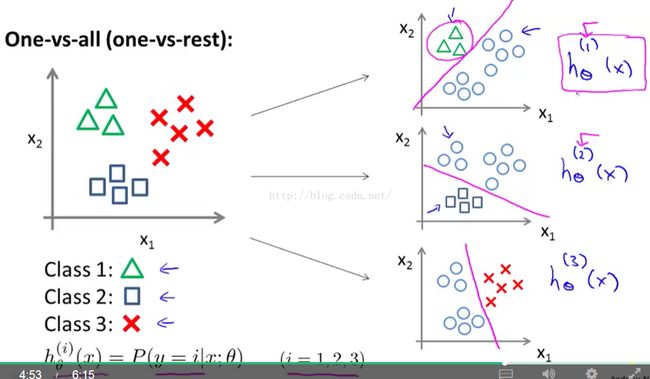

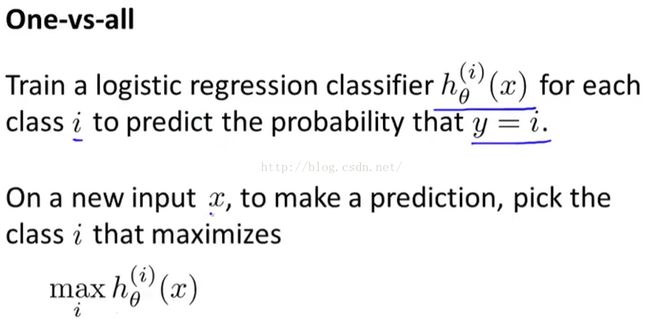

Multiclass Classification
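The course handles multiclass classification with one-vs-all: train one logistic regression classifier per class, then predict the class whose classifier outputs the highest h_θ(x). A minimal sketch of the prediction step follows; the parameter values are hypothetical stand-ins for trained classifiers.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_one_vs_all(all_theta, x):
    """all_theta[k] holds the parameters of the 'class k vs. the rest' classifier.
    Return the index of the classifier with the highest h_theta(x)."""
    scores = [sigmoid(sum(t * xj for t, xj in zip(theta, x))) for theta in all_theta]
    return max(range(len(scores)), key=lambda k: scores[k])


# Hypothetical trained parameters for 3 classes (x_0 = 1 plus one feature x_1).
all_theta = [[2.0, -3.0], [0.0, 0.0], [-4.0, 3.0]]
print(predict_one_vs_all(all_theta, [1, -1.0]))  # -> 0
print(predict_one_vs_all(all_theta, [1, 1.2]))   # -> 1
print(predict_one_vs_all(all_theta, [1, 2.0]))   # -> 2
```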

--------------------------------------------***************-----------------------------------

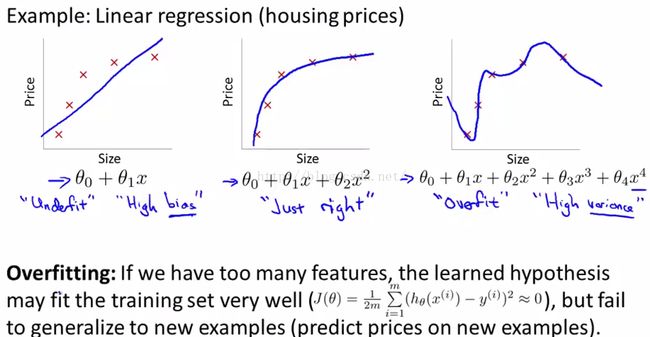

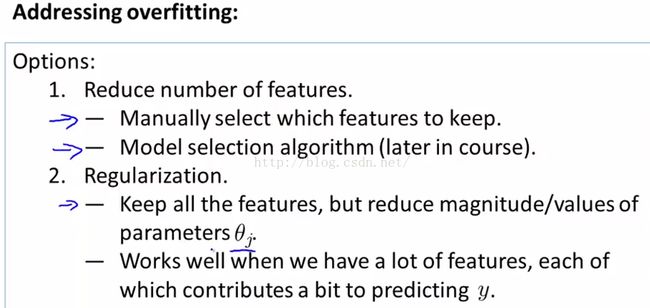

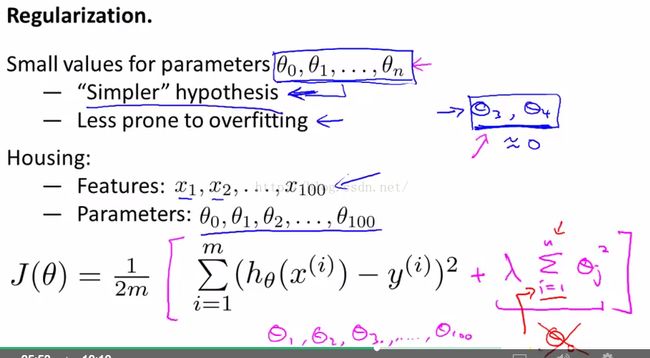

5. Regularization

Regularization can help to reduce overfitting.

-----------------------------------------------------------------*********************************-----------------------------------------------------

In a real task, it's hard to judge which features are useful, so we shrink all parameters θ_1, ..., θ_n and, by convention, leave θ_0 unpenalized (in practice, including θ_0 or not makes little difference).
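A minimal sketch of the regularized linear regression cost, J(θ) = 1/(2m) [Σ (h_θ(x^(i)) - y^(i))² + λ Σ_{j=1..n} θ_j²], where the sum in the penalty starts at j = 1 so θ_0 is not shrunk. The function name and toy data are hypothetical.

```python
def regularized_cost(theta, X, y, lam):
    """Squared-error cost plus lam * sum(theta_j^2) for j >= 1, all divided by 2m."""
    m = len(y)
    squared_error = sum(
        (sum(t * xj for t, xj in zip(theta, xi)) - yi) ** 2 for xi, yi in zip(X, y)
    )
    penalty = lam * sum(t ** 2 for t in theta[1:])  # skip theta_0
    return (squared_error + penalty) / (2 * m)


X = [[1, 1.0], [1, 2.0]]  # first column is x_0 = 1
y = [2.0, 3.0]
print(regularized_cost([1.0, 1.0], X, y, lam=0.0))  # perfect fit -> 0.0
print(regularized_cost([1.0, 1.0], X, y, lam=2.0))  # only the penalty remains: 2 * 1^2 / 4 = 0.5
```

A larger λ pushes θ_1, ..., θ_n toward 0 and gives a smoother hypothesis; too large a λ leads to underfitting.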

----------------------------------------------***********************------------------------------------------

-----------------------------------------------******************************----------------------------------------------------------

Regularized Logistic Regression
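A minimal sketch of one gradient-descent step for regularized logistic regression: θ_0 := θ_0 - α (1/m) Σ (h_θ(x^(i)) - y^(i)) x_0^(i), and for j >= 1, θ_j := θ_j - α [(1/m) Σ (h_θ(x^(i)) - y^(i)) x_j^(i) + (λ/m) θ_j]. The function name and the toy data are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def regularized_logistic_step(theta, X, y, alpha, lam):
    """One simultaneous update of all theta_j; theta_0 is not regularized."""
    m = len(y)
    errors = [sigmoid(sum(t * xj for t, xj in zip(theta, xi))) - yi for xi, yi in zip(X, y)]
    new_theta = []
    for j, tj in enumerate(theta):
        grad = sum(e * xi[j] for e, xi in zip(errors, X)) / m
        if j > 0:
            grad += lam / m * tj  # regularization term, skipped for j = 0
        new_theta.append(tj - alpha * grad)
    return new_theta


# Toy, linearly separable data (first column is x_0 = 1).
X = [[1, -2.0], [1, -1.0], [1, 1.0], [1, 2.0]]
y = [0, 0, 1, 1]
theta = [0.0, 0.0]
for _ in range(500):
    theta = regularized_logistic_step(theta, X, y, alpha=0.5, lam=1.0)
print(theta)
```

The λ term only changes θ_1, ..., θ_n; with the same errors, θ_0 gets exactly the same update as in unregularized logistic regression.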

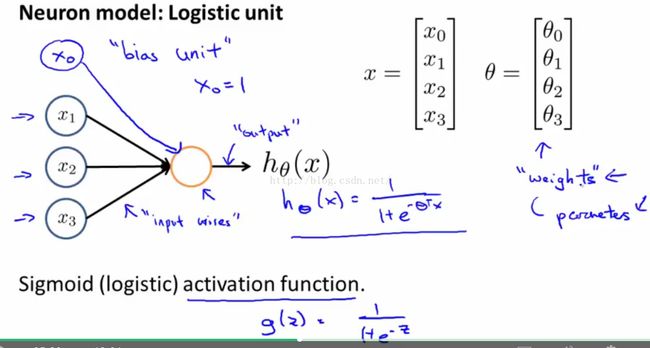

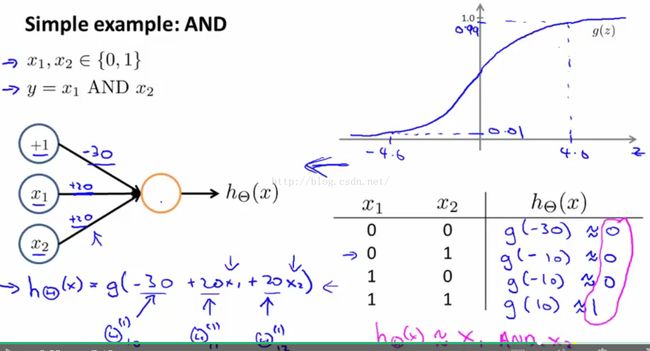

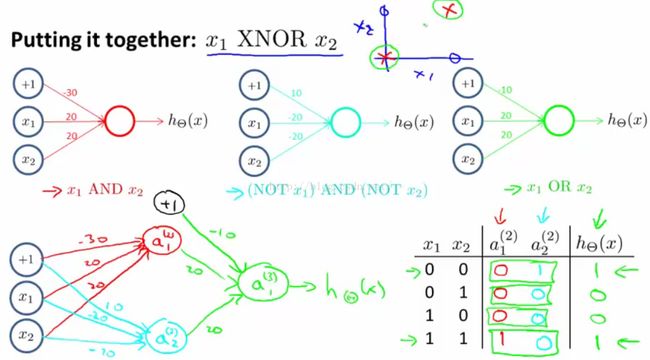

6. Neural Networks

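Finally we get to NNs! With deep learning so hot right now, neural networks are enjoying a revival. In this course, recorded in 2011, Andrew Ng already showed how much weight he gave to NNs and mentioned that he was doing research in this area himself. His later work, including Google Brain, and what he has achieved since are there for everyone to see.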

-----------------------------------------********************************------------------------------------------------------------------

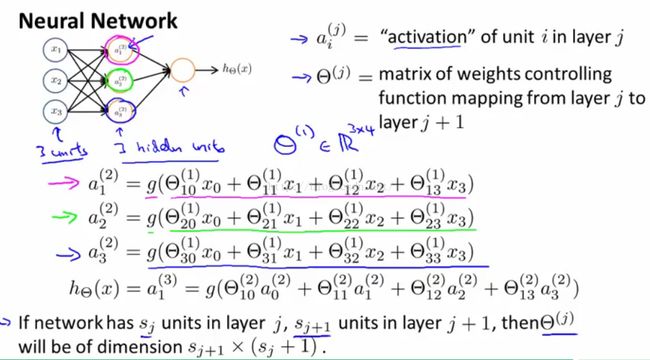

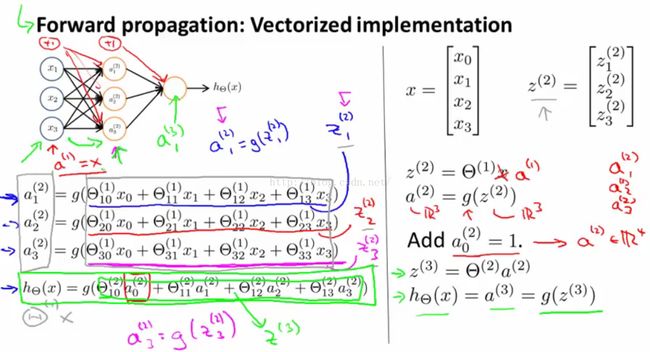

This picture shows the vectorized implementation of forward propagation.
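A minimal pure-Python sketch of forward propagation, layer by layer: add the bias unit a_0 = 1, compute z = Θ·a, then apply the sigmoid g(z). Plain lists stand in for the matrices; the function names and the weights in the usage example are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(Theta, a):
    """Next layer's activations: g(Theta * [1; a]).
    Theta has one row per unit in the next layer; column 0 multiplies the bias unit."""
    a_with_bias = [1.0] + list(a)
    return [sigmoid(sum(w * v for w, v in zip(row, a_with_bias))) for row in Theta]


def forward_propagate(Thetas, x):
    """Apply each weight matrix in turn, starting from the input x."""
    a = list(x)
    for Theta in Thetas:
        a = layer_forward(Theta, a)
    return a


# Hypothetical weights: 2 inputs -> 2 hidden units -> 1 output unit.
Theta1 = [[0.1, 0.4, -0.3], [-0.2, 0.5, 0.2]]
Theta2 = [[0.3, -0.6, 0.8]]
print(forward_propagate([Theta1, Theta2], [1.0, 2.0]))
```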

---------------------------------------------------------********************************-----------------------------------------------------------------

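Neural networks learn their own features: the hidden layers compute new features from the inputs, and the output layer then works like logistic regression on those learned features.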

----------------------------------------------***********************--------------------------------------------

-------------------------------------------************************-------------------------------------

Hidden layers can be combined cleverly to compute complex functions, e.g. XNOR built from AND, (NOT x1) AND (NOT x2), and OR units.
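A minimal sketch of the XNOR example: two hidden sigmoid units compute x1 AND x2 and (NOT x1) AND (NOT x2), and the output unit ORs them together. The weights follow the lecture's example as I recall it; any sufficiently large weights with the same signs would work. The helper names are my own.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def unit(weights, inputs):
    """A single sigmoid unit; weights[0] is the weight of the bias unit."""
    return sigmoid(weights[0] + sum(w * x for w, x in zip(weights[1:], inputs)))


AND = [-30, 20, 20]
NOR = [10, -20, -20]  # (NOT x1) AND (NOT x2)
OR = [-10, 20, 20]


def xnor(x1, x2):
    a1 = unit(AND, [x1, x2])   # hidden unit 1
    a2 = unit(NOR, [x1, x2])   # hidden unit 2
    return unit(OR, [a1, a2])  # output unit


for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, round(xnor(x1, x2)))
```

No single sigmoid unit can compute XNOR, since it is not linearly separable; the hidden layer builds the intermediate features that make it easy for the output unit.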

----------------------------------------------*****************---------------------------------------