Neural Network Algorithms: ResNet for Handwritten Digit Recognition

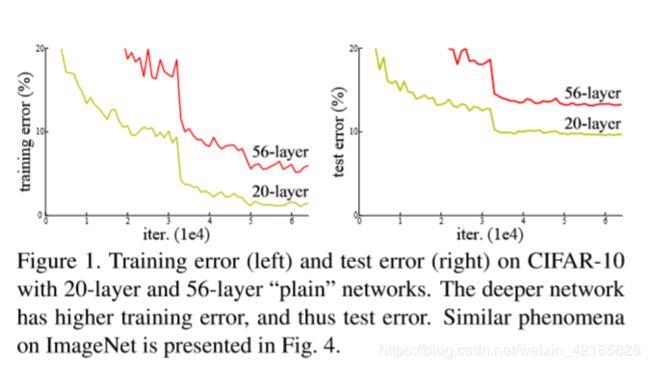

Dr. Kaiming He proposed residual learning in the ResNet paper to address the difficulty of training deep neural networks.

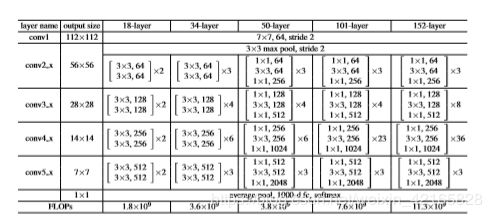

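The figure above is the paper's illustration of why conventional (plain) networks are hard to train: as the network gets deeper, the training error goes up instead of down. He therefore proposed residual learning: rather than fitting the full target mapping directly, a stack of layers learns only the difference between the target mapping and its own input.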

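He uses the following formulation, in which y - x is the residual:

$$ y = F(x, \{W_i\}) + x $$

Here x and y are the input and output of a block, and F(x, {W_i}) is the residual mapping learned by the stacked layers with weights W_i.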
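As a minimal sketch of the idea that a block outputs its input plus a learned residual, y = F(x) + x, the toy code below uses plain Python lists. The names `residual_block`, `zero_branch` and `small_branch` are made up for illustration and are not from the paper or from Keras.

```python
def residual_block(x, f):
    """Return y = F(x) + x, where f is a callable standing in for F."""
    fx = f(x)
    if len(fx) != len(x):
        raise ValueError("F(x) must have the same shape as x for an identity shortcut")
    return [fi + xi for fi, xi in zip(fx, x)]


def zero_branch(x):
    # Hypothetical branch whose weights are all zero: F(x) = 0 everywhere.
    return [0.0 for _ in x]


def small_branch(x):
    # Hypothetical branch that learns a small correction on top of the input.
    return [0.1 * xi for xi in x]


x = [1.0, -2.0, 3.0]
y_identity = residual_block(x, zero_branch)    # equals x: the block is an identity mapping
y_corrected = residual_block(x, small_branch)  # x plus a 10% correction
residual = [yi - xi for yi, xi in zip(y_corrected, x)]  # recovers F(x) = y - x
```

The point of the sketch is that a block only has to push F towards zero to behave like an identity mapping, which is an easier target than learning the identity from scratch.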

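The network trained on CIFAR-10 is the rightmost one in the figure below. Its skip connections make vanishing gradients much less likely during backpropagation.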
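To make the gradient argument concrete, here is a toy scalar sketch. It assumes every layer's branch F has the same small derivative (0.05, an arbitrary value chosen for illustration) and compares the chain-rule product for a plain stack with the product for a stack of residual layers, where each layer's derivative is F'(x) + 1 because of the shortcut.

```python
def backprop_gain(local_derivatives, skip):
    """Multiply per-layer derivatives along the chain rule.

    With skip=True each layer is y = F(x) + x, so its derivative is F'(x) + 1.
    With skip=False each layer is y = F(x), so its derivative is F'(x).
    """
    gain = 1.0
    for d in local_derivatives:
        gain *= (d + 1.0) if skip else d
    return gain


depth = 20
branch_derivatives = [0.05] * depth  # assumed small derivative of every branch F

plain_gain = backprop_gain(branch_derivatives, skip=False)
residual_gain = backprop_gain(branch_derivatives, skip=True)
print(f"plain: {plain_gain:.3e}, residual: {residual_gain:.3f}")
```

In the plain stack the product collapses towards zero, while the "+ 1" contributed by each shortcut keeps it away from zero. Real networks are not scalar chains, so this only illustrates the mechanism.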

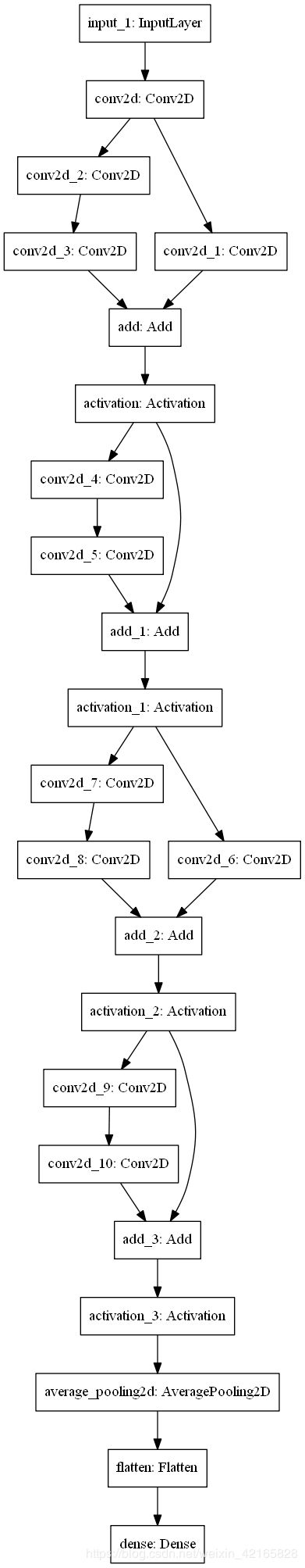

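Drawing on a few papers, I implemented a similar network for the MNIST dataset and got comparable results. MNIST needs almost no pooling, since each sample is only 28*28; the model here uses a single average-pooling layer at the very end. I trained it with Keras, reached a final test accuracy of about 0.9906, and saved the model as an h5 file. The full training code is given below. The recogonize module it imports (spelled as in the code) uses the trained model to recognize handwritten digits and has little to do with deep learning itself. The network structure can be exported from Keras. Calling

keras.utils.plot_model(self.model,to_file='mnist_res.png')

renders the model structure as an image.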
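To see why so little pooling is needed, the spatial size of the feature maps can be traced by hand. The sketch below assumes the layer order used in the training script in this post (a stride-1 stem convolution, four residual blocks with strides 2, 1, 2, 1, then a 7x7 average pool) and the usual Keras size rules: ceil(size / stride) for padding='same', and floor((size - pool) / pool) + 1 for non-overlapping pooling. The helper names are made up for this example.

```python
import math


def same_conv_size(size, stride):
    """Spatial size after a convolution with padding='same'."""
    return math.ceil(size / stride)


def valid_pool_size(size, pool):
    """Spatial size after non-overlapping pooling with the default 'valid' padding."""
    return (size - pool) // pool + 1


size = 28
trace = [size]
size = same_conv_size(size, 1)   # 7x7 stem convolution, stride 1
trace.append(size)
for stride in (2, 1, 2, 1):      # strides of the four residual blocks, in order
    size = same_conv_size(size, stride)
    trace.append(size)
size = valid_pool_size(size, 7)  # AveragePooling2D(pool_size=(7, 7))
trace.append(size)
print(trace)  # [28, 28, 14, 14, 7, 7, 1]
```

Two stride-2 blocks already take 28 down to 7, so a single 7x7 average pool is enough to reduce each channel to one value before the dense layer.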

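'''Train the MNIST model and write it out as an h5 model file.'''
import tensorflow as tf
from tensorflow import keras
import recogonize  # the author's own inference module (not shown in this post)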

'''
Training results:
Epoch 1/5
- 141s - loss: 0.2842 - acc: 0.9086 - val_loss: 0.0605 - val_acc: 0.9814
Epoch 2/5
- 153s - loss: 0.0776 - acc: 0.9775 - val_loss: 0.0465 - val_acc: 0.9847
Epoch 3/5
- 147s - loss: 0.0557 - acc: 0.9837 - val_loss: 0.0442 - val_acc: 0.9852
Epoch 4/5
- 142s - loss: 0.0439 - acc: 0.9872 - val_loss: 0.0319 - val_acc: 0.9898
Epoch 5/5
- 145s - loss: 0.0370 - acc: 0.9890 - val_loss: 0.0323 - val_acc: 0.9906
loss in test set: 0.032348819018113865
acc in test set: 0.9906
'''

class ResNet:
    def __init__(self, input_shape, classes, img_cols, img_rows):
        self.classes = classes
        self.img_cols = img_cols
        self.img_rows = img_rows
        # Note: the input_shape argument is ignored; the shape is rebuilt from img_cols and img_rows.
        self.input_shape = img_cols, img_rows, 1
        self.model = None
        (train_images, train_labels), (test_images, test_labels) = keras.datasets.mnist.load_data()
        # Add a channel axis and convert to float32.
        self.train_images = train_images.reshape((train_images.shape[0], img_cols, img_rows, 1)).astype('float32')
        self.test_images = test_images.reshape((test_images.shape[0], img_cols, img_rows, 1)).astype('float32')
        # Scale pixel values from [0, 255] to [0, 1].
        self.train_images /= 255
        self.test_images /= 255
        # One-hot encode the labels for categorical cross-entropy.
        self.train_labels = keras.utils.to_categorical(train_labels, classes)
        self.test_labels = keras.utils.to_categorical(test_labels, classes)

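    # Residual block (private)
    def __res_block(self, x, channels, i):
        if i == 1:
            # Second block of a stage: keep the resolution and use an identity shortcut.
            xstrides = (1, 1)
            x_add = x
        else:
            # First block of a stage: the main path halves the resolution, so the
            # shortcut is a strided convolution that produces a matching shape.
            xstrides = (2, 2)
            x_add = keras.layers.Conv2D(channels,
                                        kernel_size=3,
                                        activation='relu',
                                        padding='same',
                                        strides=(2, 2)
                                        )(x)
        x = keras.layers.Conv2D(channels,
                                kernel_size=3,
                                activation='relu',
                                padding='same',
                                strides=xstrides)(x)
        x = keras.layers.Conv2D(channels,
                                kernel_size=3,
                                padding='same',
                                strides=(1, 1))(x)
        # Add the shortcut to the main path, then apply the final ReLU.
        x = keras.layers.add([x, x_add])
        x = keras.layers.Activation('relu')(x)
        return x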

    def buildModel(self):
        firstin = keras.layers.Input(shape=self.input_shape)
        # Stem convolution; the input shape is already fixed by the Input layer above.
        x = keras.layers.Conv2D(16,
                                kernel_size=7,
                                activation='relu',
                                padding='same'
                                )(firstin)
        # Two stages of two residual blocks each: 16 channels, then 32 channels.
        for i in range(2):
            x = self.__res_block(x, 16, i)
        for i in range(2):
            x = self.__res_block(x, 32, i)
        x = keras.layers.AveragePooling2D(pool_size=(7, 7))(x)
        x = keras.layers.Flatten()(x)
        x = keras.layers.Dense(10, activation='softmax')(x)
        self.model = keras.Model(inputs=firstin, outputs=x)
        # plot_model needs pydot and Graphviz to be installed.
        keras.utils.plot_model(self.model, to_file='mnist_res.png')
        self.model.compile(loss='categorical_crossentropy',
                           optimizer='adadelta',
                           metrics=['accuracy']
                           )

    def train(self):
        self.model.fit(self.train_images, self.train_labels,
                       batch_size=10,
                       verbose=2,  # one line of output per epoch
                       validation_data=(self.test_images, self.test_labels),  # the test set doubles as the validation set
                       epochs=5
                       )
        # Save the full model as h5 and the architecture alone as JSON.
        self.model.save('mnist_res_model.h5')
        json_str = self.model.to_json()
        with open('mnist_res_model.json', 'w') as file:
            file.write(json_str)
        loss, acc = self.model.evaluate(self.test_images, self.test_labels, verbose=0)
        print("loss in test set:", loss)
        print("acc in test set:", acc)

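    def test(self):
        recogonize.main(0)


def main(_):
    tr = ResNet((28, 28, 1), classes=10, img_cols=28, img_rows=28)
    tr.buildModel()
    tr.train()
    tr.test()


if __name__ == "__main__":
    # tf.app.run() is the TensorFlow 1.x entry point; on TensorFlow 2.x use tf.compat.v1.app.run().
    tf.app.run()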
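The test method hands off to recogonize.main, which is not shown in this post. As a rough, hypothetical sketch of what such an inference step could look like, the code below prepares a single 28x28 image the same way the training script prepares MNIST (channel axis added, values scaled to [0, 1]) and takes the arg-max of the model output. `preprocess` and `predict_digit` are names invented for this example, and the only thing assumed about the model is that it has a Keras-style `predict` method.

```python
import numpy as np


def preprocess(image):
    """Turn one 28x28 grayscale image (values 0..255) into a (1, 28, 28, 1) float32 batch."""
    arr = np.asarray(image, dtype="float32")
    if arr.shape != (28, 28):
        raise ValueError(f"expected a 28x28 image, got {arr.shape}")
    return (arr / 255.0).reshape(1, 28, 28, 1)


def predict_digit(model, image):
    """Return the most probable digit according to model.predict."""
    probs = model.predict(preprocess(image))
    return int(np.argmax(probs, axis=-1)[0])


# Typical use with the file written by train() (not executed here):
#   from tensorflow import keras
#   model = keras.models.load_model('mnist_res_model.h5')
#   digit = predict_digit(model, some_28x28_array)
```

MNIST digits are light strokes on a dark background, so images from another source (for example dark ink on white paper) would likely need to be inverted before being passed in.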