Semantic Segmentation for Dummies 5: Understanding DeeplabV3+ and Training Your Own DeeplabV3+ Model (Zebra Crossing Segmentation)


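- Preface

- Model

- What is DeeplabV3+

- Code implementation of DeeplabV3+

- 1. Backbone: Xception

- 2. The DeeplabV3+ Decoder

- Testing the code

- Training

- What is being trained

- 1. The training files in detail

- 2. How the loss function is built

- Training code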

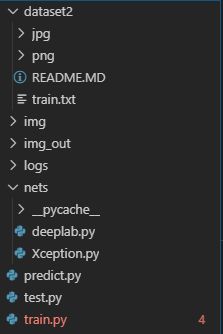

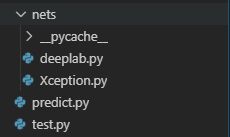

- 1. File layout

- 2. Training script

- 3. Prediction script

- Training results

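Preface

DeeplabV3+ is a fairly recent model. It is also large, with many parameters. This post takes DeeplabV3+ apart piece by piece. If you want some semantic segmentation background first, see my post "Semantic Segmentation for Dummies 2: Training Your Own SegNet Model (Zebra Crossing Segmentation)".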

![]()

Model

For a video tutorial, see "Building Your Own Semantic Segmentation Model with Keras": https://www.bilibili.com/video/av75562599/

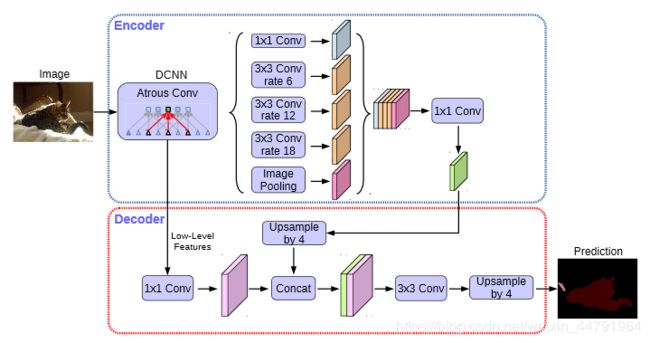

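What is DeeplabV3+

DeeplabV3+ is regarded as a new high point for semantic segmentation, mainly because its results are very good.

DeepLabv3+ innovates mainly on the architecture. To fuse multi-scale information, it adopts the encoder-decoder form that is common in semantic segmentation. Within this encoder-decoder architecture, you can freely control the resolution of the features the encoder extracts (through the output stride, `OS`, in the code below). Atrous convolution is used to balance accuracy against runtime.

Sounds confusing, right? The main structure of DeeplabV3+ can be read off the figure below.

From this figure we can see that DeeplabV3+ still consists of two parts: an Encoder and a Decoder.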

- Key point, key point, key point

- Key point, key point, key point!

- Key point, key point, key point!!!

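Compared with the PSPNet, SegNet and U-Net models introduced earlier, the defining feature of DeeplabV3+ is atrous (dilated) convolution. Atrous convolution enlarges the receptive field without downsampling and without adding weights, so no spatial resolution is lost and each convolution output covers a larger area of the input. Below is a diagram of atrous convolution. "Atrous" means the kernel reads the input with gaps, skipping pixels between the points it samples.

The purpose of atrous convolution is to extract more useful features, so it sits in the Encoder, which does the feature extraction.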
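To make "skipping pixels" concrete, here is a minimal NumPy sketch of a single-channel 2D atrous convolution with 'same' zero padding. It is not the Keras implementation, and the helper name `atrous_conv2d` is hypothetical. With kernel size k and rate r, the kernel spans k + (k - 1)(r - 1) pixels per side. This is the same `kernel_size_effective` formula used in the Xception code. The kernel still has only k*k weights.

```python
import numpy as np


def atrous_conv2d(image, kernel, rate=1):
    """Single-channel 2D atrous (dilated) cross-correlation with 'same' zero padding.

    Hypothetical helper for illustration; Keras layers do this via dilation_rate.
    """
    k = kernel.shape[0]
    # Effective size of the dilated kernel: k + (k - 1) * (rate - 1)
    k_eff = k + (k - 1) * (rate - 1)
    pad = k_eff // 2  # k_eff is odd, so symmetric padding keeps h and w unchanged
    padded = np.pad(image, pad, mode="constant")
    h, w = image.shape
    out = np.zeros((h, w), dtype=float)
    for i in range(h):
        for j in range(w):
            # Take every `rate`-th pixel of the window: the "holes" of atrous conv
            window = padded[i:i + k_eff:rate, j:j + k_eff:rate]
            out[i, j] = np.sum(window * kernel)
    return out


# A single bright pixel reveals which positions one output pixel depends on
img = np.zeros((9, 9))
img[4, 4] = 1.0
kernel = np.ones((3, 3))
for rate in (1, 2, 3):
    response = atrous_conv2d(img, kernel, rate)
    rows, cols = np.nonzero(response)
    span = rows.max() - rows.min() + 1
    print(f"rate={rate}: 9 weights, receptive field {span}x{span}")
# prints 3x3, 5x5 and 7x7 for rate 1, 2 and 3
```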

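Let's look more closely at the structure of the Encoder.

It has two key points:

1. The backbone DCNN (deep convolutional neural network) uses serial atrous convolution. "Serial" means one layer stacked after another, which is how an ordinary deep CNN is built.

2. The backbone's features are used in two ways. Low-level features from an intermediate layer of the DCNN go directly to the Decoder (`skip1` in the code below). The final DCNN output goes through parallel atrous convolutions: atrous convolutions with different rates extract features separately, alongside a 1x1 convolution branch and an image-pooling branch. The results are concatenated, and a 1x1 convolution compresses them.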

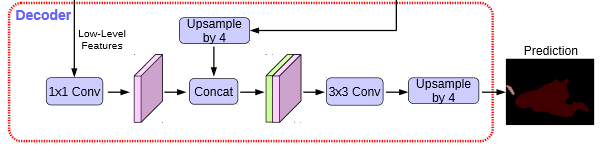
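The parallel branch structure can be sketched in NumPy. This toy version has several deliberate simplifications:

- The weights are random.
- There is no BatchNorm or ReLU.
- The atrous branches use plain 3x3 convolutions, not the depthwise-separable `SepConv_BN` the Keras code uses.

The names `aspp`, `atrous_conv3x3` and `conv1x1` are hypothetical. In the real model each branch has 256 filters, so the concatenation has 1280 channels before the 1x1 projection reduces it to 256. Here each branch has 8 filters to keep the arrays small.

```python
import numpy as np

rng = np.random.default_rng(0)


def conv1x1(x, w):
    # x: (H, W, C_in), w: (C_in, C_out) -> (H, W, C_out)
    return x @ w


def atrous_conv3x3(x, w, rate):
    # x: (H, W, C_in), w: (3, 3, C_in, C_out); stride 1, 'same' zero padding
    h, wd, _ = x.shape
    p = rate  # half of the effective kernel size 2 * rate + 1
    xp = np.pad(x, ((p, p), (p, p), (0, 0)), mode="constant")
    out = np.zeros((h, wd, w.shape[-1]))
    for a in range(3):
        for b in range(3):
            # Shifted view for kernel tap (a, b); taps are `rate` pixels apart
            patch = xp[a * rate:a * rate + h, b * rate:b * rate + wd, :]
            out += patch @ w[a, b]
    return out


def aspp(x, rates=(6, 12, 18), filters=8):
    """Toy ASPP: five parallel branches, concatenated, then a 1x1 projection."""
    h, wd, c = x.shape
    # Image pooling branch: global average, then broadcast back to H x W
    pooled = x.mean(axis=(0, 1), keepdims=True) @ rng.standard_normal((c, filters))
    b4 = np.broadcast_to(pooled, (h, wd, filters))
    # Plain 1x1 conv branch
    b0 = conv1x1(x, rng.standard_normal((c, filters)))
    # Three 3x3 atrous branches with different rates
    bs = [atrous_conv3x3(x, rng.standard_normal((3, 3, c, filters)), r) for r in rates]
    concat = np.concatenate([b4, b0, *bs], axis=-1)  # (H, W, 5 * filters)
    # 1x1 conv compresses the concatenated features
    return concat, conv1x1(concat, rng.standard_normal((5 * filters, filters)))


features = rng.standard_normal((32, 32, 16))  # e.g. a 32x32 feature map at OS=16
concat, out = aspp(features)
print(concat.shape, out.shape)  # (32, 32, 40) (32, 32, 8)
```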

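Now let's look at the structure of the Decoder.

The Decoder looks much simpler. It has two inputs: the low-level features from the DCNN, and the result of passing the DCNN output through the parallel atrous convolutions.

After some processing, the two are concatenated. In the code:

1. The ASPP output is upsampled to the size of the low-level features.
2. The low-level features are compressed to 48 channels with a 1x1 convolution.
3. After concatenation, two separable 3x3 convolutions refine the result.
4. A final step upsamples it to the input size.

In DeeplabV3+, upsampling is done with bilinear interpolation.

And that gives the final result!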
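Here is a NumPy sketch of the decoder's data flow, using channel counts from the code below and a small 128x128 input with OS=16. The real model uses 512x512 or 416x416, but only h and w change. The helper `bilinear_resize` is hypothetical. It samples with aligned corners, so it is not bit-identical to `tf.image.resize_images`. A random matrix stands in for the learned 1x1 convolution.

```python
import numpy as np


def bilinear_resize(x, out_h, out_w):
    """Bilinear resize of an (H, W, C) array with corner-aligned sampling."""
    in_h, in_w, _ = x.shape
    ys = np.linspace(0, in_h - 1, out_h)
    xs = np.linspace(0, in_w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None, None]  # vertical interpolation weights
    wx = (xs - x0)[None, :, None]  # horizontal interpolation weights
    top = x[y0][:, x0] * (1 - wx) + x[y0][:, x1] * wx
    bottom = x[y1][:, x0] * (1 - wx) + x[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


rng = np.random.default_rng(0)
aspp_out = rng.standard_normal((8, 8, 256))     # ASPP output, 1/16 of a 128x128 input
low_level = rng.standard_normal((32, 32, 256))  # skip1, 1/4 of the input

up = bilinear_resize(aspp_out, 32, 32)                 # 4x bilinear upsampling
low_proj = low_level @ rng.standard_normal((256, 48))  # 1x1 conv down to 48 channels
merged = np.concatenate([up, low_proj], axis=-1)
print(merged.shape)  # (32, 32, 304)
```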

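Code implementation of DeeplabV3+

The DeeplabV3+ code is split into two parts.

1. Backbone: Xception

This part extracts features. It is an improved Xception, and its biggest difference from the standard Xception is the use of atrous convolution. For the standard Xception, see my post "Neural Network Study Notes 22: A Detailed Reproduction of Xception". The code (Xception.py) is: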

```python
from keras.models import Model
from keras import layers
from keras.layers import Input
from keras.layers import Lambda
from keras.layers import Activation
from keras.layers import Concatenate
from keras.layers import Add
from keras.layers import Dropout
from keras.layers import BatchNormalization
from keras.layers import Conv2D
from keras.layers import DepthwiseConv2D
from keras.layers import ZeroPadding2D
from keras.layers import GlobalAveragePooling2D


def _conv2d_same(x, filters, prefix, stride=1, kernel_size=3, rate=1):
    # Work out the padding: does h/w need to shrink?
    if stride == 1:
        # Stride 1: 'same' padding keeps h and w unchanged
        return Conv2D(filters,
                      (kernel_size, kernel_size),
                      strides=(stride, stride),
                      padding='same', use_bias=False,
                      dilation_rate=(rate, rate),
                      name=prefix)(x)
    else:
        # Stride > 1: pad explicitly, then use a 'valid' conv,
        # so the output size is ceil(input / stride)
        kernel_size_effective = kernel_size + (kernel_size - 1) * (rate - 1)
        pad_total = kernel_size_effective - 1
        pad_beg = pad_total // 2
        pad_end = pad_total - pad_beg
        x = ZeroPadding2D((pad_beg, pad_end))(x)
        return Conv2D(filters,
                      (kernel_size, kernel_size),
                      strides=(stride, stride),
                      padding='valid', use_bias=False,
                      dilation_rate=(rate, rate),
                      name=prefix)(x)


def SepConv_BN(x, filters, prefix, stride=1, kernel_size=3, rate=1, depth_activation=False, epsilon=1e-3):
    # Work out the padding: does h/w need to shrink?
    if stride == 1:
        depth_padding = 'same'
    else:
        kernel_size_effective = kernel_size + (kernel_size - 1) * (rate - 1)
        pad_total = kernel_size_effective - 1
        pad_beg = pad_total // 2
        pad_end = pad_total - pad_beg
        x = ZeroPadding2D((pad_beg, pad_end))(x)
        depth_padding = 'valid'

    # Without depth_activation, apply one ReLU up front instead of after each conv
    if not depth_activation:
        x = Activation('relu')(x)

    # Separable conv: first a 3x3 depthwise conv, then a 1x1 pointwise conv
    # The 3x3 depthwise conv is dilated (atrous)
    x = DepthwiseConv2D((kernel_size, kernel_size), strides=(stride, stride), dilation_rate=(rate, rate),
                        padding=depth_padding, use_bias=False, name=prefix + '_depthwise')(x)
    x = BatchNormalization(name=prefix + '_depthwise_BN', epsilon=epsilon)(x)
    if depth_activation:
        x = Activation('relu')(x)

    # 1x1 conv to compress the channels
    x = Conv2D(filters, (1, 1), padding='same',
               use_bias=False, name=prefix + '_pointwise')(x)
    x = BatchNormalization(name=prefix + '_pointwise_BN', epsilon=epsilon)(x)
    if depth_activation:
        x = Activation('relu')(x)

    return x


def _xception_block(inputs, depth_list, prefix, skip_connection_type, stride,
                    rate=1, depth_activation=False, return_skip=False):
    residual = inputs
    # Three separable convs; only the last one may use stride 2
    for i in range(3):
        residual = SepConv_BN(residual,
                              depth_list[i],
                              prefix + '_separable_conv{}'.format(i + 1),
                              stride=stride if i == 2 else 1,
                              rate=rate,
                              depth_activation=depth_activation)
        if i == 1:
            # Output of the second conv, taken before any downsampling
            skip = residual
    if skip_connection_type == 'conv':
        # 1x1 conv on the shortcut so its shape matches the residual branch
        shortcut = _conv2d_same(inputs, depth_list[-1], prefix + '_shortcut',
                                kernel_size=1,
                                stride=stride)
        shortcut = BatchNormalization(name=prefix + '_shortcut_BN')(shortcut)
        outputs = layers.add([residual, shortcut])
    elif skip_connection_type == 'sum':
        outputs = layers.add([residual, inputs])
    elif skip_connection_type == 'none':
        outputs = residual

    if return_skip:
        return outputs, skip
    else:
        return outputs


def Xception(inputs, alpha=1, OS=16):
    # OS (output stride) = input size / size of the final feature map
    if OS == 8:
        entry_block3_stride = 1
        middle_block_rate = 2  # ! Not mentioned in paper, but required
        exit_block_rates = (2, 4)
        atrous_rates = (12, 24, 36)
    else:
        entry_block3_stride = 2
        middle_block_rate = 1
        exit_block_rates = (1, 2)
        atrous_rates = (6, 12, 18)

    # Shape comments assume a 512x512x3 input
    # 256,256,32
    x = Conv2D(32, (3, 3), strides=(2, 2),
               name='entry_flow_conv1_1', use_bias=False, padding='same')(inputs)
    x = BatchNormalization(name='entry_flow_conv1_1_BN')(x)
    x = Activation('relu')(x)

    # 256,256,64
    x = _conv2d_same(x, 64, 'entry_flow_conv1_2', kernel_size=3, stride=1)
    x = BatchNormalization(name='entry_flow_conv1_2_BN')(x)
    x = Activation('relu')(x)

    # 256,256,128 -> 256,256,128 -> 128,128,128
    x = _xception_block(x, [128, 128, 128], 'entry_flow_block1',
                        skip_connection_type='conv', stride=2,
                        depth_activation=False)
    # 128,128,256 -> 128,128,256 -> 64,64,256
    # skip = 128,128,256
    x, skip1 = _xception_block(x, [256, 256, 256], 'entry_flow_block2',
                               skip_connection_type='conv', stride=2,
                               depth_activation=False, return_skip=True)

    # 32,32,728 when OS=16 (64,64,728 when OS=8)
    x = _xception_block(x, [728, 728, 728], 'entry_flow_block3',
                        skip_connection_type='conv', stride=entry_block3_stride,
                        depth_activation=False)
    # Middle flow: 16 residual blocks without further downsampling
    for i in range(16):
        x = _xception_block(x, [728, 728, 728], 'middle_flow_unit_{}'.format(i + 1),
                            skip_connection_type='sum', stride=1, rate=middle_block_rate,
                            depth_activation=False)

    # Exit flow: atrous convs instead of downsampling; 32,32,2048 when OS=16
    x = _xception_block(x, [728, 1024, 1024], 'exit_flow_block1',
                        skip_connection_type='conv', stride=1, rate=exit_block_rates[0],
                        depth_activation=False)
    x = _xception_block(x, [1536, 1536, 2048], 'exit_flow_block2',
                        skip_connection_type='none', stride=1, rate=exit_block_rates[1],
                        depth_activation=True)
    return x, atrous_rates, skip1
```
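The padding branch in `_conv2d_same` and `SepConv_BN` works like this. For stride > 1, it pads by `kernel_size_effective - 1` and then runs a 'valid' convolution, which yields ceil(n / stride) outputs whatever the dilation rate. The stride schedule also shows where `OS` comes from:

- Four stride-2 stages give 16.
- Setting `entry_block3_stride` to 1 gives 8, and the later blocks compensate with larger rates.

A small pure-Python check, using hypothetical helpers that mirror the arithmetic (they are not Keras APIs):

```python
import math


def conv_out_size(n, kernel_size=3, stride=1, rate=1):
    """Output length along one axis under the padding rule of _conv2d_same / SepConv_BN."""
    if stride == 1:
        return n  # 'same' padding with stride 1 keeps the size
    kernel_size_effective = kernel_size + (kernel_size - 1) * (rate - 1)
    pad_total = kernel_size_effective - 1
    # A 'valid' convolution over the explicitly padded input
    return (n + pad_total - kernel_size_effective) // stride + 1


def output_stride(OS):
    """Total downsampling of the Xception backbone above."""
    entry_block3_stride = 1 if OS == 8 else 2
    # entry_flow_conv1_1, entry_flow_block1, entry_flow_block2, entry_flow_block3
    return math.prod([2, 2, 2, entry_block3_stride])


for n in (512, 416, 513):
    # Padding by kernel_size_effective - 1 gives ceil(n / stride)
    assert conv_out_size(n, stride=2) == math.ceil(n / 2)
print(output_stride(16), 512 // output_stride(16))  # 16 32
print(output_stride(8), 512 // output_stride(8))    # 8 64
```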

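2. The DeeplabV3+ Decoder

This part corresponds to the Decoder of the DeeplabV3+ model above. The parallel atrous convolutions are also in this part.

Its key job is to map the extracted features back onto a larger map, so that every pixel can be classified.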

```python
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import tensorflow as tf
from nets.Xception import Xception
from keras.models import Model
from keras import layers
from keras.layers import Input
from keras.layers import Lambda
from keras.layers import Activation
from keras.layers import Softmax, Reshape
from keras.layers import Concatenate
from keras.layers import Add
from keras.layers import Dropout
from keras.layers import BatchNormalization
from keras.layers import Conv2D
from keras.layers import DepthwiseConv2D
from keras.layers import ZeroPadding2D
from keras.layers import GlobalAveragePooling2D
from keras.utils.data_utils import get_file
from keras import backend as K
from keras.activations import relu
from keras.applications.imagenet_utils import preprocess_input


def SepConv_BN(x, filters, prefix, stride=1, kernel_size=3, rate=1, depth_activation=False, epsilon=1e-3):
    # Work out the padding: does h/w need to shrink?
    if stride == 1:
        depth_padding = 'same'
    else:
        kernel_size_effective = kernel_size + (kernel_size - 1) * (rate - 1)
        pad_total = kernel_size_effective - 1
        pad_beg = pad_total // 2
        pad_end = pad_total - pad_beg
        x = ZeroPadding2D((pad_beg, pad_end))(x)
        depth_padding = 'valid'

    # Without depth_activation, apply one ReLU up front instead of after each conv
    if not depth_activation:
        x = Activation('relu')(x)

    # Separable conv: first a 3x3 depthwise conv, then a 1x1 pointwise conv
    # The 3x3 depthwise conv is dilated (atrous)
    x = DepthwiseConv2D((kernel_size, kernel_size), strides=(stride, stride), dilation_rate=(rate, rate),
                        padding=depth_padding, use_bias=False, name=prefix + '_depthwise')(x)
    x = BatchNormalization(name=prefix + '_depthwise_BN', epsilon=epsilon)(x)
    if depth_activation:
        x = Activation('relu')(x)

    # 1x1 conv to compress the channels
    x = Conv2D(filters, (1, 1), padding='same',
               use_bias=False, name=prefix + '_pointwise')(x)
    x = BatchNormalization(name=prefix + '_pointwise_BN', epsilon=epsilon)(x)
    if depth_activation:
        x = Activation('relu')(x)

    return x


def Deeplabv3(input_shape=(512, 512, 3), classes=21, alpha=1., OS=16):
    img_input = Input(shape=input_shape)

    # x = 32,32,2048 (512x512 input, OS=16)
    x, atrous_rates, skip1 = Xception(img_input, alpha, OS=OS)

    # Image pooling branch: global average, then expand_dims twice -> a 1x1 map
    b4 = GlobalAveragePooling2D()(x)
    b4 = Lambda(lambda x: K.expand_dims(x, 1))(b4)
    b4 = Lambda(lambda x: K.expand_dims(x, 1))(b4)
    # Compress the channels
    b4 = Conv2D(256, (1, 1), padding='same',
                use_bias=False, name='image_pooling')(b4)
    b4 = BatchNormalization(name='image_pooling_BN', epsilon=1e-5)(b4)
    b4 = Activation('relu')(b4)
    size_before = tf.keras.backend.int_shape(x)
    # Resize back up to the feature map's h and w
    # b4 = 32,32,256
    b4 = Lambda(lambda x: tf.image.resize_images(x, size_before[1:3]))(b4)

    # 1x1 conv branch to adjust the channels
    b0 = Conv2D(256, (1, 1), padding='same', use_bias=False, name='aspp0')(x)
    b0 = BatchNormalization(name='aspp0_BN', epsilon=1e-5)(b0)
    b0 = Activation('relu', name='aspp0_activation')(b0)

    # Parallel atrous convs; the rates depend on OS.
    # SepConv_BN is a 3x3 dilated depthwise conv followed by a 1x1 conv,
    # and its dilation rate is the rate value.
    # rate = 6 (12 when OS=8)
    b1 = SepConv_BN(x, 256, 'aspp1',
                    rate=atrous_rates[0], depth_activation=True, epsilon=1e-5)
    # rate = 12 (24 when OS=8)
    b2 = SepConv_BN(x, 256, 'aspp2',
                    rate=atrous_rates[1], depth_activation=True, epsilon=1e-5)
    # rate = 18 (36 when OS=8)
    b3 = SepConv_BN(x, 256, 'aspp3',
                    rate=atrous_rates[2], depth_activation=True, epsilon=1e-5)

    # Concatenate the five branches computed from Xception's output
    x = Concatenate()([b4, b0, b1, b2, b3])

    # Compress with a 1x1 conv
    x = Conv2D(256, (1, 1), padding='same',
               use_bias=False, name='concat_projection')(x)
    x = BatchNormalization(name='concat_projection_BN', epsilon=1e-5)(x)
    x = Activation('relu')(x)
    x = Dropout(0.1)(x)

    # skip1 is 128,128,256, so its h and w are 128,128
    skip_size = tf.keras.backend.int_shape(skip1)
    # Upsample the ASPP output (the Lambda's own input xx) to skip1's size
    x = Lambda(lambda xx: tf.image.resize_images(xx, skip_size[1:3]))(x)

    # 128,128,48
    dec_skip1 = Conv2D(48, (1, 1), padding='same',
                       use_bias=False, name='feature_projection0')(skip1)
    dec_skip1 = BatchNormalization(
        name='feature_projection0_BN', epsilon=1e-5)(dec_skip1)
    dec_skip1 = Activation('relu')(dec_skip1)

    # 128,128,304
    x = Concatenate()([x, dec_skip1])
    x = SepConv_BN(x, 256, 'decoder_conv0',
                   depth_activation=True, epsilon=1e-5)
    x = SepConv_BN(x, 256, 'decoder_conv1',
                   depth_activation=True, epsilon=1e-5)

    # One output channel per class, resized back to the input size
    x = Conv2D(classes, (1, 1), padding='same')(x)
    size_before3 = tf.keras.backend.int_shape(img_input)
    x = Lambda(lambda xx: tf.image.resize_images(xx, size_before3[1:3]))(x)
    # (h*w, classes): one row of class probabilities per pixel
    x = Reshape((-1, classes))(x)
    x = Softmax()(x)

    inputs = img_input
    model = Model(inputs, x, name='deeplabv3plus')
    return model
```
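As a check on the shape comments, here is a small pure-Python helper that traces tensor shapes through `Deeplabv3()` from its layer definitions. It assumes five ASPP branches of 256 filters each and a 48-channel skip projection. The helper `deeplab_shapes` is hypothetical. It does not build the model; it only does the arithmetic.

```python
def ceil_div(n, d):
    # Stride-2 'same' convolutions round up, so the overall division rounds up too
    return -(-n // d)


def deeplab_shapes(input_hw=(512, 512), classes=21, OS=16):
    """Trace tensor shapes through Deeplabv3() (arithmetic only)."""
    h, w = input_hw
    feat = (ceil_div(h, OS), ceil_div(w, OS))  # Xception output, 1/OS of the input
    low = (ceil_div(h, 4), ceil_div(w, 4))     # skip1, 1/4 of the input
    return {
        "xception_out": (*feat, 2048),
        "aspp_concat": (*feat, 5 * 256),       # b4, b0, b1, b2, b3
        "concat_projection": (*feat, 256),
        "aspp_upsampled": (*low, 256),
        "feature_projection0": (*low, 48),
        "decoder_concat": (*low, 256 + 48),
        "logits_resized": (h, w, classes),
        "reshape_softmax": (h * w, classes),
    }


for name, shape in deeplab_shapes(classes=2).items():
    print(f"{name:20s} {shape}")
```

With the 416x416 input used for training below, the Xception output is 26x26 at OS=16.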

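Testing the code

Save the two pieces of code above as Xception.py and deeplab.py and put them in a `nets` folder. The imports `from nets.Xception import Xception` and `from nets.deeplab import Deeplabv3` expect this layout. Store them as follows:

Then run test.py:

```python
from nets.deeplab import Deeplabv3

model = Deeplabv3(classes=2, OS=16)
model.summary()
```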

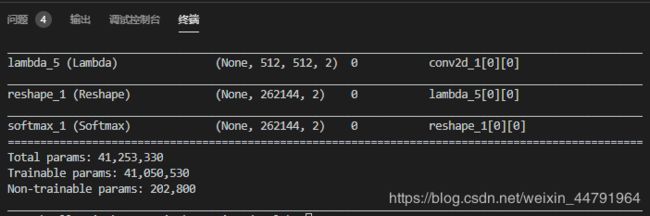

If nothing goes wrong, you will get the following result:

The model is really big, but the features it extracts are very useful.

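Training

What is being trained

You could just copy the code, click run and train your own model. Still, I want you to understand what a semantic segmentation model is actually trained on.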

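1. The training files in detail

Let's start with the training files.

The training data for a semantic segmentation model has two parts.

The first part is the original image, like this: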

![]()

The second part is the label, like this:

![]()

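When you see this label you might say: "What garbage is this? An all-black image is not a label!" But it is. The label looks completely black, but in the zebra crossing area all three RGB channels have the value 1.

A different image makes this much easier to see.

This is an image from the semantic segmentation training set of the VOC dataset: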

![]()

And this is its label:

![]()

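Why is this label so much easier to see? VOC has 21 classes (including background), so class indices go up to 20. The train class, for example, has index 19. VOC label PNGs are also stored with a color palette, so image viewers draw each class in a distinct, visible color.

So if the training set has two classes, as in this post, background pixels have RGB (0,0,0) and zebra crossing pixels have RGB (1,1,1). With more classes there would also be (2,2,2), (3,3,3), (4,4,4) and so on, each value marking a different class.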
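A small NumPy sketch on a toy array (not the real dataset) shows why such a label looks black: on a 0-255 scale, values 0 and 1 are almost indistinguishable. Stretching the class ids is useful only for viewing. The training labels must keep the raw ids.

```python
import numpy as np

# Toy 2-class label: background 0, "zebra crossing" 1, repeated in all three channels
label = np.zeros((4, 6, 3), dtype=np.uint8)
label[1:3, 2:5, :] = 1

class_ids = label[:, :, 0]   # the class index of each pixel
print(np.unique(class_ids))  # [0 1]

# For viewing only: stretch the class ids over the 0-255 range
n_classes = 2
visible = (class_ids * (255 // (n_classes - 1))).astype(np.uint8)
print(np.unique(visible))    # [  0 255]
```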

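2. How the loss function is built

The loss function has two components. The first is the prediction:

```python
x = Conv2D(classes, (1, 1), padding='same')(x)
size_before3 = tf.keras.backend.int_shape(img_input)
x = Lambda(lambda xx: tf.image.resize_images(xx, size_before3[1:3]))(x)
x = Reshape((-1, classes))(x)
x = Softmax()(x)
inputs = img_input
model = Model(inputs, x, name='deeplabv3plus')
```

First, the number of filters in the last convolution is set to nclasses. `tf.image.resize_images` then resizes the output to the input size, [416,416,nclasses]. `Reshape` flattens it to [416*416, nclasses], and `Softmax` estimates each pixel's probability of belonging to each class.

So the final prediction y_pre is, for every pixel, the probability that it belongs to each class.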
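Here is a NumPy sketch of what `Reshape((-1, classes))` followed by `Softmax()` computes. Random numbers stand in for the network's resized logits.

```python
import numpy as np

HEIGHT, WIDTH, NCLASSES = 416, 416, 2

rng = np.random.default_rng(0)
logits = rng.standard_normal((HEIGHT, WIDTH, NCLASSES))  # stand-in for the resized conv output

# Reshape((-1, classes)): one row per pixel
flat = logits.reshape(-1, NCLASSES)

# Softmax over the class axis (subtracting the row max for numerical stability)
exp = np.exp(flat - flat.max(axis=1, keepdims=True))
y_pre = exp / exp.sum(axis=1, keepdims=True)

print(y_pre.shape)                          # (173056, 2)
print(np.allclose(y_pre.sum(axis=1), 1.0))  # True

# Reshaping back and taking argmax gives the class map, as the prediction script does
class_map = y_pre.reshape(HEIGHT, WIDTH, NCLASSES).argmax(axis=-1)
```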

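The second component is the ground truth, which is processed like this (an excerpt from the data generator in the training script):

```python
# Read the label image from file
img = Image.open(r".\dataset2\png" + '/' + name)
# Resize to the same h and w as the prediction, which the network resizes to the input size;
# nearest-neighbour resizing keeps the class ids intact
img = img.resize((int(WIDTH), int(HEIGHT)), Image.NEAREST)
img = np.array(img)
# One-hot encode: channel c is 1 wherever the pixel's class id equals c
seg_labels = np.zeros((int(HEIGHT), int(WIDTH), NCLASSES))
for c in range(NCLASSES):
    seg_labels[:, :, c] = (img[:, :, 0] == c).astype(int)
# Flatten to (h*w, NCLASSES) to match the model's Reshape
seg_labels = np.reshape(seg_labels, (-1, NCLASSES))
Y_train.append(seg_labels)
```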

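The png label is resized first, so that its h and w match those of the prediction y_pre. Then the class of every pixel is read and stored.

So the final ground truth y_true records, for every pixel, which class it actually belongs to.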
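The per-class loop can also be written as one NumPy indexing step. This minimal sketch uses a made-up toy label and checks that both versions produce the same y_true. There is one difference in behavior. `np.eye(NCLASSES)[ids]` raises an IndexError if a label contains an id >= NCLASSES. The loop instead silently produces an all-zero row, which can hide labelling mistakes.

```python
import numpy as np

NCLASSES = 2
# Toy label image (H, W, 3) with the class id repeated in each channel
img = np.zeros((4, 5, 3), dtype=np.uint8)
img[1:3, 1:4] = 1

# Loop version, as in the excerpt above
seg_labels = np.zeros((4, 5, NCLASSES))
for c in range(NCLASSES):
    seg_labels[:, :, c] = (img[:, :, 0] == c).astype(int)
seg_labels = np.reshape(seg_labels, (-1, NCLASSES))

# Vectorized version: pick rows of an identity matrix by class id
one_hot = np.eye(NCLASSES)[img[:, :, 0]].reshape(-1, NCLASSES)
print(np.array_equal(seg_labels, one_hot))  # True
```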

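Finally, the loss is the cross-entropy between y_true and y_pre. The training script below computes Keras's binary cross-entropy on every class channel of every pixel, sums it, and divides by HEIGHT*WIDTH.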
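Here is a NumPy sketch of this loss for a single image (batch_size = 1, as in the script). It assumes Keras's default epsilon of 1e-7, since the Keras backend clips predictions before taking logs. The function names `deeplab_loss` and `categorical_ce` are hypothetical.

```python
import numpy as np

EPS = 1e-7  # Keras's default epsilon; predictions are clipped to [EPS, 1 - EPS]


def deeplab_loss(y_true, y_pred, height, width):
    """NumPy version of loss() in the training script, for one image."""
    p = np.clip(y_pred, EPS, 1 - EPS)
    # Binary cross-entropy for every pixel and every class channel
    bce = -(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))
    return bce.sum() / height / width


def categorical_ce(y_true, y_pred):
    """Mean per-pixel categorical cross-entropy, for comparison."""
    p = np.clip(y_pred, EPS, 1.0)
    return -(y_true * np.log(p)).sum(axis=-1).mean()


H, W = 4, 4
y_true = np.zeros((H * W, 2))
y_true[:, 0] = 1                            # every pixel is background
uniform = np.full((H * W, 2), 0.5)          # a completely unsure prediction
print(deeplab_loss(y_true, uniform, H, W))  # 2 * ln 2, about 1.386
print(categorical_ce(y_true, uniform))      # ln 2, about 0.693
```

With two softmax outputs p and 1 - p, the binary term on each channel equals the pixel's categorical term. The summed binary loss is therefore exactly twice the mean categorical cross-entropy, and both are minimized by the same predictions.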

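Training code

You can download the complete code from my GitHub:

https://github.com/bubbliiiing/Semantic-Segmentation

The dataset is available at:

Link: https://pan.baidu.com/s/1uzwqLaCXcWe06xEXk1ROWw

Extraction code: pp6w

1. File layout

2. Training script

The training script is as follows: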

```python
from nets.deeplab import Deeplabv3
from keras.utils.data_utils import get_file
from keras.optimizers import Adam
from keras.callbacks import TensorBoard, ModelCheckpoint, ReduceLROnPlateau, EarlyStopping
from PIL import Image
import keras
from keras import backend as K
import numpy as np

ALPHA = 1.0
WEIGHTS_PATH_X = "https://github.com/bonlime/keras-deeplab-v3-plus/releases/download/1.1/deeplabv3_xception_tf_dim_ordering_tf_kernels.h5"

NCLASSES = 2
HEIGHT = 416
WIDTH = 416


def generate_arrays_from_file(lines, batch_size):
    # Total number of samples
    n = len(lines)
    i = 0
    while 1:
        X_train = []
        Y_train = []
        # Collect one batch of data
        for _ in range(batch_size):
            if i == 0:
                np.random.shuffle(lines)
            name = lines[i].split(';')[0]
            # Read the input image from file
            img = Image.open(r".\dataset2\jpg" + '/' + name)
            img = img.resize((WIDTH, HEIGHT))
            img = np.array(img)
            img = img / 255
            X_train.append(img)

            name = (lines[i].split(';')[1]).replace("\n", "")
            # Read the label image; nearest-neighbour resizing keeps the class ids intact
            img = Image.open(r".\dataset2\png" + '/' + name)
            img = img.resize((int(WIDTH), int(HEIGHT)), Image.NEAREST)
            img = np.array(img)
            seg_labels = np.zeros((int(HEIGHT), int(WIDTH), NCLASSES))
            for c in range(NCLASSES):
                seg_labels[:, :, c] = (img[:, :, 0] == c).astype(int)
            seg_labels = np.reshape(seg_labels, (-1, NCLASSES))
            Y_train.append(seg_labels)

            # Start over after one full pass through the data
            i = (i + 1) % n
        yield (np.array(X_train), np.array(Y_train))


def loss(y_true, y_pred):
    # Binary cross-entropy per pixel and class, summed and divided by the pixel count
    crossloss = K.binary_crossentropy(y_true, y_pred)
    loss = K.sum(crossloss) / HEIGHT / WIDTH
    return loss


if __name__ == "__main__":
    log_dir = "logs/"
    # Build the model
    model = Deeplabv3(classes=2, input_shape=(HEIGHT, WIDTH, 3))
    # model.summary()

    # Download the pretrained weights and load them by layer name
    weights_path = get_file('deeplabv3_xception_tf_dim_ordering_tf_kernels.h5',
                            WEIGHTS_PATH_X,
                            cache_subdir='models')
    model.load_weights(weights_path, by_name=True)

    # Open the dataset's txt file; the generator reads the data through it
    with open(r".\dataset2\train.txt", "r") as f:
        lines = f.readlines()

    # Shuffle the lines; shuffled data trains better
    np.random.seed(10101)
    np.random.shuffle(lines)
    np.random.seed(None)

    # 90% for training, 10% for validation
    num_val = int(len(lines) * 0.1)
    num_train = len(lines) - num_val

    # Checkpointing: checked every epoch, saved only when val_loss improves
    checkpoint_period = ModelCheckpoint(
        log_dir + 'ep{epoch:03d}-loss{loss:.3f}-val_loss{val_loss:.3f}.h5',
        monitor='val_loss',
        save_weights_only=True,
        save_best_only=True,
        period=1
    )
    # Halve the learning rate when val_loss has not improved for 3 epochs, then keep training
    reduce_lr = ReduceLROnPlateau(
        monitor='val_loss',
        factor=0.5,
        patience=3,
        verbose=1
    )
    # Optional early stopping: when val_loss stops improving, the model is essentially trained.
    # It only takes effect if added to the callbacks list passed to fit_generator.
    early_stopping = EarlyStopping(
        monitor='val_loss',
        min_delta=0,
        patience=10,
        verbose=1
    )

    # Cross-entropy loss
    model.compile(loss=loss,
                  optimizer=Adam(lr=1e-3),
                  metrics=['accuracy'])
    batch_size = 1
    print('Train on {} samples, val on {} samples, with batch size {}.'.format(num_train, num_val, batch_size))

    # Start training
    model.fit_generator(generate_arrays_from_file(lines[:num_train], batch_size),
                        steps_per_epoch=max(1, num_train // batch_size),
                        validation_data=generate_arrays_from_file(lines[num_train:], batch_size),
                        validation_steps=max(1, num_val // batch_size),
                        epochs=50,
                        initial_epoch=0,
                        callbacks=[checkpoint_period, reduce_lr])

    model.save_weights(log_dir + 'last1.h5')
```
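The generator expects each line of train.txt to hold a jpg name and a png name separated by ';'. This format is inferred from `lines[i].split(';')`. Below is a minimal pure-Python sketch of the parsing and the 90/10 split the script performs. `parse_line` and `split_train_val` are hypothetical helpers, and the file names are made up. The sketch uses Python's `random` rather than `np.random`, so its shuffle order will not match the script's.

```python
import random


def parse_line(line):
    """Split 'image.jpg;label.png\n' into (jpg_name, png_name)."""
    jpg_name, png_name = line.split(';')[:2]
    return jpg_name, png_name.replace("\n", "")


def split_train_val(lines, val_ratio=0.1, seed=10101):
    """Shuffle with a fixed seed, then hold out the last val_ratio of lines for validation."""
    lines = list(lines)
    random.Random(seed).shuffle(lines)
    num_val = int(len(lines) * val_ratio)
    num_train = len(lines) - num_val
    return lines[:num_train], lines[num_train:]


lines = ["{0}.jpg;{0}.png\n".format(i) for i in range(20)]
train, val = split_train_val(lines)
print(len(train), len(val))  # 18 2
print(parse_line(train[0]))
```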

3. Prediction script

The prediction script is as follows:

```python
from nets.deeplab import Deeplabv3
from PIL import Image
import numpy as np
import random
import copy
import os

class_colors = [[0, 0, 0], [0, 255, 0]]
NCLASSES = 2
HEIGHT = 416
WIDTH = 416

model = Deeplabv3(classes=2, input_shape=(HEIGHT, WIDTH, 3))
# Weights saved during training; use the name of your own checkpoint file
model.load_weights("logs/ep006-loss0.023-val_loss0.030.h5")

imgs = os.listdir("./img")
for jpg in imgs:
    # Convert to RGB: the model needs 3 channels, and Image.blend needs matching modes
    img = Image.open("./img/" + jpg).convert("RGB")
    old_img = copy.deepcopy(img)
    original_h = np.array(img).shape[0]
    original_w = np.array(img).shape[1]

    # Same preprocessing as in training: resize to 416x416 and scale to [0, 1]
    img = img.resize((WIDTH, HEIGHT))
    img = np.array(img)
    img = img / 255
    img = img.reshape(-1, HEIGHT, WIDTH, 3)

    # (h*w, NCLASSES) probabilities -> (h, w) class map
    pr = model.predict(img)[0]
    pr = pr.reshape((int(HEIGHT), int(WIDTH), NCLASSES)).argmax(axis=-1)

    # Paint each class with its color
    seg_img = np.zeros((int(HEIGHT), int(WIDTH), 3))
    colors = class_colors
    for c in range(NCLASSES):
        seg_img[:, :, 0] += ((pr[:, :] == c) * (colors[c][0])).astype('uint8')
        seg_img[:, :, 1] += ((pr[:, :] == c) * (colors[c][1])).astype('uint8')
        seg_img[:, :, 2] += ((pr[:, :] == c) * (colors[c][2])).astype('uint8')

    # Resize back to the original size and overlay on the original image
    seg_img = Image.fromarray(np.uint8(seg_img)).resize((original_w, original_h))
    image = Image.blend(old_img, seg_img, 0.3)
    image.save("./img_out/" + jpg)
```
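The color-painting loop can also be written with NumPy fancy indexing. Here is a minimal sketch on a made-up toy class map. The last lines reproduce the arithmetic of `Image.blend` in NumPy, assuming PIL's documented formula `im1 * (1 - alpha) + im2 * alpha`. A flat gray array stands in for the original photo.

```python
import numpy as np

class_colors = np.array([[0, 0, 0], [0, 255, 0]], dtype=np.uint8)

# Toy predicted class map, i.e. what argmax(axis=-1) returns (0 = background, 1 = zebra crossing)
pr = np.zeros((4, 6), dtype=int)
pr[1:3, 2:5] = 1

# Fancy indexing: each pixel's class id selects a row of class_colors, giving (H, W, 3)
seg_img = class_colors[pr]

# Image.blend(im1, im2, alpha) computes im1 * (1 - alpha) + im2 * alpha per pixel
original = np.full((4, 6, 3), 128, dtype=np.uint8)  # stand-in for the original photo
alpha = 0.3
blended = original * (1 - alpha) + seg_img * alpha

print(seg_img.shape)  # (4, 6, 3)
print(blended[2, 3])  # a green-tinted pixel inside the predicted region
```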

Training results

Original image:

![]()

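A model this big overfits in no time. Here is what the result looks like after overfitting.

Key point!

- This is what overfitting looks like.

- This is what overfitting looks like.

- This is what overfitting looks like.

For good zebra crossing results, I recommend my earlier models such as SegNet or PSPNet, or a MobileNet-based DeeplabV3+.

I also trained a DeeplabV3+ model based on MobileNetV2. It works well and has only a little over 2 million parameters, even fewer than SegNet.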