Kubernetes Storage (Part 3): K8S Volume Configuration Management (Dynamic Volumes + StorageClass + Deploying a MySQL Master-Slave Cluster)

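Table of Contents

- 1. Introduction to StorageClass

- 2. Configuring the NFS Client Provisioner

- 3. The StatefulSet Controller

- 3.1 Using a headless Service with a StatefulSet to preserve Pod topology state

- 3.2 StatefulSet application state and scaling

- 3.3 StatefulSet + storage

- 3.4 Deploying a MySQL master-slave cluster with a StatefulSet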

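1. Introduction to StorageClass

A StorageClass provides a way to describe the "classes" of storage a cluster offers. Different classes can map to different quality-of-service levels, backup policies, or other policies.

Every StorageClass contains the provisioner, parameters, and reclaimPolicy fields. These fields are used when a PersistentVolume belonging to the class has to be dynamically provisioned.

StorageClass attributes

• Provisioner: determines which volume plugin is used to provision PVs. This field is required. It can name an internal provisioner or an external one; the code for external provisioners, including NFS and Ceph, lives at kubernetes-incubator/external-storage.

• Reclaim Policy: the reclaimPolicy field sets the reclaim policy of the PersistentVolumes the class creates. It can be Delete or Retain; if it is not specified, it defaults to Delete.

• For the remaining attributes, see: https://kubernetes.io/zh/docs/concepts/storage/storage-classes/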
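As a sketch of how these three fields fit together, the bash function below (a hypothetical helper named `render_storageclass`, not part of any tool) prints a StorageClass manifest with an explicit reclaimPolicy. The `archiveOnDelete` parameter is specific to the nfs-client provisioner used later in this article; other provisioners accept different parameters.

```shell
# Hypothetical helper (bash): print a StorageClass manifest.
# Usage: render_storageclass NAME PROVISIONER [RECLAIM_POLICY]
render_storageclass() {
  local name="$1" provisioner="$2" policy="${3:-Delete}"  # Delete is the default when omitted
  # A StorageClass only accepts Delete or Retain as reclaimPolicy.
  case "$policy" in
    Delete|Retain) ;;
    *) echo "unsupported reclaimPolicy: $policy" >&2; return 1 ;;
  esac
  # parameters are provisioner-specific; archiveOnDelete belongs to nfs-client.
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ${name}
provisioner: ${provisioner}
reclaimPolicy: ${policy}
parameters:
  archiveOnDelete: "false"
EOF
}
```

The output can be piped straight into kubectl, for example `render_storageclass managed-nfs-storage red.org/nfs Retain | kubectl apply -f -`.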

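2. Configuring the NFS Client Provisioner

NFS Client Provisioner is an automatic provisioner that uses NFS as its backing storage and automatically creates PVs for PVCs. It does not provide NFS storage itself, so an existing NFS service must already be available.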

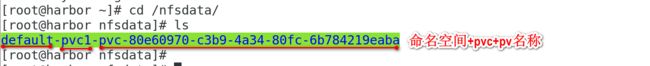

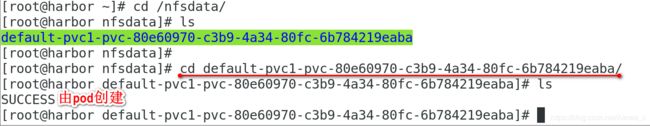

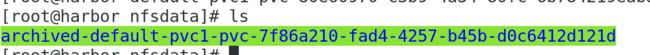

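• PVs are provisioned on the NFS server as directories named ${namespace}-${pvcName}-${pvName}.

• When a PV is reclaimed, its directory on the NFS server is renamed to archived-${namespace}-${pvcName}-${pvName}. This archiving only happens when the StorageClass parameter archiveOnDelete is not "false"; with archiveOnDelete: "false" (as in the class created below), the directory is deleted instead.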
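The naming scheme above can be reproduced with two small bash functions. This is a sketch with hypothetical function names that mirrors the documented pattern, assuming the `archived-` prefix; it is not the provisioner's own code.

```shell
# Hypothetical helpers (bash) mirroring the nfs-client-provisioner naming scheme.
# Directory created on the NFS export for a newly provisioned PV.
nfs_pv_dir() {        # nfs_pv_dir NAMESPACE PVC_NAME PV_NAME
  printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# Name the directory receives when the PV is deleted and archiving is on
# (that is, archiveOnDelete is not "false").
nfs_archived_dir() {  # nfs_archived_dir NAMESPACE PVC_NAME PV_NAME
  printf 'archived-%s\n' "$(nfs_pv_dir "$1" "$2" "$3")"
}
```

For example, `nfs_pv_dir default www-web-0 pvc-6afe237f-9c4c-42d3-a337-3d78abd3e44a` yields exactly one of the directories listed on the NFS server in section 3.3.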

nfs-client-provisioner source code: https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client/deploy

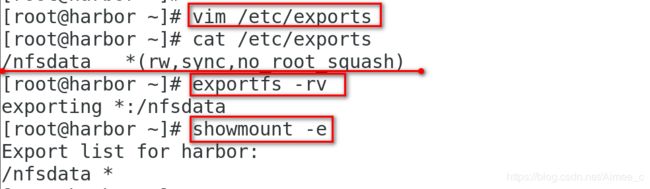

On the NFS server (harbor), export the /nfsdata directory:

```shell
[root@harbor ~]# vim /etc/exports
[root@harbor ~]# cat /etc/exports
/nfsdata *(rw,sync,no_root_squash)
[root@harbor ~]# exportfs -rv
exporting *:/nfsdata
[root@harbor ~]# showmount -e
Export list for harbor:
/nfsdata *
```

On the Kubernetes node (server1):

```shell
[kubeadm@server1 ~]$ cd vol/
```

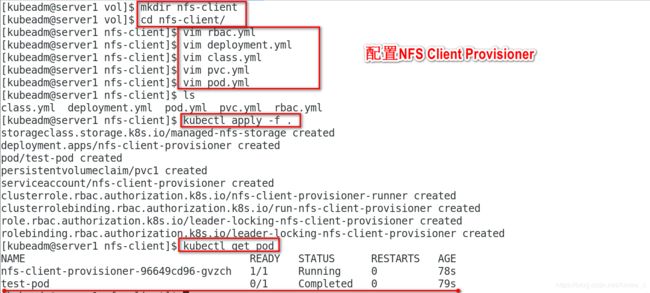

```shell
[kubeadm@server1 vol]$ mkdir nfs-client
[kubeadm@server1 vol]$ cd nfs-client/
[kubeadm@server1 nfs-client]$ vim rbac.yml    ## set the namespace used by the role bindings
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner   # the ServiceAccount defined at the top of this file
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

```shell
[kubeadm@server1 nfs-client]$ vim deployment.yml    ## remove the duplicated selector field
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: nfs-client-provisioner:latest   # pull this image and push it to your registry first
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: red.org/nfs
            - name: NFS_SERVER
              value: 172.25.1.11
            - name: NFS_PATH
              value: /nfsdata
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.25.1.11
            path: /nfsdata
```

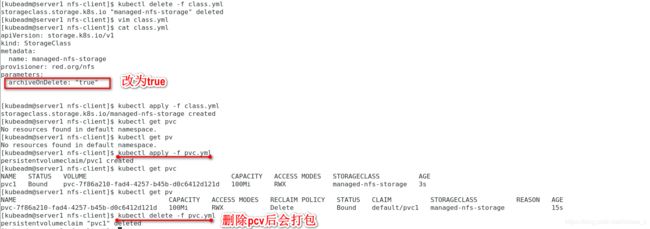

```shell
[kubeadm@server1 nfs-client]$ vim class.yml    ## create the StorageClass
```

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: red.org/nfs    # must match PROVISIONER_NAME in deployment.yml
parameters:
  archiveOnDelete: "false"
```

```shell
[kubeadm@server1 nfs-client]$ vim pvc.yml    ## create the PVC
```

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc1
  annotations:
    # legacy beta annotation; current manifests use spec.storageClassName instead
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi
```

```shell
[kubeadm@server1 nfs-client]$ vim pod.yml
```

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  containers:
  - name: test-pod
    image: busybox
    command:
      - "/bin/sh"
    args:
      - "-c"
      - "touch /mnt/SUCCESS && exit 0 || exit 1"
    volumeMounts:
      - name: nfs-pvc
        mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: pvc1
```

The default StorageClass is used to dynamically provision storage for PersistentVolumeClaims that do not request a specific storage class. Only one StorageClass should be marked as the default.

If there is no default StorageClass and the PVC does not set storageClassName, the PVC can only bind to PVs whose storageClassName is also empty ("").
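Because only one class should carry the default marking, it can be useful to check which classes `kubectl get storageclass` shows as `(default)`. Below is a minimal bash sketch with a hypothetical function name that reads that table from stdin. It depends on the human-readable output format, so in scripts a query against the `storageclass.kubernetes.io/is-default-class` annotation would be more robust.

```shell
# Hypothetical helper (bash + awk): print the names of StorageClasses marked
# "(default)" in `kubectl get storageclass` output read from stdin.
# More than one line of output means more than one default class is set.
default_storageclasses() {
  # Skip the header row; in data rows the "(default)" marker is the second column.
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}
```

Usage: `kubectl get storageclass | default_storageclasses`.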

```shell
[kubeadm@server1 nfs-client]$ kubectl get pvc
No resources found in default namespace.
[kubeadm@server1 nfs-client]$ kubectl get pv
No resources found in default namespace.
```

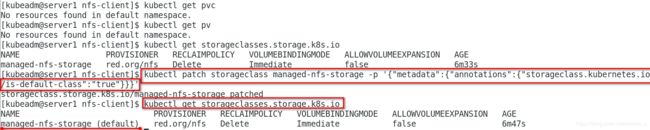

```shell
[kubeadm@server1 nfs-client]$ kubectl get storageclasses.storage.k8s.io
NAME                  PROVISIONER   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
managed-nfs-storage   red.org/nfs   Delete          Immediate           false                  6m33s
[kubeadm@server1 nfs-client]$ kubectl patch storageclass managed-nfs-storage -p '{"metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
storageclass.storage.k8s.io/managed-nfs-storage patched
[kubeadm@server1 nfs-client]$ kubectl get storageclasses.storage.k8s.io
NAME                            PROVISIONER   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
managed-nfs-storage (default)   red.org/nfs   Delete          Immediate           false                  6m47s
```

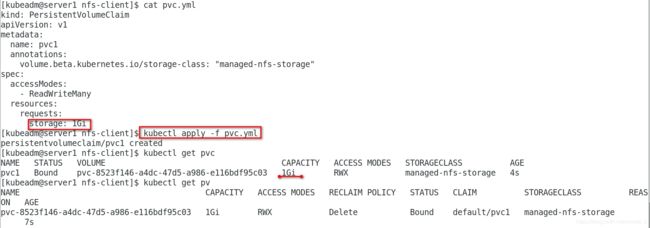

[kubeadm@server1 nfs-client]$ vim pvc.yml

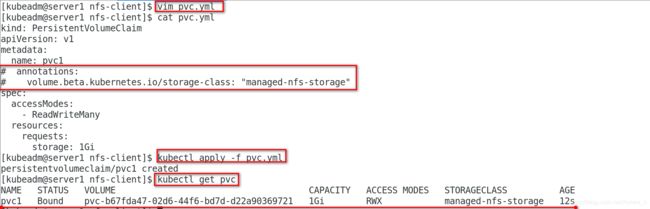

[kubeadm@server1 nfs-client]$ cat pvc.yml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: pvc1

# annotations:

# volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"

spec:

accessModes:

- ReadWriteMany

resources:

requests:

storage: 1Gi

[kubeadm@server1 nfs-client]$ kubectl apply -f pvc.yml

persistentvolumeclaim/pvc1 created

[kubeadm@server1 nfs-client]$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc1 Bound pvc-b67fda47-02d6-44f6-bd7d-d22a90369721 1Gi RWX managed-nfs-storage 12s3.StatefulSe控制器

3.1 Using a headless Service with a StatefulSet to preserve Pod topology state

Create the Service

[kubeadm@server1 nginx]$ pwd

/home/kubeadm/vol/nginx

[kubeadm@server1 nginx]$ vim service.yml

[kubeadm@server1 nginx]$ cat service.yml

apiVersion: v1

kind: Service

metadata:

name: nginx

labels:

app: nginx

spec:

ports:

- port: 80

name: web

clusterIP: None

selector:

app: nginx

[kubeadm@server1 nginx]$ kubectl apply -f service.yml

service/nginx created

[kubeadm@server1 nginx]$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 13d

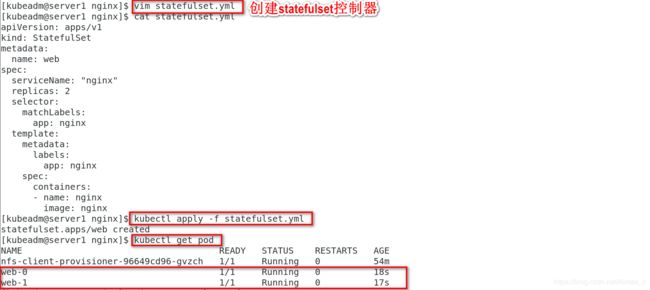

nginx ClusterIP None <none> 80/TCP 3s[kubeadm@server1 nginx]$ vim statefulset.yml

```shell
[kubeadm@server1 nginx]$ cat statefulset.yml
```

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
```

```shell
[kubeadm@server1 nginx]$ kubectl apply -f statefulset.yml
statefulset.apps/web created
[kubeadm@server1 nginx]$ kubectl get pod
NAME                                     READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-96649cd96-gvzch   1/1     Running   0          54m
web-0                                    1/1     Running   0          18s
web-1                                    1/1     Running   0          17s
```

To query the cluster DNS from the host, add the cluster DNS Service address (10.96.0.10) as a nameserver, then inspect the headless Service. Its endpoints are the IPs of the two Pods:

```shell
[kubeadm@server1 nginx]$ vim /etc/resolv.conf
[kubeadm@server1 nginx]$ cat /etc/resolv.conf
nameserver 10.96.0.10
nameserver 114.114.114.114
[kubeadm@server1 nginx]$ kubectl describe svc nginx
Name:              nginx
Namespace:         default
Labels:            app=nginx
Annotations:       Selector:  app=nginx
Type:              ClusterIP
IP:                None
Port:              web  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.1.87:80,10.244.2.104:80
Session Affinity:  None
Events:            <none>
```

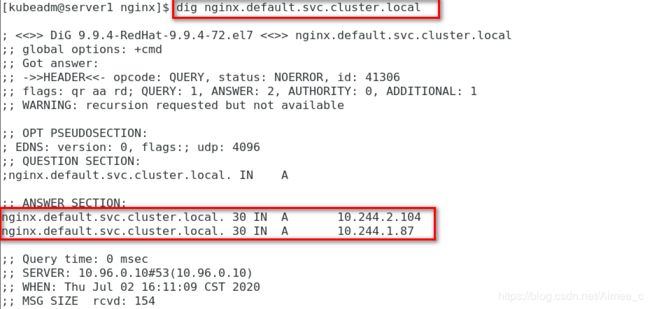

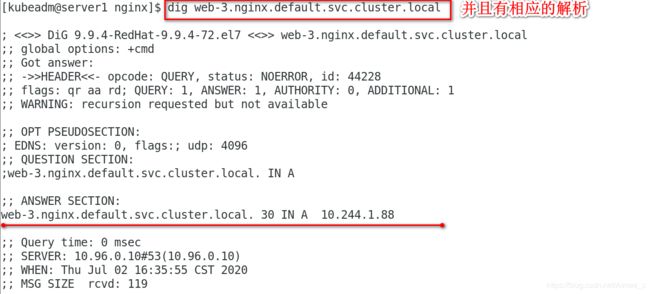

Because the Service is headless, its DNS name resolves directly to the Pod IPs:

```shell
[kubeadm@server1 nginx]$ dig nginx.default.svc.cluster.local

; <<>> DiG 9.9.4-RedHat-9.9.4-72.el7 <<>> nginx.default.svc.cluster.local
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 41306
;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1
;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;nginx.default.svc.cluster.local. IN    A

;; ANSWER SECTION:
nginx.default.svc.cluster.local. 30 IN  A       10.244.2.104
nginx.default.svc.cluster.local. 30 IN  A       10.244.1.87

;; Query time: 0 msec
;; SERVER: 10.96.0.10#53(10.96.0.10)
;; WHEN: Thu Jul 02 16:11:09 CST 2020
;; MSG SIZE rcvd: 154
```

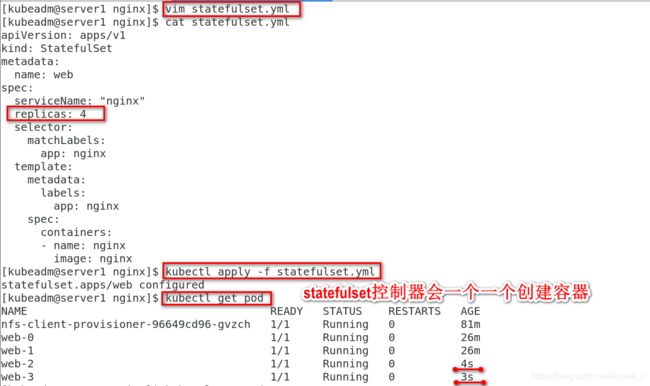

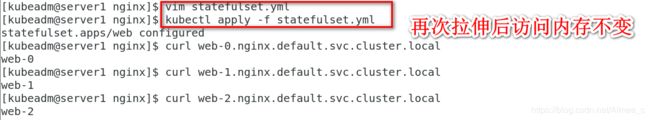

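3.2 StatefulSet application state and scaling

StatefulSet abstracts application state into two kinds:

• Topology state: the application's instances must start in a particular order, and a newly created Pod must have the same network identity as the Pod it replaces.

• Storage state: each instance of the application is bound to its own, separate storage data.

StatefulSet numbers all of its Pods using the rule $(statefulset name)-$(ordinal), with ordinals starting at 0.

When a Pod is deleted and re-created, the new Pod keeps the same network identity. The Pod's topology state is fixed by its "name + ordinal", and each Pod gets a fixed, unique access entry point: its DNS record (for example web-0.nginx.default.svc.cluster.local).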
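The naming rule and the per-Pod DNS record can be written down as a small bash sketch with hypothetical function names. It assumes the default cluster domain `cluster.local`, which is the domain the `dig` query above used.

```shell
# Hypothetical helpers (bash): stable identities of StatefulSet Pods.
sts_pod_name() {   # sts_pod_name STATEFULSET ORDINAL  -> e.g. web-0
  printf '%s-%d\n' "$1" "$2"
}

# DNS record of one Pod behind the governing headless Service.
# Assumes the default cluster domain "cluster.local".
sts_pod_fqdn() {   # sts_pod_fqdn STATEFULSET ORDINAL SERVICE NAMESPACE
  printf '%s.%s.%s.svc.cluster.local\n' "$(sts_pod_name "$1" "$2")" "$3" "$4"
}

# All Pod names for a given replica count: ordinals run from 0 to N-1.
sts_pod_names() {  # sts_pod_names STATEFULSET REPLICAS
  local i
  for (( i = 0; i < $2; i++ )); do sts_pod_name "$1" "$i"; done
}
```

For example, `sts_pod_fqdn mysql 2 mysql default` gives the hostname used later to connect to the third MySQL Pod.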

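Scaling with kubectl

First, find the StatefulSet you want to scale, and make sure the application can actually be scaled:

• `$ kubectl get statefulsets <stateful-set-name>`

Change the number of replicas of the StatefulSet:

• `$ kubectl scale statefulsets <stateful-set-name> --replicas=<new-replicas>`

• If the StatefulSet was originally created with kubectl apply or kubectl create --save-config, update .spec.replicas in the StatefulSet manifest and then run kubectl apply: `$ kubectl apply -f <stateful-set-file-updated>`

• Alternatively, edit the field with kubectl edit: `$ kubectl edit statefulsets <stateful-set-name>`

• Or use kubectl patch: `$ kubectl patch statefulsets <stateful-set-name> -p '{"spec":{"replicas":<new-replicas>}}'`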
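Under the default `podManagementPolicy: OrderedReady`, scaling up creates the missing Pods in ascending ordinal order, each one waiting for the previous to be Running and Ready, while scaling down removes Pods starting from the highest ordinal. The bash sketch below (a hypothetical function, not a kubectl feature) only prints that plan; it does not talk to the cluster.

```shell
# Hypothetical helper (bash): print which Pods a StatefulSet scale operation
# touches, in the order the controller handles them under OrderedReady.
scale_plan() {   # scale_plan STATEFULSET CURRENT_REPLICAS DESIRED_REPLICAS
  local sts="$1" cur="$2" want="$3" i
  if (( want > cur )); then
    # Scale up: create new ordinals from lowest to highest.
    for (( i = cur; i < want; i++ )); do echo "create ${sts}-${i}"; done
  else
    # Scale down (or no-op): remove ordinals from highest to lowest.
    for (( i = cur - 1; i >= want; i-- )); do echo "delete ${sts}-${i}"; done
  fi
}
```

For example, `scale_plan web 3 1` prints `delete web-2` followed by `delete web-1`, the order in which `kubectl scale statefulsets web --replicas=1` removes Pods.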

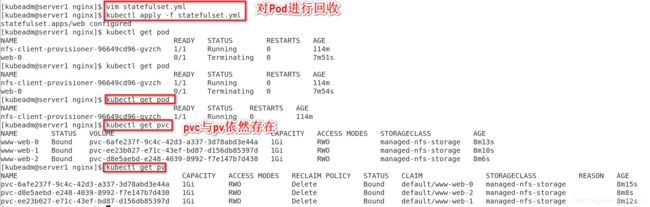

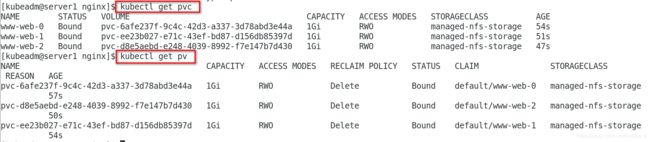

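3.3 StatefulSet + storage

The PV and PVC design is what makes it possible for a StatefulSet to manage storage state.

Pods are also created strictly in ordinal order: until web-0 is Running and its Ready condition is true, web-1 stays Pending.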

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      storageClassName: managed-nfs-storage
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
```

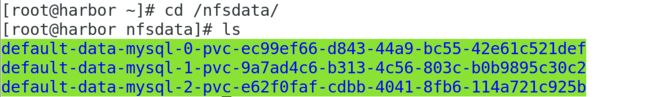

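The StatefulSet also creates one PVC per Pod with the same ordinal, named after the claim template and the Pod (here www-web-0, www-web-1, and www-web-2). Kubernetes then binds each PVC to a PV through the PersistentVolume mechanism, so every Pod gets its own independent volume.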
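The directory names in the NFS listing below follow from two rules: the StatefulSet names each PVC `<claim template>-<pod name>`, and nfs-client names the PV directory `${namespace}-${pvcName}-${pvName}`. A bash sketch with hypothetical function names:

```shell
# Hypothetical helpers (bash).
# PVC created by a StatefulSet from a volumeClaimTemplate.
sts_pvc_name() {     # sts_pvc_name TEMPLATE STATEFULSET ORDINAL -> www-web-0
  printf '%s-%s-%d\n' "$1" "$2" "$3"
}

# Start of the matching directory on the NFS export:
# ${namespace}-${pvcName}- followed by the generated PV name.
nfs_dir_prefix() {   # nfs_dir_prefix NAMESPACE TEMPLATE STATEFULSET ORDINAL
  printf '%s-%s-\n' "$1" "$(sts_pvc_name "$2" "$3" "$4")"
}
```

For example, `nfs_dir_prefix default www web 1` prints `default-www-web-1-`, the prefix of the web-1 directory on the NFS server.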

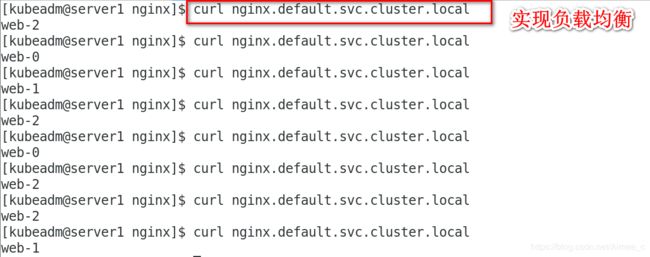

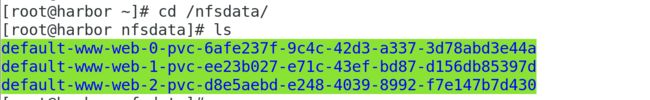

Set up load balancing

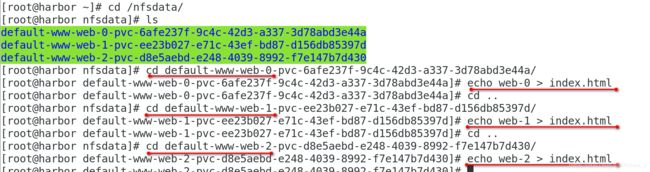

On the NFS server, each Pod's volume is a separate directory. Write a different index.html into each one so the replicas can be told apart:

```shell
[root@harbor ~]# cd /nfsdata/
[root@harbor nfsdata]# ls
default-www-web-0-pvc-6afe237f-9c4c-42d3-a337-3d78abd3e44a
default-www-web-1-pvc-ee23b027-e71c-43ef-bd87-d156db85397d
default-www-web-2-pvc-d8e5aebd-e248-4039-8992-f7e147b7d430
[root@harbor nfsdata]# cd default-www-web-0-pvc-6afe237f-9c4c-42d3-a337-3d78abd3e44a/
[root@harbor default-www-web-0-pvc-6afe237f-9c4c-42d3-a337-3d78abd3e44a]# echo web-0 > index.html
[root@harbor default-www-web-0-pvc-6afe237f-9c4c-42d3-a337-3d78abd3e44a]# cd ..
[root@harbor nfsdata]# cd default-www-web-1-pvc-ee23b027-e71c-43ef-bd87-d156db85397d/
[root@harbor default-www-web-1-pvc-ee23b027-e71c-43ef-bd87-d156db85397d]# echo web-1 > index.html
[root@harbor default-www-web-1-pvc-ee23b027-e71c-43ef-bd87-d156db85397d]# cd ..
[root@harbor nfsdata]# cd default-www-web-2-pvc-d8e5aebd-e248-4039-8992-f7e147b7d430/
[root@harbor default-www-web-2-pvc-d8e5aebd-e248-4039-8992-f7e147b7d430]# echo web-2 > index.html
```

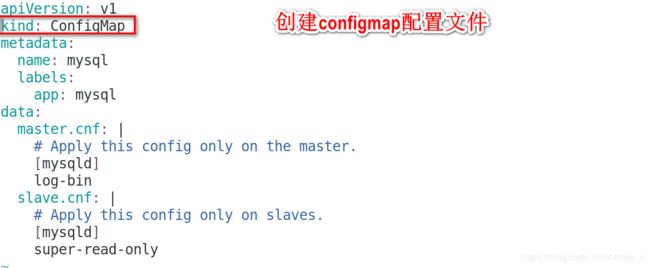

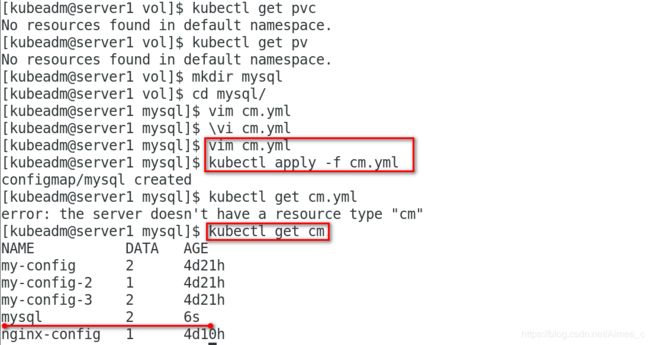

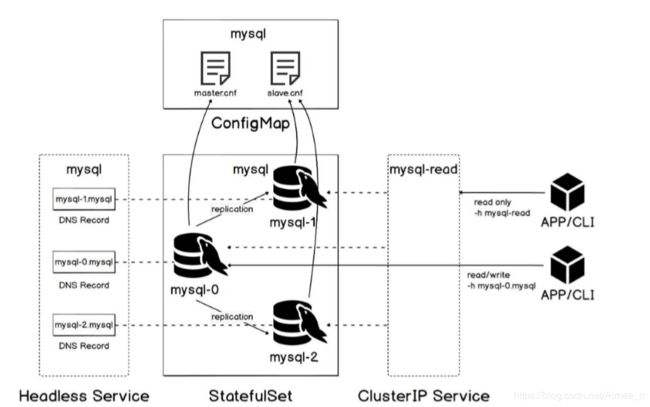

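3.4 Deploying a MySQL master-slave cluster with a StatefulSet

Reference (official documentation): https://kubernetes.io/zh/docs/tasks/run-application/run-replicated-stateful-application/

Create the ConfigMap

This ConfigMap provides my.cnf overrides that let you control the configuration of the MySQL master and the slaves independently.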

```shell
[kubeadm@server1 mysql]$ cat cm.yml
```

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql
  labels:
    app: mysql
data:
  master.cnf: |
    # Apply this config only on the master.
    [mysqld]
    log-bin
  slave.cnf: |
    # Apply this config only on slaves.
    [mysqld]
    super-read-only
```

```shell
[kubeadm@server1 mysql]$ vim cm.yml
[kubeadm@server1 mysql]$ kubectl apply -f cm.yml
configmap/mysql created
[kubeadm@server1 mysql]$ kubectl get cm
NAME           DATA   AGE
my-config      2      4d21h
my-config-2    1      4d21h
my-config-3    2      4d21h
mysql          2      6s
nginx-config   1      4d10h
```

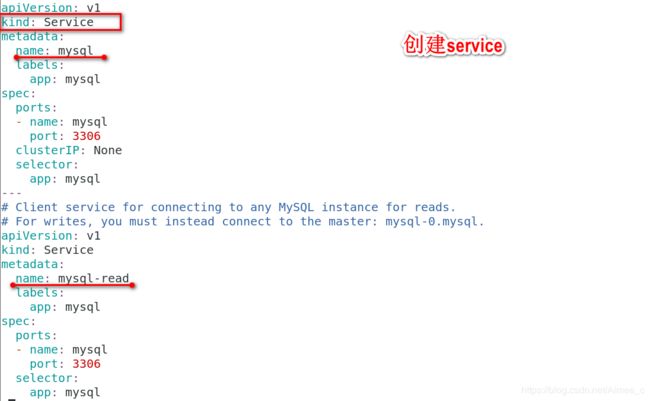

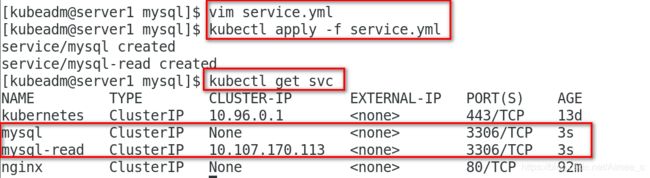

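Create the Services

The headless Service provides a home for the DNS entries that the StatefulSet controller creates for each Pod in the set. Because the headless Service is named mysql, any other Pod in the same Kubernetes cluster and namespace can reach a Pod by resolving `<pod-name>.mysql`.

The client Service, called mysql-read, is a normal Service with its own cluster IP that distributes connections across all MySQL Pods that report being Ready. The set of potential endpoints includes the MySQL master and all slaves.

Note that only read queries can use the load-balanced client Service. Because there is only one MySQL master, clients must connect directly to the master Pod (through its DNS entry in the headless Service, mysql-0.mysql) to perform writes.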
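The read/write split above can be captured in a tiny client-side bash sketch. The function name is hypothetical, and the short names only resolve from a Pod in the same namespace as the Services.

```shell
# Hypothetical client-side helper (bash): pick the MySQL host for a statement.
# Short names resolve from a Pod in the same namespace as the Services.
mysql_host_for() {   # mysql_host_for read|write
  case "$1" in
    write) echo "mysql-0.mysql" ;;  # the single master, via the headless Service
    read)  echo "mysql-read" ;;     # load-balanced across all Ready MySQL Pods
    *)     echo "usage: mysql_host_for read|write" >&2; return 2 ;;
  esac
}
```

Inside a client Pod this could be used as `mysql -h "$(mysql_host_for write)" -e "CREATE DATABASE test"`.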

```shell
[kubeadm@server1 mysql]$ cat service.yml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql
  labels:
    app: mysql
spec:
  ports:
  - name: mysql
    port: 3306
  clusterIP: None
  selector:
    app: mysql
---
# Client service for connecting to any MySQL instance for reads.
# For writes, you must instead connect to the master: mysql-0.mysql.
apiVersion: v1
kind: Service
metadata:
  name: mysql-read
  labels:
    app: mysql
spec:
  ports:
  - name: mysql
    port: 3306
  selector:
    app: mysql
```

Create the StatefulSet

[root@server2 mysql]# cat statefulset.yml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: mysql

spec:

selector:

matchLabels:

app: mysql

serviceName: mysql

replicas: 3

template:

metadata:

labels:

app: mysql

spec:

initContainers:

- name: init-mysql

image: mysql:5.7

command:

- bash

- "-c"

- |

set -ex

# Generate mysql server-id from pod ordinal index.

[[ `hostname` =~ -([0-9]+)$ ]] || exit 1

ordinal=${BASH_REMATCH[1]}

echo [mysqld] > /mnt/conf.d/server-id.cnf

# Add an offset to avoid reserved server-id=0 value.

echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf

# Copy appropriate conf.d files from config-map to emptyDir.

if [[ $ordinal -eq 0 ]]; then

cp /mnt/config-map/master.cnf /mnt/conf.d/

else

cp /mnt/config-map/slave.cnf /mnt/conf.d/

fi

volumeMounts:

- name: conf

mountPath: /mnt/conf.d

- name: config-map

mountPath: /mnt/config-map

- name: clone-mysql

image: xtrabackup:1.0

command:

- bash

- "-c"

- |

set -ex

# Skip the clone if data already exists.

[[ -d /var/lib/mysql/mysql ]] && exit 0

# Skip the clone on master (ordinal index 0).

[[ `hostname` =~ -([0-9]+)$ ]] || exit 1

ordinal=${BASH_REMATCH[1]}

[[ $ordinal -eq 0 ]] && exit 0

# Clone data from previous peer.

ncat --recv-only mysql-$(($ordinal-1)).mysql 3307 | xbstream -x -C /var/lib/mysql

# Prepare the backup.

xtrabackup --prepare --target-dir=/var/lib/mysql

volumeMounts:

- name: data

mountPath: /var/lib/mysql

subPath: mysql

- name: conf

mountPath: /etc/mysql/conf.d

containers:

- name: mysql

image: mysql:5.7

env:

- name: MYSQL_ALLOW_EMPTY_PASSWORD

value: "1"

ports:

- name: mysql

containerPort: 3306

volumeMounts:

- name: data

mountPath: /var/lib/mysql

subPath: mysql

- name: conf

mountPath: /etc/mysql/conf.d

resources:

requests:

cpu: 500m

memory: 512M

livenessProbe:

exec:

command: ["mysqladmin", "ping"]

initialDelaySeconds: 30

periodSeconds: 10

timeoutSeconds: 5

readinessProbe:

exec:

# Check we can execute queries over TCP (skip-networking is off).

command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]

initialDelaySeconds: 5

periodSeconds: 2

timeoutSeconds: 1

- name: xtrabackup

image: xtrabackup:1.0 ##用于打开3307端口,向slave发送数据

ports:

- name: xtrabackup

containerPort: 3307

command:

- bash

- "-c"

- |

set -ex

cd /var/lib/mysql

# Determine binlog position of cloned data, if any.

if [[ -f xtrabackup_slave_info && "x$(<xtrabackup_slave_info)" != "x" ]]; then

# XtraBackup already generated a partial "CHANGE MASTER TO" query

# because we're cloning from an existing slave. (Need to remove the tailing semicolon!)

cat xtrabackup_slave_info | sed -E 's/;$//g' > change_master_to.sql.in

# Ignore xtrabackup_binlog_info in this case (it's useless).

rm -f xtrabackup_slave_info xtrabackup_binlog_info

elif [[ -f xtrabackup_binlog_info ]]; then

# We're cloning directly from master. Parse binlog position.

[[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1

rm -f xtrabackup_binlog_info xtrabackup_slave_info

echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\

MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in

fi

# Check if we need to complete a clone by starting replication.

if [[ -f change_master_to.sql.in ]]; then

echo "Waiting for mysqld to be ready (accepting connections)"

until mysql -h 127.0.0.1 -e "SELECT 1"; do sleep 1; done

echo "Initializing replication from clone position"

mysql -h 127.0.0.1 \

-e "$(<change_master_to.sql.in), \

MASTER_HOST='mysql-0.mysql', \

MASTER_USER='root', \

MASTER_PASSWORD='', \

MASTER_CONNECT_RETRY=10; \

START SLAVE;" || exit 1

# In case of container restart, attempt this at-most-once.

mv change_master_to.sql.in change_master_to.sql.orig

fi

# Start a server to send backups when requested by peers.

exec ncat --listen --keep-open --send-only --max-conns=1 3307 -c \

"xtrabackup --backup --slave-info --stream=xbstream --host=127.0.0.1 --user=root"

volumeMounts:

- name: data

mountPath: /var/lib/mysql

subPath: mysql

- name: conf

mountPath: /etc/mysql/conf.d

resources:

requests:

cpu: 100m

memory: 100Mi

volumes:

- name: conf

emptyDir: {}

- name: config-map

configMap:

name: mysql

volumeClaimTemplates:

- metadata:

name: data

spec:

accessModes: ["ReadWriteOnce"]

resources:

requests:

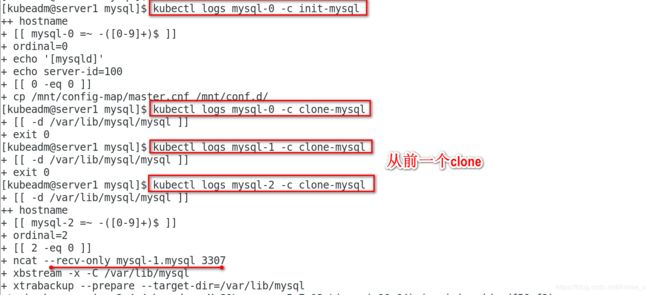

storage: 10Gi [kubeadm@server1 mysql]$ kubectl logs mysql-0 -c init-mysql

++ hostname

+ [[ mysql-0 =~ -([0-9]+)$ ]]

+ ordinal=0

+ echo '[mysqld]'

+ echo server-id=100

+ [[ 0 -eq 0 ]]

+ cp /mnt/config-map/master.cnf /mnt/conf.d/

[kubeadm@server1 mysql]$ kubectl logs mysql-0 -c clone-mysql

+ [[ -d /var/lib/mysql/mysql ]]

+ exit 0

[kubeadm@server1 mysql]$ kubectl logs mysql-1 -c clone-mysql

+ [[ -d /var/lib/mysql/mysql ]]

+ exit 0

[kubeadm@server1 mysql]$ kubectl logs mysql-2 -c clone-mysql

+ [[ -d /var/lib/mysql/mysql ]]

++ hostname

+ [[ mysql-2 =~ -([0-9]+)$ ]]

+ ordinal=2

+ [[ 2 -eq 0 ]]

+ ncat --recv-only mysql-1.mysql 3307

+ xbstream -x -C /var/lib/mysql

+ xtrabackup --prepare --target-dir=/var/lib/mysql

Slave 1 (mysql-1) clones the master's (mysql-0) data, and slave 2 (mysql-2) clones the data of slave 1 (mysql-1).
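The per-Pod decisions made by the two init containers can be replayed outside the cluster. The bash sketch below (a hypothetical function name) uses the same hostname regex and server-id offset as init-mysql and the same choice of peer as clone-mysql. It does not model the early exit when /var/lib/mysql/mysql already exists, which is what the mysql-1 clone-mysql log above shows.

```shell
# Hypothetical helper (bash): replay init-mysql / clone-mysql decisions for a Pod.
mysql_plan() {   # mysql_plan POD_HOSTNAME
  local host="$1" ordinal
  # Same check as the init containers: the hostname must end in -<ordinal>.
  [[ $host =~ -([0-9]+)$ ]] || return 1
  ordinal=${BASH_REMATCH[1]}
  # An offset of 100 avoids the reserved server-id 0.
  echo "server-id=$((100 + ordinal))"
  if (( ordinal == 0 )); then
    echo "config=master.cnf"
    echo "clone-from=none"            # the master never clones
  else
    echo "config=slave.cnf"
    # Each slave streams a backup from the previous peer's xtrabackup port.
    echo "clone-from=mysql-$((ordinal - 1)).mysql:3307"
  fi
}
```

`mysql_plan mysql-0` prints server-id=100, which matches the server-id.cnf generated on the master.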

Check the configuration generated for the master:

```shell
[kubeadm@server1 mysql]$ kubectl exec mysql-0 -c mysql -- ls /etc/mysql/conf.d
master.cnf
server-id.cnf
[kubeadm@server1 mysql]$ kubectl exec mysql-0 -c mysql -- cat /etc/mysql/conf.d/master.cnf
# Apply this config only on the master.
[mysqld]
log-bin
[kubeadm@server1 mysql]$ kubectl exec mysql-0 -c mysql -- cat /etc/mysql/conf.d/server-id.cnf
[mysqld]
server-id=100
```

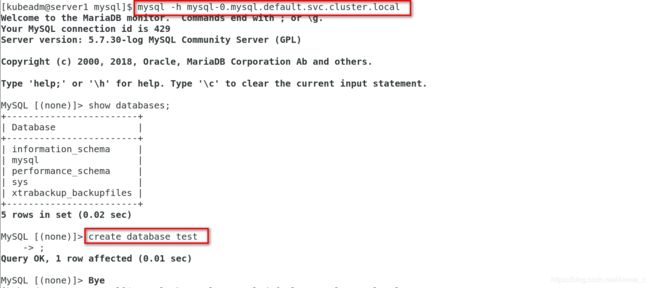

Create a database on the master (mysql-0):

```shell
[kubeadm@server1 mysql]$ mysql -h mysql-0.mysql.default.svc.cluster.local
Welcome to the MariaDB monitor. Commands end with ; or \g.
Your MySQL connection id is 429
Server version: 5.7.30-log MySQL Community Server (GPL)

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> show databases;
+------------------------+
| Database               |
+------------------------+
| information_schema     |
| mysql                  |
| performance_schema     |
| sys                    |
| xtrabackup_backupfiles |
+------------------------+
5 rows in set (0.02 sec)

MySQL [(none)]> create database test;
Query OK, 1 row affected (0.01 sec)

MySQL [(none)]> Bye
```

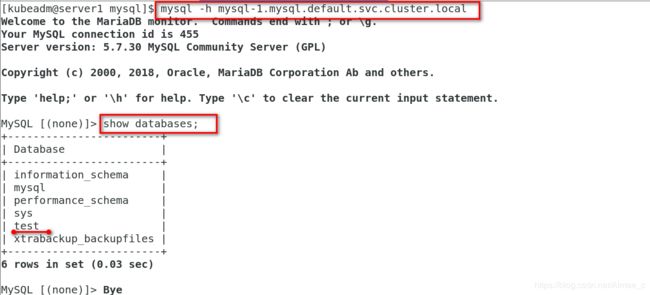

The test database has been replicated to mysql-1:

```shell
[kubeadm@server1 mysql]$ mysql -h mysql-1.mysql.default.svc.cluster.local
Welcome to the MariaDB monitor. Commands end with ; or \g.
Your MySQL connection id is 455
Server version: 5.7.30 MySQL Community Server (GPL)

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> show databases;
+------------------------+
| Database               |
+------------------------+
| information_schema     |
| mysql                  |
| performance_schema     |
| sys                    |
| test                   |
| xtrabackup_backupfiles |
+------------------------+
6 rows in set (0.03 sec)

MySQL [(none)]> Bye
```

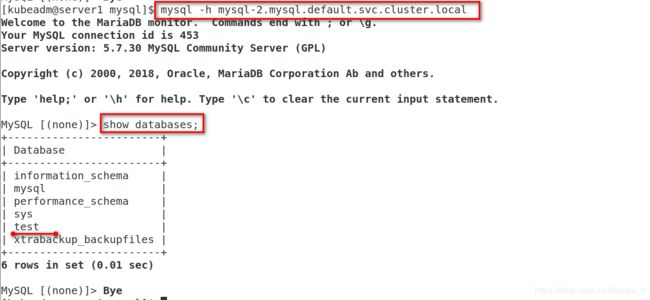

It has also been replicated to mysql-2:

```shell
[kubeadm@server1 mysql]$ mysql -h mysql-2.mysql.default.svc.cluster.local
Welcome to the MariaDB monitor. Commands end with ; or \g.
Your MySQL connection id is 453
Server version: 5.7.30 MySQL Community Server (GPL)

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> show databases;
+------------------------+
| Database               |
+------------------------+
| information_schema     |
| mysql                  |
| performance_schema     |
| sys                    |
| test                   |
| xtrabackup_backupfiles |
+------------------------+
6 rows in set (0.01 sec)

MySQL [(none)]> Bye
```