MapReduce Design Patterns-chapter 7

CHAPTER 7: Input and Output Patterns

Customizing Input and Output in Hadoop

Hadoop allows you to modify the way data is loaded on disk in two major ways: configuring how contiguous chunks of input are generated from blocks in HDFS (or maybe more exotic sources), and configuring how records appear in the map phase. The two classes you’ll be playing with to do this are RecordReader and InputFormat.

Hadoop also allows you to modify the way data is stored in an analogous way: with an OutputFormat and a RecordWriter.

InputFormat

Hadoop relies on the input format of the job to do three things:

1. Validate the input configuration for the job (i.e., checking that the data is there).

2. Split the input blocks and files into logical chunks of type InputSplit, each of which is assigned to a map task for processing.

3. Create the RecordReader implementation to be used to create key/value pairs from the raw InputSplit. These pairs are sent one by one to their mapper.

The InputFormat abstract class contains two abstract methods:

getSplits

The implementation of getSplits typically uses the given JobContext object to retrieve the configured input and return a List of InputSplit objects. The input splits have a method to return an array of machines associated with the locations of the data in the cluster, which gives clues to the framework as to which TaskTracker should process the map task. This method is also a good place to verify the configuration and throw any necessary exceptions, because the method is used on the front-end (i.e. before the job is submitted to the JobTracker).
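To make the idea of splits concrete, here is a minimal, Hadoop-free sketch of what a getSplits implementation does for a single file: validate the configuration, then cut the input into byte ranges that each carry a list of preferred hosts. The ByteRangeSplit and SplitSketch classes are hypothetical stand-ins invented for illustration, not Hadoop classes.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for an InputSplit: a byte range plus preferred hosts. */
final class ByteRangeSplit {
    final long start;
    final long length;
    final String[] hosts;

    ByteRangeSplit(long start, long length, String[] hosts) {
        this.start = start;
        this.length = length;
        this.hosts = hosts;
    }
}

final class SplitSketch {
    /** Cuts the range [0, fileLength) into pieces of at most splitSize bytes. */
    static List<ByteRangeSplit> getSplits(long fileLength, long splitSize, String[] hosts) {
        // Validate up front, since this runs before the job is submitted.
        if (fileLength < 0 || splitSize <= 0) {
            throw new IllegalArgumentException("invalid input configuration");
        }
        List<ByteRangeSplit> splits = new ArrayList<>();
        for (long start = 0; start < fileLength; start += splitSize) {
            long length = Math.min(splitSize, fileLength - start);
            splits.add(new ByteRangeSplit(start, length, hosts));
        }
        return splits;
    }

    public static void main(String[] args) {
        for (ByteRangeSplit s : getSplits(250, 100, new String[] {"node1"})) {
            System.out.println(s.start + "+" + s.length);
        }
    }
}
```

Each element of the returned list would become one map task; the hosts array plays the role of the location hints described above.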

createRecordReader

This method is used on the back-end to generate an implementation of RecordReader, which we’ll discuss in more detail shortly. Typically, a new instance is created and immediately returned, because the record reader has an initialize method that is called by the framework.

RecordReader

The RecordReader abstract class creates key/value pairs from a given InputSplit. While the InputSplit represents the byte-oriented view of the split, the RecordReader makes sense out of it for processing by a mapper.
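The way a map task consumes a record reader can be sketched without Hadoop. The SimpleRecordReader interface below is a hypothetical, trimmed-down stand-in for the real abstract class (it omits initialize, getProgress, and close), and LineReader is an invented example that turns a list of lines into (line number, line) pairs.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

/** Hypothetical, simplified stand-in for the RecordReader contract. */
interface SimpleRecordReader<K, V> {
    boolean nextKeyValue();
    K getCurrentKey();
    V getCurrentValue();
}

/** Turns a list of lines into (line number, line) pairs. */
final class LineReader implements SimpleRecordReader<Integer, String> {
    private final Iterator<String> lines;
    private int lineNo = -1;
    private String current;

    LineReader(List<String> lines) {
        this.lines = lines.iterator();
    }

    public boolean nextKeyValue() {
        if (!lines.hasNext()) {
            return false;
        }
        current = lines.next();
        ++lineNo;
        return true;
    }

    public Integer getCurrentKey() { return lineNo; }

    public String getCurrentValue() { return current; }
}

final class ReaderLoopSketch {
    /** Mimics the loop a map task runs: pull pairs until the reader is exhausted. */
    static List<String> runMapTask(SimpleRecordReader<Integer, String> reader) {
        List<String> seen = new ArrayList<>();
        while (reader.nextKeyValue()) {
            seen.add(reader.getCurrentKey() + ":" + reader.getCurrentValue());
        }
        return seen;
    }

    public static void main(String[] args) {
        System.out.println(runMapTask(new LineReader(Arrays.asList("a", "b"))));
    }
}
```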

OutputFormat

Hadoop relies on the output format of the job for two main tasks:

1. Validate the output configuration for the job.

2. Create the RecordWriter implementation that will write the output of the job.

RecordWriter

The RecordWriter abstract class writes key/value pairs to a file system, or another output.
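As a rough illustration of the writing side, the sketch below shows a writer that emits one tab-separated line per key/value pair. TabSeparatedWriter is a hypothetical class written for this note; it only approximates the shape of a RecordWriter (a write method and a close method) and writes to any java.io.Writer.

```java
import java.io.PrintWriter;
import java.io.StringWriter;
import java.io.Writer;

/** Hypothetical stand-in for a RecordWriter that emits tab-separated lines. */
final class TabSeparatedWriter<K, V> {
    private final PrintWriter out;

    TabSeparatedWriter(Writer sink) {
        this.out = new PrintWriter(sink);
    }

    /** Writes one key/value pair as "key<TAB>value" followed by a newline. */
    void write(K key, V value) {
        out.print(key + "\t" + value + "\n");
    }

    /** Flushes and closes the underlying sink. */
    void close() {
        out.close();
    }

    public static void main(String[] args) {
        StringWriter sink = new StringWriter();
        TabSeparatedWriter<String, Integer> writer = new TabSeparatedWriter<>(sink);
        writer.write("alice", 42);
        writer.close();
        System.out.print(sink);
    }
}
```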

Generating Data

Problem: Generating random StackOverflow comments

Driver code:

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

int numMapTasks = Integer.parseInt(args[0]);

int numRecordsPerTask = Integer.parseInt(args[1]);

Path wordList = new Path(args[2]);

Path outputDir = new Path(args[3]);

Job job = new Job(conf, "RandomDataGenerationDriver");

job.setJarByClass(RandomDataGenerationDriver.class);

job.setNumReduceTasks(0);

job.setInputFormatClass(RandomStackOverflowInputFormat.class);

RandomStackOverflowInputFormat.setNumMapTasks(job, numMapTasks);

RandomStackOverflowInputFormat.setNumRecordPerTask(job,

numRecordsPerTask);

RandomStackOverflowInputFormat.setRandomWordList(job, wordList);

TextOutputFormat.setOutputPath(job, outputDir);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(NullWritable.class);

System.exit(job.waitForCompletion(true) ? 0 : 2);

}

InputSplit code:

The FakeInputSplit class simply extends InputSplit and implements Writable. None of the overridden methods has a real implementation; those that must return a value return a trivial one. This input split is used to trick the framework into assigning a task to generate the random data.

FakeInputSplit stands in for one chunk of split input.

public static class FakeInputSplit extends InputSplit implements

Writable {

public void readFields(DataInput arg0) throws IOException {

}

public void write(DataOutput arg0) throws IOException {

}

public long getLength() throws IOException, InterruptedException {

return 0;

}

public String[] getLocations() throws IOException,

InterruptedException {

return new String[0];

}

}

RandomStackOverflowInputFormat uses the configuration set in the driver to generate the required number of splits and the corresponding RecordReader.

public static class RandomStackOverflowInputFormat extends

InputFormat<Text, NullWritable> {

public static final String NUM_MAP_TASKS = "random.generator.map.tasks";

public static final String NUM_RECORDS_PER_TASK =

"random.generator.num.records.per.map.task";

public static final String RANDOM_WORD_LIST =

"random.generator.random.word.file";

public List<InputSplit> getSplits(JobContext job) throws IOException {

// Get the number of map tasks configured for the job

int numSplits = job.getConfiguration().getInt(NUM_MAP_TASKS, -1);

// Create a number of input splits equivalent to the number of tasks

ArrayList<InputSplit> splits = new ArrayList<InputSplit>();

for (int i = 0; i < numSplits; ++i) {

splits.add(new FakeInputSplit());

}

return splits;

}

public RecordReader<Text, NullWritable> createRecordReader(

InputSplit split, TaskAttemptContext context)

throws IOException, InterruptedException {

// Create a new RandomStackOverflowRecordReader and initialize it

RandomStackOverflowRecordReader rr =

new RandomStackOverflowRecordReader();

rr.initialize(split, context);

return rr;

}

public static void setNumMapTasks(Job job, int i) {

job.getConfiguration().setInt(NUM_MAP_TASKS, i);

}

public static void setNumRecordPerTask(Job job, int i) {

job.getConfiguration().setInt(NUM_RECORDS_PER_TASK, i);

}

public static void setRandomWordList(Job job, Path file) {

DistributedCache.addCacheFile(file.toUri(), job.getConfiguration());

}

}

RecordReader code:

This record reader is where the data is actually generated. It is given our FakeInputSplit during initialization, but simply ignores it. The number of records to create is pulled from the job configuration, and the list of random words is read from the DistributedCache. For each call to nextKeyValue, a random record is created using a simple random number generator. The body of the comment is generated by a helper function that randomly selects between one and thirty words from the list. The counter is incremented to keep track of how many records have been generated. Once all the records are generated, the record reader returns false, signaling the framework that there is no more input for the mapper.
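The counting logic described above can be tried outside Hadoop. The following is a minimal sketch under stated assumptions: RandomTextReader is a hypothetical class, the word list is passed in directly instead of being read from the DistributedCache, and a fixed seed is used so runs are repeatable. It draws bounded values with Random.nextInt(bound), which sidesteps the corner case where Math.abs(Integer.MIN_VALUE) is still negative.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Random;

/** Hypothetical, Hadoop-free sketch of a record reader that invents its input. */
final class RandomTextReader {
    private final List<String> words;
    private final int numRecordsToCreate;
    private final Random rndm;
    private int createdRecords = 0;
    private String current;

    RandomTextReader(List<String> words, int numRecordsToCreate, long seed) {
        this.words = words;
        this.numRecordsToCreate = numRecordsToCreate;
        this.rndm = new Random(seed);
    }

    boolean nextKeyValue() {
        if (createdRecords >= numRecordsToCreate) {
            return false; // tells the caller there is no more input
        }
        StringBuilder bldr = new StringBuilder();
        int numWords = rndm.nextInt(30) + 1; // between 1 and 30 words
        for (int i = 0; i < numWords; ++i) {
            if (i > 0) {
                bldr.append(' ');
            }
            bldr.append(words.get(rndm.nextInt(words.size())));
        }
        current = bldr.toString();
        ++createdRecords;
        return true;
    }

    String getCurrentKey() {
        return current;
    }

    /** Fraction of records generated so far; 1.0 when nothing was requested. */
    float getProgress() {
        return numRecordsToCreate == 0 ? 1.0f : (float) createdRecords / numRecordsToCreate;
    }

    public static void main(String[] args) {
        RandomTextReader reader =
                new RandomTextReader(Arrays.asList("map", "reduce", "split"), 3, 42L);
        while (reader.nextKeyValue()) {
            System.out.println(reader.getCurrentKey());
        }
    }
}
```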

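The initialize method loads the word list used later to generate key/value pairs, nextKeyValue generates each key and value, and getProgress returns the completed fraction, that is, the number of records generated so far divided by the number of records to generate.

public static class RandomStackOverflowRecordReader extends

RecordReader<Text, NullWritable> {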

private int numRecordsToCreate = 0;

private int createdRecords = 0;

private Text key = new Text();

private NullWritable value = NullWritable.get();

private Random rndm = new Random();

private ArrayList<String> randomWords = new ArrayList<String>();

// This object will format the creation date string into a Date

// object

private SimpleDateFormat frmt = new SimpleDateFormat(

"yyyy-MM-dd'T'HH:mm:ss.SSS");

public void initialize(InputSplit split, TaskAttemptContext context)

throws IOException, InterruptedException {

// Get the number of records to create from the configuration

this.numRecordsToCreate = context.getConfiguration().getInt(

NUM_RECORDS_PER_TASK, -1);

// Get the list of random words from the DistributedCache

URI[] files = DistributedCache.getCacheFiles(context

.getConfiguration());

// Read the list of random words into a list

BufferedReader rdr = new BufferedReader(new FileReader(

files[0].toString()));

String line;

while ((line = rdr.readLine()) != null) {

randomWords.add(line);

}

rdr.close();

}

public boolean nextKeyValue() throws IOException,

InterruptedException {

// If we still have records to create

if (createdRecords < numRecordsToCreate) {

// Generate random data

int score = Math.abs(rndm.nextInt()) % 15000;

int rowId = Math.abs(rndm.nextInt()) % 1000000000;

int postId = Math.abs(rndm.nextInt()) % 100000000;

int userId = Math.abs(rndm.nextInt()) % 1000000;

String creationDate = frmt

.format(Math.abs(rndm.nextLong()));

// Create a string of text from the random words

String text = getRandomText();

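// Assemble the generated values into an XML row shaped like the

// StackOverflow comment data

String randomRecord = "<row Id=\"" + rowId + "\" PostId=\""

+ postId + "\" Score=\"" + score + "\" Text=\""

+ text + "\" CreationDate=\"" + creationDate

+ "\" UserId=\"" + userId + "\" />";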

key.set(randomRecord);

++createdRecords;

return true;

} else {

// We are done creating records

return false;

}

}

private String getRandomText() {

StringBuilder bldr = new StringBuilder();

int numWords = Math.abs(rndm.nextInt()) % 30 + 1;

for (int i = 0; i < numWords; ++i) {

bldr.append(randomWords.get(Math.abs(rndm.nextInt())

% randomWords.size())

+ " ");

}

return bldr.toString();

}

public Text getCurrentKey() throws IOException,

InterruptedException {

return key;

}

public NullWritable getCurrentValue() throws IOException,

InterruptedException {

return value;

}

public float getProgress() throws IOException, InterruptedException {

return (float) createdRecords / (float) numRecordsToCreate;

}

public void close() throws IOException {

// nothing to do here...

}

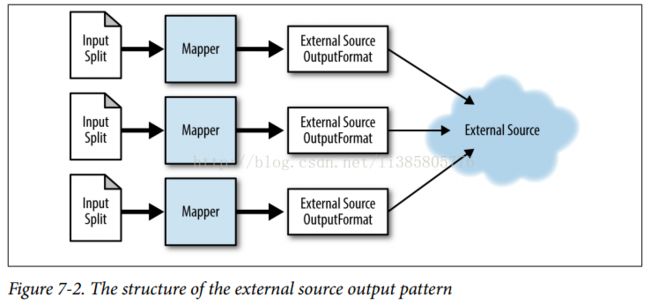

} External Source Output

Note that task failures are bound to happen, and when they do, any key/value pairs written in the write method can’t be reverted. In a typical MapReduce job, temporary output is written to the file system. In the event of a failure, this output is simply discarded. When writing to an external source directly, it will receive the data in a stream. If a task fails, the external source won’t automatically know about it and discard all the data it received from a task. If this is unacceptable, consider using a custom OutputCommitter to write temporary output to the file system. This temporary output can then be read, delivered to the external source, and deleted upon success, or deleted from the file system outright in the event of a failure.
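The stage-then-deliver idea can be sketched in a few lines. This is only an illustration under loud assumptions: StagedOutput is a hypothetical class, an in-memory list stands in for both the temporary file-system output and the external source, and in a real job the commit and abort steps would live in a custom OutputCommitter.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: buffer a task's output and deliver it only on success. */
final class StagedOutput {
    private final List<String> staged = new ArrayList<>();
    private final List<String> externalSink;

    StagedOutput(List<String> externalSink) {
        this.externalSink = externalSink;
    }

    /** Records a pair in temporary storage; nothing reaches the sink yet. */
    void write(String key, String value) {
        staged.add(key + "=" + value);
    }

    /** Task succeeded: deliver everything, then drop the temporary copy. */
    void commit() {
        externalSink.addAll(staged);
        staged.clear();
    }

    /** Task failed: discard the temporary copy; the sink never saw it. */
    void abort() {
        staged.clear();
    }

    public static void main(String[] args) {
        List<String> sink = new ArrayList<>();
        StagedOutput attempt = new StagedOutput(sink);
        attempt.write("user1", "10");
        attempt.commit();
        System.out.println(sink);
    }
}
```

The trade-off is extra I/O: every record is written twice, once to the staging area and once to the external source.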

Writing to Redis instances

In order to work with the Hadoop framework, Jedis is used to communicate with Redis. Jedis is an open-source “blazingly small and sane Redis java client.”

A Redis hash is a map between string fields and string values, similar to a Java HashMap. Each hash is given a key to identify the hash. Every hash can store more than four billion field-value pairs.

Problem: Given a set of user information, randomly distribute user-to-reputation mappings to a configurable number of Redis instances in parallel.

OutputFormat code:

The output committer is used by the framework to manage any temporary output before committing, in case the task fails and needs to be re-executed.

public static class RedisHashOutputFormat extends OutputFormat<Text, Text> {

public static final String REDIS_HOSTS_CONF =

"mapred.redishashoutputformat.hosts";

public static final String REDIS_HASH_KEY_CONF =

"mapred.redishashinputformat.key";

public static void setRedisHosts(Job job, String hosts) {

job.getConfiguration().set(REDIS_HOSTS_CONF, hosts);

}

public static void setRedisHashKey(Job job, String hashKey) {

job.getConfiguration().set(REDIS_HASH_KEY_CONF, hashKey);

}

public RecordWriter<Text, Text> getRecordWriter(TaskAttemptContext job)

throws IOException, InterruptedException {

return new RedisHashRecordWriter(job.getConfiguration().get(

REDIS_HASH_KEY_CONF), job.getConfiguration().get(

REDIS_HOSTS_CONF));

}

public void checkOutputSpecs(JobContext job) throws IOException {

String hosts = job.getConfiguration().get(REDIS_HOSTS_CONF);

if (hosts == null || hosts.isEmpty()) {

throw new IOException(REDIS_HOSTS_CONF

+ " is not set in configuration.");

}

String hashKey = job.getConfiguration().get(

REDIS_HASH_KEY_CONF);

if (hashKey == null || hashKey.isEmpty()) {

throw new IOException(REDIS_HASH_KEY_CONF

+ " is not set in configuration.");

}

}

public OutputCommitter getOutputCommitter(TaskAttemptContext context)

throws IOException, InterruptedException {

return (new NullOutputFormat()).getOutputCommitter(context);

}

public static class RedisHashRecordWriter extends RecordWriter<Text, Text> {

private HashMap<Integer, Jedis> jedisMap = new HashMap<Integer, Jedis>();

private String hashKey = null;

public RedisHashRecordWriter(String hashKey, String hosts) {

this.hashKey = hashKey;

// Create a connection to Redis for each host

// Map an integer 0-(numRedisInstances - 1) to the instance

int i = 0;

for (String host : hosts.split(",")) {

Jedis jedis = new Jedis(host);

jedis.connect();

jedisMap.put(i, jedis);

++i;

}

}

public void write(Text key, Text value) throws IOException,

InterruptedException {

// Get the Jedis instance that this key/value pair will be

// written to

Jedis j = jedisMap.get(Math.abs(key.hashCode()) % jedisMap.size());

// Write the key/value pair

j.hset(hashKey, key.toString(), value.toString());

}

public void close(TaskAttemptContext context) throws IOException,

InterruptedException {

// For each jedis instance, disconnect it

for (Jedis jedis : jedisMap.values()) {

jedis.disconnect();

}

}

}
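One detail of the write method deserves a note. It picks an instance with Math.abs(key.hashCode()) % jedisMap.size(), but Math.abs(Integer.MIN_VALUE) is still negative, so a key with that hash code would yield a negative index and a failed lookup. A small sketch of an alternative, using the standard Math.floorMod; the ShardSketch class and shardFor method are hypothetical names used only for illustration.

```java
/** Hypothetical helper: map any key to an instance index in [0, numInstances). */
final class ShardSketch {
    static int shardFor(Object key, int numInstances) {
        if (numInstances <= 0) {
            throw new IllegalArgumentException("numInstances must be positive");
        }
        // floorMod never returns a negative result for a positive divisor,
        // even when hashCode() is Integer.MIN_VALUE.
        return Math.floorMod(key.hashCode(), numInstances);
    }

    public static void main(String[] args) {
        // An Integer's hash code is its own value, which makes the edge case easy to show.
        Integer worstCase = Integer.MIN_VALUE;
        System.out.println("abs-then-mod: " + (Math.abs(worstCase.hashCode()) % 3));
        System.out.println("floorMod:     " + shardFor(worstCase, 3));
    }
}
```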

Mapper Code:

public static class RedisOutputMapper extends

Mapper<Object, Text, Text, Text> {

private Text outkey = new Text();

private Text outvalue = new Text();

public void map(Object key, Text value, Context context)

throws IOException, InterruptedException {

Map<String, String> parsed = MRDPUtils.transformXmlToMap(value

.toString());

String userId = parsed.get("Id");

String reputation = parsed.get("Reputation");

// Set our output key and values

outkey.set(userId);

outvalue.set(reputation);

context.write(outkey, outvalue);

}

}

Driver code:

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

Path inputPath = new Path(args[0]);

String hosts = args[1];

String hashName = args[2];

Job job = new Job(conf, "Redis Output");

job.setJarByClass(RedisOutputDriver.class);

job.setMapperClass(RedisOutputMapper.class);

job.setNumReduceTasks(0);

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.setInputPaths(job, inputPath);

job.setOutputFormatClass(RedisHashOutputFormat.class);

RedisHashOutputFormat.setRedisHosts(job, hosts);

RedisHashOutputFormat.setRedisHashKey(job, hashName);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

int code = job.waitForCompletion(true) ? 0 : 2;

System.exit(code);

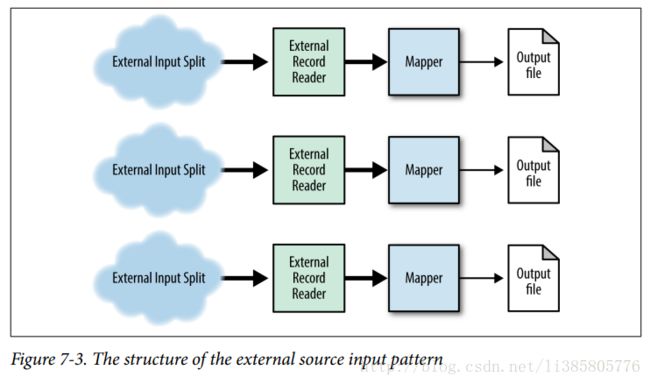

}External Source Input

The bottleneck for a MapReduce job implementing this pattern is going to be the source or the network.

Problem: Given a list of Redis instances in CSV format, read all the data stored in a configured hash in parallel.

InputSplit code:

public static class RedisHashInputSplit extends InputSplit implements Writable {

private String location = null;

private String hashKey = null;

public RedisHashInputSplit() {

// Default constructor for reflection

}

public RedisHashInputSplit(String redisHost, String hash) {

this.location = redisHost;

this.hashKey = hash;

}

public String getHashKey() {

return this.hashKey;

}

public void readFields(DataInput in) throws IOException {

this.location = in.readUTF();

this.hashKey = in.readUTF();

}

public void write(DataOutput out) throws IOException {

out.writeUTF(location);

out.writeUTF(hashKey);

}

public long getLength() throws IOException, InterruptedException {

return 0;

}

public String[] getLocations() throws IOException, InterruptedException {

return new String[] { location };

}

}

InputFormat code:

public static class RedisHashInputFormat extends InputFormat<Text, Text> {

public static final String REDIS_HOSTS_CONF =

"mapred.redishashinputformat.hosts";

public static final String REDIS_HASH_KEY_CONF =

"mapred.redishashinputformat.key";

private static final Logger LOG = Logger

.getLogger(RedisHashInputFormat.class);

public static void setRedisHosts(Job job, String hosts) {

job.getConfiguration().set(REDIS_HOSTS_CONF, hosts);

}

public static void setRedisHashKey(Job job, String hashKey) {

job.getConfiguration().set(REDIS_HASH_KEY_CONF, hashKey);

}

public List<InputSplit> getSplits(JobContext job) throws IOException {

String hosts = job.getConfiguration().get(REDIS_HOSTS_CONF);

if (hosts == null || hosts.isEmpty()) {

throw new IOException(REDIS_HOSTS_CONF

+ " is not set in configuration.");

}

String hashKey = job.getConfiguration().get(REDIS_HASH_KEY_CONF);

if (hashKey == null || hashKey.isEmpty()) {

throw new IOException(REDIS_HASH_KEY_CONF

+ " is not set in configuration.");

}

// Create an input split for each host

List<InputSplit> splits = new ArrayList<InputSplit>();

for (String host : hosts.split(",")) {

splits.add(new RedisHashInputSplit(host, hashKey));

}

LOG.info("Input splits to process: " + splits.size());

return splits;

}

public RecordReader<Text, Text> createRecordReader(InputSplit split,

TaskAttemptContext context) throws IOException,

InterruptedException {

return new RedisHashRecordReader();

}

public static class RedisHashRecordReader extends RecordReader {

// code in next section

}

public static class RedisHashInputSplit extends

InputSplit implements Writable {

// code in next section

}

}

RecordReader code:

public static class RedisHashRecordReader extends RecordReader<Text, Text> {

private static final Logger LOG =

Logger.getLogger(RedisHashRecordReader.class);

private Iterator<Entry<String, String>> keyValueMapIter = null;

private Text key = new Text(), value = new Text();

private float processedKVs = 0, totalKVs = 0;

private Entry<String, String> currentEntry = null;

public void initialize(InputSplit split, TaskAttemptContext context)

throws IOException, InterruptedException {

// Get the host location from the InputSplit

String host = split.getLocations()[0];

String hashKey = ((RedisHashInputSplit) split).getHashKey();

LOG.info("Connecting to " + host + " and reading from "

+ hashKey);

Jedis jedis = new Jedis(host);

jedis.connect();

jedis.getClient().setTimeoutInfinite();

// Get all the key/value pairs from the Redis instance and store

// them in memory

totalKVs = jedis.hlen(hashKey);

keyValueMapIter = jedis.hgetAll(hashKey).entrySet().iterator();

LOG.info("Got " + totalKVs + " from " + hashKey);

jedis.disconnect();

}

public boolean nextKeyValue() throws IOException,

InterruptedException {

// If the key/value map still has values

if (keyValueMapIter.hasNext()) {

// Get the current entry and set the Text objects to the entry

currentEntry = keyValueMapIter.next();

key.set(currentEntry.getKey());

value.set(currentEntry.getValue());

// Count the pair so that getProgress reflects how far along we are

++processedKVs;

return true;

} else {

// No more values? return false.

return false;

}

}

public Text getCurrentKey() throws IOException,

InterruptedException {

return key;

}

public Text getCurrentValue() throws IOException,

InterruptedException {

return value;

}

public float getProgress() throws IOException, InterruptedException {

return processedKVs / totalKVs;

}

public void close() throws IOException {

// nothing to do here

}

}

Driver code:

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

String hosts = args[0];

String hashKey = args[1];

Path outputDir = new Path(args[2]);

Job job = new Job(conf, "Redis Input");

job.setJarByClass(RedisInputDriver.class);

// Use the identity mapper

job.setNumReduceTasks(0);

job.setInputFormatClass(RedisHashInputFormat.class);

RedisHashInputFormat.setRedisHosts(job, hosts);

RedisHashInputFormat.setRedisHashKey(job, hashKey);

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, outputDir);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

System.exit(job.waitForCompletion(true) ? 0 : 3);

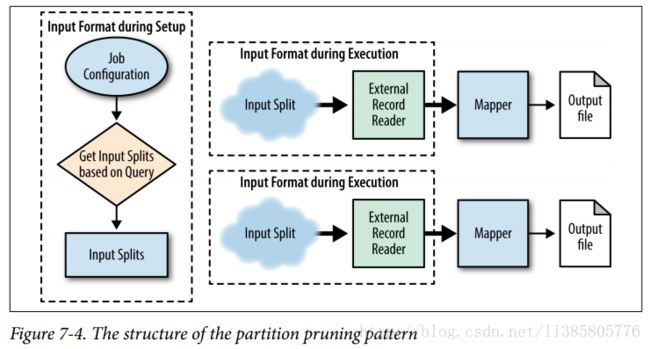

}Partition Pruning

Partition pruning changes only the amount of data that is read by the MapReduce job, not the eventual outcome of the analytic. The main reason for partition pruning is to reduce the overall processing time to read in data. This is done by ignoring input that will not produce any output before it even gets to a map task.
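The pruning decision itself is a small lookup, which the sketch below illustrates without Hadoop or Redis. It assumes the layout used in the example that follows (twelve months spread over six instances, two consecutive months per instance); the PruningSketch class, the three-letter month codes, and the host names are hypothetical choices made for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch: decide which instances a query must read, and which hashes on each. */
final class PruningSketch {
    static final List<String> MONTHS = Arrays.asList(
            "JAN", "FEB", "MAR", "APR", "MAY", "JUN",
            "JUL", "AUG", "SEP", "OCT", "NOV", "DEC");

    /** Assumed layout: months 0-1 on the first host, 2-3 on the second, and so on. */
    static String hostFor(String month, List<String> hosts) {
        int idx = MONTHS.indexOf(month);
        if (idx < 0) {
            throw new IllegalArgumentException("unknown month: " + month);
        }
        return hosts.get(idx / 2);
    }

    /** Groups the requested months by host; hosts that hold no requested month never appear. */
    static Map<String, List<String>> plan(String monthsCsv, List<String> hosts) {
        Map<String, List<String>> hostToMonths = new LinkedHashMap<>();
        for (String raw : monthsCsv.split(",")) {
            String month = raw.trim();
            hostToMonths.computeIfAbsent(hostFor(month, hosts), h -> new ArrayList<>())
                    .add(month);
        }
        return hostToMonths;
    }

    public static void main(String[] args) {
        List<String> hosts = Arrays.asList("r0", "r1", "r2", "r3", "r4", "r5");
        System.out.println(plan("JAN,FEB,MAR", hosts));
    }
}
```

Each entry of the resulting map corresponds to one input split, so a query for three months touches two instances instead of six.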

Problem: Given a set of user data, partition the user-to-reputation mappings by last access date across six Redis instances.

Custom WritableComparable code:

lastAccessMonth is used to implement the compareTo method. The hashCode method simply joins the fields into a single string and uses that string's hash code.

public static class RedisKey implements WritableComparable<RedisKey> {

private int lastAccessMonth = 0;

private Text field = new Text();

public int getLastAccessMonth() {

return this.lastAccessMonth;

}

public void setLastAccessMonth(int lastAccessMonth) {

this.lastAccessMonth = lastAccessMonth;

}

public Text getField() {

return this.field;

}

public void setField(String field) {

this.field.set(field);

}

public void readFields(DataInput in) throws IOException {

lastAccessMonth = in.readInt();

this.field.readFields(in);

}

public void write(DataOutput out) throws IOException {

out.writeInt(lastAccessMonth);

this.field.write(out);

}

public int compareTo(RedisKey rhs) {

if (this.lastAccessMonth == rhs.getLastAccessMonth()) {

return this.field.compareTo(rhs.getField());

} else {

return this.lastAccessMonth < rhs.getLastAccessMonth() ? -1 : 1;

}

}

public String toString() {

return this.lastAccessMonth + "\t" + this.field.toString();

}

public int hashCode() {

return toString().hashCode();

}

}

OutputFormat code:

public static class RedisLastAccessOutputFormat

extends OutputFormat<RedisKey, Text> {

public RecordWriter<RedisKey, Text> getRecordWriter(

TaskAttemptContext job) throws IOException, InterruptedException {

return new RedisLastAccessRecordWriter();

}

public void checkOutputSpecs(JobContext context) throws IOException,

InterruptedException {

}

public OutputCommitter getOutputCommitter(TaskAttemptContext context)

throws IOException, InterruptedException {

return (new NullOutputFormat()).getOutputCommitter(context);

}

public static class RedisLastAccessRecordWriter

extends RecordWriter {

// Code in next section

}

}

RecordWriter code:

public static class RedisLastAccessRecordWriter

extends RecordWriter<RedisKey, Text> {

private HashMap<Integer, Jedis> jedisMap = new HashMap<Integer, Jedis>();

public RedisLastAccessRecordWriter() {

// Create a connection to Redis for each host

int i = 0;

for (String host : MRDPUtils.REDIS_INSTANCES) {

Jedis jedis = new Jedis(host);

jedis.connect();

jedisMap.put(i, jedis);

jedisMap.put(i + 1, jedis);

i += 2;

}

}

public void write(RedisKey key, Text value) throws IOException,

InterruptedException {

// Get the Jedis instance that this key/value pair will be

// written to -- (0,1)->0, (2-3)->1, ... , (10-11)->5

Jedis j = jedisMap.get(key.getLastAccessMonth());

// Write the key/value pair

j.hset(MONTH_FROM_INT.get(key.getLastAccessMonth()), key

.getField().toString(), value.toString());

}

public void close(TaskAttemptContext context) throws IOException,

InterruptedException {

// For each jedis instance, disconnect it

for (Jedis jedis : jedisMap.values()) {

jedis.disconnect();

}

}

}

Mapper code:

public static class RedisLastAccessOutputMapper extends

Mapper<Object, Text, RedisKey, Text> {

// This object will format the creation date string into a Date object

private final static SimpleDateFormat frmt = new SimpleDateFormat(

"yyyy-MM-dd'T'HH:mm:ss.SSS");

private RedisKey outkey = new RedisKey();

private Text outvalue = new Text();

public void map(Object key, Text value, Context context)

throws IOException, InterruptedException {

Map<String, String> parsed = MRDPUtils.transformXmlToMap(value

.toString());

String userId = parsed.get("Id");

String reputation = parsed.get("Reputation");

// Grab the last access date

String strDate = parsed.get("LastAccessDate");

// Parse the string into a Calendar object

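Calendar cal = Calendar.getInstance();

try {

cal.setTime(frmt.parse(strDate));

} catch (ParseException e) {

// Skip records whose last access date cannot be parsed

return;

}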

// Set our output key and values

outkey.setLastAccessMonth(cal.get(Calendar.MONTH));

outkey.setField(userId);

outvalue.set(reputation);

context.write(outkey, outvalue);

}

}

Driver code:

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

Path inputPath = new Path(args[0]);

Job job = new Job(conf, "Redis Last Access Output");

job.setJarByClass(PartitionPruningOutputDriver.class);

job.setMapperClass(RedisLastAccessOutputMapper.class);

job.setNumReduceTasks(0);

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.setInputPaths(job, inputPath);

job.setOutputFormatClass(RedisLastAccessOutputFormat.class);

job.setOutputKeyClass(RedisKey.class);

job.setOutputValueClass(Text.class);

int code = job.waitForCompletion(true) ? 0 : 2;

System.exit(code);

}

Problem: Given a query for user-to-reputation mappings by month, read only the data required to satisfy the query in parallel.

InputSplit code:

The input split shown here is very similar to the input split in “External Source Input Example”. Instead of storing one hash key, we are going to store multiple hash keys. This is because the data is partitioned based on month, instead of all the data being randomly distributed in one hash.

public static class RedisLastAccessInputSplit

extends InputSplit implements Writable {

private String location = null;

private List<String> hashKeys = new ArrayList<String>();

public RedisLastAccessInputSplit() {

// Default constructor for reflection

}

public RedisLastAccessInputSplit(String redisHost) {

this.location = redisHost;

}

public void addHashKey(String key) {

hashKeys.add(key);

}

public void removeHashKey(String key) {

hashKeys.remove(key);

}

public List<String> getHashKeys() {

return hashKeys;

}

public void readFields(DataInput in) throws IOException {

location = in.readUTF();

int numKeys = in.readInt();

hashKeys.clear();

for (int i = 0; i < numKeys; ++i) {

hashKeys.add(in.readUTF());

}

}

public void write(DataOutput out) throws IOException {

out.writeUTF(location);

out.writeInt(hashKeys.size());

for (String key : hashKeys) {

out.writeUTF(key);

}

}

public long getLength() throws IOException, InterruptedException {

return 0;

}

public String[] getLocations() throws IOException, InterruptedException {

return new String[] { location };

}

}

InputFormat code:

This input format class intelligently creates RedisLastAccessInputSplit objects from the selected months of data. Much like the output format shown earlier in “OutputFormat code”, which writes RedisKey objects, this input format reads the same objects and is templated to enforce this on mapper implementations. It initially creates a hash map of host-to-input splits so that hash keys can be added to an existing split, rather than creating a separate split for each month stored on the same host. If a split has not been created for a particular host, a new one is created and the month hash key is added. Otherwise, the hash key is added to the split that has already been created. A List is then created out of the values stored in the map. This will create a number of input splits equivalent to the number of Redis instances required to satisfy the query.

public static class RedisLastAccessInputFormat

extends InputFormat<RedisKey, Text> {

public static final String REDIS_SELECTED_MONTHS_CONF =

"mapred.redilastaccessinputformat.months";

private static final HashMap<String, Integer> MONTH_FROM_STRING =

new HashMap<String, Integer>();

private static final HashMap<String, String> MONTH_TO_INST_MAP =

new HashMap<String, String>();

private static final Logger LOG = Logger

.getLogger(RedisLastAccessInputFormat.class);

static {

// Initialize month to Redis instance map

// Initialize month 3 character code to integer

}

public static void setRedisLastAccessMonths(Job job, String months) {

job.getConfiguration().set(REDIS_SELECTED_MONTHS_CONF, months);

}

public List<InputSplit> getSplits(JobContext job) throws IOException {

String months = job.getConfiguration().get(

REDIS_SELECTED_MONTHS_CONF);

if (months == null || months.isEmpty()) {

throw new IOException(REDIS_SELECTED_MONTHS_CONF

+ " is null or empty.");

}

// Create input splits from the input months

HashMap<String, RedisLastAccessInputSplit> instanceToSplitMap =

new HashMap<String, RedisLastAccessInputSplit>();

for (String month : months.split(",")) {

String host = MONTH_TO_INST_MAP.get(month);

RedisLastAccessInputSplit split = instanceToSplitMap.get(host);

if (split == null) {

split = new RedisLastAccessInputSplit(host);

split.addHashKey(month);

instanceToSplitMap.put(host, split);

} else {

split.addHashKey(month);

}

}

LOG.info("Input splits to process: " +

instanceToSplitMap.values().size());

return new ArrayList<InputSplit>(instanceToSplitMap.values());

}

public RecordReader<RedisKey, Text> createRecordReader(

InputSplit split, TaskAttemptContext context)

throws IOException, InterruptedException {

return new RedisLastAccessRecordReader();

}

public static class RedisLastAccessRecordReader

extends RecordReader {

// Code in next section

}

}

RecordReader code:

The RedisLastAccessRecordReader is a bit more complicated than the other record readers we have seen. It needs to read from multiple hashes, rather than just reading everything at once in the initialize method. Here, the initialize method simply reads the configuration. In nextKeyValue, a new connection to Redis is created if the iterator through the hash is null, or if we have reached the end of the current hash. If the iterator through the hash keys does not have a next value, we immediately return false, as there is no more data for the map task. Otherwise, we connect to Redis and pull all the data from the specific hash. The hash iterator is then used to exhaust all the field-value pairs from Redis. A do-while loop is used to ensure that once a hash iterator is complete, it will loop back around to get data from the next hash or inform the task there is no more data to be read. The implementations of the remaining methods are identical to those of the RedisHashRecordReader and are omitted.
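The iteration pattern described above, walking several hashes in turn and skipping any that turn out to be empty, can be sketched in isolation. In this hypothetical MultiHashReader, an in-memory map of maps stands in for the Redis instance, and a missing hash is treated as empty; a plain while loop replaces the do-while and flag, which is a simplification rather than the original code.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch: read field-value pairs from several hashes, one after another. */
final class MultiHashReader {
    private final Map<String, Map<String, String>> store; // stand-in for Redis
    private final Iterator<String> hashKeys;
    private Iterator<Map.Entry<String, String>> hashIterator = null;
    private String currentHash;
    private Map.Entry<String, String> currentEntry;

    MultiHashReader(Map<String, Map<String, String>> store, List<String> hashKeys) {
        this.store = store;
        this.hashKeys = hashKeys.iterator();
    }

    boolean nextKeyValue() {
        // Keep moving to the next hash until one has data or none are left.
        while (hashIterator == null || !hashIterator.hasNext()) {
            if (!hashKeys.hasNext()) {
                return false; // ultimate end condition
            }
            currentHash = hashKeys.next();
            Map<String, String> hash =
                    store.getOrDefault(currentHash, Collections.<String, String>emptyMap());
            hashIterator = hash.entrySet().iterator();
        }
        currentEntry = hashIterator.next();
        return true;
    }

    String current() {
        return currentHash + "/" + currentEntry.getKey() + "=" + currentEntry.getValue();
    }

    public static void main(String[] args) {
        Map<String, Map<String, String>> store = new LinkedHashMap<>();
        store.put("JAN", Collections.singletonMap("user1", "10"));
        store.put("FEB", Collections.<String, String>emptyMap());
        store.put("MAR", Collections.singletonMap("user2", "20"));
        MultiHashReader reader =
                new MultiHashReader(store, Arrays.asList("JAN", "FEB", "MAR"));
        while (reader.nextKeyValue()) {
            System.out.println(reader.current());
        }
    }
}
```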

Two months of data live on the same node; each month's data can be looked up through its own hash key.

public static class RedisLastAccessRecordReader

extends RecordReader<RedisKey, Text> {

private static final Logger LOG = Logger

.getLogger(RedisLastAccessRecordReader.class);

private Entry<String, String> currentEntry = null;

private float processedKVs = 0, totalKVs = 0;

private int currentHashMonth = 0;

private Iterator<Entry<String, String>> hashIterator = null;

private Iterator<String> hashKeys = null;

private RedisKey key = new RedisKey();

private String host = null;

private Text value = new Text();

public void initialize(InputSplit split, TaskAttemptContext context)

throws IOException, InterruptedException {

// Get the host location from the InputSplit

host = split.getLocations()[0];

// Get an iterator of all the hash keys we want to read

hashKeys = ((RedisLastAccessInputSplit) split)

.getHashKeys().iterator();

LOG.info("Connecting to " + host);

}

public boolean nextKeyValue() throws IOException,

InterruptedException {

boolean nextHashKey = false;

do {

// if this is the first call or the iterator does not have a

// next

if (hashIterator == null || !hashIterator.hasNext()) {

// if we have reached the end of our hash keys, return

// false

if (!hashKeys.hasNext()) {

// ultimate end condition, return false

return false;

} else {

// Otherwise, connect to Redis and get all

// the name/value pairs for this hash key

Jedis jedis = new Jedis(host);

jedis.connect();

String strKey = hashKeys.next();

currentHashMonth = MONTH_FROM_STRING.get(strKey);

hashIterator = jedis.hgetAll(strKey).entrySet()

.iterator();

jedis.disconnect();

}

}

// If the key/value map still has values

if (hashIterator.hasNext()) {

// Get the current entry and set

// the Text objects to the entry

currentEntry = hashIterator.next();

key.setLastAccessMonth(currentHashMonth);

key.setField(currentEntry.getKey());

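value.set(currentEntry.getValue());

// We have a pair to return, so stop looping even if an

// earlier, empty hash set the flag

nextHashKey = false;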

} else {

nextHashKey = true;

}

} while (nextHashKey);

return true;

}

...

}

Driver code:

public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

String lastAccessMonths = args[0];

Path outputDir = new Path(args[1]);

Job job = new Job(conf, "Redis Input");

job.setJarByClass(PartitionPruningInputDriver.class);

// Use the identity mapper

job.setNumReduceTasks(0);

job.setInputFormatClass(RedisLastAccessInputFormat.class);

RedisLastAccessInputFormat.setRedisLastAccessMonths(job,

lastAccessMonths);

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, outputDir);

job.setOutputKeyClass(RedisKey.class);

job.setOutputValueClass(Text.class);

System.exit(job.waitForCompletion(true) ? 0 : 2);

}