目录

- Typical Loss

- MSE

- Derivative

- MSE Gradient

- Softmax

- Derivative

Typical Loss

Mean Squared Error

- Cross Entropy Loss

- binary

- multi-class

- +softmax

MSE

\(loss = \sum[y-(xw+b)]^2\)

\(L_{2-norm} = ||y-(xw+b)||_2\)

\(loss = norm(y-(xw+b))^2\)

Derivative

\(loss = \sum[y-f_\theta(x)]^2\)

\(\frac{\nabla\text{loss}}{\nabla{\theta}}=2\sum{[y-f_\theta(x)]}*\frac{\nabla{f_\theta{(x)}}}{\nabla{\theta}}\)

MSE Gradient

import tensorflow as tfx = tf.random.normal([2, 4])

w = tf.random.normal([4, 3])

b = tf.zeros([3])

y = tf.constant([2, 0])

with tf.GradientTape() as tape:

tape.watch([w, b])

prob = tf.nn.softmax(x @ w + b, axis=1)

loss = tf.reduce_mean(tf.losses.MSE(tf.one_hot(y, depth=3), prob))

grads = tape.gradient(loss, [w, b])

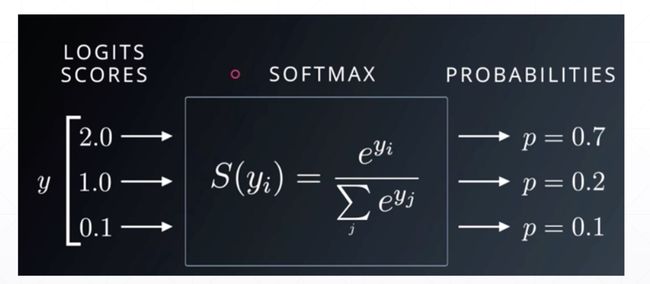

grads[0]grads[1]Softmax

soft version of max

大的越来越大,小的越来越小、越密集

Derivative

\[ p_i = \frac{e^{a_i}}{\sum_{k=1}^Ne^{a_k}} \]

- i=j

\[ \frac{\partial{p_i}}{\partial{a_j}}=\frac{\partial{\frac{e^{a_i}}{\sum_{k=1}^Ne^{a_k}}}}{{\partial{a_j}}} = p_i(1-p_j) \]

- \(i\neq{j}\)

\[ \frac{\partial{p_i}}{\partial{a_j}}=\frac{\partial{\frac{e^{a_i}}{\sum_{k=1}^Ne^{a_k}}}}{{\partial{a_j}}} = -p_j*p_i \]

x = tf.random.normal([2, 4])

w = tf.random.normal([4, 3])

b = tf.zeros([3])

y = tf.constant([2, 0])

with tf.GradientTape() as tape:

tape.watch([w, b])

logits =x @ w + b

loss = tf.reduce_mean(

tf.losses.categorical_crossentropy(tf.one_hot(y, depth=3),

logits,

from_logits=True))

grads = tape.gradient(loss, [w, b])

grads[0]grads[1]