ELK-6.0.0 (Part 2): Setting Up the Kibana and Logstash Servers

Workflow

- Install Kibana

- Install Logstash

- Configure Logstash

- View logs in Kibana

- Collect nginx logs

- Collect logs with Beats

Previous post: https://blog.csdn.net/tladagio/article/details/88222927

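I. Installing Kibana

1. The yum repository was already configured in the previous post, so Kibana can be installed directly with yum on the master node:

[root@master-node ~]# yum -y install kibana

If yum is too slow, download the RPM package and install it directly: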

[root@master-node ~]# wget https://artifacts.elastic.co/downloads/kibana/kibana-6.0.0-x86_64.rpm

[root@master-node ~]# rpm -ivh kibana-6.0.0-x86_64.rpm

2. After installation, configure Kibana:

[root@master-node ~]# vim /etc/kibana/kibana.yml # add the following settings

[root@master-node ~]# grep '^[^#]' /etc/kibana/kibana.yml

server.port: 5601 # port Kibana listens on

server.host: "192.168.2.10" # IP address Kibana listens on

elasticsearch.url: "http://192.168.2.10:9200" # address of the es server; for a cluster, use the IP of the cluster's master node

logging.dest: /var/log/kibana.log # path of the Kibana log file; by default Kibana logs go to /var/log/messages

3. Create the log file:

[root@master-node ~]# touch /var/log/kibana.log;chmod 777 /var/log/kibana.log

4. Start the Kibana service, then check the process and the listening port:

[root@master-node ~]# systemctl start kibana

[root@master-node ~]# systemctl status kibana

● kibana.service - Kibana

Loaded: loaded (/etc/systemd/system/kibana.service; disabled; vendor preset: disabled)

Active: active (running) since Thu 2019-03-07 21:54:24 CST; 2s ago

Main PID: 103670 (node)

CGroup: /system.slice/kibana.service

└─103670 /usr/share/kibana/bin/../node/bin/node --no-warnings /usr/share/kibana/bin/../src/cli -c /etc/kibana/kibana.ym...

Mar 07 21:54:24 master-node systemd[1]: Started Kibana.

Mar 07 21:54:24 master-node systemd[1]: Starting Kibana...

[root@master-node ~]# netstat -ntlp | grep 5601

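tcp 0 0 192.168.2.10:5601 0.0.0.0:* LISTEN 103670/node

Note: Kibana is built on Node.js, so the process name is node.

5. Open http://192.168.2.10:5601/ in a browser. Because x-pack is not installed, there is no username or password, and the page can be accessed directly.

That completes the Kibana installation. The next step is to install Logstash; until logs are shipped into es, Kibana has nothing to display.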
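The netstat check in step 4 can also be done from another machine. The following is a minimal Python sketch, not part of the original setup: the helper name port_is_open is made up for illustration, and the address in the usage comment is the Kibana address used in this post.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection raises OSError (refused, unreachable, timed out) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Usage (run from any machine that should be able to reach Kibana):
#   port_is_open("192.168.2.10", 5601)
```

A successful connection only shows that something is listening on the port; it does not confirm that Kibana itself is healthy.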

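II. Installing Logstash

1. Install Logstash on the data node 192.168.2.123. Note that Logstash 6.0.0 does not support Java 9; use Java 8.

Install with yum:

[root@data-node1 ~]# yum install -y logstash

If the yum repository is too slow, download the RPM package and install it: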

[root@data-node1 ~]# wget https://artifacts.elastic.co/downloads/logstash/logstash-6.0.0.rpm

[root@data-node1 ~]# rpm -ivh logstash-6.0.0.rpm

warning: logstash-6.0.0.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:logstash-1:6.0.0-1 ################################# [100%]

Using provided startup.options file: /etc/logstash/startup.options

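Successfully created system startup script for Logstash

2. After installation, do not start the service yet. First create a Logstash configuration that collects syslog messages:

[root@data-node1 ~]# vim /etc/logstash/conf.d/syslog.conf

[root@data-node1 ~]# cat /etc/logstash/conf.d/syslog.conf

input { # define the log source

syslog {

type => "system-syslog" # define the event type

port => 10514 # port to listen on

}

}

output { # define where events are sent

stdout {

codec => rubydebug # print events to the current terminal

}

}

Check the configuration file for errors: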

[root@data-node1 ~]# cd /usr/share/logstash/bin/

[root@data-node1 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

Sending Logstash's logs to /var/log/logstash which is now configured via log4j2.properties

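Configuration OK # OK means the configuration file is valid

Command options:

- --path.settings specifies the directory that holds the Logstash settings files

- -f specifies the path of the configuration file to check

- --config.test_and_exit exits after the check; without it Logstash would start running

3. Configure rsyslog to forward logs to the IP address and listening port of the Logstash server:

[root@data-node1 ~]# vim /etc/rsyslog.conf

#### RULES ####

*.* @@192.168.2.123:10514 # add this line (@@ forwards over TCP)

4. Restart rsyslog so the change takes effect: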

[root@data-node1 ~]# systemctl restart rsyslog

5. Start Logstash with the configuration file specified:

[root@data-node1 ~]# cd /usr/share/logstash/bin/

[root@data-node1 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf

Sending Logstash's logs to /var/log/logstash which is now configured via log4j2.properties

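# The terminal stays here, because the configuration prints events to the current terminal

6. Open a new terminal and check that port 10514 is being listened on:

[root@data-node1 ~]# netstat -ntlp | grep 10514

tcp6 0 0 :::10514 :::* LISTEN 105994/java

7. From node 2 (192.168.2.180), log in over ssh to node 1 (192.168.2.123), then watch the Logstash output on node 1: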

{

"severity" => 6,

"pid" => "106243",

"program" => "sshd",

"message" => "Connection closed by 212.0.155.150 [preauth]\n",

"type" => "system-syslog",

"priority" => 86,

"logsource" => "data-node1",

"@timestamp" => 2019-03-07T06:34:45.000Z,

"@version" => "1",

"host" => "192.168.2.123",

"facility" => 10,

"severity_label" => "Informational",

"timestamp" => "Mar 7 14:34:45",

"facility_label" => "security/authorization"

}

{

"severity" => 6,

"pid" => "106251",

"program" => "sshd",

"message" => "Accepted password for root from 192.168.2.180 port 45666 ssh2\n",

"type" => "system-syslog",

"priority" => 86,

"logsource" => "data-node1",

"@timestamp" => 2019-03-07T06:34:52.000Z,

"@version" => "1",

"host" => "192.168.2.123",

"facility" => 10,

"severity_label" => "Informational",

"timestamp" => "Mar 7 14:34:52",

"facility_label" => "security/authorization"

}

{

"severity" => 6,

"program" => "systemd",

"message" => "Started Session 203 of user root.\n",

"type" => "system-syslog",

"priority" => 30,

"logsource" => "data-node1",

"@timestamp" => 2019-03-07T06:34:52.000Z,

"@version" => "1",

"host" => "192.168.2.123",

"facility" => 3,

"severity_label" => "Informational",

"timestamp" => "Mar 7 14:34:52",

"facility_label" => "system"

}

{

"severity" => 6,

"program" => "systemd-logind",

"message" => "New session 203 of user root.\n",

"type" => "system-syslog",

"priority" => 38,

"logsource" => "data-node1",

"@timestamp" => 2019-03-07T06:34:52.000Z,

"@version" => "1",

"host" => "192.168.2.123",

"facility" => 4,

"severity_label" => "Informational",

"timestamp" => "Mar 7 14:34:52",

"facility_label" => "security/authorization"

}

{

"severity" => 6,

"program" => "systemd",

"message" => "Starting Session 203 of user root.\n",

"type" => "system-syslog",

"priority" => 30,

"logsource" => "data-node1",

"@timestamp" => 2019-03-07T06:34:52.000Z,

"@version" => "1",

"host" => "192.168.2.123",

"facility" => 3,

"severity_label" => "Informational",

"timestamp" => "Mar 7 14:34:52",

"facility_label" => "system"

}

{

"severity" => 6,

"pid" => "106251",

"program" => "sshd",

"message" => "pam_unix(sshd:session): session opened for user root by (uid=0)\n",

"type" => "system-syslog",

"priority" => 86,

"logsource" => "data-node1",

"@timestamp" => 2019-03-07T06:34:52.000Z,

"@version" => "1",

"host" => "192.168.2.123",

"facility" => 10,

"severity_label" => "Informational",

"timestamp" => "Mar 7 14:34:52",

"facility_label" => "security/authorization"

}

As shown above, the terminal prints the collected log events in rubydebug format (a JSON-like layout), so the test succeeded.
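The priority, facility and severity fields in the events above are related: the syslog protocol encodes the priority as facility * 8 + severity. The short Python sketch below (the function name decode_priority is chosen for illustration) checks this against the values printed in the output.

```python
from typing import Tuple

# Severity names as defined by the syslog protocol, indexed by severity code
SEVERITY_LABELS = [
    "Emergency", "Alert", "Critical", "Error",
    "Warning", "Notice", "Informational", "Debug",
]


def decode_priority(priority: int) -> Tuple[int, int]:
    """Split a syslog priority value into (facility, severity)."""
    return divmod(priority, 8)


# The sshd events above carry priority 86
facility, severity = decode_priority(86)
print(facility, severity, SEVERITY_LABELS[severity])
```

For priority 86 this gives facility 10 and severity 6 ("Informational"), matching the sshd events; priority 30 gives facility 3, matching the systemd events.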

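III. Configuring Logstash

1. The configuration above was only a test. Now edit the configuration file again so that the collected events are sent to the es server instead of the current terminal: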

[root@data-node1 ~]# vim /etc/logstash/conf.d/syslog.conf

[root@data-node1 ~]# cat /etc/logstash/conf.d/syslog.conf

input {

syslog {

type => "system-syslog"

port => 10514

}

}

output {

elasticsearch {

hosts => ["192.168.2.10:9200"] # address of the es server

index => "system-syslog-%{+YYYY.MM}" # index name

}

}

Check the configuration file again:

[root@data-node1 ~]# cd /usr/share/logstash/bin/

[root@data-node1 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit

Sending Logstash's logs to /var/log/logstash which is now configured via log4j2.properties

Configuration OK2、启动logstash服务,并检查进程以及监听端口:

[root@data-node1 ~]# systemctl start logstash.service 错误解决:

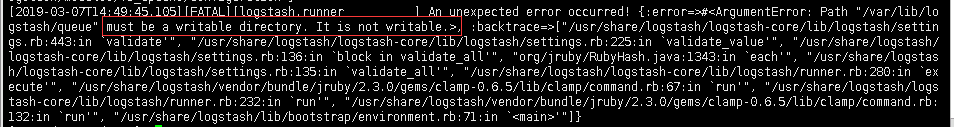

启动logstash后,进程是正常存在的,但是9600以及10514端口却没有被监听。于是查看logstash的日志看看有没有错误信息的输出,但是发现没有记录日志信息,那就只能转而去查看messages的日志,发现错误信息如下:

这是因为权限不够,既然是权限不够,那就设置权限即可:

[root@data-node1 ~]# chown logstash /var/log/logstash/logstash-plain.log

[root@data-node1 ~]# ll /var/log/logstash/logstash-plain.log

-rw-r--r-- 1 logstash root 5264 Mar 7 14:48 /var/log/logstash/logstash-plain.log

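[root@data-node1 ~]# systemctl restart logstash.service

After the ownership change and restart, the ports are still not being listened on. The errors recorded in logstash-plain.log are as follows:

This is again a permission problem: the message says the path must be a writable directory. Logstash was started earlier from the terminal as root, so the files it created are owned by root. Fix the ownership here as well: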

[root@data-node1 ~]# ll /var/lib/logstash/

total 12

drwxr-xr-x 2 root root 4096 Mar 7 14:06 dead_letter_queue

drwxr-xr-x 2 root root 4096 Mar 7 14:06 queue

-rw-r--r-- 1 root root 36 Mar 7 14:31 uuid

You have new mail in /var/spool/mail/root

[root@data-node1 ~]# chown -R logstash /var/lib/logstash/

[root@data-node1 ~]# systemctl restart logstash.service

This time it works. The ports are listening, so the Logstash service has started successfully:

[root@data-node1 ~]# netstat -ntlp | grep 9600

tcp6 0 0 127.0.0.1:9600 :::* LISTEN 110205/java

[root@data-node1 ~]# netstat -ntlp | grep 10514

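tcp6 0 0 :::10514 :::* LISTEN 110205/java

However, port 9600 (the Logstash HTTP API) is bound to the loopback address 127.0.0.1, which cannot be reached from other machines. Edit the settings file to set the listening IP: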

[root@data-node1 ~]# vim /etc/logstash/logstash.yml

http.host: "192.168.2.123"

[root@data-node1 ~]# systemctl restart logstash

[root@data-node1 ~]# netstat -lntp |grep 9600

tcp6 0 0 192.168.2.123:9600 :::* LISTEN 10091/java
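Both failures in step 2 came from files owned by root instead of the logstash user. As a rough aid, the Python sketch below lists every path under a directory that is not owned by a given user, so leftover root-owned files can be spotted before the service is started. The function names are made up for illustration, and the directories in the usage comment are the ones from this post.

```python
import os
import pwd
from typing import List


def paths_not_owned_by_uid(root: str, uid: int) -> List[str]:
    """Return the directories and files under root whose owner is not uid."""
    mismatched = []
    for dirpath, _dirnames, filenames in os.walk(root):
        # Check the directory itself plus every file directly inside it
        candidates = [dirpath] + [os.path.join(dirpath, f) for f in filenames]
        for path in candidates:
            if os.lstat(path).st_uid != uid:
                mismatched.append(path)
    return mismatched


def paths_not_owned_by(root: str, user: str) -> List[str]:
    """Same check, with the owner given as a user name."""
    return paths_not_owned_by_uid(root, pwd.getpwnam(user).pw_uid)


# Usage on the Logstash server:
#   paths_not_owned_by("/var/lib/logstash", "logstash")
#   paths_not_owned_by("/var/log/logstash", "logstash")
```

An empty list means nothing under that directory needs a chown.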

IV. Viewing Logs in Kibana

1. With the Logstash server set up, go back to the master node (the Kibana server) and list the indices with the following command:

[root@master-node ~]# curl '192.168.2.10:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .kibana DejiBLz1TP6Q9CBj62pqlA 1 1 1 0 7.3kb 3.6kb

green open system-syslog-2019.03 IJdjjctHSKeuqRZiFOIXCw 5 1 77 0 668.9kb 334.4kb

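You have new mail in /var/spool/mail/root

As shown above, the system-syslog index defined in the Logstash configuration file exists, which confirms that the configuration is correct and that Logstash can communicate with es.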
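The date suffix of the index comes from the `%{+YYYY.MM}` part of the index setting, which Logstash fills in from each event's @timestamp. The Python sketch below imitates that naming rule; it assumes the timestamp is evaluated in UTC, as @timestamp is, and the function name index_name is hypothetical.

```python
from datetime import datetime, timedelta, timezone


def index_name(prefix: str, ts: datetime, fmt: str = "%Y.%m") -> str:
    """Build an index name such as system-syslog-2019.03 from an aware datetime."""
    ts_utc = ts.astimezone(timezone.utc)  # evaluate the date in UTC
    return f"{prefix}-{ts_utc.strftime(fmt)}"


# Timestamp of the first sshd event shown earlier (2019-03-07T06:34:45Z)
event_time = datetime(2019, 3, 7, 6, 34, 45, tzinfo=timezone.utc)
print(index_name("system-syslog", event_time))

# An event logged early on April 1 in UTC+8 still belongs to the March index
cst = timezone(timedelta(hours=8))
print(index_name("system-syslog", datetime(2019, 4, 1, 7, 30, tzinfo=cst)))
```

Passing fmt="%Y.%m.%d" gives daily names in the style of an index setting that ends in `%{+YYYY.MM.dd}`.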

2. Get the details of a specific index. Note that the date suffix in the index name system-syslog-* must match the one listed above:

[root@master-node ~]# curl -XGET '192.168.2.10:9200/system-syslog-2019.03?pretty'

{

"system-syslog-2019.03" : {

"aliases" : { },

"mappings" : {

"system-syslog" : {

"properties" : {

"@timestamp" : {

"type" : "date"

},

"@version" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"facility" : {

"type" : "long"

},

"facility_label" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"host" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"logsource" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"message" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"pid" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"priority" : {

"type" : "long"

},

"program" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"severity" : {

"type" : "long"

},

"severity_label" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"timestamp" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

},

"type" : {

"type" : "text",

"fields" : {

"keyword" : {

"type" : "keyword",

"ignore_above" : 256

}

}

}

}

}

},

"settings" : {

"index" : {

"creation_date" : "1551970487290",

"number_of_shards" : "5",

"number_of_replicas" : "1",

"uuid" : "IJdjjctHSKeuqRZiFOIXCw",

"version" : {

"created" : "6000099"

},

"provided_name" : "system-syslog-2019.03"

}

}

}

}

3. To delete an index later, use the following command:

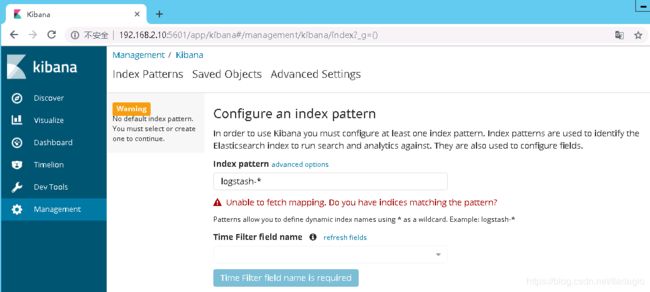

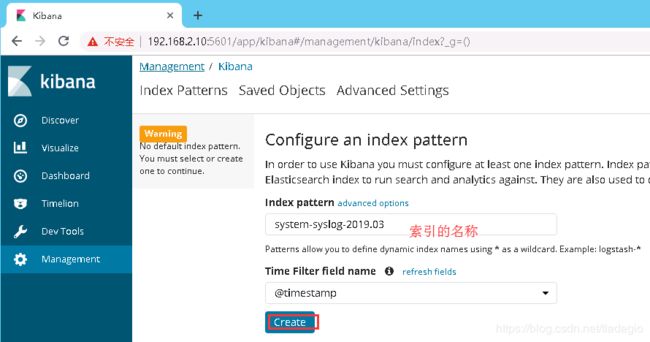

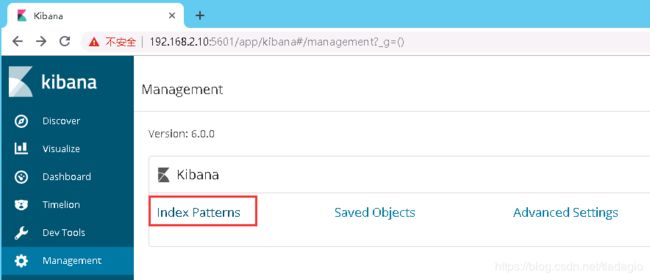

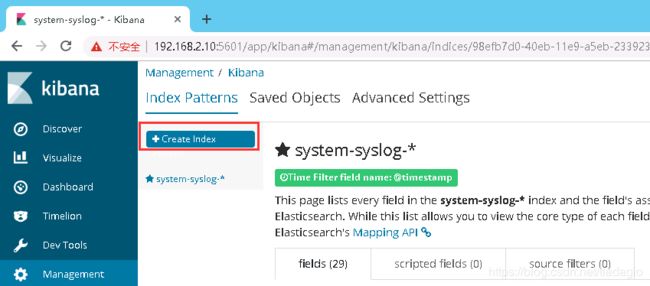

curl -XDELETE 'localhost:9200/system-syslog-2019.03'

4. Once es and Logstash communicate normally, configure Kibana. Open 192.168.2.10:5601 in a browser and set up the index pattern on the Kibana page:

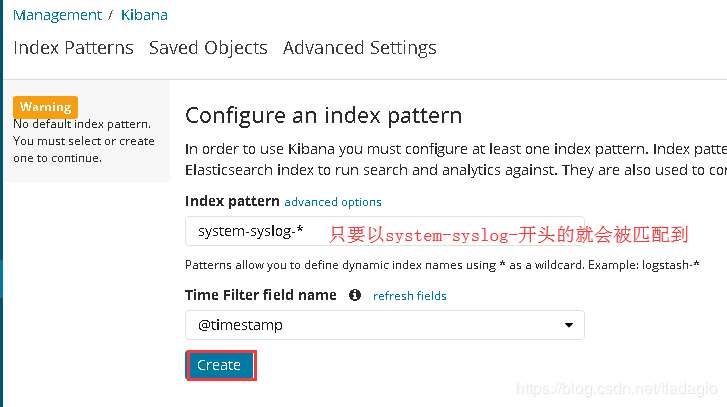

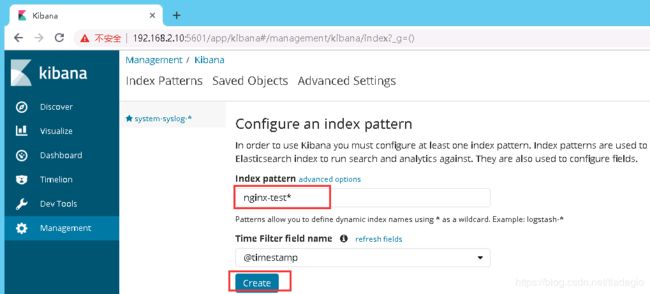

A wildcard can also be used to match multiple indices at once:

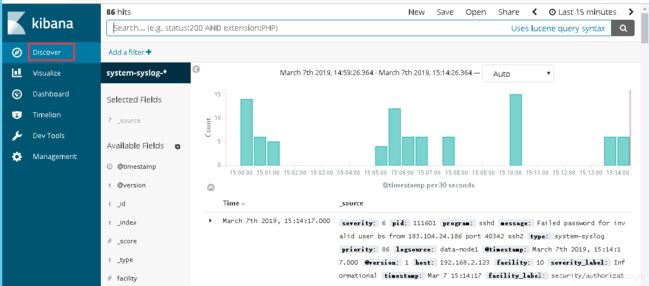

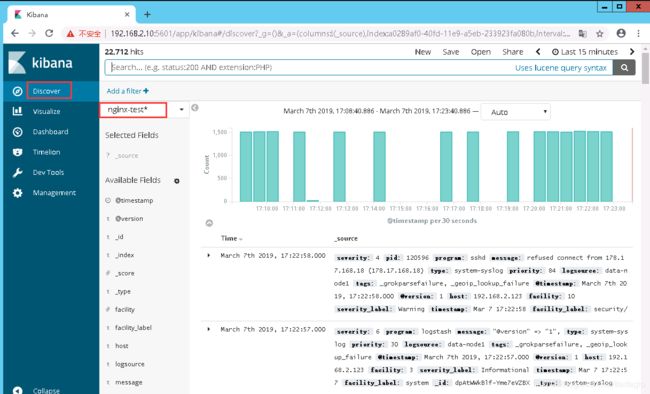

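5. After the index pattern is created, click "Discover" and the log entries appear on the right. If nothing is shown, adjust the time range in the upper-right corner.

The log data shown here is essentially the content of /var/log/messages, because rsyslog forwards the same system messages to Logstash.

That is how to collect system logs with Logstash, send them to the es server, and view them in Kibana.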
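To see which indices a wildcard pattern such as system-syslog-* would cover, the text returned by _cat/indices?v can be parsed and filtered. The Python sketch below does this on the output captured in step 1; shell-style matching with fnmatchcase is used here only as an approximation of Kibana's wildcard handling, and both function names are made up for illustration.

```python
from fnmatch import fnmatchcase
from typing import Dict, List

# Output of `curl '192.168.2.10:9200/_cat/indices?v'` from step 1
SAMPLE = """\
health status index                 uuid                   pri rep docs.count docs.deleted store.size pri.store.size
green  open   .kibana               DejiBLz1TP6Q9CBj62pqlA   1   1          1            0      7.3kb          3.6kb
green  open   system-syslog-2019.03 IJdjjctHSKeuqRZiFOIXCw   5   1         77            0    668.9kb        334.4kb
"""


def parse_cat_indices(text: str) -> List[Dict[str, str]]:
    """Turn the whitespace-separated table into one dict per index."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    header = lines[0].split()
    return [dict(zip(header, ln.split())) for ln in lines[1:]]


def matching_indices(rows: List[Dict[str, str]], pattern: str) -> List[str]:
    """Return the index names that match a wildcard pattern."""
    return [r["index"] for r in rows if fnmatchcase(r["index"], pattern)]


rows = parse_cat_indices(SAMPLE)
print(matching_indices(rows, "system-syslog-*"))
```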

V. Collecting nginx Logs with Logstash

1. As with syslog, start by writing a configuration file. Do this on the Logstash server:

[root@data-node1 ~]# vim /etc/logstash/conf.d/nginx.conf # add the following

input {

file { # use a file as the input source

path => "/tmp/elk_access.log" # path of the file

start_position => "beginning" # read the file from the beginning

type => "nginx" # event type; any name can be used

}

}

filter { # configure filters

grok {

match => { "message" => "%{IPORHOST:http_host} %{IPORHOST:clientip} - %{USERNAME:remote_user} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:http_verb} %{NOTSPACE:http_request}(?: HTTP/%{NUMBER:http_version})?|%{DATA:raw_http_request})\" %{NUMBER:response} (?:%{NUMBER:bytes_read}|-) %{QS:referrer} %{QS:agent} %{QS:xforwardedfor} %{NUMBER:request_time:float}"} # grok pattern used to parse each log line

}

geoip {

source => "clientip"

}

}

output {

stdout { codec => rubydebug }

elasticsearch {

hosts => ["192.168.2.10:9200"]

index => "nginx-test-%{+YYYY.MM.dd}"

}

}

2. After editing the configuration file, check it for errors as before:

[root@data-node1 ~]# cd /usr/share/logstash/bin

[root@data-node1 bin]# ./logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/nginx.conf --config.test_and_exit

Sending Logstash's logs to /var/log/logstash which is now configured via log4j2.properties

Configuration OK

3. Install nginx. Configure the yum repository, then install nginx with yum:

[root@data-node1 ~]# vim /etc/yum.repos.d/epel.repo

[root@data-node1 ~]# cat /etc/yum.repos.d/epel.repo

[epel]

name=aliyun epel

baseurl=http://mirrors.aliyun.com/epel/7Server/x86_64/

gpgcheck=0

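[root@data-node1 ~]# yum install -y nginx

4. Create a virtual host configuration file in the nginx virtual host configuration directory (here the main configuration file is edited directly instead):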

[root@data-node1 ~]# cd /etc/nginx/

[root@data-node1 nginx]# vim nginx.conf

access_log /tmp/elk_access.log main2;

server {

listen 80 default_server;

listen [::]:80 default_server;

server_name elk.test.com;

# Load configuration files for the default server block.

include /etc/nginx/default.d/*.conf;

location / {

proxy_pass http://192.168.2.10:5601;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

5. Define the log format in the main nginx configuration file. Add the following below the log_format combined_realip line:

[root@data-node1 nginx]# vim nginx.conf

log_format main2 '$http_host $remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$upstream_addr" $request_time';

6. After editing the configuration, test it for errors, and if there are none, reload nginx:

[root@data-node1 ~]# which nginx

/usr/sbin/nginx

[root@data-node1 ~]# /usr/sbin/nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@data-node1 ~]# systemctl start nginx

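[root@data-node1 ~]# /usr/sbin/nginx -s reload

7. Restart the Logstash service so that the log index is created:

systemctl restart logstash

8. After the restart, check on the master node (the es server) whether an index starting with nginx-test has been created: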

[root@master-node ~]# curl '192.168.2.10:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .kibana DejiBLz1TP6Q9CBj62pqlA 1 1 2 0 15.1kb 7.5kb

green open nginx-test-2019.03.07 Lxu8wj_1S_KMMIZrSGc12w 5 1 85878 0 14.3mb 7.1mb

green open system-syslog-2019.03 IJdjjctHSKeuqRZiFOIXCw 5 1 86498 0 15.6mb 7.8mb

9. The nginx-test index now exists, so it can be configured in Kibana:

10. Once that is done, the nginx access log data can be viewed in "Discover":
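The grok pattern in step 1 has to line up field by field with the log_format main2 definition in step 5. The Python sketch below is a simplified regular-expression stand-in for that grok pattern, not the grok implementation itself: it is looser than IPORHOST and HTTPDATE, and unlike QS it drops the surrounding quotes. The sample log line is invented for illustration.

```python
import re
from typing import Dict, Optional

# Simplified equivalent of the grok pattern; field names match the grok captures
LINE_RE = re.compile(
    r'(?P<http_host>\S+) (?P<clientip>\S+) - (?P<remote_user>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?:(?P<http_verb>\w+) (?P<http_request>\S+)'
    r'(?: HTTP/(?P<http_version>[\d.]+))?|(?P<raw_http_request>[^"]*))" '
    r'(?P<response>\d+) (?:(?P<bytes_read>\d+)|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)" "(?P<xforwardedfor>[^"]*)" '
    r'(?P<request_time>\d+(?:\.\d+)?)$'
)

# Hypothetical access log line in the main2 format
SAMPLE = (
    'elk.test.com 192.168.2.180 - - [07/Mar/2019:15:00:01 +0800] '
    '"GET /app/kibana HTTP/1.1" 200 612 "-" "curl/7.29.0" '
    '"192.168.2.10:5601" 0.005'
)


def parse_line(line: str) -> Optional[Dict[str, object]]:
    """Return the captured fields, or None if the line does not match."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    fields: Dict[str, object] = {k: v for k, v in m.groupdict().items() if v is not None}
    fields["request_time"] = float(m.group("request_time"))  # like :float in grok
    return fields


print(parse_line(SAMPLE))
```

Note that the field named xforwardedfor actually receives $upstream_addr, because that is what the log format writes in that position.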

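VI. Collecting Logs with Beats

As mentioned earlier, Beats is a newer addition to the ELK stack: a family of lightweight log shippers. So far Logstash has served as the collector, but it uses considerably more resources than Beats, so Elastic also recommends Beats for log collection. Beats is also extensible and supports custom builds.

1. Official introduction: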

https://www.elastic.co/cn/products/beats