Setting Up a Spark Source-Reading Environment with IntelliJ

步骤1:

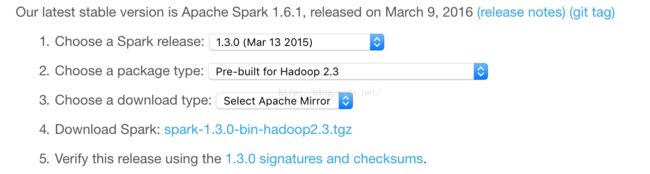

下载spark-源码,这里以spark1.3为例。

http://spark.apache.org/downloads.html

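2. Download the prebuilt Spark binary distribution. The package used here is the one built for Hadoop 2.4 (spark-1.3.0-bin-hadoop2.4), which contains the spark-assembly-1.3.0-hadoop2.4.0.jar imported in step 6.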

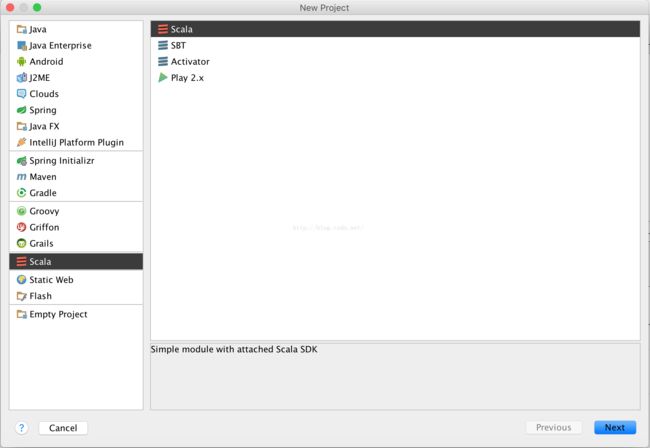

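3. Create a new Scala project in IntelliJ. This walkthrough writes the Spark application in Scala; a Java project works as well.

5. Configure the project's Scala version.

6. Import the Spark dependency, spark-assembly-1.3.0-hadoop2.4.0.jar.

The import path is:

File -> Project Structure -> Project Settings -> Libraries -> + -> Java -> select spark-assembly-1.3.0-hadoop2.4.0.jar.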

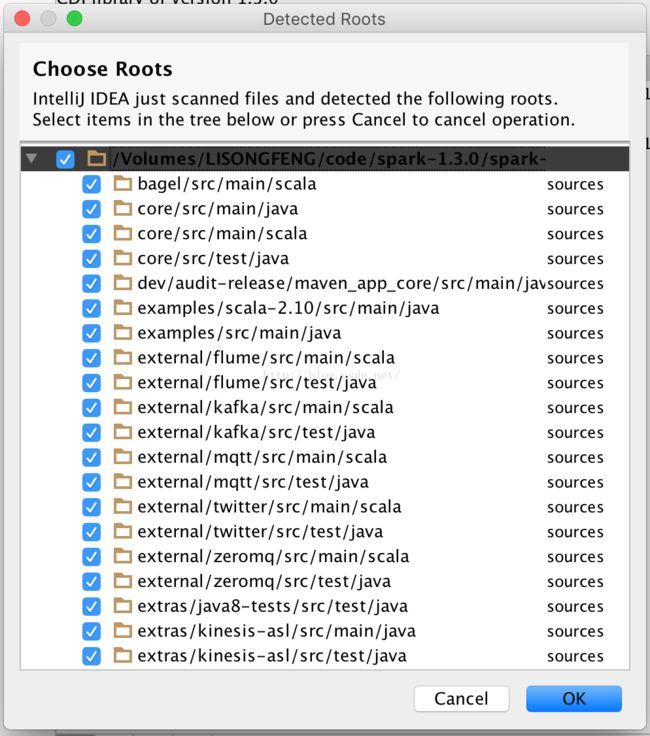

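7. Attach the Spark source code.

Click the plus sign, select the Spark 1.3 source tree (the uncompiled source downloaded in step 1), and confirm.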

8. Create a spark-scala-hello-world project with the following code:

    /**
     * Created With Intellij
     * User: lisongfeng
     * Date: 16/6/3
     * Time: 5:34 PM
     */
    import org.apache.spark.SparkConf
    import org.apache.spark.SparkContext
    import org.apache.spark.SparkContext._

    /**
     * @author Administrator
     */
    object LineCount {
      def main(args: Array[String]): Unit = {
        // Run in local mode with 3 worker threads
        val conf = new SparkConf()
          .setAppName("LineCount")
          .setMaster("local[3]")
        val sc = new SparkContext(conf)

        // Read the file with at least 3 partitions and print the lines containing "Home"
        val lines = sc.textFile("/usr/local/README.md", 3)
        lines.filter(_.contains("Home")).foreach(println)

        // Union with a second file and print every line of both
        val lines1 = sc.textFile("/usr/local/README1.md", 3)
        val rdd2 = lines.union(lines1)
        rdd2.foreach(println _)

        // Transformation only: with no action after it, this map is never executed
        lines.map(_ * 2)
      }
    }
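The last statement of the listing, lines.map(_ * 2), is a transformation with no action after it, which is why the log shows only two jobs (job 0 and job 1). The sketch below shows the same map followed by an action. It is a minimal illustration, not part of the original program: the object name LazyMapDemo, the helper doubledLines, and the two sample strings are assumptions made for this example, and parallelize replaces textFile so that no file on disk is needed.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object LazyMapDemo {
  // Hypothetical helper for this sketch; not part of the original program.
  def doubledLines(sc: SparkContext): Array[String] = {
    // parallelize stands in for textFile so the sketch needs no file on disk
    val lines = sc.parallelize(Seq("Home", "brew"), 2)
    // Transformation: only records the lineage, no job is submitted here
    val doubled = lines.map(_ * 2)
    // Action: submits a job and brings the results back to the driver
    doubled.collect()
  }

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("LazyMapDemo").setMaster("local[3]")
    val sc = new SparkContext(conf)
    try doubledLines(sc).foreach(println)
    finally sc.stop()
  }
}
```

A breakpoint placed on the map line is only reached while the lineage is being built. The function passed to map runs later, inside the job that collect triggers.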

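You can set breakpoints anywhere, including inside Spark components such as the Executor and the Driver. This works because with the local[3] master the driver and the executor threads run in the same JVM that IntelliJ launches.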
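To see which thread a breakpoint will stop, it can help to print the current thread name on both sides. The following is a minimal sketch under the same local[3] setup; the object name BreakpointDemo and the sample range 1 to 6 are assumptions made for this illustration.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object BreakpointDemo {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("BreakpointDemo").setMaster("local[3]")
    val sc = new SparkContext(conf)
    try {
      // Runs on the driver, in the thread that called main
      println(s"driver side: ${Thread.currentThread().getName}")

      sc.parallelize(1 to 6, 3).foreach { i =>
        // Runs inside a task: a breakpoint on the next line is hit by an
        // executor worker thread, once per element
        println(s"task side: element $i on ${Thread.currentThread().getName}")
      }
    } finally {
      sc.stop()
    }
  }
}
```

Because several task threads run concurrently, a breakpoint inside the closure can be hit by more than one thread. Setting the breakpoint's suspend policy to suspend only the current thread may make stepping easier.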

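The project's run output is shown below. On this machine /usr/local/README.md is Homebrew's README, so the Homebrew text interleaved with the log lines is the output of the println calls.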

/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/bin/java -Didea.launcher.port=7532 "-Didea.launcher.bin.path=/Applications/IntelliJ IDEA 14.app/Contents/bin" -Dfile.encoding=UTF-8 -classpath "/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/lib/ant-javafx.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/lib/dt.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/lib/javafx-mx.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/lib/jconsole.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/lib/packager.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/lib/sa-jdi.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/lib/tools.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/charsets.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/deploy.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/javaws.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/jce.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/jfr.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/jfxswt.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/jsse.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/management-agent.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/plugin.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/resources.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/rt.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/ext/cldrdata.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/ext/dnsns.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/ext/jfxrt.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/ext/localedata.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/ext/nashorn.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/ext/sunec.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/ext/sunjce_provider.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/ext/sunpkcs11.jar:/Library/Java/JavaVirtualMachines/jdk1.8.0_45.jdk/Contents/Home/jre/lib/ext/zipfs.jar:/Users/lisongfeng/gitRepository/scala13/out/production/scala13:/Users/lisongfeng/.ivy2/cache/org.scala-lang/scala-library/jars/scala-library-2.10.5.jar:/Users/lisongfeng/.ivy2/cache/org.scala-lang/scala-reflect/jars/scala-reflect-2.10.5.jar:/Volumes/LISONGFENG/code/spark-1.3.0-bin-hadoop2.4/spark-1.3.0-bin-hadoop2.4/lib/spark-assembly-1.3.0-hadoop2.4.0.jar:/Applications/IntelliJ IDEA 14.app/Contents/lib/idea_rt.jar" com.intellij.rt.execution.application.AppMain LineCount

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

16/06/19 23:48:02 INFO SparkContext: Running Spark version 1.3.0

16/06/19 23:48:08 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

16/06/19 23:48:09 INFO SecurityManager: Changing view acls to: lisongfeng

16/06/19 23:48:09 INFO SecurityManager: Changing modify acls to: lisongfeng

16/06/19 23:48:09 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(lisongfeng); users with modify permissions: Set(lisongfeng)

16/06/19 23:48:10 INFO Slf4jLogger: Slf4jLogger started

16/06/19 23:48:10 INFO Remoting: Starting remoting

16/06/19 23:48:11 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://[email protected]:49763]

16/06/19 23:48:11 INFO Utils: Successfully started service 'sparkDriver' on port 49763.

16/06/19 23:48:11 INFO SparkEnv: Registering MapOutputTracker

16/06/19 23:48:11 INFO SparkEnv: Registering BlockManagerMaster

16/06/19 23:48:11 INFO DiskBlockManager: Created local directory at /var/folders/00/tg296f_x5yl42sbgfmxndm400000gn/T/spark-a07c726d-752d-46a0-b2d1-c2f7d6ef89aa/blockmgr-72709721-9dcf-431f-a4ae-a085016b9b92

16/06/19 23:48:11 INFO MemoryStore: MemoryStore started with capacity 983.1 MB

16/06/19 23:48:11 INFO HttpFileServer: HTTP File server directory is /var/folders/00/tg296f_x5yl42sbgfmxndm400000gn/T/spark-ceca0f7b-3167-4e54-98be-8da9d74ebbc6/httpd-b2bab4f3-903e-4007-87b9-84a724c53b73

16/06/19 23:48:11 INFO HttpServer: Starting HTTP Server

16/06/19 23:48:11 INFO Server: jetty-8.y.z-SNAPSHOT

16/06/19 23:48:11 INFO AbstractConnector: Started [email protected]:49764

16/06/19 23:48:11 INFO Utils: Successfully started service 'HTTP file server' on port 49764.

16/06/19 23:48:11 INFO SparkEnv: Registering OutputCommitCoordinator

16/06/19 23:48:11 INFO Server: jetty-8.y.z-SNAPSHOT

16/06/19 23:48:11 INFO AbstractConnector: Started [email protected]:4040

16/06/19 23:48:11 INFO Utils: Successfully started service 'SparkUI' on port 4040.

16/06/19 23:48:11 INFO SparkUI: Started SparkUI at http://192.168.1.107:4040

16/06/19 23:48:12 INFO Executor: Starting executor ID on host localhost

16/06/19 23:48:12 INFO AkkaUtils: Connecting to HeartbeatReceiver: akka.tcp://[email protected]:49763/user/HeartbeatReceiver

16/06/19 23:48:12 INFO NettyBlockTransferService: Server created on 49765

16/06/19 23:48:12 INFO BlockManagerMaster: Trying to register BlockManager

16/06/19 23:48:12 INFO BlockManagerMasterActor: Registering block manager localhost:49765 with 983.1 MB RAM, BlockManagerId(, localhost, 49765)

16/06/19 23:48:12 INFO BlockManagerMaster: Registered BlockManager

16/06/19 23:48:13 INFO MemoryStore: ensureFreeSpace(159118) called with curMem=0, maxMem=1030823608

16/06/19 23:48:13 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 155.4 KB, free 982.9 MB)

16/06/19 23:48:13 INFO MemoryStore: ensureFreeSpace(22692) called with curMem=159118, maxMem=1030823608

16/06/19 23:48:13 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 22.2 KB, free 982.9 MB)

16/06/19 23:48:13 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on localhost:49765 (size: 22.2 KB, free: 983.0 MB)

16/06/19 23:48:13 INFO BlockManagerMaster: Updated info of block broadcast_0_piece0

16/06/19 23:48:13 INFO SparkContext: Created broadcast 0 from textFile at MAIN.scala:24

16/06/19 23:48:13 INFO FileInputFormat: Total input paths to process : 1

16/06/19 23:48:13 INFO SparkContext: Starting job: foreach at MAIN.scala:25

16/06/19 23:48:13 INFO DAGScheduler: Got job 0 (foreach at MAIN.scala:25) with 3 output partitions (allowLocal=false)

16/06/19 23:48:13 INFO DAGScheduler: Final stage: Stage 0(foreach at MAIN.scala:25)

16/06/19 23:48:13 INFO DAGScheduler: Parents of final stage: List()

16/06/19 23:48:13 INFO DAGScheduler: Missing parents: List()

16/06/19 23:48:13 INFO DAGScheduler: Submitting Stage 0 (MapPartitionsRDD[2] at filter at MAIN.scala:25), which has no missing parents

16/06/19 23:48:13 INFO MemoryStore: ensureFreeSpace(2872) called with curMem=181810, maxMem=1030823608

16/06/19 23:48:13 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 2.8 KB, free 982.9 MB)

16/06/19 23:48:13 INFO MemoryStore: ensureFreeSpace(2045) called with curMem=184682, maxMem=1030823608

16/06/19 23:48:13 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 2045.0 B, free 982.9 MB)

16/06/19 23:48:13 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on localhost:49765 (size: 2045.0 B, free: 983.0 MB)

16/06/19 23:48:13 INFO BlockManagerMaster: Updated info of block broadcast_1_piece0

16/06/19 23:48:13 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:839

16/06/19 23:48:13 INFO DAGScheduler: Submitting 3 missing tasks from Stage 0 (MapPartitionsRDD[2] at filter at MAIN.scala:25)

16/06/19 23:48:13 INFO TaskSchedulerImpl: Adding task set 0.0 with 3 tasks

16/06/19 23:48:13 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, PROCESS_LOCAL, 1289 bytes)

16/06/19 23:48:14 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, PROCESS_LOCAL, 1289 bytes)

16/06/19 23:48:14 INFO TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, localhost, PROCESS_LOCAL, 1289 bytes)

16/06/19 23:48:14 INFO Executor: Running task 0.0 in stage 0.0 (TID 0)

16/06/19 23:48:14 INFO Executor: Running task 1.0 in stage 0.0 (TID 1)

16/06/19 23:48:14 INFO Executor: Running task 2.0 in stage 0.0 (TID 2)

16/06/19 23:48:14 INFO HadoopRDD: Input split: file:/usr/local/README.md:1620+812

16/06/19 23:48:14 INFO HadoopRDD: Input split: file:/usr/local/README.md:810+810

16/06/19 23:48:14 INFO HadoopRDD: Input split: file:/usr/local/README.md:0+810

16/06/19 23:48:14 INFO deprecation: mapred.tip.id is deprecated. Instead, use mapreduce.task.id

16/06/19 23:48:14 INFO deprecation: mapred.task.id is deprecated. Instead, use mapreduce.task.attempt.id

16/06/19 23:48:14 INFO deprecation: mapred.task.is.map is deprecated. Instead, use mapreduce.task.ismap

16/06/19 23:48:14 INFO deprecation: mapred.task.partition is deprecated. Instead, use mapreduce.task.partition

16/06/19 23:48:14 INFO deprecation: mapred.job.id is deprecated. Instead, use mapreduce.job.id

# Homebrew

Code is under the [BSD 2 Clause (NetBSD) license](https://github.com/Homebrew/homebrew/tree/master/LICENSE.txt).

Homebrew's current maintainers are [Misty De Meo](https://github.com/mistydemeo), [Andrew Janke](https://github.com/apjanke), [Xu Cheng](https://github.com/xu-cheng), [Mike McQuaid](https://github.com/mikemcquaid), [Baptiste Fontaine](https://github.com/bfontaine), [Brett Koonce](https://github.com/asparagui), [Martin Afanasjew](https://github.com/UniqMartin), [Dominyk Tiller](https://github.com/DomT4), [Tim Smith](https://github.com/tdsmith) and [Alex Dunn](https://github.com/dunn).

Former maintainers with significant contributions include [Jack Nagel](https://github.com/jacknagel), [Adam Vandenberg](https://github.com/adamv) and Homebrew's creator: [Max Howell](https://github.com/mxcl).

`brew help`, `man brew` or check [our documentation](https://github.com/Homebrew/homebrew/tree/master/share/doc/homebrew#readme).

Second, read the [Troubleshooting Checklist](https://github.com/Homebrew/homebrew/blob/master/share/doc/homebrew/Troubleshooting.md#troubleshooting).

Our CI infrastructure was paid for by [our Kickstarter supporters](https://github.com/Homebrew/homebrew/blob/master/SUPPORTERS.md).

16/06/19 23:48:14 INFO Executor: Finished task 1.0 in stage 0.0 (TID 1). 1792 bytes result sent to driver

16/06/19 23:48:14 INFO Executor: Finished task 2.0 in stage 0.0 (TID 2). 1792 bytes result sent to driver

16/06/19 23:48:14 INFO Executor: Finished task 0.0 in stage 0.0 (TID 0). 1792 bytes result sent to driver

16/06/19 23:48:14 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 184 ms on localhost (1/3)

16/06/19 23:48:14 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 218 ms on localhost (2/3)

16/06/19 23:48:14 INFO TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 188 ms on localhost (3/3)

16/06/19 23:48:14 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

16/06/19 23:48:14 INFO DAGScheduler: Stage 0 (foreach at MAIN.scala:25) finished in 0.252 s

16/06/19 23:48:14 INFO DAGScheduler: Job 0 finished: foreach at MAIN.scala:25, took 0.481620 s

16/06/19 23:48:14 INFO MemoryStore: ensureFreeSpace(73391) called with curMem=186727, maxMem=1030823608

16/06/19 23:48:14 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 71.7 KB, free 982.8 MB)

16/06/19 23:48:14 INFO BlockManager: Removing broadcast 1

16/06/19 23:48:14 INFO BlockManager: Removing block broadcast_1

16/06/19 23:48:14 INFO MemoryStore: Block broadcast_1 of size 2872 dropped from memory (free 1030566362)

16/06/19 23:48:14 INFO BlockManager: Removing block broadcast_1_piece0

16/06/19 23:48:14 INFO MemoryStore: Block broadcast_1_piece0 of size 2045 dropped from memory (free 1030568407)

16/06/19 23:48:14 INFO BlockManagerInfo: Removed broadcast_1_piece0 on localhost:49765 in memory (size: 2045.0 B, free: 983.0 MB)

16/06/19 23:48:14 INFO BlockManagerMaster: Updated info of block broadcast_1_piece0

16/06/19 23:48:14 INFO ContextCleaner: Cleaned broadcast 1

16/06/19 23:48:14 INFO MemoryStore: ensureFreeSpace(31262) called with curMem=255201, maxMem=1030823608

16/06/19 23:48:14 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 30.5 KB, free 982.8 MB)

16/06/19 23:48:14 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on localhost:49765 (size: 30.5 KB, free: 983.0 MB)

16/06/19 23:48:14 INFO BlockManagerMaster: Updated info of block broadcast_2_piece0

16/06/19 23:48:14 INFO SparkContext: Created broadcast 2 from textFile at MAIN.scala:26

16/06/19 23:48:14 INFO FileInputFormat: Total input paths to process : 1

16/06/19 23:48:14 INFO SparkContext: Starting job: foreach at MAIN.scala:30

16/06/19 23:48:14 INFO DAGScheduler: Got job 1 (foreach at MAIN.scala:30) with 6 output partitions (allowLocal=false)

16/06/19 23:48:14 INFO DAGScheduler: Final stage: Stage 1(foreach at MAIN.scala:30)

16/06/19 23:48:14 INFO DAGScheduler: Parents of final stage: List()

16/06/19 23:48:14 INFO DAGScheduler: Missing parents: List()

16/06/19 23:48:14 INFO DAGScheduler: Submitting Stage 1 (UnionRDD[5] at union at MAIN.scala:29), which has no missing parents

16/06/19 23:48:14 INFO MemoryStore: ensureFreeSpace(3536) called with curMem=286463, maxMem=1030823608

16/06/19 23:48:14 INFO MemoryStore: Block broadcast_3 stored as values in memory (estimated size 3.5 KB, free 982.8 MB)

16/06/19 23:48:14 INFO MemoryStore: ensureFreeSpace(2589) called with curMem=289999, maxMem=1030823608

16/06/19 23:48:14 INFO MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 2.5 KB, free 982.8 MB)

16/06/19 23:48:14 INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on localhost:49765 (size: 2.5 KB, free: 983.0 MB)

16/06/19 23:48:14 INFO BlockManagerMaster: Updated info of block broadcast_3_piece0

16/06/19 23:48:14 INFO SparkContext: Created broadcast 3 from broadcast at DAGScheduler.scala:839

16/06/19 23:48:14 INFO DAGScheduler: Submitting 6 missing tasks from Stage 1 (UnionRDD[5] at union at MAIN.scala:29)

16/06/19 23:48:14 INFO TaskSchedulerImpl: Adding task set 1.0 with 6 tasks

16/06/19 23:48:14 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 3, localhost, PROCESS_LOCAL, 1398 bytes)

16/06/19 23:48:14 INFO TaskSetManager: Starting task 1.0 in stage 1.0 (TID 4, localhost, PROCESS_LOCAL, 1398 bytes)

16/06/19 23:48:14 INFO TaskSetManager: Starting task 2.0 in stage 1.0 (TID 5, localhost, PROCESS_LOCAL, 1398 bytes)

16/06/19 23:48:14 INFO Executor: Running task 1.0 in stage 1.0 (TID 4)

16/06/19 23:48:14 INFO Executor: Running task 2.0 in stage 1.0 (TID 5)

16/06/19 23:48:14 INFO Executor: Running task 0.0 in stage 1.0 (TID 3)

16/06/19 23:48:14 INFO HadoopRDD: Input split: file:/usr/local/README.md:1620+812

16/06/19 23:48:14 INFO HadoopRDD: Input split: file:/usr/local/README.md:810+810

16/06/19 23:48:14 INFO HadoopRDD: Input split: file:/usr/local/README.md:0+810

16/06/19 23:48:14 INFO Executor: Finished task 1.0 in stage 1.0 (TID 4). 1792 bytes result sent to driver

16/06/19 23:48:14 INFO Executor: Finished task 2.0 in stage 1.0 (TID 5). 1792 bytes result sent to driver

This is our PGP key which is valid until June 17, 2016.

* Key ID: `0xE33A3D3CCE59E297`

* Fingerprint: `C657 8F76 2E23 441E C879 EC5C E33A 3D3C CE59 E297`

* Full key: https://keybase.io/homebrew/key.asc

## License

Code is under the [BSD 2 Clause (NetBSD) license](https://github.com/Homebrew/homebrew/tree/master/LICENSE.txt).

Documentation is under the [Creative Commons Attribution license](https://creativecommons.org/licenses/by/4.0/).

## Sponsors

Our CI infrastructure was paid for by [our Kickstarter supporters](https://github.com/Homebrew/homebrew/blob/master/SUPPORTERS.md).

Our CI infrastructure is hosted by [The Positive Internet Company](http://www.positive-internet.com).

## Who Are You?

# Homebrew

Homebrew's current maintainers are [Misty De Meo](https://github.com/mistydemeo), [Andrew Janke](https://github.com/apjanke), [Xu Cheng](https://github.com/xu-cheng), [Mike McQuaid](https://github.com/mikemcquaid), [Baptiste Fontaine](https://github.com/bfontaine), [Brett Koonce](https://github.com/asparagui), [Martin Afanasjew](https://github.com/UniqMartin), [Dominyk Tiller](https://github.com/DomT4), [Tim Smith](https://github.com/tdsmith) and [Alex Dunn](https://github.com/dunn).

Our bottles (binary packages) are hosted by Bintray.

Former maintainers with significant contributions include [Jack Nagel](https://github.com/jacknagel), [Adam Vandenberg](https://github.com/adamv) and Homebrew's creator: [Max Howell](https://github.com/mxcl).

Features, usage and installation instructions are [summarised on the homepage](http://brew.sh).

[](https://bintray.com/homebrew)

## What Packages Are Available?

1. Type `brew search` for a list.

2. Or visit [braumeister.org](http://braumeister.org) to browse packages online.

3. Or use `brew search --desc` to browse packages from the command line.

## More Documentation

`brew help`, `man brew` or check [our documentation](https://github.com/Homebrew/homebrew/tree/master/share/doc/homebrew#readme).

## Troubleshooting

First, please run `brew update` and `brew doctor`.

Second, read the [Troubleshooting Checklist](https://github.com/Homebrew/homebrew/blob/master/share/doc/homebrew/Troubleshooting.md#troubleshooting).

**If you don't read these it will take us far longer to help you with your problem.**

## Security

Please report security issues to [email protected].

16/06/19 23:48:14 INFO Executor: Finished task 0.0 in stage 1.0 (TID 3). 1792 bytes result sent to driver

16/06/19 23:48:14 INFO TaskSetManager: Starting task 3.0 in stage 1.0 (TID 6, localhost, PROCESS_LOCAL, 1399 bytes)

16/06/19 23:48:14 INFO Executor: Running task 3.0 in stage 1.0 (TID 6)

16/06/19 23:48:14 INFO TaskSetManager: Finished task 1.0 in stage 1.0 (TID 4) in 11 ms on localhost (1/6)

16/06/19 23:48:14 INFO TaskSetManager: Starting task 4.0 in stage 1.0 (TID 7, localhost, PROCESS_LOCAL, 1399 bytes)

16/06/19 23:48:14 INFO TaskSetManager: Finished task 2.0 in stage 1.0 (TID 5) in 12 ms on localhost (2/6)

16/06/19 23:48:14 INFO Executor: Running task 4.0 in stage 1.0 (TID 7)

16/06/19 23:48:14 INFO TaskSetManager: Starting task 5.0 in stage 1.0 (TID 8, localhost, PROCESS_LOCAL, 1399 bytes)

16/06/19 23:48:14 INFO Executor: Running task 5.0 in stage 1.0 (TID 8)

16/06/19 23:48:14 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 3) in 16 ms on localhost (3/6)

16/06/19 23:48:14 INFO HadoopRDD: Input split: file:/usr/local/README1.md:0+810

16/06/19 23:48:14 INFO HadoopRDD: Input split: file:/usr/local/README1.md:810+810

16/06/19 23:48:14 INFO HadoopRDD: Input split: file:/usr/local/README1.md:1620+812

16/06/19 23:48:14 INFO Executor: Finished task 4.0 in stage 1.0 (TID 7). 1792 bytes result sent to driver

16/06/19 23:48:14 INFO Executor: Finished task 3.0 in stage 1.0 (TID 6). 1792 bytes result sent to driver

16/06/19 23:48:14 INFO TaskSetManager: Finished task 4.0 in stage 1.0 (TID 7) in 11 ms on localhost (4/6)

16/06/19 23:48:14 INFO Executor: Finished task 5.0 in stage 1.0 (TID 8). 1792 bytes result sent to driver

16/06/19 23:48:14 INFO TaskSetManager: Finished task 3.0 in stage 1.0 (TID 6) in 14 ms on localhost (5/6)

16/06/19 23:48:14 INFO TaskSetManager: Finished task 5.0 in stage 1.0 (TID 8) in 12 ms on localhost (6/6)

This is our PGP key which is valid until June 17, 2016.

* Key ID: `0xE33A3D3CCE59E297`

* Fingerprint: `C657 8F76 2E23 441E C879 EC5C E33A 3D3C CE59 E297`

* Full key: https://keybase.io/homebrew/key.asc

# Homebrew

## Who Are You?

Homebrew's current maintainers are [Misty De Meo](https://github.com/mistydemeo), [Andrew Janke](https://github.com/apjanke), [Xu Cheng](https://github.com/xu-cheng), [Mike McQuaid](https://github.com/mikemcquaid), [Baptiste Fontaine](https://github.com/bfontaine), [Brett Koonce](https://github.com/asparagui), [Martin Afanasjew](https://github.com/UniqMartin), [Dominyk Tiller](https://github.com/DomT4), [Tim Smith](https://github.com/tdsmith) and [Alex Dunn](https://github.com/dunn).

Former maintainers with significant contributions include [Jack Nagel](https://github.com/jacknagel), [Adam Vandenberg](https://github.com/adamv) and Homebrew's creator: [Max Howell](https://github.com/mxcl).

Features, usage and installation instructions are [summarised on the homepage](http://brew.sh).

## What Packages Are Available?

1. Type `brew search` for a list.

2. Or visit [braumeister.org](http://braumeister.org) to browse packages online.

3. Or use `brew search --desc` to browse packages from the command line.

## More Documentation

`brew help`, `man brew` or check [our documentation](https://github.com/Homebrew/homebrew/tree/master/share/doc/homebrew#readme).

## Troubleshooting

First, please run `brew update` and `brew doctor`.

Second, read the [Troubleshooting Checklist](https://github.com/Homebrew/homebrew/blob/master/share/doc/homebrew/Troubleshooting.md#troubleshooting).

**If you don't read these it will take us far longer to help you with your problem.**

## Security

Please report security issues to [email protected].

## License

Code is under the [BSD 2 Clause (NetBSD) license](https://github.com/Homebrew/homebrew/tree/master/LICENSE.txt).

Documentation is under the [Creative Commons Attribution license](https://creativecommons.org/licenses/by/4.0/).

## Sponsors

Our CI infrastructure was paid for by [our Kickstarter supporters](https://github.com/Homebrew/homebrew/blob/master/SUPPORTERS.md).

Our CI infrastructure is hosted by [The Positive Internet Company](http://www.positive-internet.com).

Our bottles (binary packages) are hosted by Bintray.

16/06/19 23:48:14 INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

[](https://bintray.com/homebrew)

16/06/19 23:48:14 INFO DAGScheduler: Stage 1 (foreach at MAIN.scala:30) finished in 0.029 s

16/06/19 23:48:14 INFO DAGScheduler: Job 1 finished: foreach at MAIN.scala:30, took 0.054069 s

Process finished with exit code 0

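The output shows the startup sequence of Spark's components (SparkEnv, the BlockManager, the HTTP file server, SparkUI and the Executor), followed by the two jobs that the DAGScheduler splits into tasks.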
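The Input split lines in the log (0+810, 810+810, 1620+812) can be reproduced with a few lines of arithmetic. The sketch below is a simplified model of the split computation in Hadoop's FileInputFormat, which textFile relies on, and not the actual Hadoop code. The object name SplitSketch, the function splits, and the 32 MB local block size are assumptions made for this illustration. The 2432-byte file size is inferred from the last split in the log (1620 + 812).

```scala
import scala.collection.mutable.ArrayBuffer

object SplitSketch {
  // Slack factor: the final split may be up to 10% larger than the others
  val SplitSlop = 1.1

  /** Returns (offset, length) pairs for a file of `totalSize` bytes. */
  def splits(totalSize: Long,
             minPartitions: Int,
             blockSize: Long = 32L * 1024 * 1024): Seq[(Long, Long)] = {
    // Target size per split, capped by the block size and at least 1 byte
    val goalSize = totalSize / math.max(minPartitions, 1)
    val splitSize = math.max(1L, math.min(goalSize, blockSize))

    val out = ArrayBuffer.empty[(Long, Long)]
    var remaining = totalSize
    // Cut full-size splits while clearly more than one split's worth remains
    while (remaining.toDouble / splitSize > SplitSlop) {
      out += ((totalSize - remaining, splitSize))
      remaining -= splitSize
    }
    // Whatever is left becomes the final, slightly larger split
    if (remaining != 0) out += ((totalSize - remaining, remaining))
    out.toSeq
  }

  def main(args: Array[String]): Unit = {
    // Prints 0+810, 810+810 and 1620+812, matching the log
    splits(2432L, 3).foreach { case (offset, length) => println(s"$offset+$length") }
  }
}
```

Under this model the two leftover bytes do not form a fourth split because 812 / 810 is below the 1.1 slack factor, which is consistent with the log reporting 3 output partitions for the first job.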

With breakpoints set, run the program in debug mode to step through the Spark source.