Spark Streaming: consuming Kafka data, writing it to HBase in real time, and exposing it through a REST service

1: Overall project flow

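- Flume sends data to Kafka, and Spark Streaming processes the Kafka data in real time. The results are stored in HBase, and the algorithm model reads the processed data through a REST service.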

2: Versions of the server components

- java version "1.7.0_65"

- Scala 2.11.8

- Spark version 2.1.0

- flume-1.6.0

- kafka_2.10-0.8.2.1

- hbase-1.0.0

- Server OS: Aliyun Linux release 6 15.01

- dubbo 2.8.4

- IDE: Eclipse

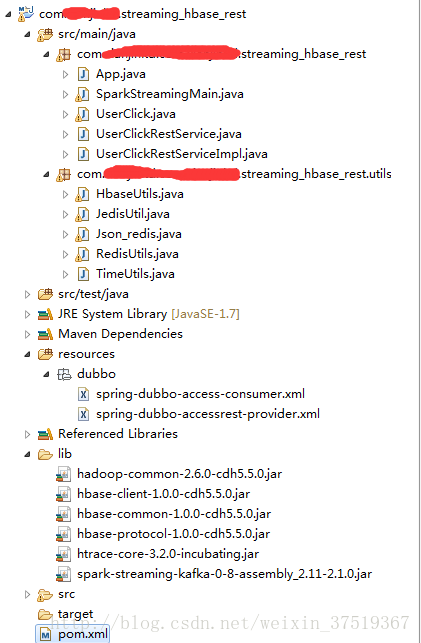

3: Project code structure

4: pom file

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>com</groupId>
  <artifactId>streaming_hbase_rest</artifactId>
  <version>0.0.1-SNAPSHOT</version>
  <packaging>jar</packaging>
  <name>com.streaming_hbase_rest</name>
  <url>http://maven.apache.org</url>

  <dependencies>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>3.8.1</version>
      <scope>test</scope>
    </dependency>
    <!-- Servlet 3.1 API; keep it near the top of the list (see the javax.servlet conflict below) -->
    <dependency>
      <groupId>javax.servlet</groupId>
      <artifactId>javax.servlet-api</artifactId>
      <version>3.1.0</version>
    </dependency>
    <dependency>
      <groupId>jdk.tools</groupId>
      <artifactId>jdk.tools</artifactId>
      <version>1.6</version>
      <scope>system</scope>
      <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>
    </dependency>
    <dependency>
      <groupId>javax.annotation</groupId>
      <artifactId>javax.annotation-api</artifactId>
      <version>1.2</version>
    </dependency>
    <dependency>
      <groupId>org.codehaus.jackson</groupId>
      <artifactId>jackson-mapper-asl</artifactId>
      <version>1.9.12</version>
    </dependency>

    <!-- Dubbo and its runtime dependencies -->
    <dependency>
      <groupId>com.alibaba</groupId>
      <artifactId>dubbo</artifactId>
      <version>2.8.4</version>
    </dependency>
    <dependency>
      <groupId>org.javassist</groupId>
      <artifactId>javassist</artifactId>
      <version>3.15.0-GA</version>
    </dependency>
    <dependency>
      <groupId>org.apache.mina</groupId>
      <artifactId>mina-core</artifactId>
      <version>1.1.7</version>
    </dependency>
    <dependency>
      <groupId>org.glassfish.grizzly</groupId>
      <artifactId>grizzly-core</artifactId>
      <version>2.1.4</version>
    </dependency>
    <dependency>
      <groupId>org.apache.httpcomponents</groupId>
      <artifactId>httpclient</artifactId>
      <version>4.2.1</version>
    </dependency>
    <dependency>
      <groupId>com.alibaba</groupId>
      <artifactId>fastjson</artifactId>
      <version>1.1.39</version>
    </dependency>
    <dependency>
      <groupId>com.thoughtworks.xstream</groupId>
      <artifactId>xstream</artifactId>
      <version>1.4.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.bsf</groupId>
      <artifactId>bsf-api</artifactId>
      <version>3.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.zookeeper</groupId>
      <artifactId>zookeeper</artifactId>
      <version>3.4.6</version>
    </dependency>
    <dependency>
      <groupId>com.github.sgroschupf</groupId>
      <artifactId>zkclient</artifactId>
      <version>0.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.curator</groupId>
      <artifactId>curator-framework</artifactId>
      <version>2.5.0</version>
    </dependency>
    <dependency>
      <groupId>com.googlecode.xmemcached</groupId>
      <artifactId>xmemcached</artifactId>
      <version>1.3.6</version>
    </dependency>
    <dependency>
      <groupId>org.apache.cxf</groupId>
      <artifactId>cxf-rt-frontend-simple</artifactId>
      <version>2.6.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.cxf</groupId>
      <artifactId>cxf-rt-transports-http</artifactId>
      <version>2.6.1</version>
    </dependency>
    <dependency>
      <groupId>org.apache.thrift</groupId>
      <artifactId>libthrift</artifactId>
      <version>0.8.0</version>
    </dependency>
    <dependency>
      <groupId>com.caucho</groupId>
      <artifactId>hessian</artifactId>
      <version>4.0.7</version>
    </dependency>
    <dependency>
      <groupId>org.mortbay.jetty</groupId>
      <artifactId>jetty</artifactId>
      <version>6.1.26</version>
      <exclusions>
        <!-- exclude the old servlet-api 2.5 that conflicts with javax.servlet-api 3.1 -->
        <exclusion>
          <groupId>org.mortbay.jetty</groupId>
          <artifactId>servlet-api</artifactId>
        </exclusion>
      </exclusions>
    </dependency>
    <dependency>
      <groupId>log4j</groupId>
      <artifactId>log4j</artifactId>
      <version>1.2.16</version>
    </dependency>
    <dependency>
      <groupId>org.slf4j</groupId>
      <artifactId>slf4j-api</artifactId>
      <version>1.6.2</version>
    </dependency>
    <dependency>
      <groupId>javax.validation</groupId>
      <artifactId>validation-api</artifactId>
      <version>1.0.0.GA</version>
    </dependency>
    <dependency>
      <groupId>org.hibernate</groupId>
      <artifactId>hibernate-validator</artifactId>
      <version>4.2.0.Final</version>
    </dependency>
    <dependency>
      <groupId>javax.cache</groupId>
      <artifactId>cache-api</artifactId>
      <version>0.4</version>
    </dependency>

    <!-- RESTEasy (JAX-RS) for Dubbo's rest protocol -->
    <dependency>
      <groupId>org.jboss.resteasy</groupId>
      <artifactId>resteasy-jaxrs</artifactId>
      <version>3.0.7.Final</version>
    </dependency>
    <dependency>
      <groupId>org.jboss.resteasy</groupId>
      <artifactId>resteasy-client</artifactId>
      <version>3.0.7.Final</version>
    </dependency>
    <dependency>
      <groupId>org.jboss.resteasy</groupId>
      <artifactId>resteasy-netty</artifactId>
      <version>3.0.7.Final</version>
    </dependency>
    <dependency>
      <groupId>org.jboss.resteasy</groupId>
      <artifactId>resteasy-jdk-http</artifactId>
      <version>3.0.7.Final</version>
    </dependency>
    <dependency>
      <groupId>org.jboss.resteasy</groupId>
      <artifactId>resteasy-jackson-provider</artifactId>
      <version>3.0.7.Final</version>
    </dependency>
    <dependency>
      <groupId>org.jboss.resteasy</groupId>
      <artifactId>resteasy-jaxb-provider</artifactId>
      <version>3.0.7.Final</version>
    </dependency>

    <!-- embedded Tomcat used by <dubbo:protocol server="tomcat"> -->
    <dependency>
      <groupId>org.apache.tomcat.embed</groupId>
      <artifactId>tomcat-embed-core</artifactId>
      <version>8.0.11</version>
    </dependency>
    <dependency>
      <groupId>org.apache.tomcat.embed</groupId>
      <artifactId>tomcat-embed-logging-juli</artifactId>
      <version>8.0.11</version>
    </dependency>

    <!-- serialization -->
    <dependency>
      <groupId>com.esotericsoftware.kryo</groupId>
      <artifactId>kryo</artifactId>
      <version>2.24.0</version>
    </dependency>
    <dependency>
      <groupId>de.javakaffee</groupId>
      <artifactId>kryo-serializers</artifactId>
      <version>0.26</version>
    </dependency>
    <dependency>
      <groupId>de.ruedigermoeller</groupId>
      <artifactId>fst</artifactId>
      <version>1.55</version>
    </dependency>

    <dependency>
      <groupId>org.springframework</groupId>
      <artifactId>spring-context</artifactId>
      <version>4.3.6.RELEASE</version>
    </dependency>

    <!-- Spark 2.1.0 built for Scala 2.11: every Scala artifact must use the same _2.11 suffix -->
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>2.11.8</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming_2.11</artifactId>
      <version>2.1.0</version>
      <scope>provided</scope>
    </dependency>
    <!-- provides org.apache.spark.streaming.kafka.KafkaUtils (Kafka 0.8 API) -->
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming-kafka-0-8_2.11</artifactId>
      <version>2.1.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.11</artifactId>
      <version>2.1.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-tags_2.11</artifactId>
      <version>2.1.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-launcher_2.11</artifactId>
      <version>2.1.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-network-shuffle_2.11</artifactId>
      <version>2.1.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-unsafe_2.11</artifactId>
      <version>2.1.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.commons</groupId>
      <artifactId>commons-crypto</artifactId>
      <version>1.0.0</version>
    </dependency>
    <dependency>
      <groupId>net.sf.py4j</groupId>
      <artifactId>py4j</artifactId>
      <version>0.10.4</version>
    </dependency>
    <dependency>
      <groupId>io.netty</groupId>
      <artifactId>netty-all</artifactId>
      <version>4.0.21.Final</version>
    </dependency>
    <dependency>
      <groupId>net.razorvine</groupId>
      <artifactId>pyrolite</artifactId>
      <version>4.13</version>
    </dependency>
    <dependency>
      <groupId>commons-logging</groupId>
      <artifactId>commons-logging</artifactId>
      <version>1.1.1</version>
    </dependency>
    <dependency>
      <groupId>net.sf.json-lib</groupId>
      <artifactId>json-lib</artifactId>
      <version>2.4</version>
      <classifier>jdk15</classifier>
    </dependency>
    <dependency>
      <groupId>com.google.guava</groupId>
      <artifactId>guava</artifactId>
      <version>14.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.kafka</groupId>
      <artifactId>kafka-clients</artifactId>
      <version>0.8.2.1</version>
    </dependency>
    <!-- provides org.apache.hadoop.hbase.* (Connection, Table, Put, Get, ...) -->
    <dependency>
      <groupId>org.apache.hbase</groupId>
      <artifactId>hbase-client</artifactId>
      <version>1.0.0</version>
    </dependency>
    <dependency>
      <groupId>redis.clients</groupId>
      <artifactId>jedis</artifactId>
      <version>2.9.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.commons</groupId>
      <artifactId>commons-pool2</artifactId>
      <version>2.4.3</version>
    </dependency>
  </dependencies>
</project>
```

4: REST service code

- UserClick

- Because the requirement is to count clicks per userid, this JavaBean has only two properties.

```java
package com.streaming_hbase_rest;

import java.io.Serializable;

import javax.validation.constraints.NotNull;
import javax.xml.bind.annotation.XmlRootElement;

@XmlRootElement
public class UserClick implements Serializable {

    @NotNull
    private String userId;

    @NotNull
    private String clickNum;

    public UserClick() {
    }

    public String getUserId() {
        return userId;
    }

    public void setUserId(String userId) {
        this.userId = userId;
    }

    public String getClickNum() {
        return clickNum;
    }

    public void setClickNum(String clickNum) {
        this.clickNum = clickNum;
    }
}
```

- UserClickRestService

- The interface exposed to external callers.

```java
package com.streaming_hbase_rest;

import javax.ws.rs.GET;
import javax.ws.rs.Path;
import javax.ws.rs.PathParam;
import javax.ws.rs.Produces;
import javax.ws.rs.core.MediaType;

// Exposed as GET /clickNum/{userId}
@Path("clickNum")
@Produces({ MediaType.APPLICATION_JSON, MediaType.TEXT_XML })
public interface UserClickRestService {

    @GET
    @Path("{userId}")
    String getClickNum(@PathParam("userId") String userId);
}
```

- UserClickRestServiceImpl

- The implementation class. It queries HBase and returns the click count of the requested user to the caller.

```java
package com.streaming_hbase_rest;

import javax.ws.rs.PathParam;

import com.streaming_hbase_rest.utils.HbaseUtils;

public class UserClickRestServiceImpl implements UserClickRestService {

    private final HbaseUtils hu = new HbaseUtils();

    @Override
    public String getClickNum(@PathParam("userId") String userId) {
        System.out.println("REST service: starting query");
        System.out.println("userid for this query: " + userId);
        String clickNum = hu.getClickNumByUserid("app_clickNum", userId);
        System.out.println("Query result: " + clickNum);
        // getClickNumByUserid returns the string "null" when the user has no row.
        // Compare with equals(): == only checks whether both are the same object.
        return "null".equals(clickNum) ? "0" : clickNum;
    }
}
```
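getClickNumByUserid signals "no row" with the string "null", and the code compares against that sentinel in several places. With `==`, the comparison only succeeds when both sides happen to be the same interned literal. A String produced at runtime, for example decoded from bytes or parsed from JSON, is a different object, so `==` returns false even when its text is "null". The example below is a minimal illustration. `NullSentinelDemo` and its methods are hypothetical names used only here.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical demo class: shows why the "null" sentinel must be compared with equals().
final class NullSentinelDemo {

    /** Maps the "null" sentinel to "0", as the REST implementation intends. */
    static String toClickNum(String stored) {
        // equals() compares the characters; == would compare object identity
        return "null".equals(stored) ? "0" : stored;
    }

    /** Simulates a value decoded from bytes at runtime: always a new String object. */
    static String decoded(String s) {
        return new String(s.getBytes(StandardCharsets.UTF_8), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String fromBytes = decoded("null");
        System.out.println(fromBytes == "null");       // false: a different object
        System.out.println(toClickNum(fromBytes));     // 0
        System.out.println(toClickNum(decoded("42"))); // 42
    }
}
```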

- spring-dubbo-accessrest-provider.xml

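- The Dubbo provider configuration. The consumer side is not covered here because it needs no configuration: the interface can be called directly through its URL.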

```xml
<beans xmlns="http://www.springframework.org/schema/beans"
       xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
       xmlns:p="http://www.springframework.org/schema/p"
       xmlns:c="http://www.springframework.org/schema/c"
       xmlns:context="http://www.springframework.org/schema/context"
       xmlns:aop="http://www.springframework.org/schema/aop"
       xmlns:mvc="http://www.springframework.org/schema/mvc"
       xmlns:dubbo="http://code.alibabatech.com/schema/dubbo"
       xsi:schemaLocation="
         http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd
         http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context.xsd
         http://www.springframework.org/schema/aop http://www.springframework.org/schema/aop/spring-aop.xsd
         http://www.springframework.org/schema/mvc http://www.springframework.org/schema/mvc/spring-mvc.xsd
         http://code.alibabatech.com/schema/dubbo http://code.alibabatech.com/schema/dubbo/dubbo.xsd">

    <dubbo:application name="countClickNumByUser-provider" />

    <!-- register in ZooKeeper; subscribe="false": this process only provides, it does not consume -->
    <dubbo:registry address="zookeeper://master:2181" check="false" subscribe="false" />

    <!-- REST protocol served by embedded Tomcat on port 8889 -->
    <dubbo:protocol name="rest" port="8889" server="tomcat" />

    <dubbo:service interface="com.streaming_hbase_rest.UserClickRestService" ref="userClickRestService_hbase" version="1.0.0">
        <dubbo:method name="getClickNum" timeout="100000000" retries="2" loadbalance="leastactive" actives="50" />
    </dubbo:service>

    <bean id="userClickRestService_hbase" class="com.streaming_hbase_rest.UserClickRestServiceImpl" />
</beans>
```

- That completes the REST service. It is started together with the streaming job, as described later.

5: Utility classes

- TimeUtils

- A simple timestamp-conversion utility.

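```java
package com.streaming_hbase_rest.utils;

import java.io.Serializable;
import java.text.SimpleDateFormat;
import java.util.Date;

/**
 * Created by MR.wu on 2017/10/19.
 */
public class TimeUtils implements Serializable {

    /**
     * Converts a 10-digit Unix timestamp (seconds) into "yyyy-MM-dd HH:mm:ss"
     * in the JVM's default time zone. Returns "null" if the input is not a number.
     */
    public String processJavaTimestamp(String timestamp) {
        // SimpleDateFormat is not thread-safe, so a new instance is created per call
        SimpleDateFormat simpleDateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
        long lt;
        try {
            lt = Long.parseLong(timestamp);
        } catch (NumberFormatException e) {
            e.printStackTrace();
            return "null";
        }
        // java.util.Date expects milliseconds, hence * 1000L
        return simpleDateFormat.format(new Date(lt * 1000L));
    }
}
```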
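processJavaTimestamp expects a 10-digit Unix timestamp in seconds; that is why it multiplies by 1000L, since java.util.Date takes milliseconds. It also formats in the JVM's default time zone, so the same click prints differently on servers with different zone settings. The sketch below makes the zone explicit, assuming you want a fixed one. `TimestampFormat` and its `zoneId` parameter are hypothetical additions, not part of the project.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.TimeZone;

// Hypothetical helper: seconds-based timestamp formatting with an explicit time zone.
final class TimestampFormat {

    /** Formats Unix seconds as "yyyy-MM-dd HH:mm:ss" in zoneId; "null" for non-numeric input. */
    static String fromSeconds(String seconds, String zoneId) {
        long s;
        try {
            s = Long.parseLong(seconds); // also throws NumberFormatException for null
        } catch (NumberFormatException e) {
            return "null";
        }
        SimpleDateFormat fmt = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
        fmt.setTimeZone(TimeZone.getTimeZone(zoneId));
        return fmt.format(new Date(s * 1000L)); // seconds -> milliseconds
    }

    public static void main(String[] args) {
        System.out.println(fromSeconds("1508371200", "UTC"));           // 2017-10-19 00:00:00
        System.out.println(fromSeconds("1508371200", "Asia/Shanghai")); // 2017-10-19 08:00:00
    }
}
```

If the upstream ever switches to 13-digit millisecond timestamps, the `* 1000L` has to be dropped.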

- Json_redis

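- A JSON-parsing utility. The first version of the project was Redis-based, which is why the name doesn't quite fit; don't worry about it.

- Adapt it to your own business requirements.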

```java
package com.streaming_hbase_rest.utils;

import java.io.Serializable;

import net.sf.json.JSONObject;

public class Json_redis implements Serializable {

    public TimeUtils timeUtils = new TimeUtils();

    /**
     * Parses one element of the "data" array into
     * {user_id, click_element, apk_type, apk_version, device_no, device_name,
     *  click_time, in_time, out_time, location}. Missing values are the string "null".
     */
    public String[] pressJson(JSONObject log_data) {
        System.out.println("Processing JSON data >>>>> " + log_data);
        String[] jsonKey = new String[] { "product", "userAgent", "inTime",
                "clickElement", "userId", "clickTime", "outTime", "location" };
        String apk_type = "null";    // app type
        String user_id = "null";     // user id
        String apk_version = "null"; // app version

        // product has the form "apk_type|apk_version"
        String product = log_data.getString(jsonKey[0]);
        if (product.indexOf("|") == -1 || product.equals("|")) {
            // malformed (no "|", or only "|"): both fields stay "null"
            apk_type = "null";
            apk_version = "null";
        } else if (product.startsWith("|")) { // nothing before "|"
            apk_type = "null";
            apk_version = product.split("\\|")[1];
        } else if (product.endsWith("|")) { // nothing after "|"
            apk_type = product.split("\\|")[0];
            apk_version = "null";
        } else { // well-formed
            apk_type = product.split("\\|")[0];
            apk_version = product.split("\\|")[1];
        }

        // userAgent has the form "device_no|device_name"
        String userAgents = log_data.getString(jsonKey[1]);
        String device_no = "null";   // device number
        String device_name = "null"; // device model
        if (userAgents.equals("|") || userAgents.indexOf("|") == -1) {
            device_no = "null";
            device_name = "null";
        } else if (userAgents.endsWith("|")) {
            device_no = userAgents.split("\\|")[0];
            device_name = "null";
        } else if (userAgents.startsWith("|")) {
            device_no = "null";
            device_name = userAgents.split("\\|")[1];
        } else {
            device_no = userAgents.split("\\|")[0];
            device_name = userAgents.split("\\|")[1];
        }

        String click_element = log_data.getString(jsonKey[3]);
        user_id = log_data.getString(jsonKey[4]);
        String click_time = log_data.getString(jsonKey[5]);
        String in_time = "null";
        String out_time = "null";

        // convert the click timestamp; check for null before calling methods on it
        if (click_time != null && !click_time.isEmpty() && !click_time.equals("null")) {
            System.out.println("+++++++++++++++++++++++++click_time=>>>" + click_time);
            click_time = timeUtils.processJavaTimestamp(click_time);
        } else {
            click_time = "null";
        }

        // location is optional
        String location = "null";
        if (log_data.containsKey(jsonKey[7])) {
            location = log_data.getString(jsonKey[7]);
        }

        // inTime/outTime are read only when both keys are present
        if (log_data.containsKey(jsonKey[2]) && log_data.containsKey(jsonKey[6])) {
            in_time = log_data.getString(jsonKey[2]);
            out_time = log_data.getString(jsonKey[6]);
        }
        if (in_time != null && !in_time.isEmpty() && !in_time.equals("null")) {
            in_time = timeUtils.processJavaTimestamp(in_time);
        } else {
            in_time = "null";
        }
        if (out_time != null && !out_time.isEmpty() && !out_time.equals("null")) {
            out_time = timeUtils.processJavaTimestamp(out_time);
        } else {
            out_time = "null";
        }

        return new String[] { user_id, click_element, apk_type, apk_version,
                device_no, device_name, click_time, in_time, out_time, location };
    }
}
```
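The product and userAgent branches in pressJson both split a "left|right" string and substitute "null" for a missing side. The four branches can be collapsed into one helper, assuming the same rules: no "|" means both sides are "null", and anything after a second "|" is ignored. `PipeFields.splitPair` is a hypothetical name. The negative limit passed to `split` keeps trailing empty strings, so "a|" comes back as two parts instead of one.

```java
import java.util.Arrays;

// Hypothetical helper: one splitter for "apk_type|apk_version" and "device_no|device_name".
final class PipeFields {

    /** Splits "left|right" into {left, right}, using "null" for a missing or empty side. */
    static String[] splitPair(String value) {
        String[] result = { "null", "null" };
        if (value == null || value.indexOf('|') == -1) {
            return result; // no separator: malformed
        }
        // limit -1 keeps trailing empty strings: "a|" -> ["a", ""], "|" -> ["", ""]
        String[] parts = value.split("\\|", -1);
        if (!parts[0].isEmpty()) {
            result[0] = parts[0];
        }
        if (!parts[1].isEmpty()) {
            result[1] = parts[1];
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(splitPair("android|2.3.1"))); // [android, 2.3.1]
        System.out.println(Arrays.toString(splitPair("|2.3.1")));        // [null, 2.3.1]
        System.out.println(Arrays.toString(splitPair("android|")));      // [android, null]
        System.out.println(Arrays.toString(splitPair("|")));             // [null, null]
    }
}
```

Unlike `split("\\|")` without a limit, this version also copes with inputs such as "||", where the default split returns an empty array and indexing `[1]` would throw.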

- HbaseUtils

```java
package com.streaming_hbase_rest.utils;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.util.Bytes;

public class HbaseUtils {

    private static final byte[] INFO = Bytes.toBytes("info");

    public static Configuration conf = null;
    // One Connection per JVM (heavyweight, thread-safe); Table objects are cheap and closed after each use
    public static Connection connect = null;

    static {
        conf = HBaseConfiguration.create();
        conf.addResource("hbase-site.xml");
        try {
            connect = ConnectionFactory.createConnection(conf);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /** Returns the "clickNum" value stored for user_id, or the string "null" if there is none. */
    public String getClickNumByUserid(String tableName, String user_id) {
        System.out.println("Looking up the historical click count by user_id");
        String value = "null";
        // try-with-resources closes the Table even if get() throws
        try (Table table = connect.getTable(TableName.valueOf(tableName))) {
            Get get = new Get(Bytes.toBytes(user_id));
            System.out.println("get>>>>>" + get);
            Result result = table.get(get);
            if (!result.isEmpty()) {
                for (Cell cell : result.listCells()) {
                    // Bytes.toString decodes UTF-8, matching the Bytes.toBytes used when writing
                    String column = Bytes.toString(CellUtil.cloneQualifier(cell));
                    if (column.equals("clickNum")) {
                        value = Bytes.toString(CellUtil.cloneValue(cell));
                    }
                }
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        return value;
    }

    /** Writes (overwrites) the per-user count row; rowkey = user_id. */
    public void insertClickData(String tableName, String[] parameters) throws IOException {
        try (Table table = connect.getTable(TableName.valueOf(tableName))) {
            Put put = new Put(Bytes.toBytes(parameters[0]));
            put.addColumn(INFO, Bytes.toBytes("user_id"), Bytes.toBytes(parameters[0]));
            put.addColumn(INFO, Bytes.toBytes("clickNum"), Bytes.toBytes(parameters[1]));
            table.put(put);
        }
        System.out.println("add data Success!");
    }

    /** Writes one raw click record; rowkey = user_id + current time in milliseconds. */
    public void insertOriginalData(String tableName, String[] parameters) throws IOException {
        // column order matches the array returned by Json_redis.pressJson
        String[] columns = { "user_id", "click_element", "apk_type", "apk_version", "device_no",
                "device_name", "click_time", "in_time", "out_time", "location" };
        String rowkey = parameters[0] + System.currentTimeMillis();
        try (Table table = connect.getTable(TableName.valueOf(tableName))) {
            Put put = new Put(Bytes.toBytes(rowkey));
            for (int i = 0; i < columns.length; i++) {
                put.addColumn(INFO, Bytes.toBytes(columns[i]), Bytes.toBytes(parameters[i]));
            }
            table.put(put);
        }
        System.out.println("add data Success!");
    }
}
```

6: Program entry point

- SparkStreamingMain

- This class starts both the Spark Streaming job and the REST service.

```java
package com.streaming_hbase_rest;

import java.io.IOException;
import java.util.HashMap;
import java.util.Map;

import net.sf.json.JSONArray;
import net.sf.json.JSONObject;

import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaPairRDD;
import org.apache.spark.api.java.function.VoidFunction;
import org.apache.spark.streaming.Duration;
import org.apache.spark.streaming.api.java.JavaPairReceiverInputDStream;
import org.apache.spark.streaming.api.java.JavaStreamingContext;
import org.apache.spark.streaming.kafka.KafkaUtils;
import org.springframework.context.support.ClassPathXmlApplicationContext;

import scala.Tuple2;

import com.streaming_hbase_rest.utils.HbaseUtils;
import com.streaming_hbase_rest.utils.Json_redis;

/**
 * Created by Mr.wu on 2017/9/18.
 */
public class SparkStreamingMain {

    public static HbaseUtils hu = new HbaseUtils();
    public static Json_redis js = new Json_redis();

    public static void main(String[] args) throws IOException {
        System.out.println("Starting RestService");
        // start the Dubbo REST provider (embedded Tomcat on port 8889)
        ClassPathXmlApplicationContext context = new ClassPathXmlApplicationContext(
                "dubbo/spring-dubbo-accessrest-provider.xml");
        context.start();
        System.out.println("RestService provider started");
        startStreaming(); // blocks in awaitTermination()
        System.in.read();
    }

    public static void startStreaming() {
        System.out.println("Starting streaming >>>>>>>>>>>>>>>>>>");
        // ZooKeeper quorum used by the receiver-based Kafka consumer
        String zkQuorum = "master:2181,slave1:2181,slave2:2181,slave3:2181,dw:2181";
        // Kafka consumer group id
        String group = "group1";
        // topic names, comma-separated
        String topics = "countClickNumByUserid";
        // number of consumer threads per topic
        int numThreads = 2;

        SparkConf sparkConf = new SparkConf()
                .setAppName("CountClickNumByUser_hbase").setMaster("local[*]")
                .set("spark.streaming.receiver.writeAheadLog.enable", "true");
        // 10-second batch interval
        JavaStreamingContext jssc = new JavaStreamingContext(sparkConf, new Duration(10000));
        jssc.checkpoint("/sparkstreaming_rest/checkpoint"); // checkpoint directory (also used by the WAL)

        // topic -> number of consumer threads
        Map<String, Integer> topicmap = new HashMap<>();
        for (String topic : topics.split(",")) {
            topicmap.put(topic, numThreads);
        }

        System.out.println("Start reading from Kafka >>");
        // Alternative: direct stream, reads from the Kafka brokers and bypasses ZooKeeper
        // Set<String> topicSet = new HashSet<>();
        // topicSet.add("countClickNumByUserid");
        // Map<String, String> kafkaParam = new HashMap<>();
        // kafkaParam.put("metadata.broker.list",
        //         "master:9092,slave1:9092,slave2:9092,slave3:9092,dw:9092");
        // JavaPairInputDStream<String, String> lines = KafkaUtils.createDirectStream(
        //         jssc, String.class, String.class,
        //         StringDecoder.class, StringDecoder.class, kafkaParam, topicSet);

        // Receiver-based stream: offsets are tracked in ZooKeeper
        JavaPairReceiverInputDStream<String, String> lines =
                KafkaUtils.createStream(jssc, zkQuorum, group, topicmap);
        System.out.println("Receiver-based Kafka stream created >>");
        lines.print();

        lines.foreachRDD(new VoidFunction<JavaPairRDD<String, String>>() {
            @Override
            public void call(JavaPairRDD<String, String> rdd) throws Exception {
                rdd.foreach(new VoidFunction<Tuple2<String, String>>() {
                    @Override
                    public void call(Tuple2<String, String> record) throws Exception {
                        System.out.println("Processing record >>");
                        // message value: "<prefix>^<json>", the JSON holds a "data" array
                        JSONArray jsonArray = null;
                        try {
                            String payload = record._2().split("\\^")[1];
                            jsonArray = JSONObject.fromObject(payload).getJSONArray("data");
                        } catch (Exception e) {
                            e.printStackTrace();
                        }
                        if (jsonArray == null) {
                            return; // skip malformed messages instead of failing with a NullPointerException
                        }
                        for (int i = 0; i < jsonArray.size(); i++) {
                            JSONObject log_data = jsonArray.getJSONObject(i);
                            String[] parameters = js.pressJson(log_data);
                            // keep every raw click in the history table
                            hu.insertOriginalData("app_spark_streaming_history", parameters);
                            String user_id = parameters[0];
                            String click_element = parameters[1];
                            // only count records that have both a user_id and a click_element
                            if (!"null".equals(user_id) && !"null".equals(click_element)) {
                                // read the current count for this user, then write back count + 1
                                String historyClickNum = hu.getClickNumByUserid("app_clickNum", user_id);
                                System.out.println("user_id being processed: " + user_id
                                        + ", historical click count (historyClickNum): " + historyClickNum);
                                if ("null".equals(historyClickNum)) {
                                    System.out.println("No history yet");
                                    hu.insertClickData("app_clickNum", new String[] { user_id, "1" });
                                } else {
                                    System.out.println("History found, incrementing");
                                    int clickNum = Integer.parseInt(historyClickNum);
                                    System.out.println("Count before: " + clickNum);
                                    clickNum = clickNum + 1;
                                    System.out.println("Count after: " + clickNum);
                                    hu.insertClickData("app_clickNum",
                                            new String[] { user_id, String.valueOf(clickNum) });
                                }
                            }
                        }
                    }
                });
            }
        });

        jssc.start();
        try {
            jssc.awaitTermination();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
    }
}
```
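The per-record logic in startStreaming comes down to two steps. First, take the part of the Kafka message after "^". Second, read the user's current count, treat "null" as "no row yet", and write back count + 1. The sketch below replays those steps against an in-memory Map that stands in for the app_clickNum table, so the logic can be checked without Spark or HBase. `ClickCountSketch`, its methods and the sample message are hypothetical. One difference from the real code: it uses indexOf/substring, which keeps everything after the first "^", whereas `split("\\^")[1]` would stop at a second "^".

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: the click-counting logic, with a Map standing in for HBase's app_clickNum table.
final class ClickCountSketch {

    /** Stand-in for app_clickNum: user_id -> click count stored as a string. */
    private final Map<String, String> table = new HashMap<String, String>();

    /** Returns the JSON part after the first '^', or null if the message has no '^'. */
    static String payloadOf(String message) {
        int idx = message.indexOf('^');
        return idx < 0 ? null : message.substring(idx + 1);
    }

    /** Mirrors getClickNumByUserid: the string "null" means "no row". */
    String getClickNum(String userId) {
        String v = table.get(userId);
        return v == null ? "null" : v;
    }

    /** Mirrors the read-then-write update performed for each click. */
    void recordClick(String userId, String clickElement) {
        if ("null".equals(userId) || "null".equals(clickElement)) {
            return; // incomplete record: not counted
        }
        String history = getClickNum(userId);
        int next = "null".equals(history) ? 1 : Integer.parseInt(history) + 1;
        table.put(userId, String.valueOf(next));
    }

    public static void main(String[] args) {
        System.out.println(payloadOf("header^{\"data\":[]}")); // {"data":[]}
        ClickCountSketch counts = new ClickCountSketch();
        counts.recordClick("2353087", "btn_buy");
        counts.recordClick("2353087", "btn_cart");
        counts.recordClick("null", "btn_buy");              // ignored
        System.out.println(counts.getClickNum("2353087")); // 2
    }
}
```

Because the real job performs a separate get and put, two tasks that process clicks for the same user at the same time can both read the same old value, and one increment is lost. HBase's `Table.incrementColumnValue` performs the increment atomically on the server. However, it stores the counter as an 8-byte long rather than the string the current code writes, so switching to it would require migrating the existing rows.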

- That's all of the code. Next, I'll go through the problems I ran into during development.

7: Errors and bugs

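- When integrating Spark Streaming with the REST service, I hit a javax.servlet package conflict (I forgot to record the exact error). My fix was to move the dependency below to the very top of the pom's dependency list, which solved the problem. This presumably works because Maven puts dependencies on the classpath in declaration order, so the Servlet 3.1 classes are found before older javax.servlet classes pulled in by other dependencies.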

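```xml
<dependency>
  <groupId>javax.servlet</groupId>
  <artifactId>javax.servlet-api</artifactId>
  <version>3.1.0</version>
</dependency>
```

- When connecting to HBase, at first no error was reported at all. The program just kept waiting, and only after a long time did it fail with something like "could not find location….." (I don't remember the exact message). Looking at ZooKeeper on the server, there was no hbase node under the root. The HBase configuration turned out to contain: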

```xml
<property>
  <name>zookeeper.znode.parent</name>
  <value>/mnt/usr/local/zookeeper/hbase</value>
</property>
```

- Checking ZooKeeper again, I found that HBase had registered under /mnt/usr/local/zookeeper/hbase.

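- Embarrassing; I clearly still have a lot to learn!

- There are two ways to fix it:

- Option 1: change the HBase configuration to the following, then restart HBase so that it registers under /hbase, which is the path clients look for by default:

```xml
<property>
  <name>zookeeper.znode.parent</name>
  <value>/hbase</value>
</property>
```

- Option 2: when creating the HBase Configuration in code, set zookeeper.znode.parent to the path HBase actually registered under (not verified; I used option 1).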

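- Once the project finally ran, monitoring showed severe data loss: several million lines arrive each day, but only tens of thousands were processed. My first version differed from the code above because it applied flatMap directly, without foreachRDD (very embarrassing). Most of the demos I found online don't use foreachRDD either. If you're using this at work, always do the processing inside foreachRDD in production.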

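- While tracking down the data loss, I also tried both KafkaUtils.createStream and KafkaUtils.createDirectStream for reading from Kafka.

- The two approaches differ as follows:

- KafkaUtils.createStream is receiver-based and goes through ZooKeeper; the offsets are managed in ZooKeeper.

- KafkaUtils.createDirectStream bypasses ZooKeeper and reads from the Kafka brokers directly, so you have to track offsets yourself. Spark only keeps them in its own checkpoint.

- In my comparison, KafkaUtils.createStream with the WAL and checkpointing enabled was slightly slower than KafkaUtils.createDirectStream. With the WAL on, every received block is also written to the write-ahead log in the checkpoint directory.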
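"Track offsets yourself" with the direct approach means the following: after a batch has been written successfully, remember, per topic-partition, the offset to resume from, and advance it only after the writes succeed. In Spark's Kafka 0.8 integration, a batch's ranges are available by casting `rdd.rdd()` to `HasOffsetRanges`; each `OffsetRange` exposes `topic()`, `partition()` and `untilOffset()`. The in-memory `OffsetStore` below is a hypothetical stand-in for wherever you persist those values (ZooKeeper, HBase, ...). It is kept free of Spark so it runs on its own.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for an external offset store (ZooKeeper, HBase, ...).
final class OffsetStore {

    // "topic-partition" -> next offset to read
    private final Map<String, Long> next = new HashMap<String, Long>();

    private static String key(String topic, int partition) {
        return topic + "-" + partition;
    }

    /** Call only after every record below untilOffset has been written successfully. */
    void commit(String topic, int partition, long untilOffset) {
        Long current = next.get(key(topic, partition));
        // never move backwards, e.g. when an older batch is replayed after a failure
        if (current == null || untilOffset > current) {
            next.put(key(topic, partition), untilOffset);
        }
    }

    /** Offset to resume from after a restart; defaultOffset if nothing was committed yet. */
    long resumeFrom(String topic, int partition, long defaultOffset) {
        Long current = next.get(key(topic, partition));
        return current == null ? defaultOffset : current;
    }

    public static void main(String[] args) {
        OffsetStore store = new OffsetStore();
        store.commit("countClickNumByUserid", 0, 1500L);
        store.commit("countClickNumByUserid", 0, 1200L); // older batch replayed: ignored
        System.out.println(store.resumeFrom("countClickNumByUserid", 0, 0L)); // 1500
        System.out.println(store.resumeFrom("countClickNumByUserid", 1, 0L)); // 0
    }
}
```

On restart, the stored values can be handed to the createDirectStream variant that accepts explicit starting offsets (a `Map<TopicAndPartition, Long>` together with a message handler).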

Summary:

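I had never worked with Spark before, so I took many detours along the way. The demos I found online all had problems of one kind or another: they were learning demos, and very few came from production use. So once the project was done, I decided to write this post for others to use as a reference. It certainly still has shortcomings, and I welcome criticism and corrections.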

Finally, calling the REST service:

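- Call the service directly by URL, with no configuration needed. For example, query the click count of userid 2353087 at `http://<host>:8889/clickNum/2353087`.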

- Call it from code. Here I query every id listed in a properties file.

```java
import java.io.FileInputStream;
import java.io.InputStream;
import java.util.Properties;

import javax.ws.rs.client.Client;
import javax.ws.rs.client.ClientBuilder;
import javax.ws.rs.client.WebTarget;
import javax.ws.rs.core.Response;

public class TestConsumer {

    public static void main(String[] args) throws Exception {
        final String port = "8889";
        // aa.properties: every key is a userid to query
        Properties pro = new Properties();
        try (InputStream in = new FileInputStream("aa.properties")) {
            pro.load(in);
        }
        for (String key : pro.stringPropertyNames()) {
            System.out.println(key);
            getUser("http://localhost:" + port + "/clickNum/" + key + ".json");
            Thread.sleep(3000); // pause between requests
        }
    }

    private static void getUser(String url) {
        System.out.println("Getting user via " + url);
        Client client = ClientBuilder.newClient();
        try {
            WebTarget target = client.target(url);
            Response response = target.request().get();
            if (response.getStatus() != 200) {
                throw new RuntimeException("Failed with HTTP error code : " + response.getStatus());
            }
            System.out.println("Successfully got result: " + response.readEntity(String.class));
        } finally {
            client.close(); // release the client even if the request fails
        }
    }
}
```