Summary of SVM Principles and the SMO Algorithm

1 The Primal Problem

Notation: the data set is $\{(x_i,y_i)\}_{i=1}^N$, with $x_i\in \mathbb{R}^p$ and $y_i\in \{1,-1\}$.

The (hard-margin) SVM then becomes the following optimization problem:

$$(1)\quad\left\{\begin{array}{l}\min\limits_{w,b} \frac{1}{2}w^T w \\ \text{s.t. } y_i(w^Tx_i+b)\geq 1\Longleftrightarrow 1-y_i(w^Tx_i+b)\leq 0,\quad i=1,\dots,N \end{array}\right.$$

Let $\mathcal{L}(w,b,\alpha)=\frac{1}{2}w^Tw+\sum\limits_{i=1}^N \alpha_i(1-y_i(w^Tx_i+b))$,

where $\alpha_i\geq 0$ is the Lagrange multiplier of the constraint $1-y_i(w^Tx_i+b)\leq 0$.

We now show that problem (1) is equivalent to the following problem $(P)$:

$$(P)\quad\left\{\begin{array}{l}\min\limits_{w,b}\max\limits_{\alpha}\mathcal{L}(w,b,\alpha)\\ \text{s.t. } \alpha_i\geq 0\end{array}\right.$$

Proof: consider two cases.

If some constraint is violated, i.e. $1-y_i(w^Tx_i+b)> 0$ for some $i$, then, since the corresponding $\alpha_i\geq 0$ can be taken arbitrarily large,

$$\max\limits_{\alpha}\mathcal{L}(w,b,\alpha)=\frac{1}{2}w^Tw+\infty=\infty.$$

If every constraint holds, i.e. $1-y_i(w^Tx_i+b)\leq 0$ for all $i$, then each term $\alpha_i(1-y_i(w^Tx_i+b))\leq 0$, so the maximum is attained at $\alpha=0$ and $\max\limits_{\alpha}\mathcal{L}(w,b,\alpha)=\frac{1}{2}w^Tw+0=\frac{1}{2}w^Tw$.

Therefore $\min\limits_{w,b}\max\limits_{\alpha}\mathcal{L}(w,b,\alpha)=\min\limits_{w,b}\{\infty,\ \frac{1}{2}w^Tw\}$. As long as some feasible $(w,b)$ exists, the minimization never selects the infinite branch, so this equals the minimum of $\frac{1}{2}w^Tw$ over the feasible $(w,b)$, which is exactly problem (1). Q.E.D.

2 The Dual Problem

Weak duality: $\min\limits_{w,b}\max\limits_{\alpha}\mathcal{L}(w,b,\alpha)\geq \max\limits_{\alpha}\min\limits_{w,b}\mathcal{L}(w,b,\alpha)$ (this always holds).

Strong duality: $\min\limits_{w,b}\max\limits_{\alpha}\mathcal{L}(w,b,\alpha)= \max\limits_{\alpha}\min\limits_{w,b}\mathcal{L}(w,b,\alpha)$ (this holds only under additional conditions).

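Because the objective of $(P)$ is a convex quadratic function and the constraints are linear, strong duality holds for $(P)$ (provided the problem is feasible, i.e. the data are linearly separable).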

That is, the primal problem $(P)$ is equivalent to the following dual problem (D):

$$(D)\quad\left\{\begin{array}{l}\max\limits_{\alpha}\min\limits_{w,b}\mathcal{L}(w,b,\alpha)\\ \text{s.t. } \alpha_i\geq 0\end{array}\right.$$

3 Solving the Dual Problem

$$(D)\quad\left\{\begin{array}{l}\max\limits_{\alpha}\min\limits_{w,b}\mathcal{L}(w,b,\alpha)\\ \text{s.t. } \alpha_i\geq 0\end{array}\right.$$

First compute $\min\limits_{w,b}\mathcal{L}(w,b,\alpha)$ by setting the derivatives of $\mathcal{L}(w,b,\alpha)$ with respect to $w$ and $b$ to zero.

$\frac{\partial \mathcal{L}}{\partial b}=\frac{\partial}{\partial b}\left(-\sum\limits_{i=1}^N\alpha_i y_i b\right) =-\sum\limits_{i=1}^N\alpha_i y_i=0$, i.e. $\sum\limits_{i=1}^N\alpha_i y_i=0$.

Substituting this into $\mathcal{L}(w,b,\alpha)$ gives

$$\begin{array}{ll}\mathcal{L}(w,b,\alpha)&=\frac{1}{2}w^Tw+\sum\limits_{i=1}^N\alpha_i-\sum\limits_{i=1}^N\alpha_i y_i(w^Tx_i+b)\\ &=\frac{1}{2}w^Tw+\sum\limits_{i=1}^N\alpha_i-\sum\limits_{i=1}^N \alpha_i y_iw^Tx_i-\sum\limits_{i=1}^N\alpha_i y_ib\\ &=\frac{1}{2}w^Tw+\sum\limits_{i=1}^N\alpha_i-\sum\limits_{i=1}^N \alpha_i y_iw^Tx_i.\end{array}$$

$$\frac{\partial\mathcal{L}}{\partial w}=\frac{1}{2}\cdot 2\cdot w- \sum\limits_{i=1}^N\alpha_iy_ix_i=0\Longrightarrow w=\sum\limits_{i=1}^N\alpha_iy_ix_i.$$

Substituting this expression for $w$ back into $\mathcal{L}$ gives

$$\begin{array}{ll}\min\limits_{w,b}\mathcal{L}(w,b,\alpha)&=\frac{1}{2}\left(\sum\limits_{i=1}^N\alpha_i y_ix_i\right)^T \left(\sum\limits_{i=1}^N\alpha_i y_ix_i\right)-\sum\limits_{i=1}^N\alpha_i y_i \left(\sum\limits_{j=1}^N\alpha_j y_jx_j\right)^T x_i+\sum\limits_{i=1}^N\alpha_i\\ &=\frac{1}{2}\sum\limits_{i=1}^N\alpha_i y_ix_i^T\sum\limits_{j=1}^N \alpha_jy_jx_j-\sum\limits_{i=1}^N\alpha_i y_i\sum\limits_{j=1}^N \alpha_jy_jx_j^Tx_i+\sum\limits_{i=1}^N\alpha_i \\ &=\frac{1}{2}\sum\limits_{i=1}^N\sum\limits_{j=1}^N\alpha_i \alpha_j y_iy_jx_i^Tx_j-\sum\limits_{i=1}^N\sum\limits_{j=1}^N\alpha_i \alpha_j y_iy_jx_j^Tx_i+\sum\limits_{i=1}^N\alpha_i \\ &=-\frac{1}{2}\sum\limits_{i=1}^N\sum\limits_{j=1}^N\alpha_i \alpha_j y_iy_jx_i^Tx_j+\sum\limits_{i=1}^N\alpha_i, \end{array}$$

where we used $x_i^T x_j=x_j^T x_i$.

In summary, problem (D) is equivalent to the following problem $(D_1)$:

$$(D_1)\quad\left\{\begin{array}{l} \max\limits_{\alpha}-\frac{1}{2}\sum\limits_{i=1}^N\sum\limits_{j=1}^N\alpha_i \alpha_j y_iy_jx_i^Tx_j+\sum\limits_{i=1}^N\alpha_i \\ \text{s.t. } \alpha_i \geq 0\\ \qquad \sum\limits_{i=1}^N\alpha_i y_i=0\end{array}\right.$$
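The substitution steps above can be checked numerically. The sketch below is plain Python; the helper names `lagrangian`, `dual_objective` and `w_from_alpha` are illustrative, not from any library. It builds random data, chooses multipliers that satisfy $\sum_i\alpha_iy_i=0$, sets $w=\sum_i\alpha_iy_ix_i$, and checks that $\mathcal{L}(w,b,\alpha)$ equals the $(D_1)$ objective for several values of $b$, which is exactly why $b$ drops out of the dual.

```python
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def lagrangian(w, b, alpha, X, y):
    # L(w, b, alpha) = 1/2 w^T w + sum_i alpha_i * (1 - y_i (w^T x_i + b))
    return 0.5 * dot(w, w) + sum(
        a * (1 - yi * (dot(w, xi) + b)) for a, xi, yi in zip(alpha, X, y))

def dual_objective(alpha, X, y):
    # (D_1) objective: -1/2 sum_i sum_j alpha_i alpha_j y_i y_j x_i^T x_j + sum_i alpha_i
    n = len(alpha)
    quad = sum(alpha[i] * alpha[j] * y[i] * y[j] * dot(X[i], X[j])
               for i in range(n) for j in range(n))
    return -0.5 * quad + sum(alpha)

def w_from_alpha(alpha, X, y):
    # stationarity in w: w = sum_i alpha_i y_i x_i
    return [sum(a * yi * xi[k] for a, xi, yi in zip(alpha, X, y))
            for k in range(len(X[0]))]

random.seed(0)
X = [[random.uniform(-2, 2) for _ in range(3)] for _ in range(6)]
y = [1, 1, 1, -1, -1, -1]
alpha = [random.uniform(0.1, 1.0) for _ in range(6)]

# Rescale the negative-class multipliers so that sum_i alpha_i y_i = 0 holds
pos = sum(a for a, yi in zip(alpha, y) if yi == 1)
neg = sum(a for a, yi in zip(alpha, y) if yi == -1)
alpha = [a if yi == 1 else a * pos / neg for a, yi in zip(alpha, y)]

w = w_from_alpha(alpha, X, y)
for b in (-3.0, 0.0, 1.7):
    gap = lagrangian(w, b, alpha, X, y) - dual_objective(alpha, X, y)
    print(f"b = {b:5.1f}: L - dual objective = {gap:.2e}")
```

The printed gaps should be at rounding level for every $b$; if $\sum_i\alpha_iy_i\neq 0$, the gap would instead grow linearly in $b$.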

In practice, SVMs often use a kernel function to map the inputs into a high-dimensional feature space; the plain inner product is just one particular kernel. Replacing the inner product $x_i^Tx_j$ of $x_i,x_j$ with $K(x_i,x_j)=\phi(x_i)^T\phi(x_j)$, problem $(D_1)$ becomes:

$$(D_1')\quad\left\{\begin{array}{l} \max\limits_{\alpha}-\frac{1}{2}\sum\limits_{i=1}^N\sum\limits_{j=1}^N\alpha_i \alpha_j y_iy_jK(x_i,x_j)+\sum\limits_{i=1}^N\alpha_i \\ \text{s.t. } \alpha_i \geq 0\\ \qquad \sum\limits_{i=1}^N\alpha_i y_i=0\end{array}\right.$$

As before, we then have $w=\sum\limits_{i=1}^N\alpha_iy_i\phi(x_i)$.
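As a small illustration of $K(x_i,x_j)=\phi(x_i)^T\phi(x_j)$, the sketch below uses kernels that this note does not itself discuss and that are chosen here only as assumptions: the homogeneous quadratic kernel $(x^Tz)^2$ with its explicit 2-D feature map, and the Gaussian RBF kernel with a hypothetical `gamma` parameter. It checks that the quadratic kernel equals an inner product of explicit features and evaluates the $(D_1')$ objective from a Gram matrix.

```python
import math

def linear_kernel(x, z):
    return sum(a * b for a, b in zip(x, z))

def poly2_kernel(x, z):
    # homogeneous quadratic kernel K(x, z) = (x^T z)^2
    return linear_kernel(x, z) ** 2

def phi_poly2(x):
    # explicit feature map for 2-D inputs: phi(x)^T phi(z) = (x^T z)^2
    x1, x2 = x
    return [x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2]

def rbf_kernel(x, z, gamma=0.5):
    # Gaussian kernel exp(-gamma * ||x - z||^2); its feature map is infinite-dimensional
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))

def gram(X, kernel):
    # Gram matrix K_ij = K(x_i, x_j)
    return [[kernel(xi, xj) for xj in X] for xi in X]

def kernel_dual_objective(alpha, y, K):
    # (D_1') objective: -1/2 sum_ij alpha_i alpha_j y_i y_j K_ij + sum_i alpha_i
    n = len(alpha)
    quad = sum(alpha[i] * alpha[j] * y[i] * y[j] * K[i][j]
               for i in range(n) for j in range(n))
    return -0.5 * quad + sum(alpha)

x, z = [1.0, 2.0], [3.0, -1.0]
print(poly2_kernel(x, z), linear_kernel(phi_poly2(x), phi_poly2(z)))

X = [[1.0, 0.0], [-1.0, 0.0]]
y = [1, -1]
print(kernel_dual_objective([0.5, 0.5], y, gram(X, linear_kernel)))
print(kernel_dual_objective([0.5, 0.5], y, gram(X, rbf_kernel)))
```

With the linear kernel the $(D_1')$ objective reduces to the $(D_1)$ objective; only the Gram matrix changes when the kernel changes, which is what makes the substitution cheap.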

4 KKT Conditions

Consider a constrained optimization problem of the general form:

$$\left\{\begin{array}{l}\min f(x),\\ \text{s.t. } g_i(x)\leq 0,\ i=1,2,\cdots,m,\\ \qquad h_j(x)=0,\ j=1,2,\cdots,\ell.\end{array}\right.$$

Suppose $x^{*}$ is a local optimum of this problem and a suitable regularity condition (a constraint qualification) holds at $x^*$. Then there exist $\alpha, \mu$ such that

$$\begin{array}{r} \nabla f(x^*)+\sum\limits_{i=1}^m\alpha_i \nabla g_i(x^*)- \sum\limits_{j=1}^{\ell}\mu_j\nabla h_j(x^*)=0,\\ \alpha_i \geq 0,\ i=1,2,\cdots, m,\\ g_i(x^*)\leq 0,\ i=1,2,\cdots,m,\\ h_j(x^*)=0,\ j=1,2,\cdots,\ell,\\ \alpha_i g_i(x^*)=0,\ i=1,2,\cdots,m.\end{array}$$

These five conditions are called the Karush-Kuhn-Tucker (KKT) conditions.

For the SVM dual problem

$$(D)\quad\left\{\begin{array}{l}\max\limits_{\alpha}\min\limits_{w,b}\mathcal{L}(w,b,\alpha)\\ \text{s.t. } \alpha_i \geq 0\end{array}\right.$$

the KKT conditions associated with $\min\limits_{w,b}\mathcal{L}(w,b,\alpha)$ are:

$$\left\{\begin{array}{l} \frac{\partial \mathcal{L}}{\partial w}=0,\ \frac{\partial \mathcal{L}}{\partial b}=0\\ 1-y_i(w^Tx_i+b)\leq 0,\\ \alpha_i \geq 0,\\ \alpha_i (1-y_i(w^Tx_i+b))=0.\end{array}\right.$$

The last one is called the complementary slackness condition.

Note: for a point with $1-y_i(w^Tx_i+b)<0$, complementary slackness can hold only if $\alpha_i=0$. In other words, $\alpha_i=0$ for every non-support vector, so only the support vectors actually contribute to $w=\sum_i\alpha_iy_ix_i$ and hence to the decision function.
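A minimal KKT checker for the hard-margin case is sketched below, assuming a candidate $(w,b,\alpha)$ is already given; the function names and the tolerance `tol` are hypothetical. The toy data set has two points exactly on the margin and one point far from it, so only the first two should carry a nonzero $\alpha$.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def check_hard_margin_kkt(w, b, alpha, X, y, tol=1e-8):
    """Check the hard-margin KKT conditions for a candidate (w, b, alpha)."""
    g = [1 - yi * (dot(w, xi) + b) for xi, yi in zip(X, y)]  # g_i = 1 - y_i(w^T x_i + b)
    w_sum = [sum(a * yi * xi[k] for a, xi, yi in zip(alpha, X, y)) for k in range(len(w))]
    return {
        "stationarity_w": all(abs(wk - sk) <= tol for wk, sk in zip(w, w_sum)),
        "stationarity_b": abs(sum(a * yi for a, yi in zip(alpha, y))) <= tol,
        "primal_feasible": all(gi <= tol for gi in g),
        "dual_feasible": all(a >= -tol for a in alpha),
        "complementary_slackness": all(abs(a * gi) <= tol for a, gi in zip(alpha, g)),
    }

def support_vector_indices(alpha, tol=1e-8):
    # by complementary slackness, only points on the margin can have alpha_i > 0
    return [i for i, a in enumerate(alpha) if a > tol]

X = [[1.0, 0.0], [-1.0, 0.0], [3.0, 1.0]]
y = [1, -1, 1]
w, b = [1.0, 0.0], 0.0
alpha = [0.5, 0.5, 0.0]
print(check_hard_margin_kkt(w, b, alpha, X, y))
print(support_vector_indices(alpha))  # → [0, 1]
```

Giving the third point a positive multiplier (for example `alpha = [0.5, 0.5, 0.1]`) breaks complementary slackness, since that point has $1-y_i(w^Tx_i+b)=-2$.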

We have already found $w^*=\sum\limits_{i=1}^N\alpha_iy_ix_i$; next we compute $b$.

From the analysis above, there is a support vector $(x_k,y_k)$ (any $k$ with $\alpha_k>0$) satisfying $1-y_k(w^Tx_k+b)=0$, i.e. $y_k(w^Tx_k+b)=1$. Multiplying both sides by $y_k$ and using $y_k^2=1$ gives $w^Tx_k+b=y_k$.

Hence $b^*=y_k-w^Tx_k=y_k-\sum\limits_{i=1}^N\alpha_iy_ix_i^Tx_k$.

Notes: (1) $\alpha_i$ is zero on every non-support vector; nonzero $\alpha_i$ occur only at support vectors.

(2) With a kernel, $w^*=\sum\limits_{i=1}^N\alpha_iy_i\phi(x_i)$ and $b^*=y_k-\sum\limits_{i=1}^N\alpha_iy_iK(x_i,x_k)$.

We now have expressions for $w^*$ and $b^*$, but both involve the unknown $\alpha$, so $\alpha$ must be computed first. This is what the SMO algorithm is for.
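Once the multipliers are known, $w^*$, $b^*$ and the decision function follow directly from the formulas above. This is a sketch with hypothetical helper names, on a two-point toy problem whose dual optimum is $\alpha=(0.5,0.5)$. As an assumed design choice (not from the text above), `recover_b` averages $b^*$ over all support vectors instead of using a single $k$, which is a common way to reduce round-off; in exact arithmetic every support vector gives the same value.

```python
def linear_kernel(x, z):
    return sum(a * b for a, b in zip(x, z))

def recover_w(alpha, X, y):
    # w* = sum_i alpha_i y_i x_i (only meaningful for the linear kernel)
    return [sum(a * yi * xi[k] for a, xi, yi in zip(alpha, X, y))
            for k in range(len(X[0]))]

def recover_b(alpha, X, y, kernel, tol=1e-8):
    # b* = y_k - sum_i alpha_i y_i K(x_i, x_k) for each support vector k (alpha_k > 0),
    # averaged over all support vectors
    sv = [k for k, a in enumerate(alpha) if a > tol]
    if not sv:
        raise ValueError("no support vector: all alpha_i are zero")
    vals = [y[k] - sum(a * yi * kernel(xi, X[k]) for a, xi, yi in zip(alpha, X, y))
            for k in sv]
    return sum(vals) / len(vals)

def decision_function(x, alpha, X, y, b, kernel):
    # f(x) = sum_i alpha_i y_i K(x_i, x) + b; terms with alpha_i = 0 vanish
    return sum(a * yi * kernel(xi, x) for a, xi, yi in zip(alpha, X, y)) + b

X = [[1.0, 0.0], [-1.0, 0.0]]
y = [1, -1]
alpha = [0.5, 0.5]  # dual optimum for this two-point problem
w = recover_w(alpha, X, y)
b = recover_b(alpha, X, y, linear_kernel)
print(w, b)                                                          # → [1.0, 0.0] 0.0
print(decision_function([2.0, 5.0], alpha, X, y, b, linear_kernel))  # → 2.0
```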

5 Soft-Margin SVM

After introducing slack variables $\xi_i$, the model becomes:

$$(2)\quad\left\{\begin{array}{l}\min\limits_{w,b,\xi} \frac{1}{2}w^T w+C\sum\limits_{i=1}^N\xi_i \\ \text{s.t. } y_i(w^Tx_i+b)\geq 1-\xi_i\\ \qquad \xi_i\geq 0 \end{array}\right.$$

Using the Lagrangian, we rewrite it as:

$$(P_2)\quad\left\{\begin{array}{l}\min\limits_{w,b,\xi}\max\limits_{\alpha,\mu}\mathcal{L}(w,b,\xi,\alpha,\mu) =\frac{1}{2}w^Tw+C\sum\limits_{i=1}^N\xi_i-\sum\limits_{i=1}^N\alpha_i (y_i(w^Tx_i+b)-1+\xi_i)-\sum\limits_{i=1}^N\mu_i\xi_i\\ \text{s.t. } \forall i,\ \alpha_i\geq 0\\ \qquad \forall i,\ \mu_i\geq 0 \end{array}\right.$$

The corresponding dual problem is:

$$(D_2)\quad\left\{\begin{array}{l}\max\limits_{\alpha,\mu}\min\limits_{w,b,\xi}\mathcal{L}(w,b,\xi,\alpha,\mu) =\frac{1}{2}w^Tw+C\sum\limits_{i=1}^N\xi_i-\sum\limits_{i=1}^N\alpha_i (y_i(w^Tx_i+b)-1+\xi_i)-\sum\limits_{i=1}^N\mu_i\xi_i\\ \text{s.t. } \forall i,\ \alpha_i\geq 0\\ \qquad \forall i,\ \mu_i\geq 0 \end{array}\right.$$

where the KKT conditions for $\min\limits_{w,b,\xi}\mathcal{L}(w,b,\xi,\alpha,\mu)$ are:

$$\left\{\begin{array}{l} \frac{\partial \mathcal{L}}{\partial w}=0,\ \frac{\partial \mathcal{L}}{\partial b}=0,\ \frac{\partial \mathcal{L}}{\partial \xi_i}=0\\ y_i(w^Tx_i+b)-1+\xi_i\geq 0\\ \xi_i\geq 0\\ \alpha_i\geq 0,\ \mu_i\geq 0\\ \alpha_i(y_i(w^Tx_i+b)-1+\xi_i)=0\\ \mu_i\xi_i=0\end{array}\right.$$

The first line of conditions gives:

$w=\sum\limits_{i=1}^N\alpha_iy_ix_i$, $\ 0=\sum\limits_{i=1}^N\alpha_iy_i$, $\ \alpha_i=C-\mu_i$ (the last one from $\frac{\partial\mathcal{L}}{\partial\xi_i}=C-\alpha_i-\mu_i=0$).

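Substituting these equalities (the terms in $\xi_i$ cancel because $C-\alpha_i-\mu_i=0$, and $\mu_i=C-\alpha_i\geq 0$ gives $\alpha_i\leq C$) yields the equivalent problem: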

$$(D_2')\quad\left\{\begin{array}{l} \max\limits_{\alpha}-\frac{1}{2}\sum\limits_{i=1}^N\sum\limits_{j=1}^N\alpha_i\alpha_j y_iy_jx_i^Tx_j+\sum\limits_{i=1}^N\alpha_i\\ \text{s.t. } 0\leq \alpha_i\leq C\\ \qquad \sum\limits_{i=1}^N\alpha_iy_i=0\end{array}\right.$$

Let $f(x)=w^Tx+b$. Reasoning backwards from these results gives:

$$\begin{array}{l} \alpha_i=0\rightarrow \mu_i=C\rightarrow \xi_i=0\rightarrow y_if(x_i)\geq 1\\ 0<\alpha_i<C\rightarrow \mu_i\neq 0\rightarrow \xi_i=0\rightarrow \alpha_i(y_if(x_i)-1)=0\rightarrow y_if(x_i)=1\\ \alpha_i=C\rightarrow \mu_i=0\rightarrow \xi_i\geq 0\rightarrow \alpha_i(y_if(x_i)-1+\xi_i)=0\rightarrow y_if(x_i)\leq 1. \end{array}$$
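The three cases above translate directly into a per-point test on $\alpha_i$ and the margin value $y_if(x_i)$. The sketch below uses hypothetical helper names, and the margins are made-up illustrative numbers rather than the output of a trained model. A check of this kind is one way to flag points that violate the optimality conditions, which is what an SMO-style solver needs to look for.

```python
def margin_category(alpha_i, C, tol=1e-8):
    # the three cases derived above, keyed on the value of alpha_i
    if alpha_i <= tol:
        return "alpha=0"     # mu_i = C, xi_i = 0   -> y_i f(x_i) >= 1
    if alpha_i >= C - tol:
        return "alpha=C"     # mu_i = 0, xi_i >= 0  -> y_i f(x_i) <= 1
    return "0<alpha<C"       # mu_i > 0, xi_i = 0   -> y_i f(x_i) = 1

def satisfies_case(alpha_i, margin_i, C, tol=1e-6):
    """margin_i is y_i * f(x_i); True if it matches the case implied by alpha_i."""
    case = margin_category(alpha_i, C)
    if case == "alpha=0":
        return margin_i >= 1 - tol
    if case == "alpha=C":
        return margin_i <= 1 + tol
    return abs(margin_i - 1) <= tol

C = 1.0
alphas = [0.0, 0.3, 1.0, 0.0]
margins = [1.7, 1.0, 0.4, 0.6]   # hypothetical values of y_i f(x_i)
for a, m in zip(alphas, margins):
    print(margin_category(a, C), satisfies_case(a, m, C))
```

The last point violates the conditions: its multiplier is zero although it lies inside the margin ($y_if(x_i)=0.6<1$).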

6 The SMO Algorithm

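The remaining question is how to solve the following optimization problem efficiently; it is $(D_2')$ rewritten as an equivalent minimization (the sign of the objective is flipped):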

$$(D_3)\quad\left\{\begin{array}{l} \min\limits_{\alpha}\frac{1}{2}\sum\limits_{i=1}^N\sum\limits_{j=1}^N y_iy_jx_i^Tx_j\alpha_i\alpha_j-\sum\limits_{i=1}^N\alpha_i\\ \text{s.t. } 0\leq \alpha_i\leq C\\ \qquad \sum\limits_{i=1}^Ny_i\alpha_i=0\end{array}\right.$$

In kernel form:

$$(D_3')\quad\left\{\begin{array}{l} \min\limits_{\alpha}\frac{1}{2}\sum\limits_{i=1}^N\sum\limits_{j=1}^N y_iy_jK(x_i,x_j)\alpha_i\alpha_j-\sum\limits_{i=1}^N\alpha_i\\ \text{s.t. } 0\leq \alpha_i\leq C\\ \qquad \sum\limits_{i=1}^Ny_i\alpha_i=0\end{array}\right.$$

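General-purpose solvers for inequality-constrained convex problems, such as interior-point methods, are one option, but they are expensive here: they need to store an $N\times N$ matrix, which is infeasible when memory is limited; each full-gradient computation is costly; and they can run into numerical issues. SMO is a very effective method for this quadratic program. At each step it picks two Lagrange multipliers $\alpha_i,\alpha_j$, minimizes the objective with respect to them, and updates them. With all other multipliers held fixed, $\alpha_i$ and $\alpha_j$ satisfy: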

$$\sum\limits_{k=1}^N\alpha_ky_k=0\rightarrow \alpha_iy_i+\alpha_jy_j=-\sum\limits_{k\neq i,j}\alpha_ky_k.$$

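Thus $\alpha_i$ can be expressed in terms of $\alpha_j$, and the problem becomes a one-variable quadratic problem, which is very cheap to solve. SMO therefore consists of two parts: an outer loop that selects the two Lagrange multipliers, and an inner step that solves the two-variable quadratic problem. We first solve the two-variable problem and then address how the multipliers are selected.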
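To make the $N\times N$ memory argument concrete, here is a back-of-the-envelope calculation, assuming each kernel-matrix entry is stored as an 8-byte float64 (the function name is hypothetical). At $N=100{,}000$ samples the dense matrix alone needs $10^{10}\times 8$ bytes, about 80 GB, while an SMO step only touches a few rows at a time.

```python
def dense_kernel_matrix_bytes(n_samples, bytes_per_entry=8):
    # storage for a dense N x N matrix of 8-byte (float64) entries
    return n_samples * n_samples * bytes_per_entry

for n in (1_000, 10_000, 100_000):
    print(f"N = {n:>7,}: {dense_kernel_matrix_bytes(n) / 1e9:,.3f} GB")
```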

6.1 Computing $\alpha_i,\alpha_j$

From the constraint $\sum\limits_{i=1}^N\alpha_iy_i=0$ of the SVM dual we get:

$$\alpha_1y_1+\alpha_2y_2=-\sum\limits_{j=3}^N\alpha_jy_j\triangleq \zeta\rightarrow \alpha_1=(\zeta-\alpha_2y_2)y_1\quad(\text{using } y_1^2=1).$$

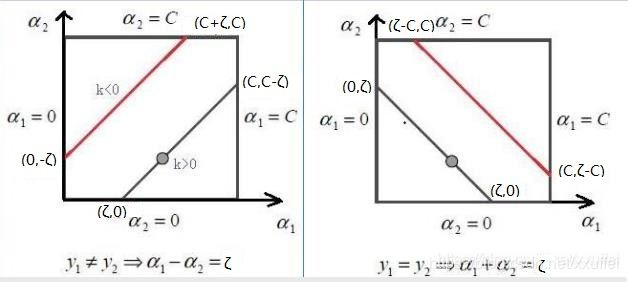

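To solve the two-multiplier problem, SMO first determines the feasible range of the two multipliers and then solves the quadratic problem within that range. For convenience, we now write 1, 2 instead of $i, j$. The two variables are related by $\alpha_1y_1+\alpha_2y_2=\zeta$; the figure above illustrates this relationship: both multipliers lie in the box $[0,C]\times[0,C]$ and move along this line.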

We now determine the range of $\alpha_2$ in the two-variable optimization. If $y_1\neq y_2$, the lower bound $L$ and upper bound $H$ of $\alpha_2$ are:

$$L=\max(0,\alpha_2-\alpha_1),\quad H=\min(C,C+\alpha_2-\alpha_1).\qquad(\text{Eq. 13 in Platt's paper})$$

Conversely, if $y_1=y_2$, the lower bound $L$ and upper bound $H$ of $\alpha_2$ are:

$$L=\max(0,\alpha_2+\alpha_1-C),\quad H=\min(C,\alpha_2+\alpha_1).\qquad(\text{Eq. 14 in Platt's paper})$$
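The two bound formulas can be sketched as a small helper; the names `alpha2_bounds` and `alpha1_from_alpha2` are hypothetical. The loop also recovers $\alpha_1$ from the constraint $\alpha_1y_1+\alpha_2y_2=\zeta$ at both endpoints, to show that clipping $\alpha_2$ to $[L,H]$ keeps $\alpha_1$ inside $[0,C]$ as well.

```python
def alpha2_bounds(alpha1, alpha2, y1, y2, C):
    """Feasible interval [L, H] for the new alpha_2 (Eqs. 13 and 14 in Platt's paper)."""
    if y1 != y2:
        # alpha_2 - alpha_1 is constant along the constraint line
        return max(0.0, alpha2 - alpha1), min(C, C + alpha2 - alpha1)
    # y1 == y2: alpha_1 + alpha_2 is constant along the constraint line
    return max(0.0, alpha2 + alpha1 - C), min(C, alpha2 + alpha1)

def alpha1_from_alpha2(alpha1_old, alpha2_old, alpha2_new, y1, y2):
    # keeps alpha_1 y_1 + alpha_2 y_2 = zeta unchanged
    return alpha1_old + y1 * y2 * (alpha2_old - alpha2_new)

C = 1.0
for a1, a2, y1, y2 in [(0.25, 0.5, 1, -1), (0.75, 0.5, 1, 1), (0.25, 0.5, -1, -1)]:
    L, H = alpha2_bounds(a1, a2, y1, y2, C)
    ends = [alpha1_from_alpha2(a1, a2, e, y1, y2) for e in (L, H)]
    print((L, H), ends)  # alpha_1 at both endpoints stays inside [0, C]
```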

For problem $(D_3')$ above:

$$(D_3')\quad\left\{\begin{array}{l} \min\limits_{\alpha}\frac{1}{2}\sum\limits_{i=1}^N\sum\limits_{j=1}^N y_iy_jK(x_i,x_j)\alpha_i\alpha_j-\sum\limits_{i=1}^N\alpha_i\\ \text{s.t. } 0\leq \alpha_i\leq C\\ \qquad \sum\limits_{i=1}^Ny_i\alpha_i=0\end{array}\right.$$

First, split the objective into the terms that involve $\alpha_1$ and $\alpha_2$ and the terms that do not:

$$\begin{array}{rl}\min\Phi(\alpha_1,\alpha_2)&=\frac{1}{2}K_{11}\alpha_1^2+\frac{1}{2}K_{22}\alpha_2^2+y_1y_2K_{12}\alpha_1\alpha_2-(\alpha_1+\alpha_2)+\alpha_1y_1\sum\limits_{i=3}^N\alpha_iy_iK_{1i}+\alpha_2y_2\sum\limits_{j=3}^N\alpha_jy_jK_{2j}+c\\ &=\frac{1}{2}K_{11}\alpha_1^2+\frac{1}{2}K_{22}\alpha_2^2+y_1y_2K_{12}\alpha_1\alpha_2-(\alpha_1+\alpha_2)+\alpha_1y_1v_1+\alpha_2y_2v_2+c\qquad (1)\end{array}$$

where $K_{ij}=K(x_i,x_j)$, $v_i=\sum\limits_{j=3}^N\alpha_jy_jK_{ij}\ (i=1,2)$, and $c$ collects the terms that involve neither $\alpha_1$ nor $\alpha_2$; it is treated as a constant in this step.

Replacing every $\alpha_1$ in the objective by its expression in terms of $\alpha_2$, namely $\alpha_1=(\zeta-\alpha_2y_2)y_1$, gives:

$$\begin{array}{rl}\min\Phi(\alpha_2)=&\frac{1}{2}K_{11}(\zeta-\alpha_2y_2)^2+\frac{1}{2}K_{22}\alpha_2^2+y_2K_{12}\alpha_2(\zeta-\alpha_2y_2)-\\ &y_1(\zeta-\alpha_2y_2)-\alpha_2+(\zeta-\alpha_2y_2)\sum\limits_{i=3}^N\alpha_iy_iK_{1i}+\alpha_2y_2\sum\limits_{j=3}^N\alpha_jy_jK_{2j}+c\\ =&\frac{1}{2}K_{11}(\zeta-\alpha_2y_2)^2+\frac{1}{2}K_{22}\alpha_2^2+y_2K_{12}\alpha_2(\zeta-\alpha_2y_2)-\\ &y_1(\zeta-\alpha_2y_2)-\alpha_2+(\zeta-\alpha_2y_2)v_1+\alpha_2y_2v_2+c.\qquad (2) \end{array}$$

The objective now contains only one variable, $\alpha_2$. Taking its derivative with respect to $\alpha_2$ gives:

$$\begin{array}{rl}\frac{\partial \Phi(\alpha_2)}{\partial \alpha_2}=&(K_{11}+K_{22}-2K_{12})\alpha_2-K_{11}\zeta y_2+K_{12}\zeta y_2+y_1y_2-1-y_2\sum\limits_{i=3}^N\alpha_iy_iK_{1i}+y_2\sum\limits_{j=3}^N\alpha_jy_jK_{2j}\\ =&(K_{11}+K_{22}-2K_{12})\alpha_2-K_{11}\zeta y_2+K_{12}\zeta y_2+y_1y_2-1-y_2v_1+y_2v_2\qquad (3)\end{array}$$
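Formula (3) is easy to get wrong by a sign, so here is a finite-difference check. It is a sketch under stated assumptions: a random linear-kernel Gram matrix, arbitrary multipliers, Python's 0-based indices 0 and 1 standing for the text's 1 and 2, and hypothetical helper names (`phi_of_alpha2`, `dphi_formula`). $\Phi(\alpha_2)$ is evaluated as the full $(D_3')$ objective, which differs from (2) only by the constant $c$; because it is quadratic, a central difference should match (3) up to rounding.

```python
import random

def dual_obj(alpha, y, K):
    # (D_3') objective: 1/2 sum_ij y_i y_j K_ij alpha_i alpha_j - sum_i alpha_i
    n = len(alpha)
    return 0.5 * sum(y[i] * y[j] * K[i][j] * alpha[i] * alpha[j]
                     for i in range(n) for j in range(n)) - sum(alpha)

def phi_of_alpha2(a2, alpha, y, K):
    # fix alpha_3..alpha_N and eliminate alpha_1 via alpha_1 = (zeta - alpha_2 y_2) y_1
    zeta = alpha[0] * y[0] + alpha[1] * y[1]
    trial = list(alpha)
    trial[1] = a2
    trial[0] = (zeta - a2 * y[1]) * y[0]
    return dual_obj(trial, y, K)

def dphi_formula(a2, alpha, y, K):
    # right-hand side of (3)
    zeta = alpha[0] * y[0] + alpha[1] * y[1]
    v1 = sum(alpha[j] * y[j] * K[0][j] for j in range(2, len(alpha)))
    v2 = sum(alpha[j] * y[j] * K[1][j] for j in range(2, len(alpha)))
    eta = K[0][0] + K[1][1] - 2 * K[0][1]
    return (eta * a2 - K[0][0] * zeta * y[1] + K[0][1] * zeta * y[1]
            + y[0] * y[1] - 1 - y[1] * v1 + y[1] * v2)

random.seed(1)
pts = [[random.gauss(0, 1) for _ in range(2)] for _ in range(5)]
K = [[sum(p * q for p, q in zip(u, v)) for v in pts] for u in pts]  # linear-kernel Gram
y = [1, -1, 1, -1, 1]
alpha = [random.uniform(0, 1) for _ in range(5)]

a2, h = 0.37, 1e-5
numeric = (phi_of_alpha2(a2 + h, alpha, y, K) - phi_of_alpha2(a2 - h, alpha, y, K)) / (2 * h)
print(numeric, dphi_formula(a2, alpha, y, K))
```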

We defined the SVM hyperplane as $f(x)=w^Tx+b$; substituting the kernel-form expression derived for $w$ gives

$f(x)=\sum\limits_{i=1}^N\alpha_iy_iK(x_i,x)+b$. Here $f(x_i)$ is the predicted value for sample $x_i$ and $y_i$ is its true label; define the error between them as $E_i=f(x_i)-y_i$.

Recalling that $v_i=\sum\limits_{j=3}^N\alpha_jy_jK_{ij}$ for $i=1,2$, we therefore have

$$\begin{array}{rl} v_1&=f(x_1)-\sum\limits_{j=1}^2y_j\alpha_jK_{1j}-b=f(x_1)-y_1\alpha_1K_{11}-y_2\alpha_2K_{12}-b\qquad (4)\\ v_2&=f(x_2)-\sum\limits_{j=1}^2y_j\alpha_jK_{2j}-b=f(x_2)-y_1\alpha_1K_{21}-y_2\alpha_2K_{22}-b\qquad (5)\end{array}$$
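A quick consistency check of (4) and (5): computing $v_i$ from its definition and from $f(x_i)$ should give the same number. The Gram matrix, multipliers and $b$ below are arbitrary illustrative values, the helper names are hypothetical, and indices 0 and 1 stand for the text's 1 and 2. The sketch also computes the errors $E_i=f(x_i)-y_i$, which appear later in the $\alpha_2$ update.

```python
def decision_values(alpha, y, K, b):
    # f(x_i) = sum_j alpha_j y_j K_ji + b for every training point
    n = len(alpha)
    return [sum(alpha[j] * y[j] * K[j][i] for j in range(n)) + b for i in range(n)]

def errors(alpha, y, K, b):
    # E_i = f(x_i) - y_i
    return [f - yi for f, yi in zip(decision_values(alpha, y, K, b), y)]

def v_direct(i, alpha, y, K):
    # definition: v_i = sum_{j>=3} alpha_j y_j K_ij (0-based: j >= 2), for i in {0, 1}
    return sum(alpha[j] * y[j] * K[i][j] for j in range(2, len(alpha)))

def v_via_f(i, alpha, y, K, b):
    # equations (4)/(5): v_i = f(x_i) - y_1 alpha_1 K_i1 - y_2 alpha_2 K_i2 - b
    f_i = decision_values(alpha, y, K, b)[i]
    return f_i - y[0] * alpha[0] * K[i][0] - y[1] * alpha[1] * K[i][1] - b

pts = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 2.0]]
K = [[sum(p * q for p, q in zip(u, v)) for v in pts] for u in pts]  # linear kernel
y = [1, -1, 1, -1]
alpha = [0.2, 0.4, 0.1, 0.3]
b = 0.5
print(errors(alpha, y, K, b))
print([(v_direct(i, alpha, y, K), v_via_f(i, alpha, y, K, b)) for i in (0, 1)])
```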

Denote the multipliers before the update by $\alpha_1^{old},\alpha_2^{old}$ and after the update by $\alpha_1^{new},\alpha_2^{new}$. From the definition of $\zeta$ and from (4), (5), with $f$ evaluated at the old multipliers, we get:

$$\left\{\begin{array}{l} \alpha_1^{old}y_1+\alpha_2^{old}y_2=\zeta\\ \sum\limits_{i=3}^N\alpha_iy_iK_{1i}=f(x_1)-y_1\alpha_1^{old}K_{11}-y_2\alpha_2^{old}K_{12}-b\\ \sum\limits_{j=3}^N\alpha_jy_jK_{2j}=f(x_2)-y_1\alpha_1^{old}K_{21}-y_2\alpha_2^{old}K_{22}-b\end{array}\right.$$

Substituting these three conditions into the derivative and setting it to zero gives:

$$\begin{array}{rl}\frac{\partial \Phi(\alpha_2)}{\partial \alpha_2}&=(K_{11}+K_{22}-2K_{12})\alpha_2-(K_{11}-K_{12})(\alpha_1^{old}y_1+\alpha_2^{old}y_2)y_2\\ &\quad+y_1y_2-1-y_2(f(x_1)-y_1\alpha_1^{old}K_{11}-y_2\alpha_2^{old}K_{12}-b)\\ &\quad+y_2(f(x_2)-y_1\alpha_1^{old}K_{21}-y_2\alpha_2^{old}K_{22}-b)=0.\end{array}$$

After simplification:

$$(K_{11}+K_{22}-2K_{12})\alpha_2=(K_{11}+K_{22}-2K_{12})\alpha_2^{old}+y_2[(f(x_1)-y_1)-(f(x_2)-y_2)].$$

Writing $\eta=K_{11}+K_{22}-2K_{12}$ and using $E_i=f(x_i)-y_i$, and assuming $\eta>0$, we obtain the update for $\alpha_2$:

$$\alpha_2^{new}=\alpha_2^{old}+\frac{y_2(E_1-E_2)}{\eta}.$$

However, $\alpha_2$ must also stay within its feasible range $[L,H]$, so the final new value is:

$$\alpha_2^{new,clip}=\left\{\begin{array}{rl} H, & \text{if } \alpha_2^{new}\geq H\\ \alpha_2^{new}, & \text{if } L< \alpha_2^{new}< H\\ L, & \text{if } \alpha_2^{new}\leq L\end{array}\right.\qquad (6)$$

From $\alpha_1^{old}y_1+\alpha_2^{old}y_2=\alpha_1^{new}y_1+\alpha_2^{new,clip}y_2=\zeta$ we obtain the update for $\alpha_1$:

$$\alpha_1^{new}=\alpha_1^{old}+y_1y_2(\alpha_2^{old}-\alpha_2^{new,clip})\qquad (7)$$
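Putting the pieces of 6.1 together, one analytic SMO step on a pair of multipliers can be sketched as follows. This is a minimal sketch, not Platt's full implementation: `smo_pair_step` is a hypothetical name, it covers only the case $\eta>0$ (returning `None` otherwise), and it deliberately does not update the threshold $b$, since that rule is not derived here. The toy problem is the two-point set $x_1=(1,0)$, $x_2=(-1,0)$.

```python
def smo_pair_step(i1, i2, alpha, y, K, b, C, eps=1e-12):
    """One analytic SMO update of (alpha[i1], alpha[i2]) for the case eta > 0.

    Returns the new pair, or None when eta <= 0 or L == H. b is NOT updated.
    """
    n = len(alpha)

    def f(i):
        # f(x_i) with the current (old) multipliers
        return sum(alpha[j] * y[j] * K[j][i] for j in range(n)) + b

    a1, a2, y1, y2 = alpha[i1], alpha[i2], y[i1], y[i2]
    E1, E2 = f(i1) - y1, f(i2) - y2
    if y1 != y2:
        L, H = max(0.0, a2 - a1), min(C, C + a2 - a1)
    else:
        L, H = max(0.0, a2 + a1 - C), min(C, a2 + a1)
    if H - L < eps:
        return None
    eta = K[i1][i1] + K[i2][i2] - 2 * K[i1][i2]
    if eta <= 0:
        return None                            # boundary case, see 6.2
    a2_new = a2 + y2 * (E1 - E2) / eta         # unconstrained minimizer along the line
    a2_clip = min(H, max(L, a2_new))           # equation (6)
    a1_new = a1 + y1 * y2 * (a2 - a2_clip)     # equation (7)
    return a1_new, a2_clip

K = [[1.0, -1.0], [-1.0, 1.0]]   # linear-kernel Gram matrix of x_1 = (1, 0), x_2 = (-1, 0)
y = [1, -1]
print(smo_pair_step(0, 1, [0.0, 0.0], y, K, b=0.0, C=10.0))  # → (0.5, 0.5)
print(smo_pair_step(0, 1, [0.0, 0.0], y, K, b=0.0, C=0.3))   # → (0.3, 0.3)
```

With $C=10$ the unconstrained step lands on the dual optimum $(0.5,0.5)$ in one move; with $C=0.3$ it is clipped to $H=0.3$, and $\alpha_1$ follows through (7), so $\alpha_1y_1+\alpha_2y_2$ stays at $0$ in both cases.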

6.2 Boundary Cases for $\alpha_1,\alpha_2$

In most cases $\eta=K_{11}+K_{22}-2K_{12}>0$. In the following cases, however, $\alpha_2^{new}$ must take one of the boundary values $L$ or $H$:

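- $\eta<0$: this can happen when the kernel $K$ does not satisfy Mercer's condition (Mercer's theorem: any symmetric positive semidefinite function can be used as a kernel), so the kernel matrix is not positive semidefinite;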

- $\eta=0$: for example, when samples $x_1$ and $x_2$ have identical input features, since then $K_{11}=K_{22}=K_{12}$;

Another way to see this: differentiating (3) once more with respect to $\alpha_2$, i.e. taking the second derivative of $\Phi(\alpha_2)$ in (2), gives exactly $\eta=K_{11}+K_{22}-2K_{12}$. Hence:

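- When $\eta<0$, the objective is concave in $\alpha_2$, so it has no interior minimum and its minimum over $[L,H]$ is attained at an endpoint.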

- When $\eta=0$, the objective is linear in $\alpha_2$ (monotone or constant), so its minimum is again attained at an endpoint.

The computation is as follows:

Substitute $\alpha_2^{new}=L$ and $\alpha_2^{new}=H$ into (7) to obtain $\alpha_1^{new}=L_1$ and $\alpha_1^{new}=H_1$, where $s=y_1y_2$, $L_1=\alpha_1+s(\alpha_2-L)$ and $H_1=\alpha_1+s(\alpha_2-H)$.

Substitute these into the objective (1), denoted $\Psi$ here, and compare $\Psi_L\triangleq\Psi(\alpha_1=L_1,\alpha_2=L)$ with $\Psi_H\triangleq\Psi(\alpha_1=H_1,\alpha_2=H)$; $\alpha_2$ takes the endpoint with the smaller objective value. $\Psi_L$ and $\Psi_H$ are computed as:

$$\begin{array}{rl} \Psi_L&=L_1f_1+Lf_2+\frac{1}{2}L_1^2K_{11}+\frac{1}{2}L^2K_{22}+sLL_1K_{12},\\ \Psi_H&=H_1f_1+Hf_2+\frac{1}{2}H_1^2K_{11}+\frac{1}{2}H^2K_{22}+sHH_1K_{12},\end{array}$$
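The endpoint comparison can be sketched without the closed-form $\Psi_L$, $\Psi_H$: this version (an assumed simplification, with hypothetical names `dual_obj` and `boundary_step`) evaluates the full $(D_3')$ objective at $\alpha_2=L$ and $\alpha_2=H$, which differs from $\Psi$ only by a constant and therefore picks the same endpoint. The tolerance-based tie rule is also an assumption. The example uses two identical inputs, so every kernel entry is 1 and $\eta=0$.

```python
def dual_obj(alpha, y, K):
    # (D_3') objective: 1/2 sum_ij y_i y_j K_ij alpha_i alpha_j - sum_i alpha_i
    n = len(alpha)
    return 0.5 * sum(y[i] * y[j] * K[i][j] * alpha[i] * alpha[j]
                     for i in range(n) for j in range(n)) - sum(alpha)

def boundary_step(i1, i2, alpha, y, K, C, tol=1e-12):
    """Case eta <= 0: try alpha_2 = L and alpha_2 = H and keep the smaller objective."""
    a1, a2 = alpha[i1], alpha[i2]
    s = y[i1] * y[i2]
    if s < 0:
        L, H = max(0.0, a2 - a1), min(C, C + a2 - a1)
    else:
        L, H = max(0.0, a2 + a1 - C), min(C, a2 + a1)
    results = {}
    for name, a2_end in (("L", L), ("H", H)):
        trial = list(alpha)
        trial[i2] = a2_end
        trial[i1] = a1 + s * (a2 - a2_end)       # L_1 or H_1
        results[name] = (dual_obj(trial, y, K), trial[i1], a2_end)
    psi_L, psi_H = results["L"][0], results["H"][0]
    if psi_L < psi_H - tol:
        return results["L"][1:]
    if psi_H < psi_L - tol:
        return results["H"][1:]
    return a1, a2   # objective is flat along the segment: no progress possible

# x_1 and x_2 identical, so every K entry is 1 and eta = 1 + 1 - 2 = 0
K = [[1.0, 1.0], [1.0, 1.0]]
print(boundary_step(0, 1, [0.5, 0.25], [1, -1], K, C=1.0))  # → (1.0, 0.75)
print(boundary_step(0, 1, [0.5, 0.25], [1, 1], K, C=1.0))   # → (0.5, 0.25)
```

With opposite labels the objective decreases linearly toward $H$, so the step moves to $\alpha_2=H=0.75$, $\alpha_1=H_1=1.0$; with equal labels the objective is constant along the segment and the pair is left unchanged.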